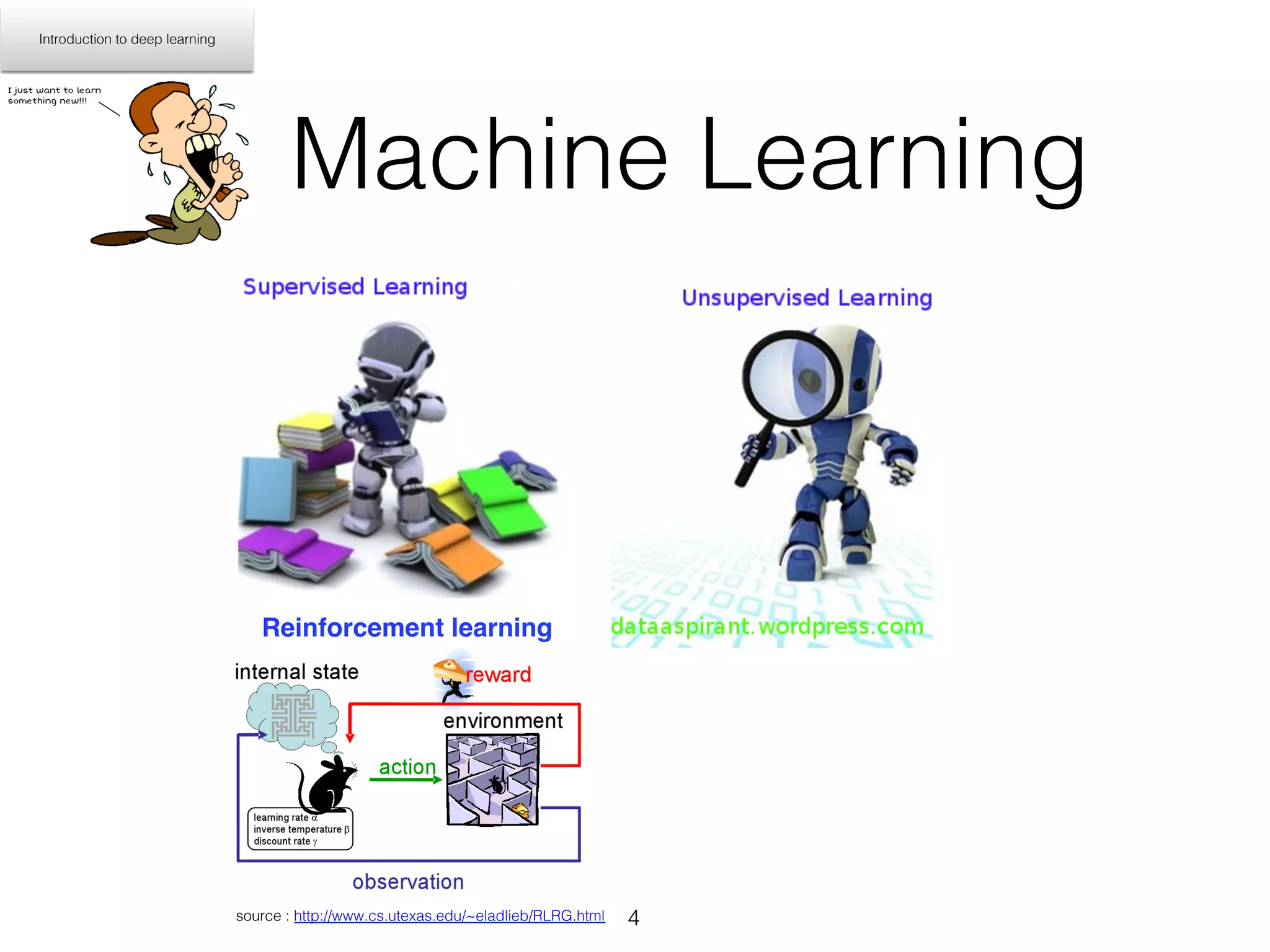

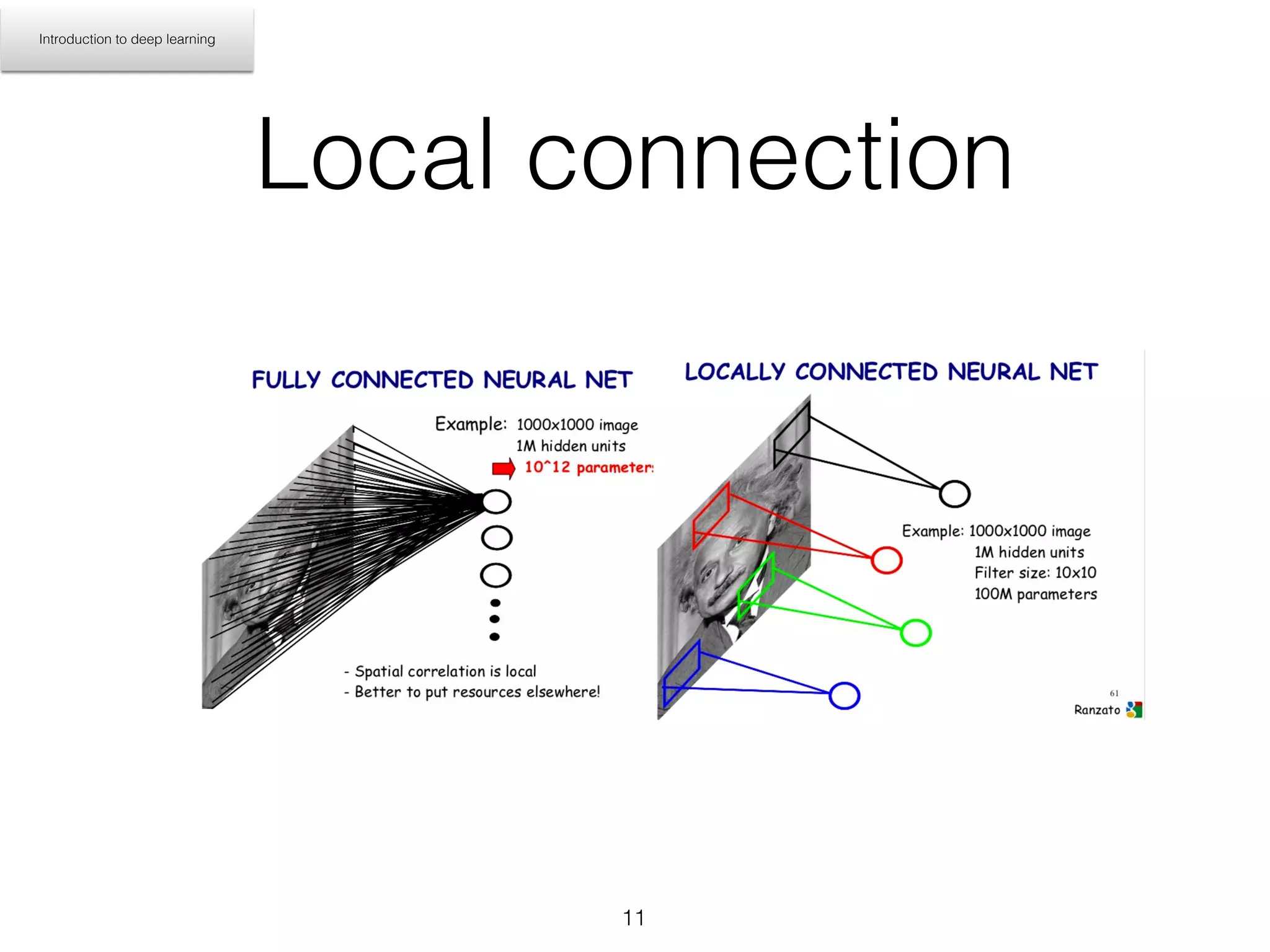

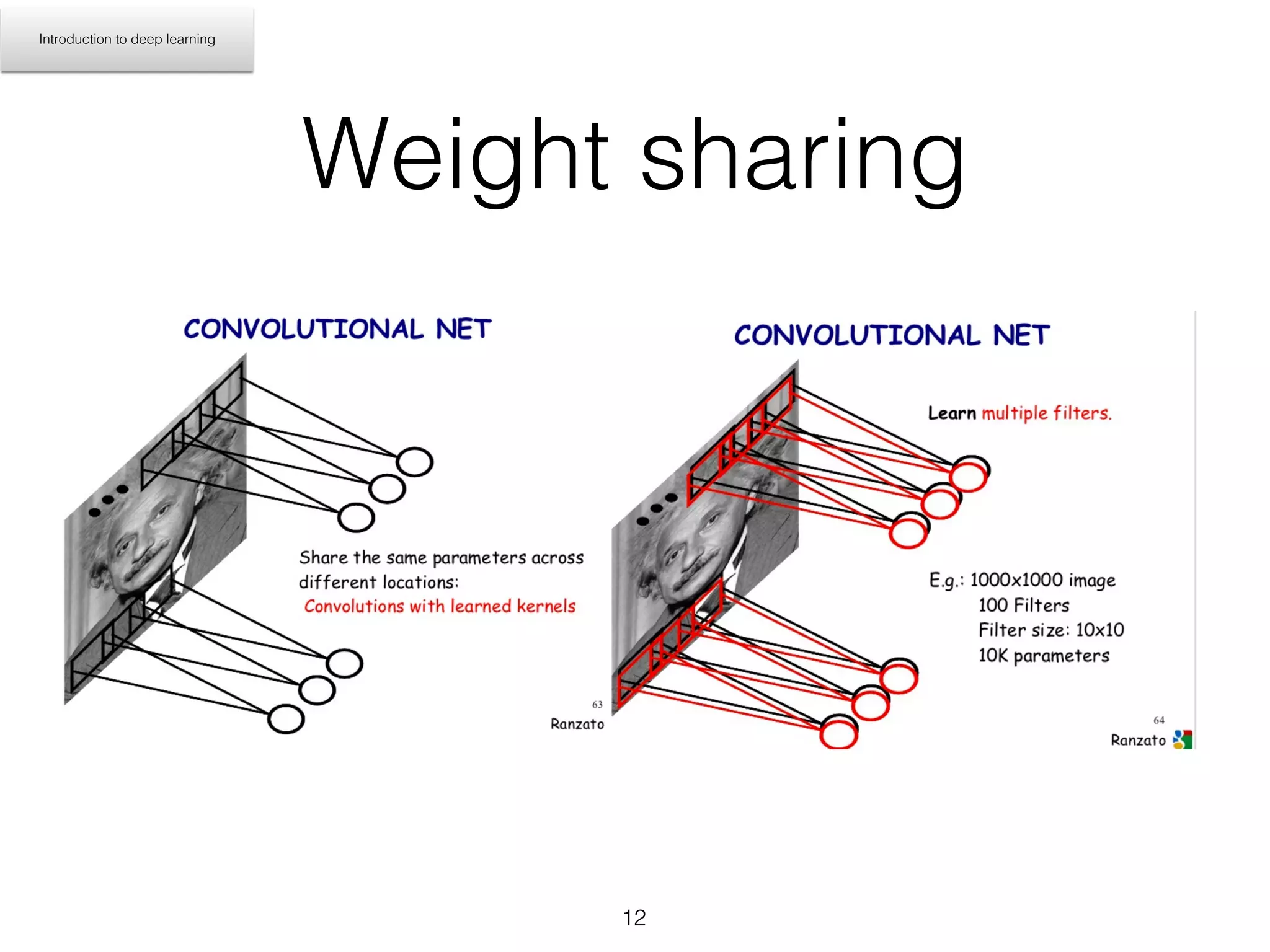

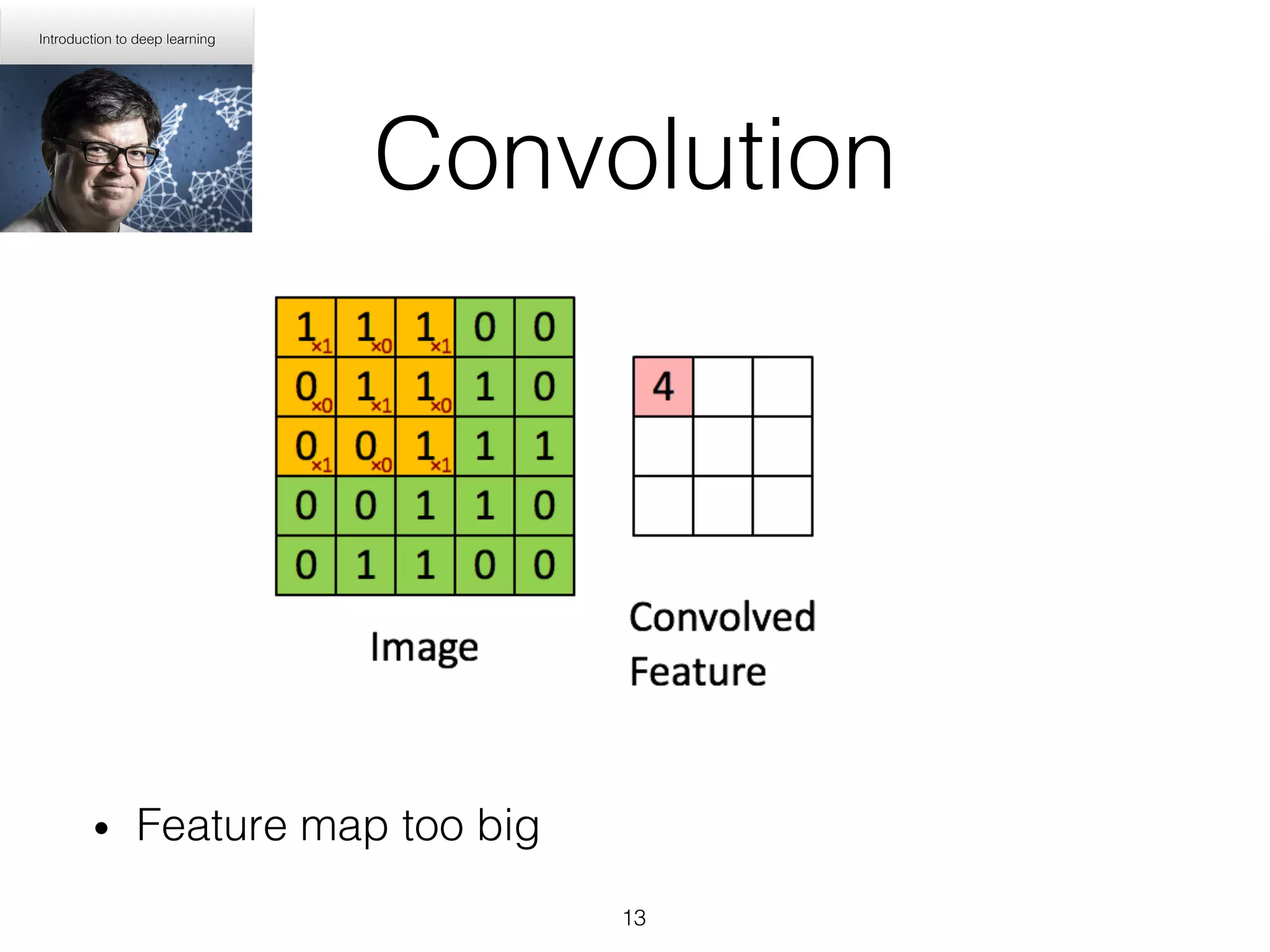

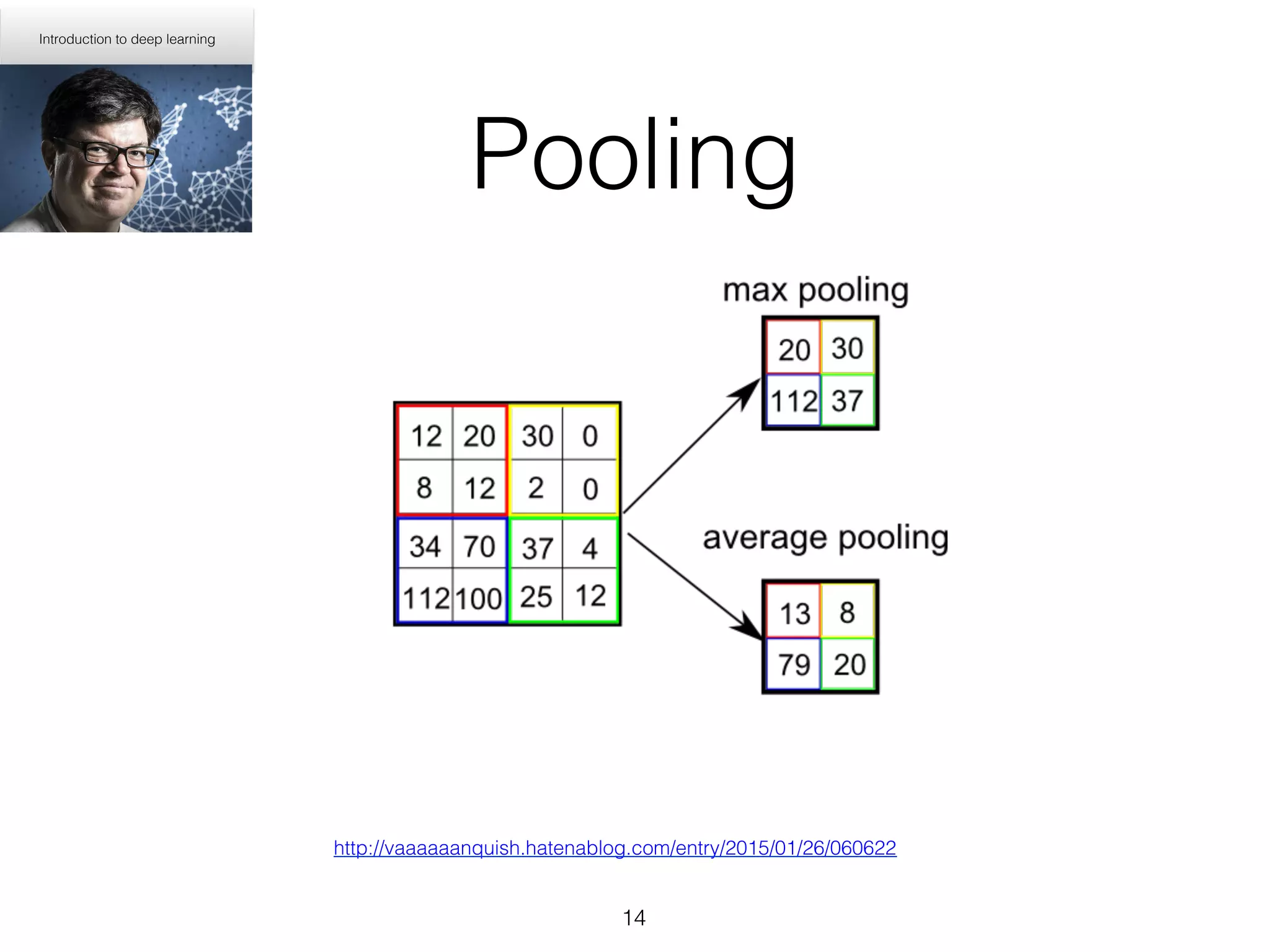

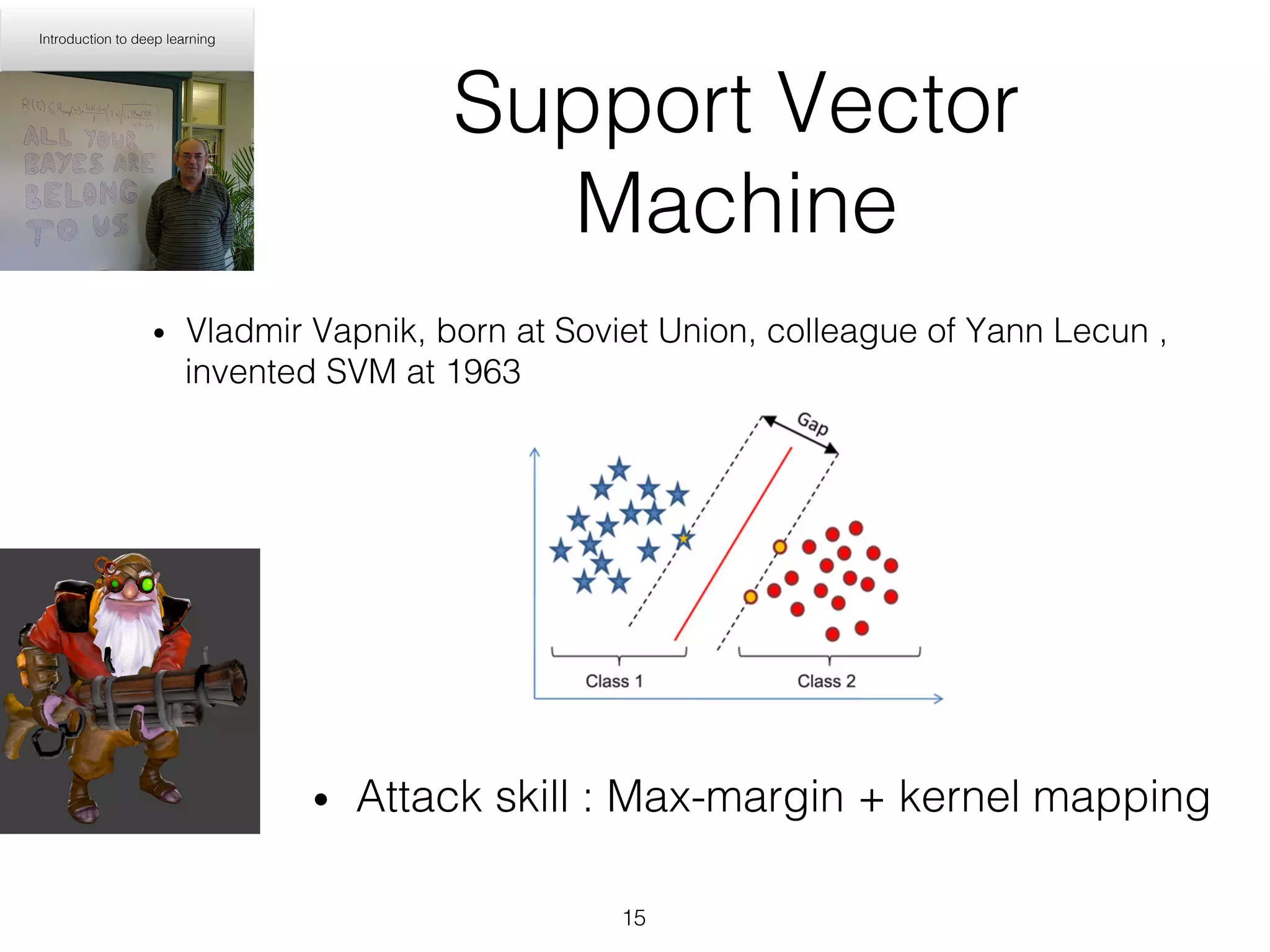

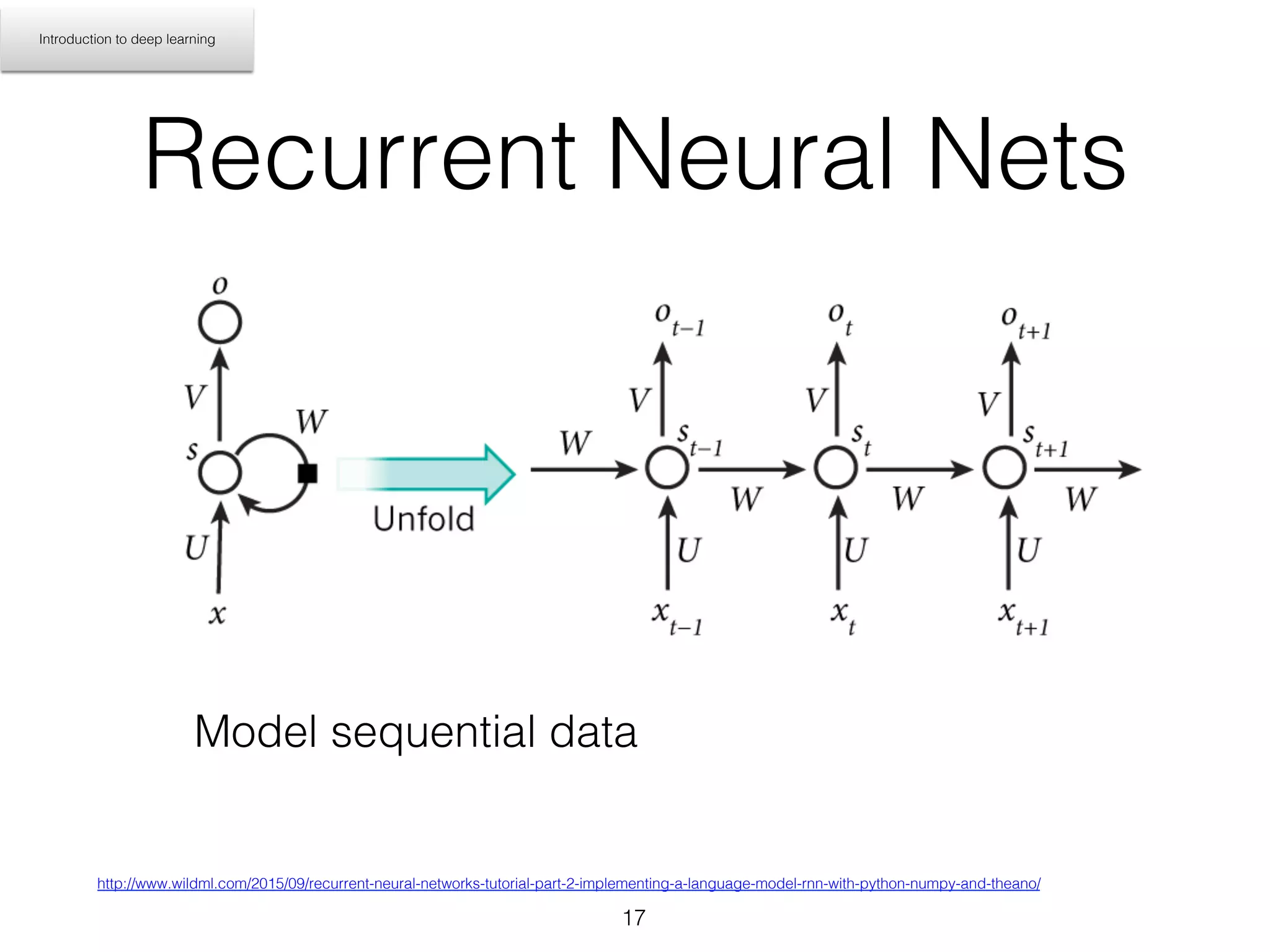

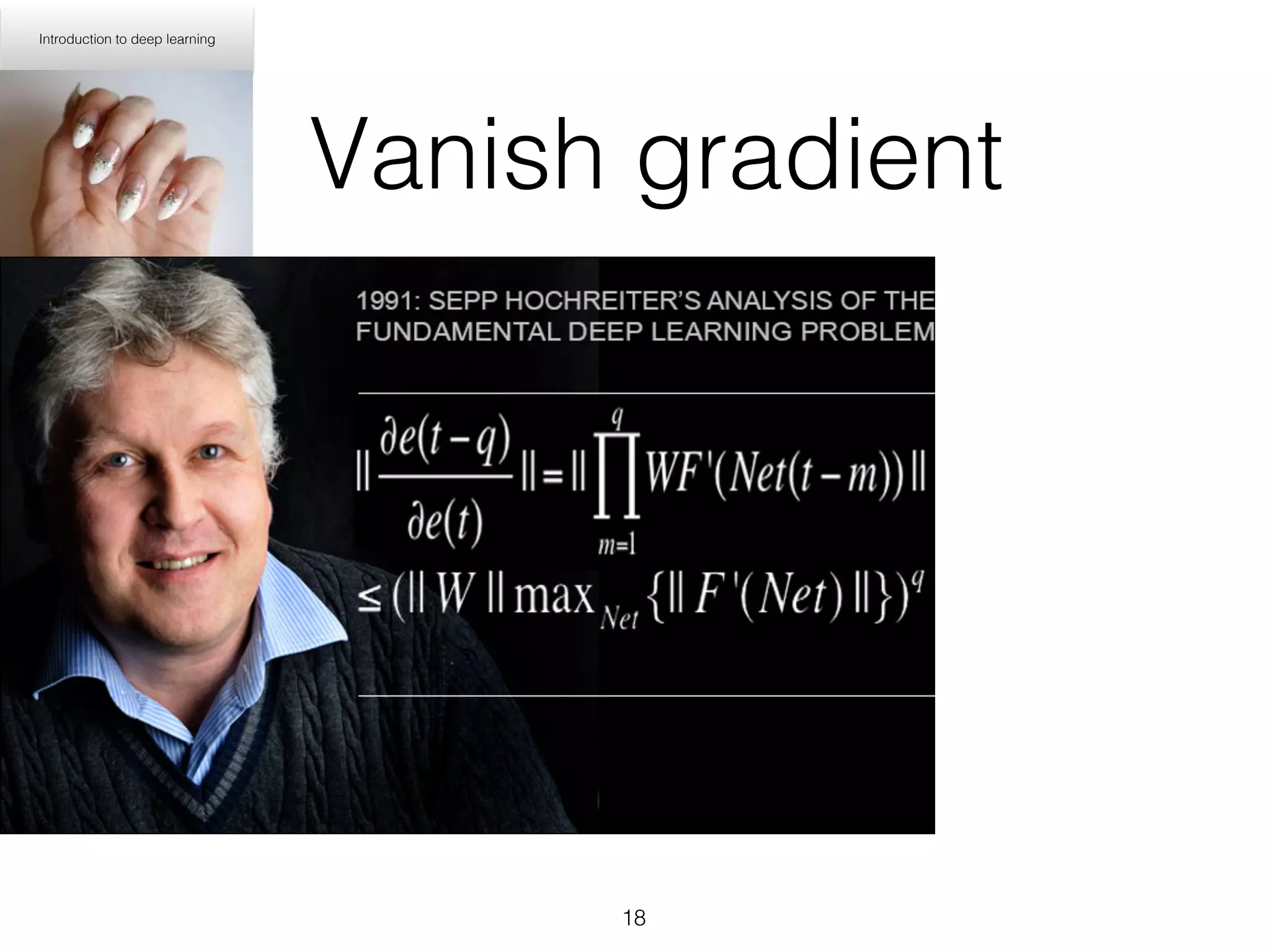

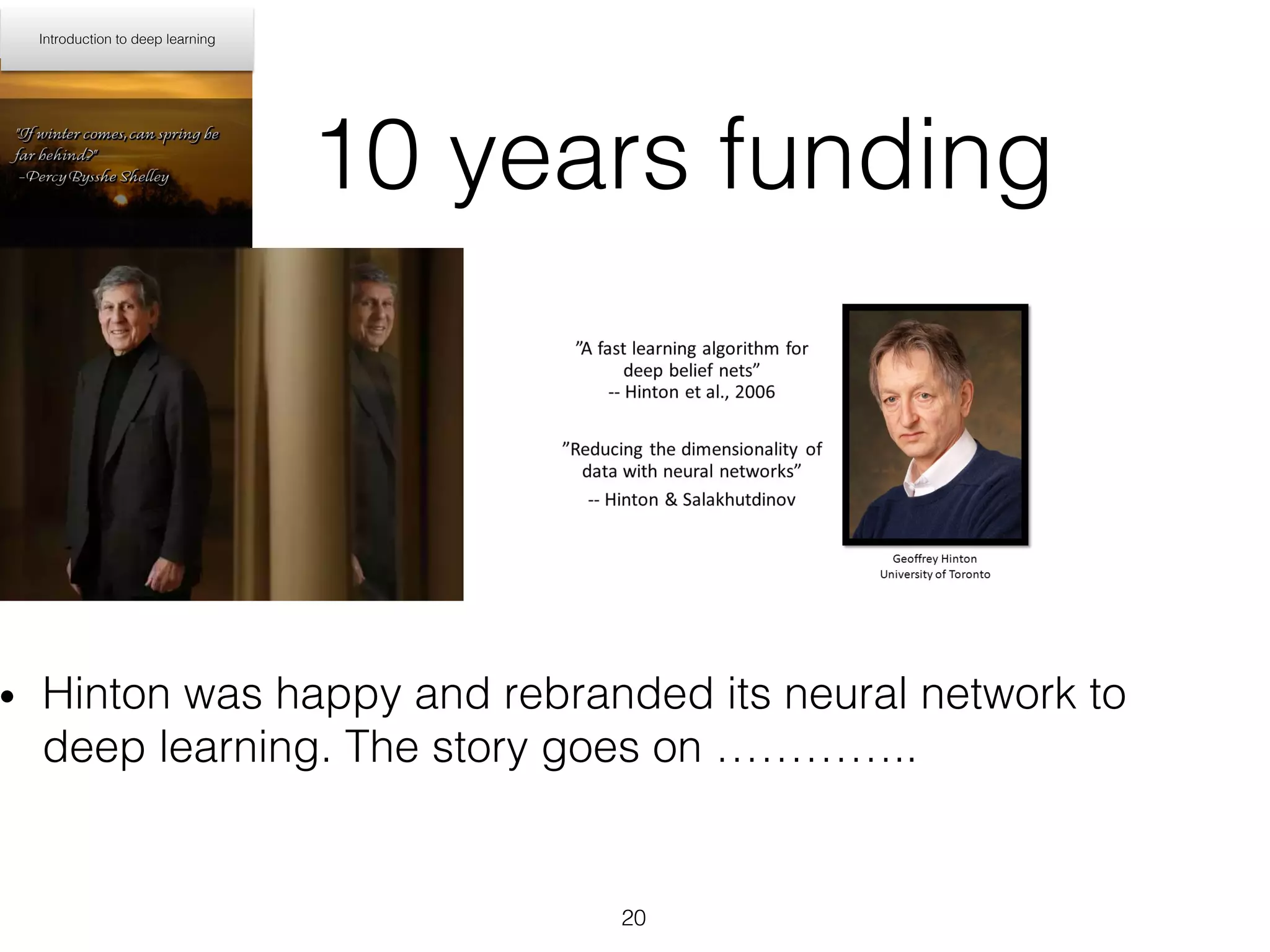

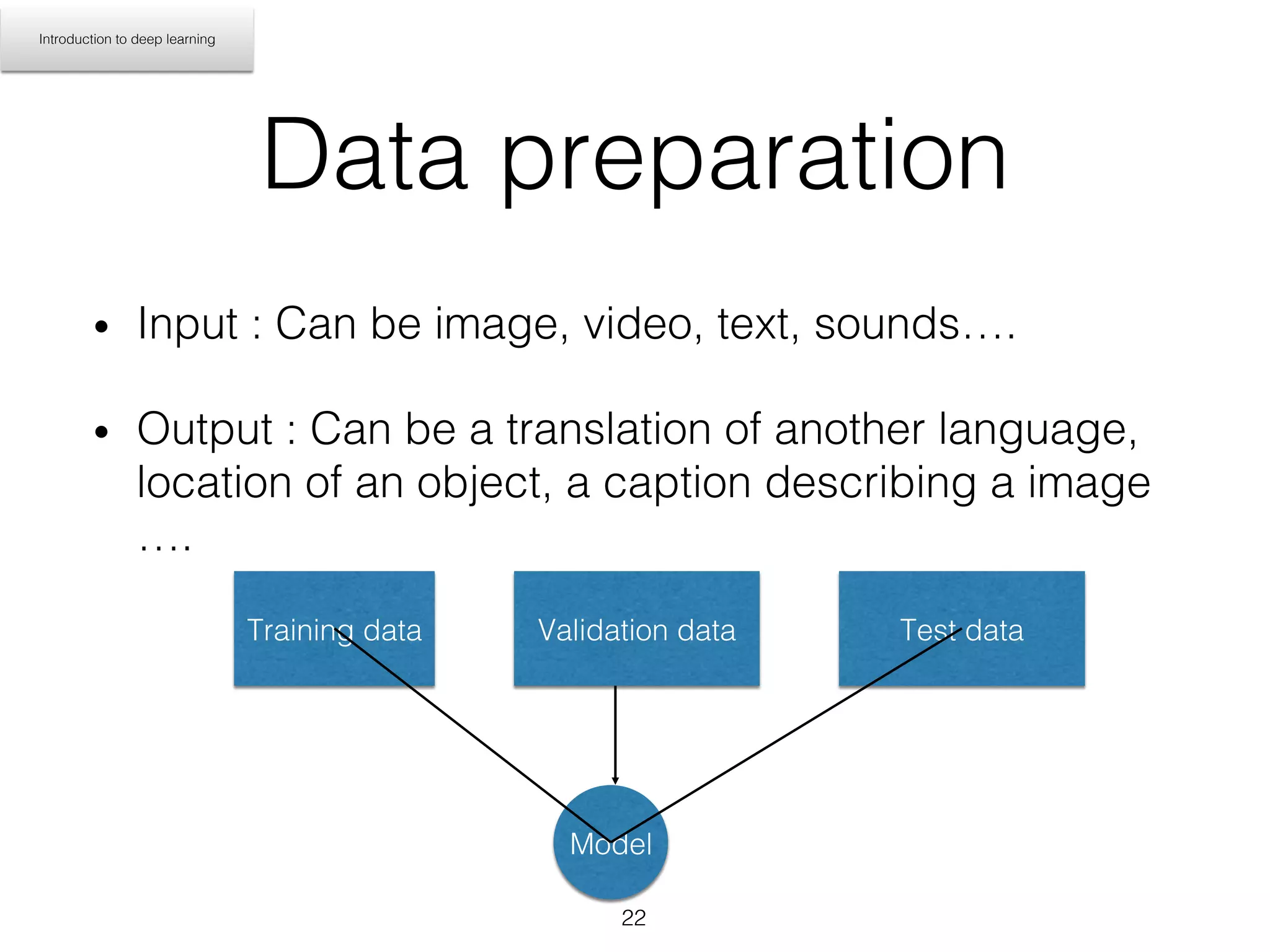

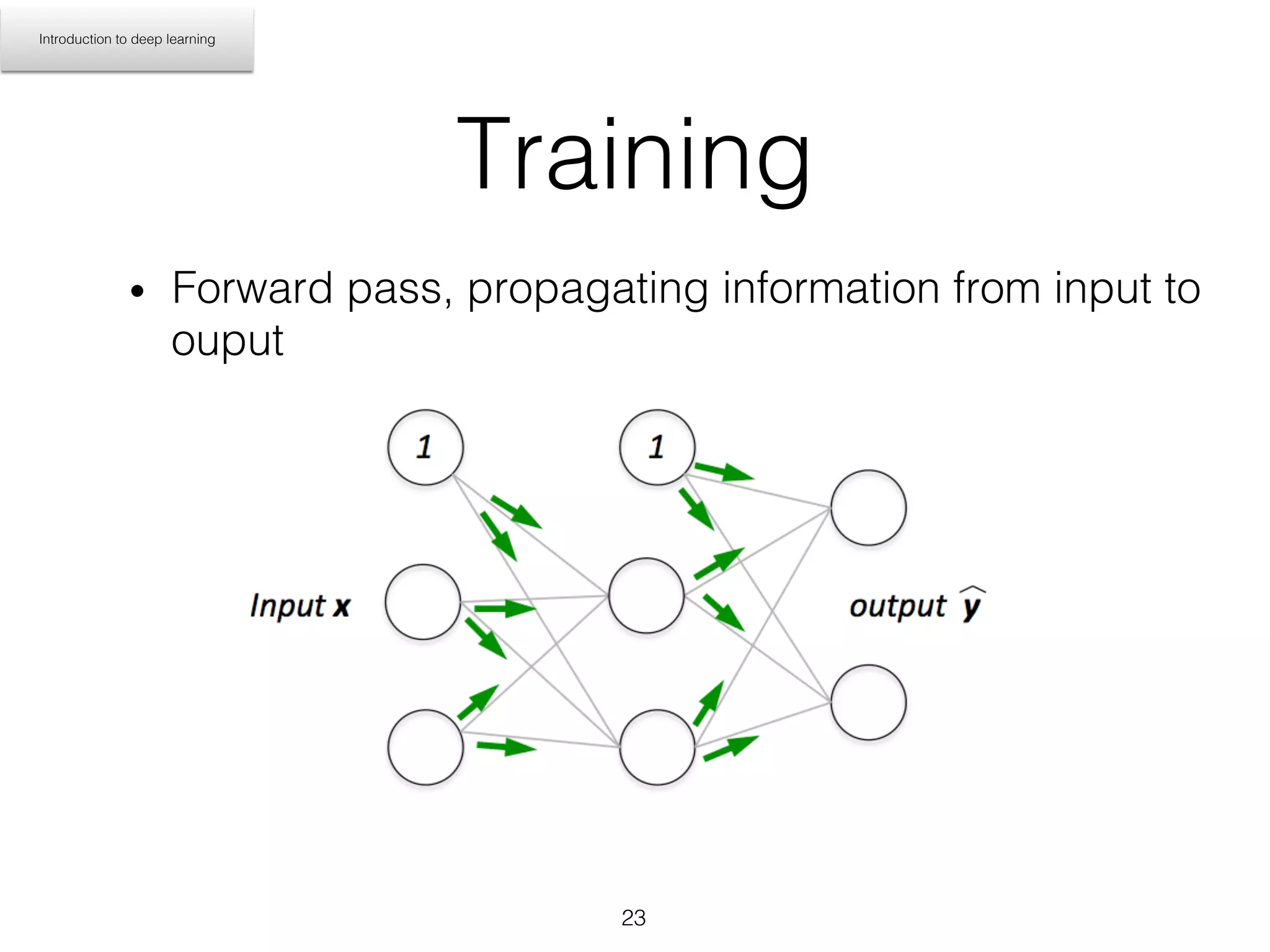

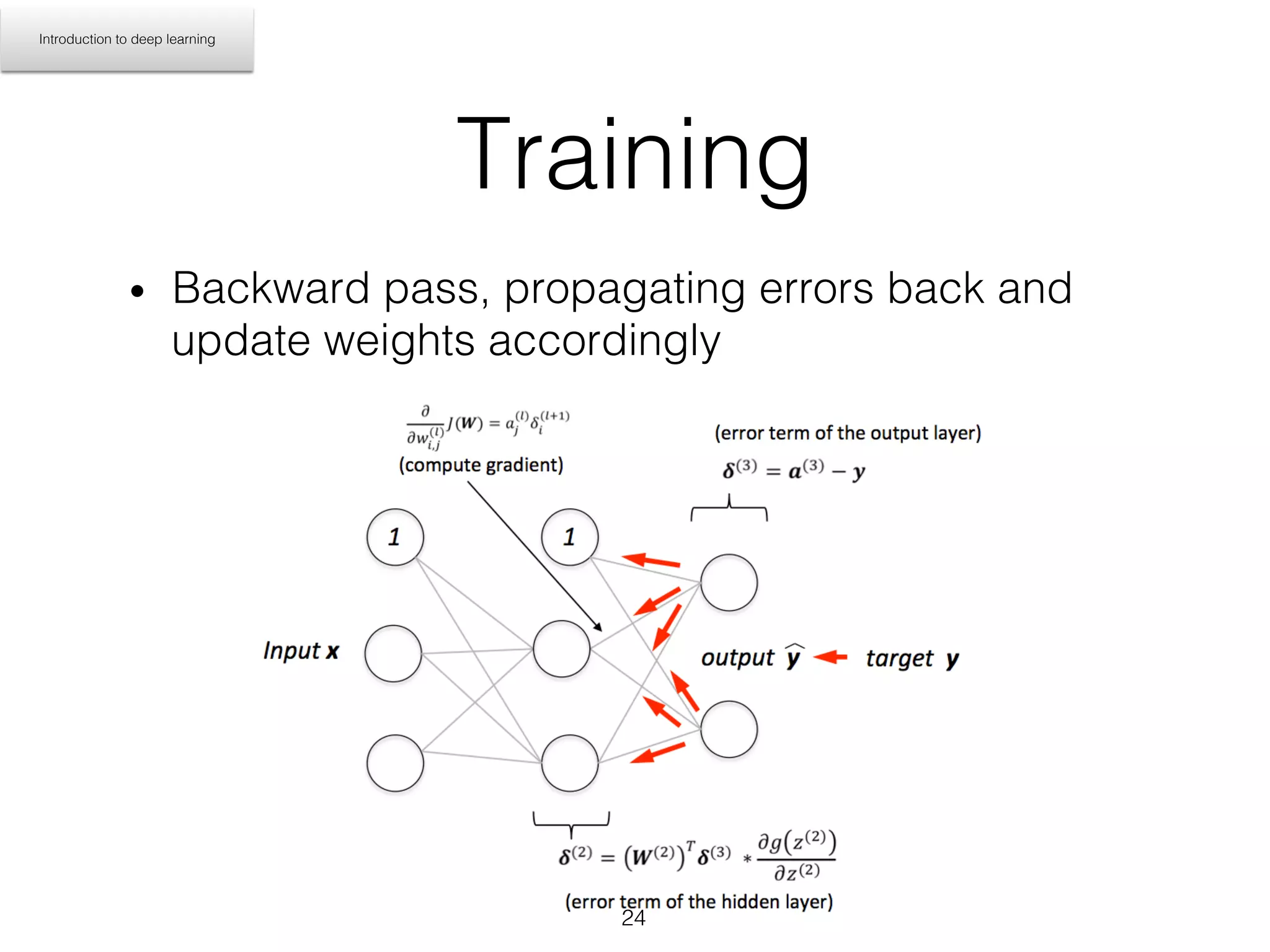

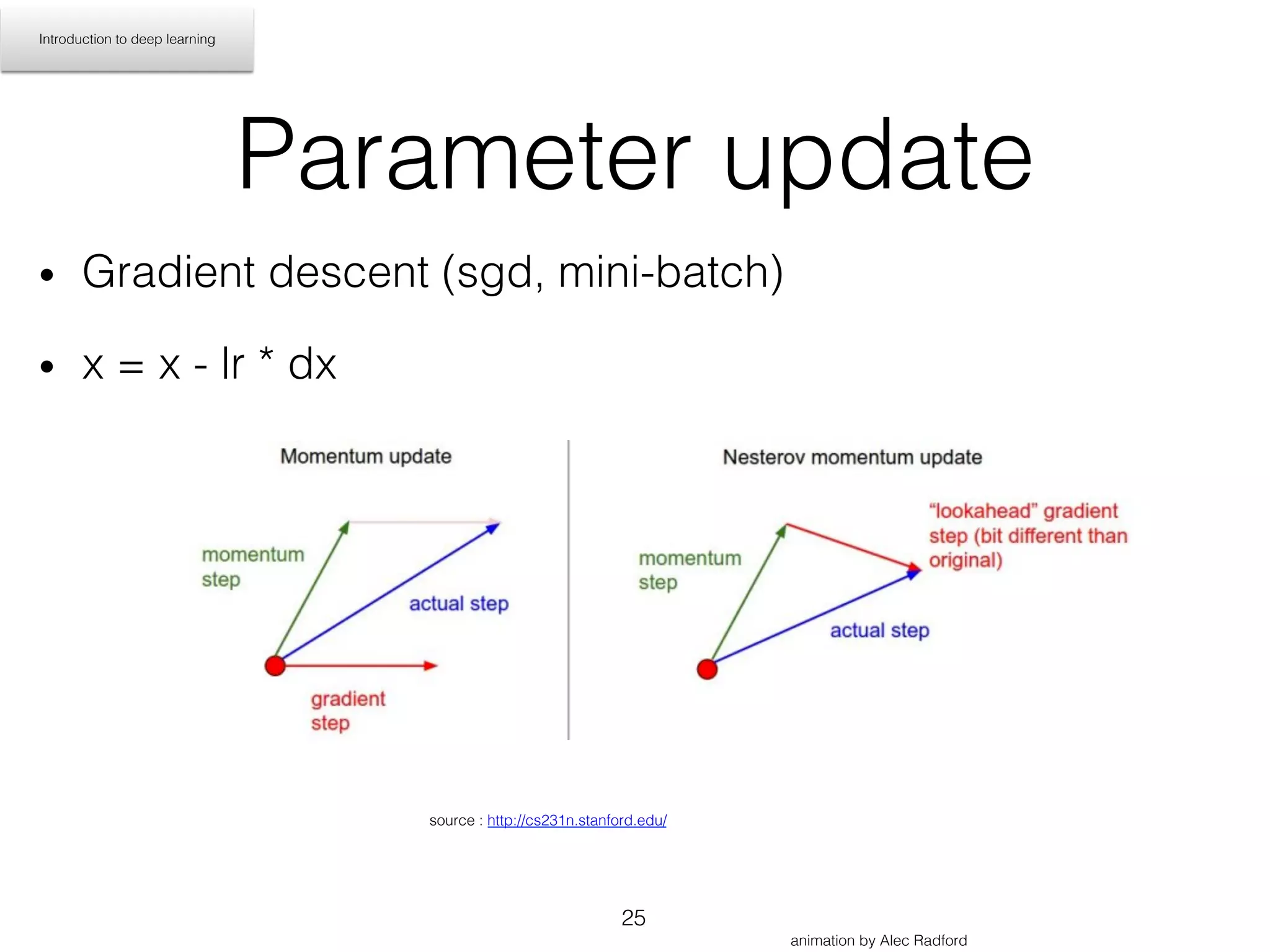

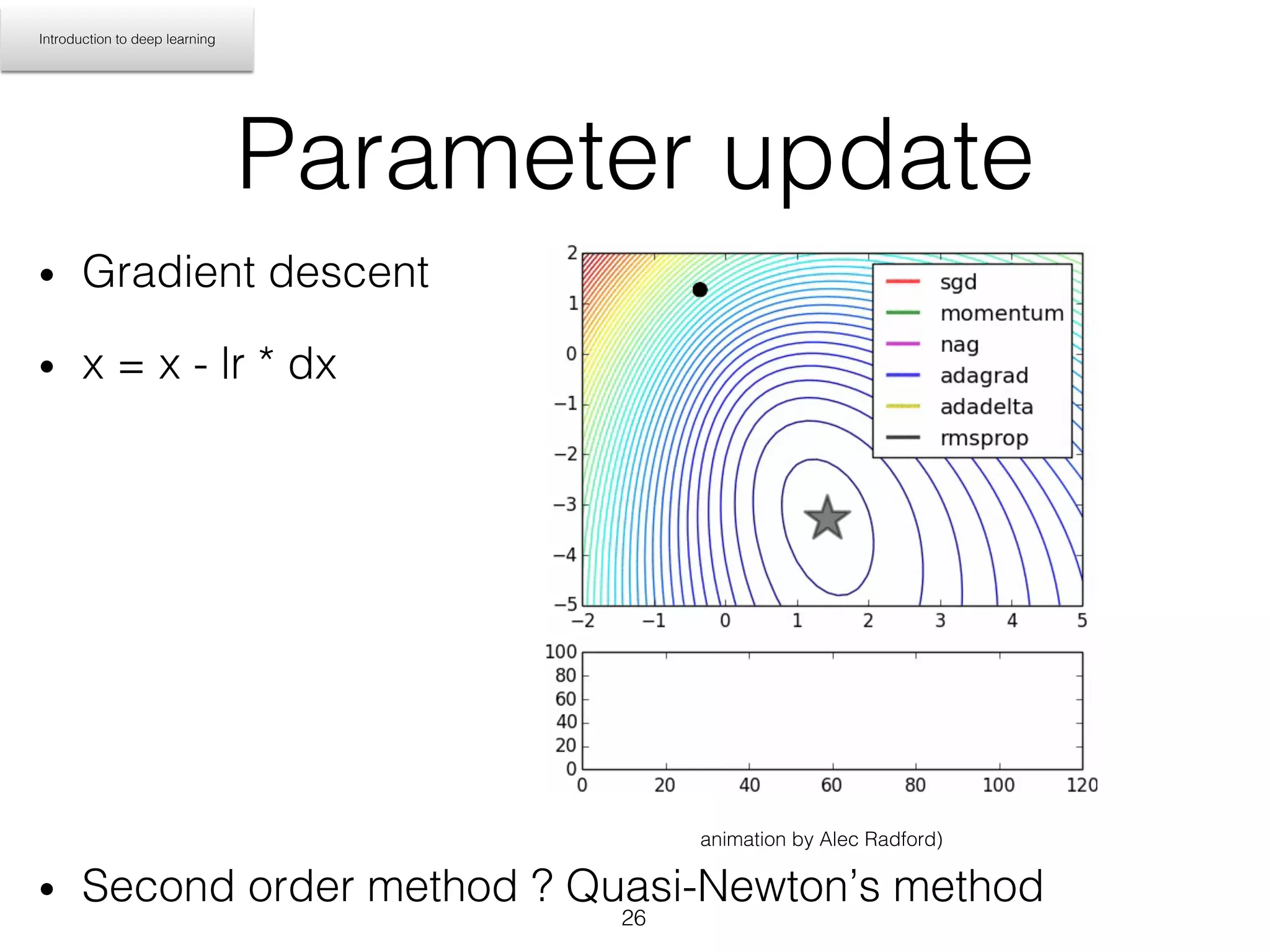

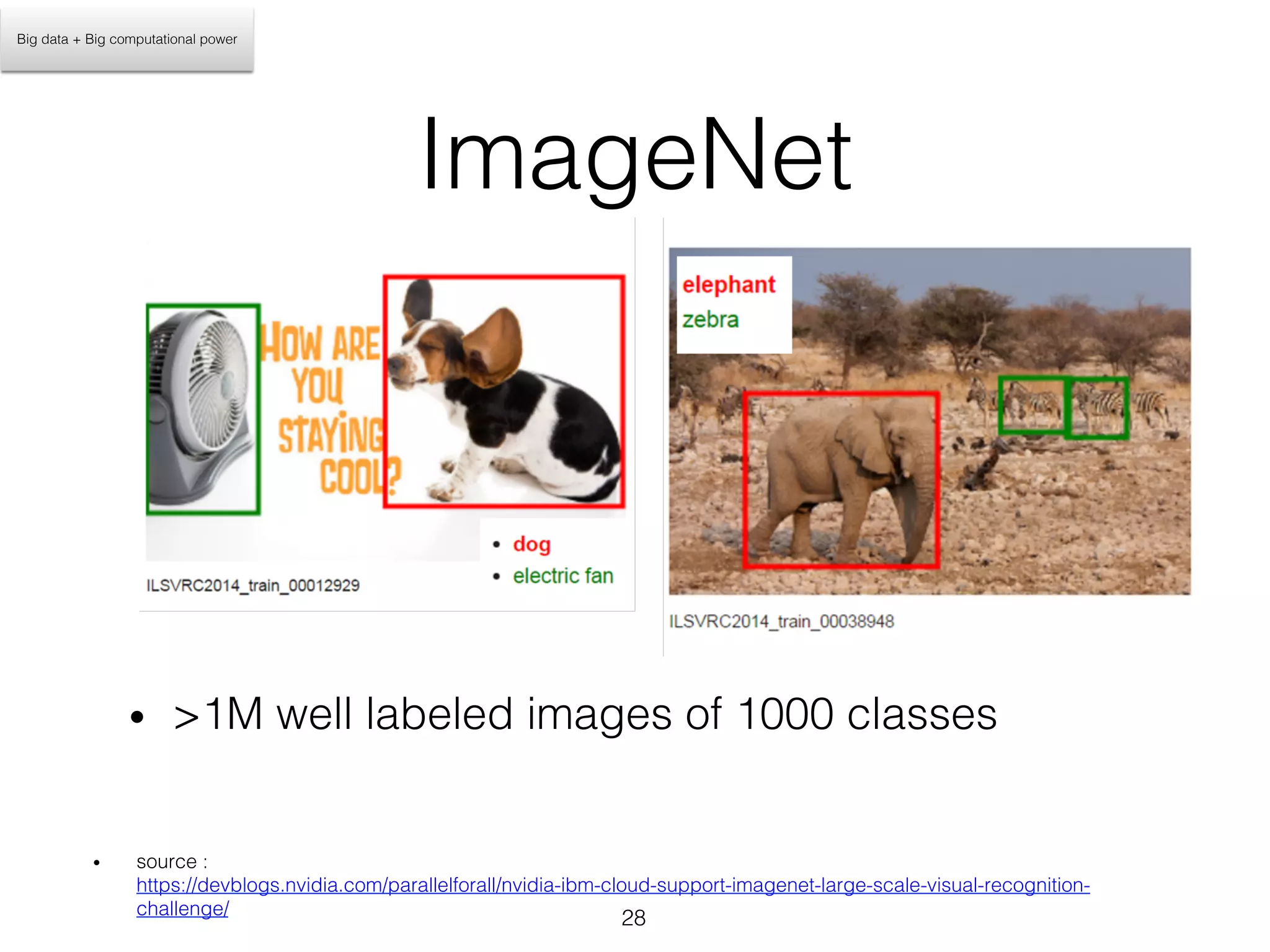

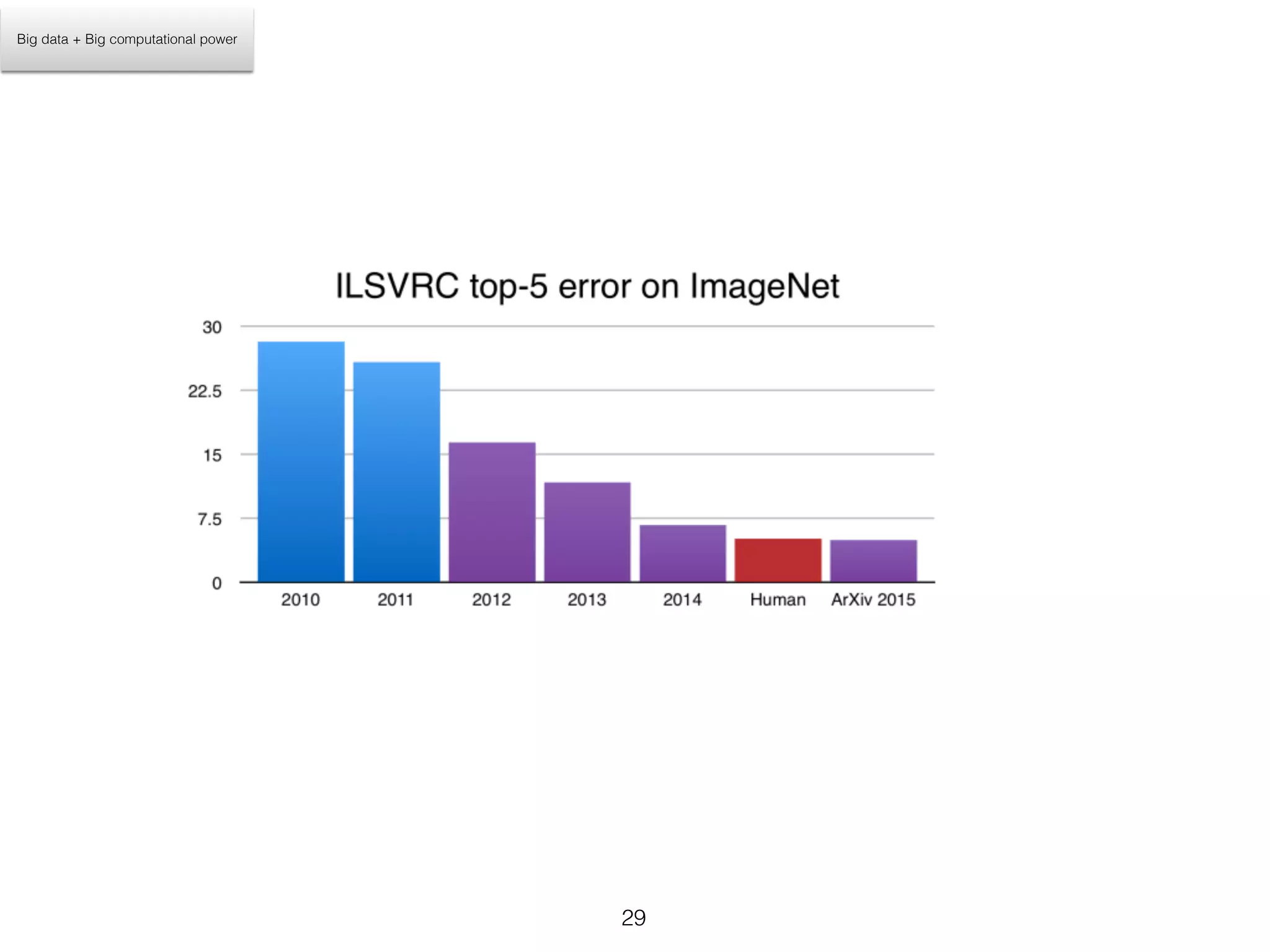

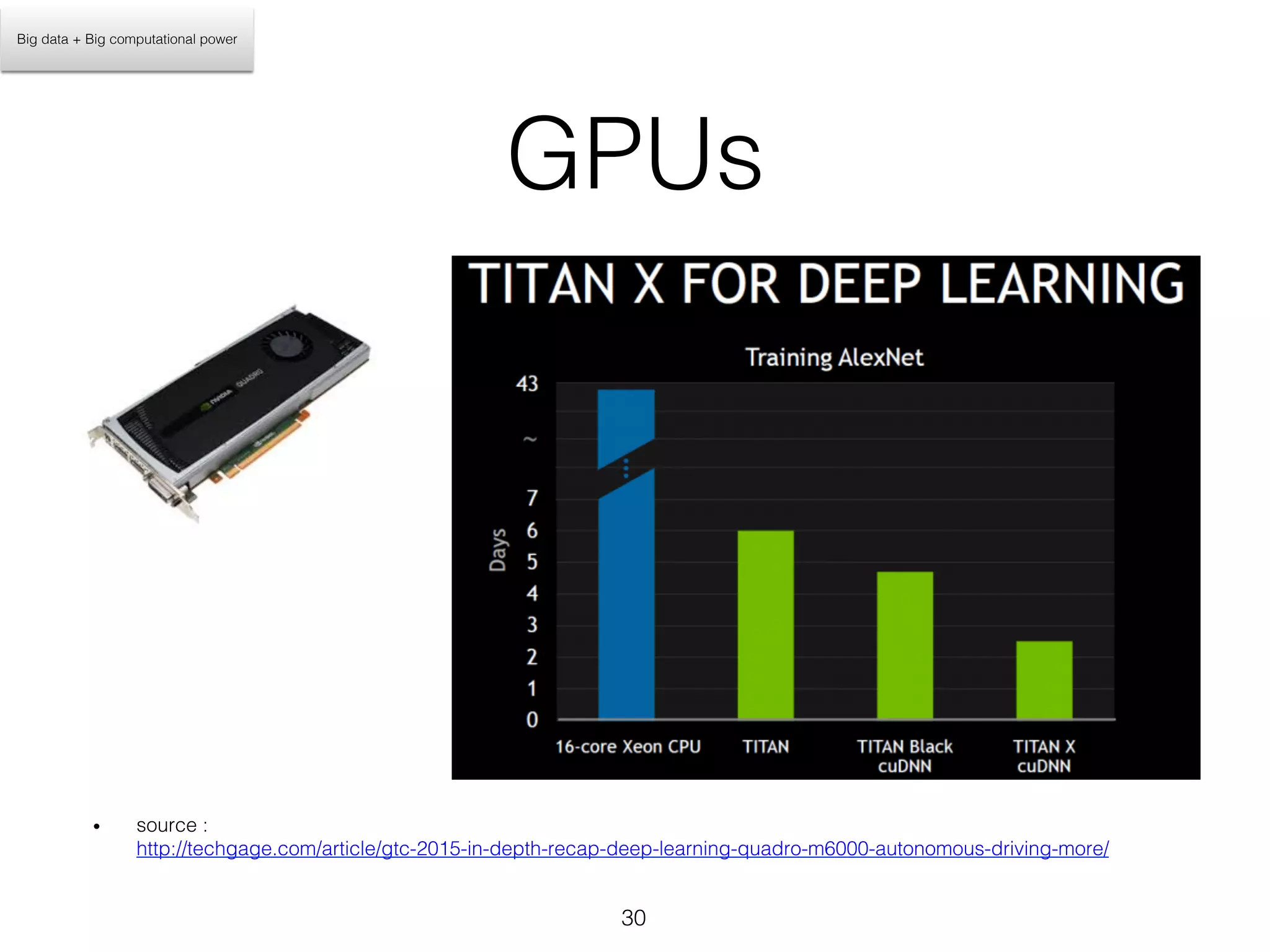

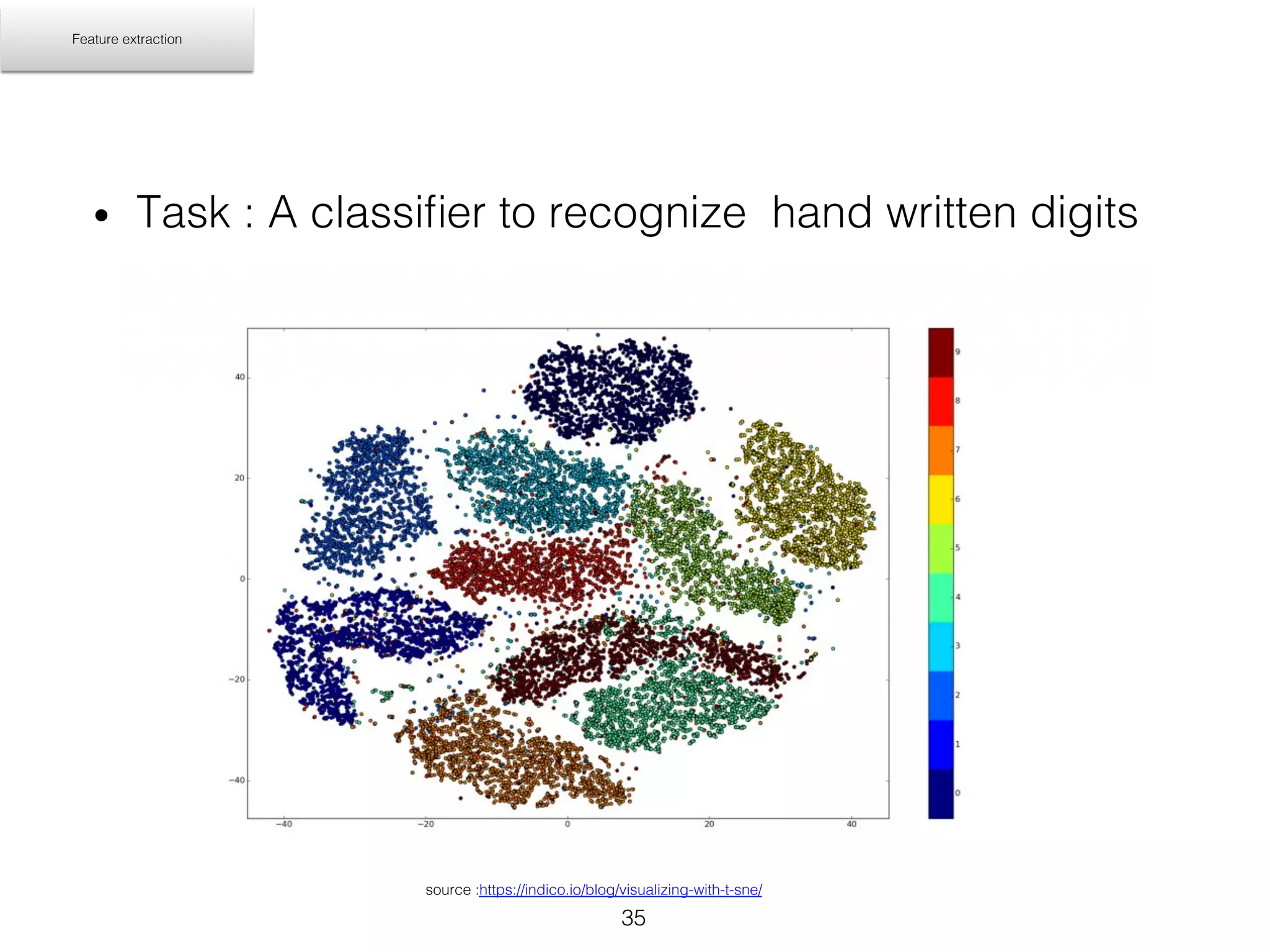

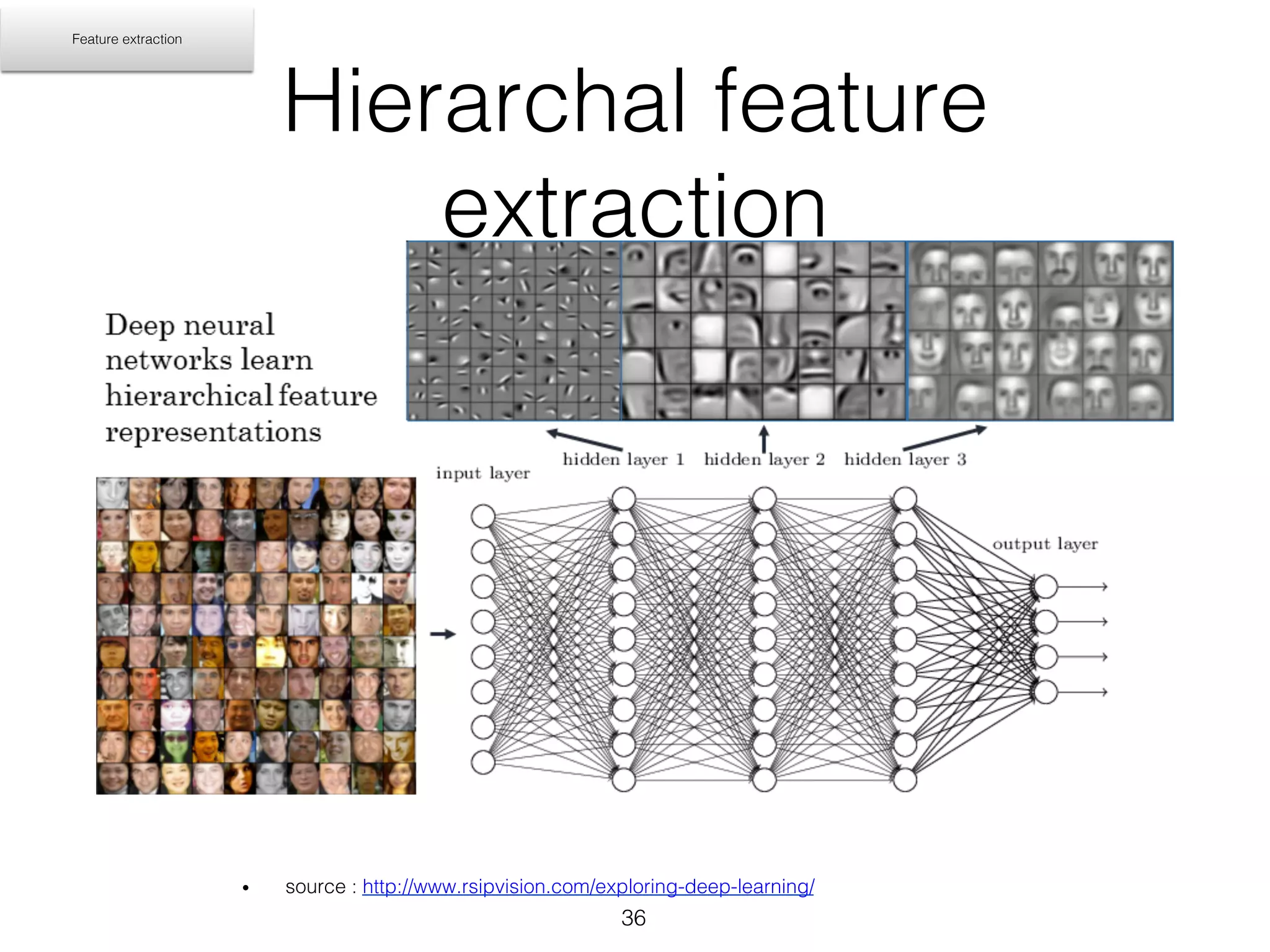

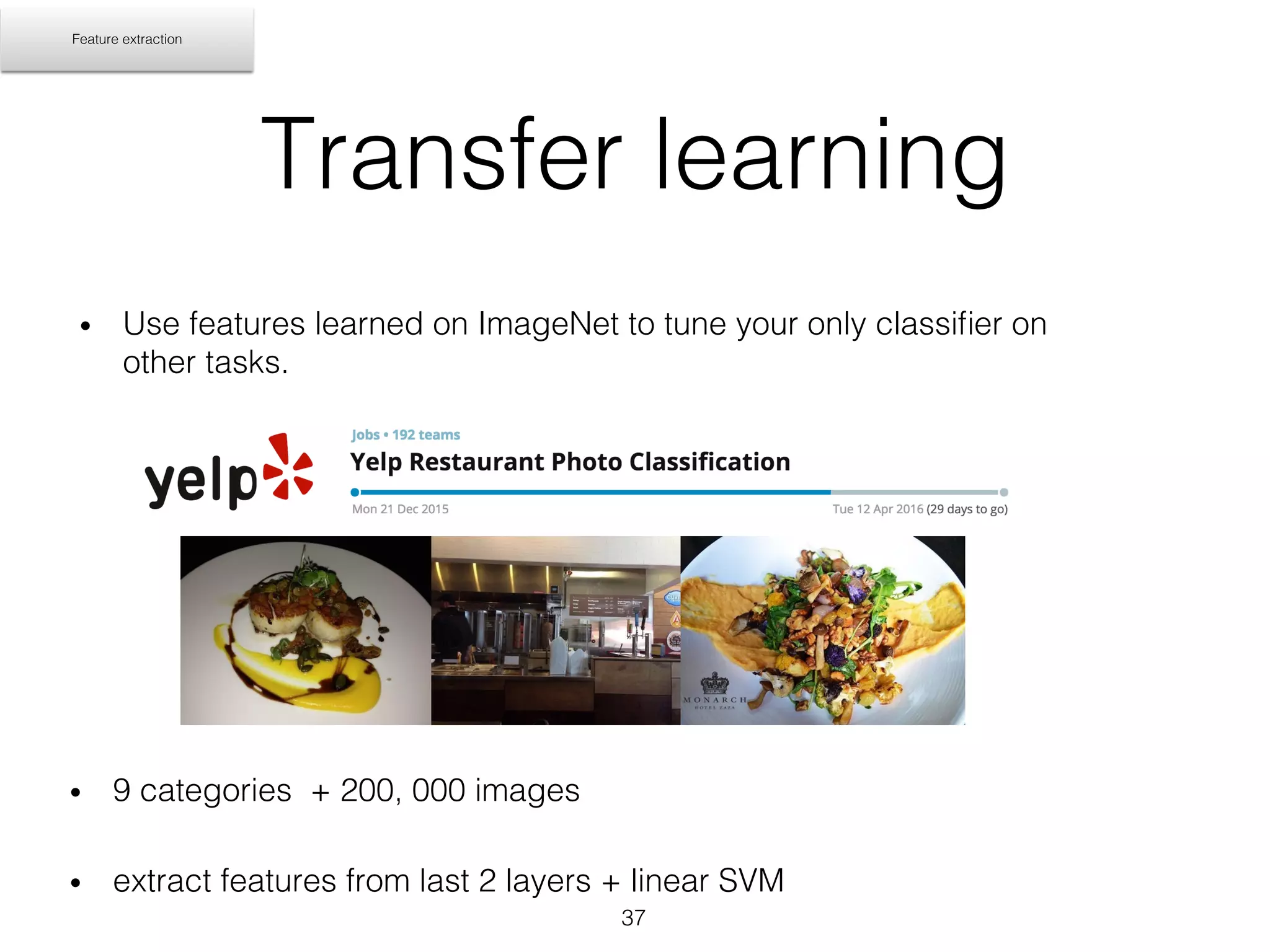

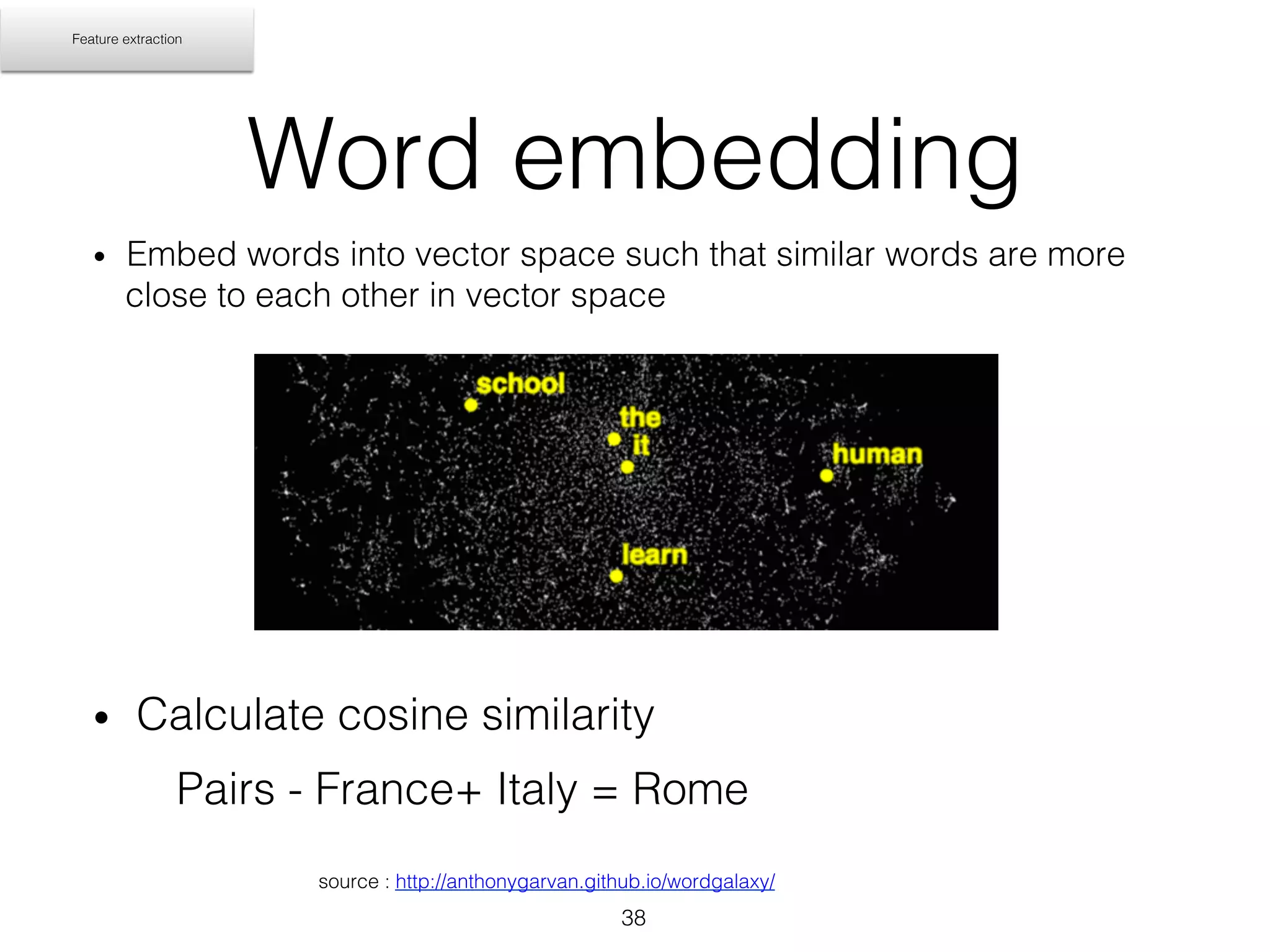

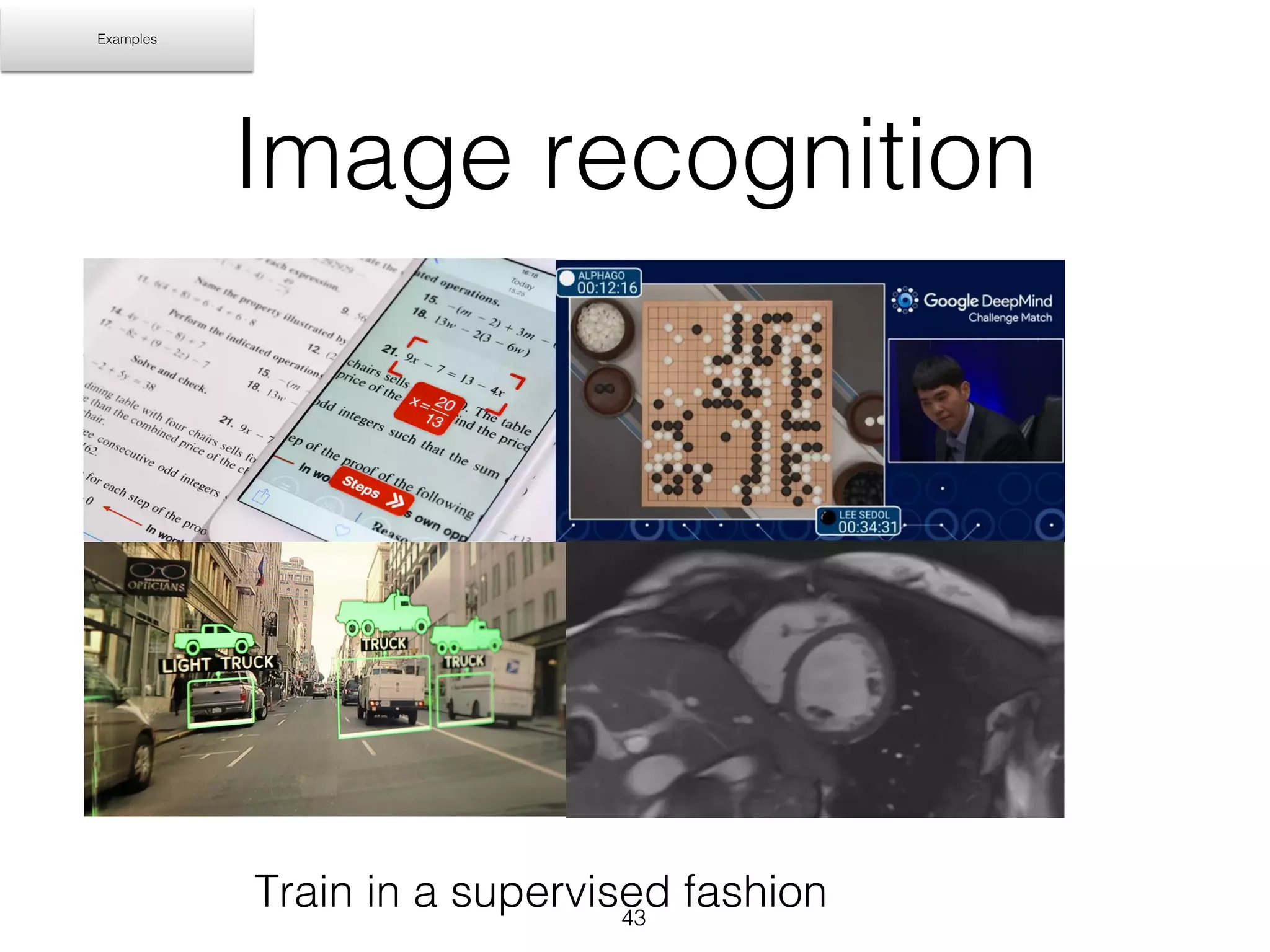

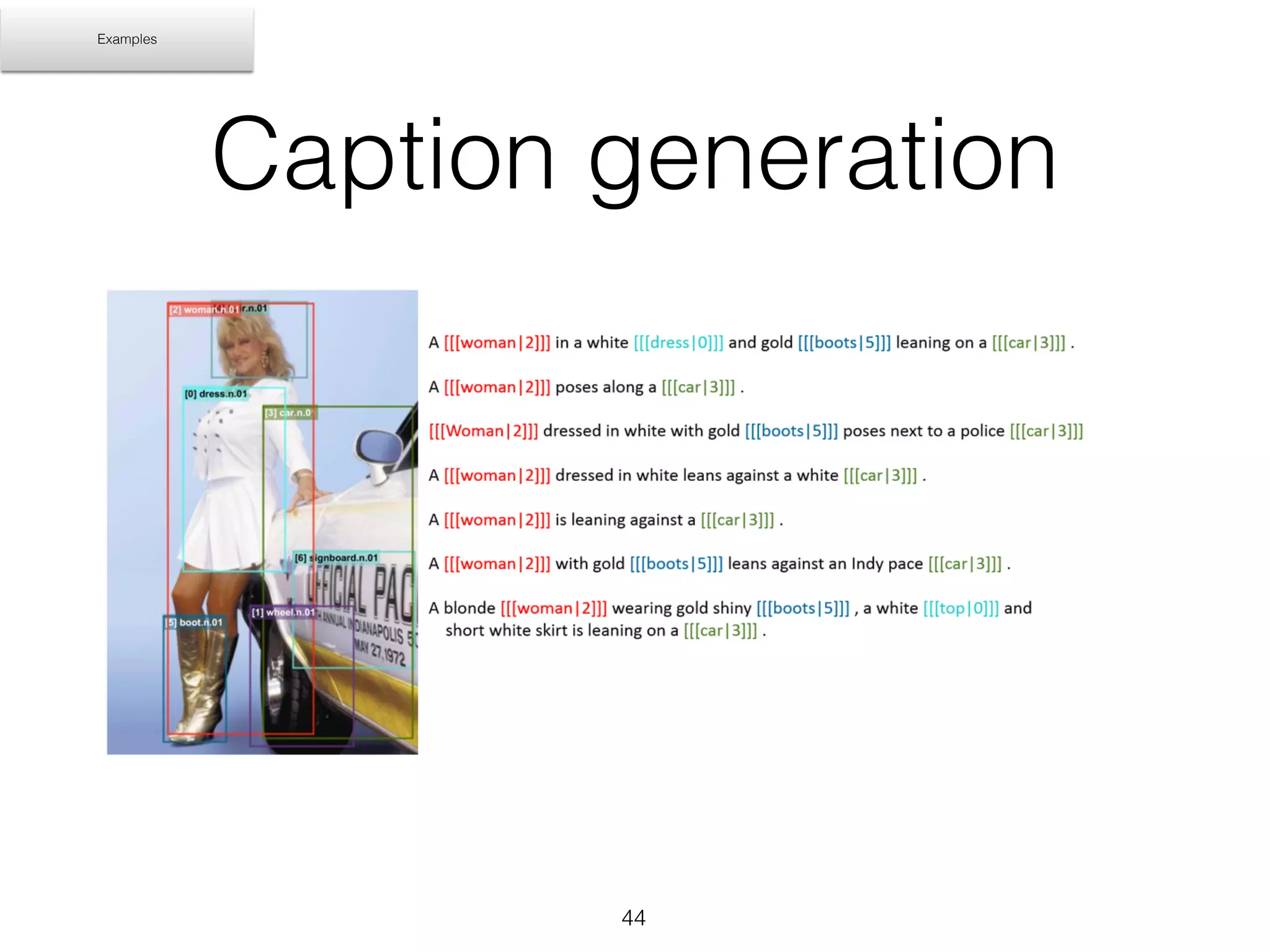

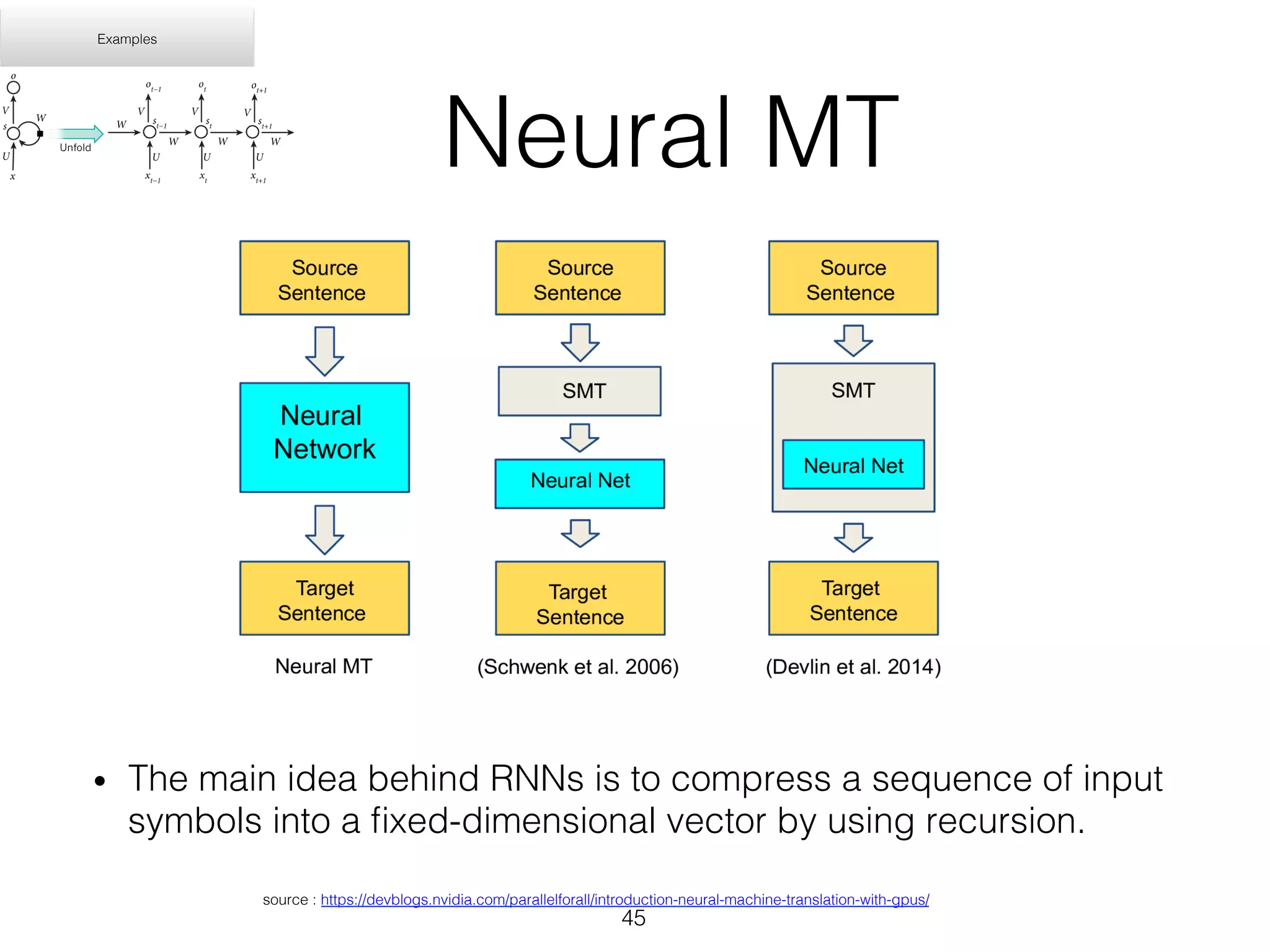

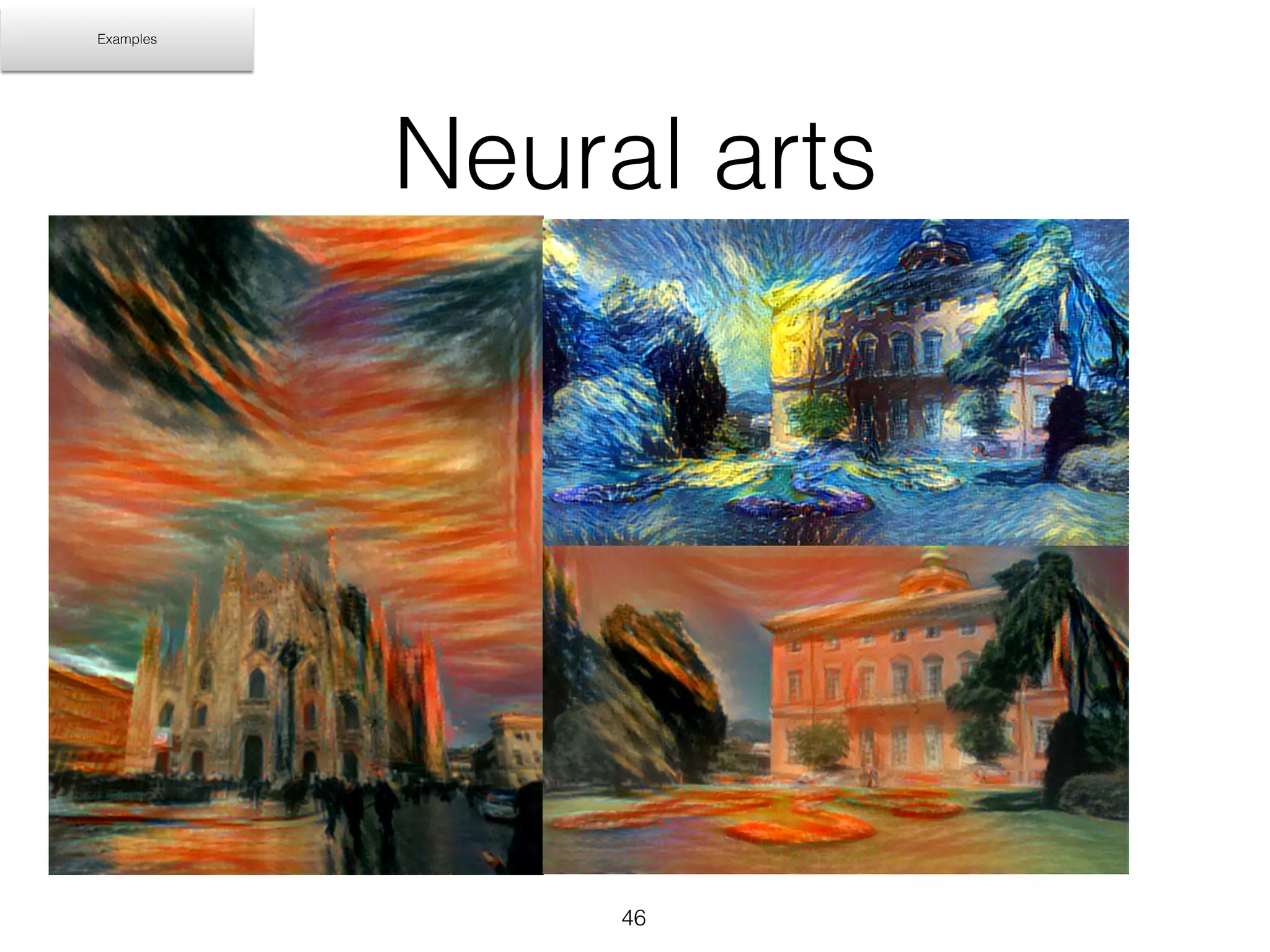

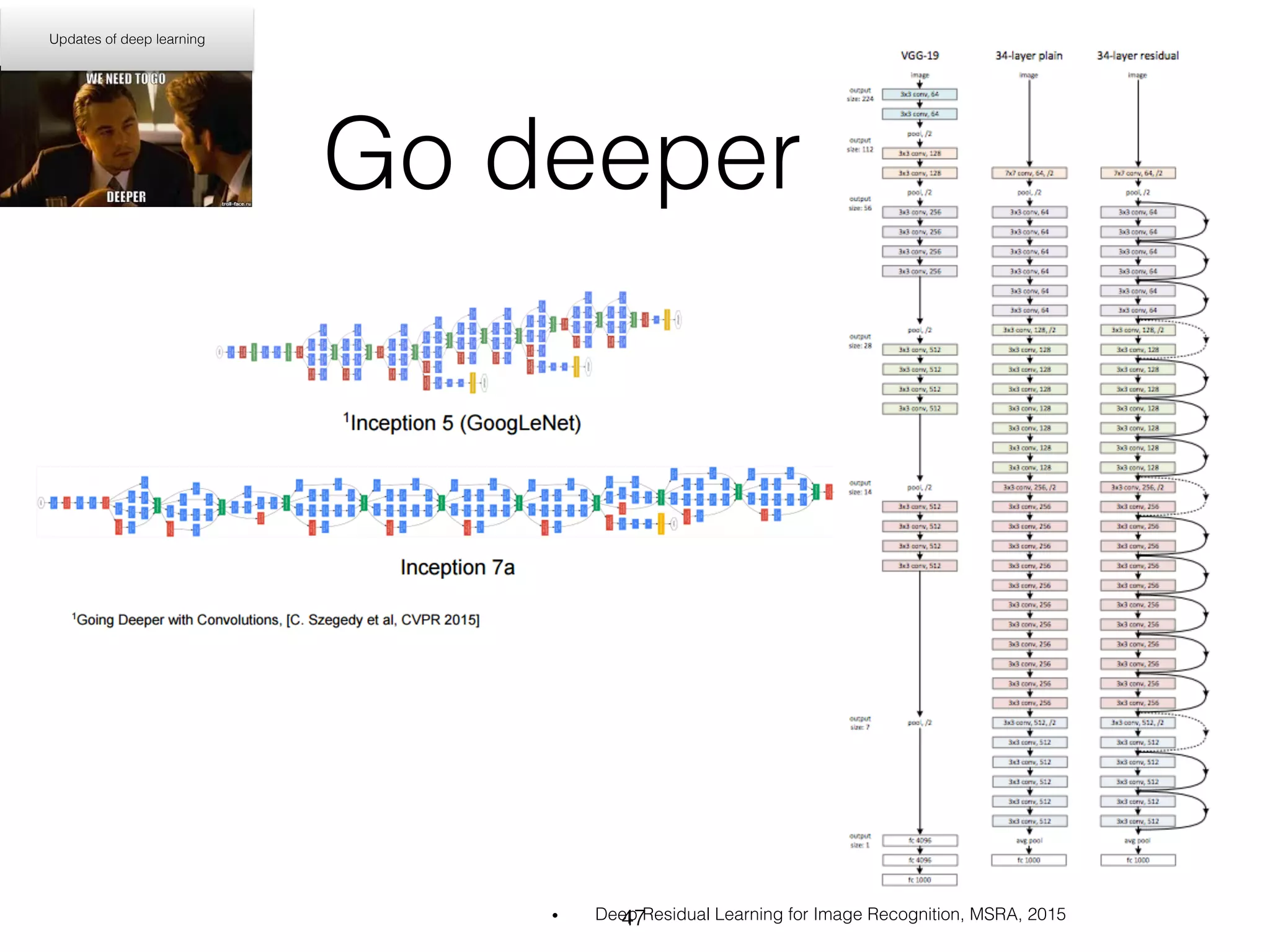

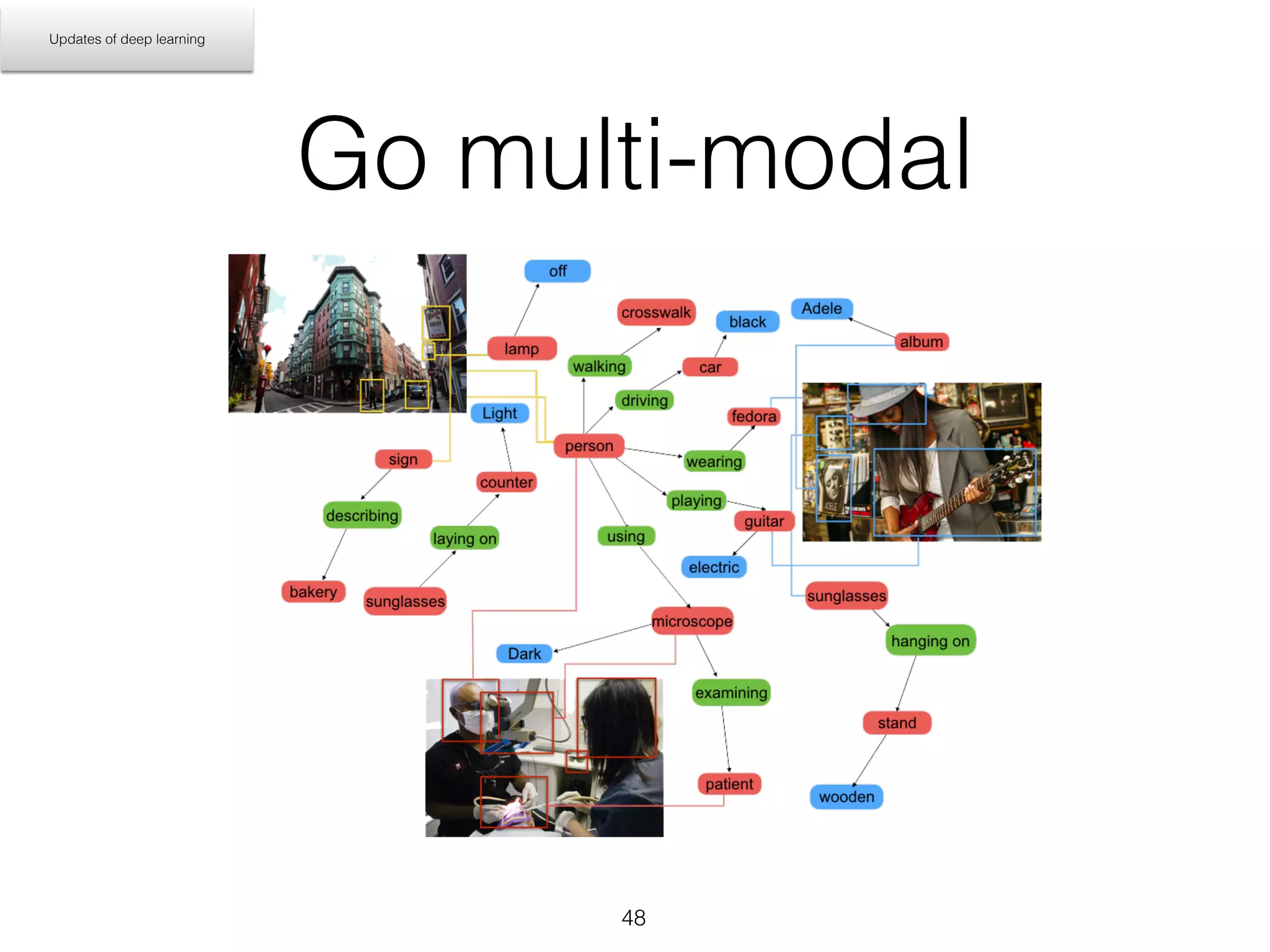

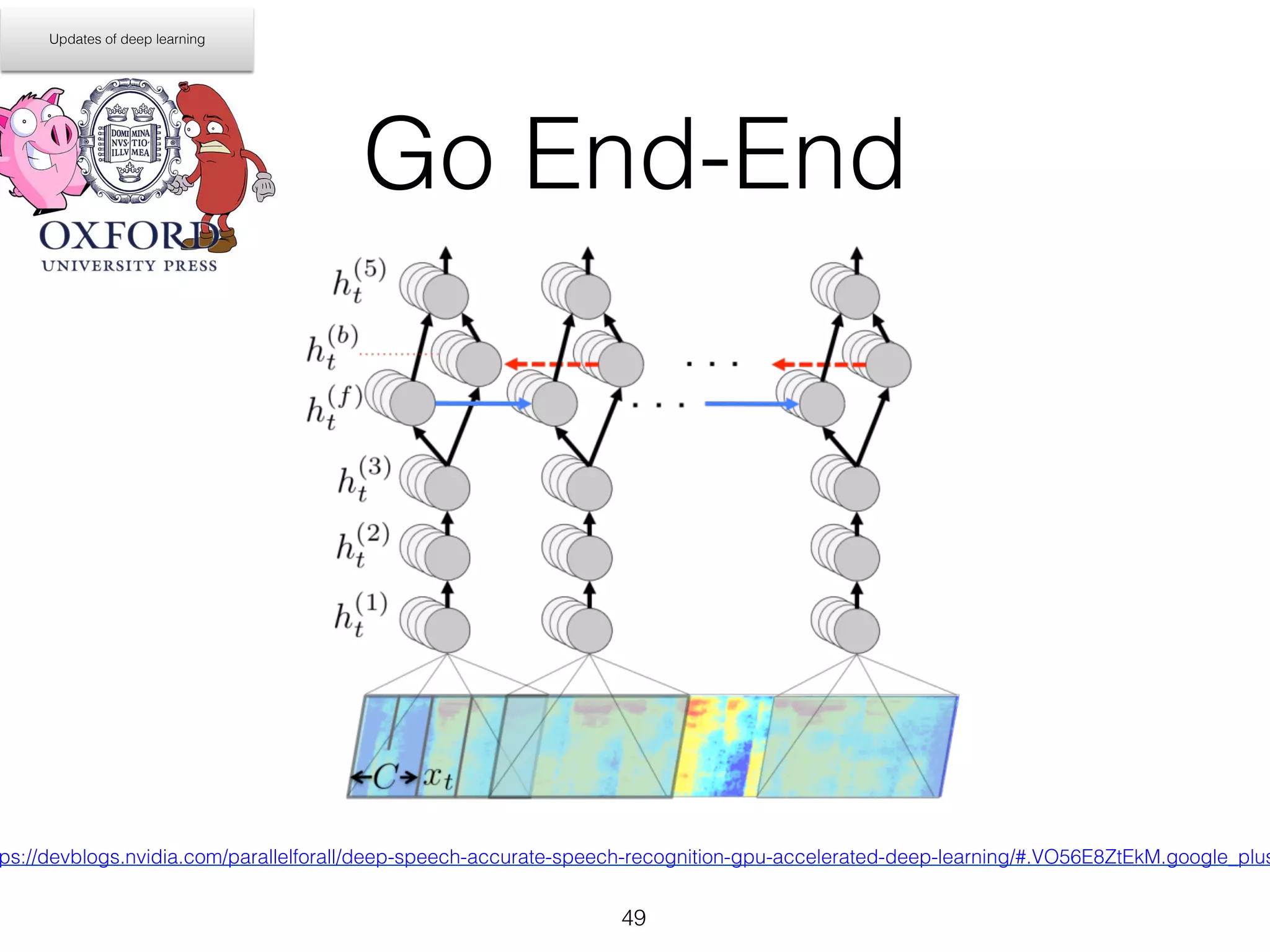

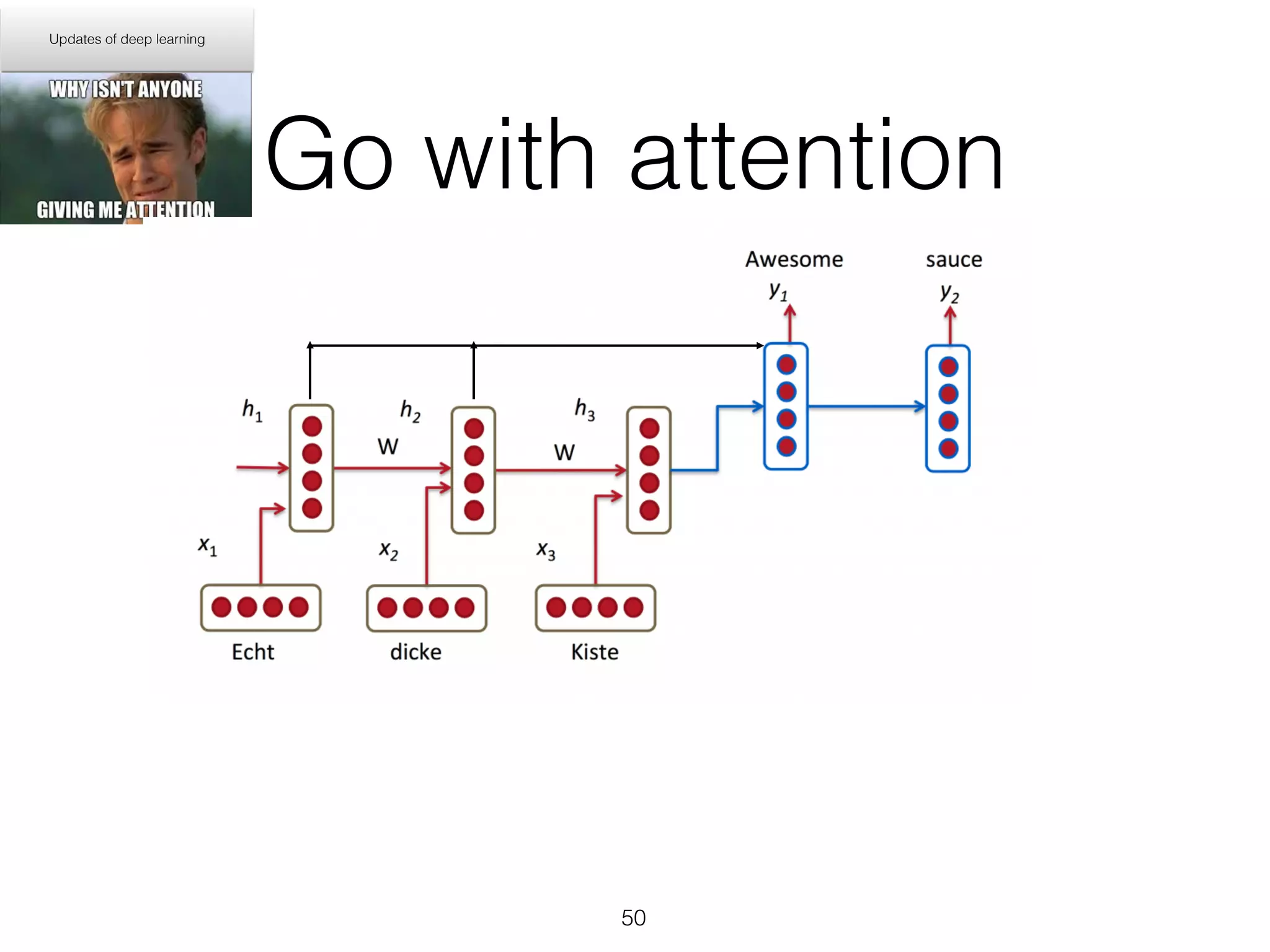

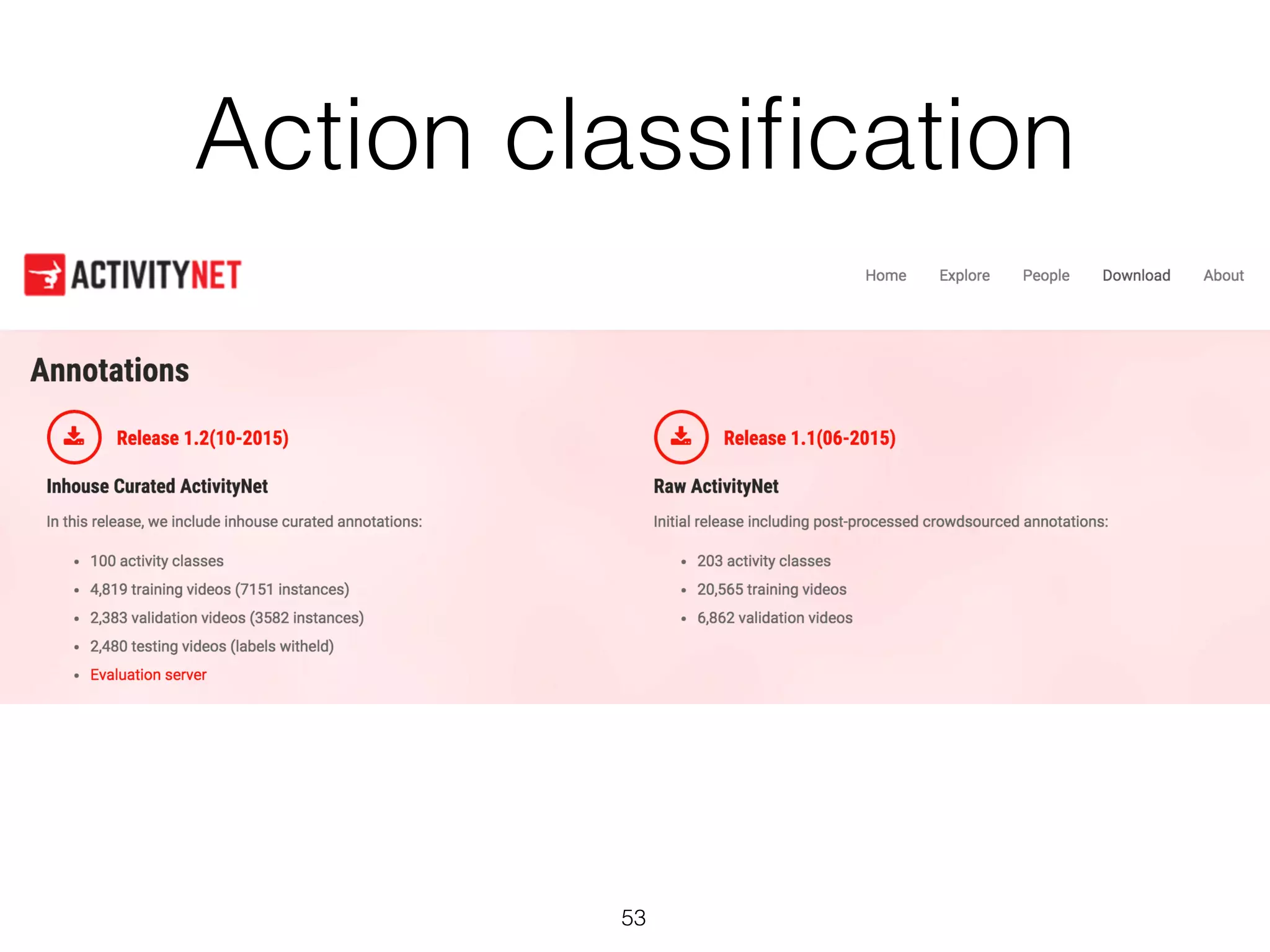

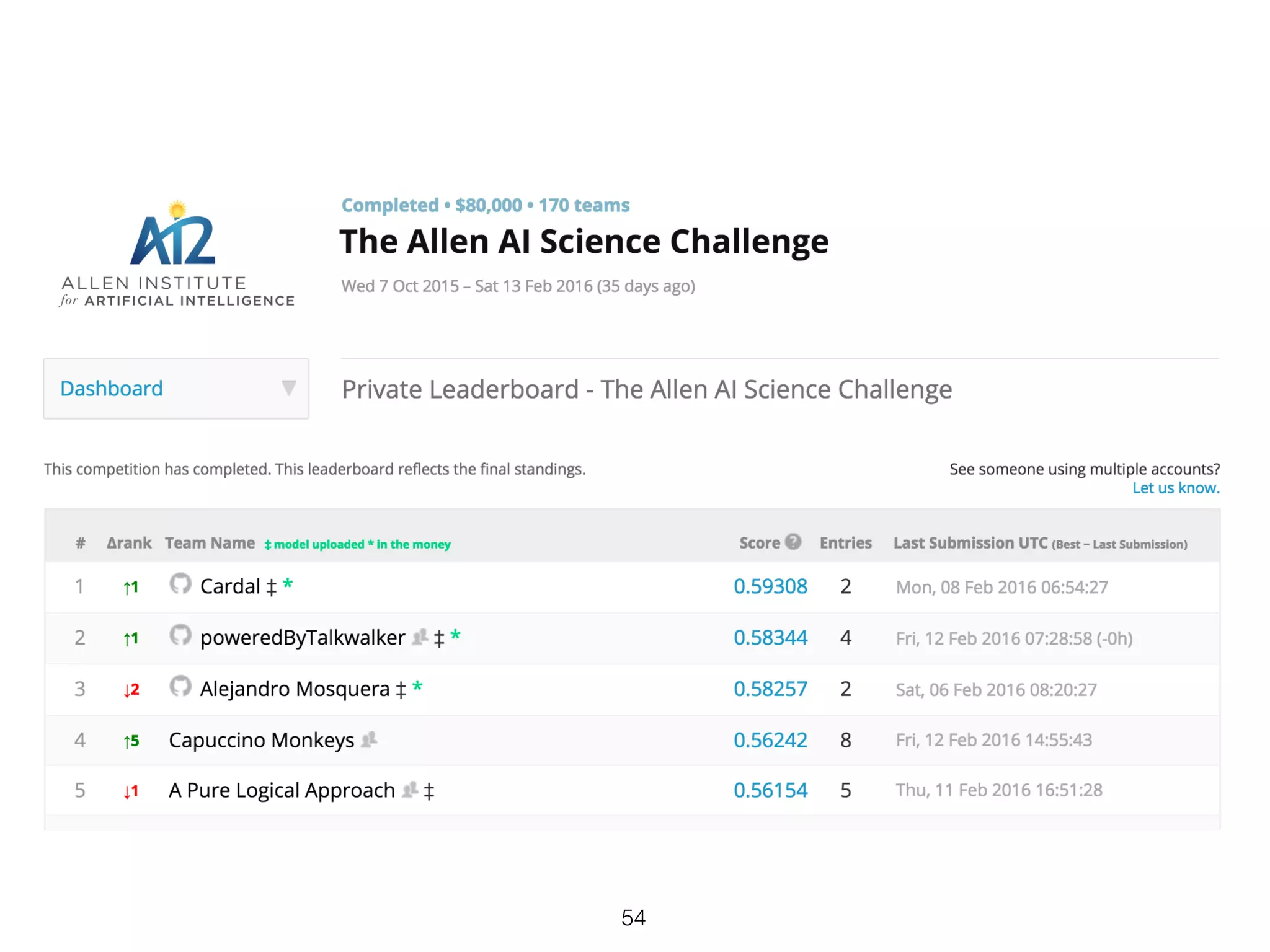

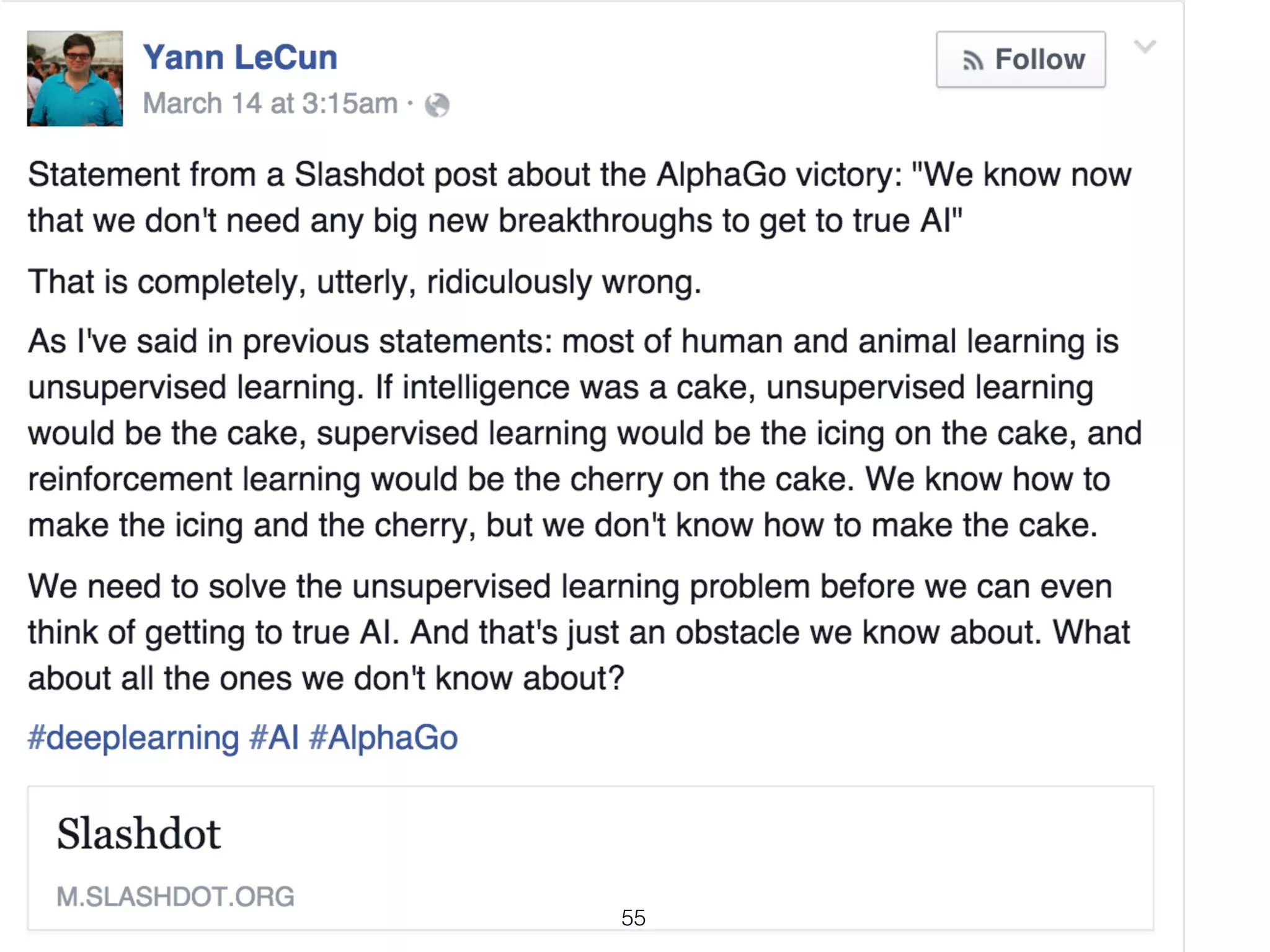

The document gives an overview of deep learning: its historical development, key concepts such as neural networks, and notable figures in the field. It covers techniques such as back-propagation and convolutional networks, alongside related machine-learning methods such as support vector machines, and applications in image recognition and natural language processing. The conclusion argues that deep learning has the potential to transform industries and improve learning processes, while noting the challenges that remain before more advanced AI can be achieved.