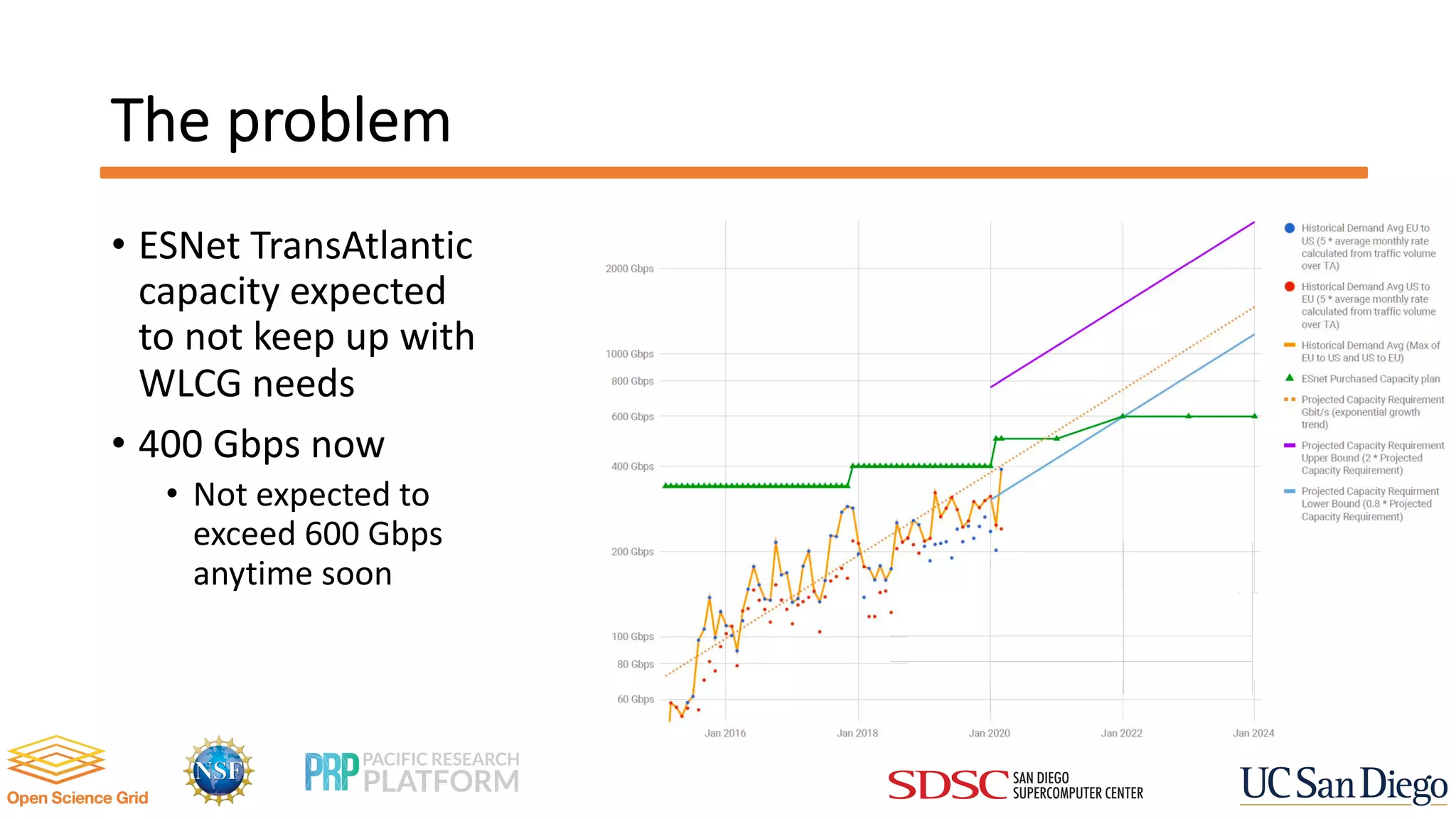

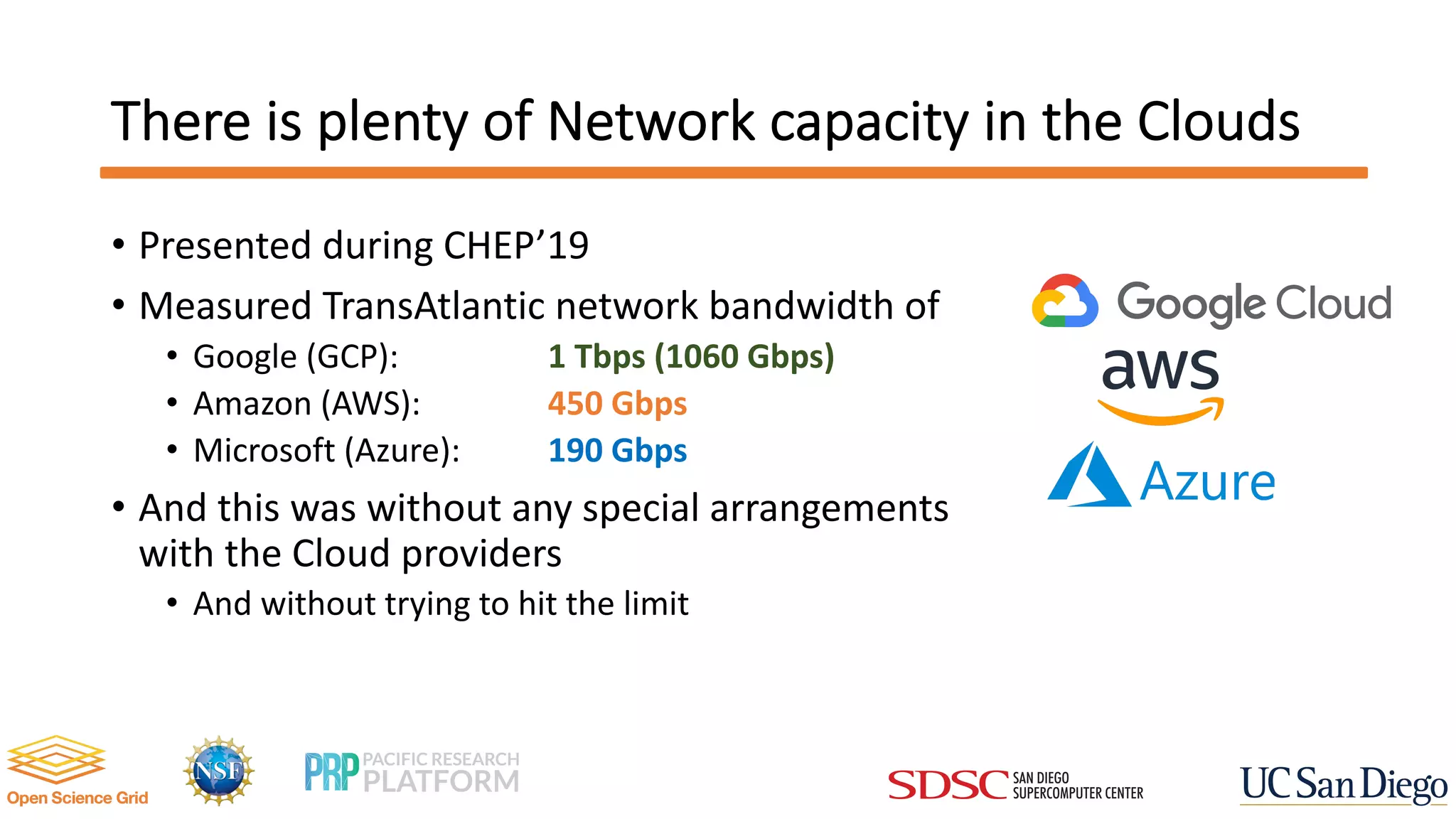

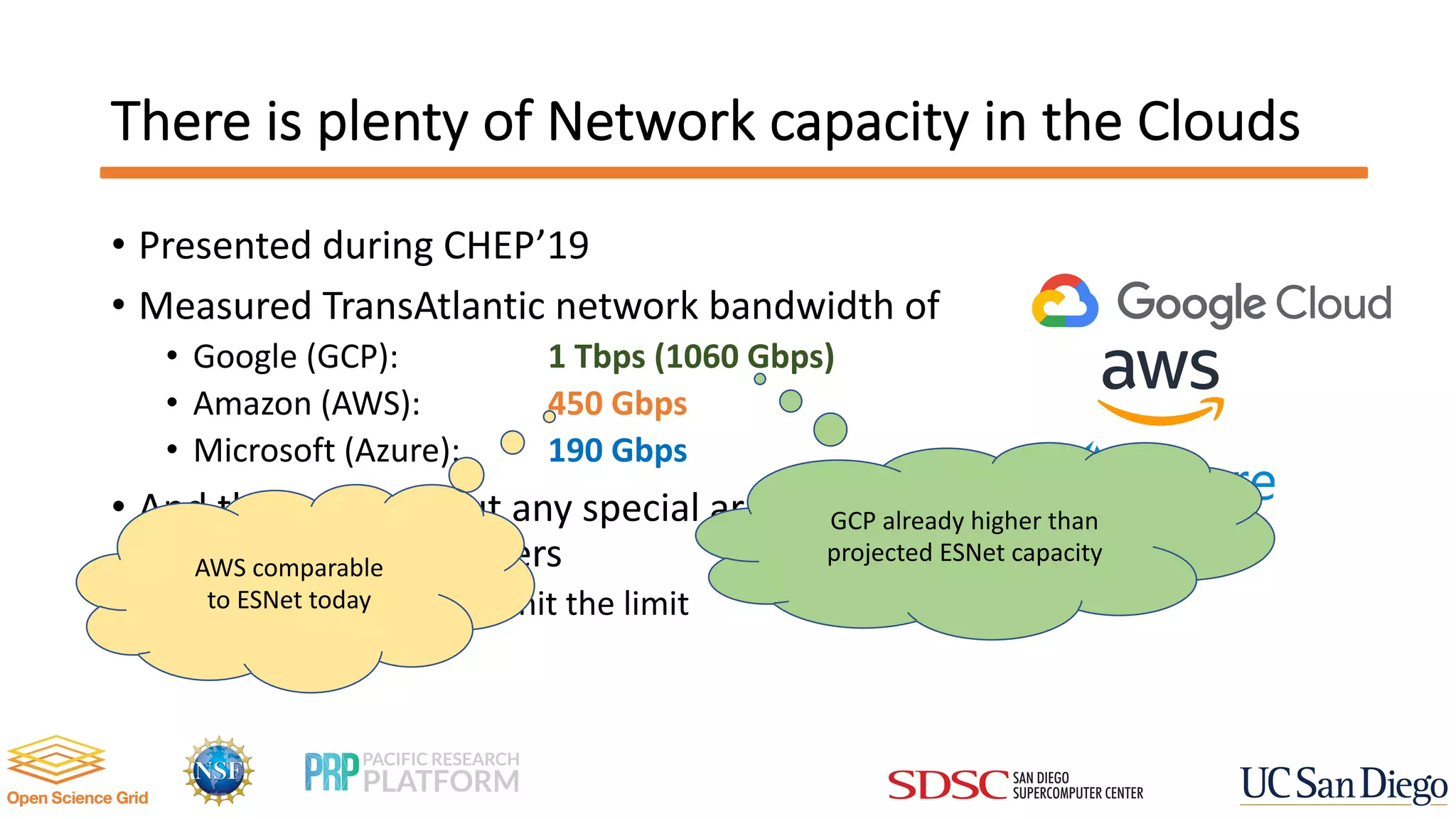

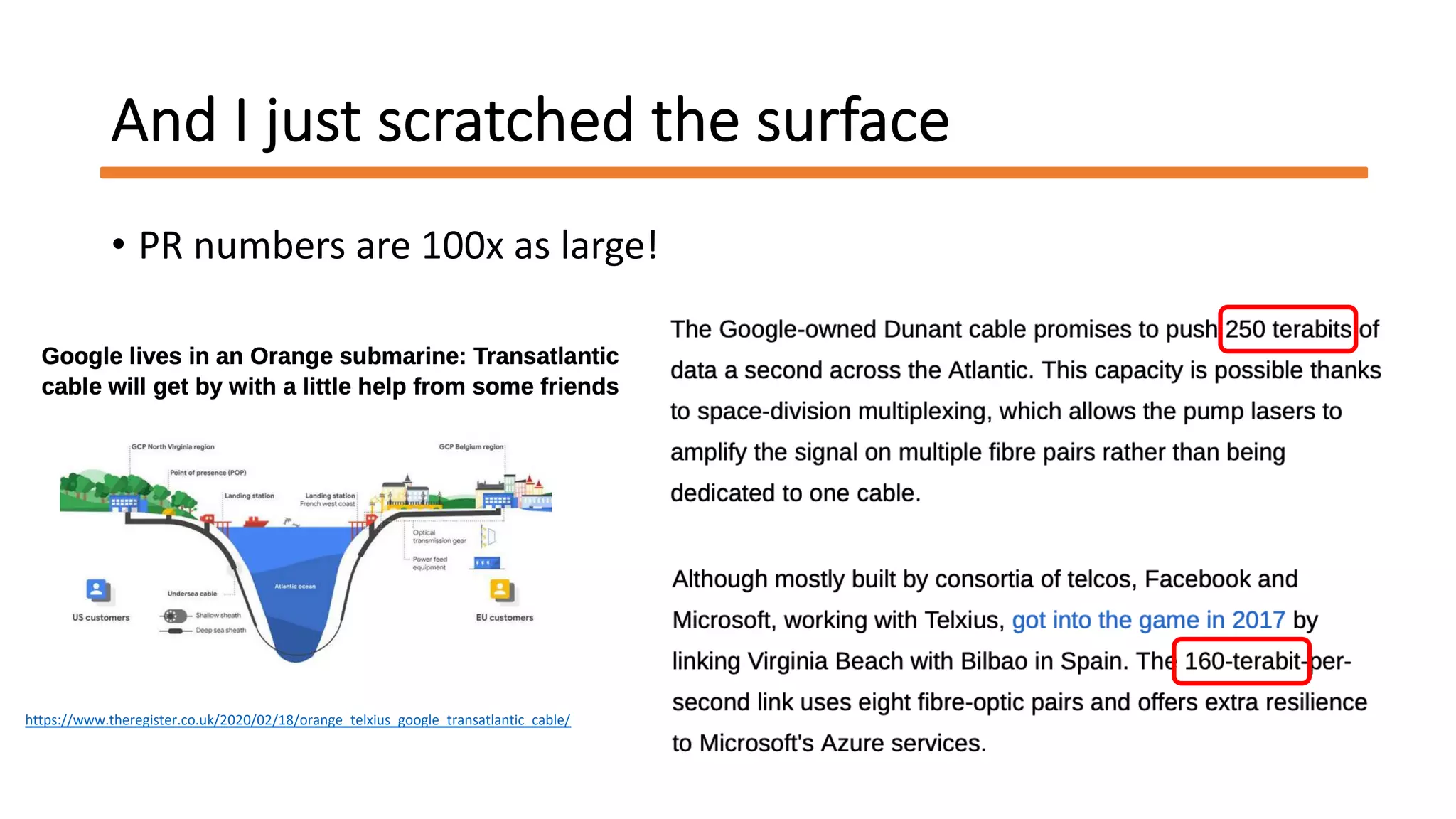

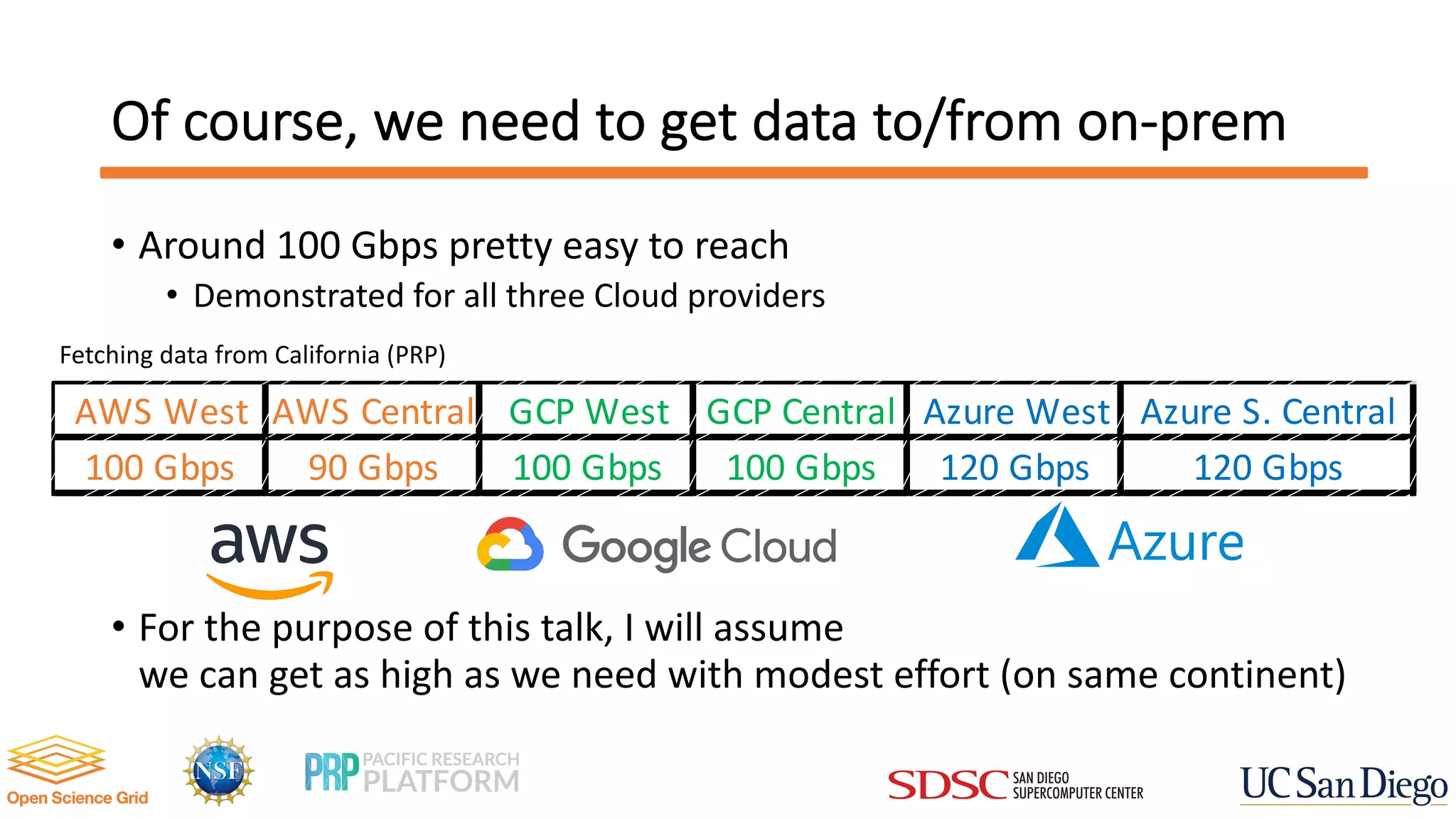

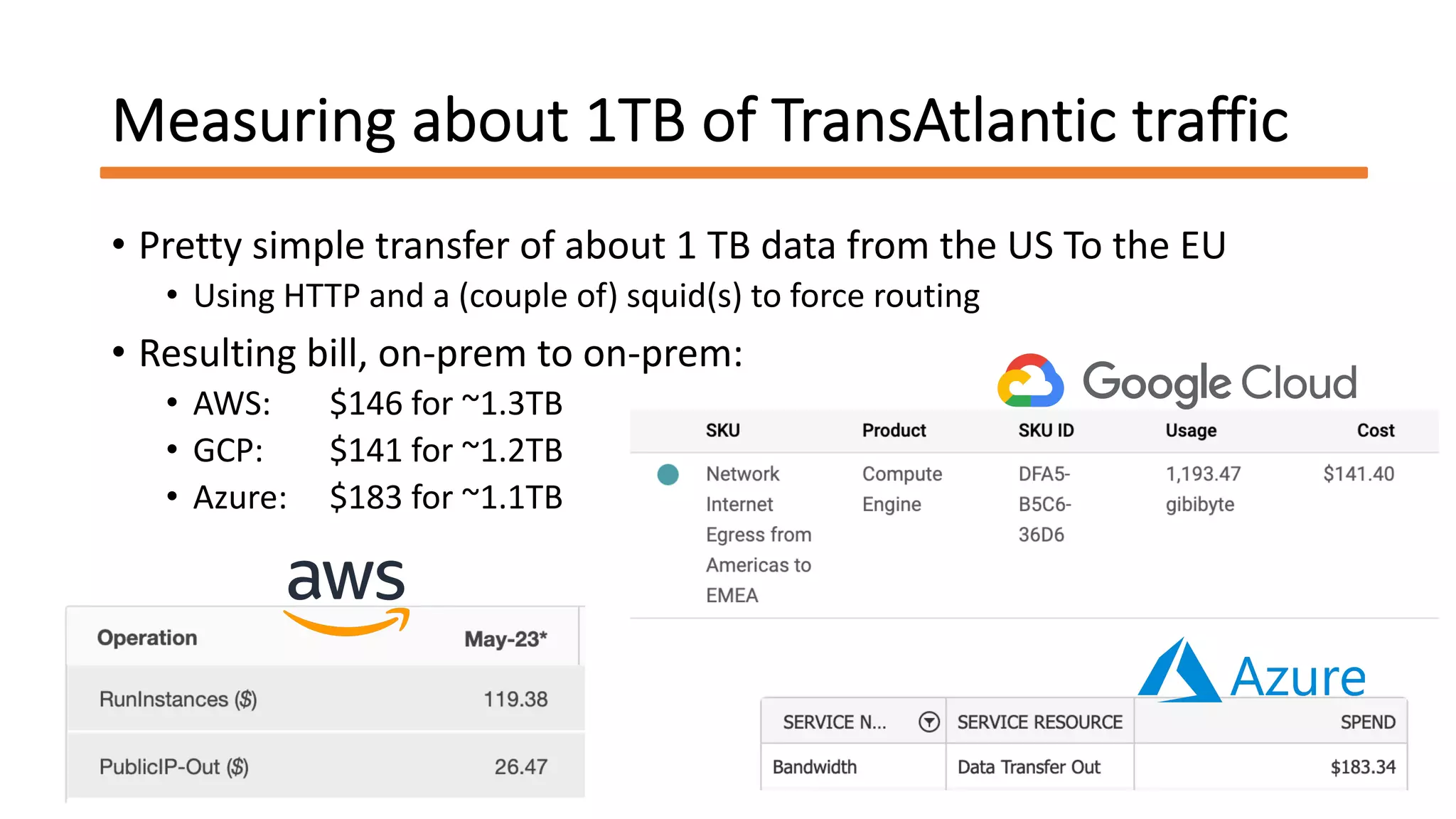

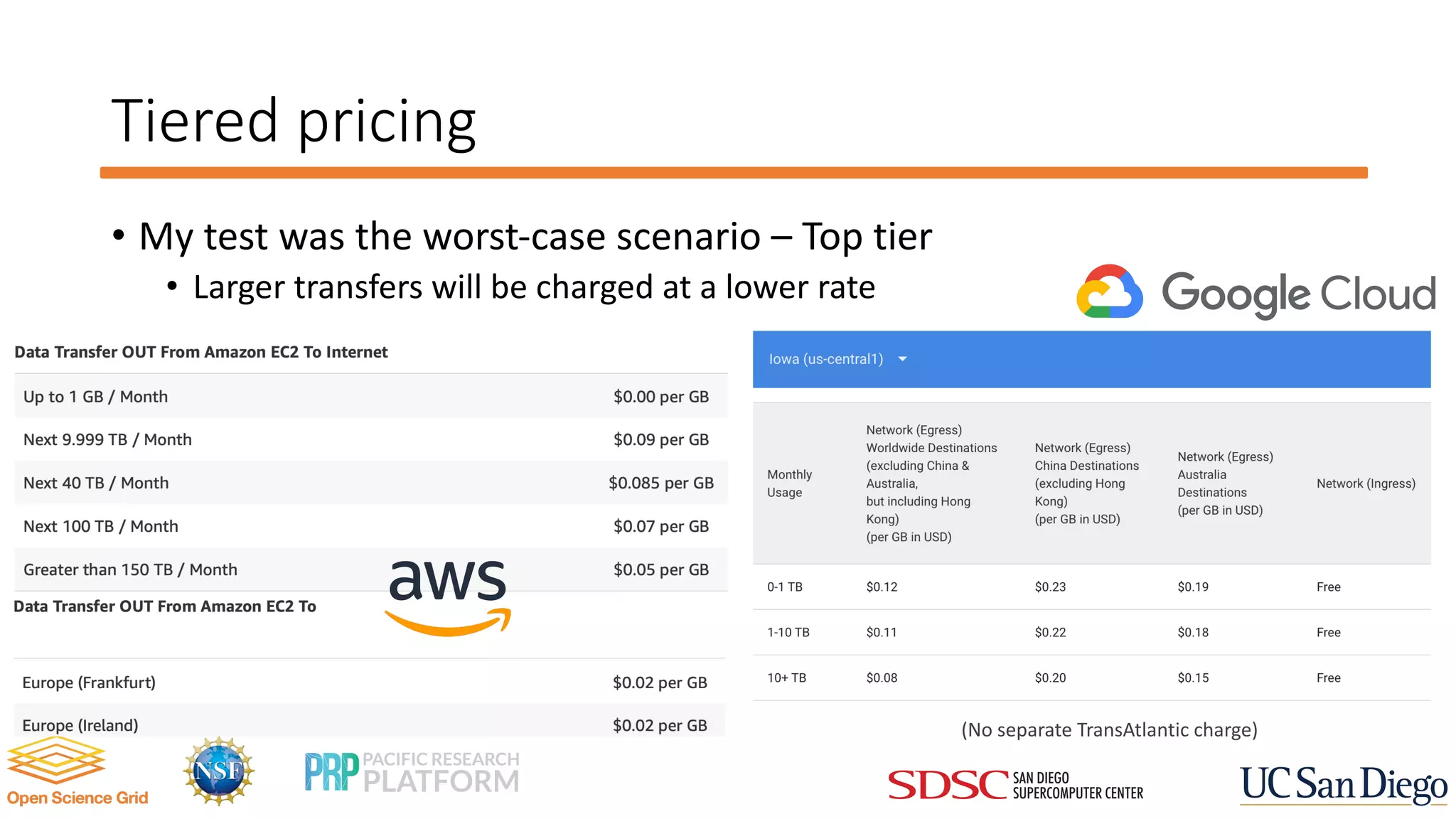

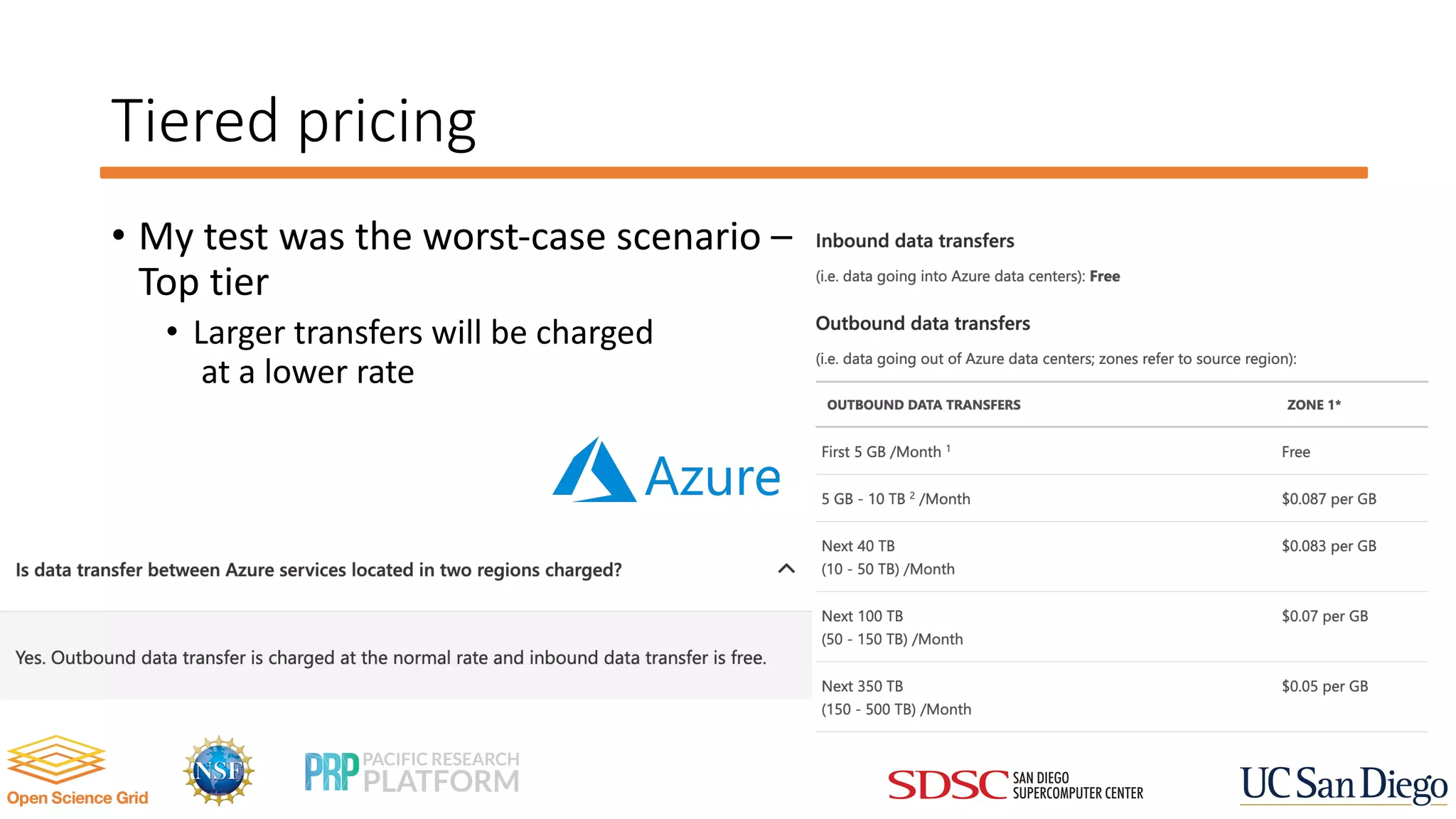

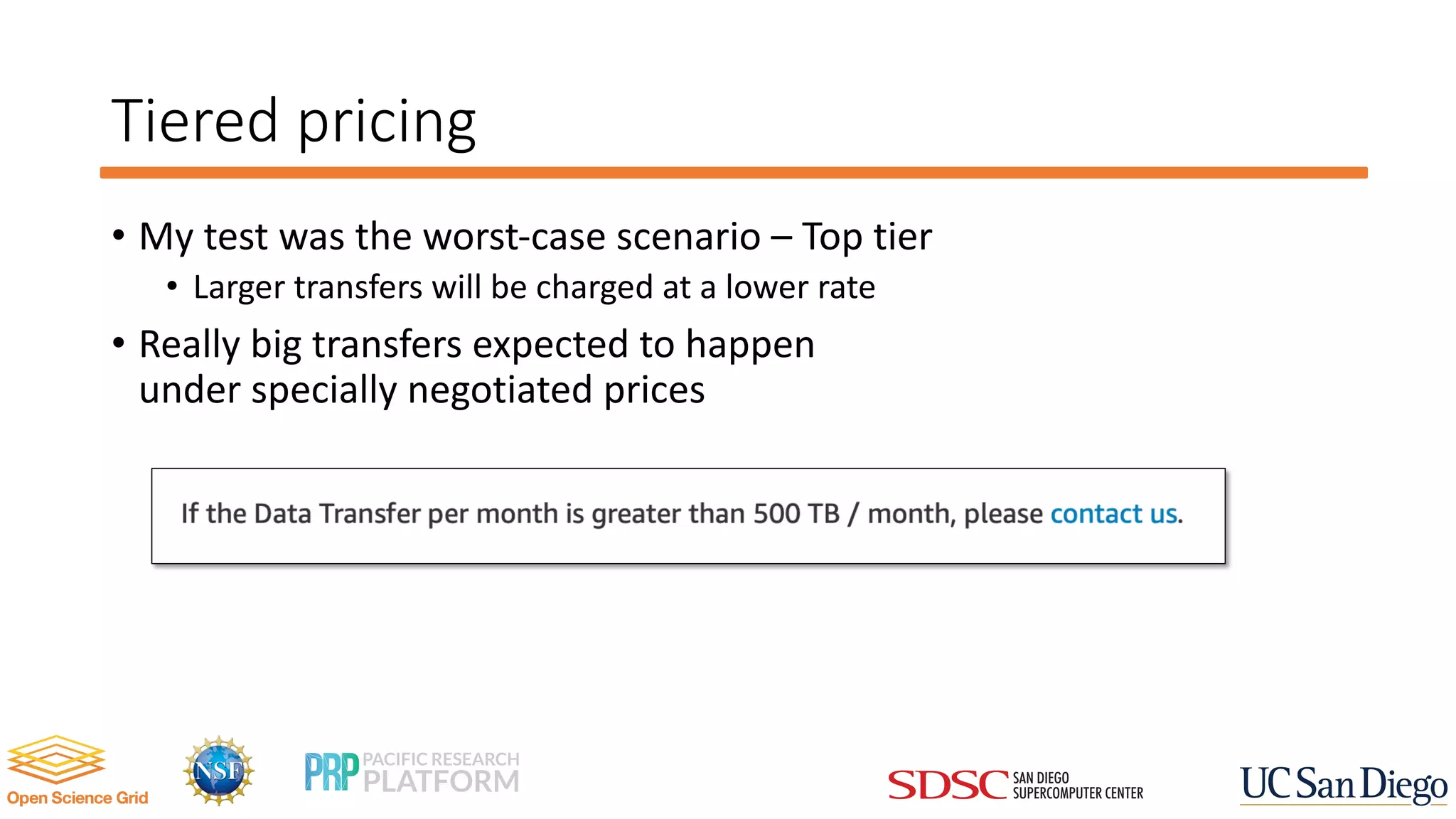

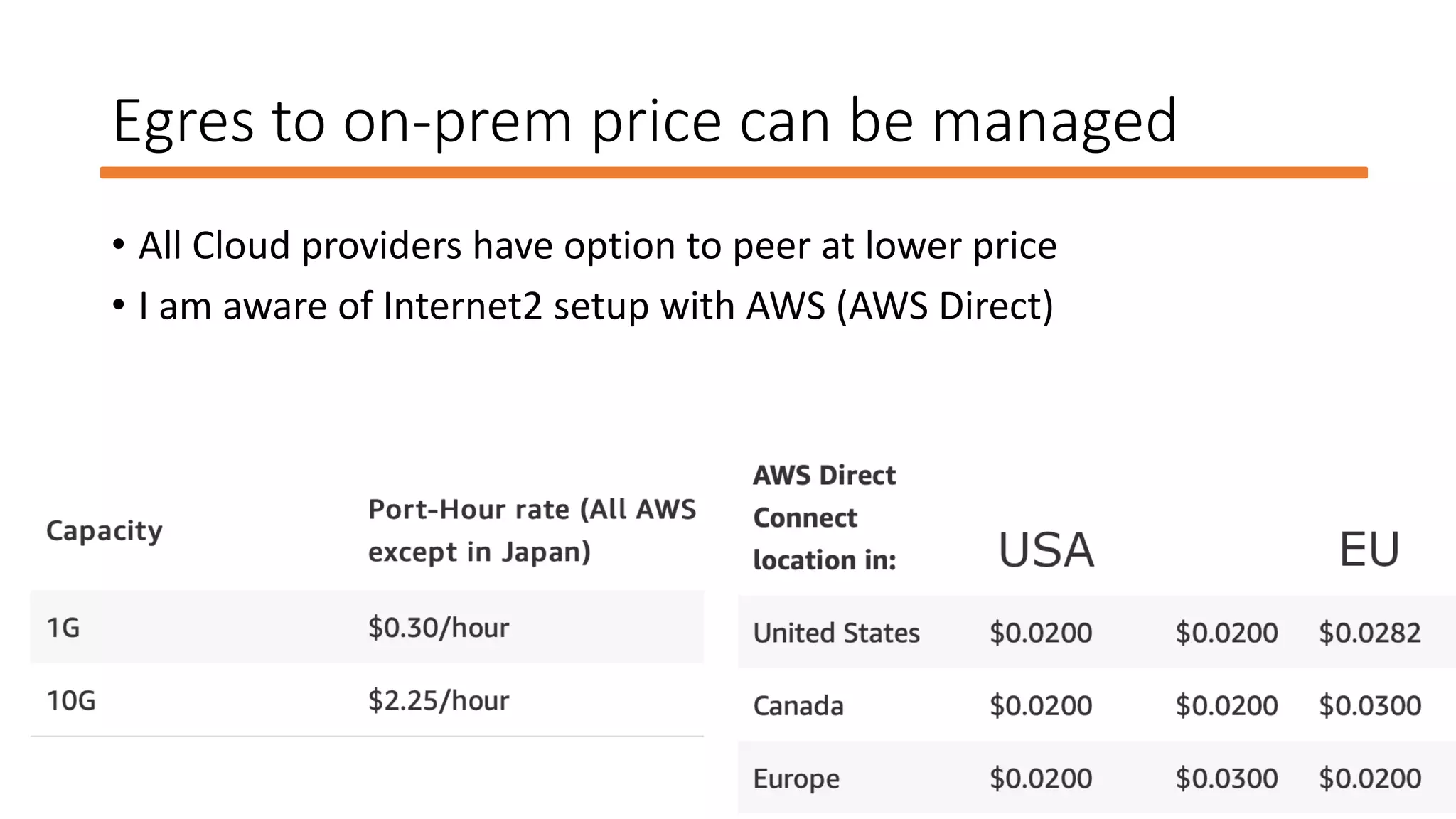

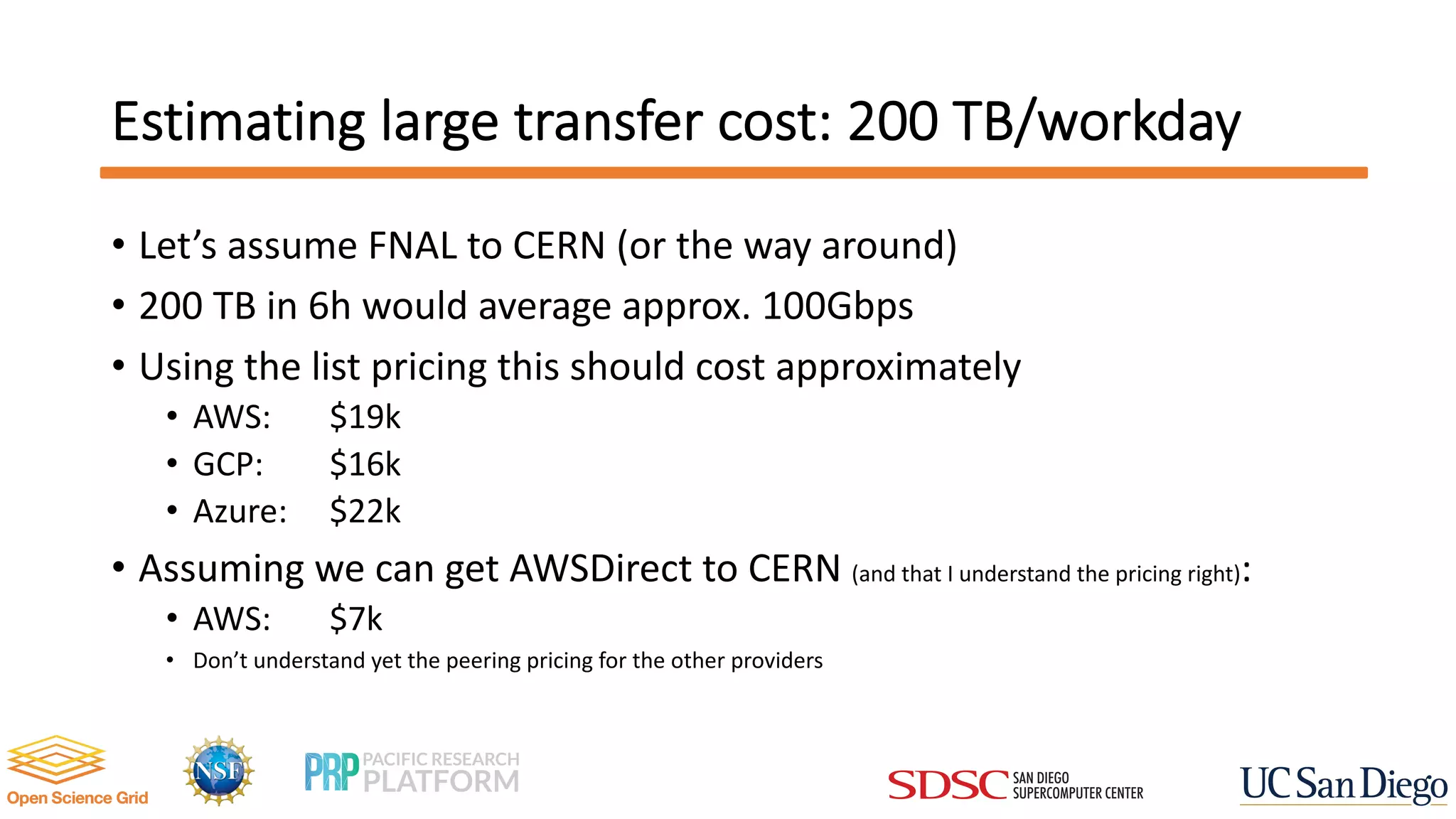

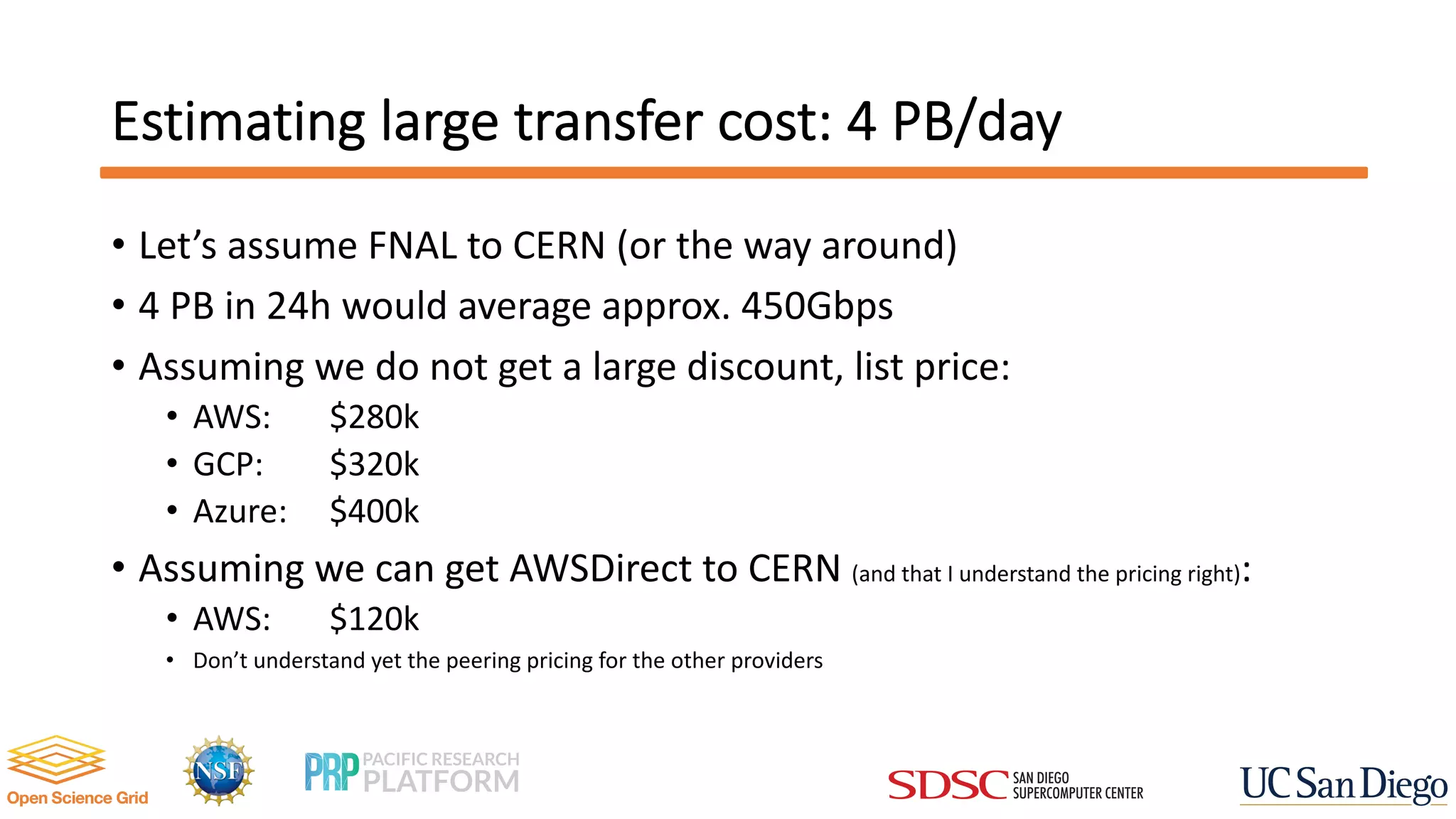

The document discusses the challenges and solutions for transatlantic networking capacity for the WLCG community, whose needs exceed the current ESnet capacity. It highlights the significant bandwidth available through the major cloud providers (Google, Amazon, and Microsoft) and the costs they charge for data transfers. The author suggests a hybrid approach: on-premises resources carry the base load and cloud services absorb burst traffic. The author also acknowledges the need for cost management and the possibility of discounts for larger transfers.
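
To make the hybrid idea concrete, here is a minimal sketch of how a demand profile might be split into an on-premises base load and a cloud burst, with a rough cost estimate for the burst. Everything in it is an assumption for illustration: the function names, the sample demand values, the base capacity, and the per-gigabyte price are hypothetical placeholders, not figures from the slides or from any provider's price list.

```python
def split_base_and_burst(demand_gbps, base_capacity_gbps):
    """Split each demand sample into the part carried on-premises
    and the overflow that would be sent through a cloud provider."""
    base = [min(d, base_capacity_gbps) for d in demand_gbps]
    burst = [max(d - base_capacity_gbps, 0.0) for d in demand_gbps]
    return base, burst


def burst_cost(burst_gbps, interval_seconds, price_per_gb):
    """Estimate the transfer cost of the burst traffic.

    Each sample is an average rate in Gbit/s held for interval_seconds.
    Gbit/s * seconds / 8 gives the volume in (decimal) gigabytes.
    """
    total_gb = sum(rate * interval_seconds / 8.0 for rate in burst_gbps)
    return total_gb * price_per_gb


if __name__ == "__main__":
    # Hypothetical hourly demand samples in Gbit/s (placeholder values).
    demand = [50.0, 120.0, 80.0, 200.0]
    # Hypothetical on-premises link capacity in Gbit/s.
    capacity = 100.0
    # Hypothetical flat price per GB; real pricing is tiered and may be discounted.
    price = 0.01

    base, burst = split_base_and_burst(demand, capacity)
    print("base load (Gbit/s):", base)
    print("burst (Gbit/s):", burst)
    print("estimated burst cost:", burst_cost(burst, 3600, price))
```

A flat per-gigabyte price is the simplest possible model. Volume discounts for larger transfers, which the summary mentions, would replace `price_per_gb` with a tiered function of the total volume.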