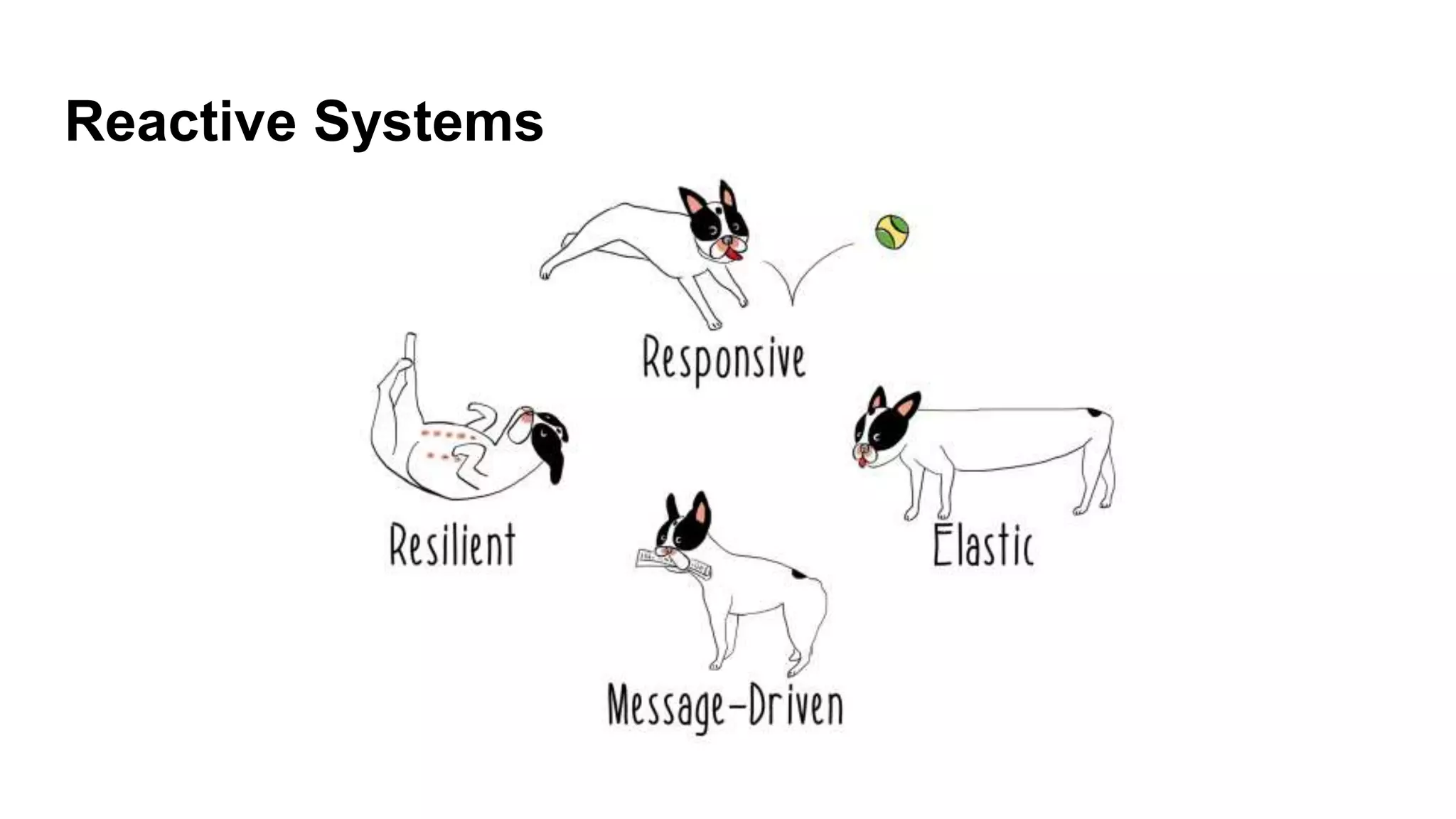

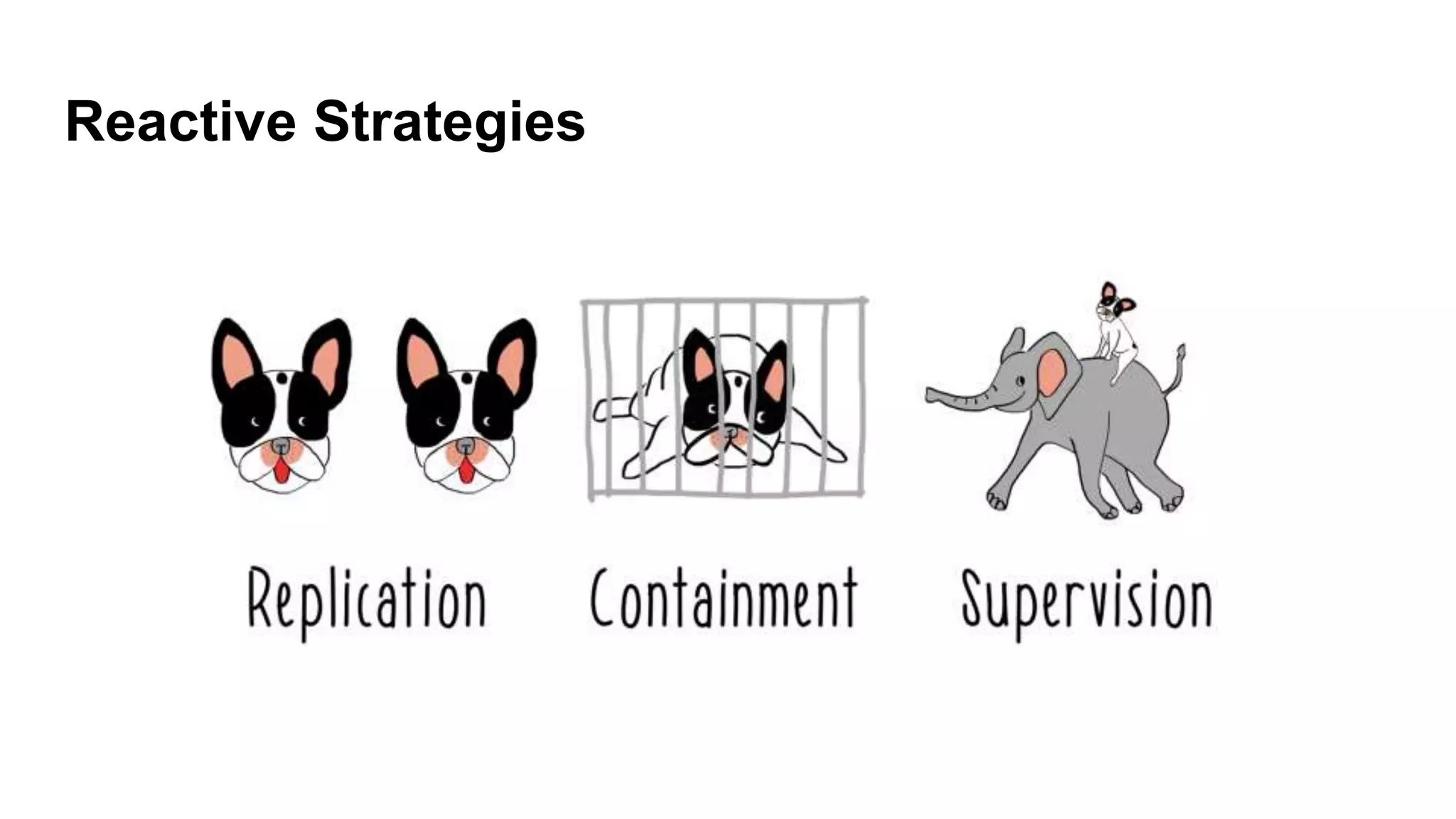

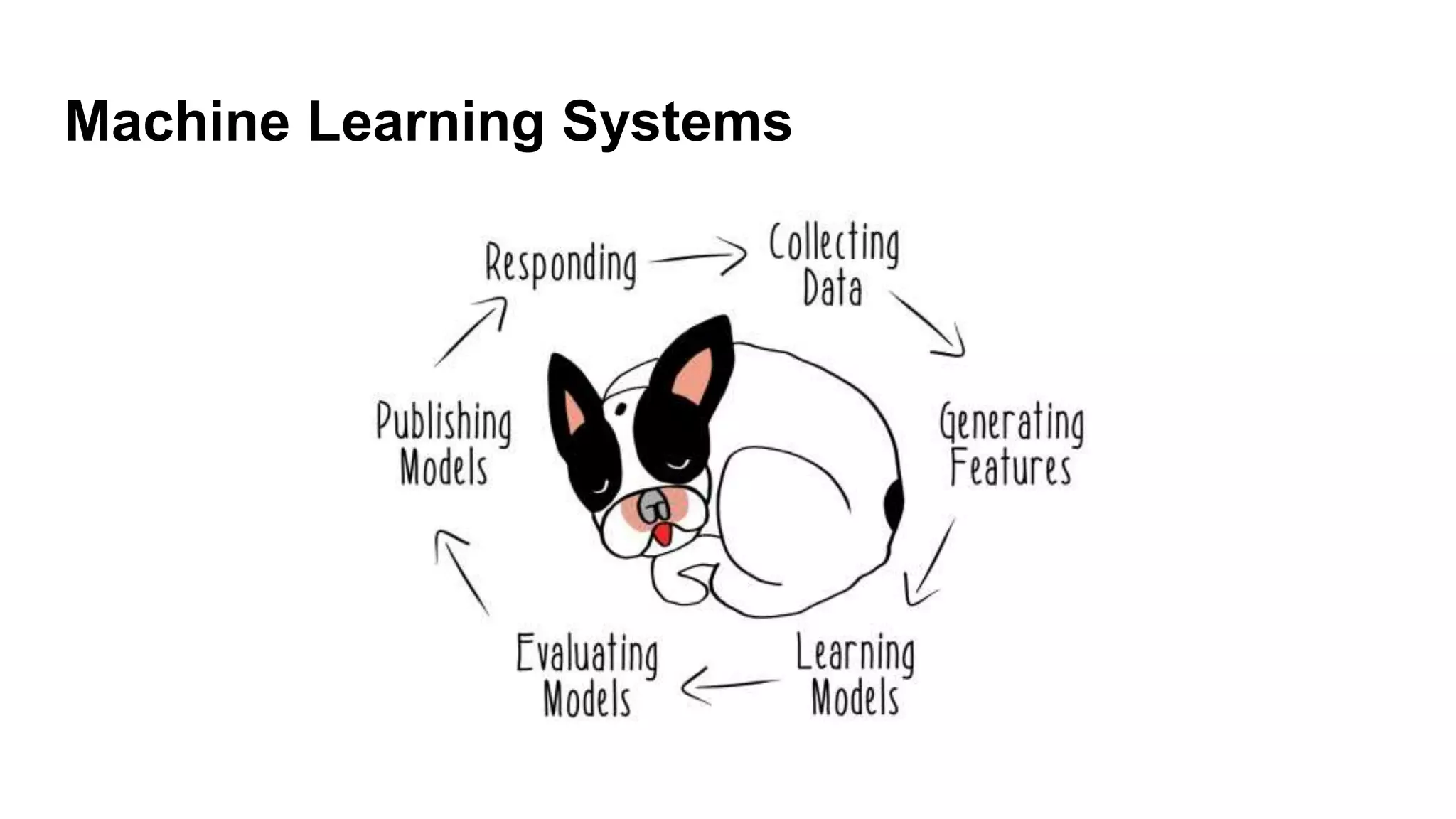

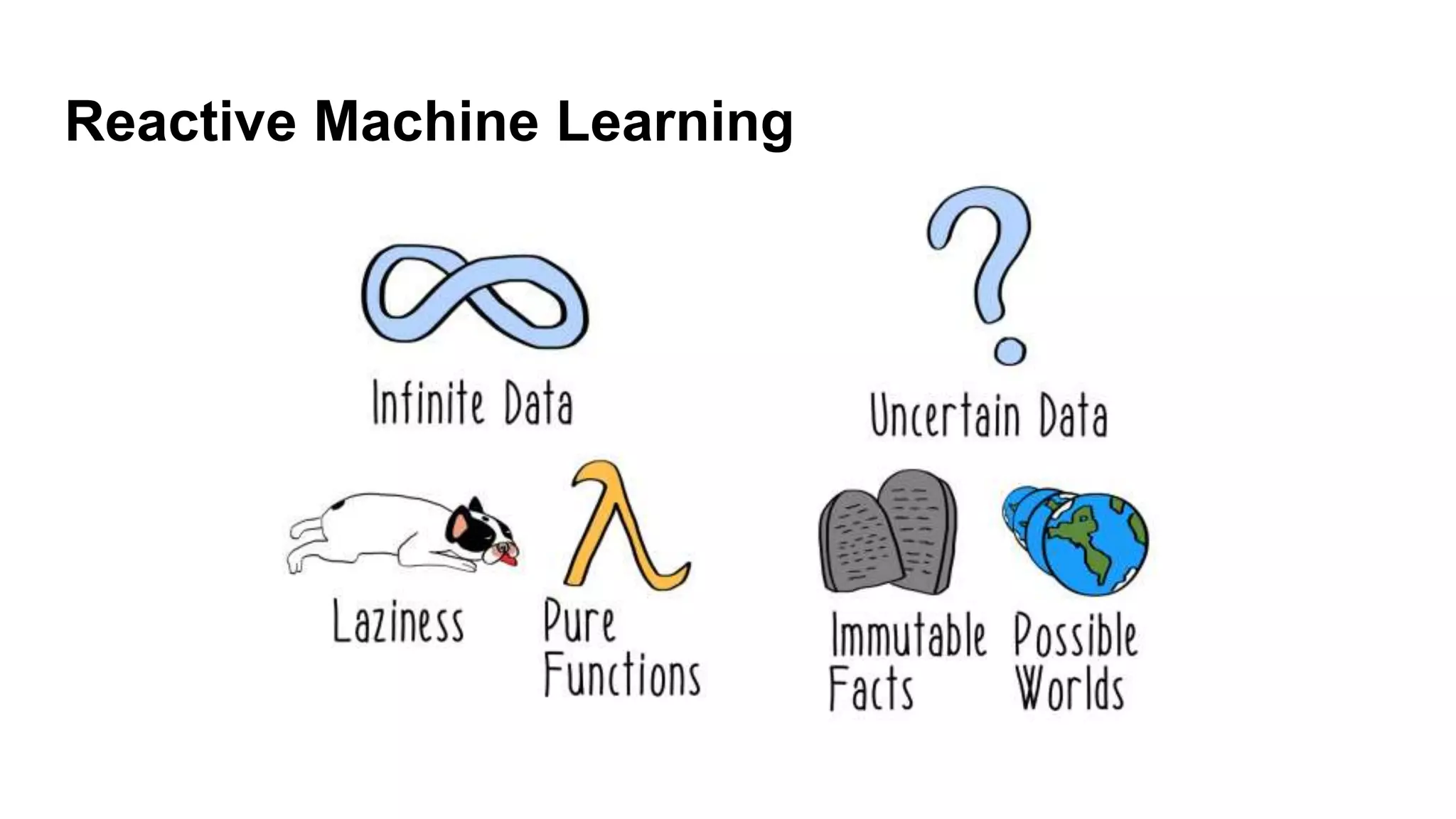

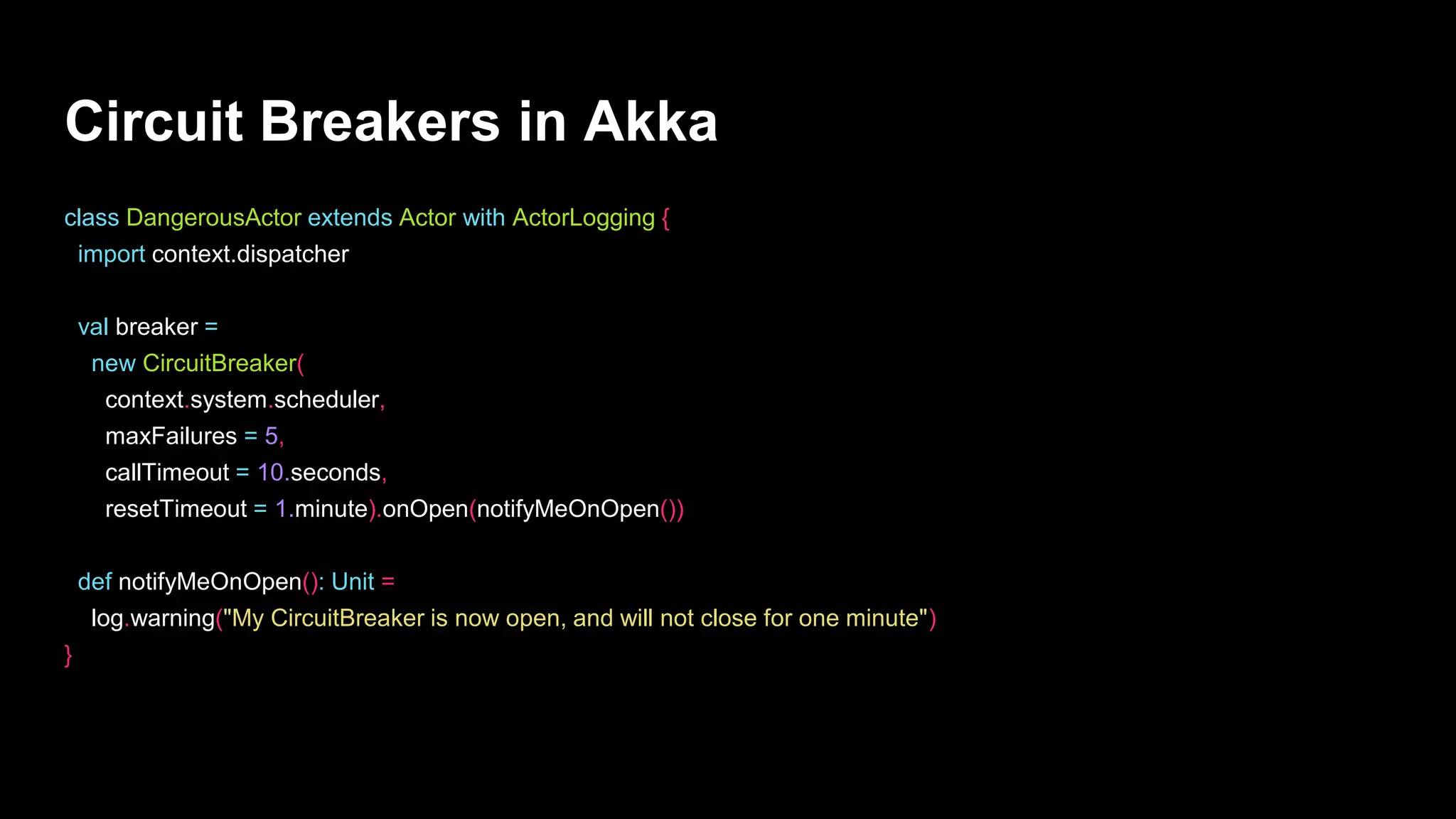

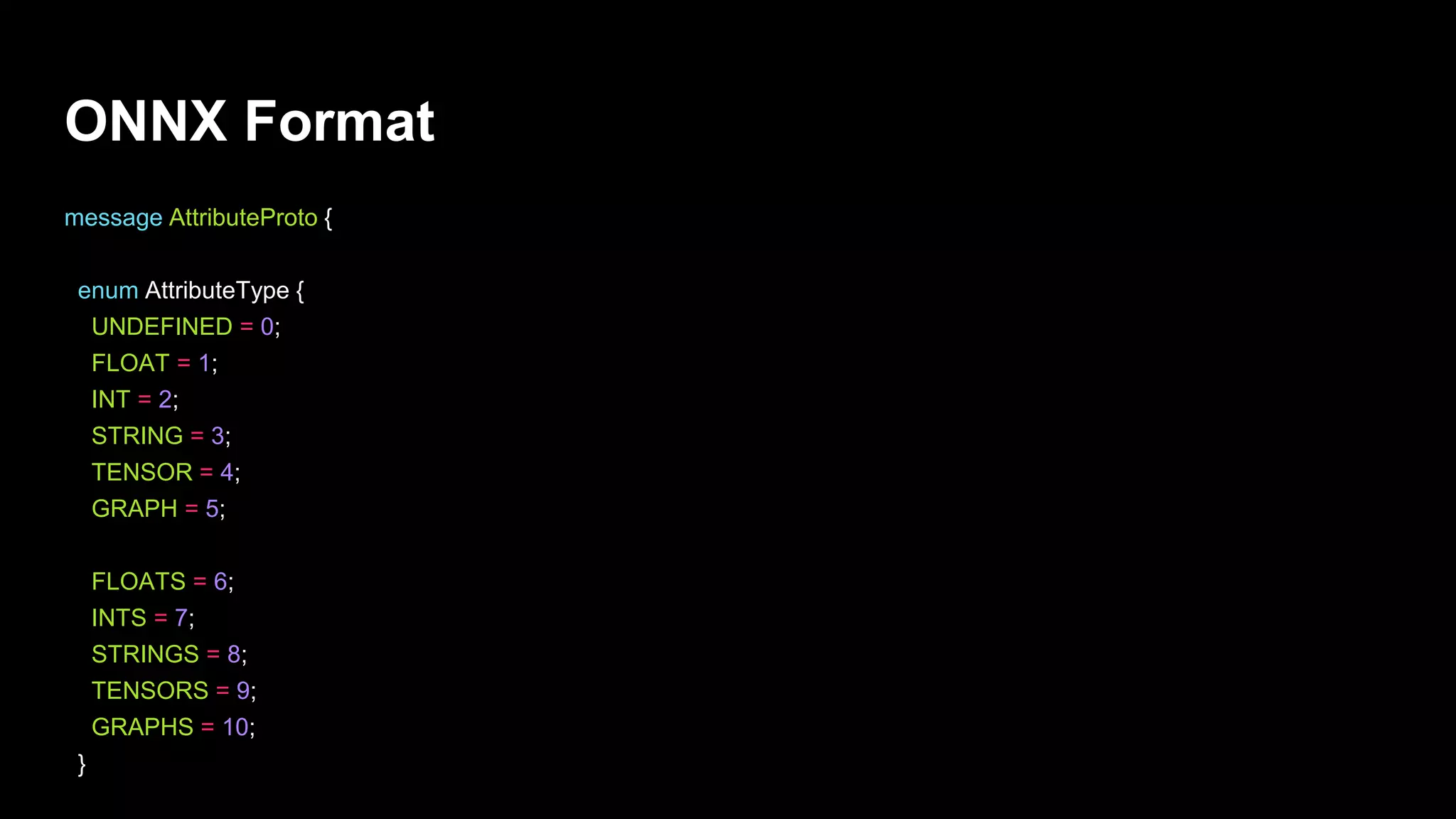

The document surveys tools and techniques for building reactive machine learning systems in several languages and frameworks, including Scala (Akka HTTP, http4s, Spark MLlib), Python, and Elixir/Erlang. It covers model serialization, containerized model microservices, traffic splitting between models, and circuit breakers, and argues that reactivity and adaptability are essential properties of machine learning applications. The author also advocates open standards such as ONNX, and a diversity of tools for tackling the field's hard problems.

![Model Microservice in Akka HTTP

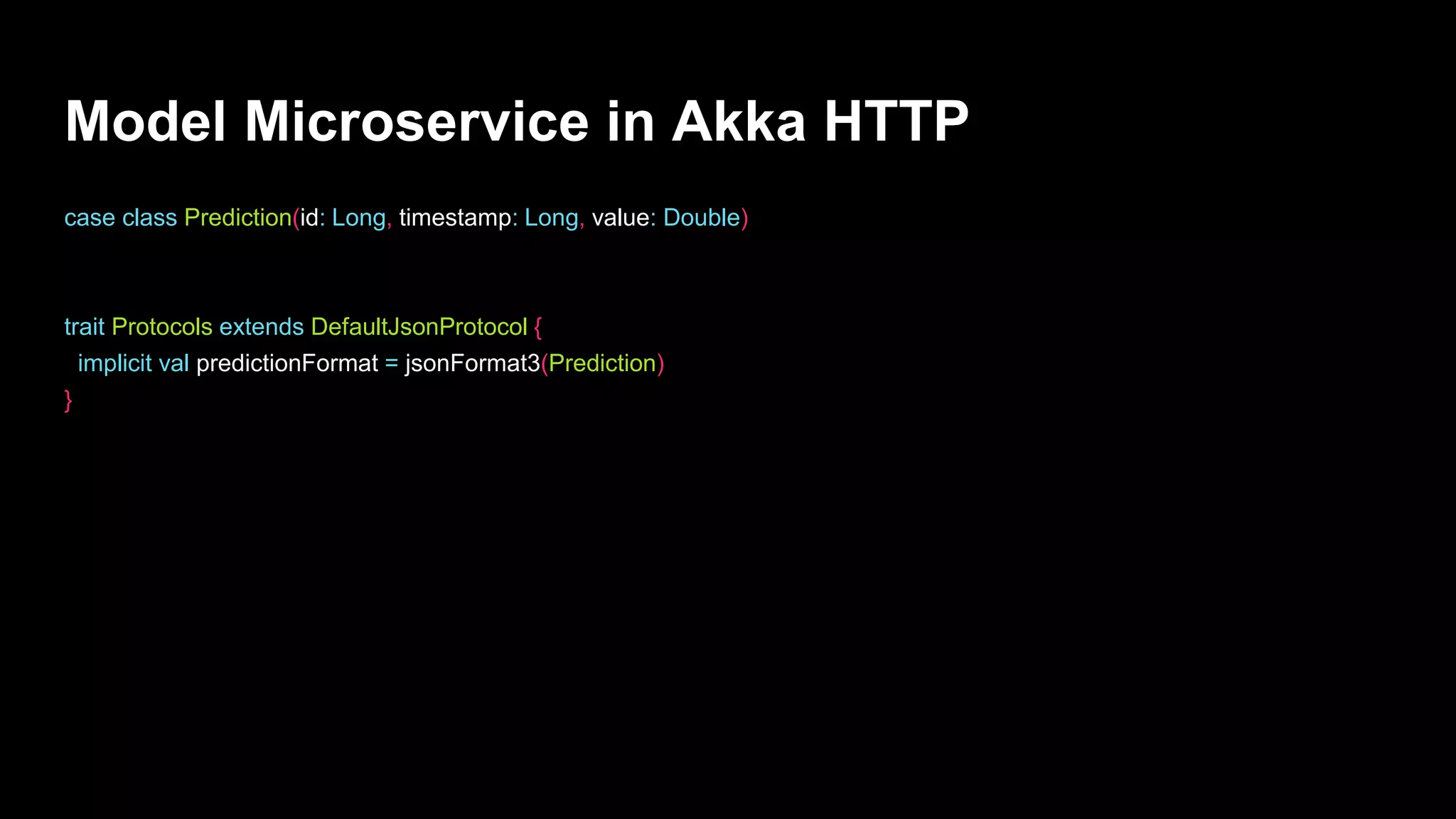

def model(features: Map[Char, Double]) = {

val coefficients = ('a' to 'z').zip(1 to 26).toMap

val predictionValue = features.map {

case (identifier, value) =>

coefficients.getOrElse(identifier, 0) * value

}.sum / features.size

Prediction(Random.nextLong(), System.currentTimeMillis(), predictionValue)

}

def parseFeatures(features: String): Map[String, Double] = {

features.parseJson.convertTo[Map[Char, Double]]

}

def predict(features: String): Prediction = {

model(parseFeatures(features))

}](https://image.slidesharecdn.com/toolsforrml-180528211629/75/Tools-for-Making-Machine-Learning-more-Reactive-16-2048.jpg)
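The slide's scoring logic can be run on the standard library alone once the spray-json and Akka HTTP pieces are removed. `Prediction` below is a hypothetical stand-in, because its definition isn't shown. Worked example: for `{'a' -> 1.0, 'c' -> 2.0}`, the coefficient-weighted terms are 1 × 1.0 and 3 × 2.0. They sum to 7.0, and dividing by the 2 features gives 3.5. One assumption departs from the slide: an empty feature map returns 0.0 instead of NaN (0.0 / 0).

```scala
import scala.util.Random

// Hypothetical stand-in for the slide's Prediction type (not shown on the slide).
final case class Prediction(id: Long, timestamp: Long, value: Double)

object ToyModel {
  // Same coefficients as the slide: 'a' -> 1, 'b' -> 2, ..., 'z' -> 26.
  val coefficients: Map[Char, Int] = ('a' to 'z').zip(1 to 26).toMap

  // Average of coefficient-weighted feature values; unrecognized features get weight 0.
  def score(features: Map[Char, Double]): Double =
    if (features.isEmpty) 0.0 // assumption: avoid 0.0 / 0 (NaN) on empty input
    else features.map { case (id, value) => coefficients.getOrElse(id, 0) * value }.sum / features.size

  // Wraps the score with a random id and a timestamp, as the slide does.
  def predict(features: Map[Char, Double]): Prediction =
    Prediction(Random.nextLong(), System.currentTimeMillis(), score(features))
}
```

Keeping `score` pure and separate from `predict` means the arithmetic can be checked without the random id or the clock.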

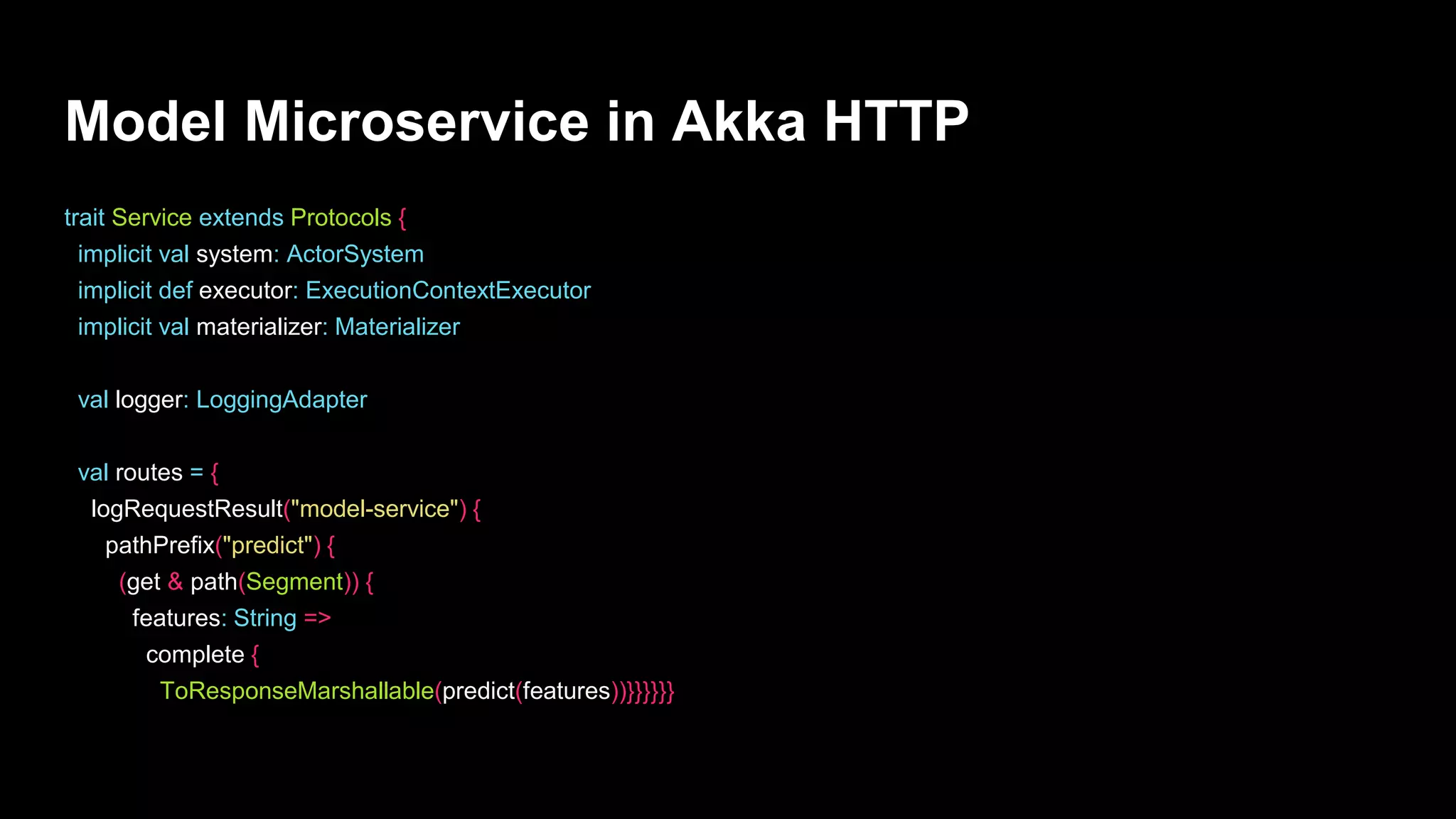

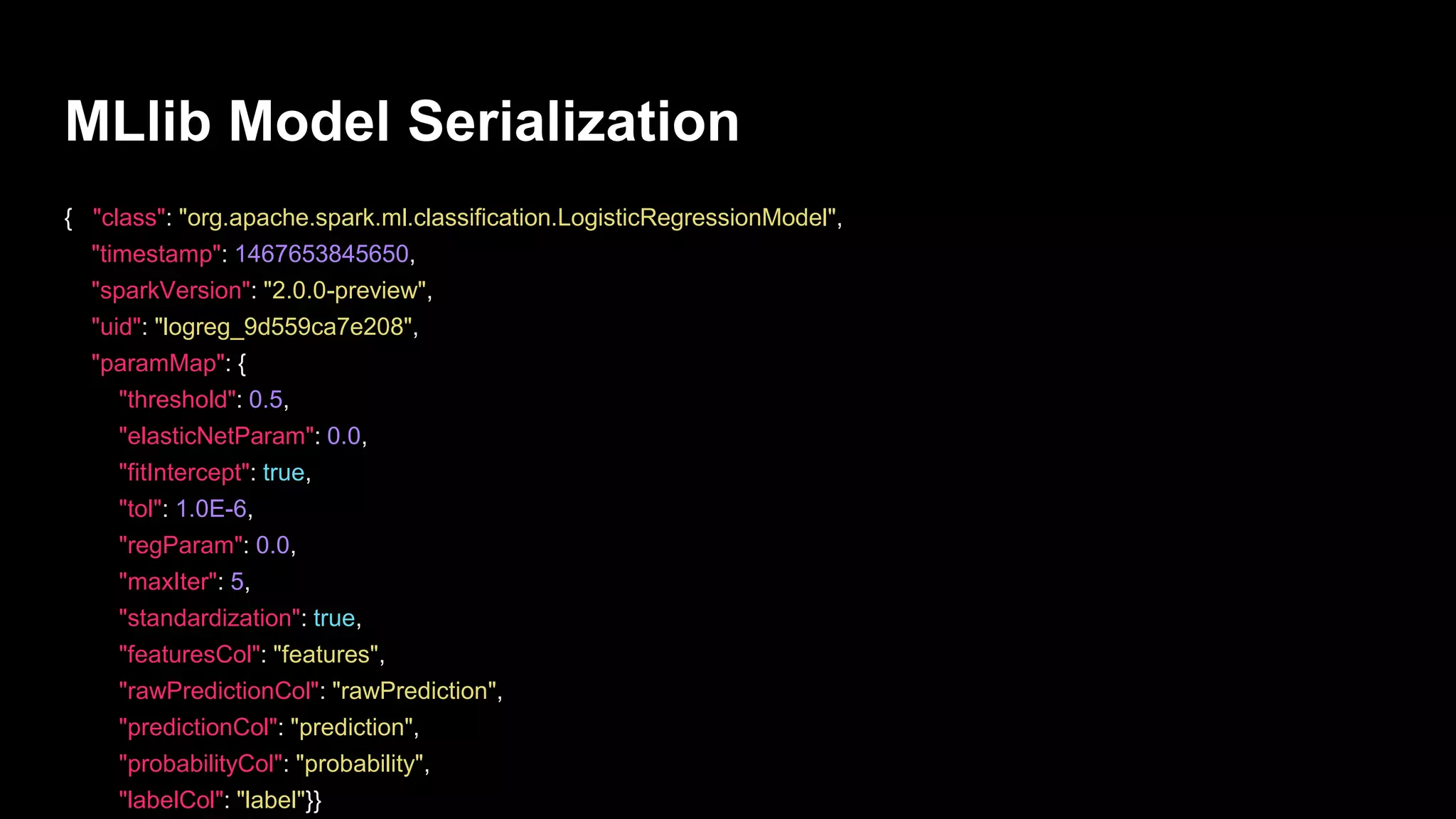

![MLlib Model Serialization
{
  "class": "org.apache.spark.ml.PipelineModel",
  "timestamp": 1467653845388,
  "sparkVersion": "2.0.0-preview",
  "uid": "pipeline_6b4fb08e8fb0",
  "paramMap": {
    "stageUids": ["quantileDiscretizer_d3996173db25",
      "vecAssembler_2bdcd79fe1cf",
      "logreg_9d559ca7e208"]
  }
}](https://image.slidesharecdn.com/toolsforrml-180528211629/75/Tools-for-Making-Machine-Learning-more-Reactive-20-2048.jpg)
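This metadata lists the pipeline's stages by uid, in pipeline order. Spark ML builds uids from a stage prefix, an underscore, and 12 hex characters (`Identifiable.randomUID`), so the kind of each stage can be read directly from the list. Below is a minimal standard-library sketch. The object and method names are illustrative, not part of Spark.

```scala
object PipelineMetadata {
  // Text before the last underscore is the stage prefix, e.g. "logreg".
  def stageKind(uid: String): String = {
    val i = uid.lastIndexOf('_')
    if (i <= 0) uid else uid.substring(0, i)
  }

  // Render the stage order recorded in "stageUids".
  def describe(stageUids: Seq[String]): String =
    stageUids.map(stageKind).mkString(" -> ")
}
```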

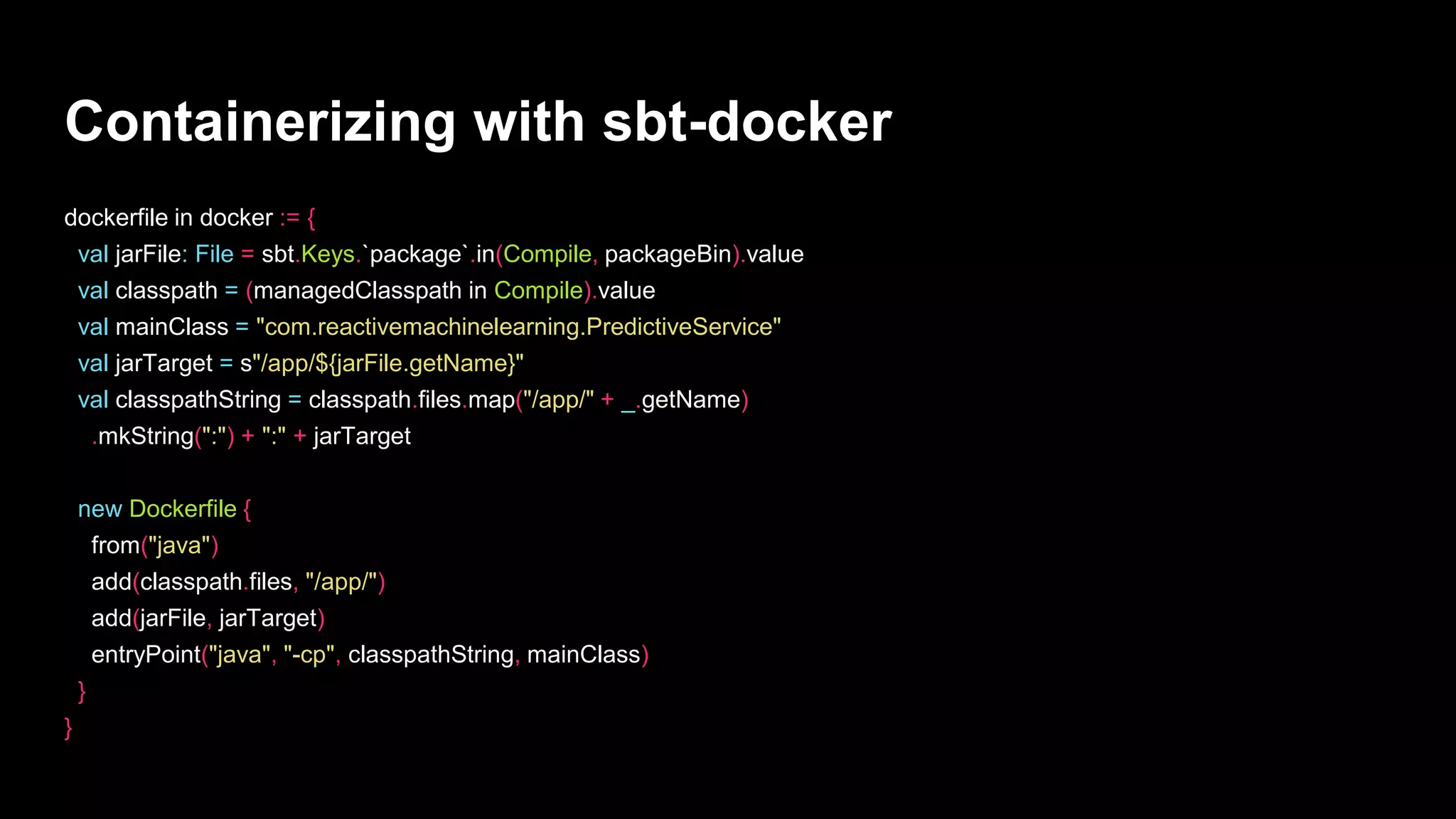

![Containerizing with sbt-docker
FROM java
ADD 0/spark-core_2.11-2.0.0-preview.jar 1/avro-mapred-1.7.7-hadoop2.jar ...
ADD 166/chapter-7_2.11-1.0.jar /app/chapter-7_2.11-1.0.jar
ENTRYPOINT ["java", "-cp", "/app/spark-core_2.11-2.0.0-preview.jar ...
"com.reactivemachinelearning.PredictiveService"]](https://image.slidesharecdn.com/toolsforrml-180528211629/75/Tools-for-Making-Machine-Learning-more-Reactive-25-2048.jpg)

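The Dockerfile above is generated by sbt-docker. The numbered directories (0/, 1/, up to 166/) appear to be how the plugin stages each classpath jar. The following `build.sbt` sketch would produce a file of this shape. It is modeled on the classpath example in the sbt-docker README. Setting keys and syntax depend on the sbt and plugin versions, so treat it as a starting point rather than the author's build.

```scala
// build.sbt (sketch): assumes sbt-docker is added in project/plugins.sbt
enablePlugins(DockerPlugin)

docker / dockerfile := {
  val jarFile: File = (Compile / packageBin).value
  val classpath = (Compile / managedClasspath).value
  val mainclass = (Compile / packageBin / mainClass).value
    .getOrElse(sys.error("Expected exactly one main class"))
  val jarTarget = s"/app/${jarFile.getName}"
  // Colon-separated classpath: every dependency jar plus the application jar
  val classpathString = classpath.files.map("/app/" + _.getName).mkString(":") + ":" + jarTarget

  new Dockerfile {
    from("java")                  // matches the slide; this Docker Hub image is deprecated
    add(classpath.files, "/app/") // dependency jars
    add(jarFile, jarTarget)       // application jar
    entryPoint("java", "-cp", classpathString, mainclass)
  }
}
```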
}
def callA(input: String) = call("a", input)
def callB(input: String) = call("b", input)
}](https://image.slidesharecdn.com/toolsforrml-180528211629/75/Tools-for-Making-Machine-Learning-more-Reactive-29-2048.jpg)

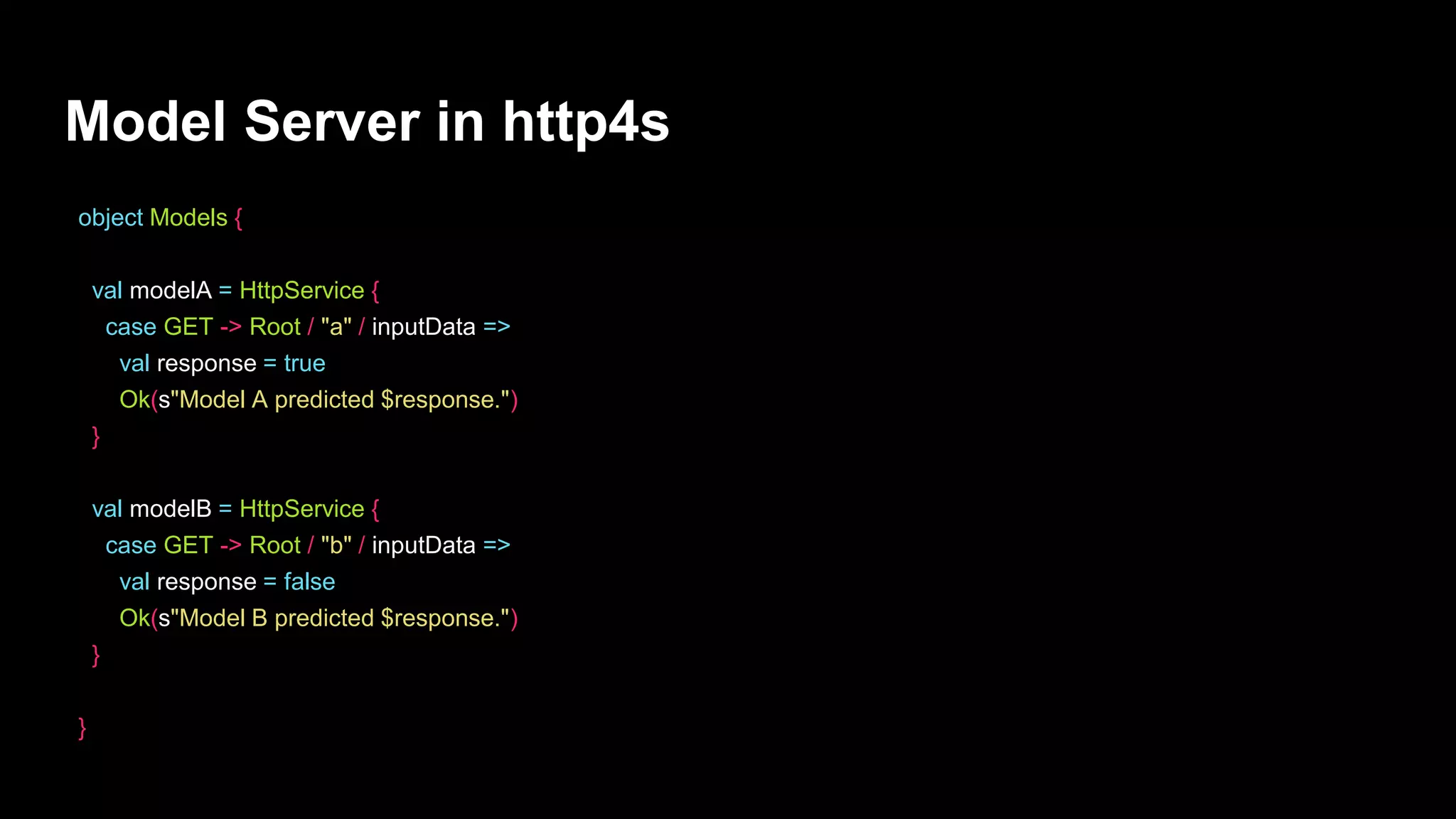

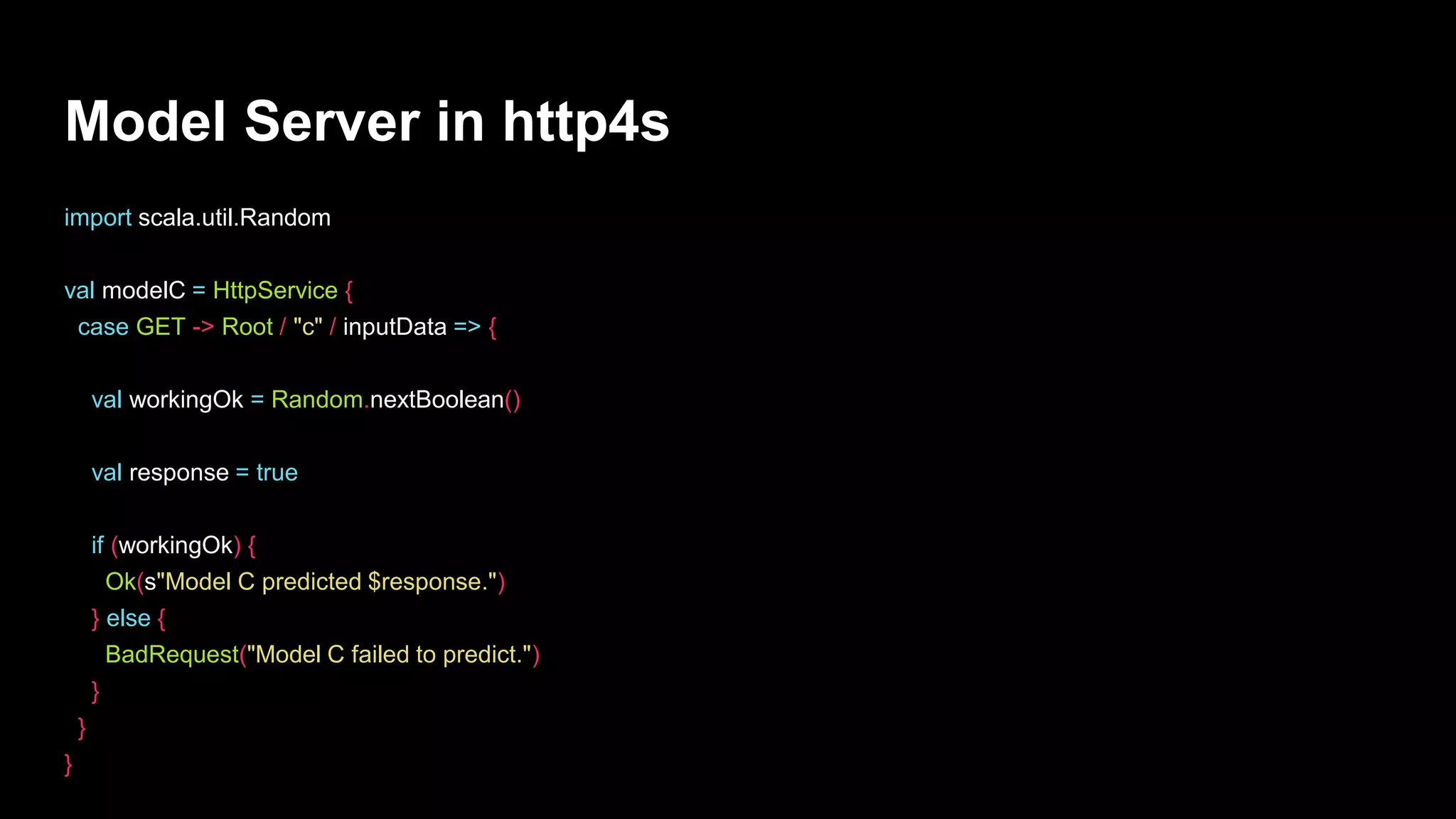

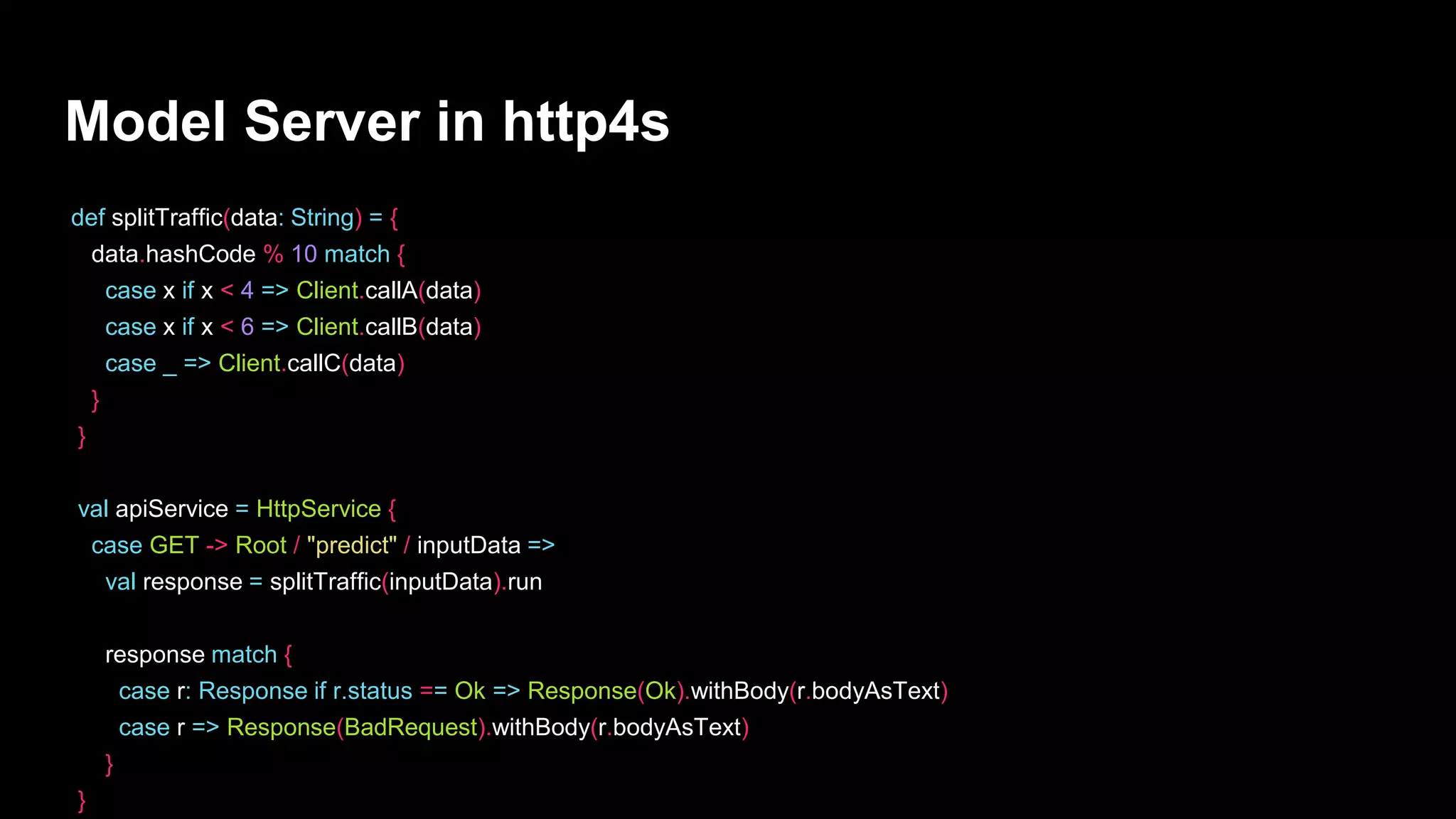

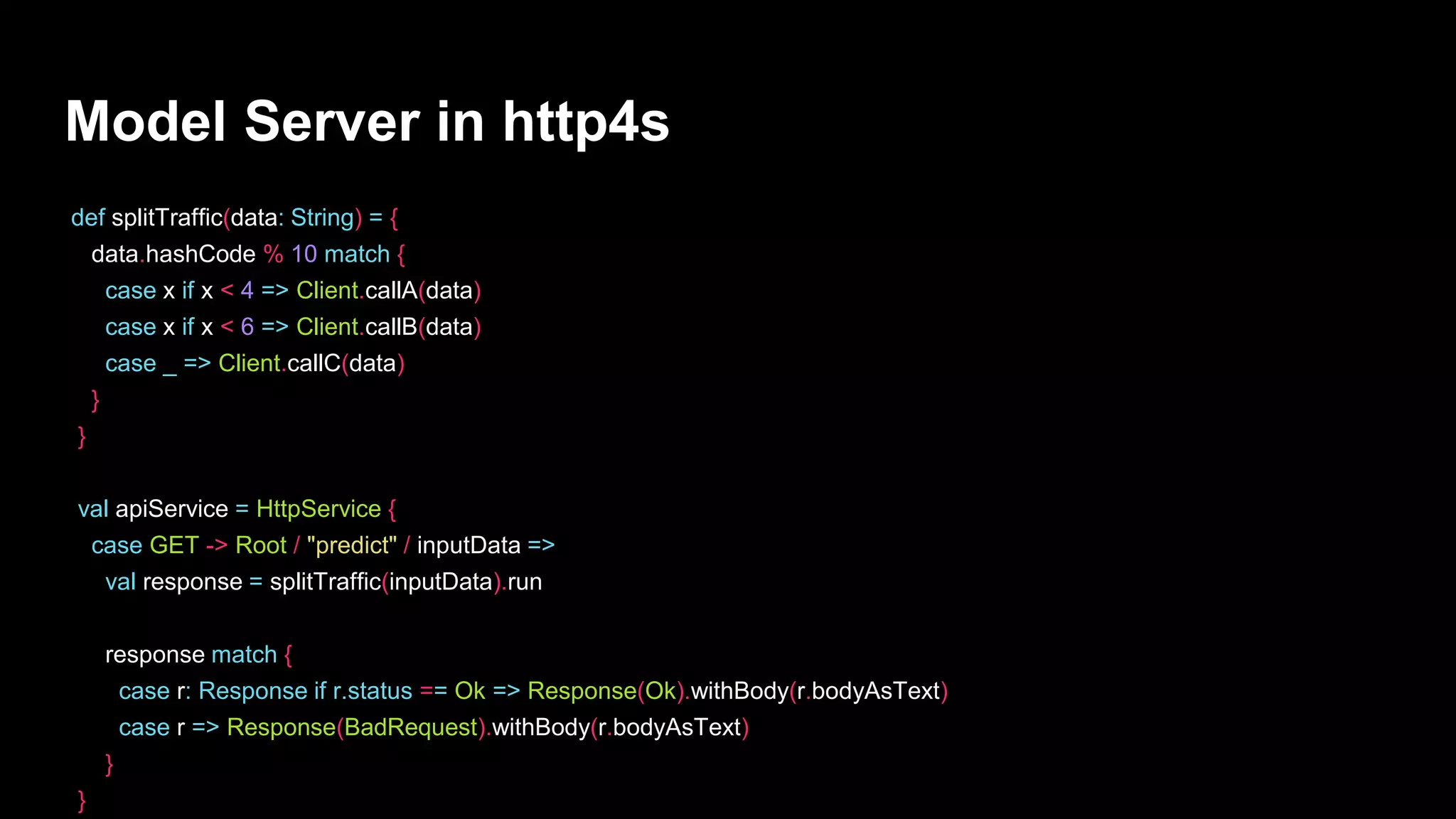

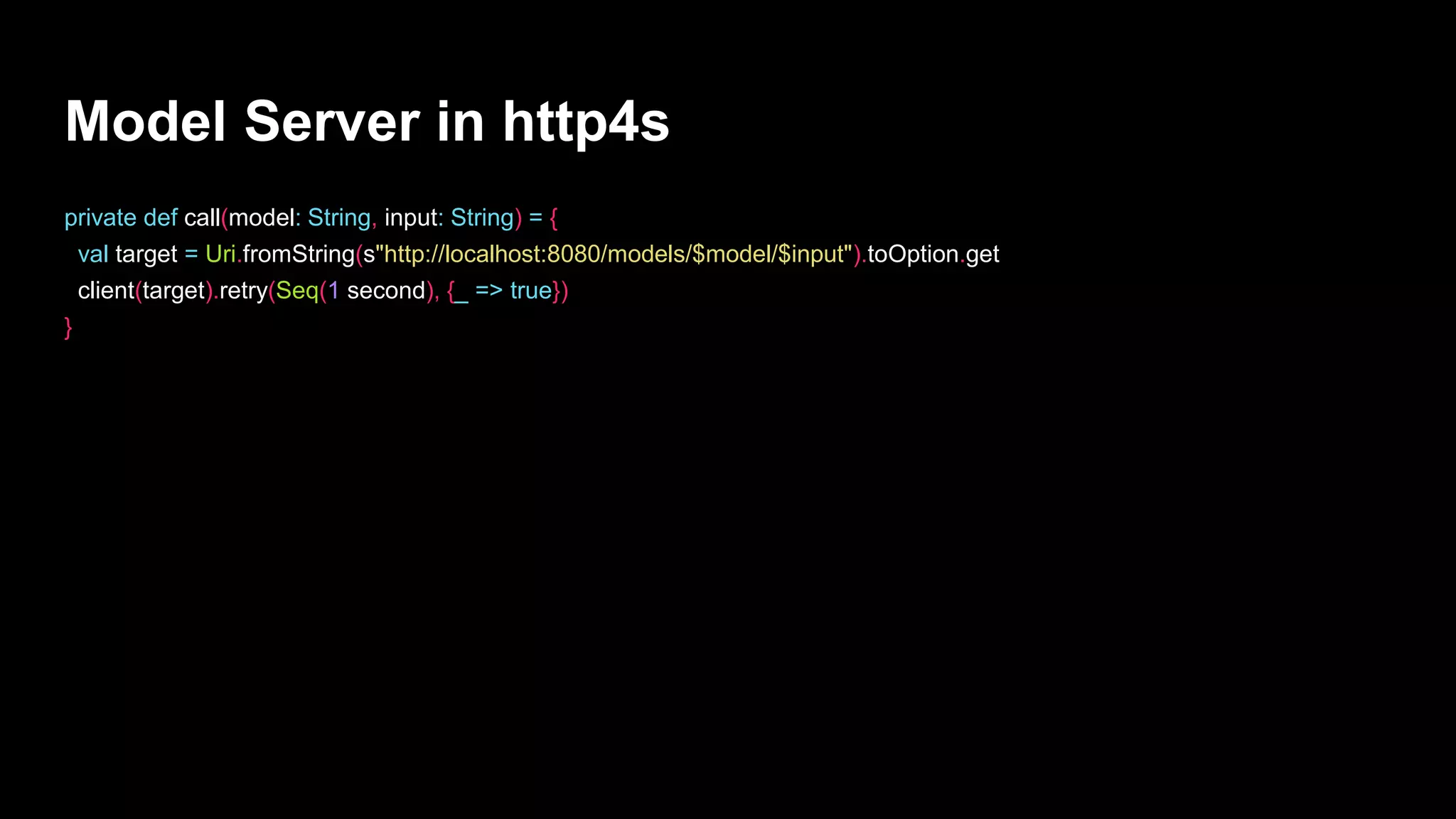

![Model Server in http4s
object ModelServer extends ServerApp {
  val apiService = HttpService {
    case GET -> Root / "predict" / inputData =>
      // .run blocks until the selected model has responded
      val response = splitTraffic(inputData).run
      Ok(response)
  }
  override def server(args: List[String]): Task[Server] = {
    BlazeBuilder
      .bindLocal(8080)
      .mountService(apiService, "/api")
      .mountService(Models.modelA, "/models")
      .mountService(Models.modelB, "/models")
      .start
  }
}](https://image.slidesharecdn.com/toolsforrml-180528211629/75/Tools-for-Making-Machine-Learning-more-Reactive-31-2048.jpg)
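The server passes each request to `splitTraffic`, whose body isn't shown. Weighted random routing is one common way to split traffic between models. Below is a sketch of that approach, assuming hypothetical fixed shares for models `a` and `b`. The uniform draw is passed in as a parameter, which keeps the routing decision deterministic and testable.

```scala
import scala.util.Random

object TrafficSplit {
  // Hypothetical routing table: model name -> share of traffic (shares sum to 1.0).
  val defaultRoutes: Seq[(String, Double)] = Seq("a" -> 0.5, "b" -> 0.5)

  // Map a uniform draw u in [0, 1) onto cumulative shares and return the model name.
  def chooseModel(u: Double, routes: Seq[(String, Double)] = defaultRoutes): String = {
    val upperBounds = routes.scanLeft(("", 0.0)) { case ((_, acc), (name, share)) => (name, acc + share) }.tail
    upperBounds.collectFirst { case (name, upper) if u < upper => name }
      .getOrElse(routes.last._1) // guards against shares summing to slightly under 1.0
  }

  // Draw from an injectable RNG so callers can seed or stub it.
  def pick(rng: Random = new Random()): String = chooseModel(rng.nextDouble())
}
```

Moving a share from one model to another is then a data change, not a code change. That suits gradual rollouts of a new model.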

![Model Server in http4s
private def call(model: String, input: String): Task[Response] = {
  // .get throws if the interpolated string does not parse as a URI
  val target = Uri.fromString(
    s"http://localhost:8080/models/$model/$input"
  ).toOption.get
  client(target)
}
def callC(input: String) = call("c", input)](https://image.slidesharecdn.com/toolsforrml-180528211629/75/Tools-for-Making-Machine-Learning-more-Reactive-33-2048.jpg)
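`call` interpolates raw request input into the target URI and then calls `.toOption.get`, which throws whenever parsing fails. Below is a standard-library sketch that quotes illegal path characters (such as spaces) and returns `None` instead of throwing. It uses `java.net.URI`'s multi-argument constructor. `ModelUris` and `modelUri` are hypothetical names. Slashes in the input are not escaped and would add path segments.

```scala
import java.net.URI
import scala.util.Try

object ModelUris {
  // The 7-argument URI constructor percent-encodes characters that are illegal in a path.
  def modelUri(model: String, input: String, host: String = "localhost", port: Int = 8080): Option[String] =
    Try(new URI("http", null, host, port, s"/models/$model/$input", null, null).toASCIIString).toOption
}
```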

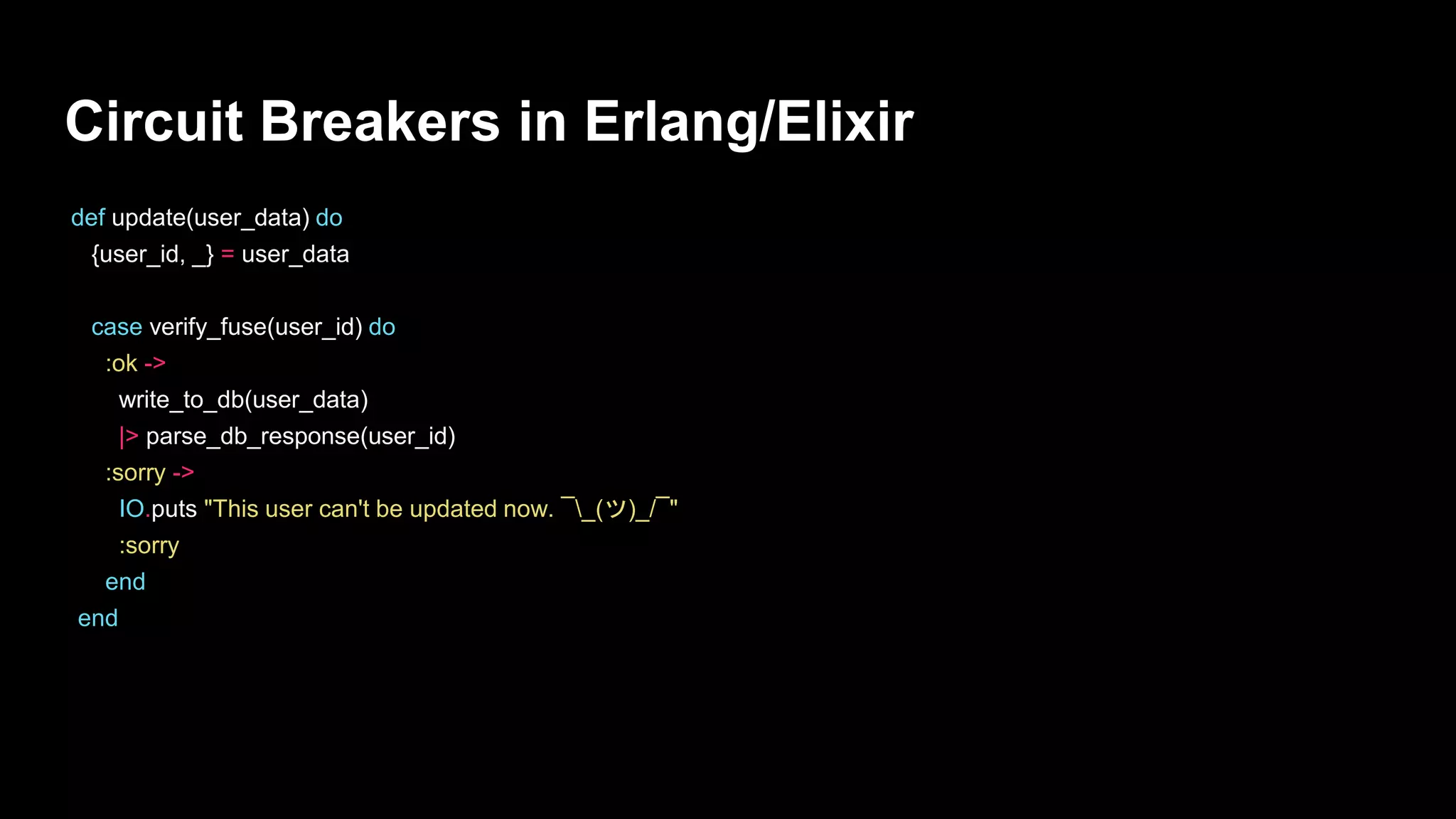

![Circuit Breakers in Erlang/Elixir
defp write_to_db({_user_id, _data}) do
  Enum.random([{:ok, ""}, {:error, "The DB dropped the ball. ಠ_ಠ"}])
end](https://image.slidesharecdn.com/toolsforrml-180528211629/75/Tools-for-Making-Machine-Learning-more-Reactive-44-2048.jpg)
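The slide shows only a deliberately flaky `write_to_db` stub, which is the kind of call a circuit breaker protects. The following is a minimal sketch of the pattern itself. It is written in Scala for consistency with the other examples and is hand-rolled for illustration. Akka, for one, ships a production-grade `akka.pattern.CircuitBreaker`. The thresholds and the injected clock are assumptions.

```scala
import scala.util.{Failure, Success, Try}

sealed trait BreakerState
case object Closed extends BreakerState
case object Open extends BreakerState
case object HalfOpen extends BreakerState

// Minimal, single-threaded circuit breaker (not thread-safe). After `maxFailures`
// consecutive failures it opens and fails fast; once `resetTimeoutMillis` has
// passed it lets a trial call through (half-open), closing on success and
// reopening on failure. `clock` is injected so tests can control time.
final class CircuitBreaker(maxFailures: Int, resetTimeoutMillis: Long, clock: () => Long) {
  private var failures = 0
  private var openedAt = 0L
  private var current: BreakerState = Closed

  def state: BreakerState = {
    if (current == Open && clock() - openedAt >= resetTimeoutMillis) current = HalfOpen
    current
  }

  def call[A](action: => A): Either[String, A] = state match {
    case Open => Left("circuit open: failing fast")
    case _ =>
      Try(action) match {
        case Success(value) =>
          failures = 0
          current = Closed
          Right(value)
        case Failure(error) =>
          failures += 1
          if (current == HalfOpen || failures >= maxFailures) {
            current = Open
            openedAt = clock()
          }
          Left(Option(error.getMessage).getOrElse(error.toString))
      }
  }
}
```

While the breaker is open, callers get an immediate `Left` instead of waiting on a failing database. This is the fail-fast behavior the pattern exists for.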

![Evolution
def select(pid \\ __MODULE__, nets) do
  cutoffs = cutoffs(nets)
  for net <- nets do
    complexity = length(net.layers)
    level = Enum.min(
      [Enum.find_index(cutoffs, &(&1 >= complexity)) + 1,
       complexity_levels()])
    net_acc = net.test_acc
    elite_acc = Map.get(get(pid, level), :test_acc)
    if is_nil(elite_acc) or net_acc > elite_acc do
      put(pid, level, net)
    end
  end
end](https://image.slidesharecdn.com/toolsforrml-180528211629/75/Tools-for-Making-Machine-Learning-more-Reactive-56-2048.jpg)
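The selection step keeps one elite network per complexity level. A network's level depends on where its layer count falls among the cutoffs. It replaces the current elite at that level only if its test accuracy is strictly higher. Below is a pure-Scala sketch of that rule. It folds over the candidates in order, just as the Elixir loop updates shared state as it goes. The `Net` record, the cutoff values, and the fallback when no cutoff is large enough are all assumptions. In that last case the Elixir version would fail on `nil + 1`.

```scala
// Hypothetical, simplified network record: layer count stands in for complexity.
final case class Net(id: String, layerCount: Int, testAcc: Double)

object Selection {
  // 1-based level: index of the first cutoff >= complexity, plus one, capped at `levels`.
  // Assumption: networks more complex than every cutoff go to the top level.
  def level(cutoffs: Seq[Int], levels: Int, complexity: Int): Int = {
    val idx = cutoffs.indexWhere(_ >= complexity)
    if (idx < 0) levels else math.min(idx + 1, levels)
  }

  // Keep one elite per level, replacing it only when a candidate scores strictly higher.
  def select(elites: Map[Int, Net], nets: Seq[Net], cutoffs: Seq[Int], levels: Int): Map[Int, Net] =
    nets.foldLeft(elites) { (acc, net) =>
      val lvl = level(cutoffs, levels, net.layerCount)
      acc.get(lvl) match {
        case Some(elite) if elite.testAcc >= net.testAcc => acc
        case _                                           => acc.updated(lvl, net)
      }
    }
}
```

Returning a new map instead of writing to a process keeps this sketch easy to test. It says nothing about how the original stores its elites.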

![Evolution
iex(1)> GN.Selection.get_all()
%{
  1 => %GN.Network{
    id: "0c2020ad-8944-4f2c-80bd-1d92c9d26535",
    layers: [
      dense: [64, :softrelu],
      batch_norm: [],
      activation: [:relu],
      dropout: [0.5],
      dense: [63, :relu]
    ],
    test_acc: 0.8553
  },
  2 => %GN.Network{
    id: "58229333-a05d-4371-8f23-e8e55c37a2ec",
    layers: [
      dense: [64, :relu],](https://image.slidesharecdn.com/toolsforrml-180528211629/75/Tools-for-Making-Machine-Learning-more-Reactive-57-2048.jpg)

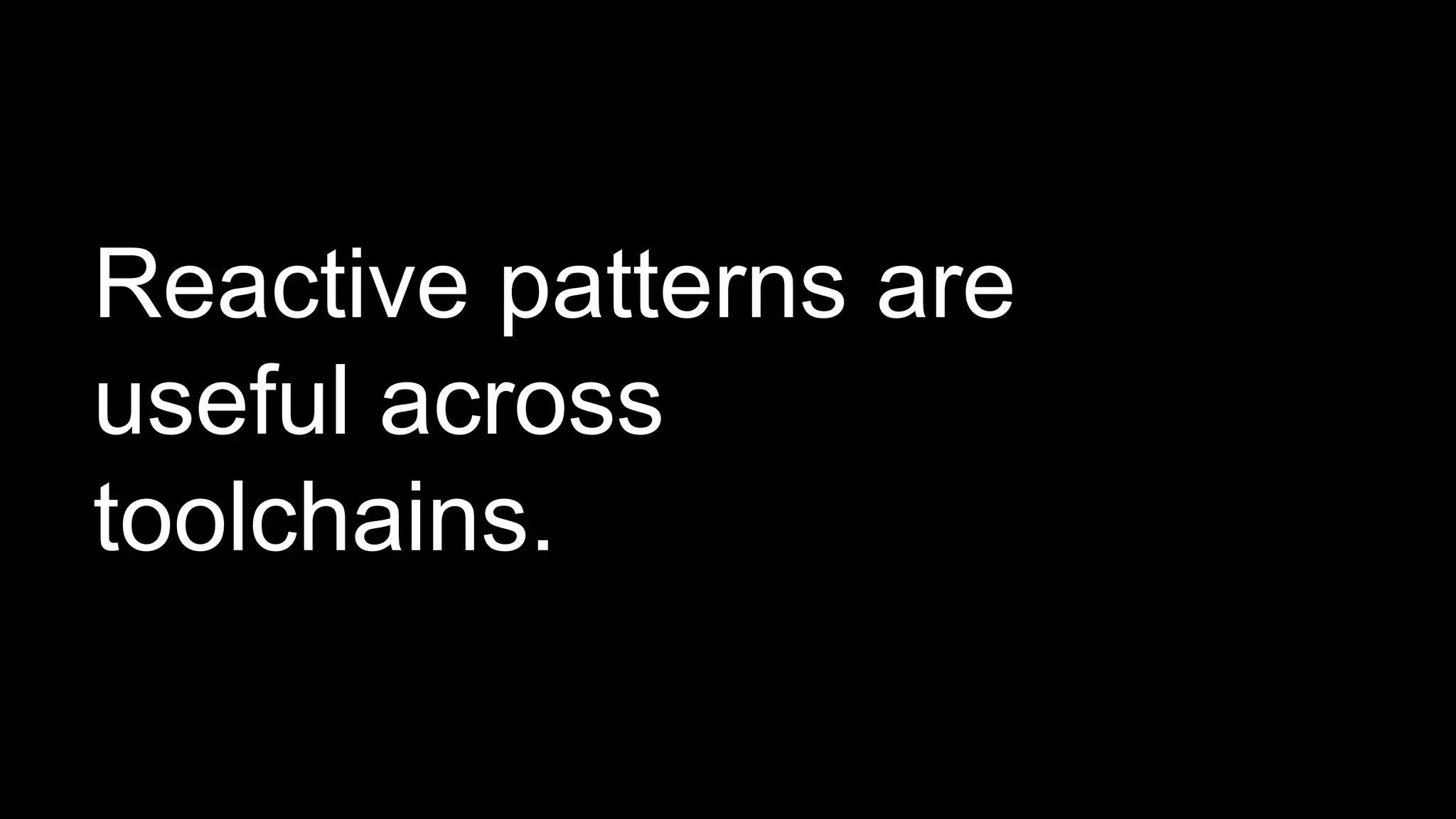

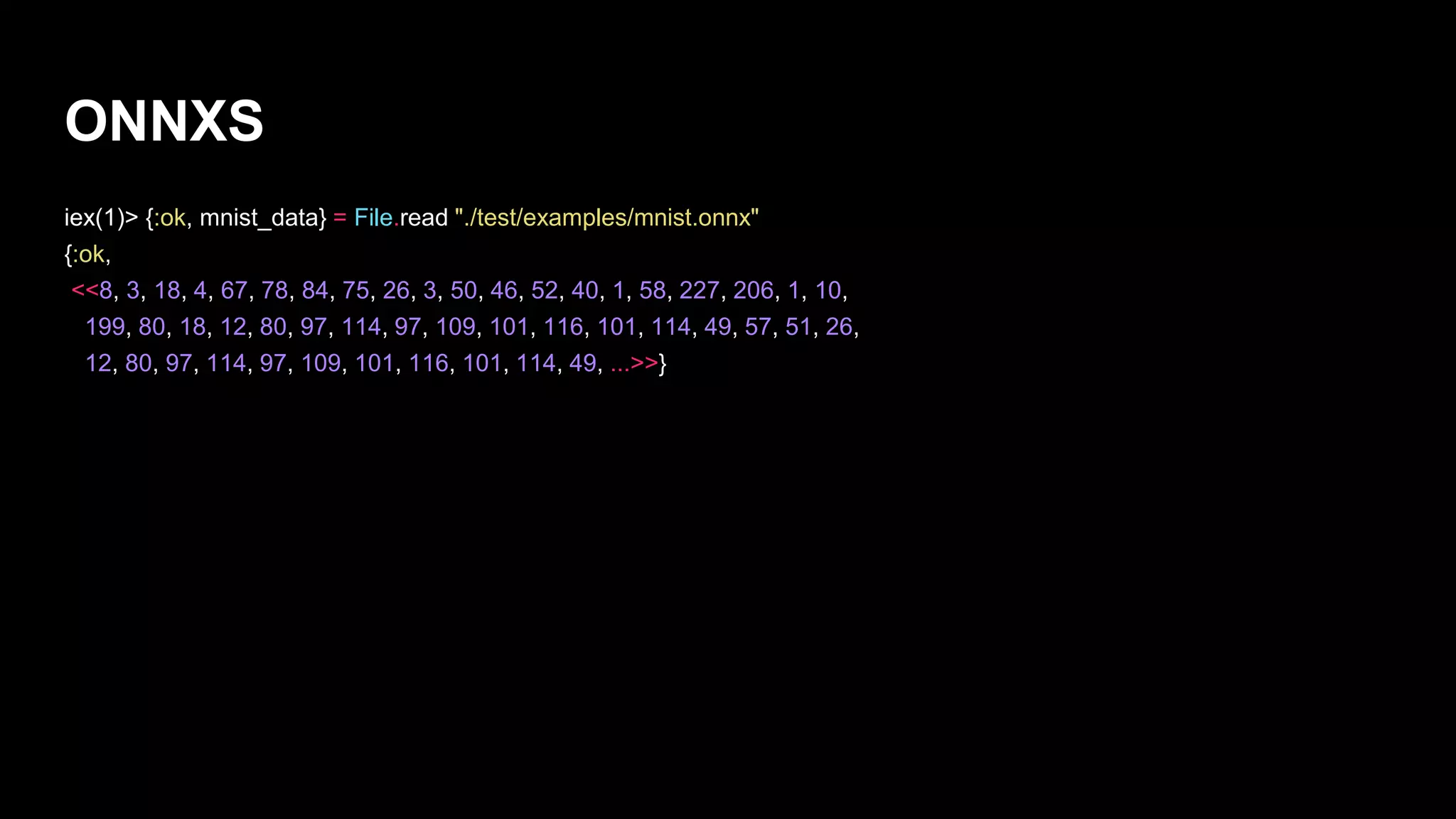

![ONNXS
iex(2)> mnist_struct = Onnx.ModelProto.decode(mnist_data)
%Onnx.ModelProto{
  doc_string: nil,
  domain: nil,
  graph: %Onnx.GraphProto{
    doc_string: nil,
    initializer: [],
    input: [
      %Onnx.ValueInfoProto{
        doc_string: nil,
        name: "Input3",
        type: %Onnx.TypeProto{
          value: {:tensor_type,
            %Onnx.TypeProto.Tensor{
              elem_type: 1,
              shape: %Onnx.TensorShapeProto
              ...](https://image.slidesharecdn.com/toolsforrml-180528211629/75/Tools-for-Making-Machine-Learning-more-Reactive-67-2048.jpg)
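In the decoded struct, the graph input `Input3` has `elem_type: 1`. That integer is an ONNX `TensorProto.DataType` code, and 1 means FLOAT. Below is a sketch of decoding these codes for a simplified input record. `GraphInput` is hypothetical and ignores the shape, which the slide elides. The table covers only the codes up to 13.

```scala
object OnnxTypes {
  // Subset of ONNX TensorProto.DataType codes (0 is UNDEFINED).
  val elemTypeNames: Map[Int, String] = Map(
    1 -> "FLOAT", 2 -> "UINT8", 3 -> "INT8", 4 -> "UINT16", 5 -> "INT16",
    6 -> "INT32", 7 -> "INT64", 8 -> "STRING", 9 -> "BOOL", 10 -> "FLOAT16",
    11 -> "DOUBLE", 12 -> "UINT32", 13 -> "UINT64"
  )

  // Hypothetical, simplified view of a graph input: name and element type only.
  final case class GraphInput(name: String, elemType: Int)

  def describe(input: GraphInput): String = {
    val typeName = elemTypeNames.getOrElse(input.elemType, "UNKNOWN(" + input.elemType + ")")
    s"${input.name}: $typeName"
  }
}
```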