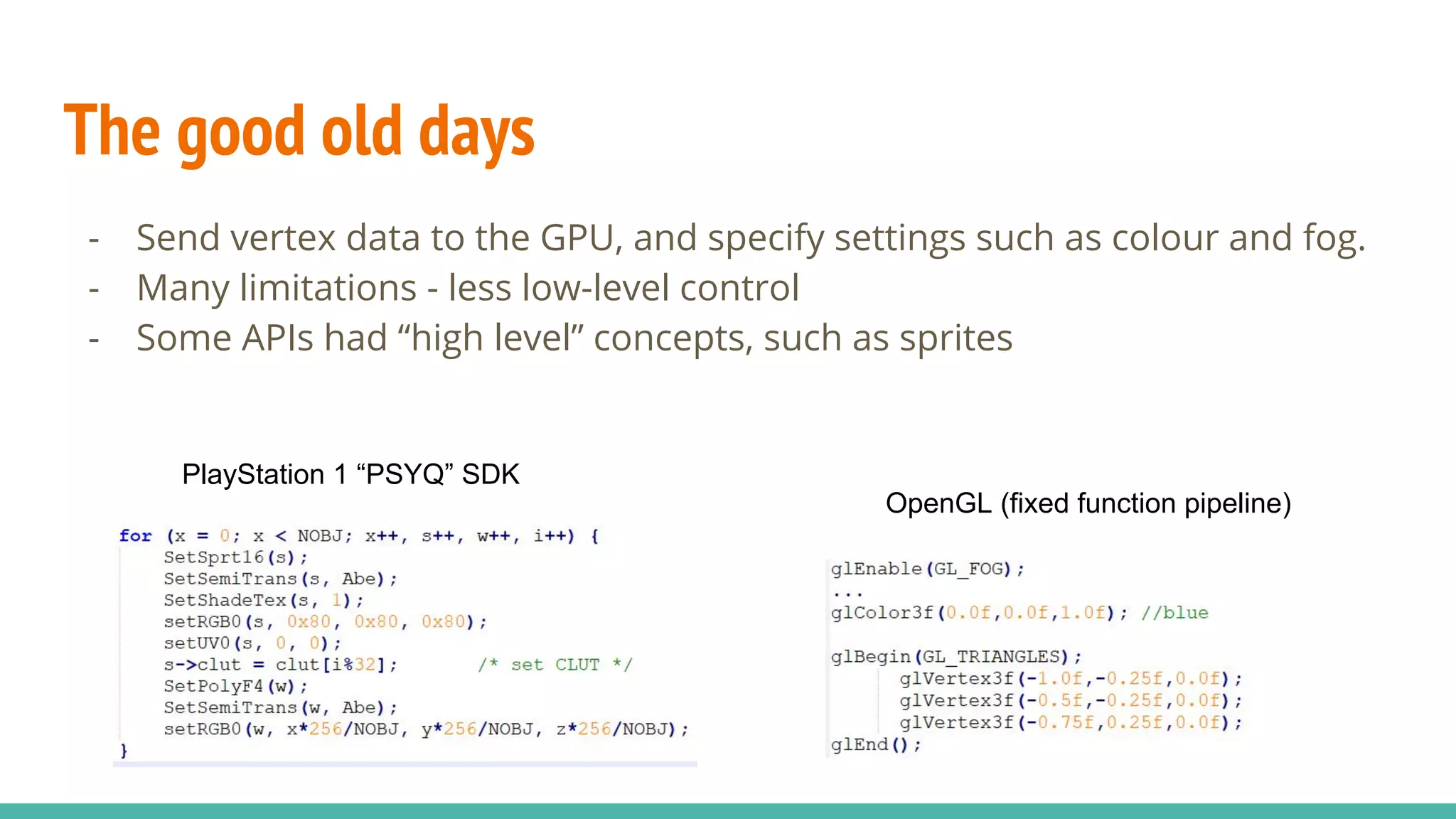

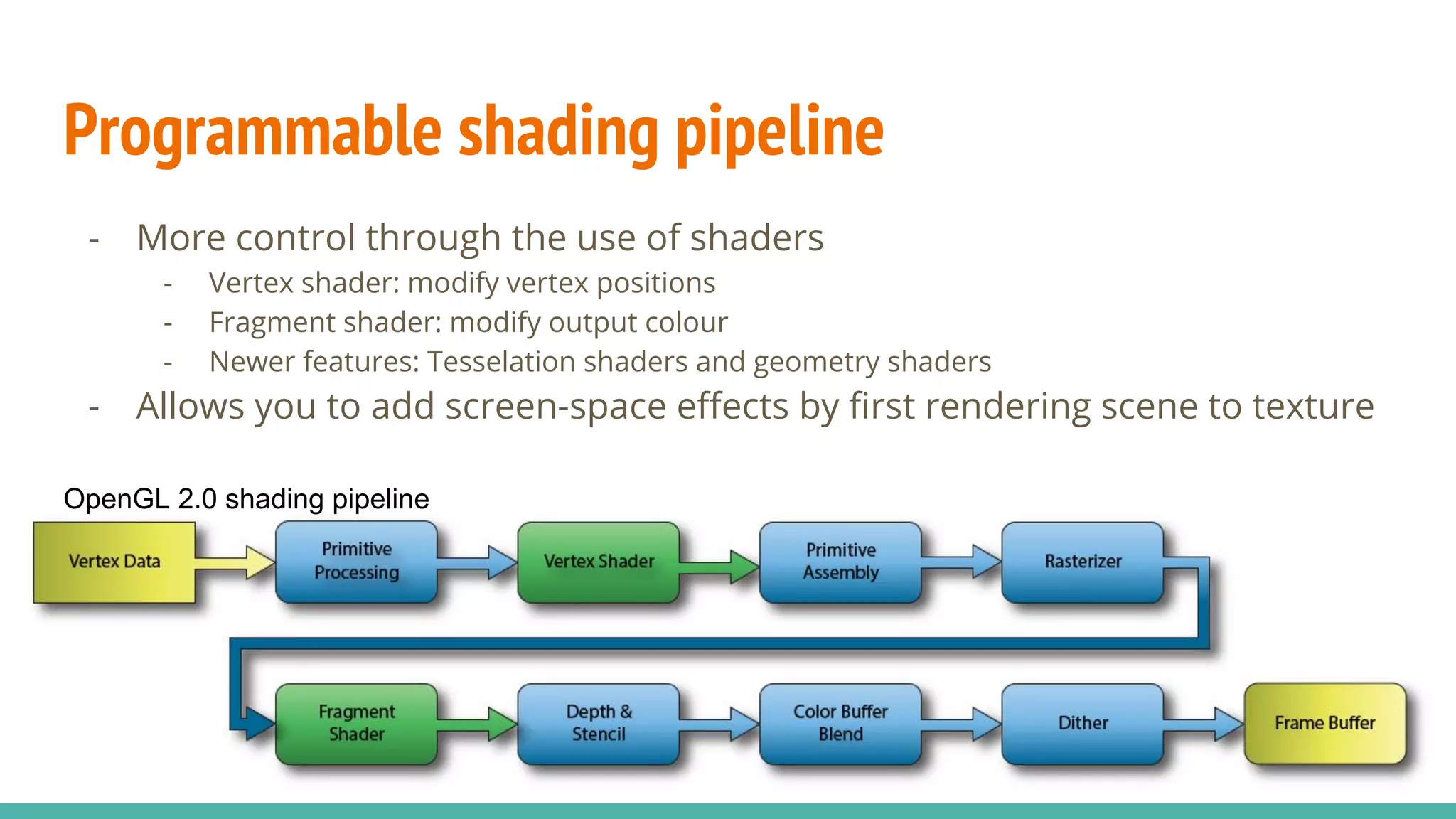

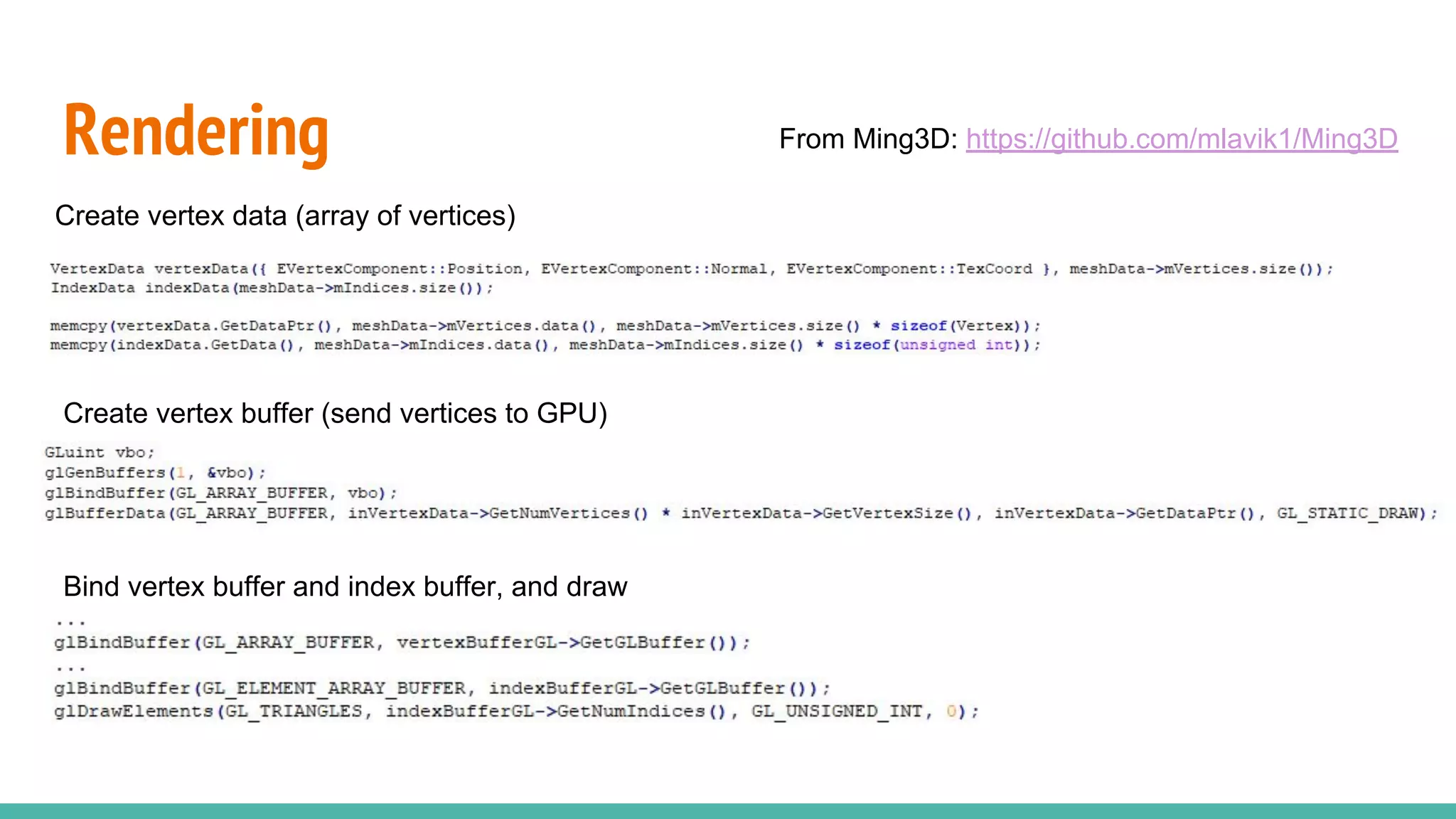

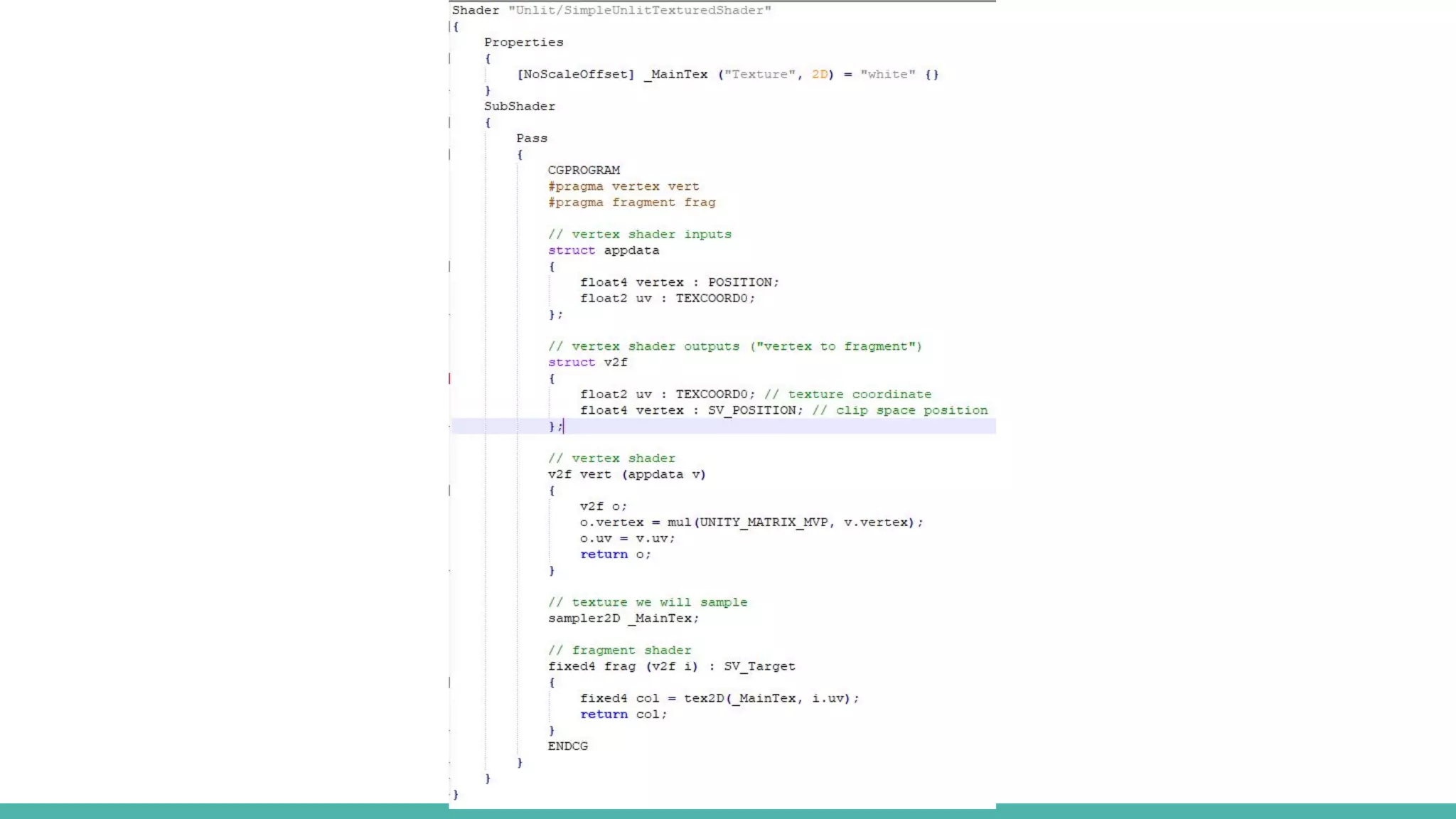

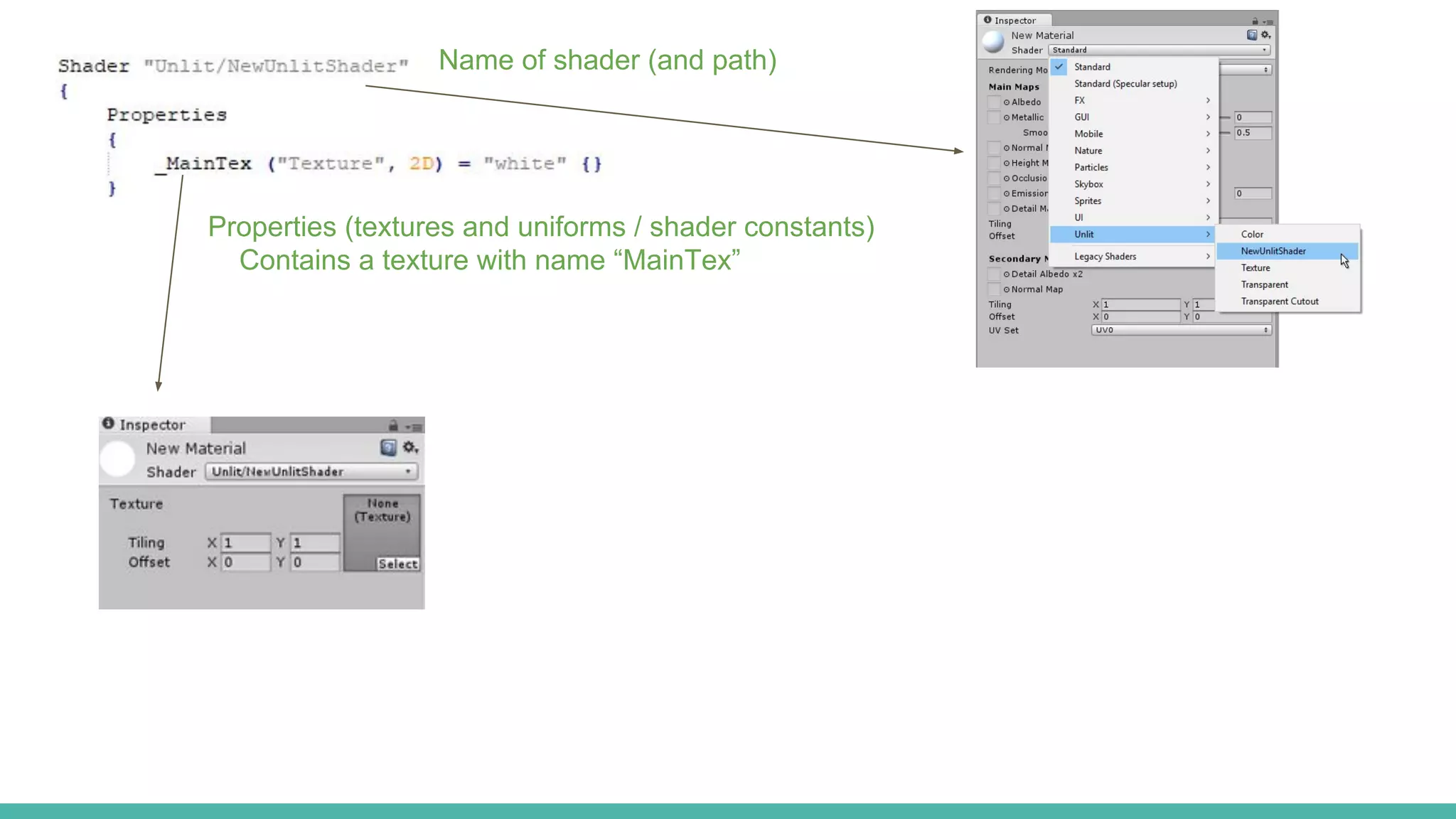

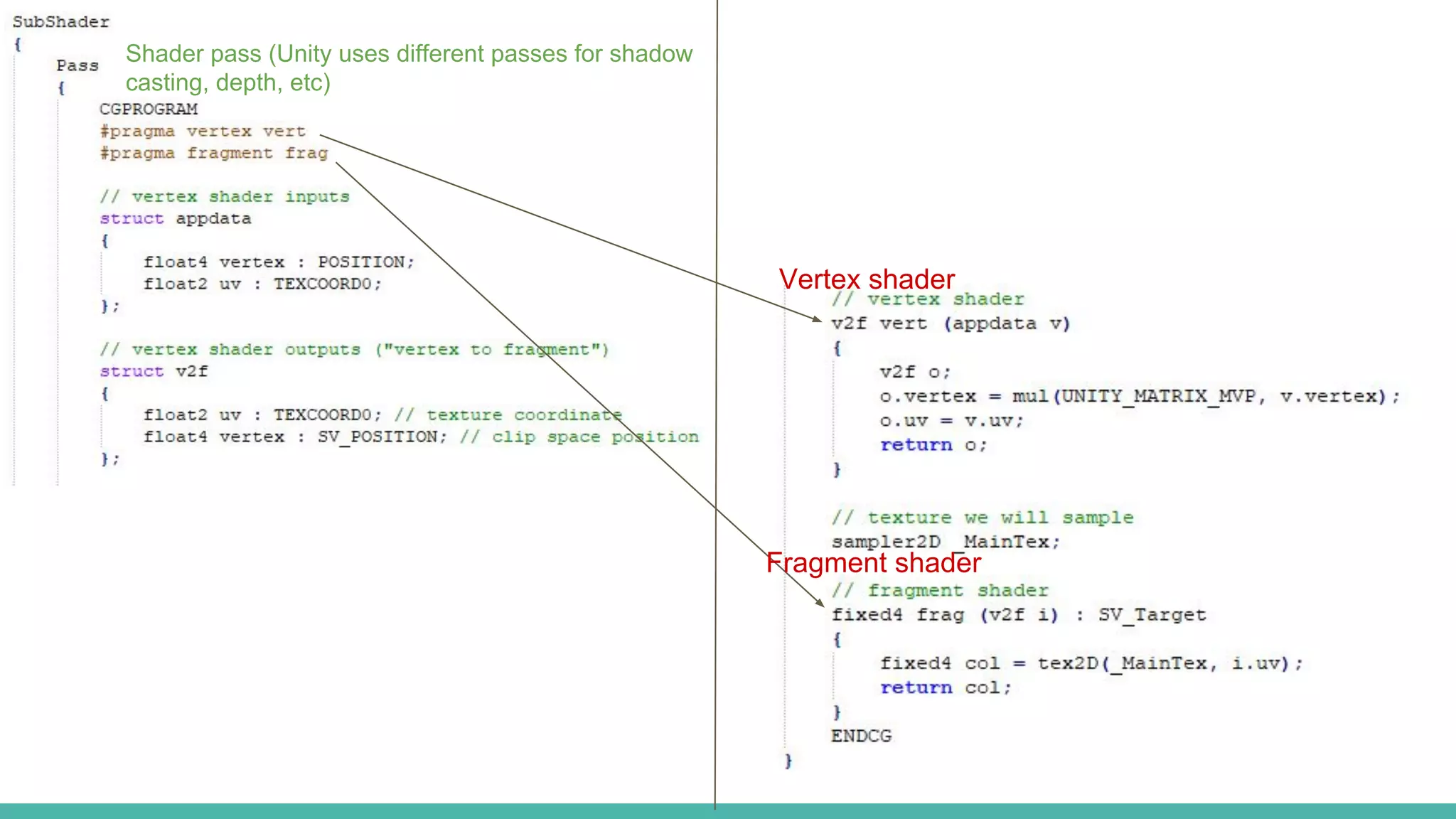

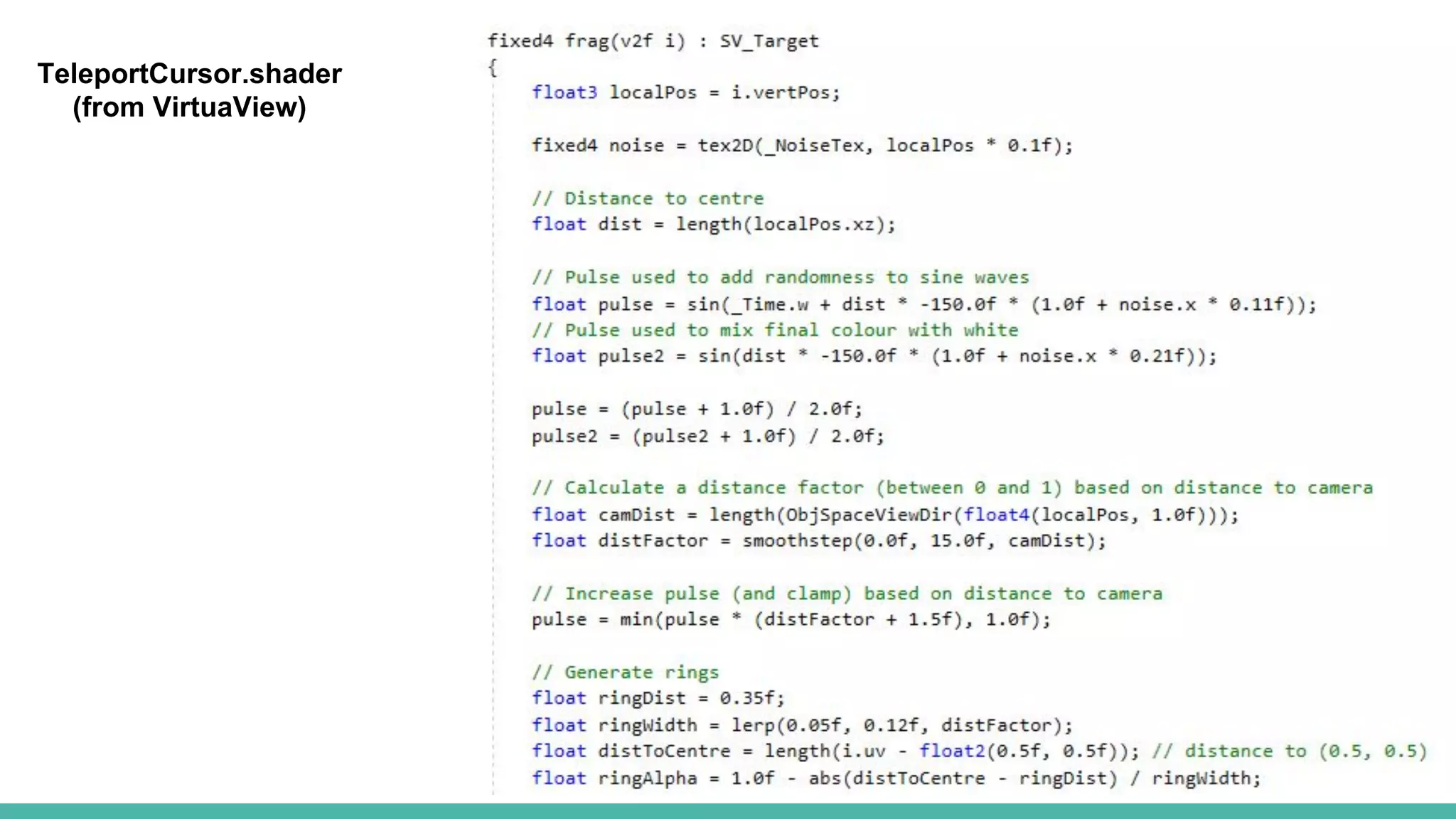

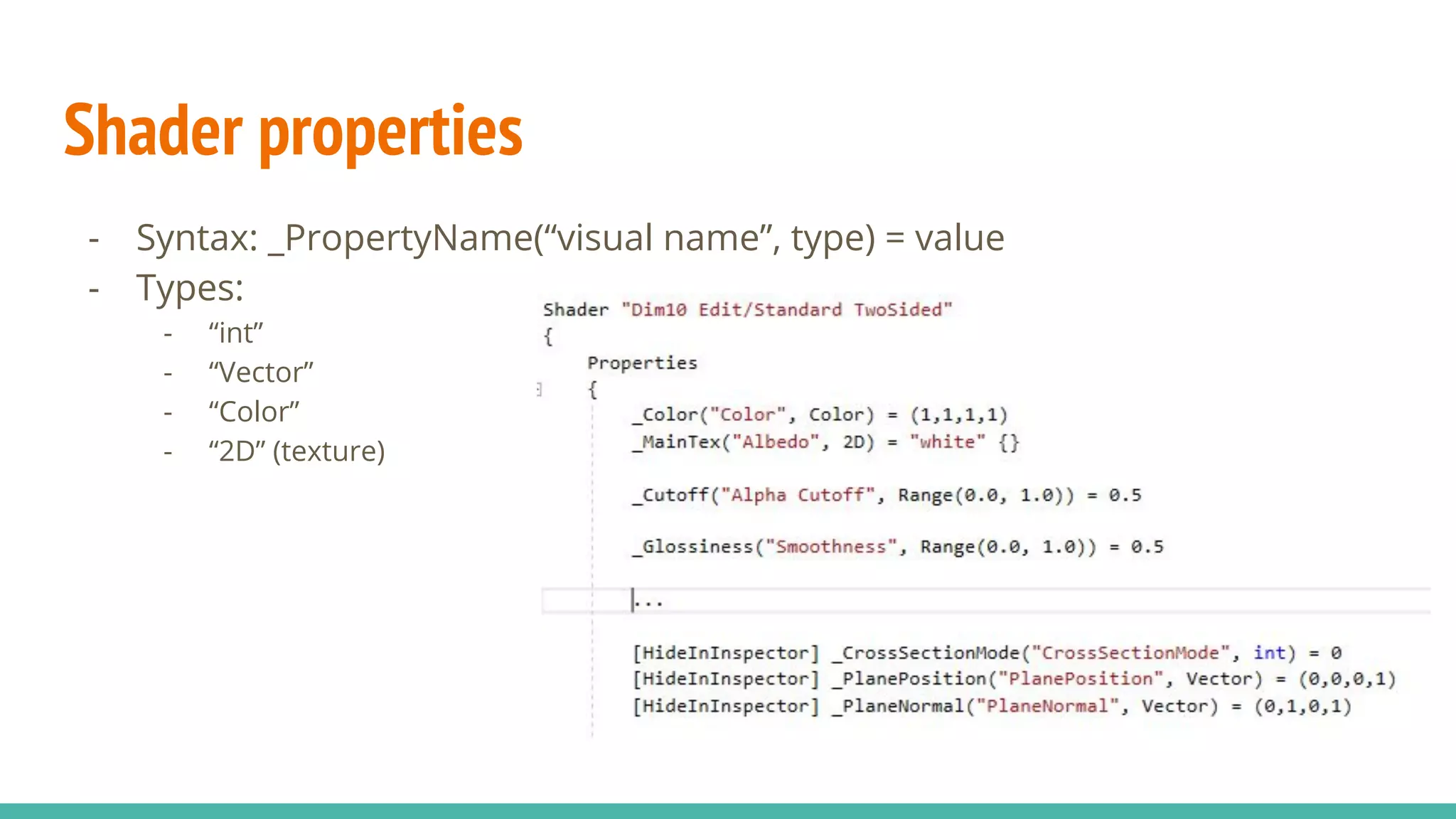

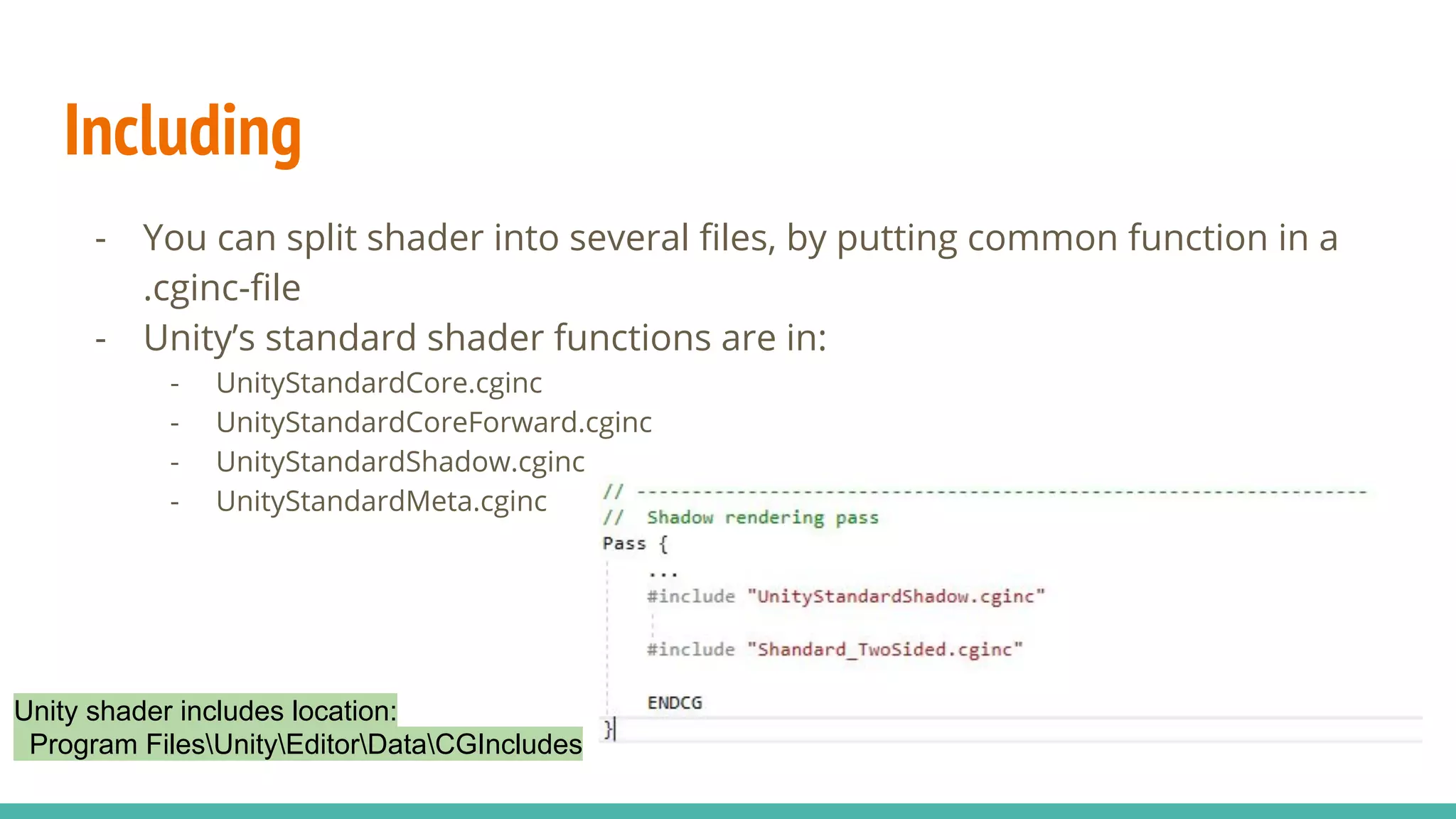

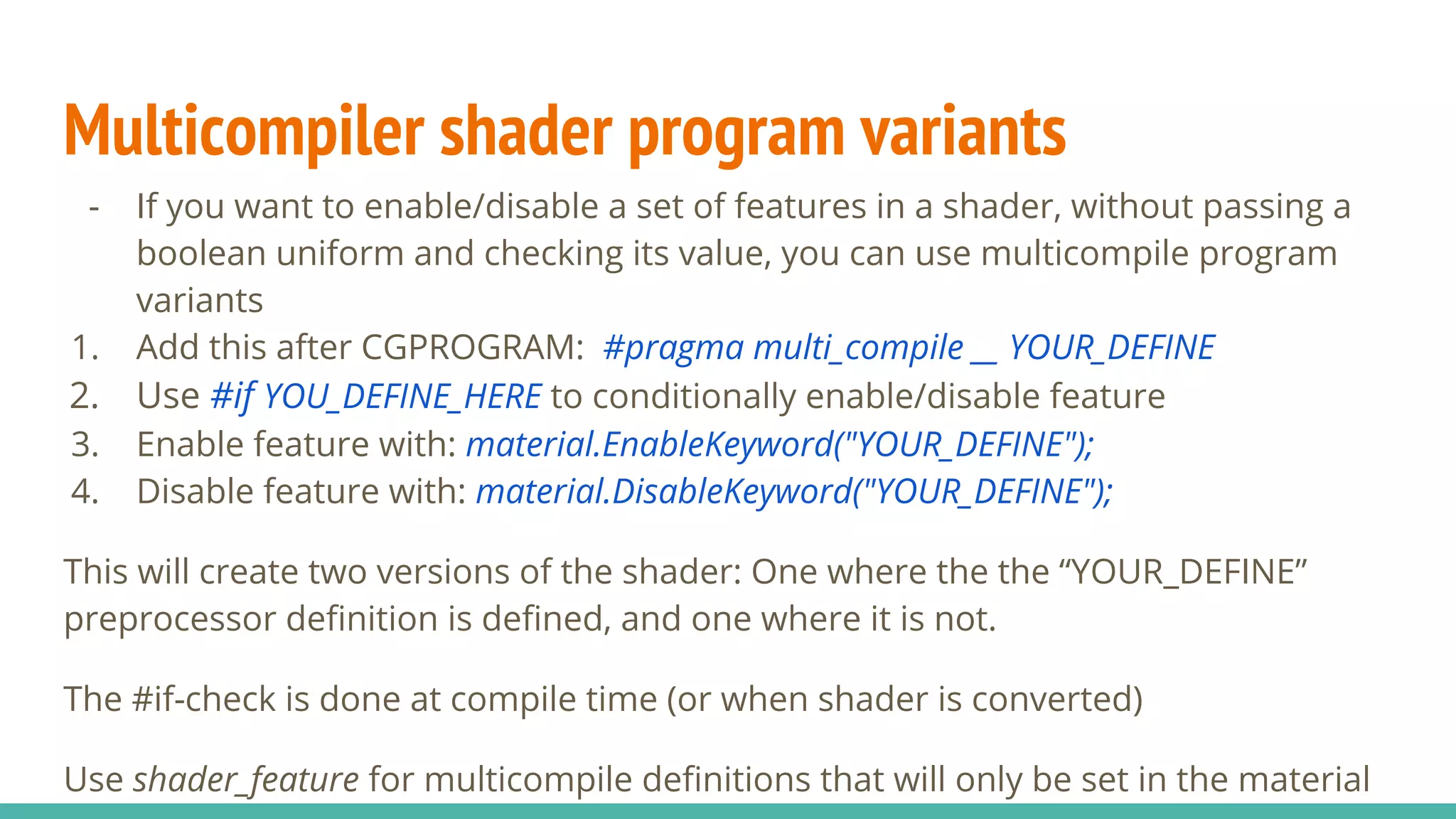

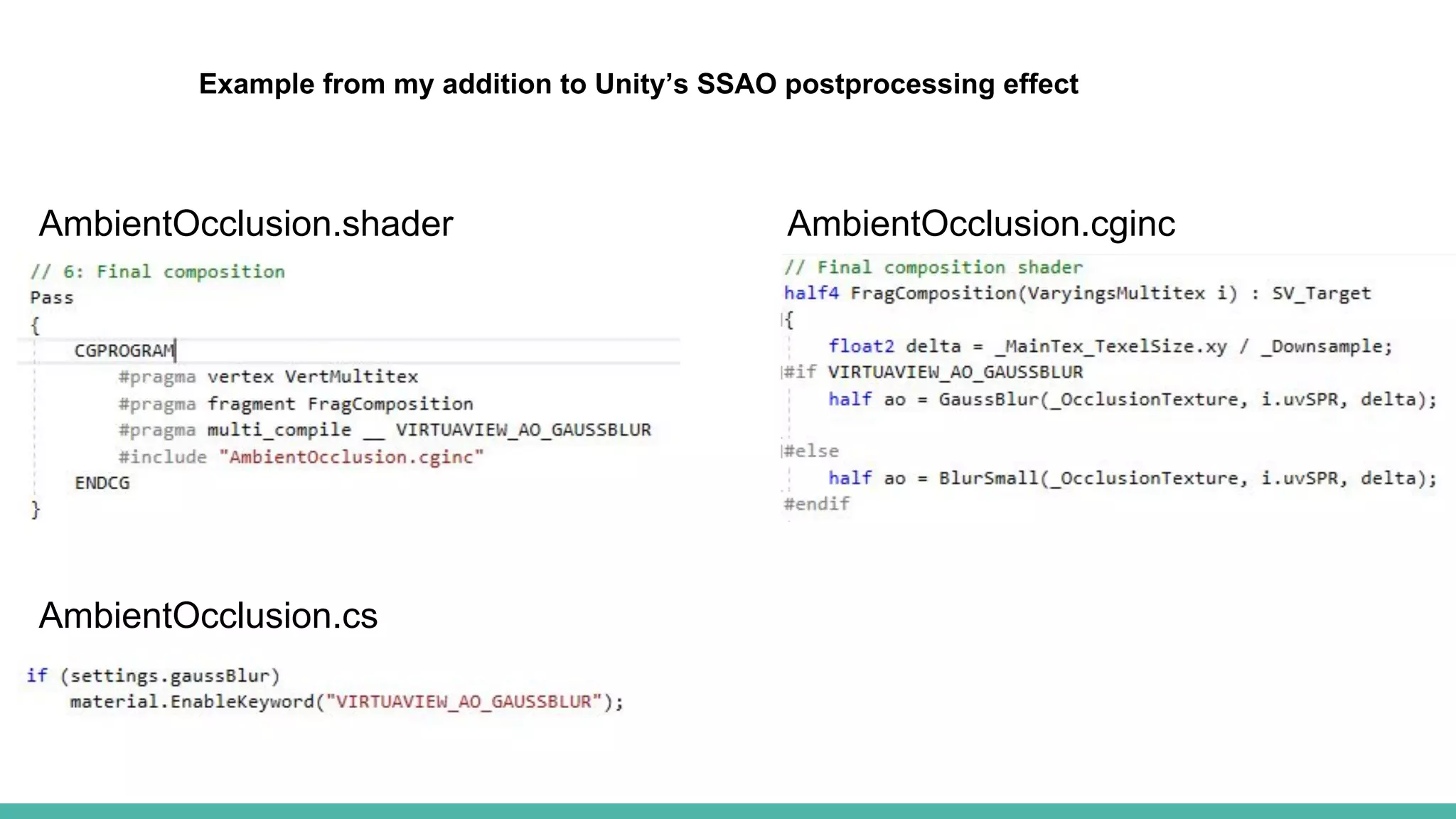

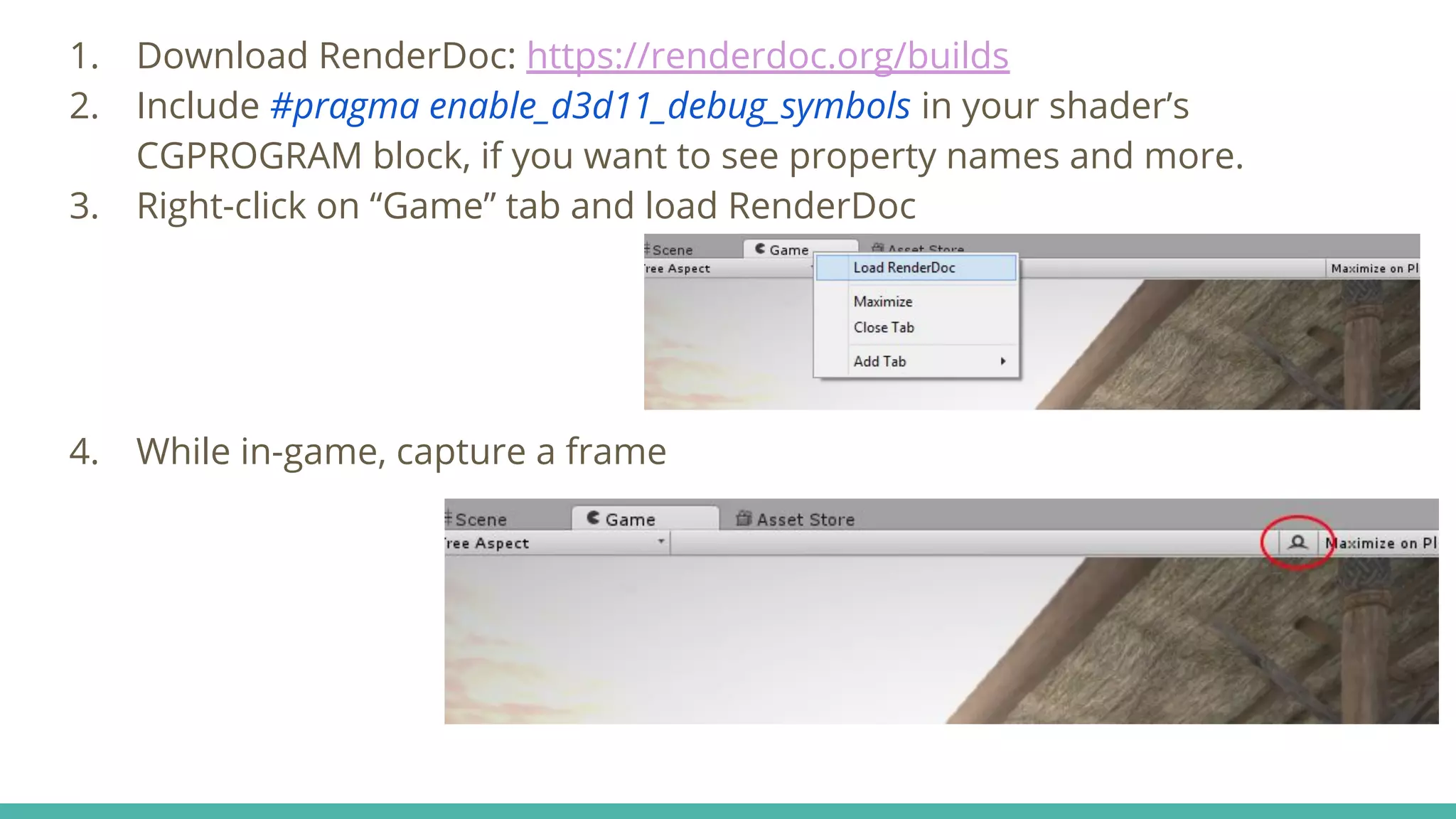

This document introduces shaders and the programmable shading pipeline. It covers key shader concepts such as vertex and fragment shaders, uniforms, and semantics. It also covers practical topics: debugging shaders, generating shader variants with `multi_compile`, and reusing code through include files. Unity shader code examples illustrate the concepts.
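The concepts listed above can be seen together in one small shader. The following is a minimal sketch, not taken from the slides, and it assumes Unity's built-in render pipeline (`CGPROGRAM` blocks and the `UnityCG.cginc` include). The shader name `Hypothetical/MinimalUnlit` and the keyword `USE_TINT` are hypothetical names chosen for illustration. The example shows a vertex and a fragment function, a uniform (`_Color`), input/output semantics (`POSITION`, `SV_POSITION`, `SV_Target`), a `multi_compile` variant, and an `#include`.

```shaderlab
// Hypothetical example shader; the name and the USE_TINT keyword are illustrative only.
Shader "Hypothetical/MinimalUnlit"
{
    Properties
    {
        // Exposed in the material inspector; backs the _Color uniform below.
        _Color ("Tint Color", Color) = (1, 1, 1, 1)
    }
    SubShader
    {
        Tags { "RenderType" = "Opaque" }
        Pass
        {
            CGPROGRAM
            #pragma vertex vert
            #pragma fragment frag
            // Compiles two variants: one without the keyword (__) and one with USE_TINT.
            #pragma multi_compile __ USE_TINT
            // Include file providing helpers such as UnityObjectToClipPos.
            #include "UnityCG.cginc"

            // Uniform: same value for every vertex and fragment in a draw call.
            fixed4 _Color;

            struct appdata
            {
                float4 vertex : POSITION;     // semantic: object-space vertex position
            };

            struct v2f
            {
                float4 pos : SV_POSITION;     // semantic: clip-space position for the rasterizer
            };

            // Vertex stage: runs once per vertex.
            v2f vert (appdata v)
            {
                v2f o;
                o.pos = UnityObjectToClipPos(v.vertex);
                return o;
            }

            // Fragment stage: runs once per rasterized fragment; SV_Target is the output color.
            fixed4 frag (v2f i) : SV_Target
            {
            #ifdef USE_TINT
                return _Color;                // variant compiled with USE_TINT
            #else
                return fixed4(1, 1, 1, 1);    // variant compiled without it: plain white
            #endif
            }
            ENDCG
        }
    }
}
```

At runtime, the variant is selected by enabling or disabling the keyword on a material, for example with `material.EnableKeyword("USE_TINT")` in C#. Declaring the keyword with `multi_compile` means both variants are always built, which keeps switching cheap but increases the number of compiled variants.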