Rust's Journey to Async/Await

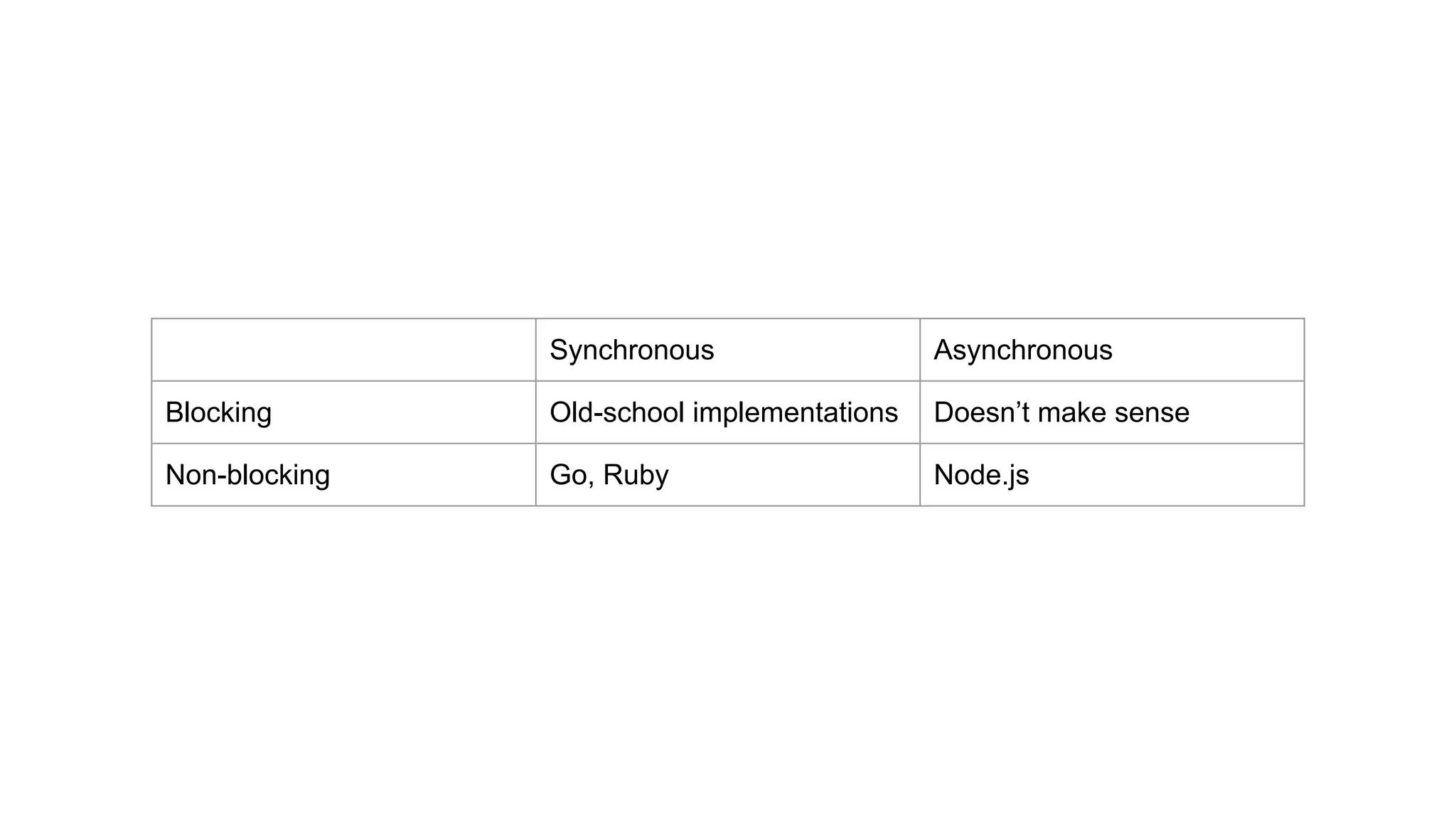

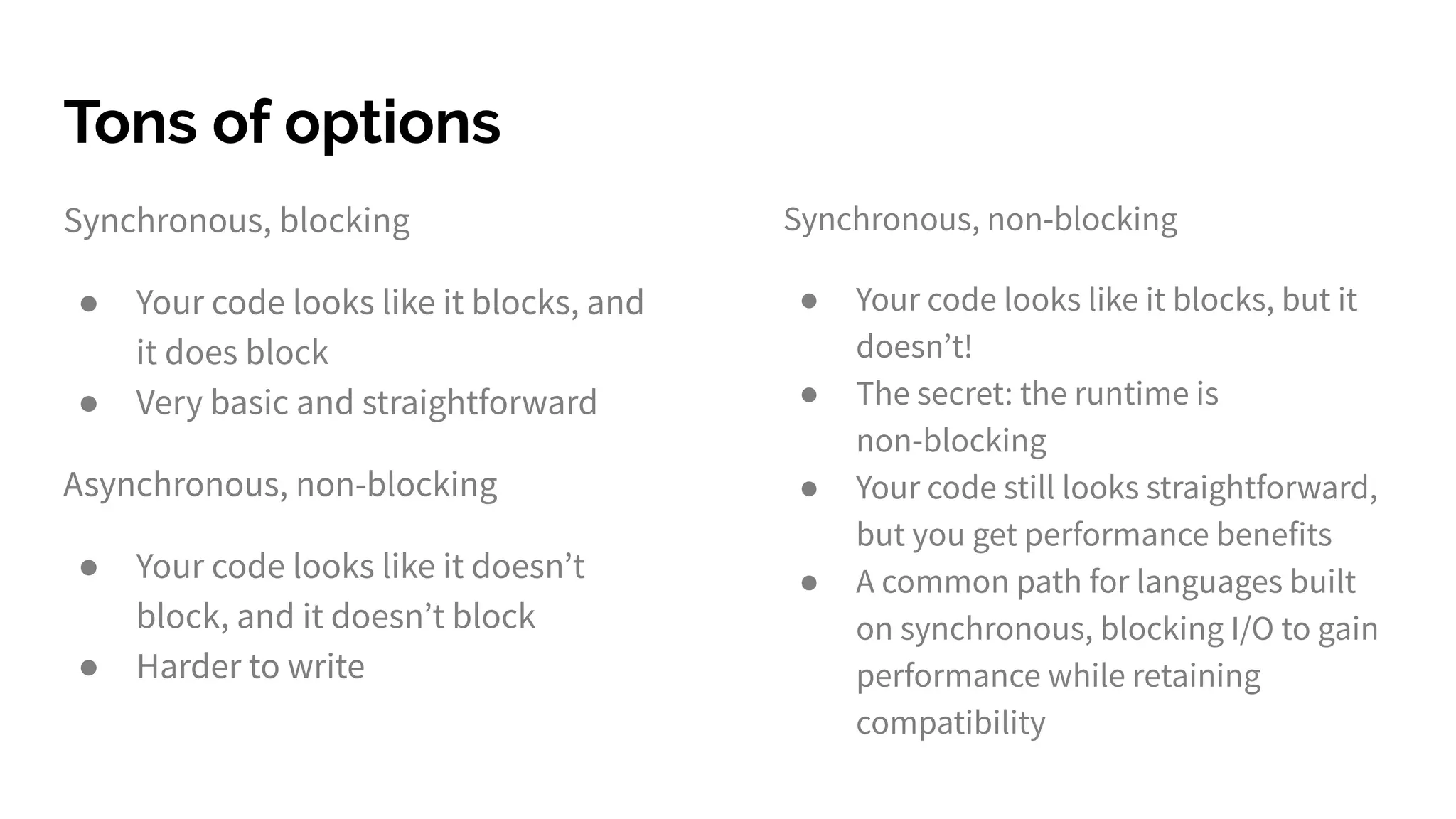

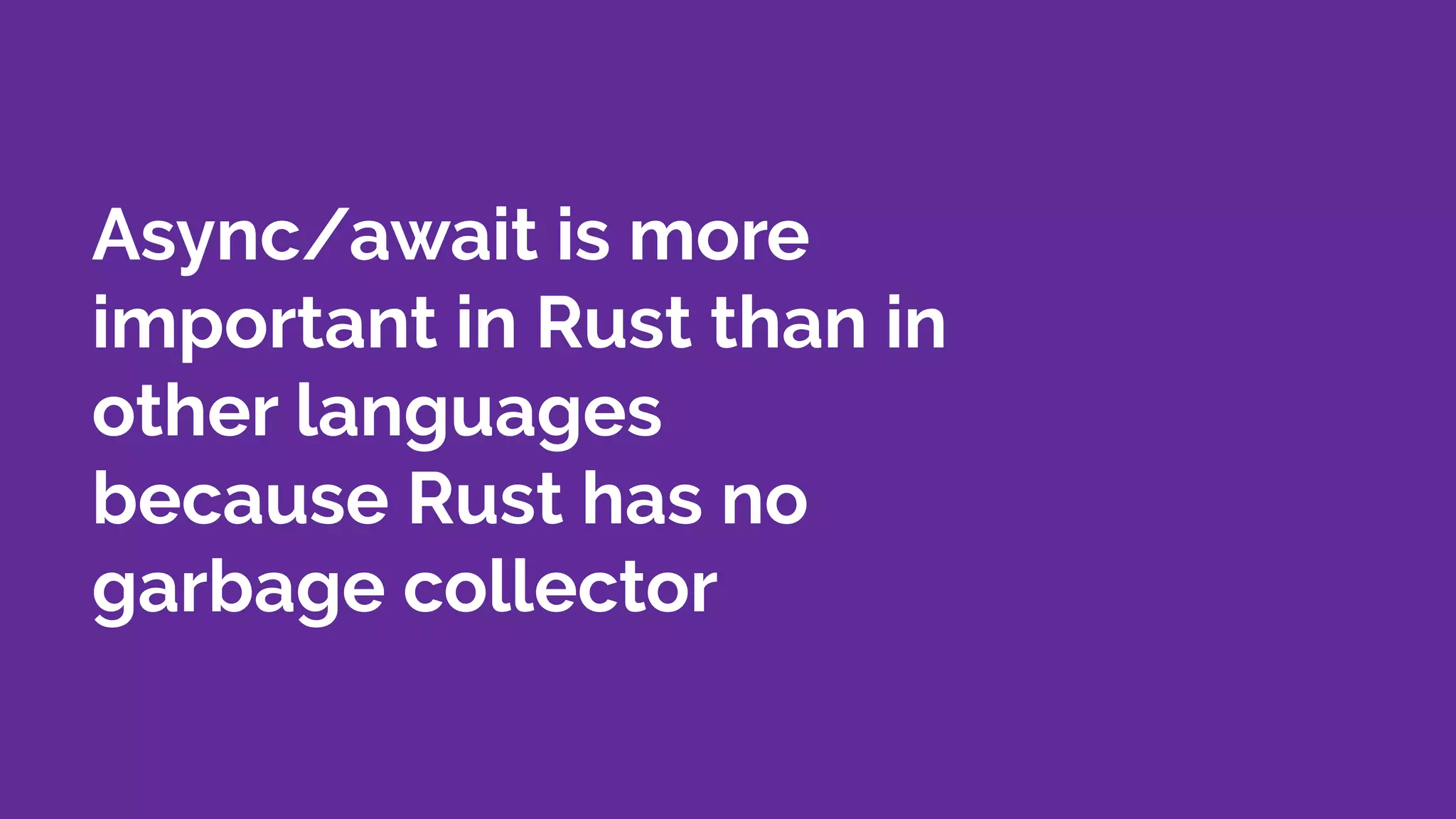

- Rust was initially built for synchronous I/O, but the community wanted asynchronous capabilities for building network services.

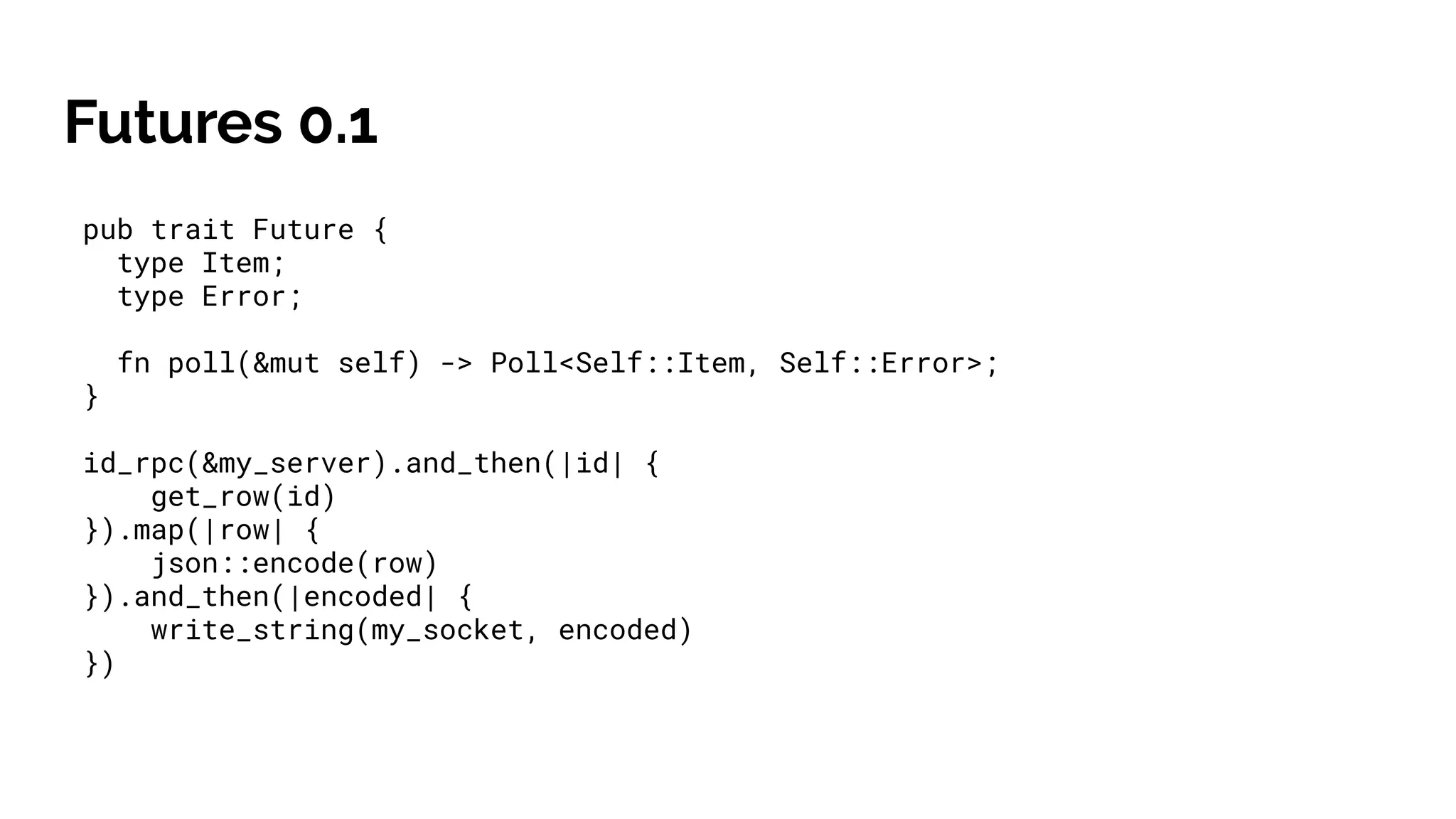

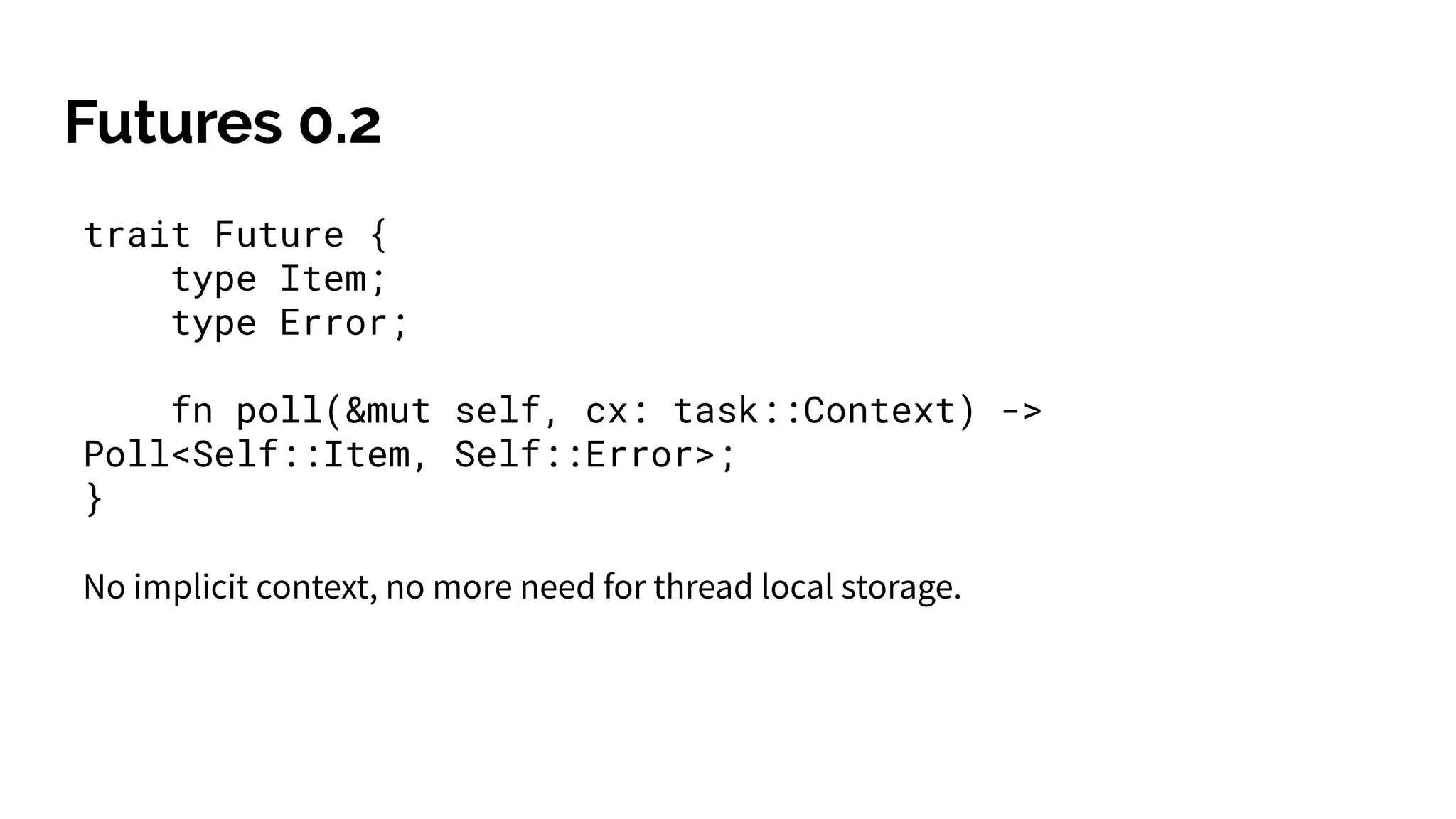

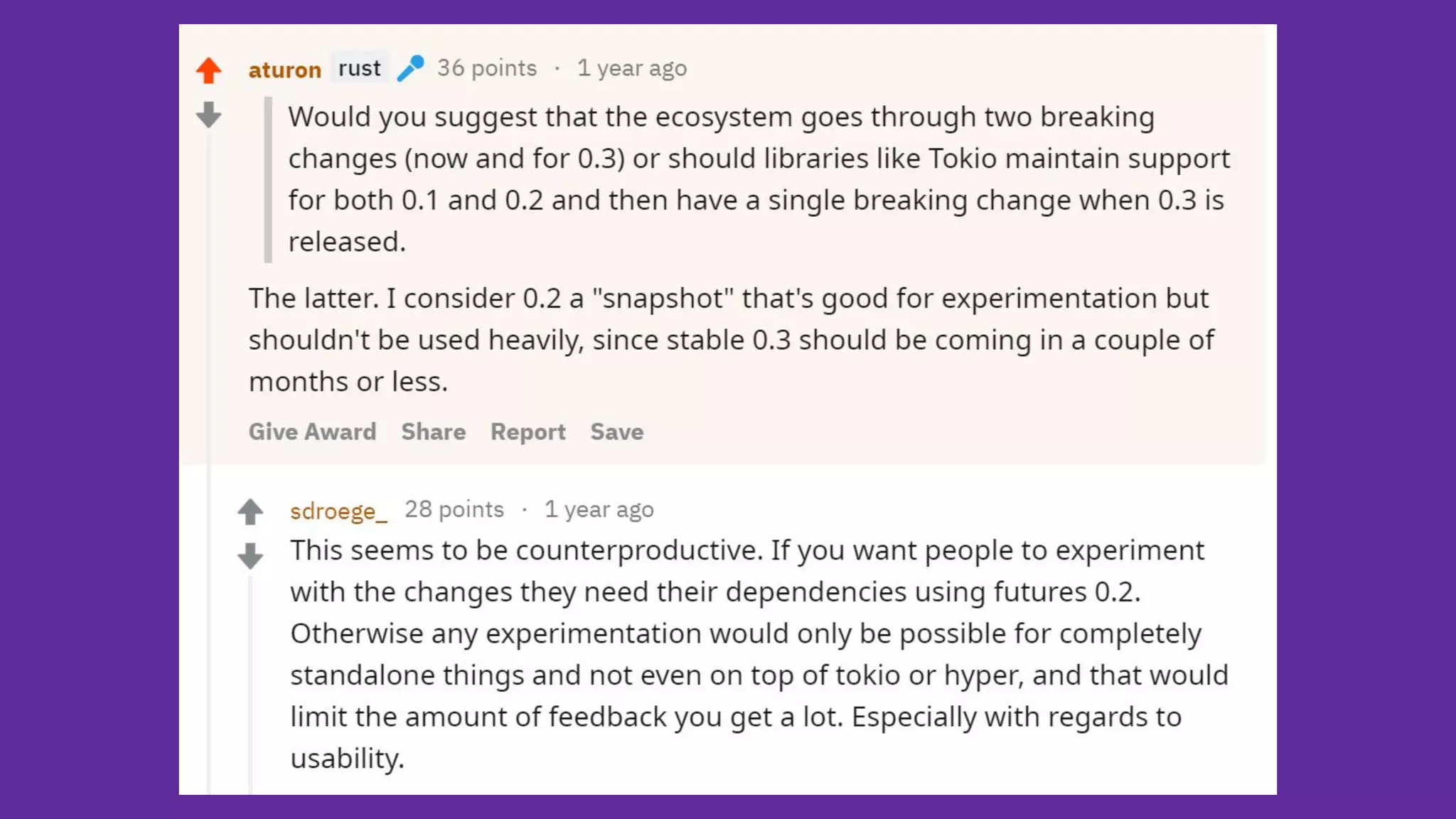

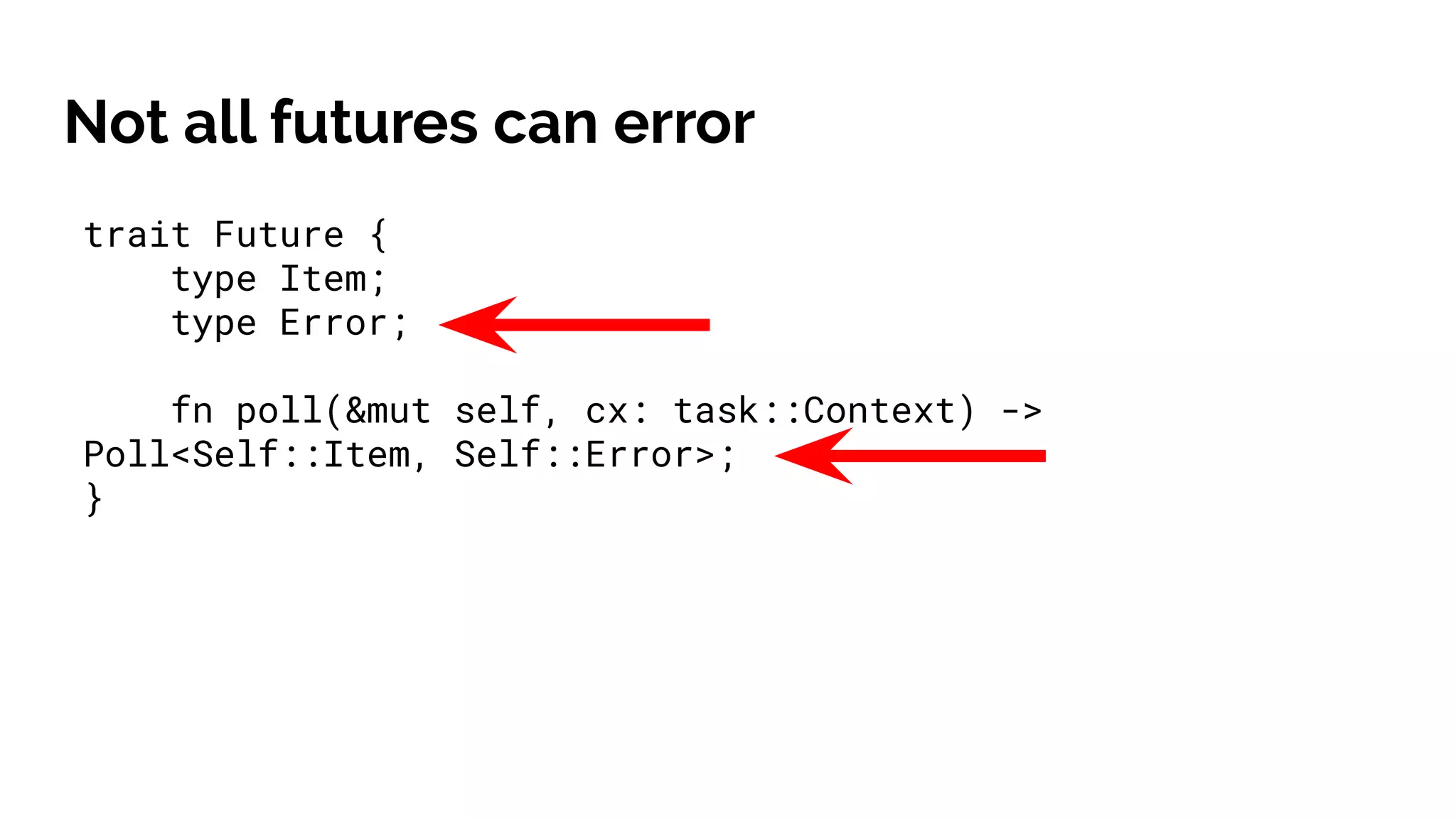

- This led to the development of futures in Rust to support asynchronous programming. However, futures had design issues that motivated futures 2.0.

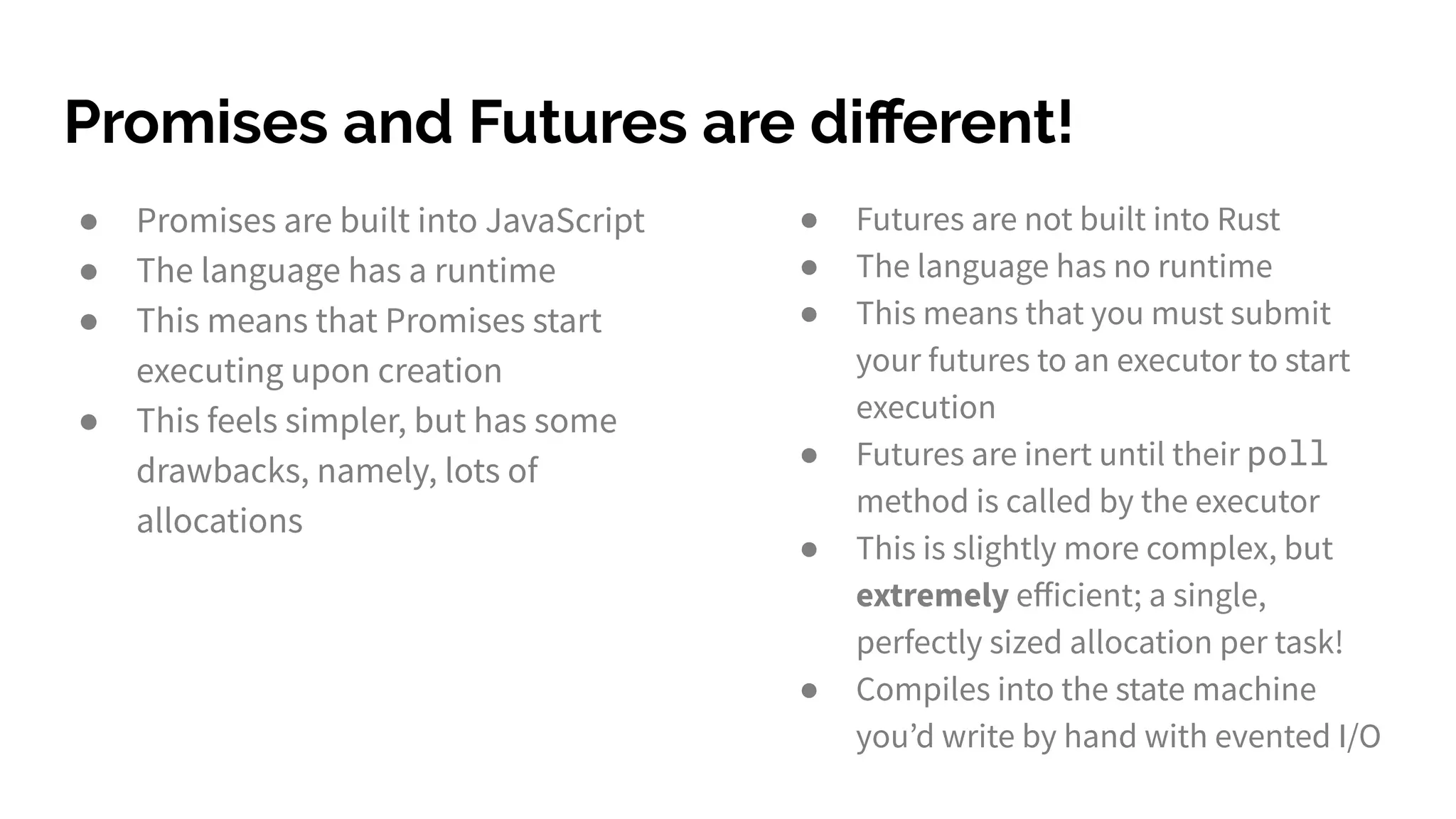

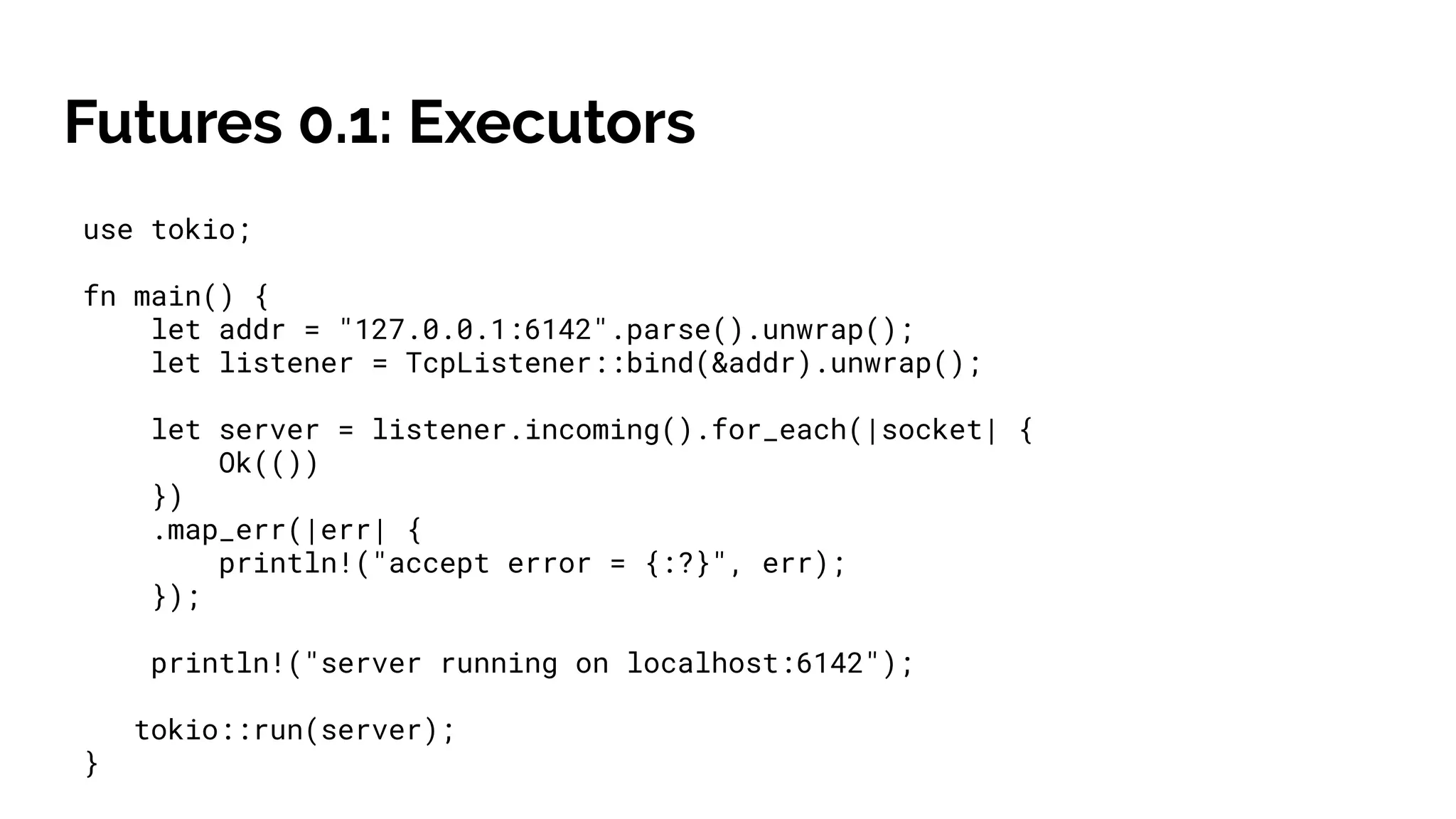

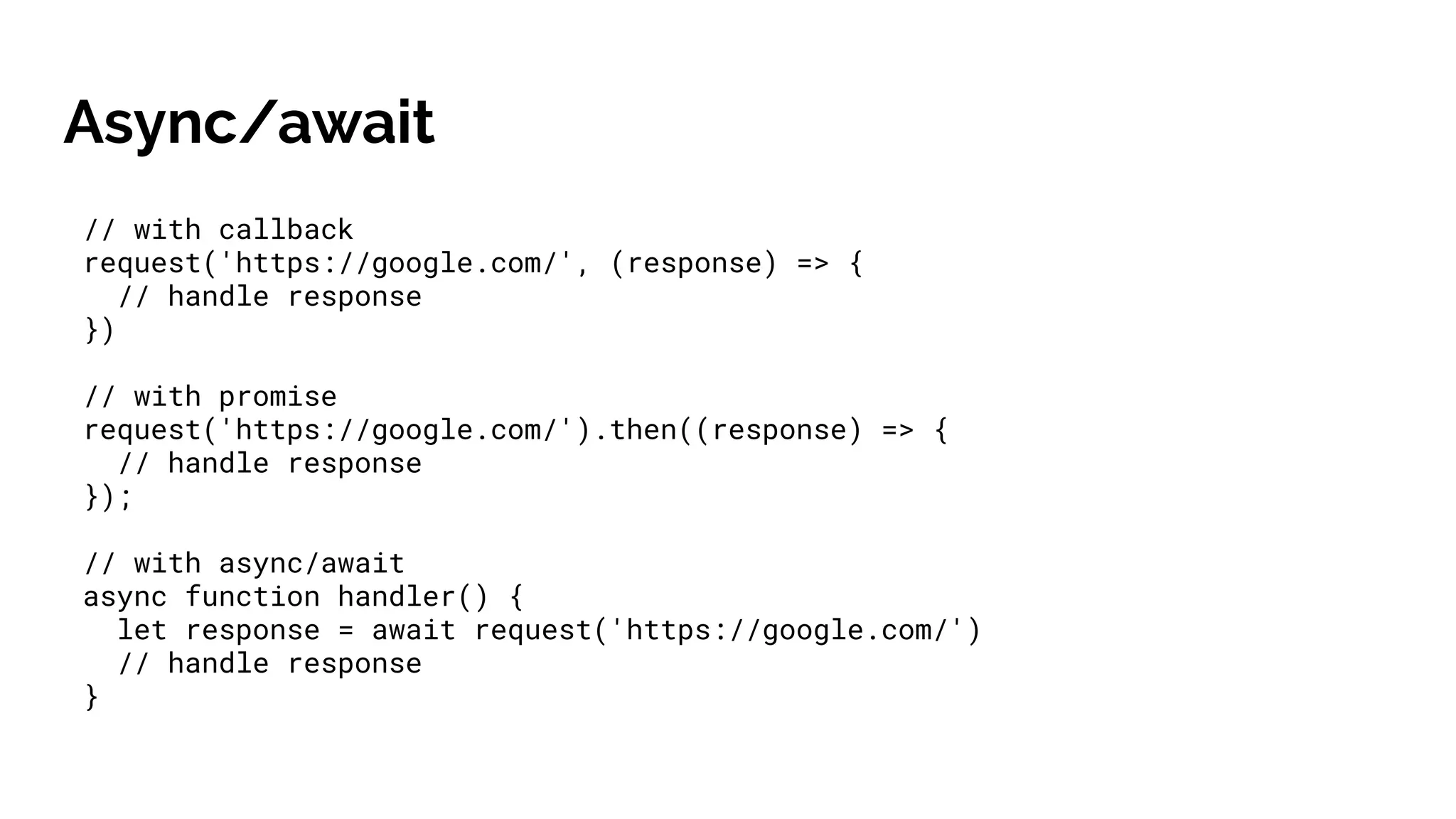

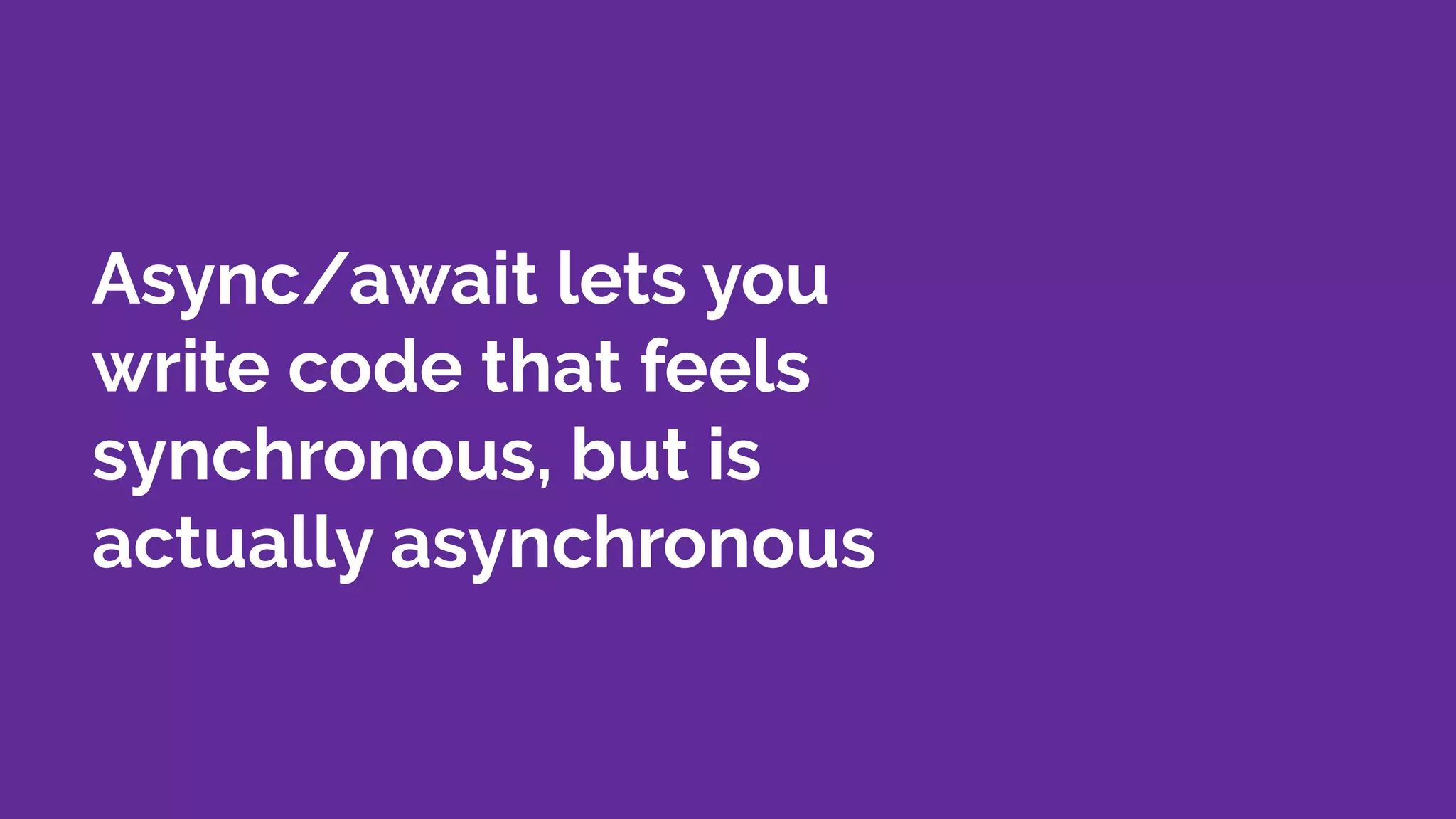

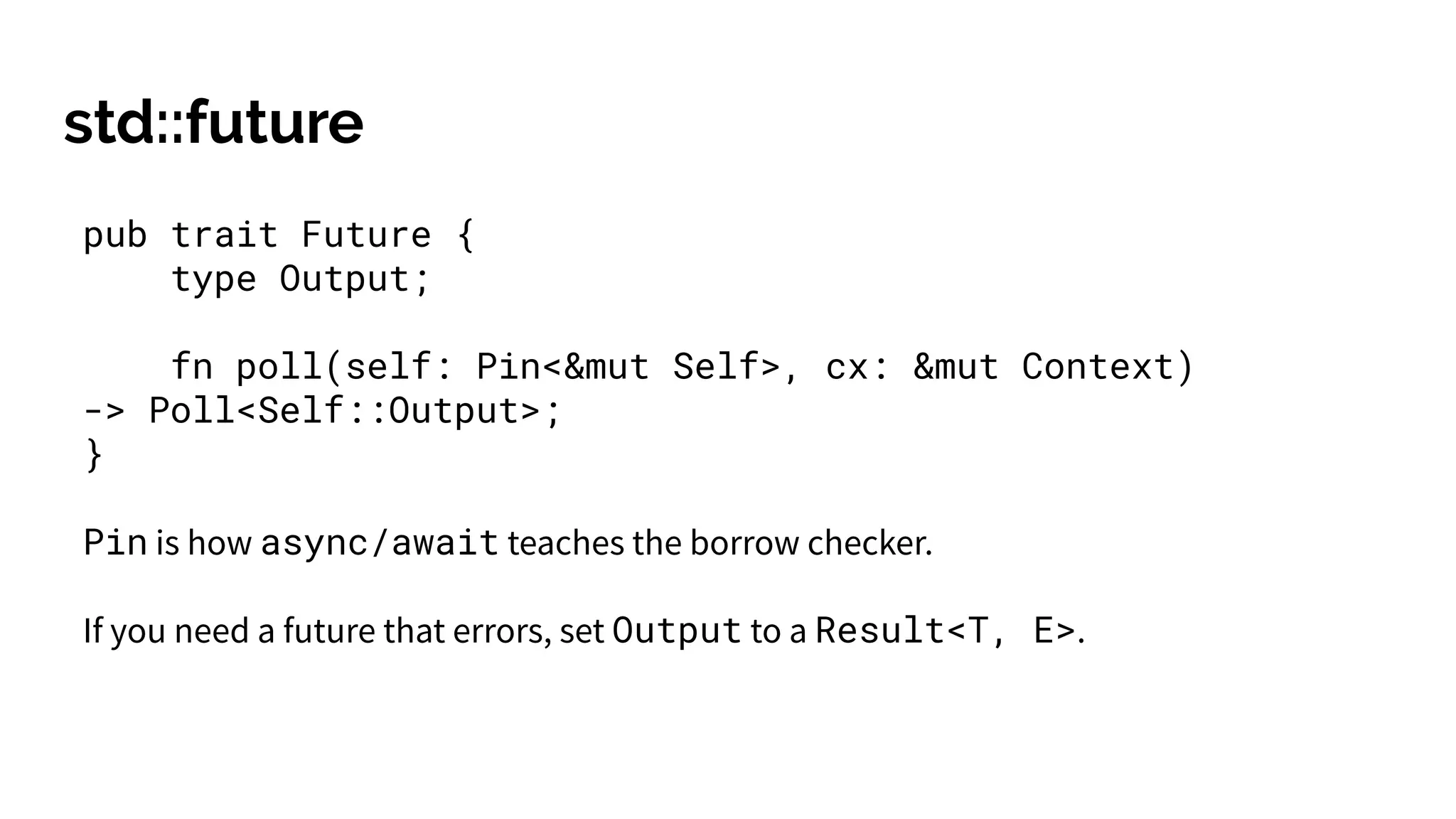

- Async/await was developed so that asynchronous code reads like synchronous code, while letting the borrow checker reason about borrows held across suspension points. Settling the syntax, in particular how `await` should be written, took considerable debate.

- After years of work, async/await was stabilized in Rust 1.39, giving Rust language-level support for asynchronous programming. Getting there meant resolving challenges specific to Rust, such as ownership and borrowing across suspension points.

![Rust example: synchronous](https://image.slidesharecdn.com/untitled-191022074031/75/Rust-s-Journey-to-Async-await-79-2048.jpg)

    fn read(&mut self, buf: &mut [u8]) -> Result<usize, io::Error>

    let mut buf = [0; 1024];
    let mut cursor = 0;
    while cursor < 1024 {
        cursor += socket.read(&mut buf[cursor..])?;
    }
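The synchronous loop on this slide can be made runnable with only the standard library. The sketch below is one possible version, not the speaker's code: the function name `read_1024` is hypothetical, an in-memory byte slice stands in for the socket, and the end-of-stream check is an added assumption, since a `read` that returns 0 would otherwise keep the loop spinning.

```rust
use std::io::{self, Read};

/// Fills a 1024-byte buffer from any blocking reader, mirroring the slide's loop.
/// `read_1024` is a hypothetical name chosen for this sketch.
fn read_1024<R: Read>(socket: &mut R) -> io::Result<[u8; 1024]> {
    let mut buf = [0u8; 1024];
    let mut cursor = 0;
    while cursor < buf.len() {
        // `read` may return fewer bytes than requested, so keep looping.
        let n = socket.read(&mut buf[cursor..])?;
        if n == 0 {
            // Assumption: treat an early end of stream as an error
            // instead of looping forever.
            return Err(io::Error::new(
                io::ErrorKind::UnexpectedEof,
                "stream ended early",
            ));
        }
        cursor += n;
    }
    Ok(buf)
}

fn main() -> io::Result<()> {
    // A byte slice stands in for the socket; `&[u8]` implements `Read`.
    let data = vec![7u8; 2048];
    let mut source: &[u8] = &data;
    let buf = read_1024(&mut source)?;
    println!("read {} bytes, first byte = {}", buf.len(), buf[0]);
    Ok(())
}
```

The borrow `&mut buf[cursor..]` lasts only for the duration of each blocking call, which is why the borrow checker has no trouble with this version.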

![Rust example: async with Futures](https://image.slidesharecdn.com/untitled-191022074031/75/Rust-s-Journey-to-Async-await-80-2048.jpg)

    fn read<T: AsMut<[u8]>>(self, buf: T) ->
        impl Future<Item = (Self, T, usize), Error = (Self, T, io::Error)>

… the code is too big to fit on the slide

The main problem: the borrow checker doesn’t understand asynchronous code. The constraints on the code when it’s created and when it executes are different.
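To illustrate why that signature passes `Self` and the buffer in by value and returns them in both the success and the error case, here is a minimal sketch of a hand-written future. It uses today's `std::future::Future` trait rather than the futures 0.1 trait shown on the slide, and `ReadOwned`, `read_owned`, `NoopWake`, and `poll_once` are hypothetical names. It also assumes the reader never blocks, so a single poll completes.

```rust
use std::future::Future;
use std::io::{self, Read};
use std::pin::Pin;
use std::sync::Arc;
use std::task::{Context, Poll, Wake, Waker};

/// Hypothetical hand-written future. It owns the reader and the buffer,
/// because it may be polled long after the function that created it returned,
/// so it cannot hold references into that function's stack frame.
struct ReadOwned<R, T> {
    state: Option<(R, T)>,
}

impl<R: Read + Unpin, T: AsMut<[u8]> + Unpin> Future for ReadOwned<R, T> {
    // Success and failure both hand the reader and buffer back to the caller.
    type Output = Result<(R, T, usize), (R, T, io::Error)>;

    fn poll(mut self: Pin<&mut Self>, _cx: &mut Context<'_>) -> Poll<Self::Output> {
        let (mut reader, mut buf) = self.state.take().expect("polled after completion");
        // Assumption: the reader never blocks, so one poll is enough here.
        match reader.read(buf.as_mut()) {
            Ok(n) => Poll::Ready(Ok((reader, buf, n))),
            Err(e) => Poll::Ready(Err((reader, buf, e))),
        }
    }
}

fn read_owned<R: Read + Unpin, T: AsMut<[u8]> + Unpin>(reader: R, buf: T) -> ReadOwned<R, T> {
    ReadOwned { state: Some((reader, buf)) }
}

/// A waker that does nothing, which is enough to poll a future by hand.
struct NoopWake;

impl Wake for NoopWake {
    fn wake(self: Arc<Self>) {}
}

fn poll_once<F: Future + Unpin>(mut fut: F) -> Poll<F::Output> {
    let waker = Waker::from(Arc::new(NoopWake));
    let mut cx = Context::from_waker(&waker);
    Pin::new(&mut fut).poll(&mut cx)
}

fn main() {
    let source = io::Cursor::new(vec![1u8, 2, 3, 4]);
    let buf = vec![0u8; 8];
    match poll_once(read_owned(source, buf)) {
        Poll::Ready(Ok((_reader, buf, n))) => println!("read {} bytes: {:?}", n, &buf[..n]),
        Poll::Ready(Err((_reader, _buf, e))) => println!("error: {}", e),
        Poll::Pending => println!("not ready yet"),
    }
}
```

Every caller has to thread the reader and buffer through each step by hand, which is the ergonomic cost the slide is pointing at.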

![Rust example: async with async/await](https://image.slidesharecdn.com/untitled-191022074031/75/Rust-s-Journey-to-Async-await-81-2048.jpg)

    async {
        let mut buf = [0; 1024];
        let mut cursor = 0;
        while cursor < 1024 {
            cursor += socket.read(&mut buf[cursor..]).await?;
        };
        buf
    }

async/await can teach the borrow checker about these constraints.
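A self-contained version of this slide's loop might look like the sketch below. It is written under several assumptions: `MemorySocket` is a hypothetical in-memory stand-in for an async socket, `block_on` is a deliberately naive busy-polling executor used only so the example runs without an external runtime, and the function returns `Ok(buf)` because `?` requires a `Result` return type.

```rust
use std::future::Future;
use std::io;
use std::sync::Arc;
use std::task::{Context, Poll, Wake, Waker};

/// Hypothetical stand-in for an async socket: hands out bytes from memory.
struct MemorySocket {
    data: Vec<u8>,
    pos: usize,
}

impl MemorySocket {
    /// Copies at most 100 bytes per call so the caller's loop runs several times.
    async fn read(&mut self, buf: &mut [u8]) -> io::Result<usize> {
        let remaining = &self.data[self.pos..];
        if remaining.is_empty() {
            return Err(io::ErrorKind::UnexpectedEof.into());
        }
        let n = remaining.len().min(buf.len()).min(100);
        buf[..n].copy_from_slice(&remaining[..n]);
        self.pos += n;
        Ok(n)
    }
}

async fn read_1024(socket: &mut MemorySocket) -> io::Result<[u8; 1024]> {
    let mut buf = [0u8; 1024];
    let mut cursor = 0;
    while cursor < buf.len() {
        // `buf` is borrowed across the `.await`; the compiler checks this
        // borrow much as it would in the synchronous version.
        cursor += socket.read(&mut buf[cursor..]).await?;
    }
    Ok(buf)
}

/// A waker that does nothing.
struct NoopWake;

impl Wake for NoopWake {
    fn wake(self: Arc<Self>) {}
}

/// Naive busy-polling executor, an assumption of this sketch.
/// Real programs would use an async runtime instead.
fn block_on<F: Future>(fut: F) -> F::Output {
    let mut fut = Box::pin(fut);
    let waker = Waker::from(Arc::new(NoopWake));
    let mut cx = Context::from_waker(&waker);
    loop {
        if let Poll::Ready(out) = fut.as_mut().poll(&mut cx) {
            return out;
        }
    }
}

fn main() -> io::Result<()> {
    let mut socket = MemorySocket { data: vec![42u8; 4096], pos: 0 };
    let buf = block_on(read_1024(&mut socket))?;
    println!("filled {} bytes, {} consumed from the source", buf.len(), socket.pos);
    Ok(())
}
```

Unlike the combinator style, nothing has to be moved into the call and handed back: the buffer stays a local variable and is simply borrowed for each read.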

![What syntax for async/await?](https://image.slidesharecdn.com/untitled-191022074031/75/Rust-s-Journey-to-Async-await-89-2048.jpg)

    async {
        let mut buf = [0; 1024];
        let mut cursor = 0;
        while cursor < 1024 {
            cursor += socket.read(&mut buf[cursor..]).await?;
        };
        buf
    }

    // no errors
    future.await

    // with errors
    future.await?
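The two forms on this slide can be seen side by side in a small sketch. The functions `answer`, `parse`, and `sum` are hypothetical, and `block_on` is a naive busy-polling executor assumed here only to keep the example free of external crates.

```rust
use std::future::Future;
use std::num::ParseIntError;
use std::sync::Arc;
use std::task::{Context, Poll, Wake, Waker};

// Hypothetical async functions used only for illustration.
async fn answer() -> u32 {
    42
}

async fn parse(text: &str) -> Result<u32, ParseIntError> {
    text.parse()
}

async fn sum(text: &str) -> Result<u32, ParseIntError> {
    // No errors: `.await` yields the value directly.
    let a = answer().await;
    // With errors: `.await` yields a `Result`, and the trailing `?`
    // returns early from `sum` in the `Err` case.
    let b = parse(text).await?;
    Ok(a + b)
}

/// A waker that does nothing.
struct NoopWake;

impl Wake for NoopWake {
    fn wake(self: Arc<Self>) {}
}

/// Naive busy-polling executor, an assumption of this sketch.
fn block_on<F: Future>(fut: F) -> F::Output {
    let mut fut = Box::pin(fut);
    let waker = Waker::from(Arc::new(NoopWake));
    let mut cx = Context::from_waker(&waker);
    loop {
        if let Poll::Ready(out) = fut.as_mut().poll(&mut cx) {
            return out;
        }
    }
}

fn main() {
    println!("{:?}", block_on(sum("8")));
    println!("{:?}", block_on(sum("not a number")));
}
```

Because `await` is written in postfix position, it composes with `?` and with method chains from left to right, without extra parentheses.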

![Additional Ergonomic improvements](https://image.slidesharecdn.com/untitled-191022074031/75/Rust-s-Journey-to-Async-await-92-2048.jpg)

    use runtime::net::UdpSocket;

    #[runtime::main]
    async fn main() -> std::io::Result<()> {
        let mut socket = UdpSocket::bind("127.0.0.1:8080")?;
        let mut buf = vec![0u8; 1024];

        println!("Listening on {}", socket.local_addr()?);

        loop {
            let (recv, peer) = socket.recv_from(&mut buf).await?;
            let sent = socket.send_to(&buf[..recv], &peer).await?;
            println!("Sent {} out of {} bytes to {}", sent, recv, peer);
        }
    }