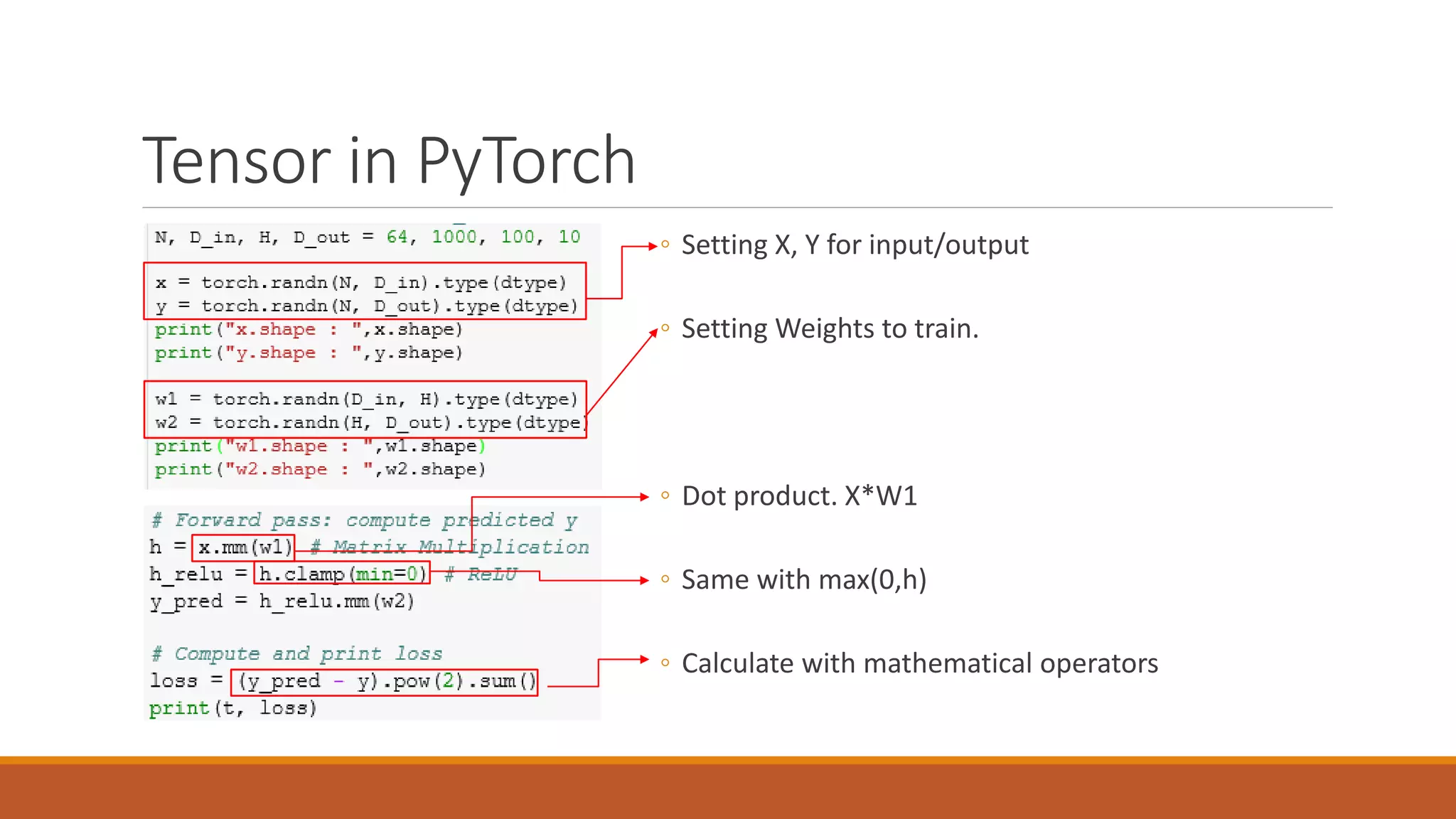

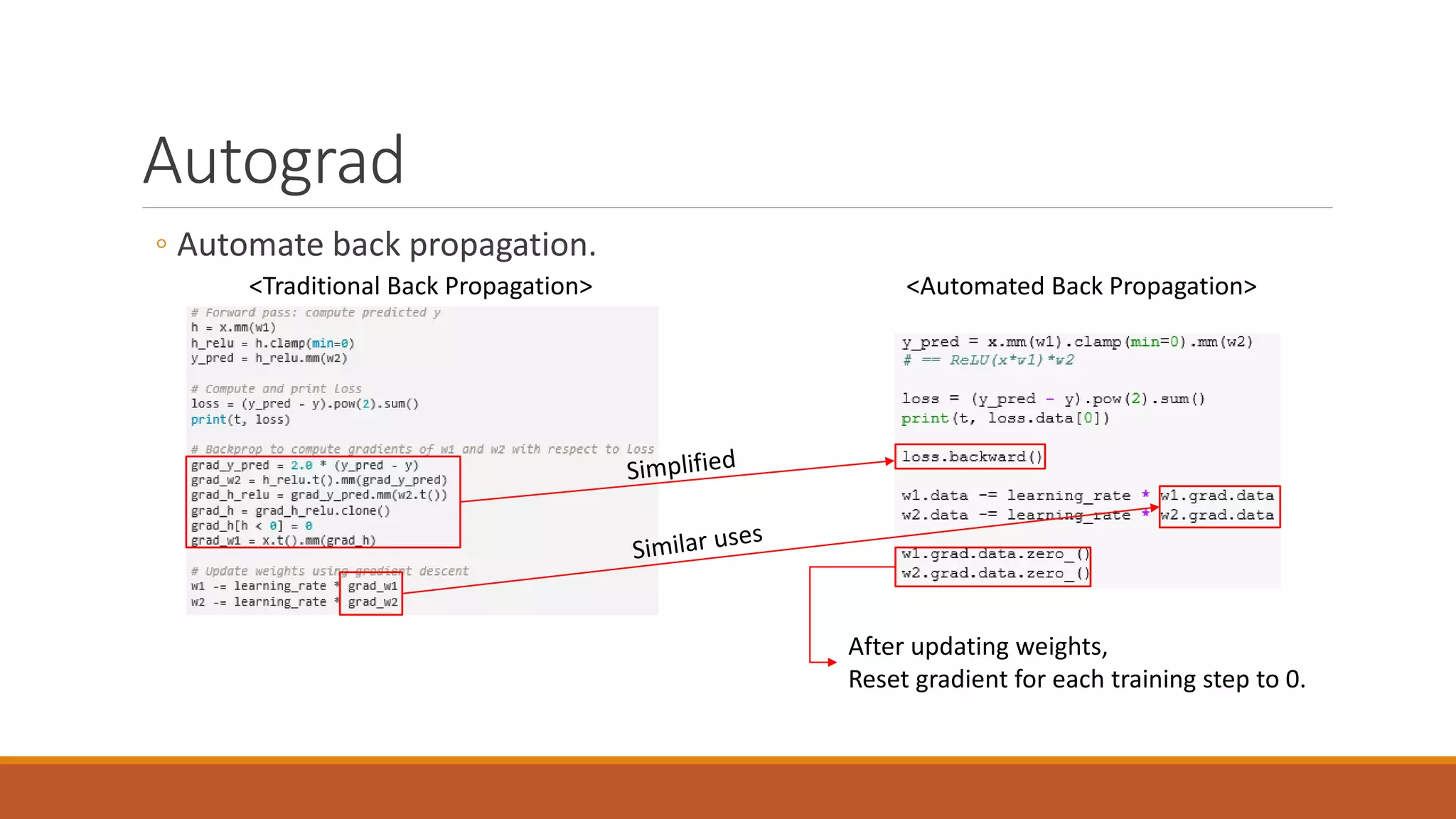

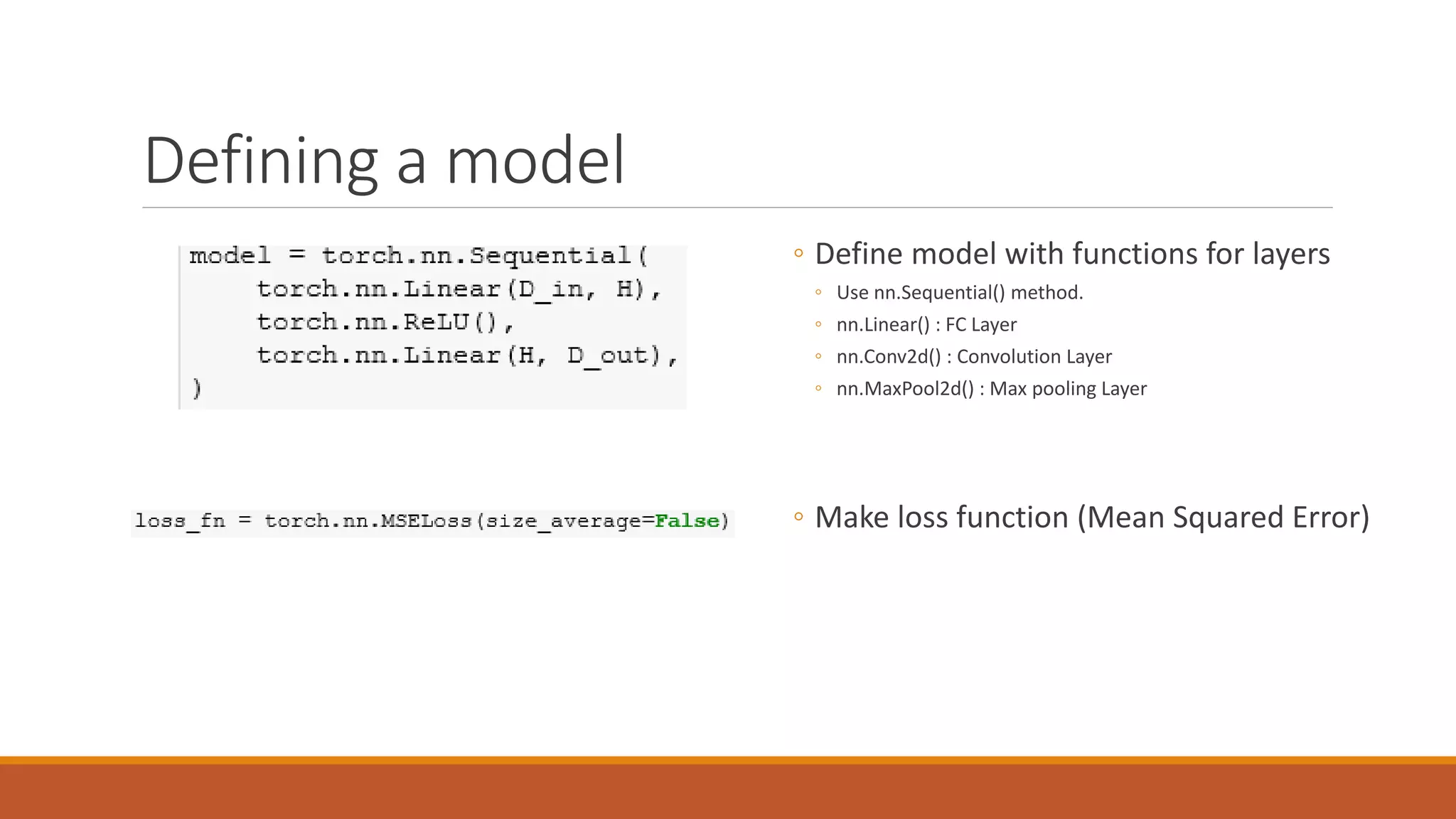

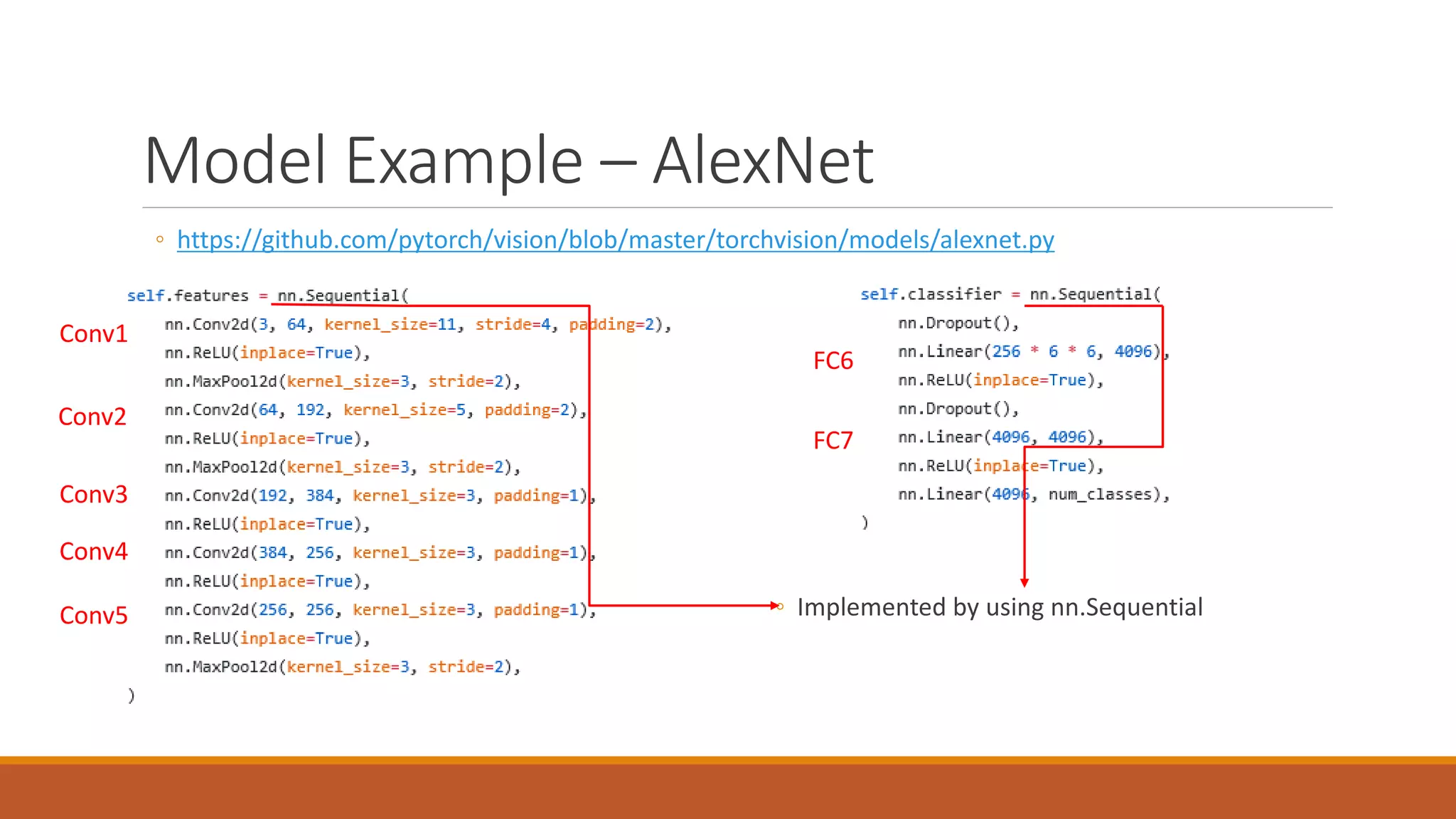

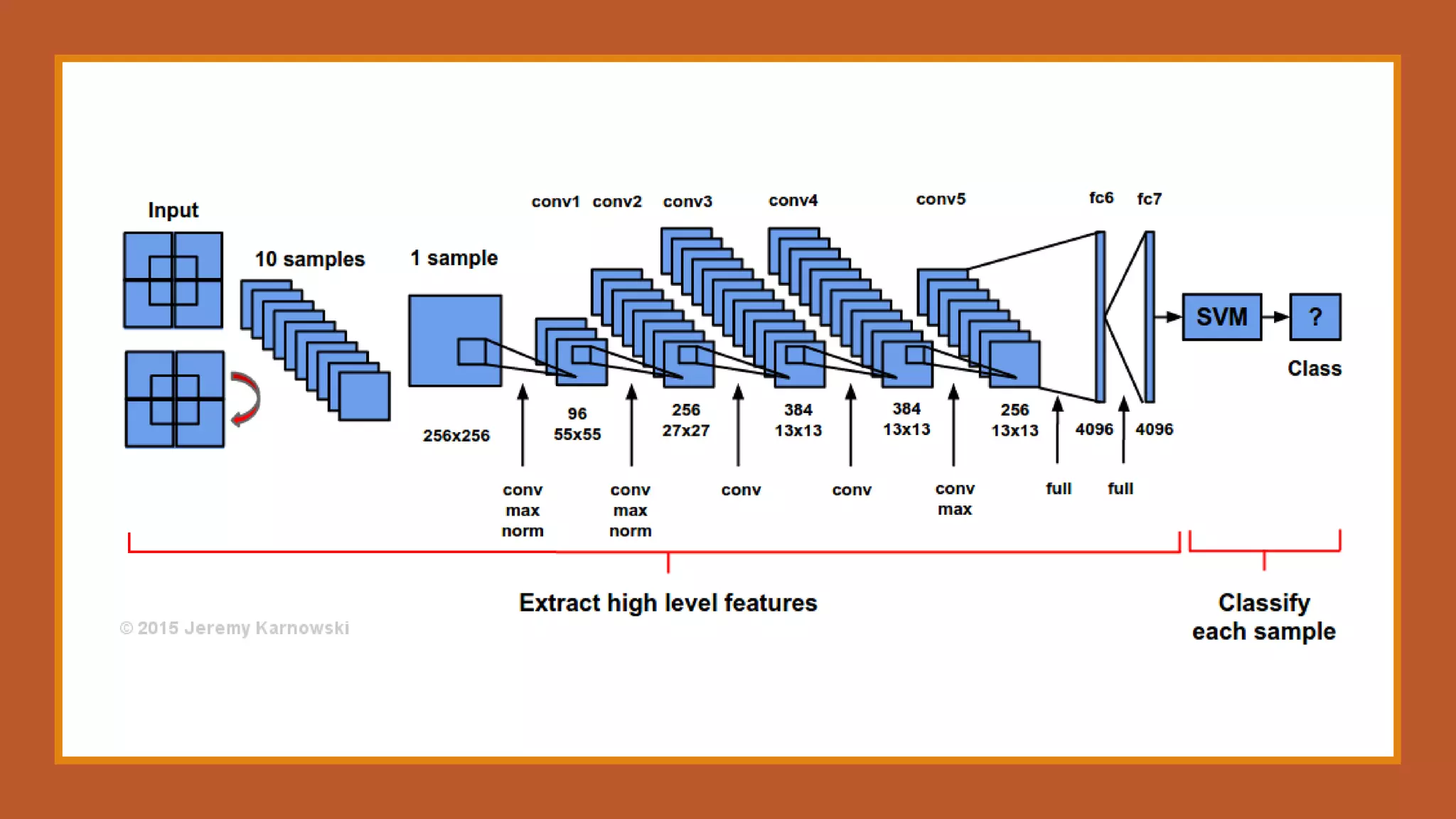

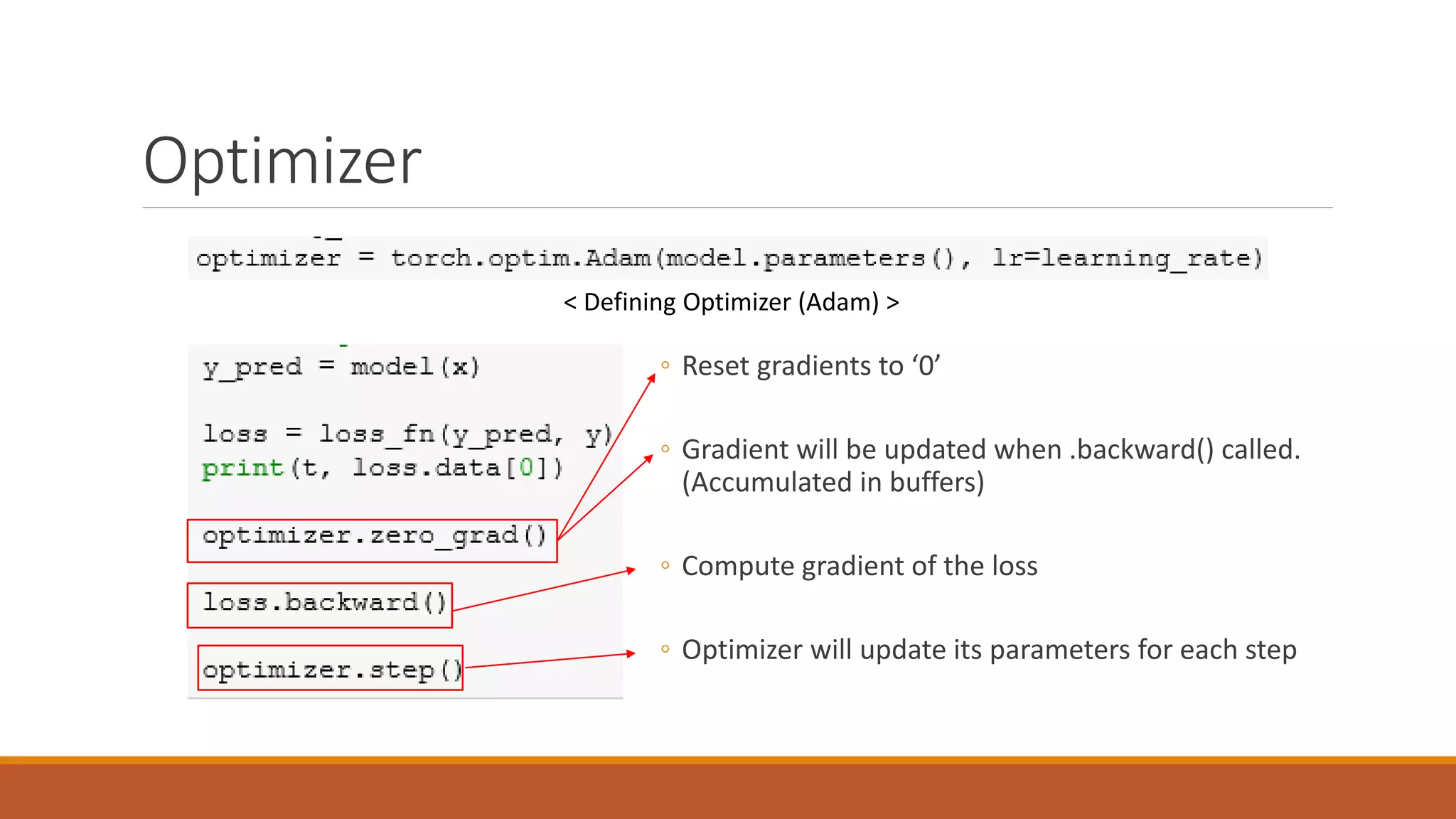

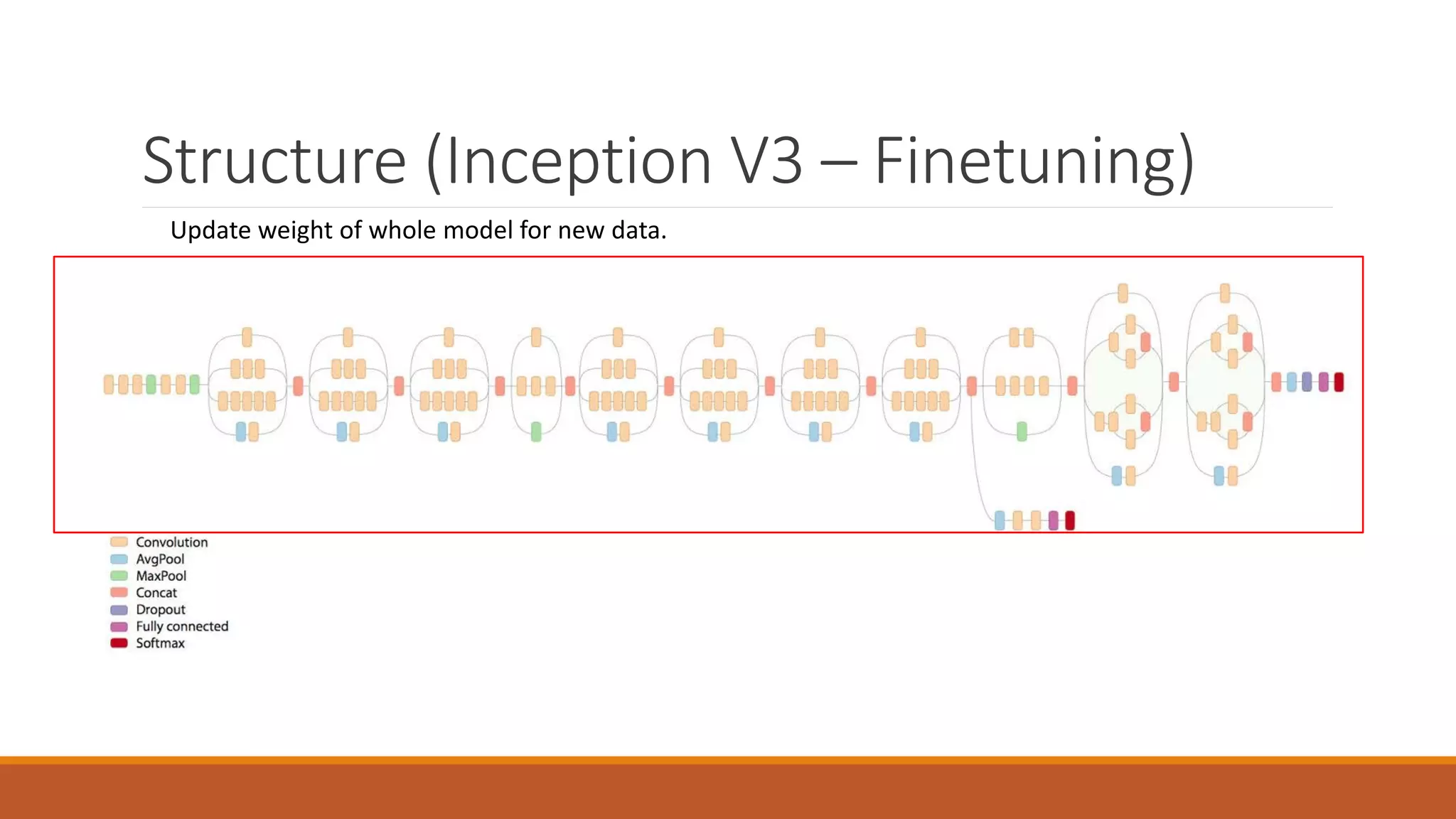

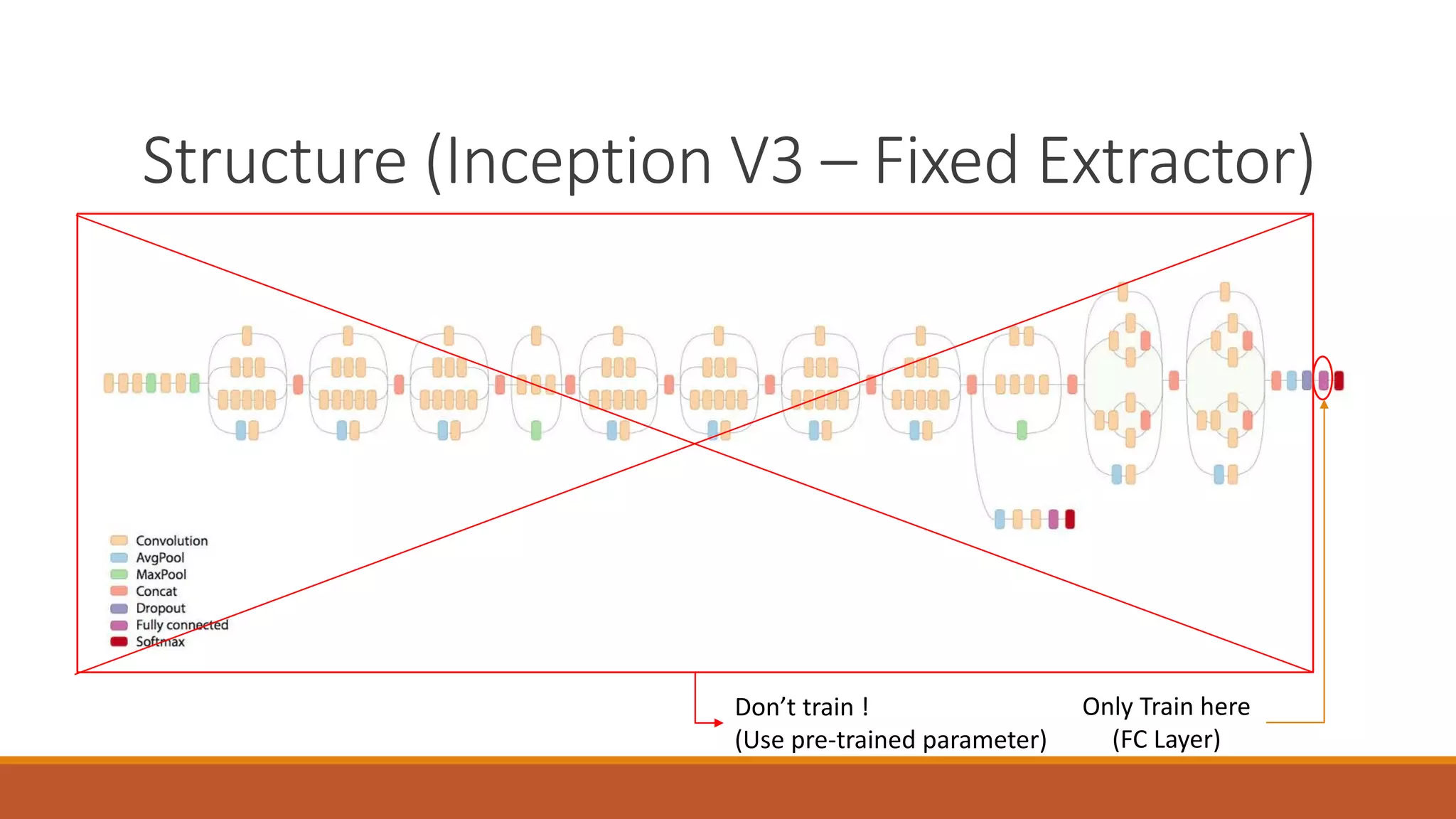

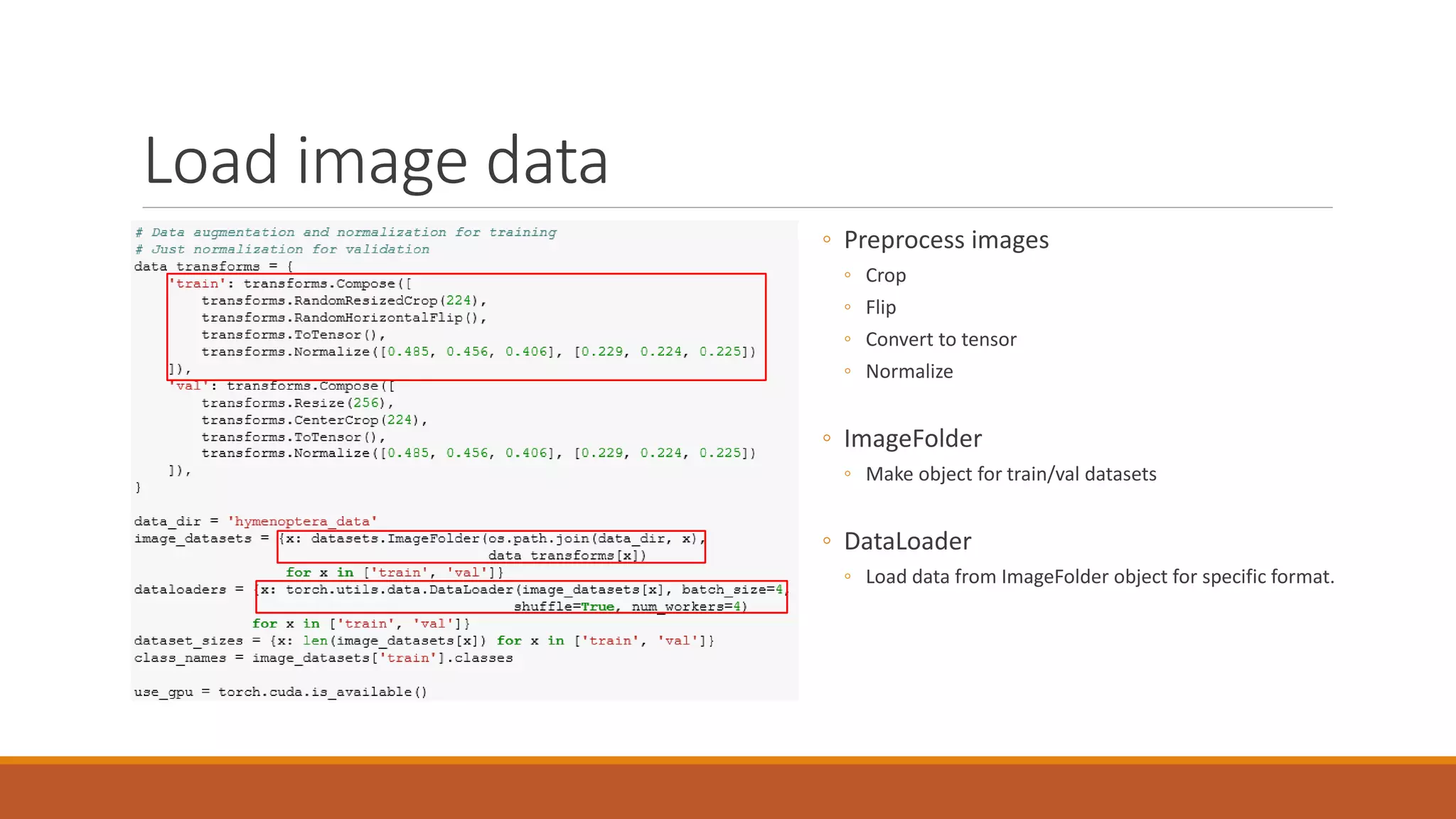

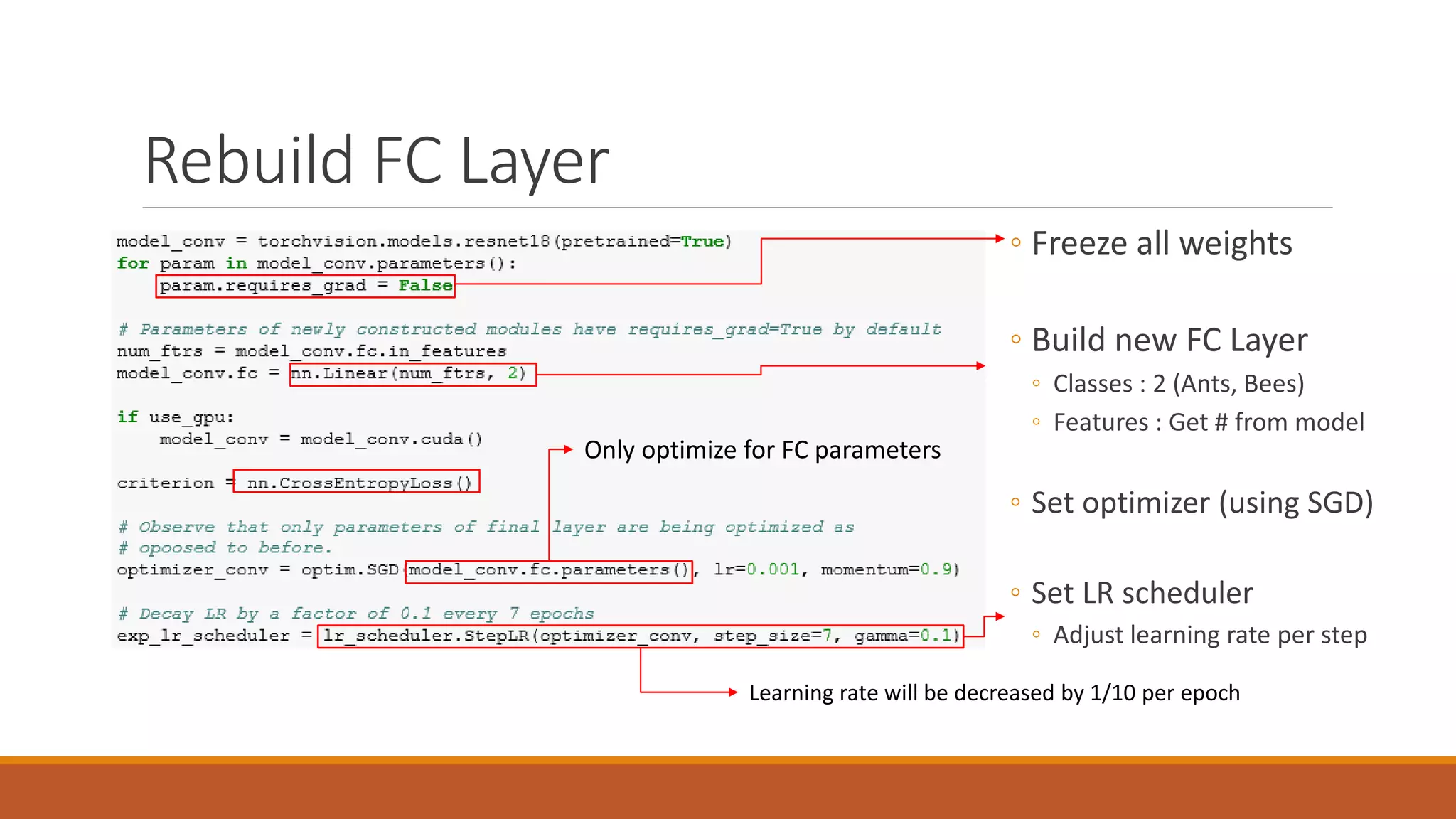

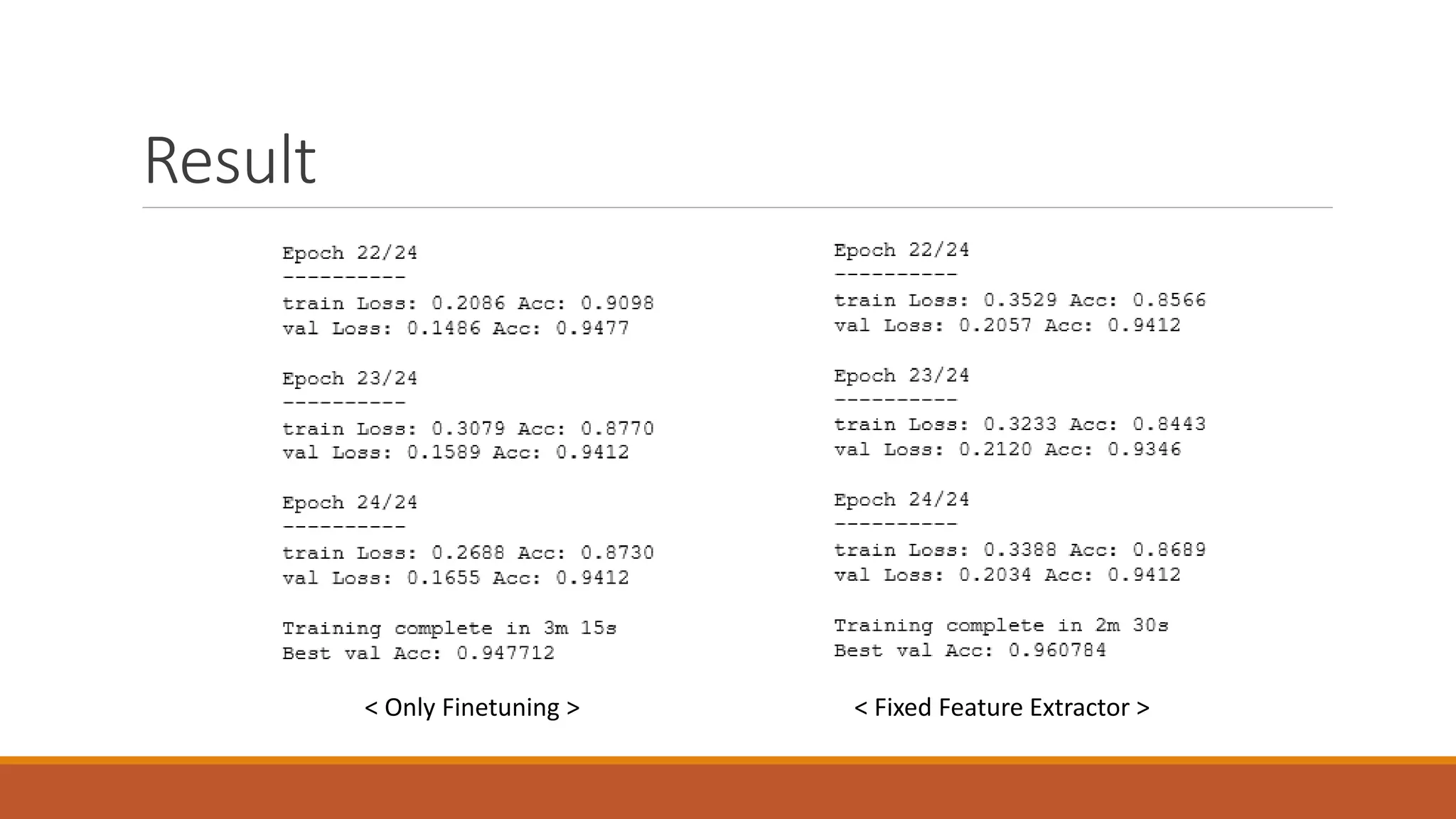

This document introduces PyTorch and covers its core concepts: tensors, model definition, and automatic differentiation (autograd). Autograd computes the gradients that backpropagation uses to train neural networks. The document then turns to transfer learning: how to use pre-trained models, how to fine-tune them, and how to restructure the fully connected layers so that the network fits a specific task. It also covers image preprocessing and includes suggestions for further study and self-check questions.
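The workflow summarized above can be sketched end to end in a few lines of PyTorch. This is a minimal illustration under stated assumptions, not the code from the slides. `TinyNet` is a hypothetical stand-in for a pre-trained backbone; in practice you might load a torchvision model such as `resnet18`, whose final layer is also named `fc`. The data are random tensors. The normalization constants are the ImageNet channel statistics commonly used with torchvision's pre-trained weights.

```python
import torch
from torch import nn

torch.manual_seed(0)

# Tensors: a toy batch of 8 RGB "images" (4x4 pixels, values in [0, 1]) and binary labels.
x = torch.rand(8, 3, 4, 4)
y = torch.randint(0, 2, (8,))

# Preprocessing: normalize each channel with the ImageNet mean/std used by
# torchvision's pre-trained models (assumes inputs are already scaled to [0, 1]).
mean = torch.tensor([0.485, 0.456, 0.406]).view(1, 3, 1, 1)
std = torch.tensor([0.229, 0.224, 0.225]).view(1, 3, 1, 1)
x = (x - mean) / std


class TinyNet(nn.Module):
    """Hypothetical stand-in for a pre-trained CNN; like torchvision's ResNet,
    it ends in a fully connected layer named `fc`."""

    def __init__(self, num_classes: int = 10):
        super().__init__()
        self.features = nn.Sequential(
            nn.Conv2d(3, 16, kernel_size=3, padding=1),
            nn.ReLU(),
            nn.AdaptiveAvgPool2d(1),  # -> (N, 16, 1, 1)
            nn.Flatten(),             # -> (N, 16)
        )
        self.fc = nn.Linear(16, num_classes)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return self.fc(self.features(x))


# Transfer learning: pretend `model` already holds pre-trained weights for 10 classes.
model = TinyNet(num_classes=10)

# Freeze every existing parameter so autograd no longer computes gradients for them.
for p in model.parameters():
    p.requires_grad = False

# Replace the classifier head for the new 2-class task; new layers are trainable by default.
model.fc = nn.Linear(model.fc.in_features, 2)

# Only parameters that still require gradients are handed to the optimizer.
optimizer = torch.optim.SGD([p for p in model.parameters() if p.requires_grad], lr=0.1)
loss_fn = nn.CrossEntropyLoss()

losses = []
for _ in range(20):
    optimizer.zero_grad()         # clear gradients from the previous step
    loss = loss_fn(model(x), y)   # forward pass records the autograd graph
    loss.backward()               # backpropagation: fills .grad of trainable parameters
    optimizer.step()              # gradient-descent update of the new head only
    losses.append(loss.item())

print(f"loss: {losses[0]:.3f} -> {losses[-1]:.3f}")
```

Freezing the backbone and training only the new head is the feature-extraction variant of transfer learning. To fine-tune instead, leave some or all backbone parameters with `requires_grad=True` and pass them to the optimizer, typically with a smaller learning rate than the new head.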