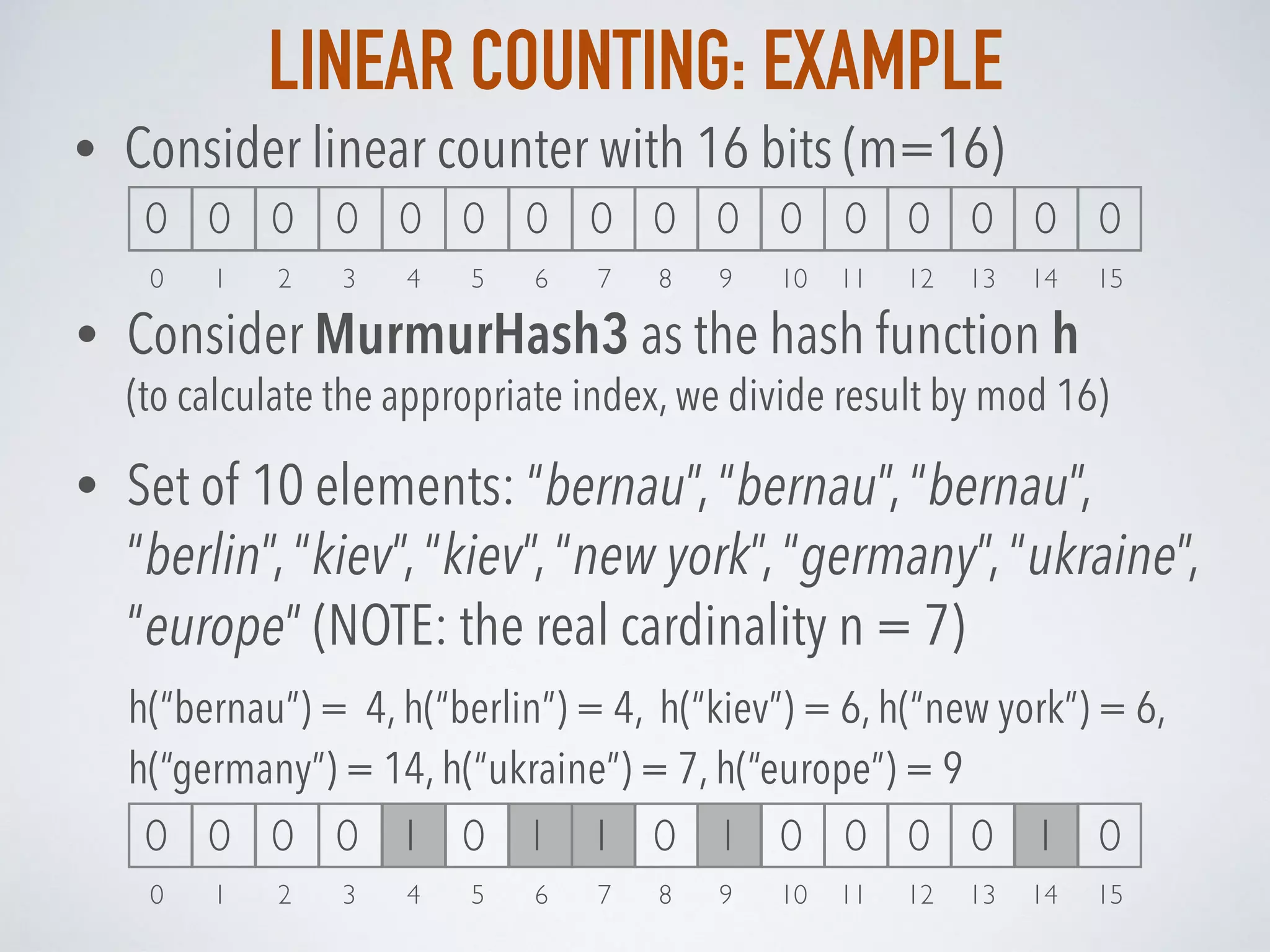

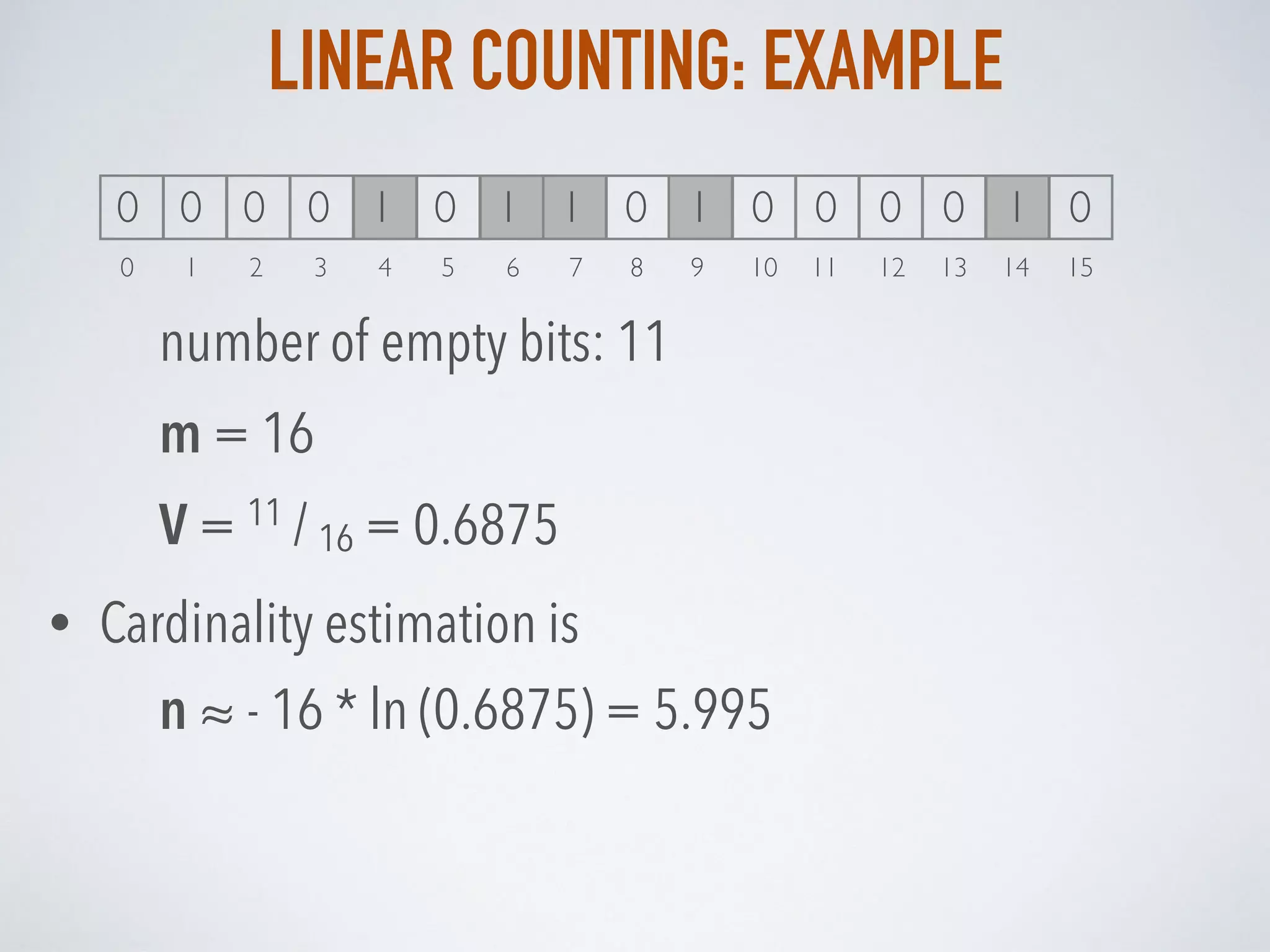

This document discusses various probabilistic data structures for estimating cardinality, including linear counting, HyperLogLog, and HyperLogLog++. It explains the algorithms, advantages, and applications of each method, highlighting memory efficiency and accuracy for large datasets. Additionally, the document presents examples and compares the performance of these techniques in different contexts.

![HYPERLOGLOG: ALGORITHM

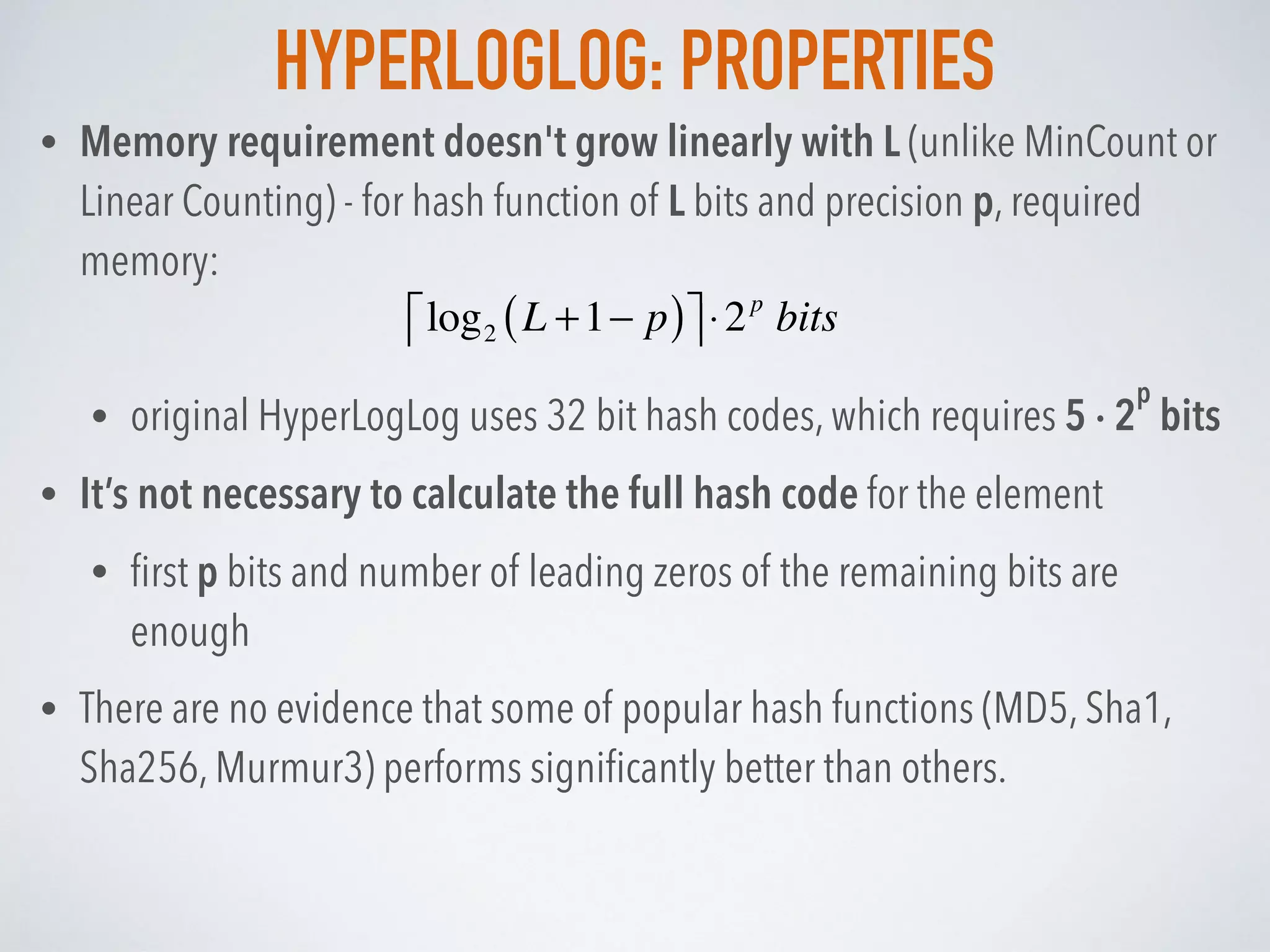

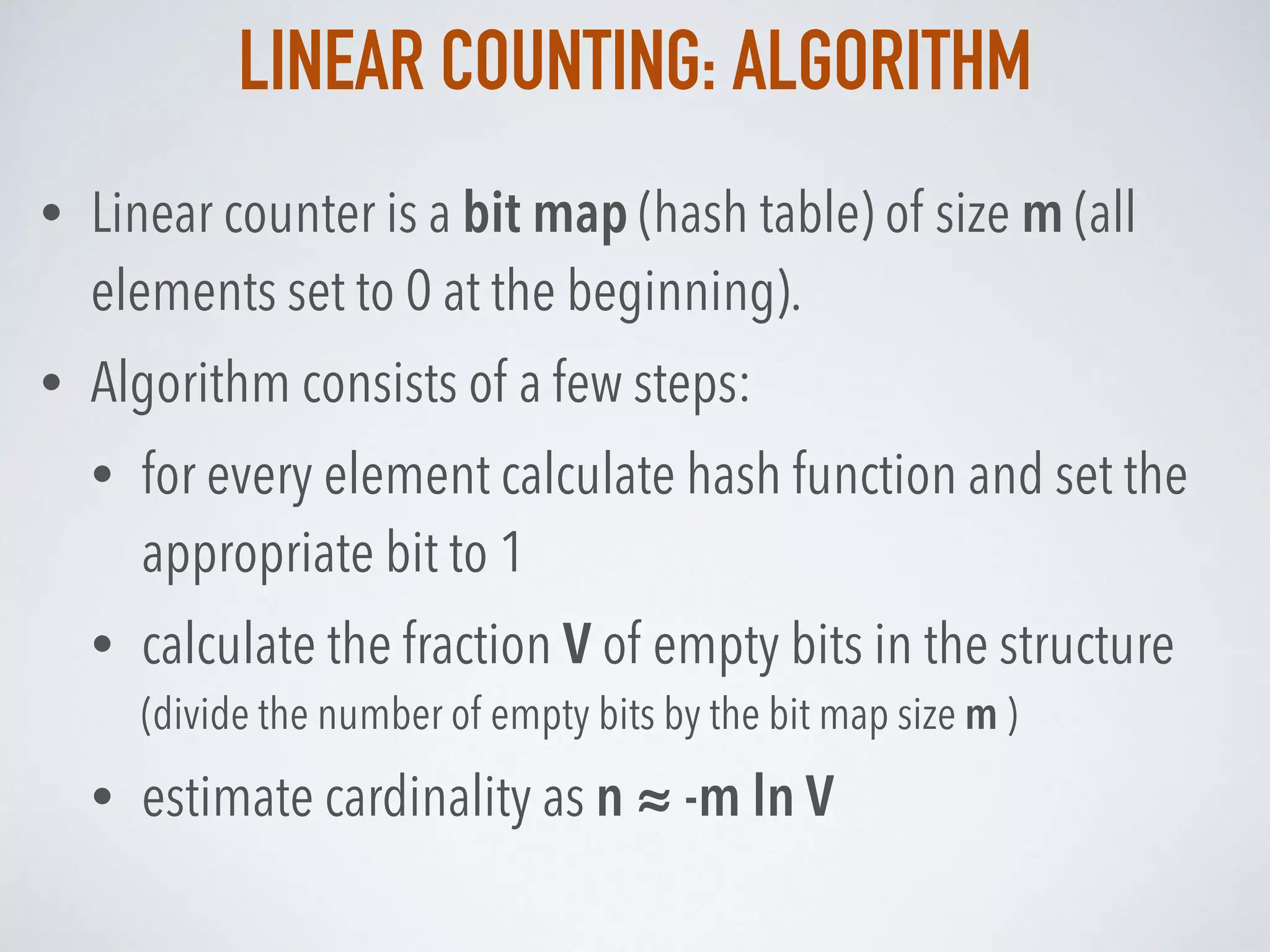

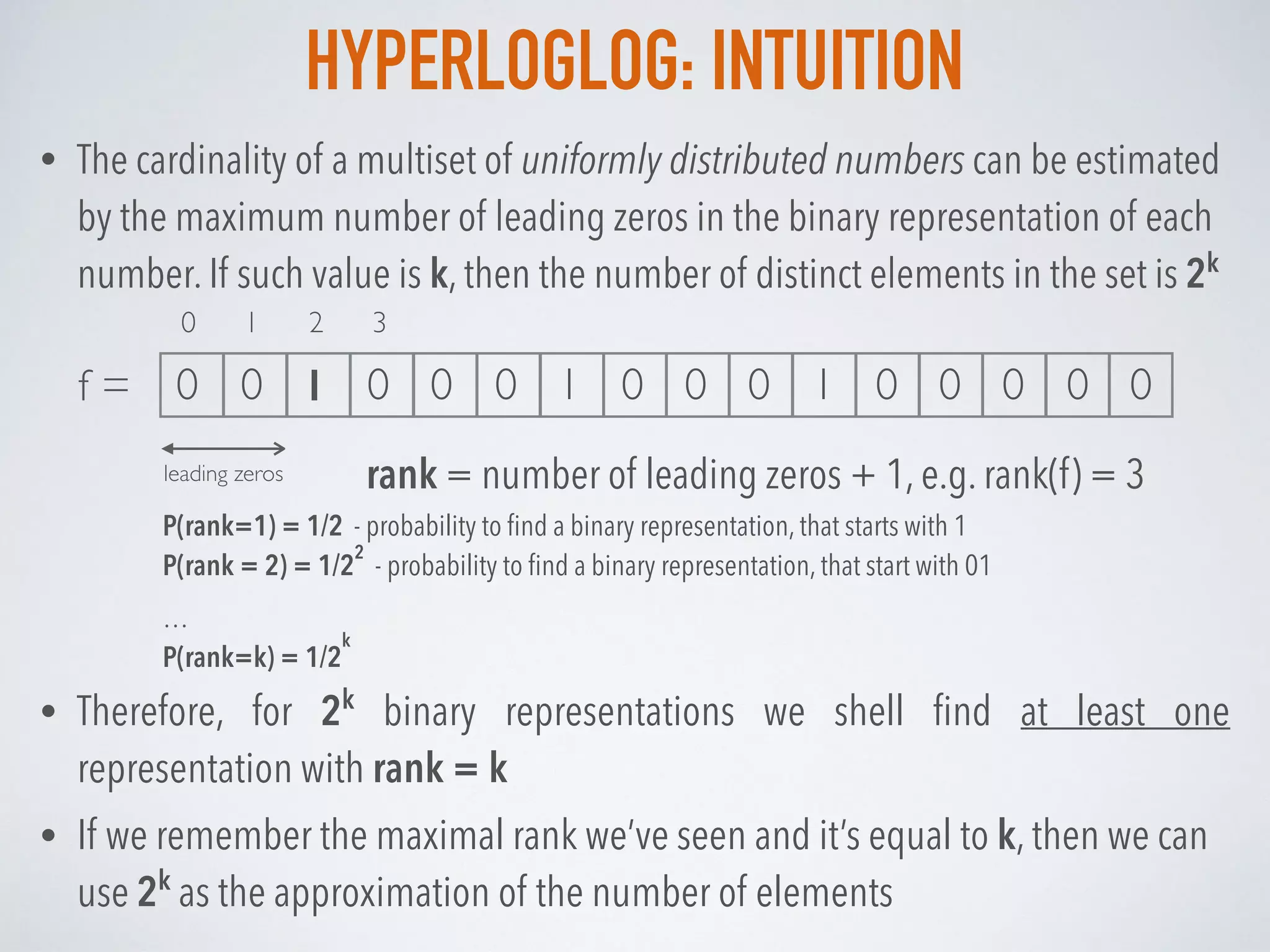

• Stochastic averaging is used to reduce the large variability:

• The input stream of data elements S is divided into m substreams S_i using the first p bits of the hash values (m = 2^p).

• In each substream, the rank (after the initial p bits that are used for substreaming) is measured independently.

• These numbers are kept in an array of registers M, where M[i] stores the maximum rank seen so far for the substream with index i.

• The cardinality estimate is computed as the normalized, bias-corrected harmonic mean of the estimates on the substreams:

$$DV_{HLL} = \mathrm{const}(m) \cdot m^2 \cdot \left( \sum_{j=1}^{m} 2^{-M_j} \right)^{-1}$$](https://image.slidesharecdn.com/probabilisticdatastructurespart2-160726192154/75/Probabilistic-data-structures-Part-2-Cardinality-13-2048.jpg)
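The steps on this slide can be sketched in Python. This is a minimal illustration, not a reference implementation. The class name `HyperLogLogSketch`, the use of the first 32 bits of SHA-1 as the hash function, the default `p = 10`, and the closed form `0.7213 / (1 + 1.079 / m)` for const(m) (the usual approximation for m ≥ 128) are all assumptions that the slide does not specify. The sketch also leaves out any small-range or large-range correction, so it shows only the raw estimator.

```python
import hashlib

HASH_BITS = 32  # assumption: the slide does not fix a hash width


def rank(bits: int, width: int) -> int:
    """1-based position of the leftmost 1-bit in a `width`-bit value.

    Returns width + 1 when all bits are zero.
    """
    return width - bits.bit_length() + 1


class HyperLogLogSketch:
    """Hypothetical minimal HyperLogLog: raw estimator only, no corrections."""

    def __init__(self, p: int = 10):
        self.p = p
        self.m = 1 << p          # m = 2^p registers
        self.M = [0] * self.m    # M[i] = maximum rank seen in substream i

    @staticmethod
    def _hash(item: str) -> int:
        # Assumption: take the first 32 bits of SHA-1 as the hash value.
        digest = hashlib.sha1(item.encode("utf-8")).digest()
        return int.from_bytes(digest[:4], "big")

    def add(self, item: str) -> None:
        h = self._hash(item)
        rest_bits = HASH_BITS - self.p
        i = h >> rest_bits                    # first p bits pick the substream
        rest = h & ((1 << rest_bits) - 1)     # remaining bits give the rank
        self.M[i] = max(self.M[i], rank(rest, rest_bits))

    def estimate(self) -> float:
        # Assumption: standard approximation of const(m), valid for m >= 128.
        const_m = 0.7213 / (1 + 1.079 / self.m)
        harmonic_sum = sum(2.0 ** -r for r in self.M)
        return const_m * self.m ** 2 / harmonic_sum


# Usage: duplicates do not change the registers, so they do not change the estimate.
hll = HyperLogLogSketch(p=10)
for n in range(20000):
    hll.add(f"item-{n}")
    hll.add(f"item-{n}")
print(round(hll.estimate()))
```

With m = 1024 registers the relative standard error is roughly 1.04/√m, about 3%, so for a few tens of thousands of distinct items the printed estimate should fall within a few percent of the true count.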

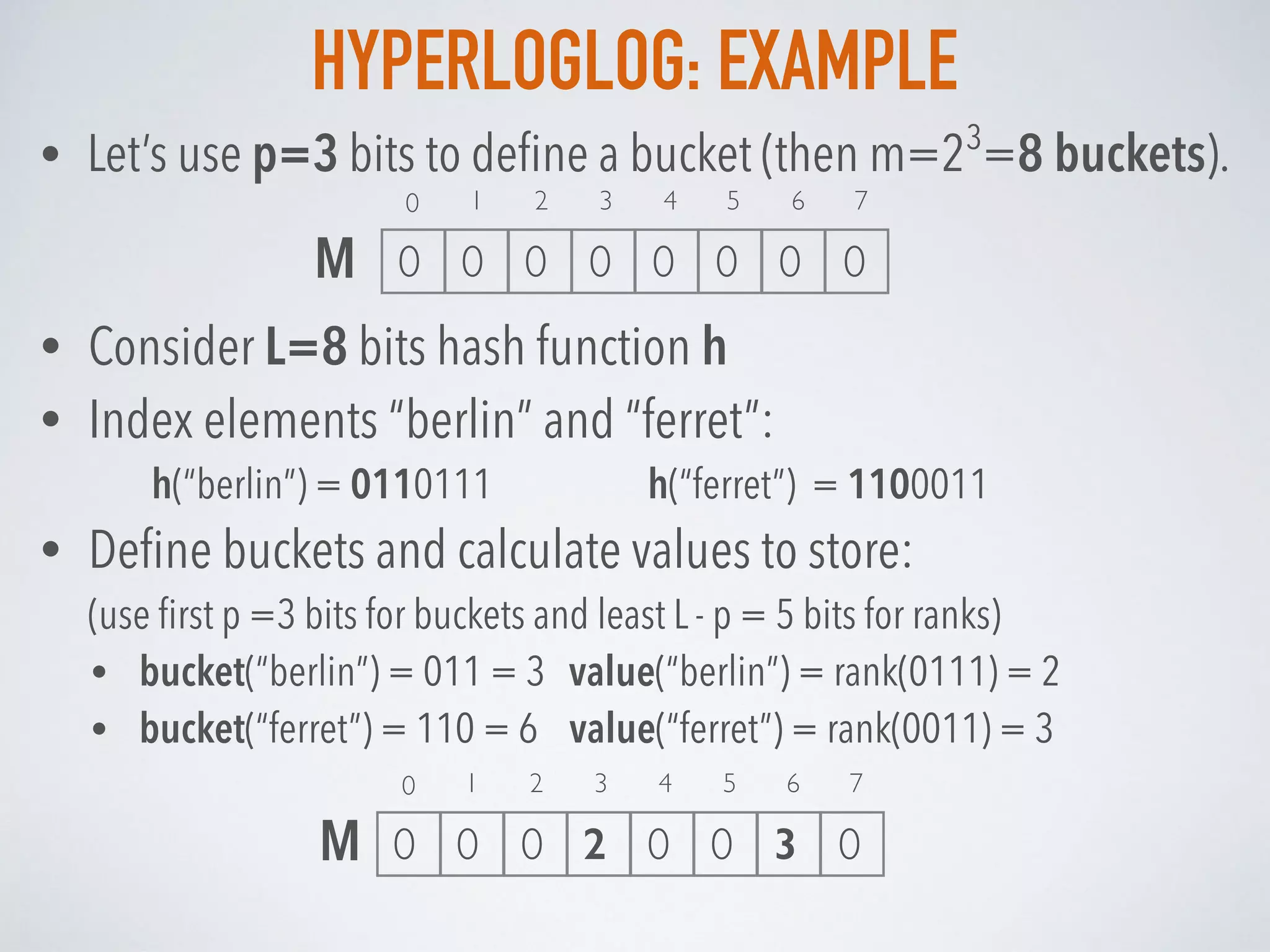

![HYPERLOGLOG: EXAMPLE

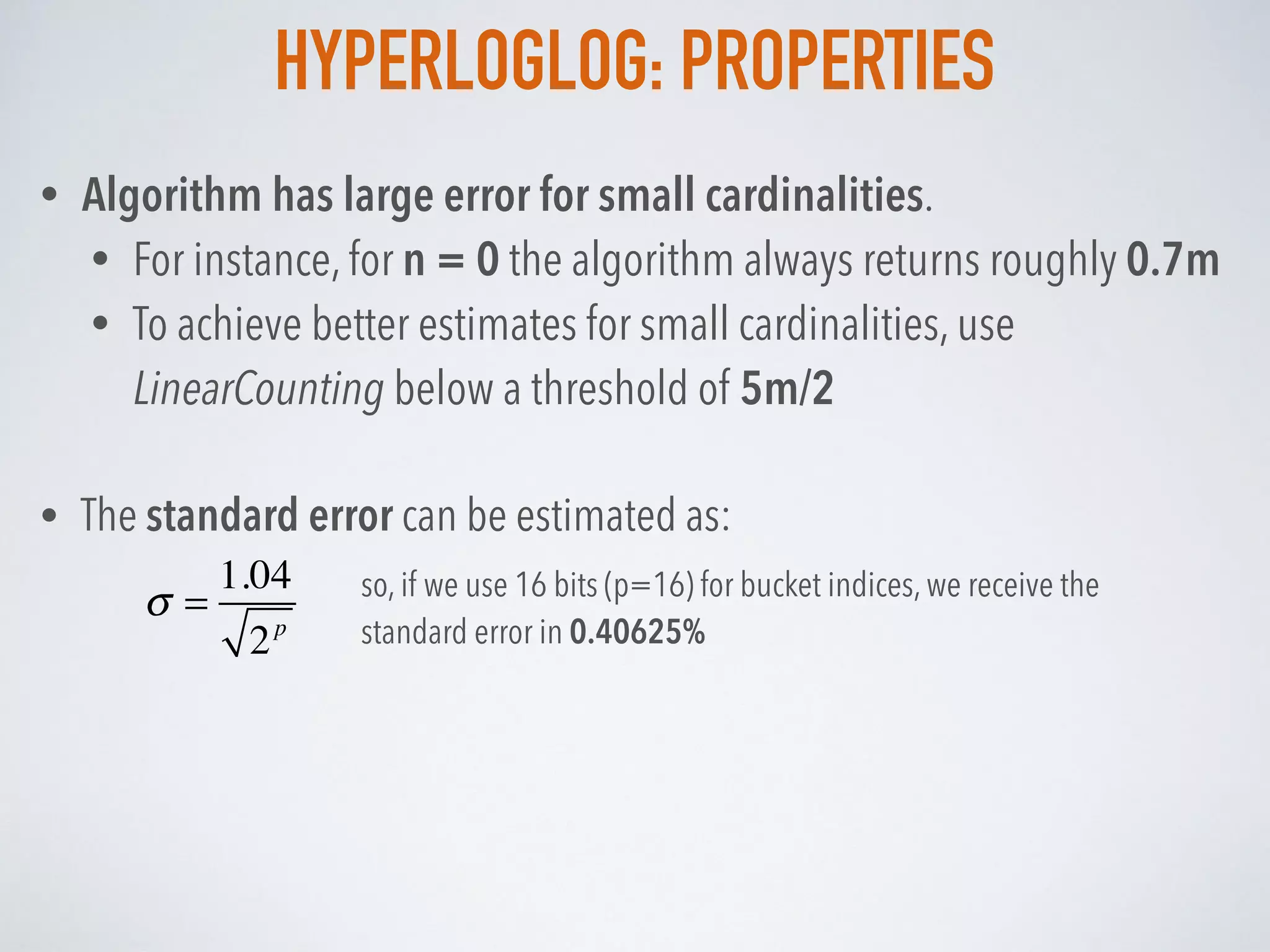

• Estimate the cardinality by the HLL formula (C ≈ 0.66):

$DV_{HLL} \approx 0.66 \cdot 8^2 / (2^{-2} + 2^{-4}) = 0.66 \cdot 204.8 \approx 135 \neq 3$

• Index element “kharkov”:

• h(“kharkov”) = 1100001

• bucket(“kharkov”) = 110 = 6

• value(“kharkov”) = rank(0001) = 4

• M[6] = max(M[6], 4) = max(3, 4) = 4

i: 0 1 2 3 4 5 6 7

M: 0 0 0 2 0 0 4 0

NOTE: For small cardinalities HLL has a strong bias!!!](https://image.slidesharecdn.com/probabilisticdatastructurespart2-160726192154/75/Probabilistic-data-structures-Part-2-Cardinality-15-2048.jpg)
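The arithmetic on this slide can be replayed in a few lines of Python. The sketch assumes a 7-bit hash with p = 3, as the bit strings on the slide suggest, and takes the register state before “kharkov” to be M[3] = 2 and M[6] = 3, which is what the slide's `max(3, 4)` and the register table imply. The variable names are illustrative. The slide's sum uses only the two non-empty registers, so the code computes that value and, for comparison, the value obtained by summing over all m registers as the formula on the algorithm slide is written.

```python
def rank(bits: int, width: int) -> int:
    """1-based position of the leftmost 1-bit in a `width`-bit value."""
    return width - bits.bit_length() + 1


P = 3            # first 3 bits select one of m = 8 registers
HASH_BITS = 7    # assumption: hash width inferred from h("kharkov") = 1100001
C = 0.66         # constant used on the slide

# Register state before indexing "kharkov" (inferred from the slide).
M = [0, 0, 0, 2, 0, 0, 3, 0]

h = 0b1100001                                   # h("kharkov")
rest_bits = HASH_BITS - P
bucket = h >> rest_bits                         # 0b110
value = rank(h & ((1 << rest_bits) - 1), rest_bits)  # rank of 0001
M[bucket] = max(M[bucket], value)

m = len(M)
# Slide's arithmetic: only the non-empty registers enter the sum.
slide_estimate = C * m ** 2 / sum(2.0 ** -r for r in M if r > 0)
# Sum over all m registers; empty registers contribute 2^0 = 1 each.
all_registers_estimate = C * m ** 2 / sum(2.0 ** -r for r in M)

print(bucket, value, M)
print(slide_estimate, all_registers_estimate)
```

Under these assumptions the first value matches the slide's ≈ 135, and the second comes out near 6.7. Both overshoot the true cardinality of 3, which is consistent with the slide's note about bias at small cardinalities.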