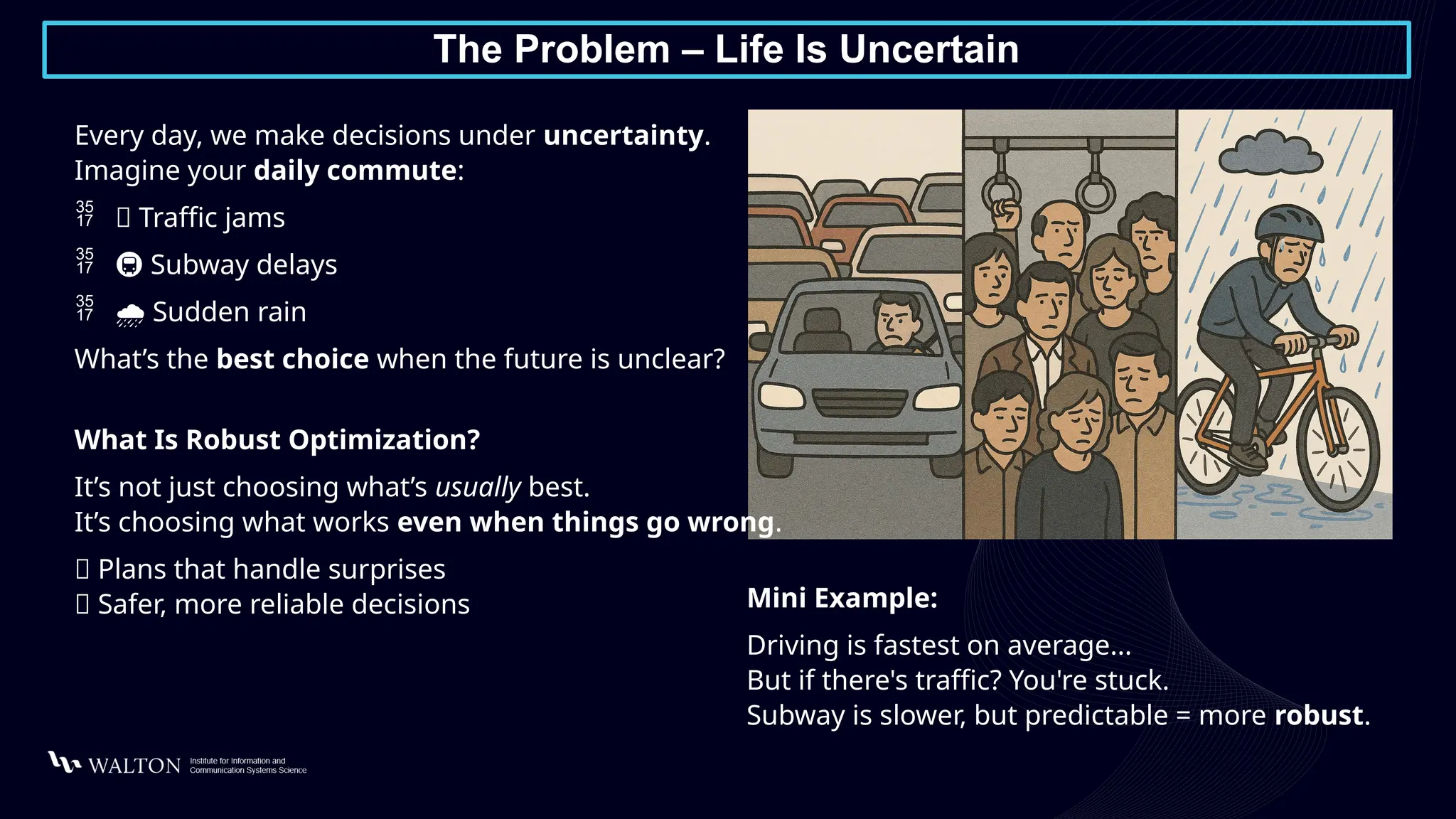

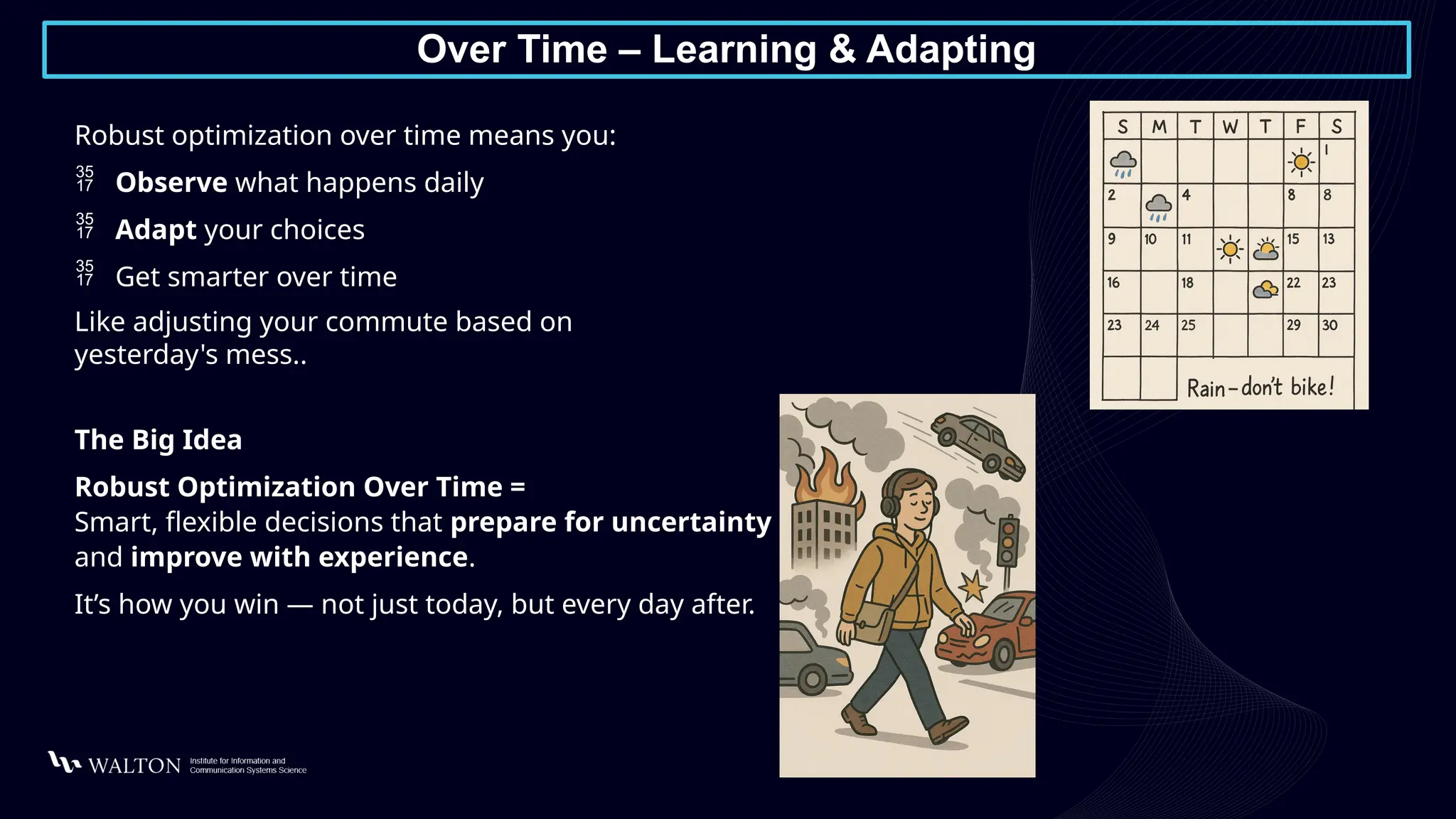

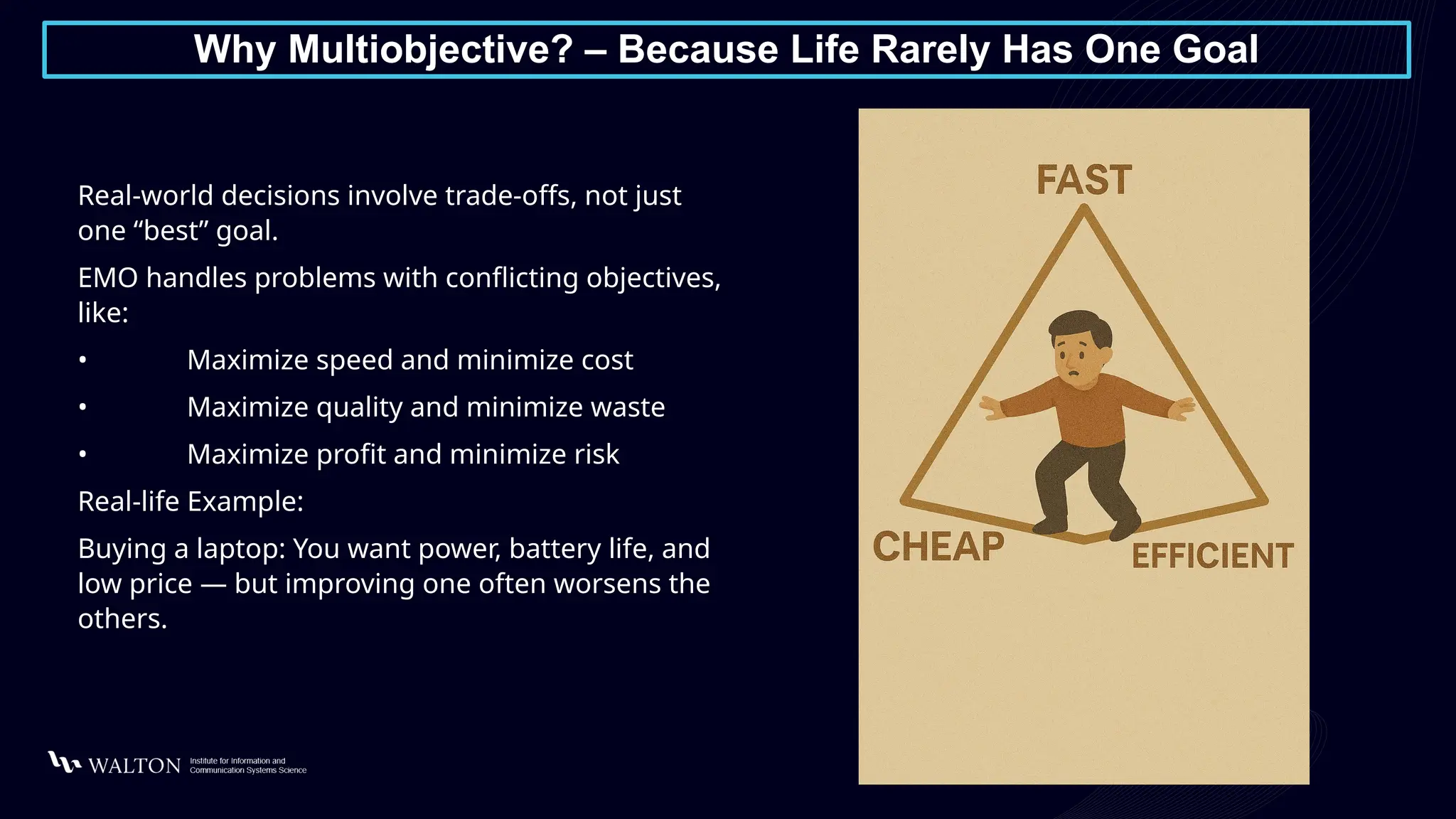

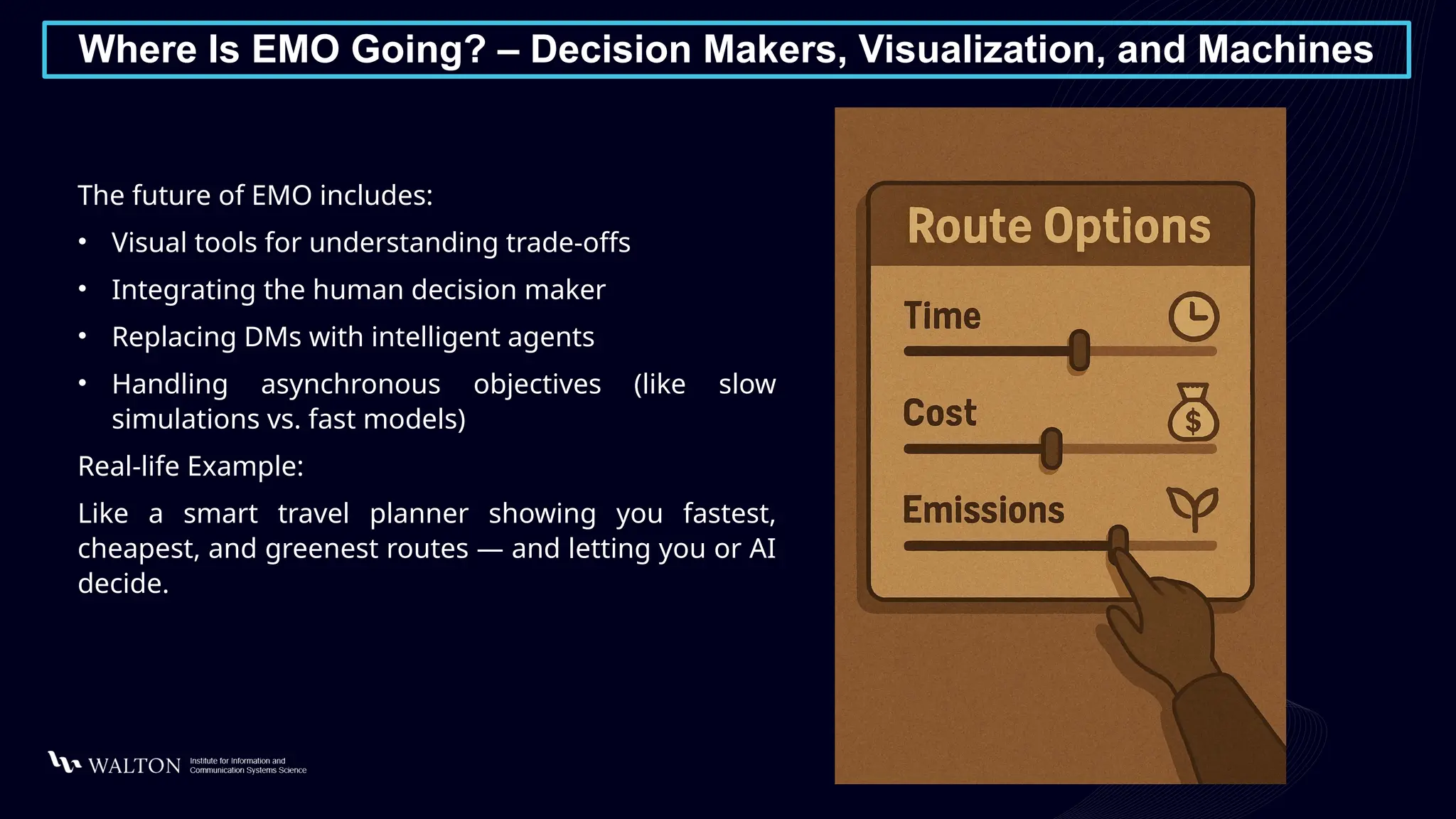

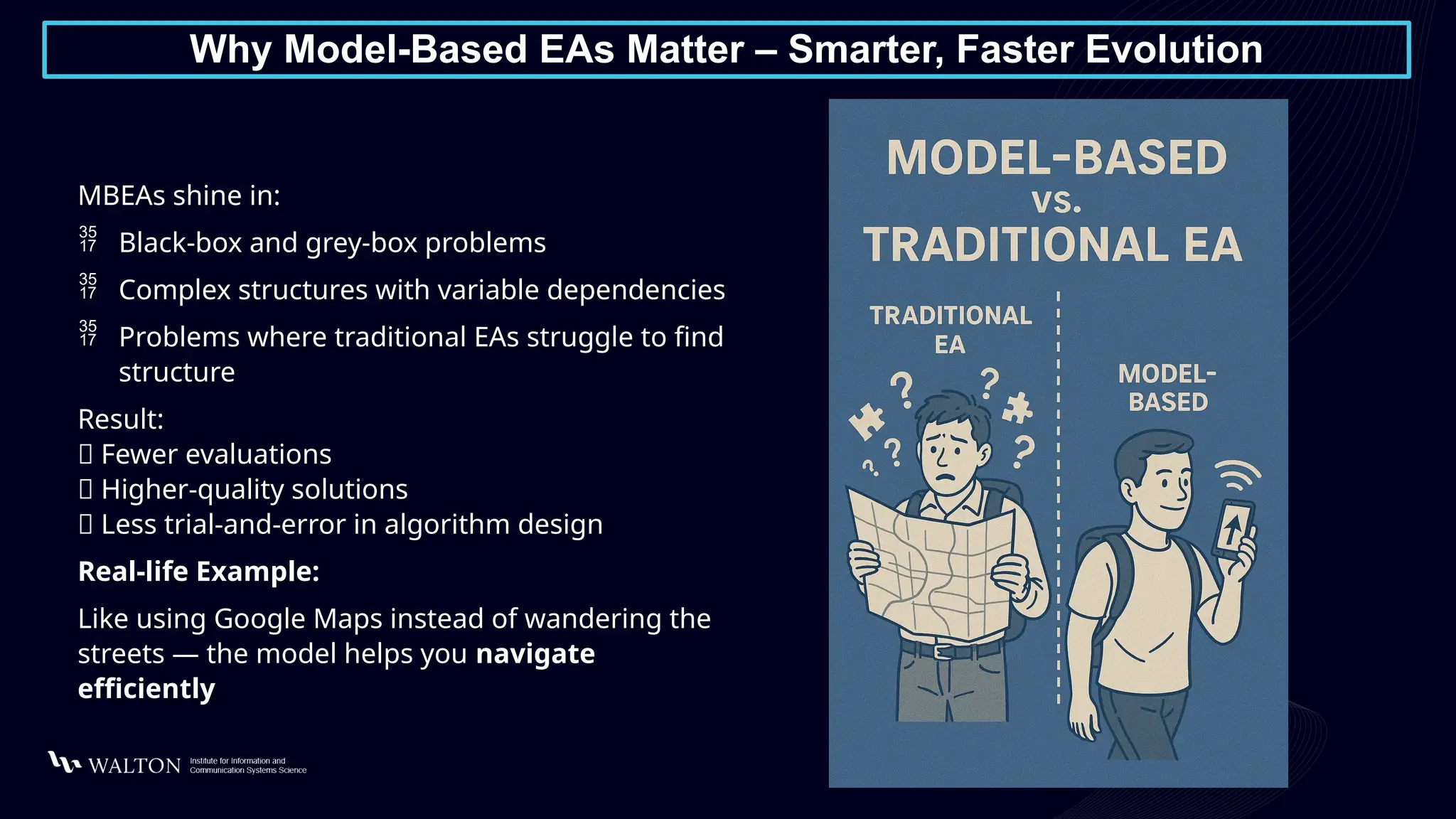

This slide deck offers a practical, example-driven tour of optimization under uncertainty. It covers robust optimization over time, which means making choices that still work when things go wrong and learning and adapting from experience. It also covers landscape analysis (modality, ruggedness, neutrality, and global structure) for matching algorithms to the terrain of a problem. The remaining topics are:

- the theory of evolutionary algorithms;
- Bayesian optimization for expensive black-box evaluations, using surrogate models and balancing exploration and exploitation;
- constraint handling that goes beyond simple penalties;
- stochastic optimization with noisy outcomes;
- evolutionary approaches to combinatorial optimization for scalable decision-making;
- multi-objective optimization;
- model-based evolutionary algorithms that learn problem structure to guide variation efficiently.