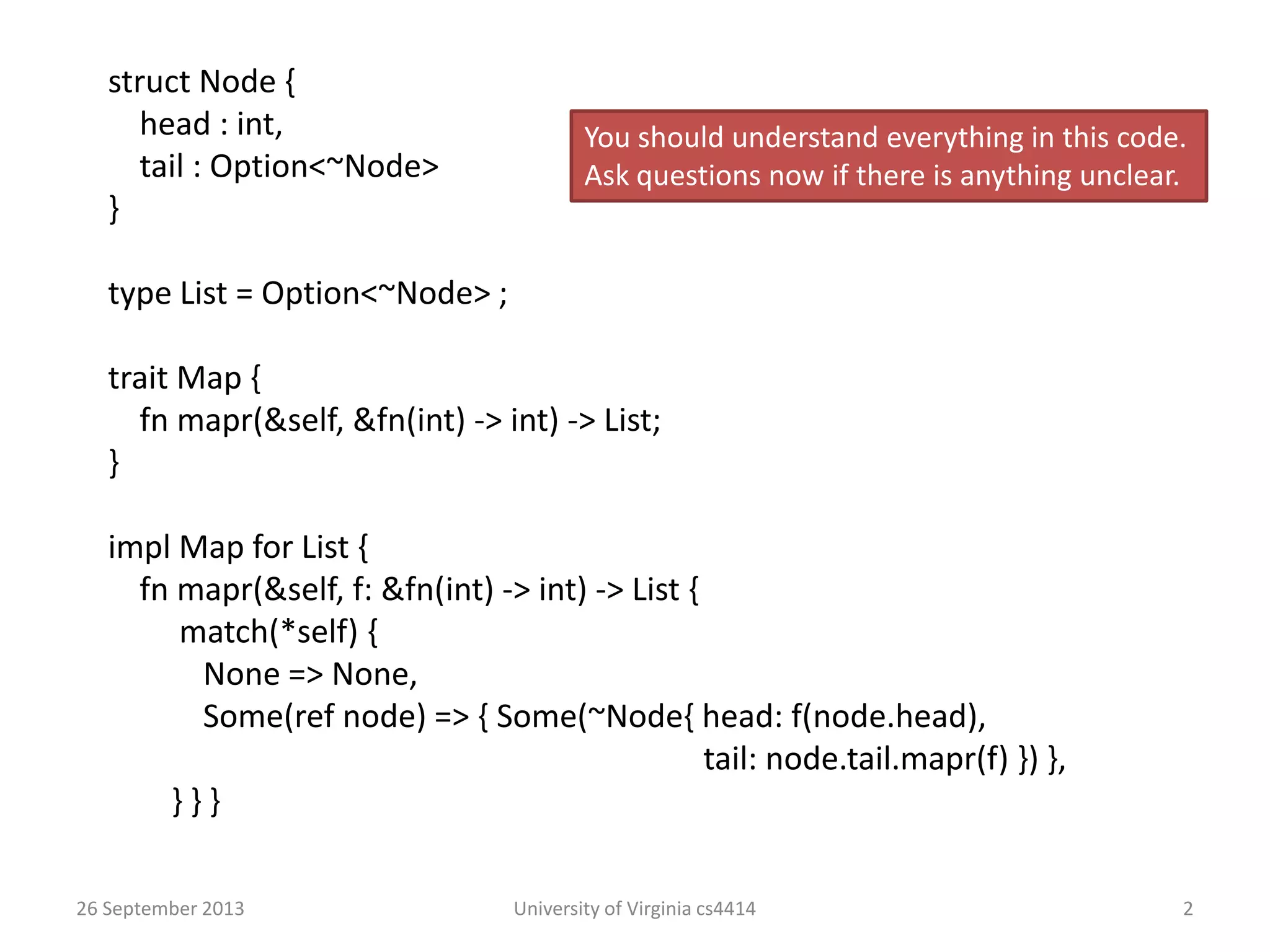

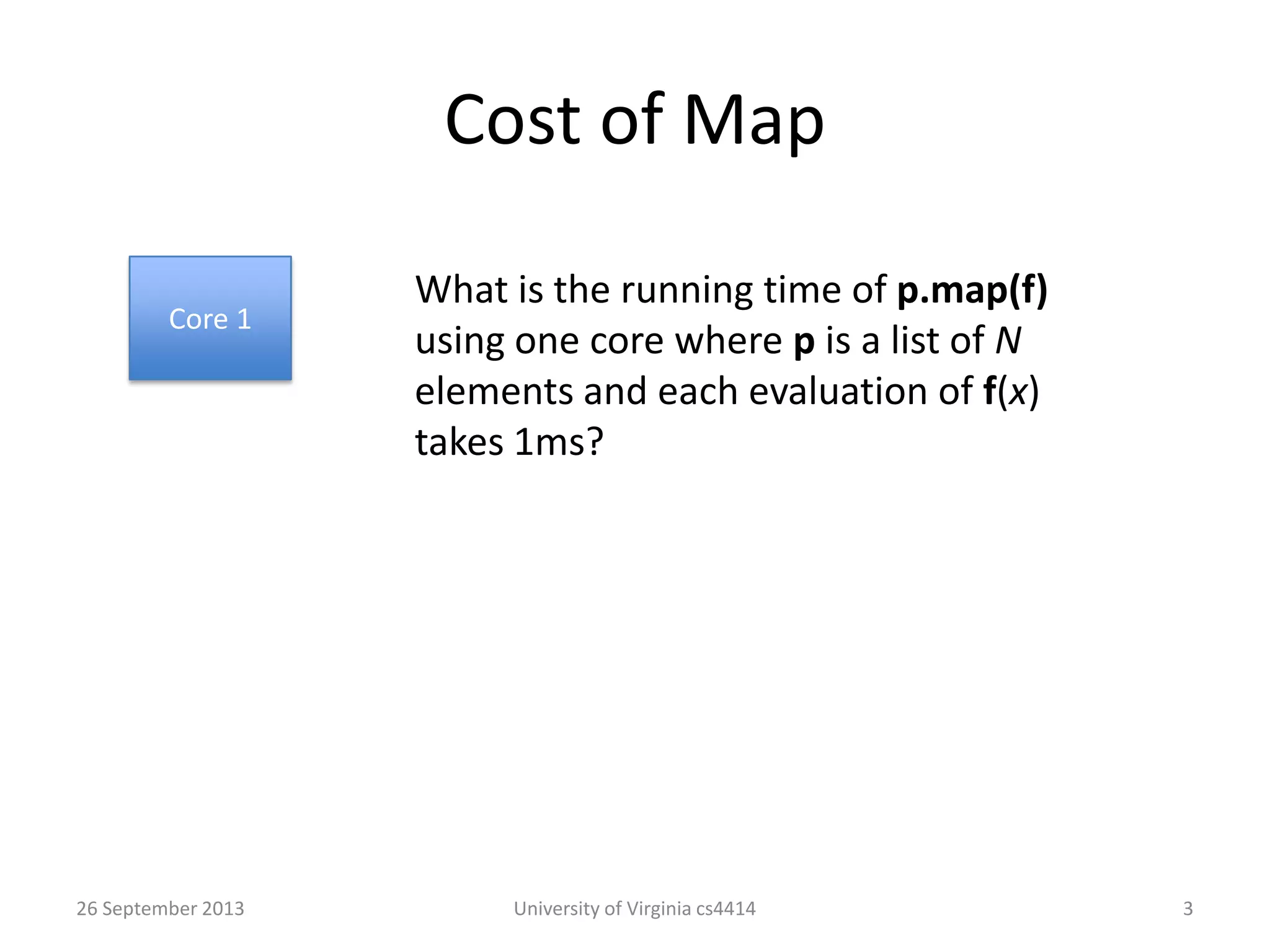

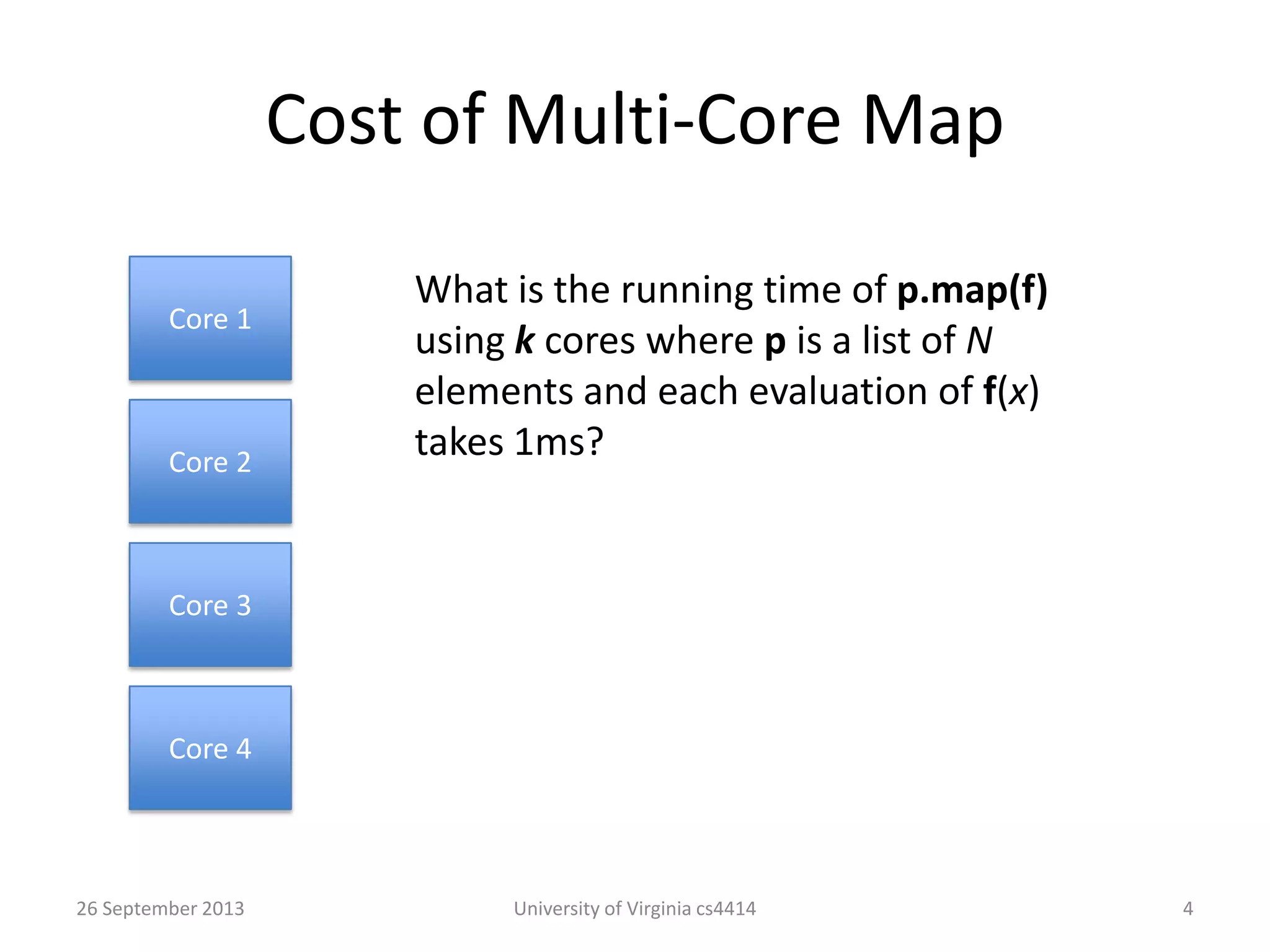

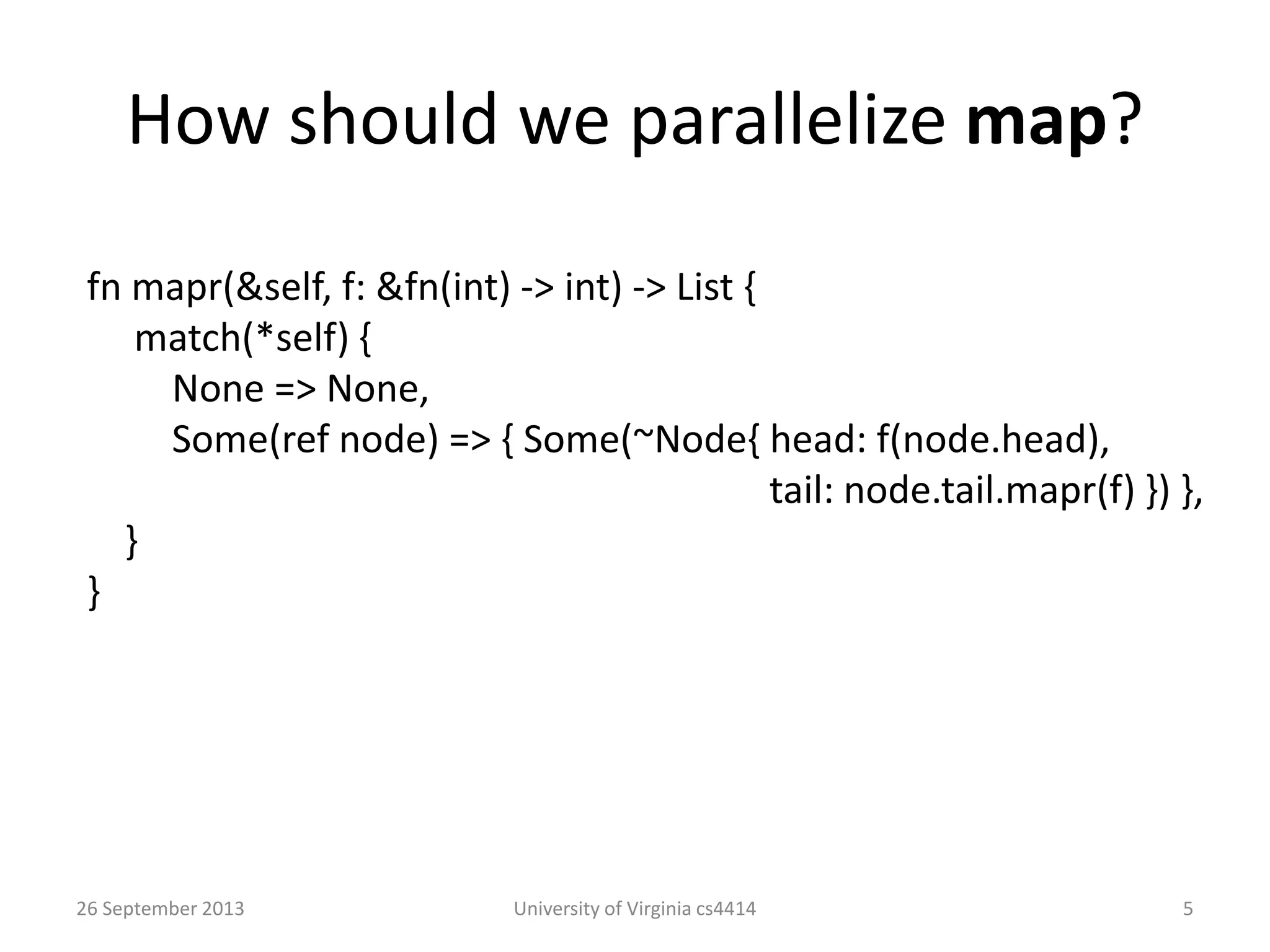

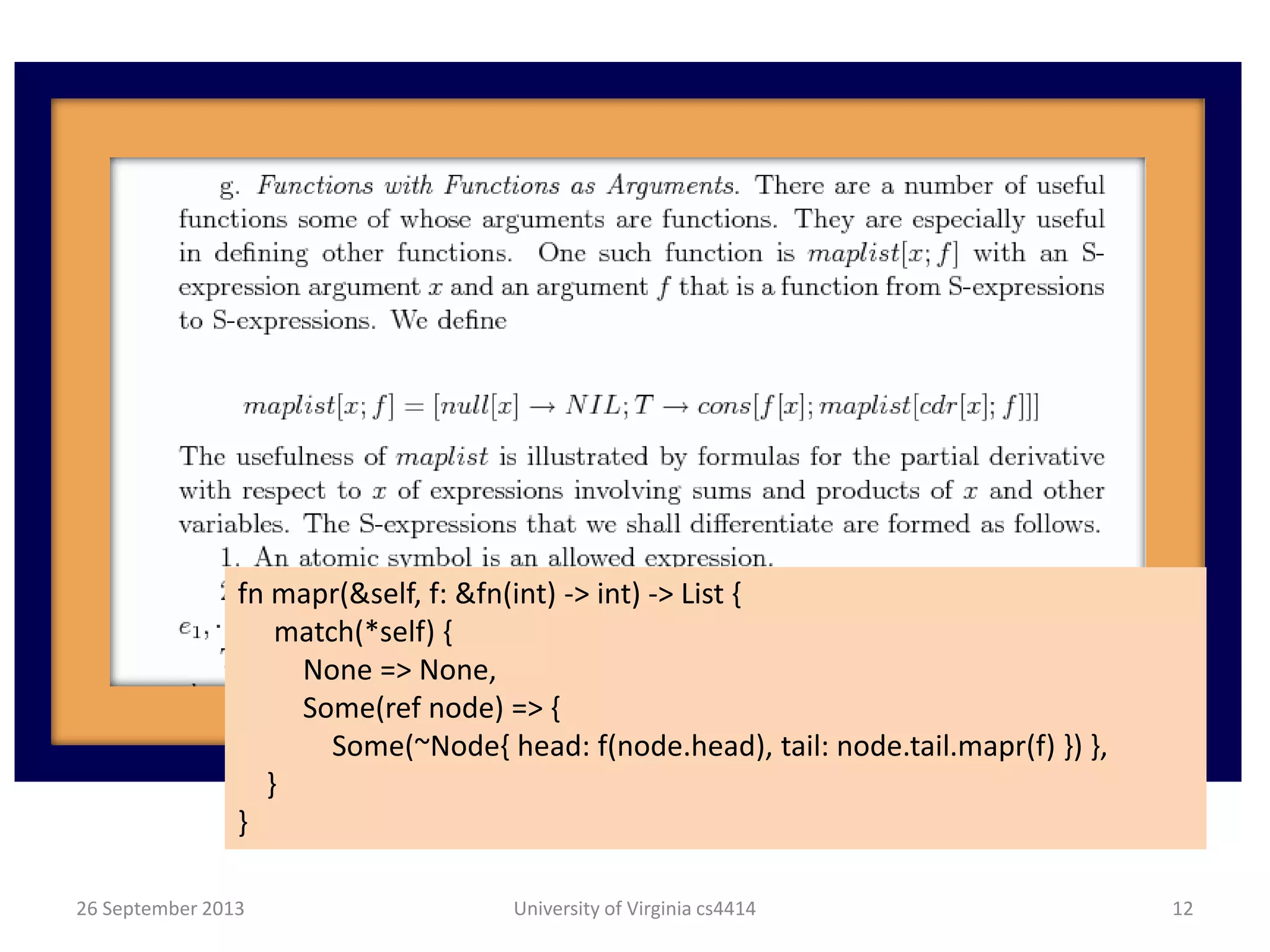

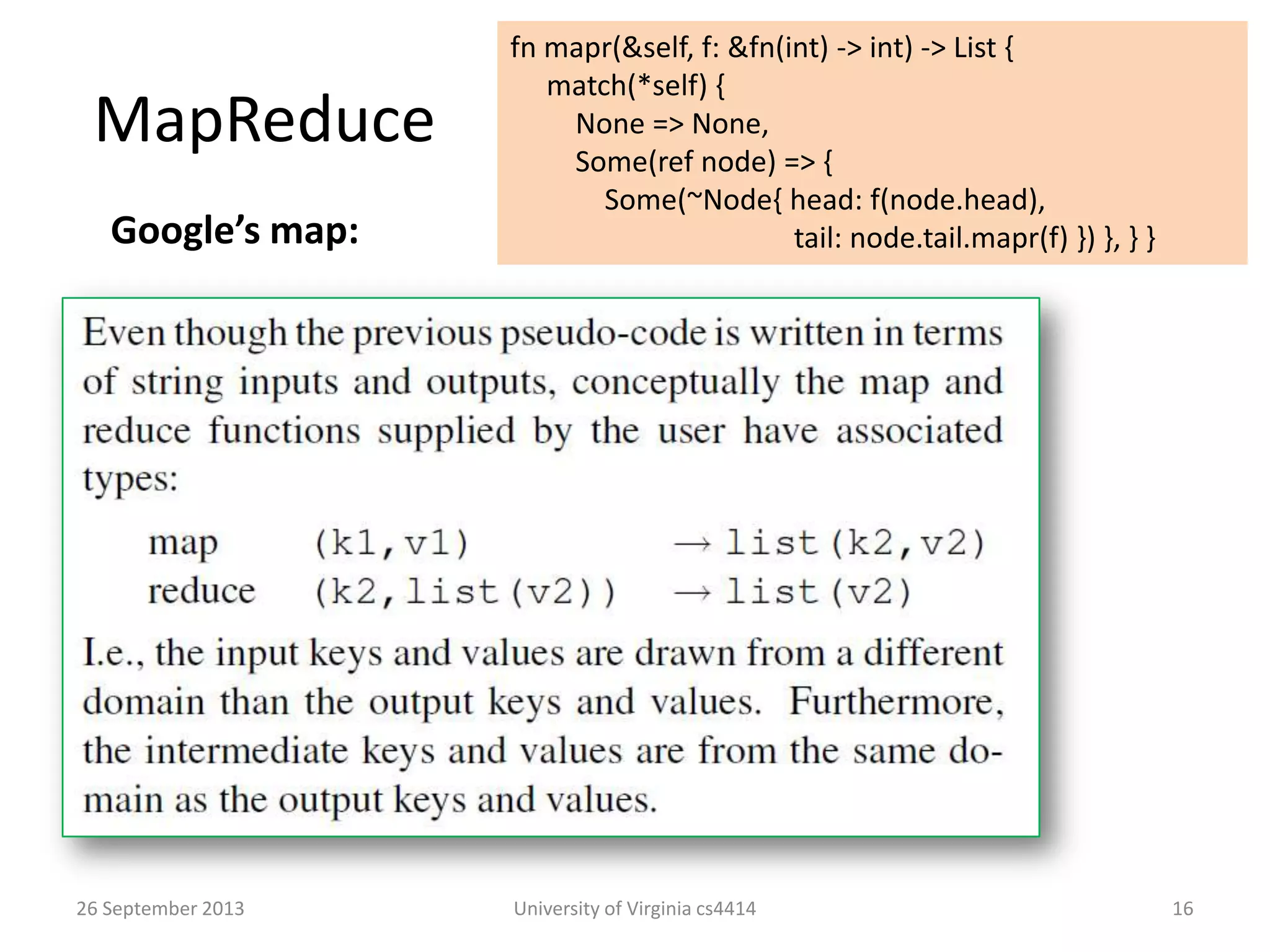

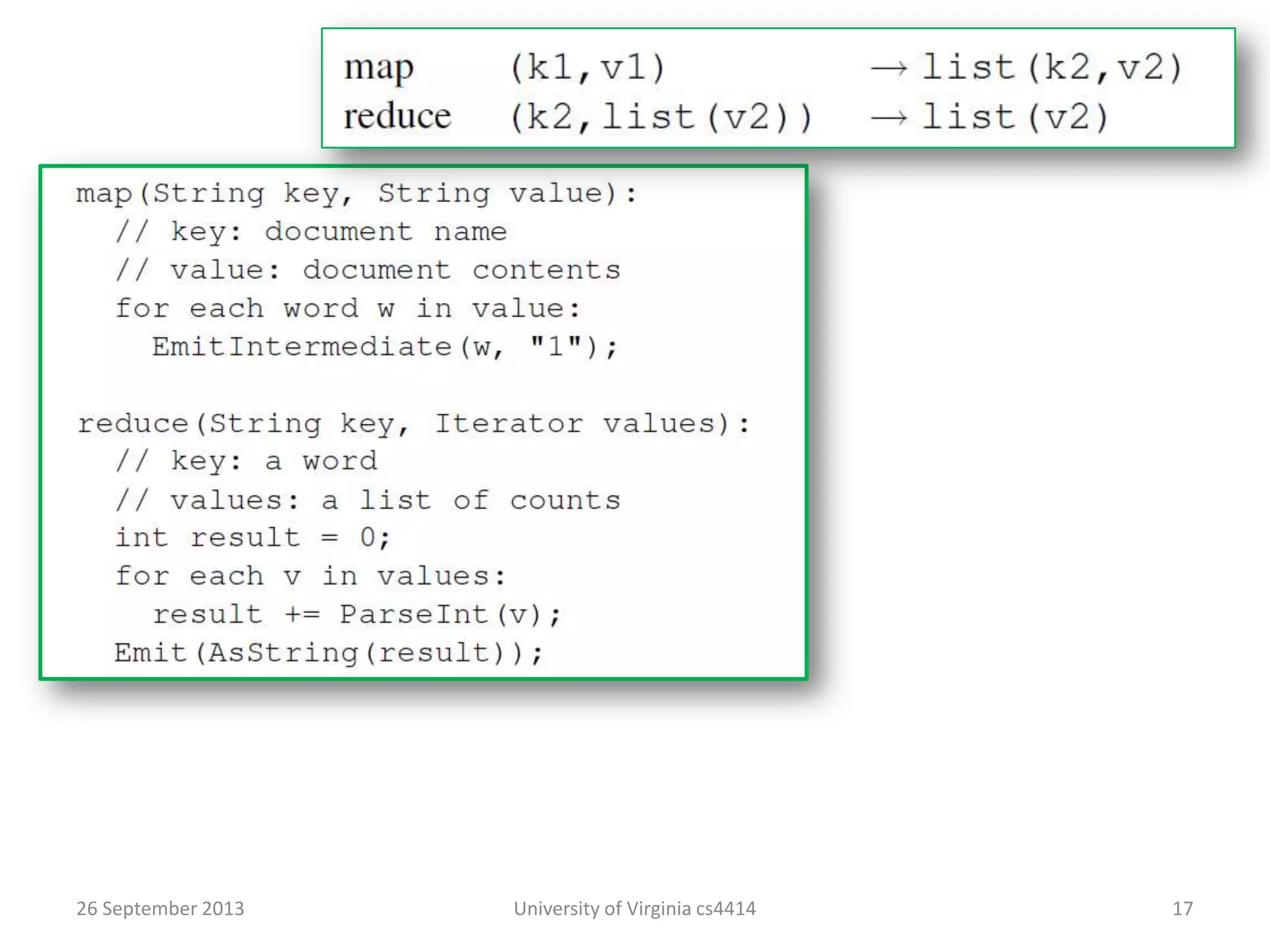

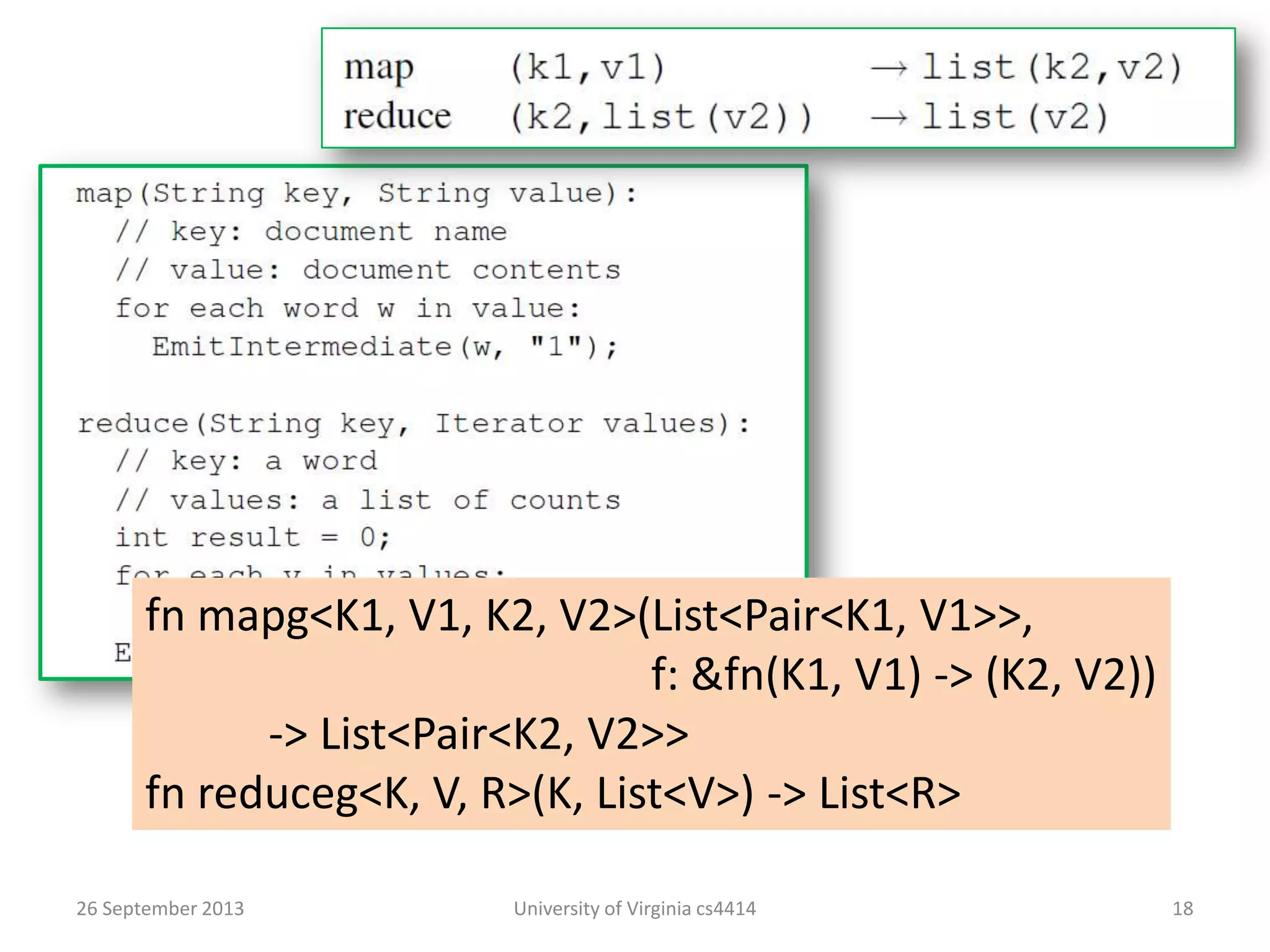

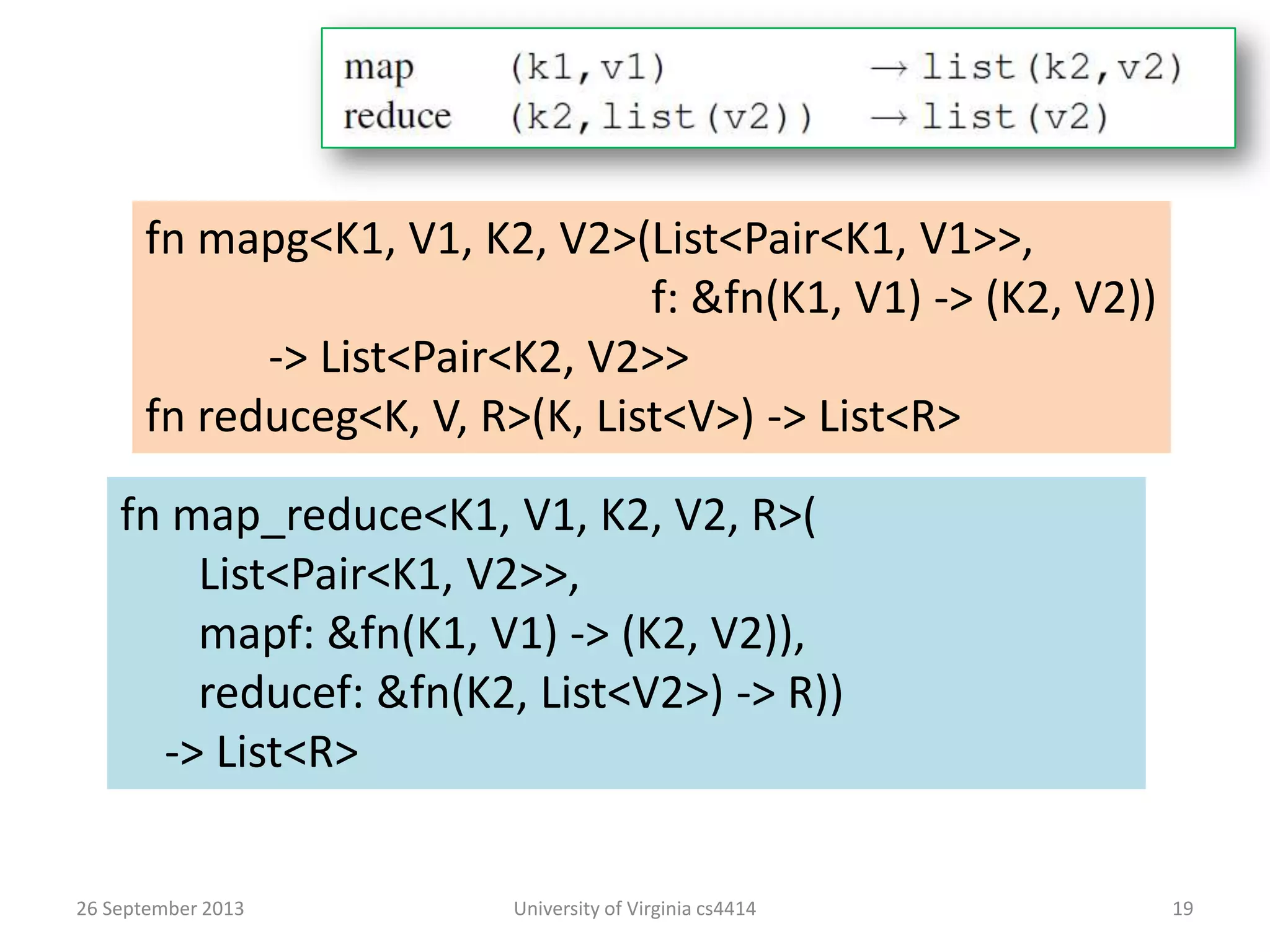

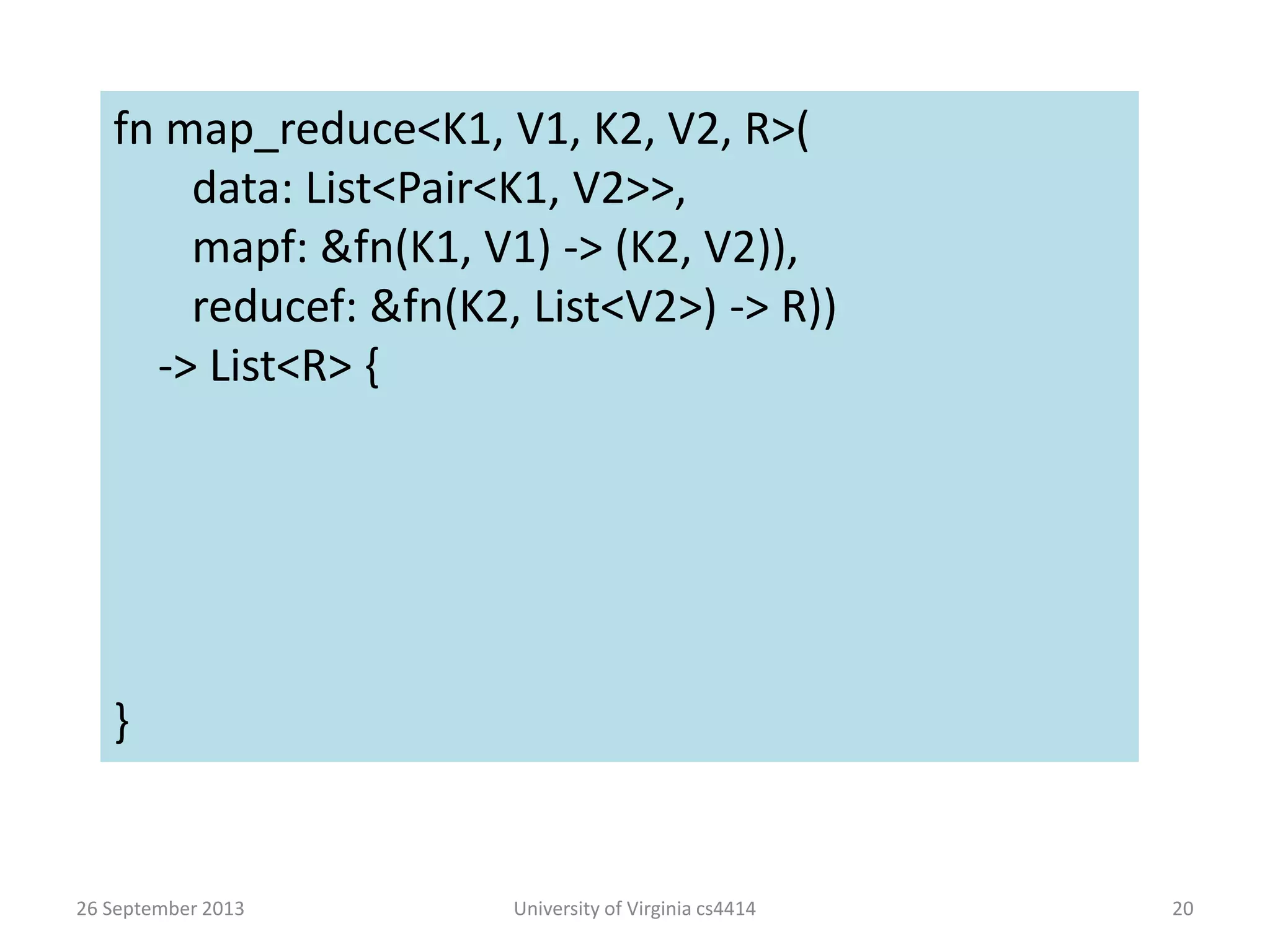

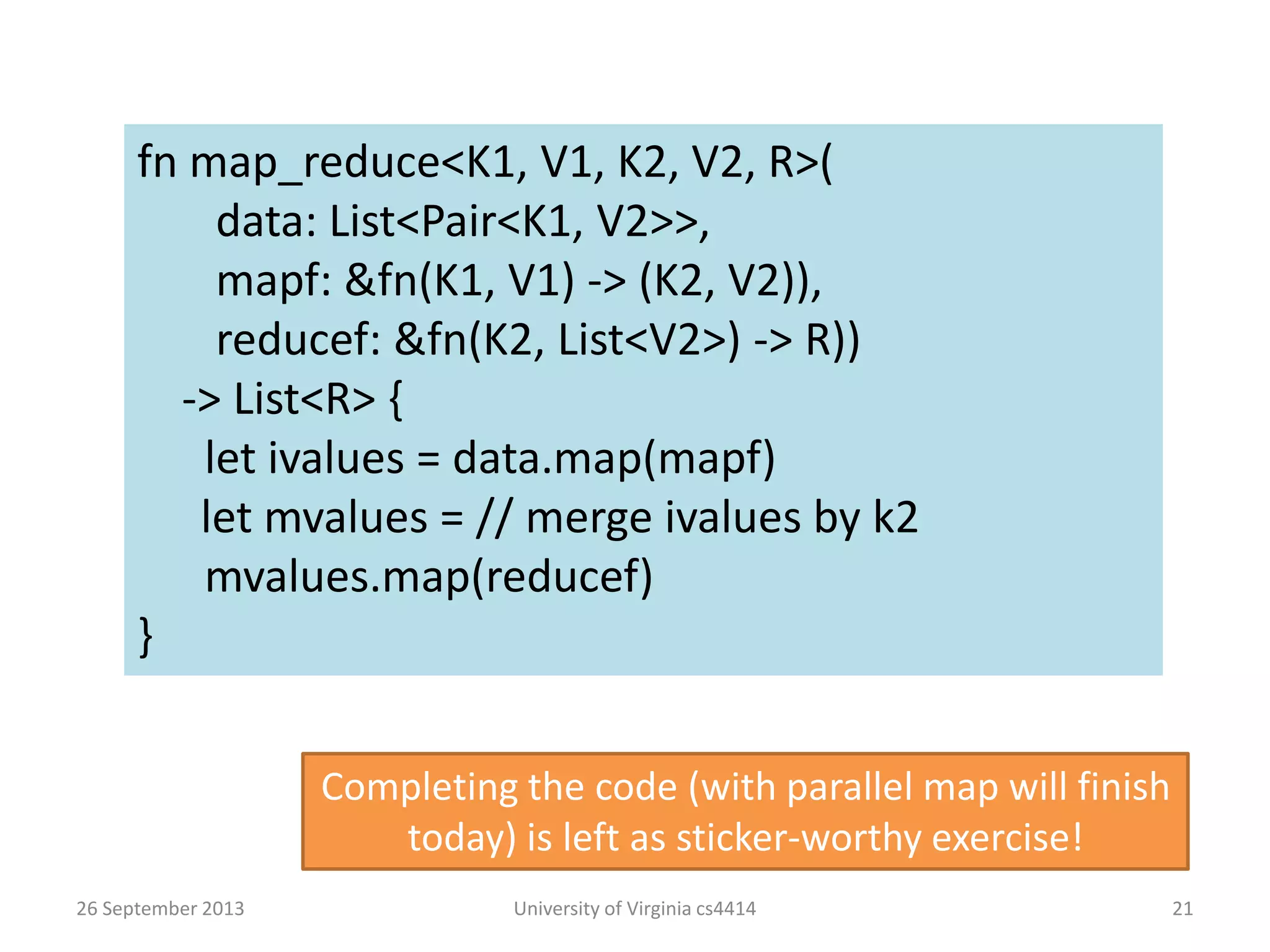

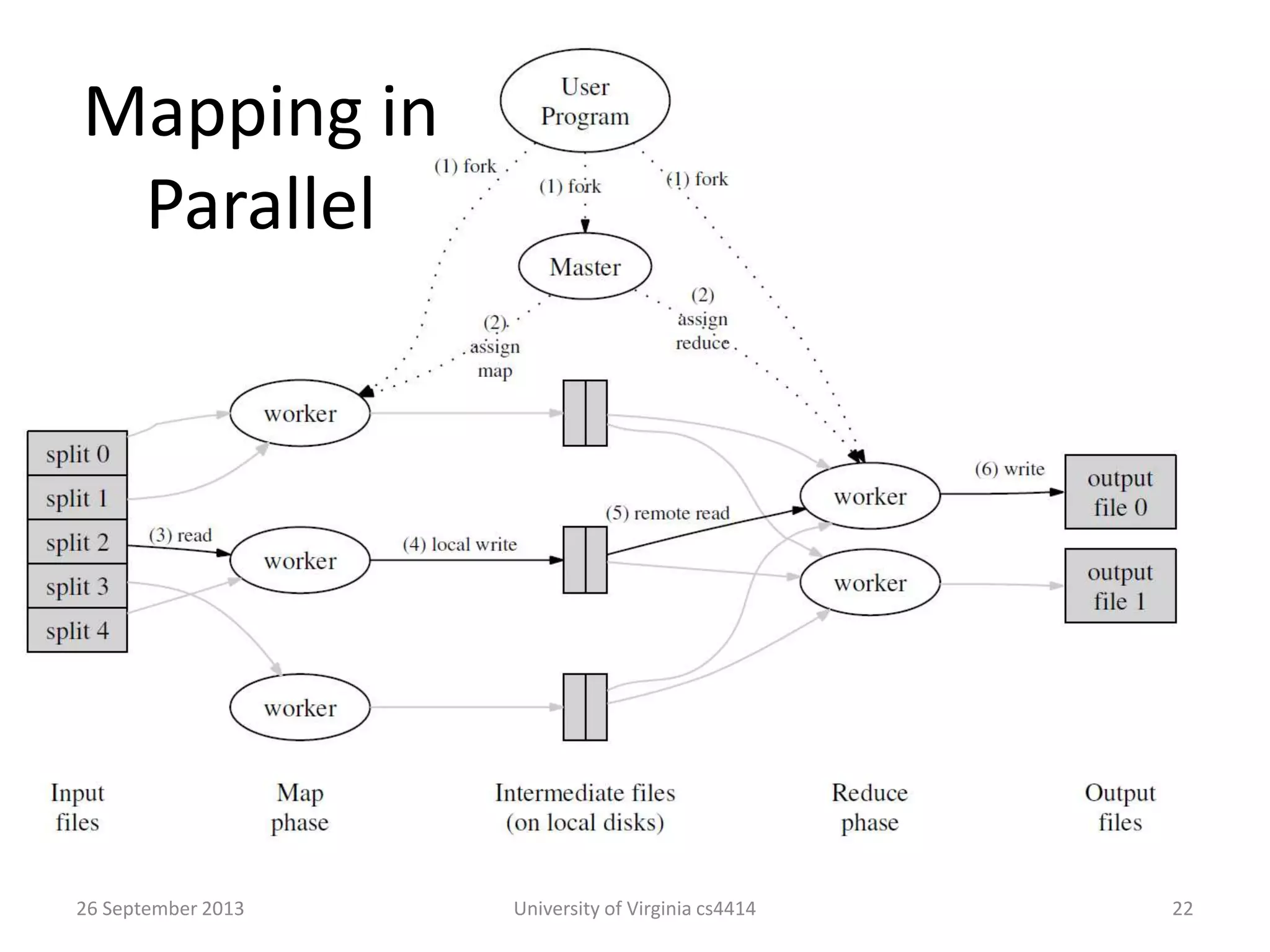

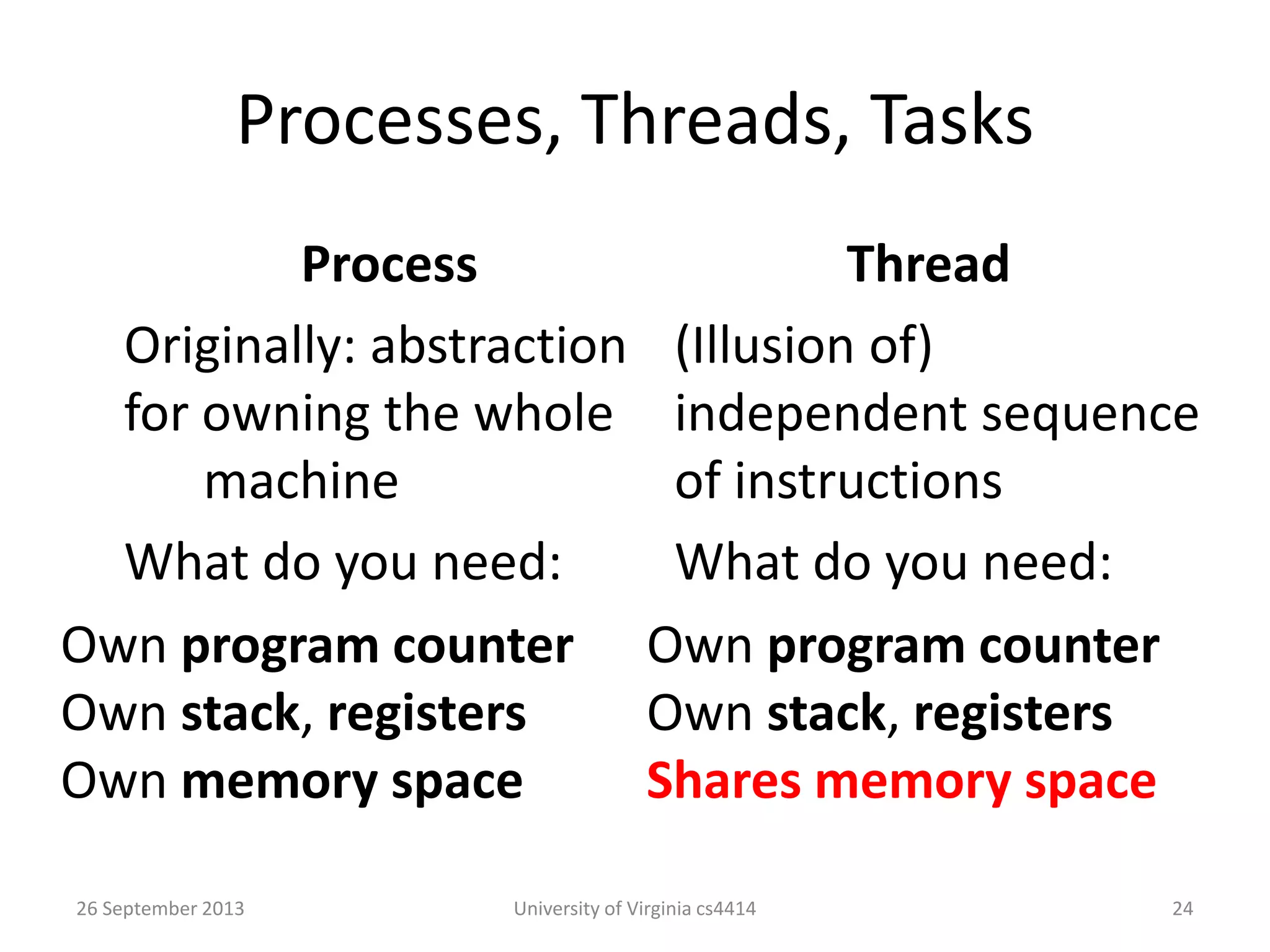

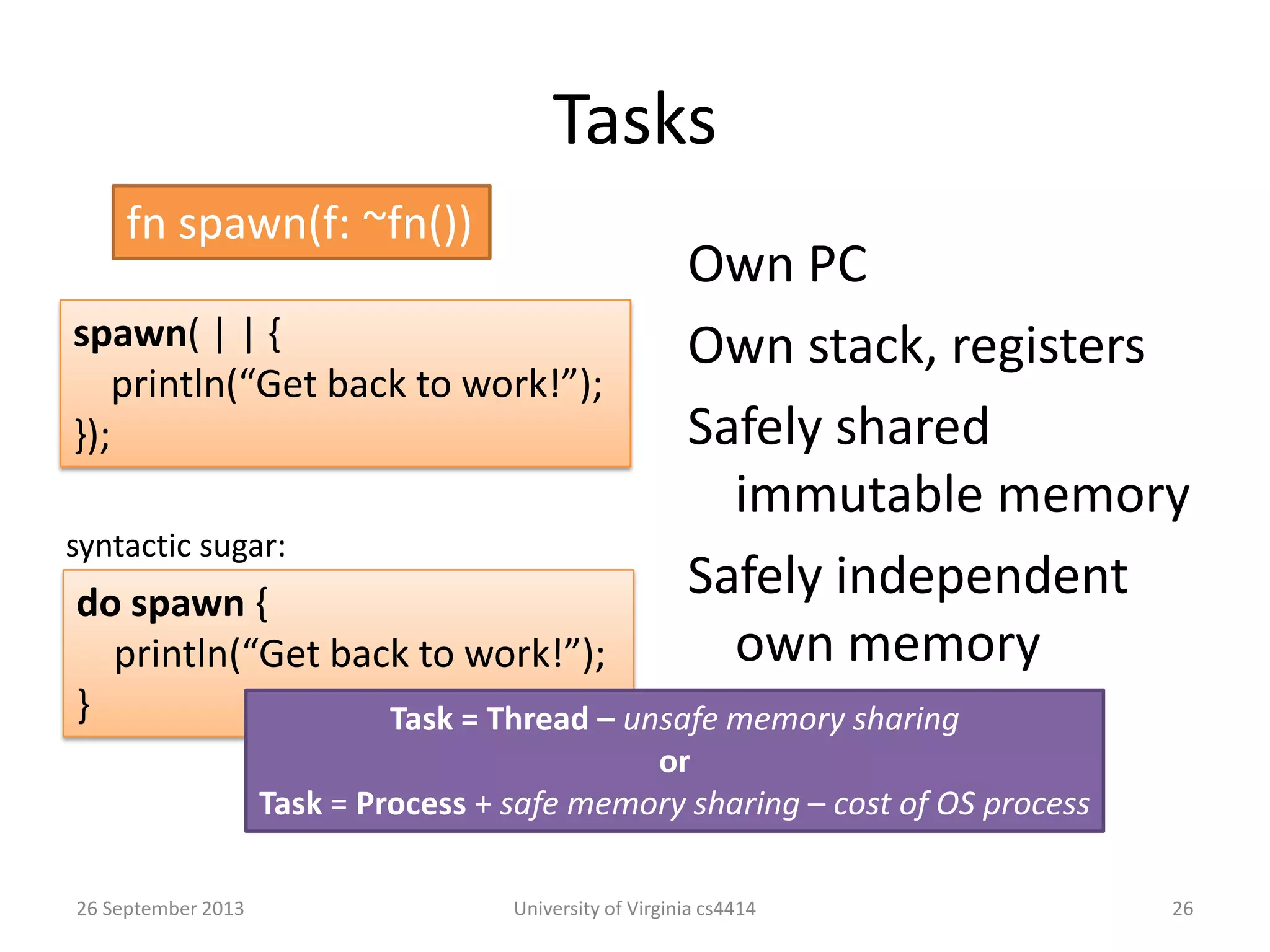

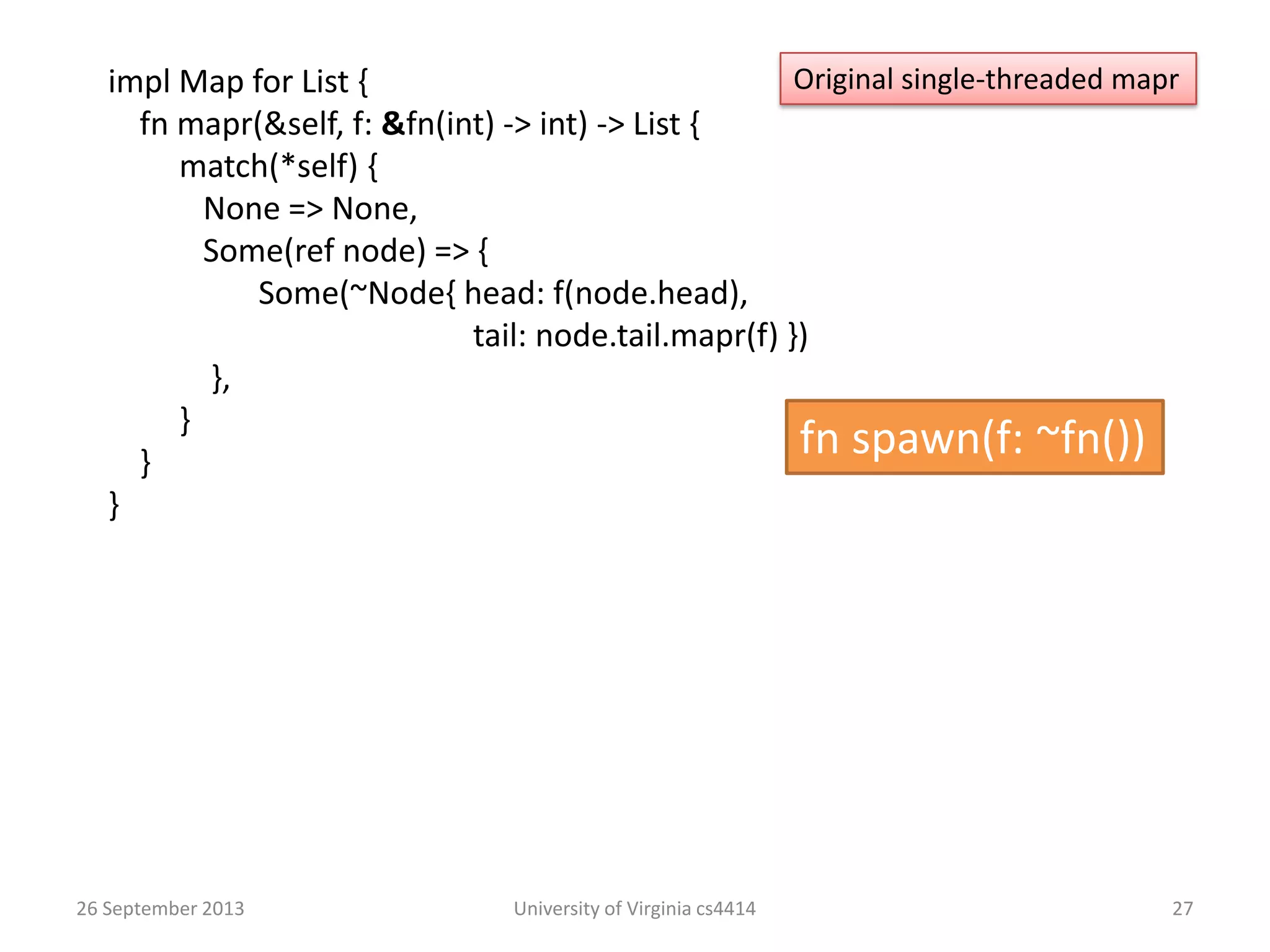

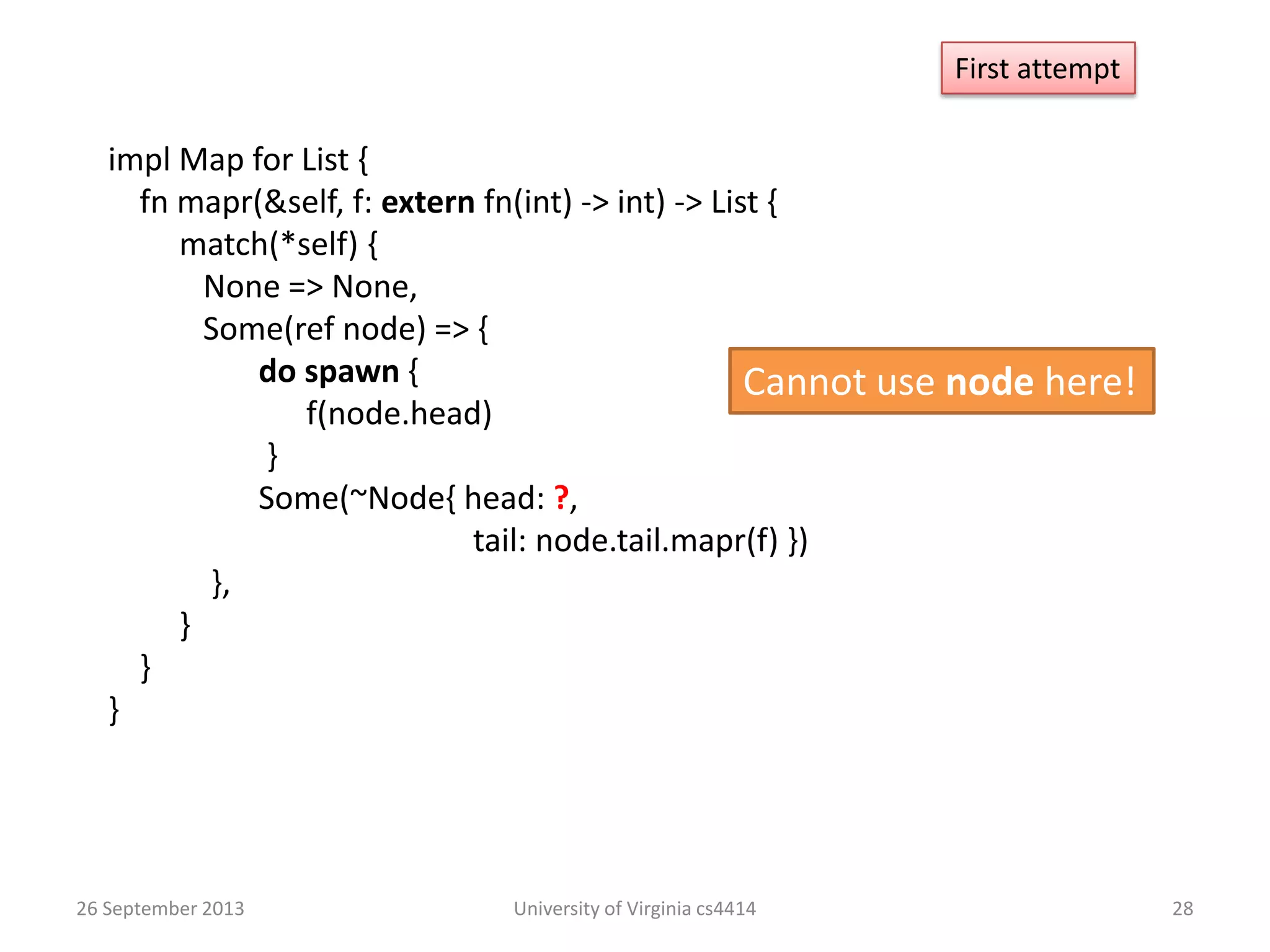

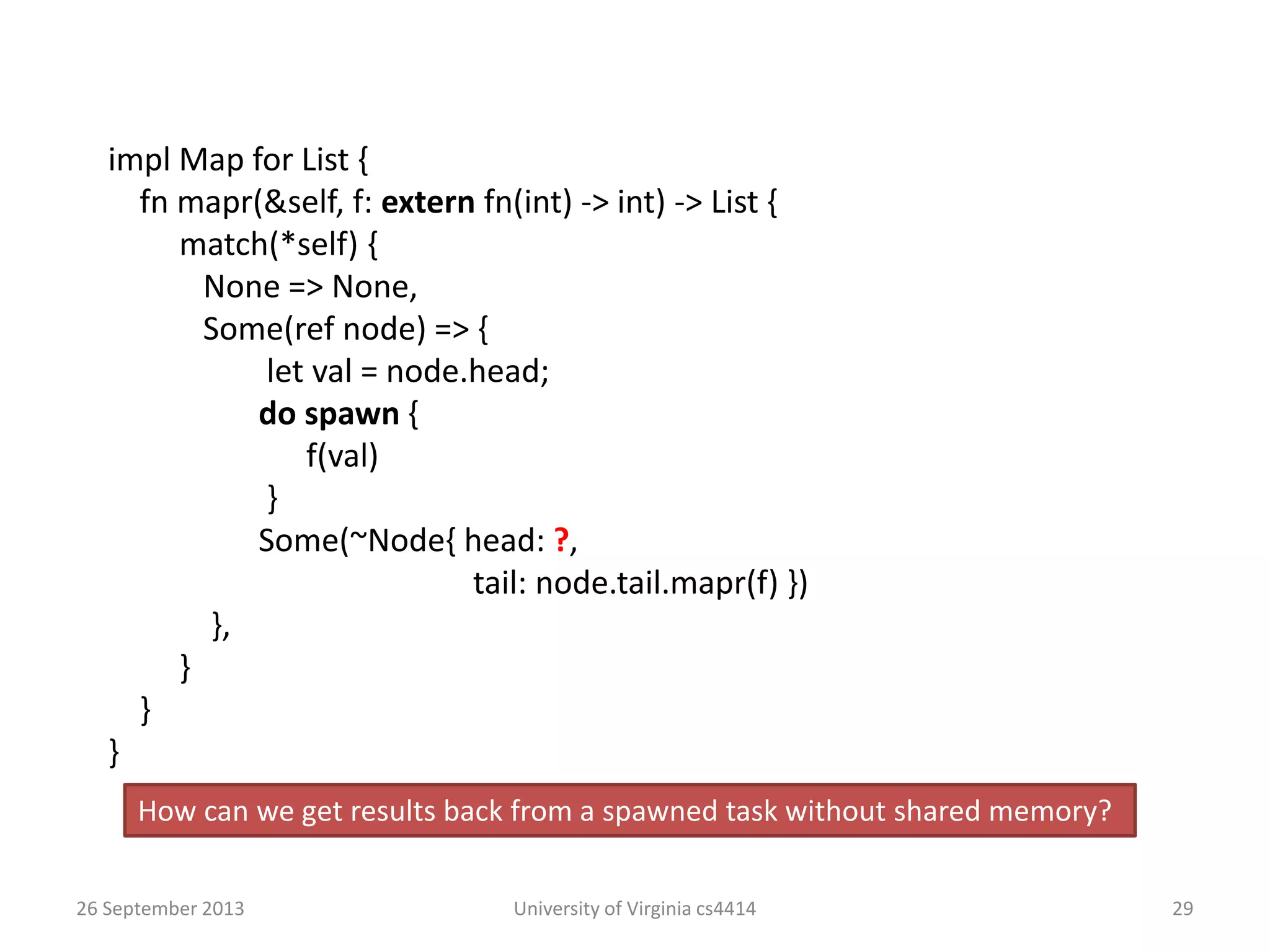

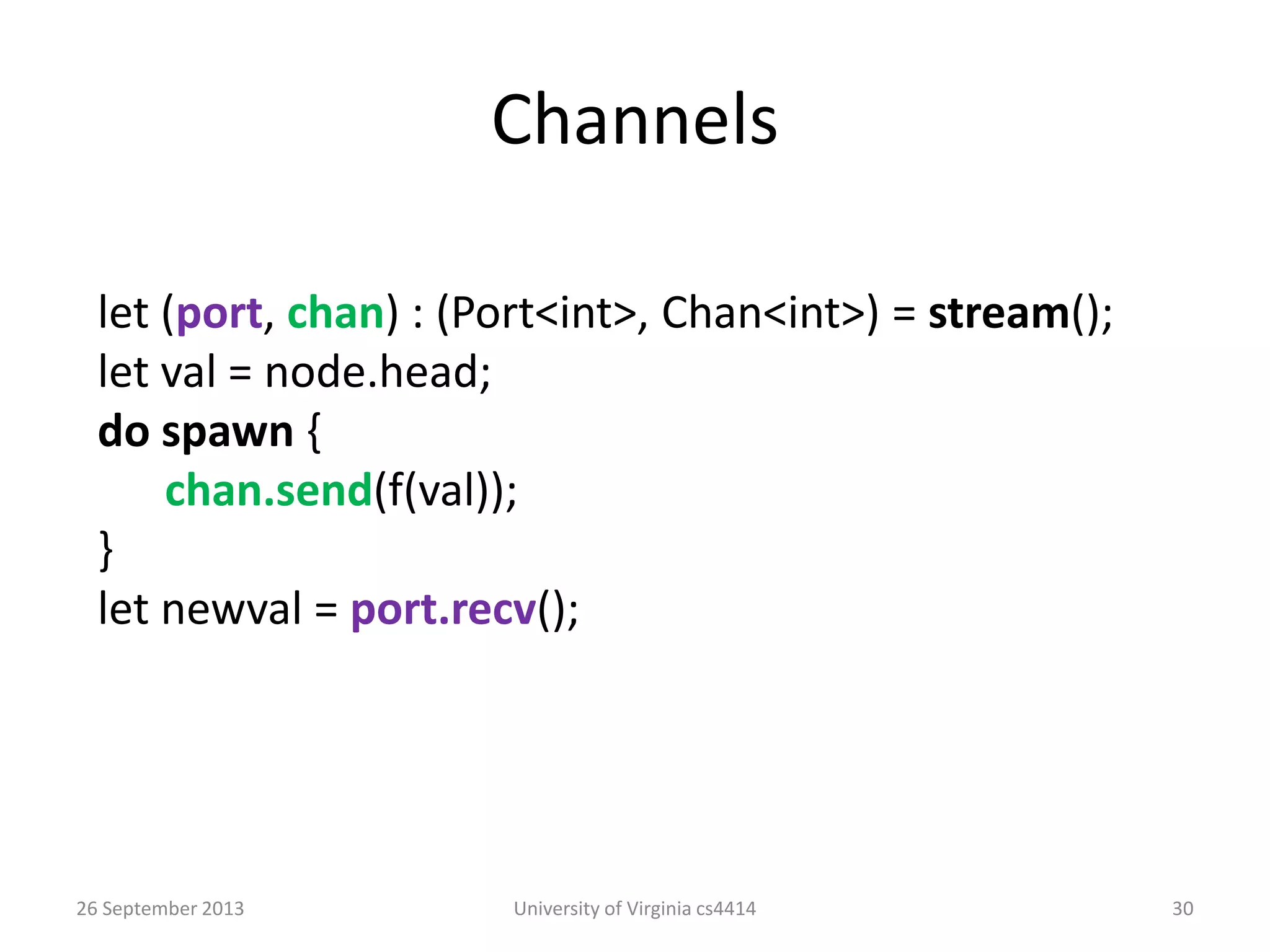

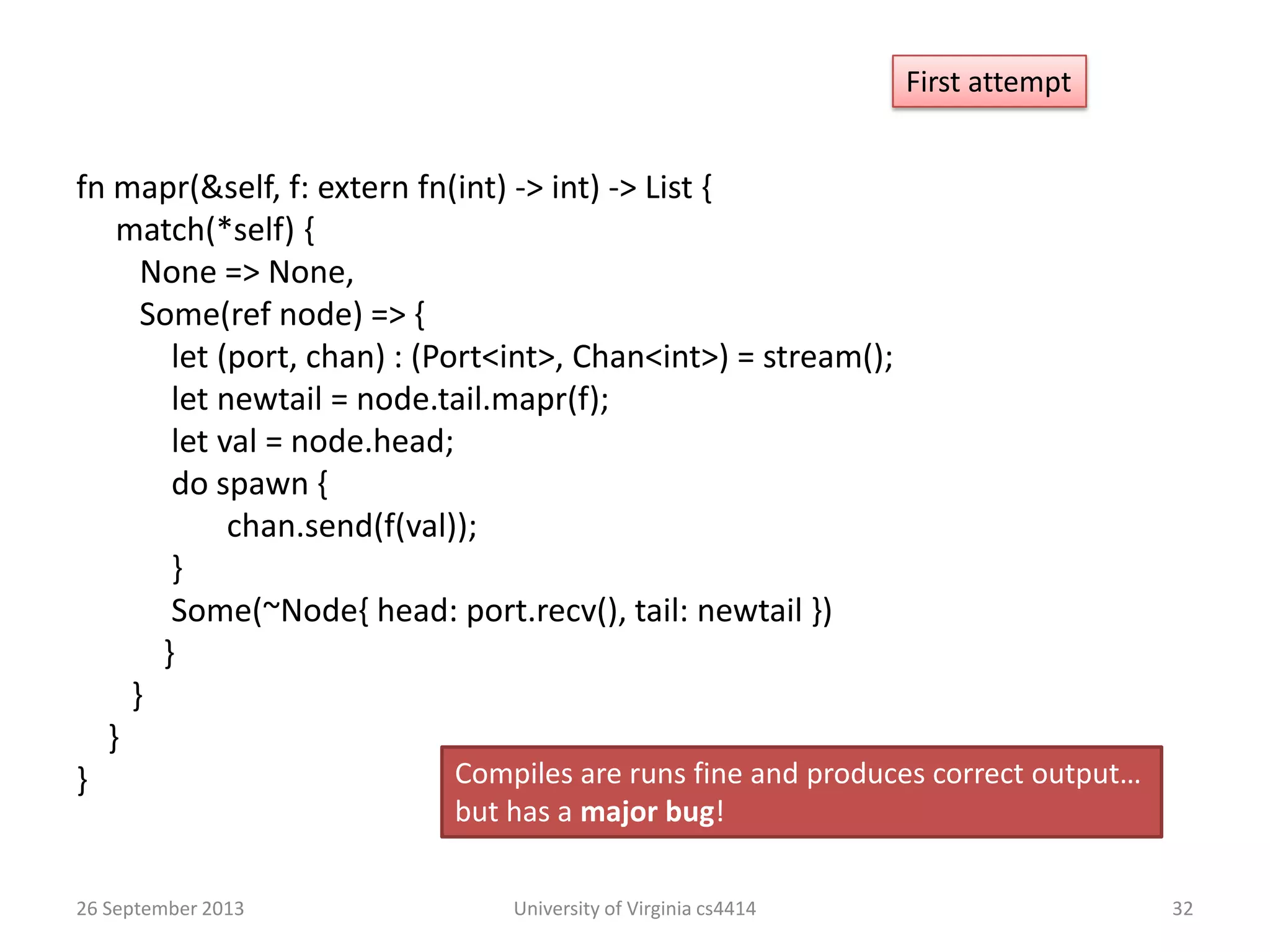

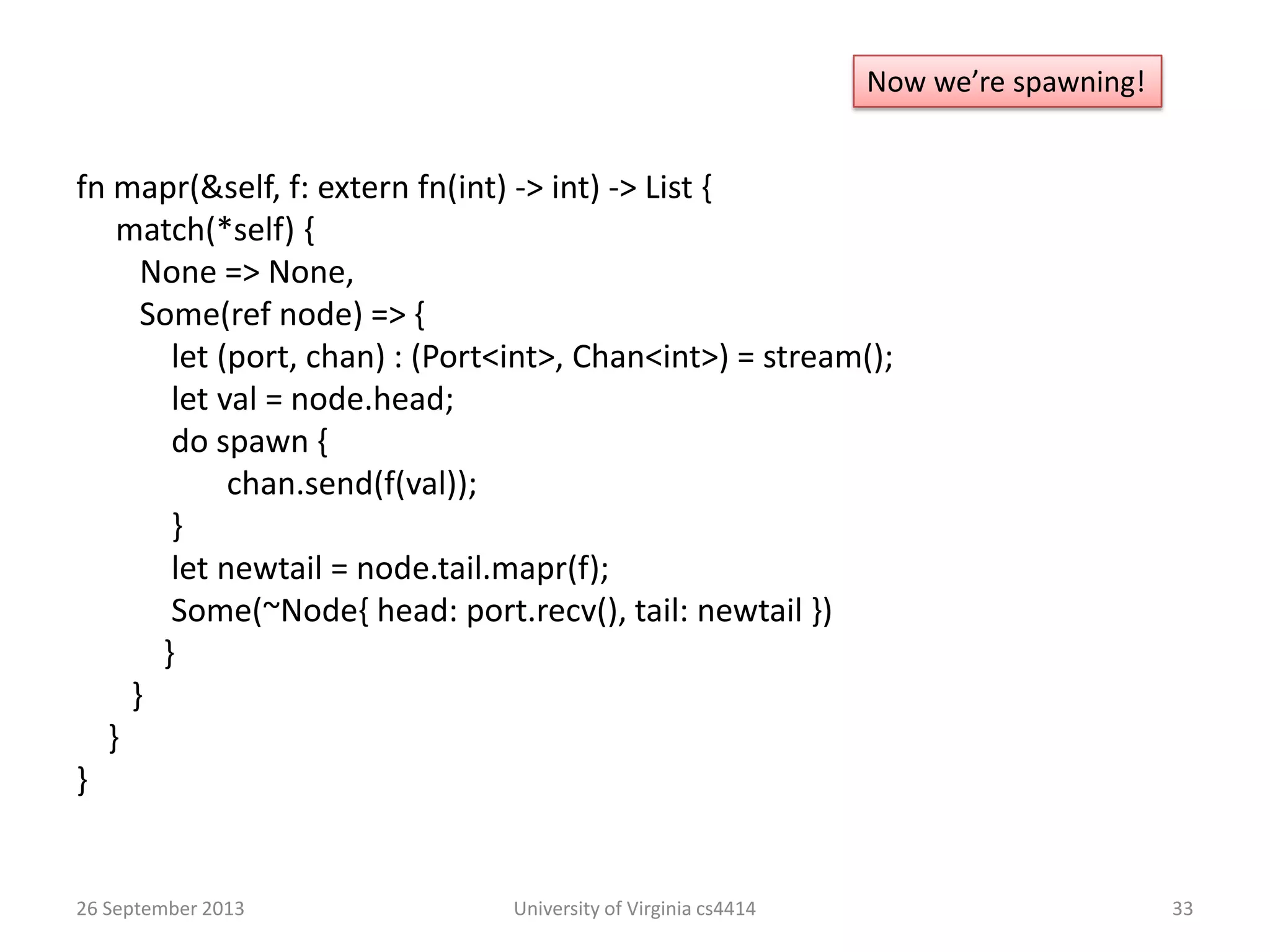

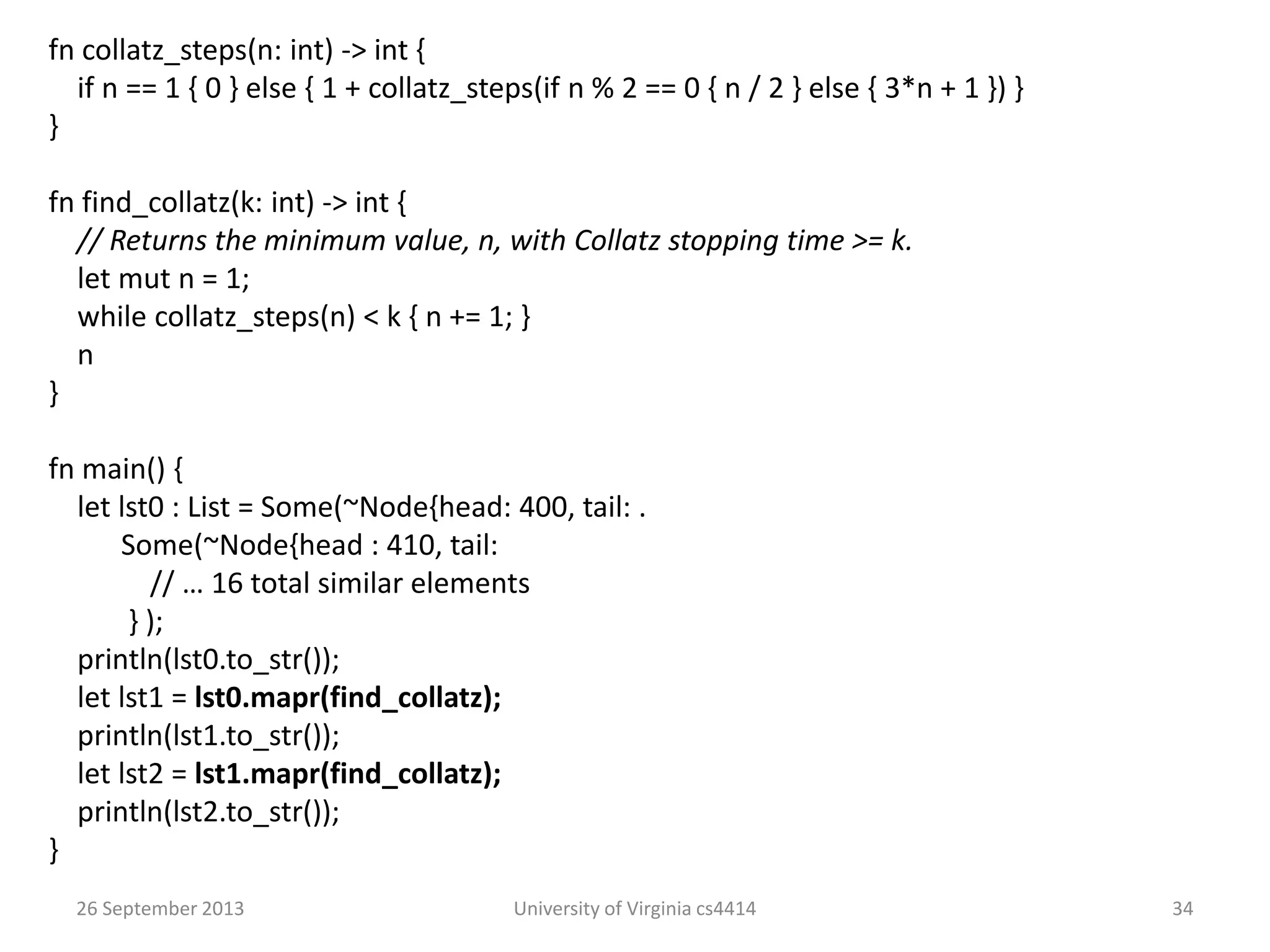

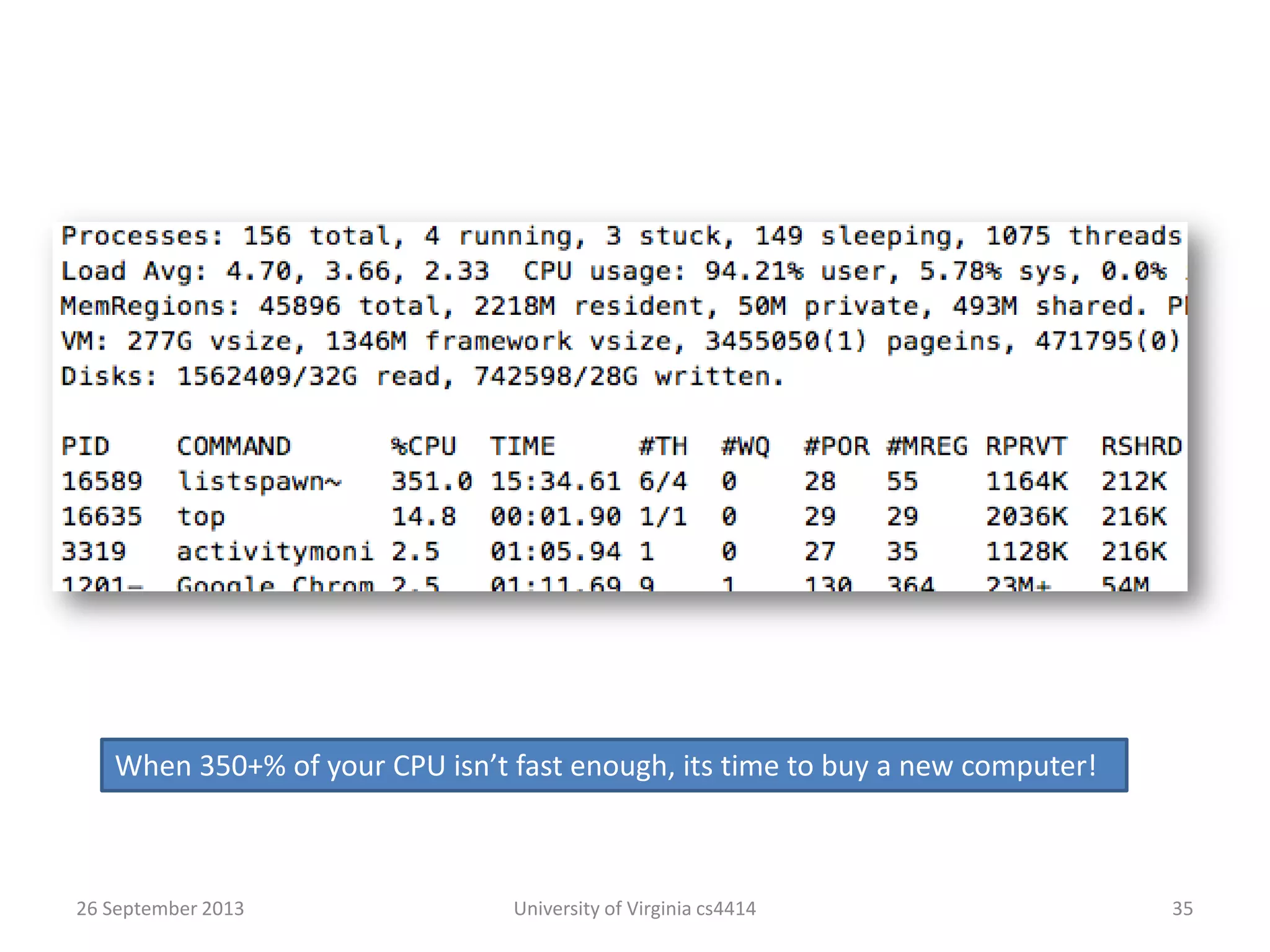

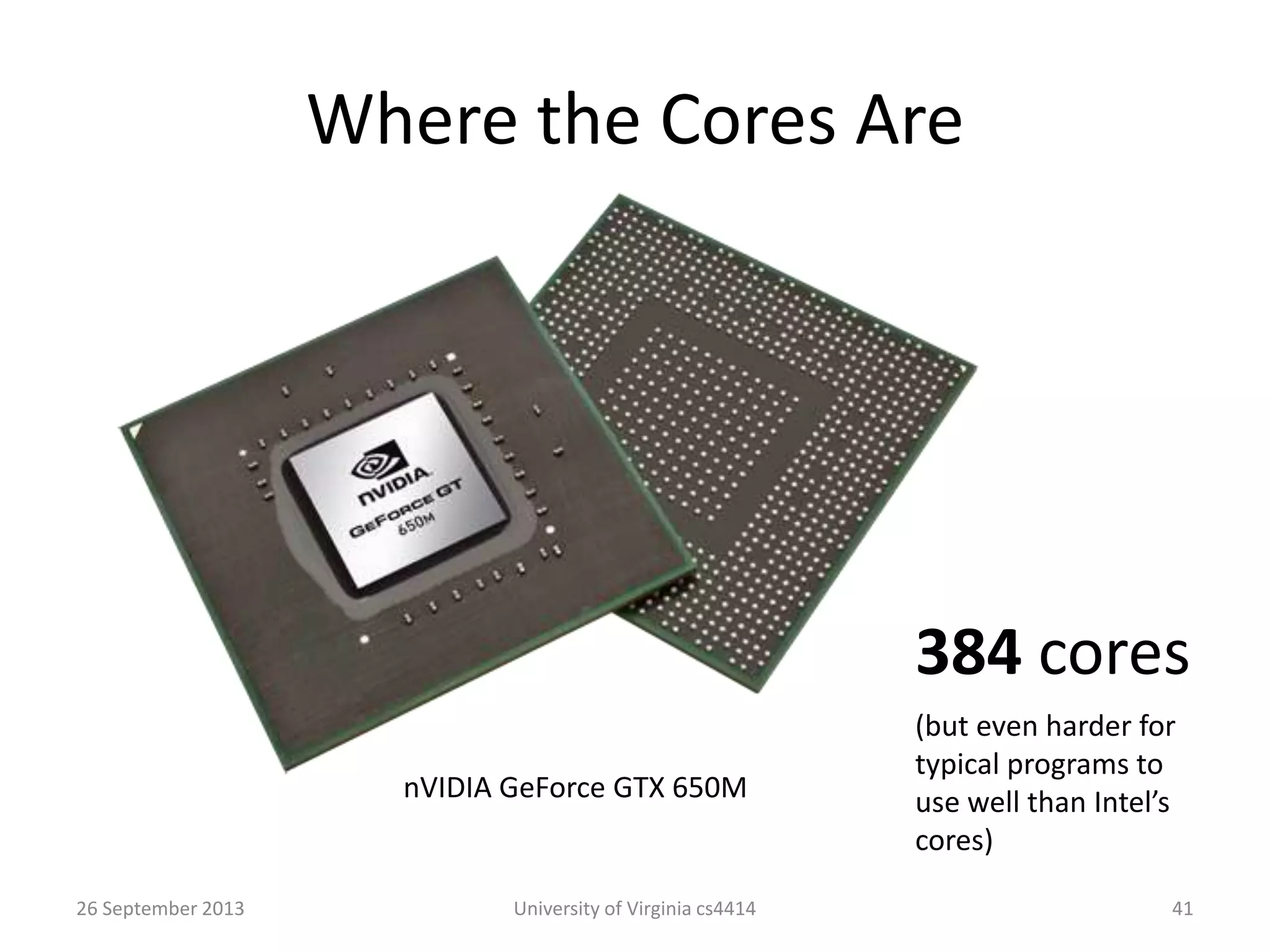

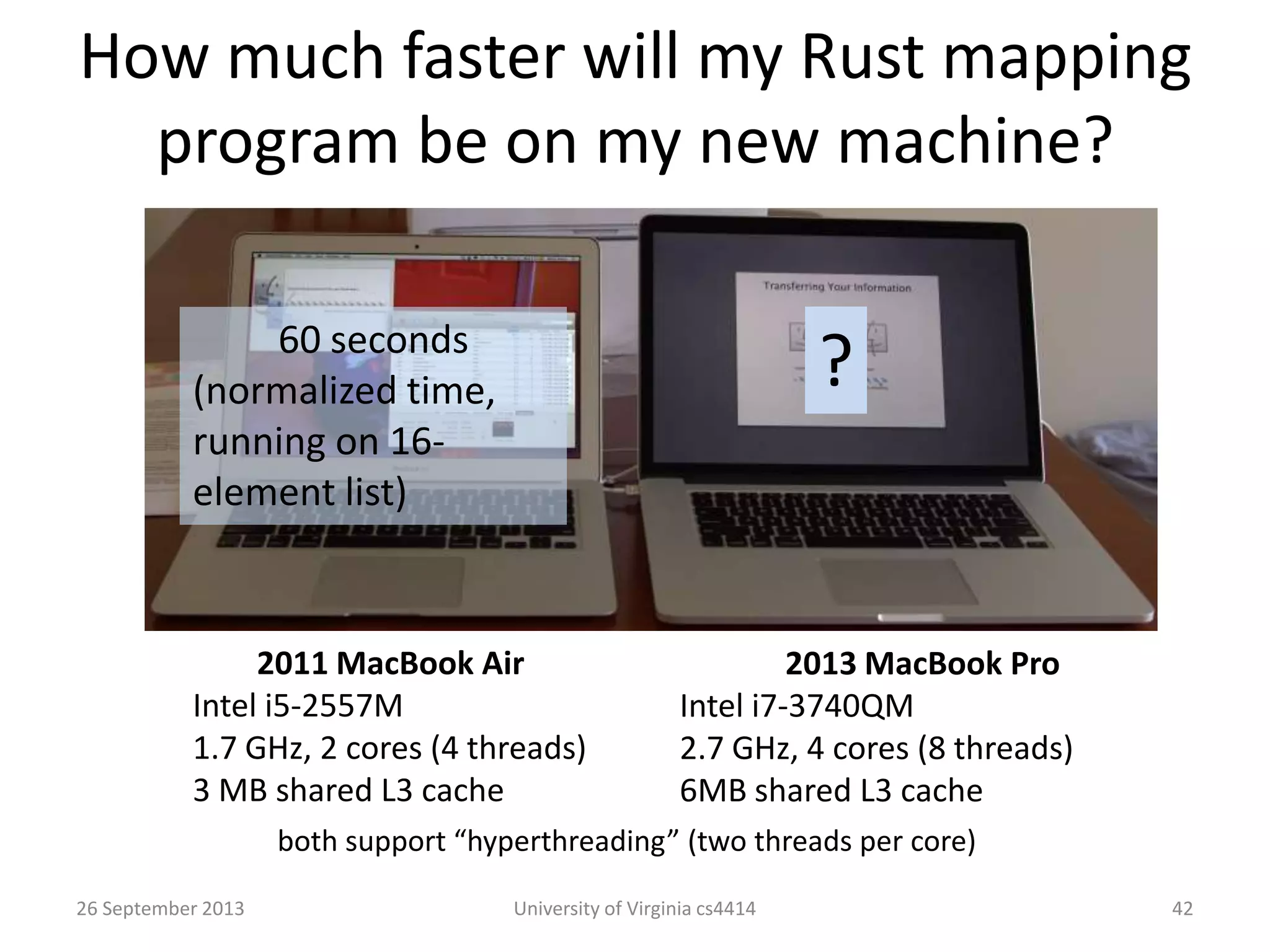

The document discusses parallelizing the `map` function in Rust. It begins with the original sequential `map` implementation and a first attempt at parallelizing it with `spawn`. That attempt runs into problems with shared memory, which are solved by passing results back over channels. The presentation then turns to tasks in Rust and how they allow immutable data to be shared safely. It closes with open questions about how much faster a parallel `map` would run on machines with different numbers of cores.
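The slides' own code is not reproduced here. What follows is a minimal sketch in current Rust, assuming that the lecture's tasks correspond to the threads of today's `std::thread` (Rust's old task API was replaced before 1.0). `seq_map` is the sequential baseline. `par_map` spawns one thread per element and sends each `(index, result)` pair over an `mpsc` channel so the original order can be restored. The input is wrapped in an `Arc` so every thread can read the same immutable data without copying it. A version whose closures captured `&v` directly would be rejected by the compiler, because `thread::spawn` requires `'static` closures. The names `seq_map`, `par_map` and `square` are hypothetical.

```rust
use std::sync::mpsc;
use std::sync::Arc;
use std::thread;

/// Sequential map: apply `f` to each element, in order.
fn seq_map<T, U>(f: fn(&T) -> U, v: &[T]) -> Vec<U> {
    v.iter().map(f).collect()
}

/// Naive parallel map (hypothetical sketch): one thread per element,
/// results sent back over a channel tagged with their index.
fn par_map<T, U>(f: fn(&T) -> U, v: Vec<T>) -> Vec<U>
where
    T: Send + Sync + 'static,
    U: Send + 'static,
{
    // Immutable input shared by reference count, not copied per thread.
    let data = Arc::new(v);
    let n = data.len();
    let (tx, rx) = mpsc::channel();

    for i in 0..n {
        let data = Arc::clone(&data);
        let tx = tx.clone();
        thread::spawn(move || {
            // Send the index along with the result so order can be restored.
            tx.send((i, f(&data[i]))).unwrap();
        });
    }
    // Drop the original sender so the receiver loop ends once all
    // worker threads have finished and dropped their clones.
    drop(tx);

    // Results may arrive in any order; slot each one by its index.
    let mut results: Vec<Option<U>> = (0..n).map(|_| None).collect();
    for (i, u) in rx {
        results[i] = Some(u);
    }
    results.into_iter().map(|u| u.unwrap()).collect()
}

fn square(x: &u64) -> u64 {
    x * x
}

fn main() {
    let v: Vec<u64> = (1..=8).collect();
    let seq = seq_map(square, &v);
    let par = par_map(square, v.clone());
    assert_eq!(seq, par);
    println!("{:?}", par); // prints [1, 4, 9, 16, 25, 36, 49, 64]
}
```

Spawning one thread per element keeps the sketch close to a first `spawn`-based attempt, but the per-thread overhead can easily outweigh the work for a function as cheap as `square`. This is one reason the speedup depends on the machine. A more practical variant would split the input into roughly one chunk per core.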