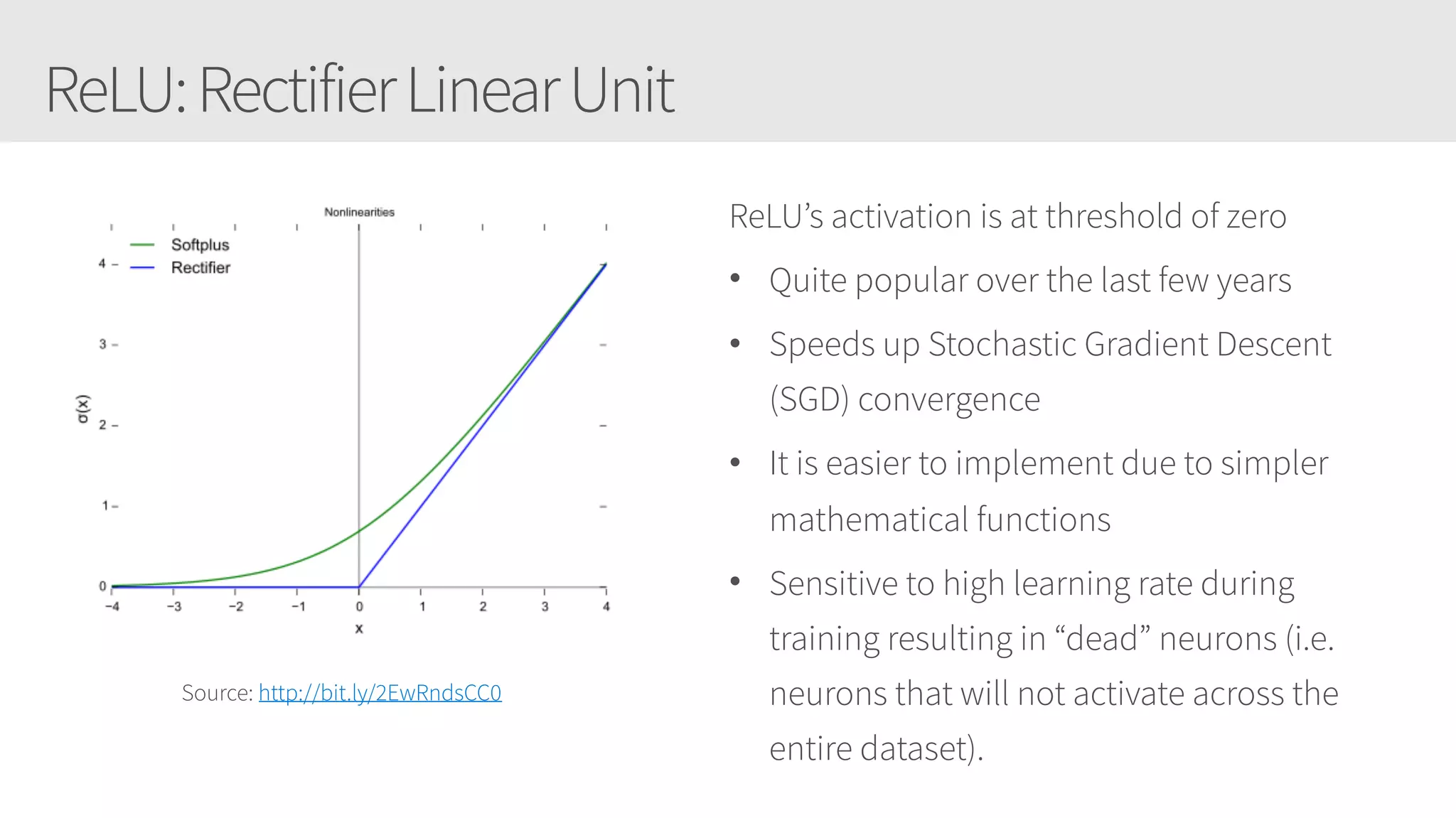

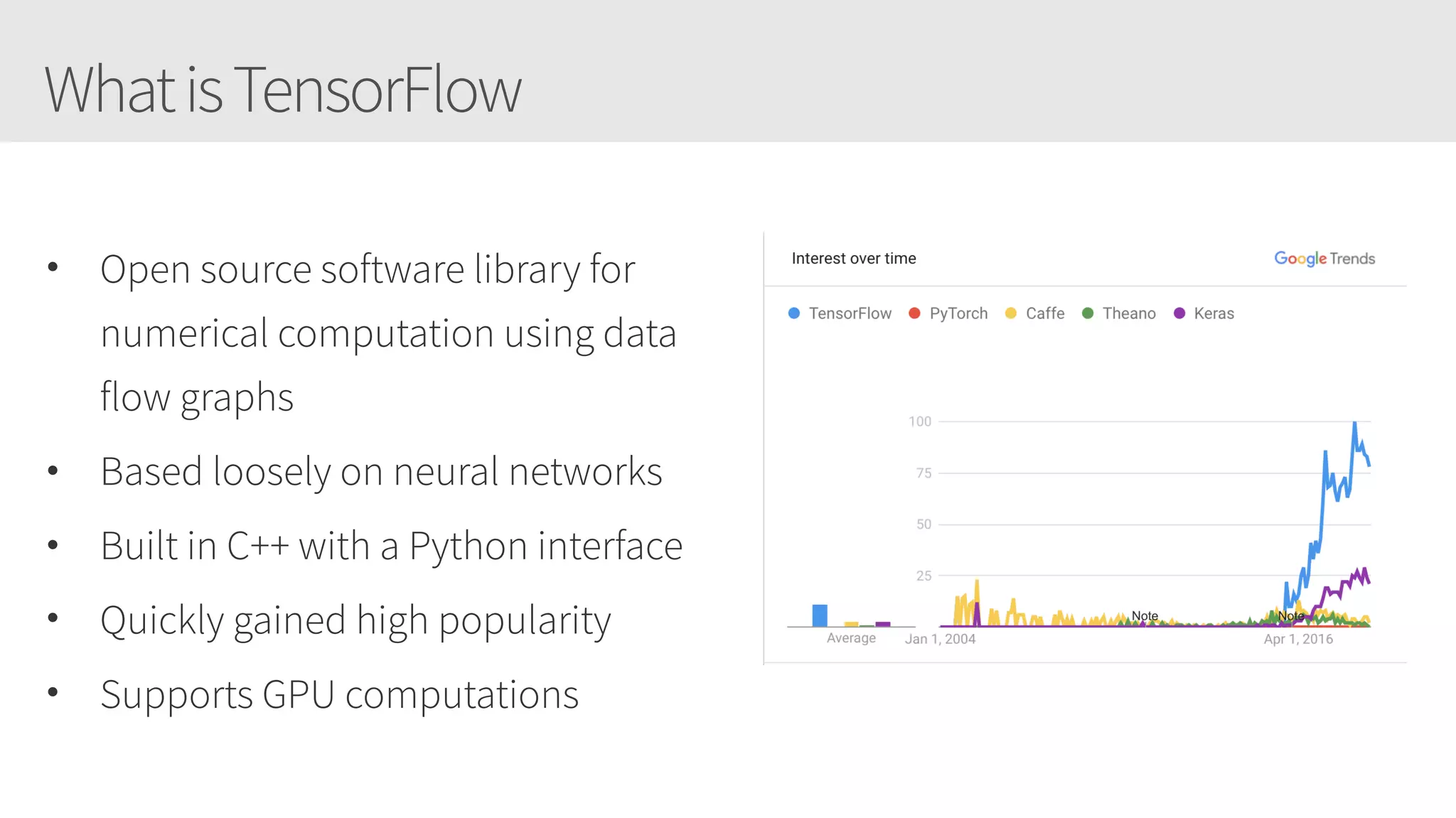

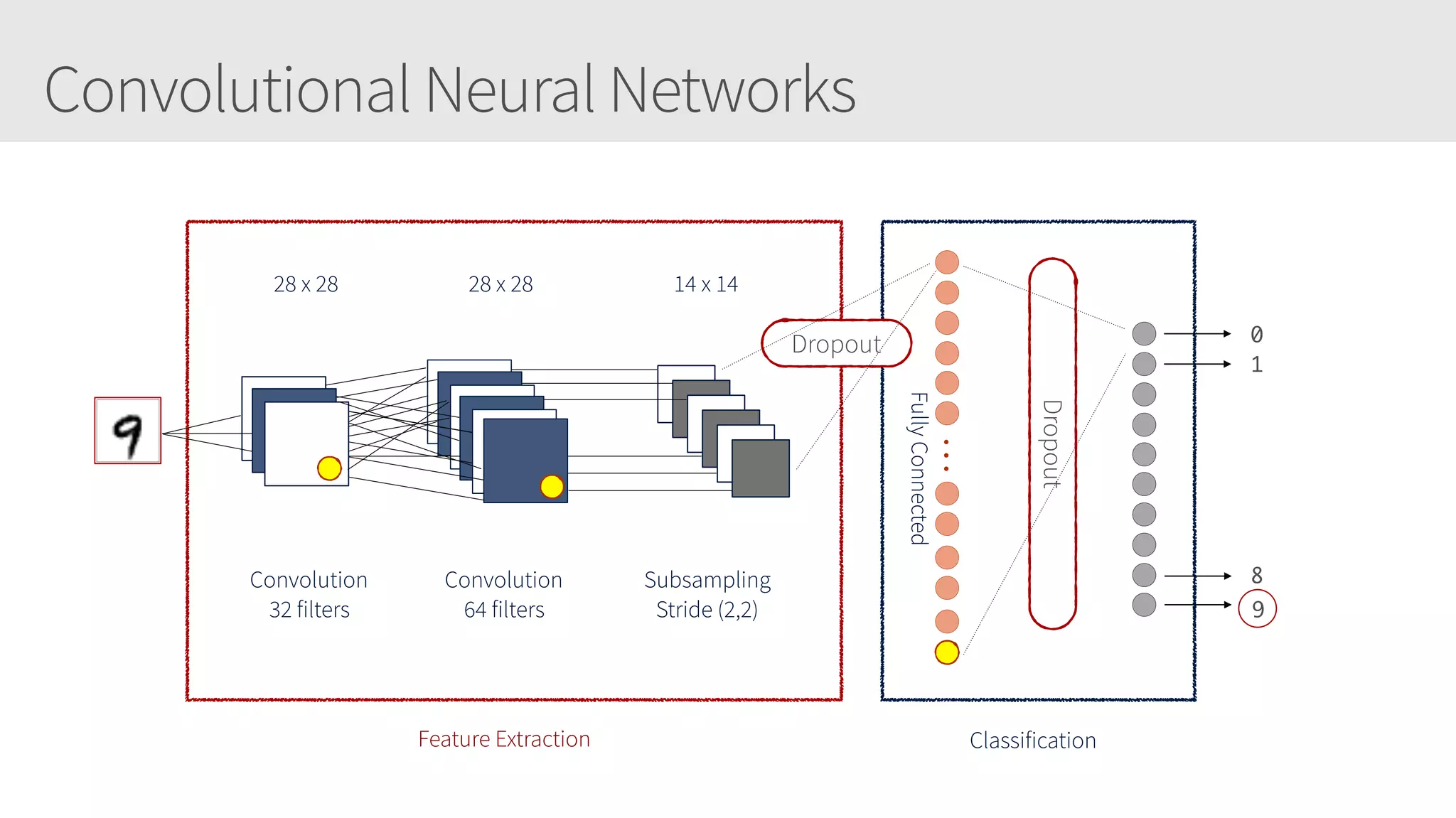

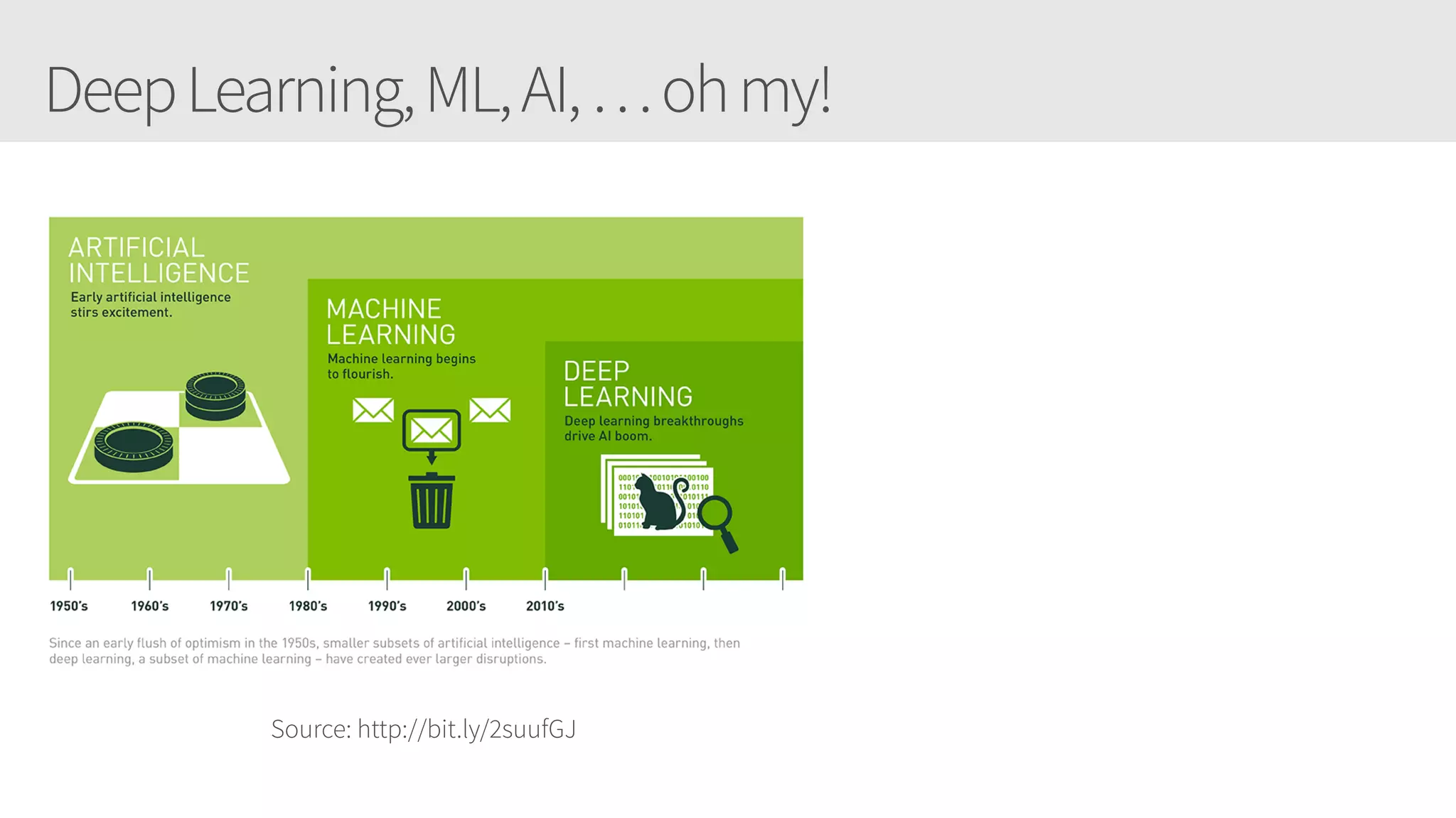

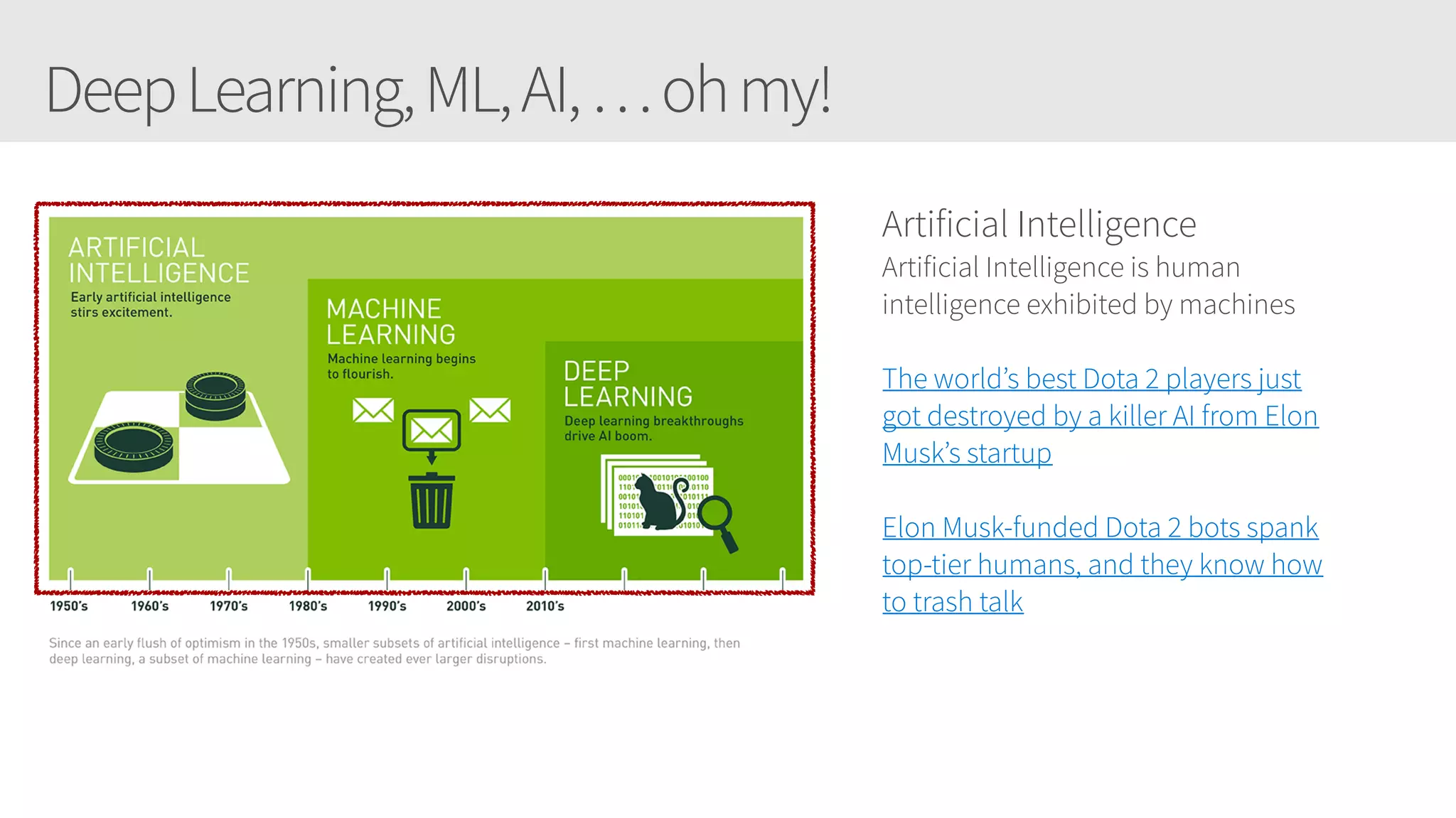

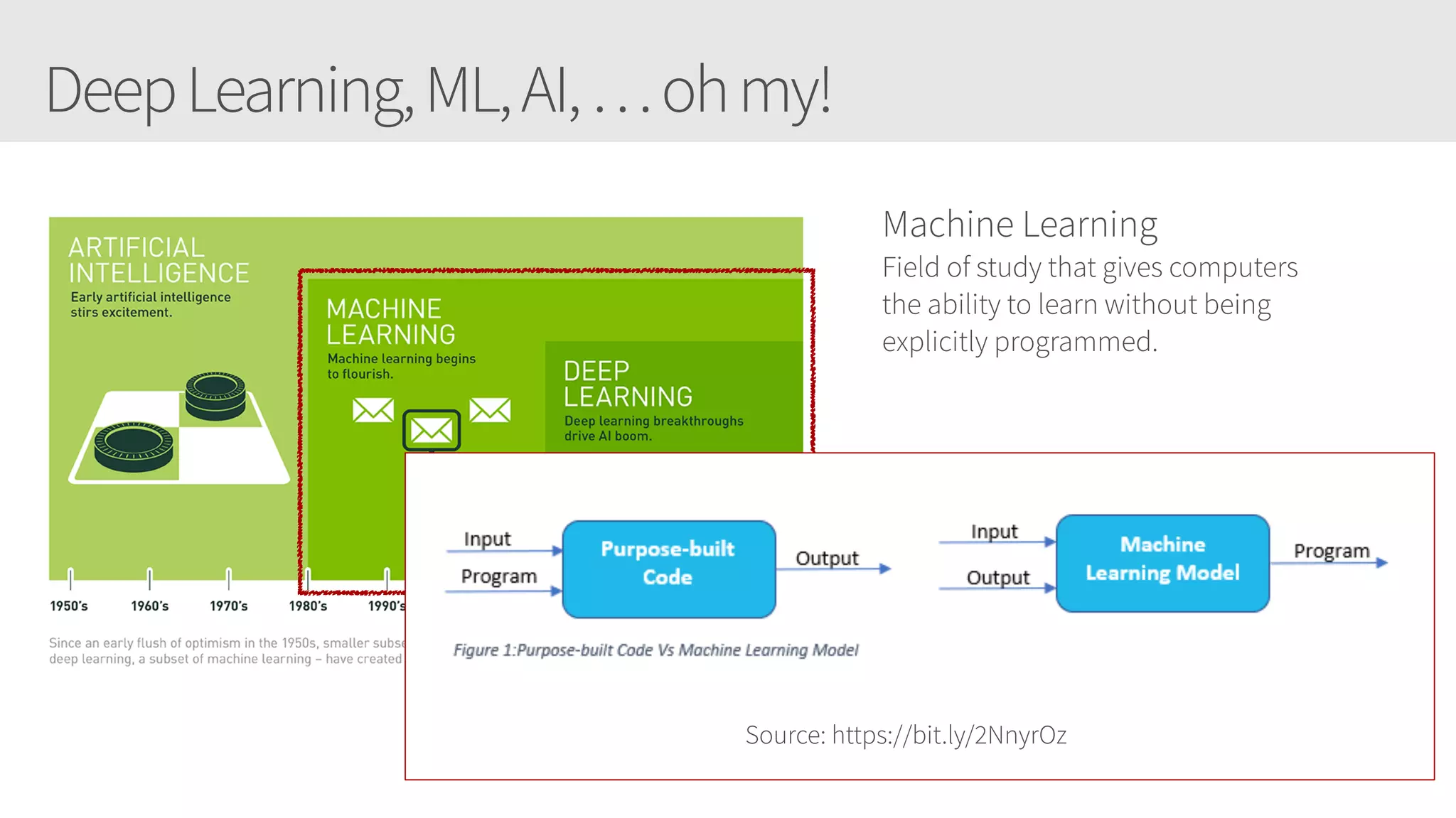

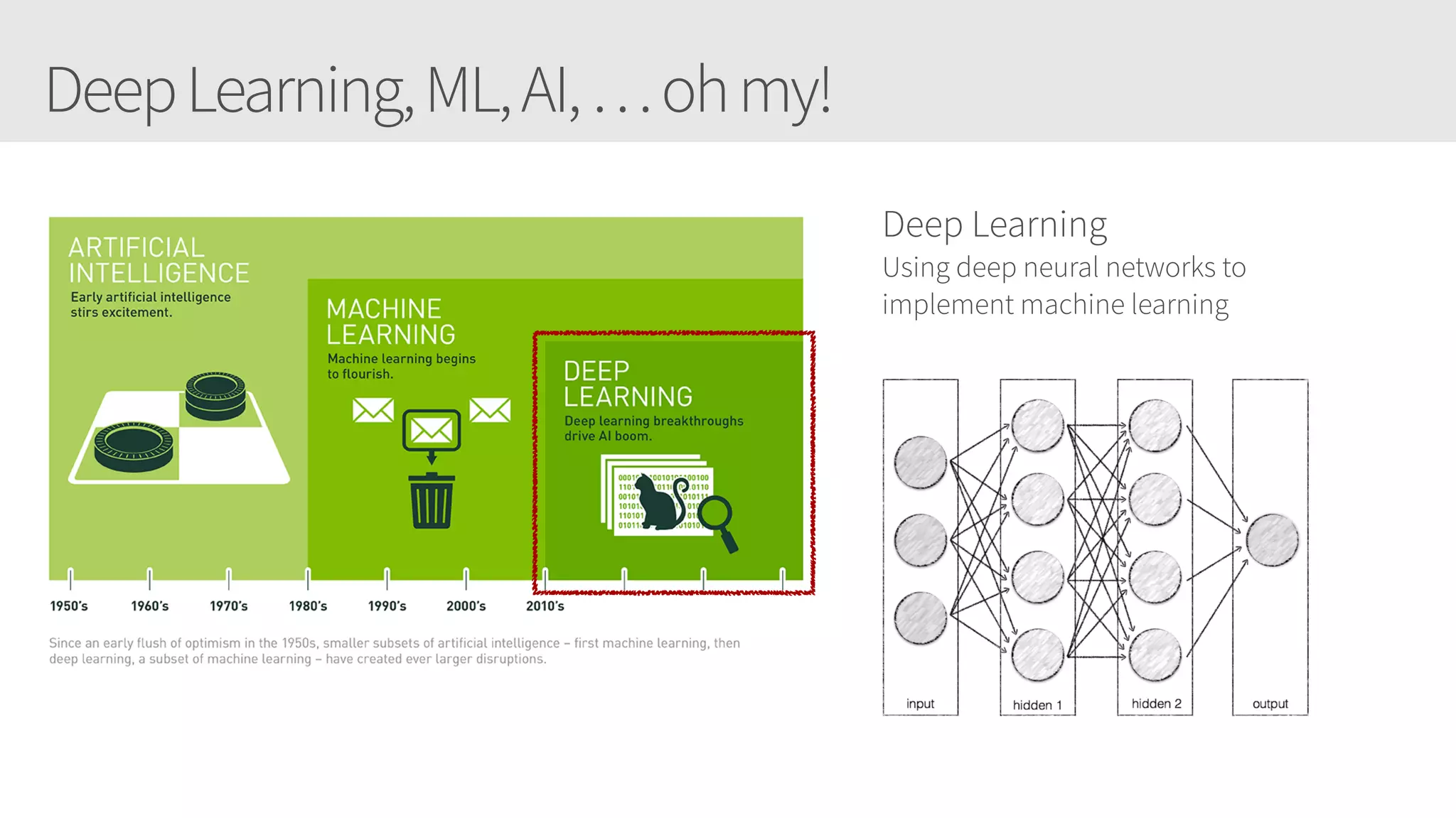

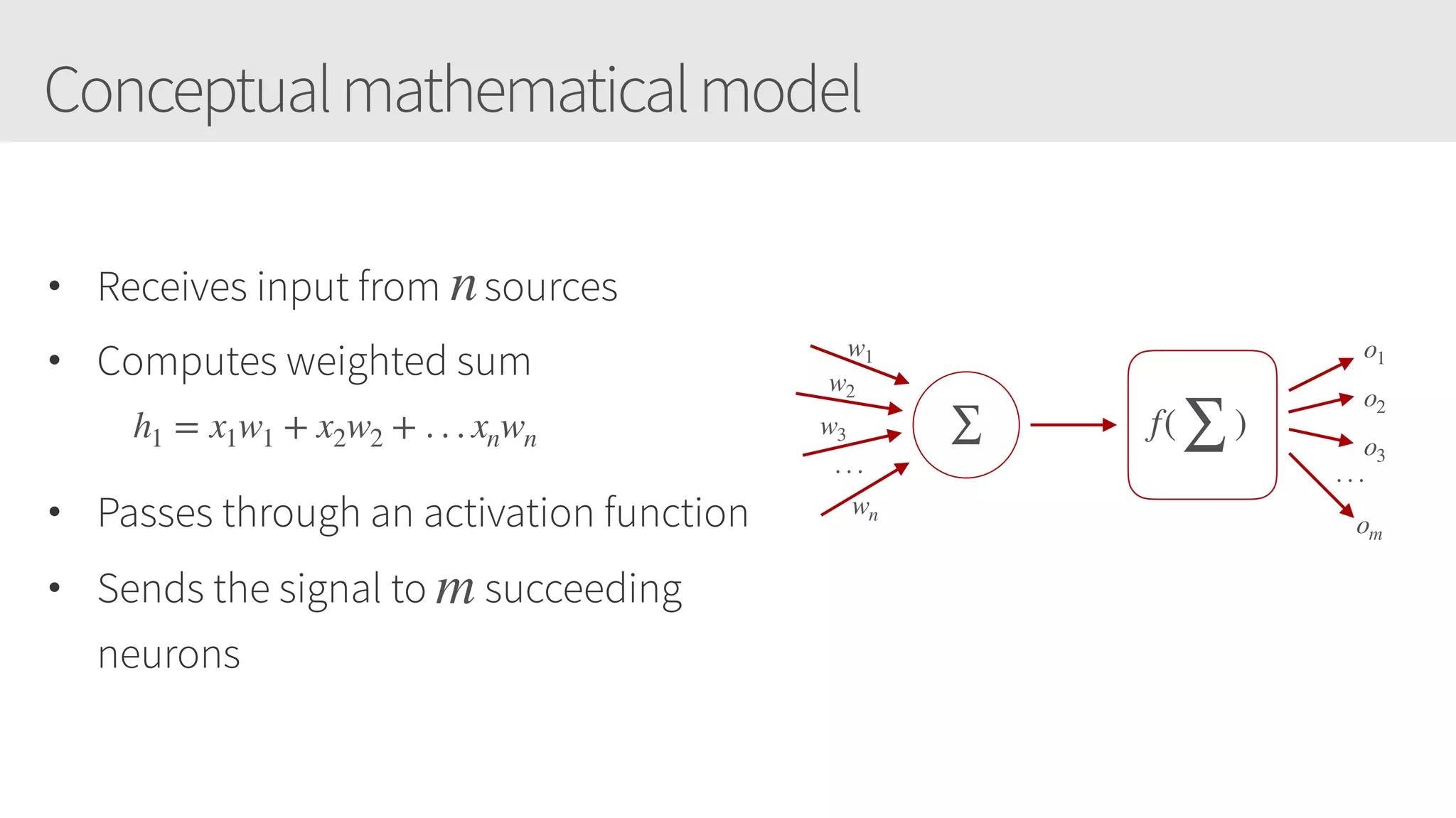

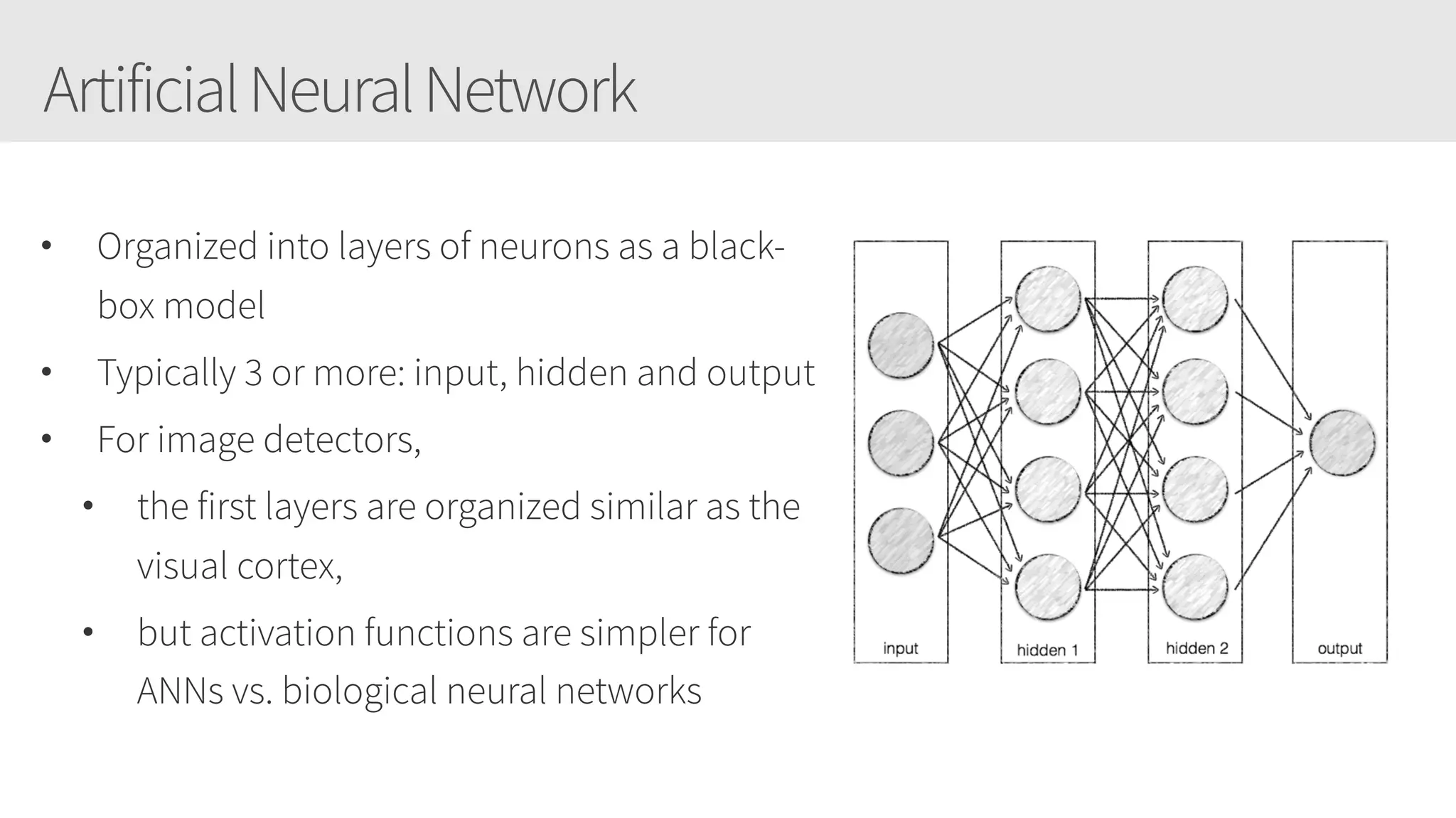

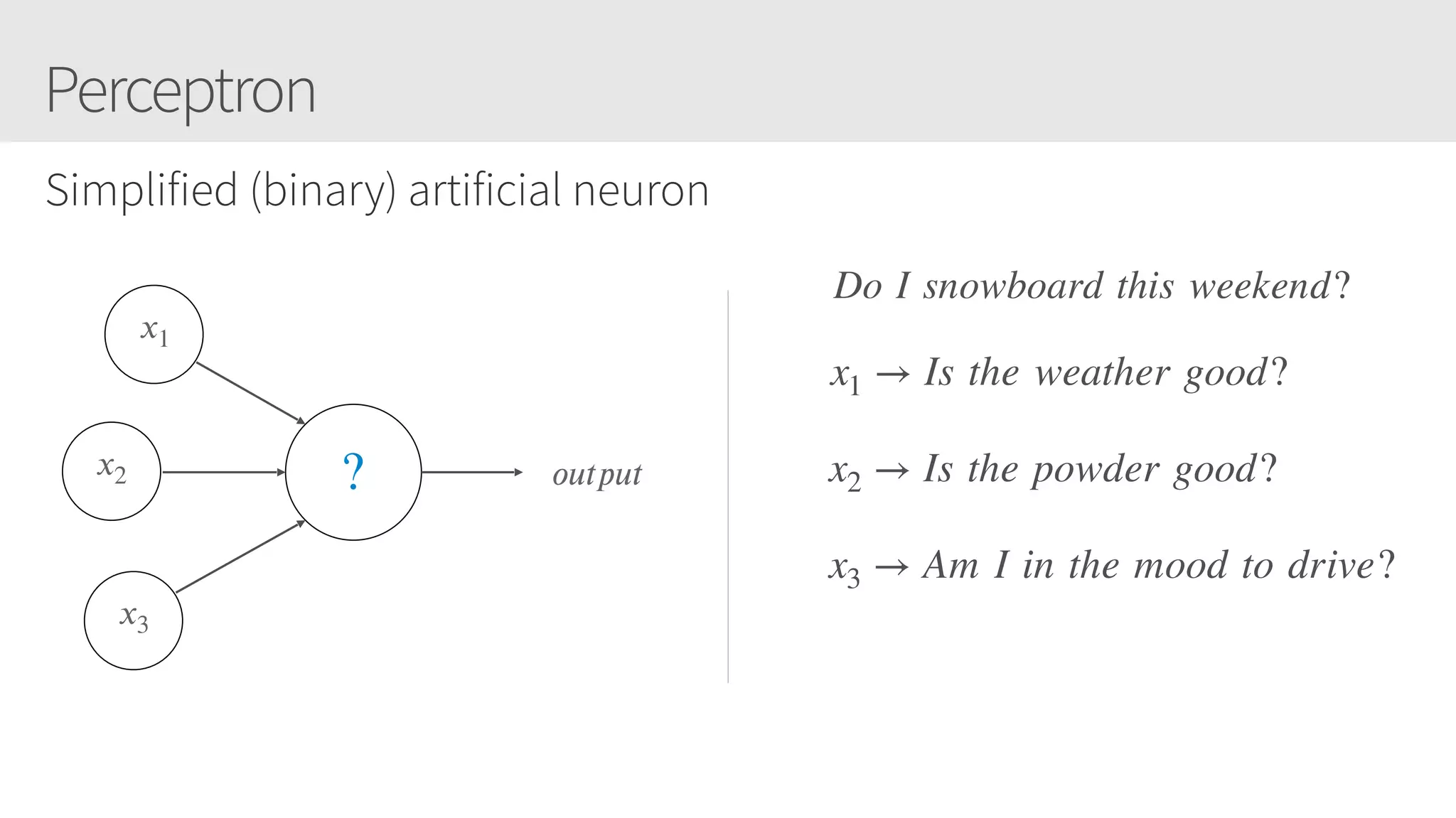

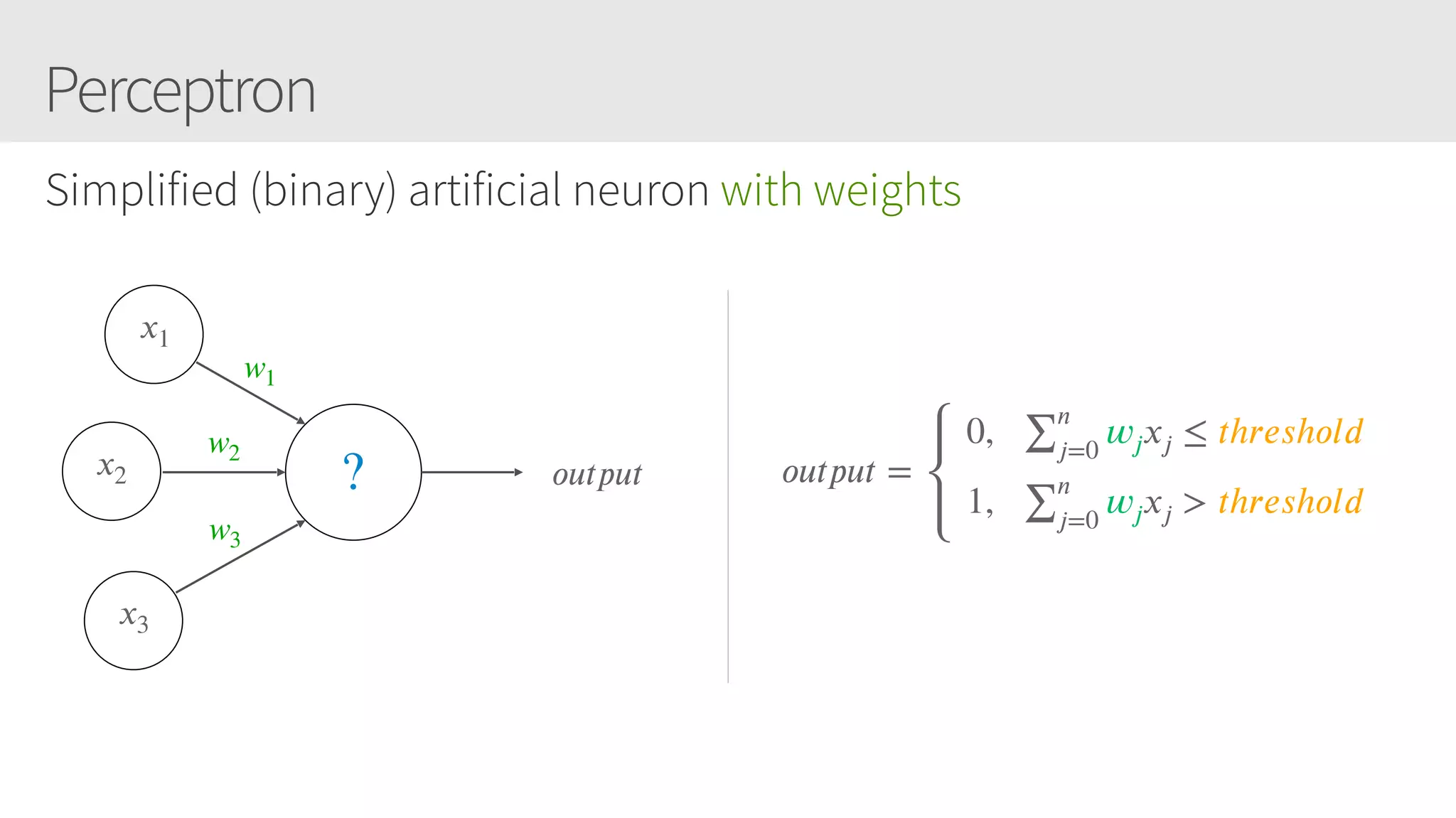

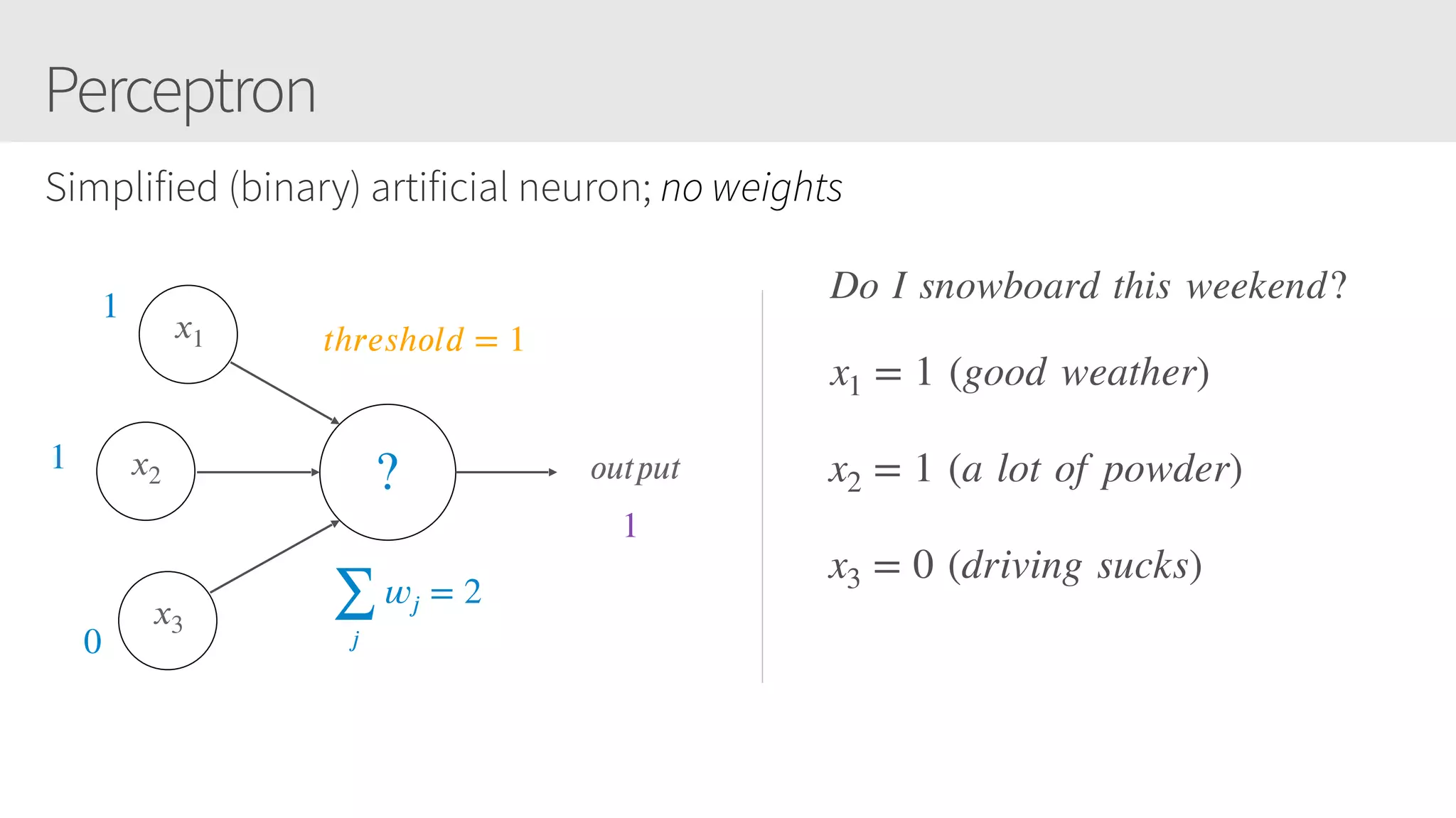

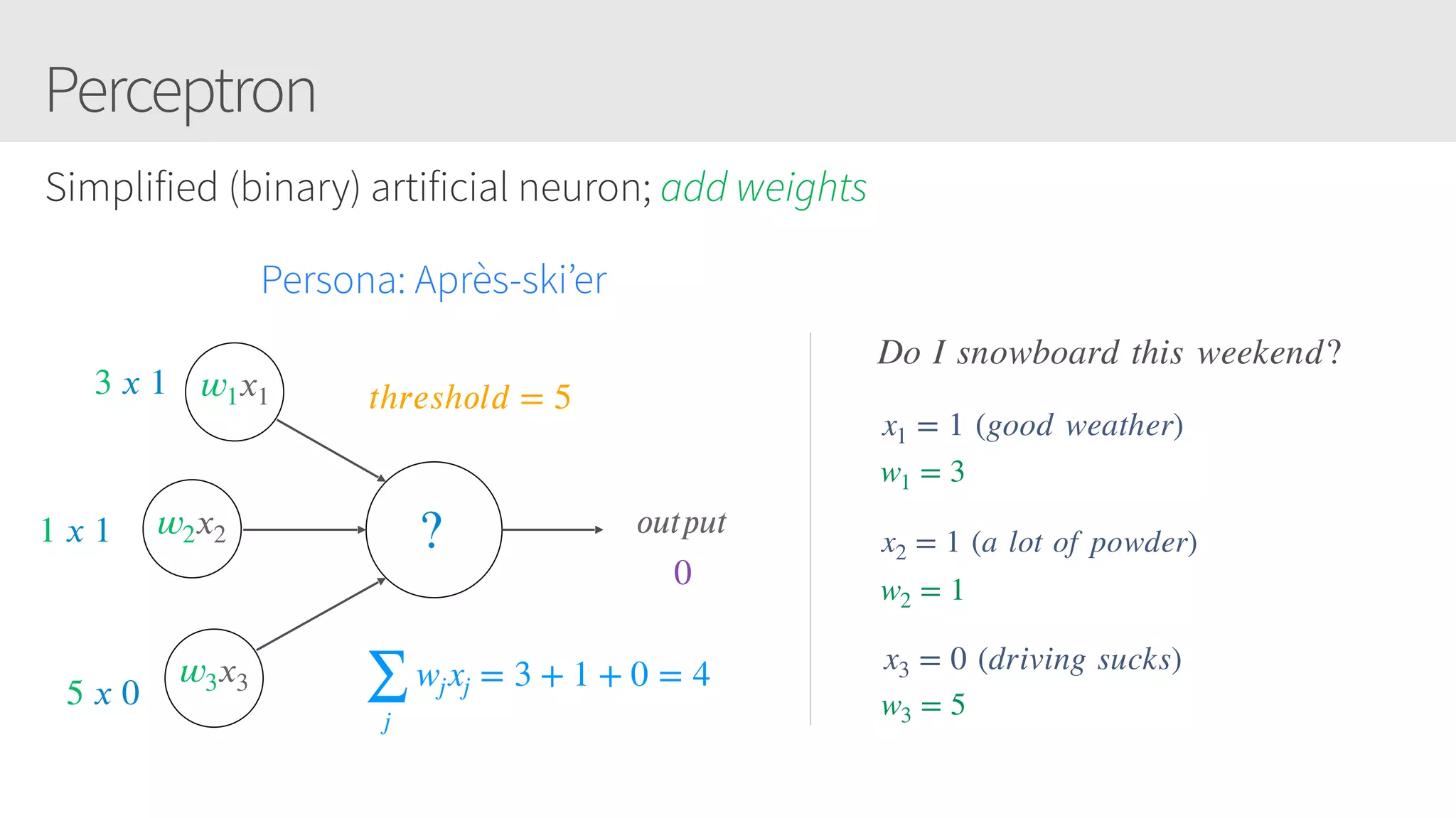

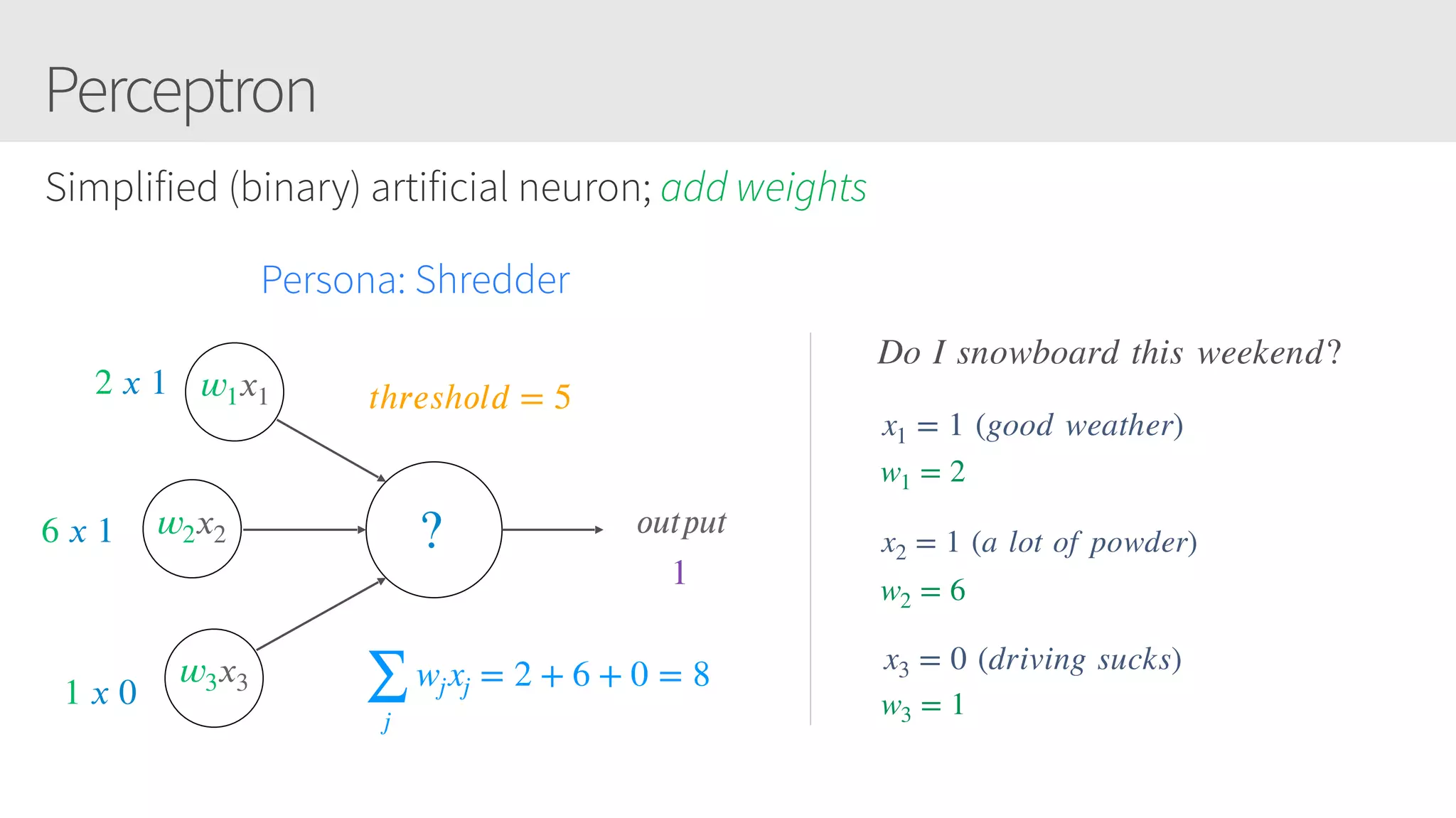

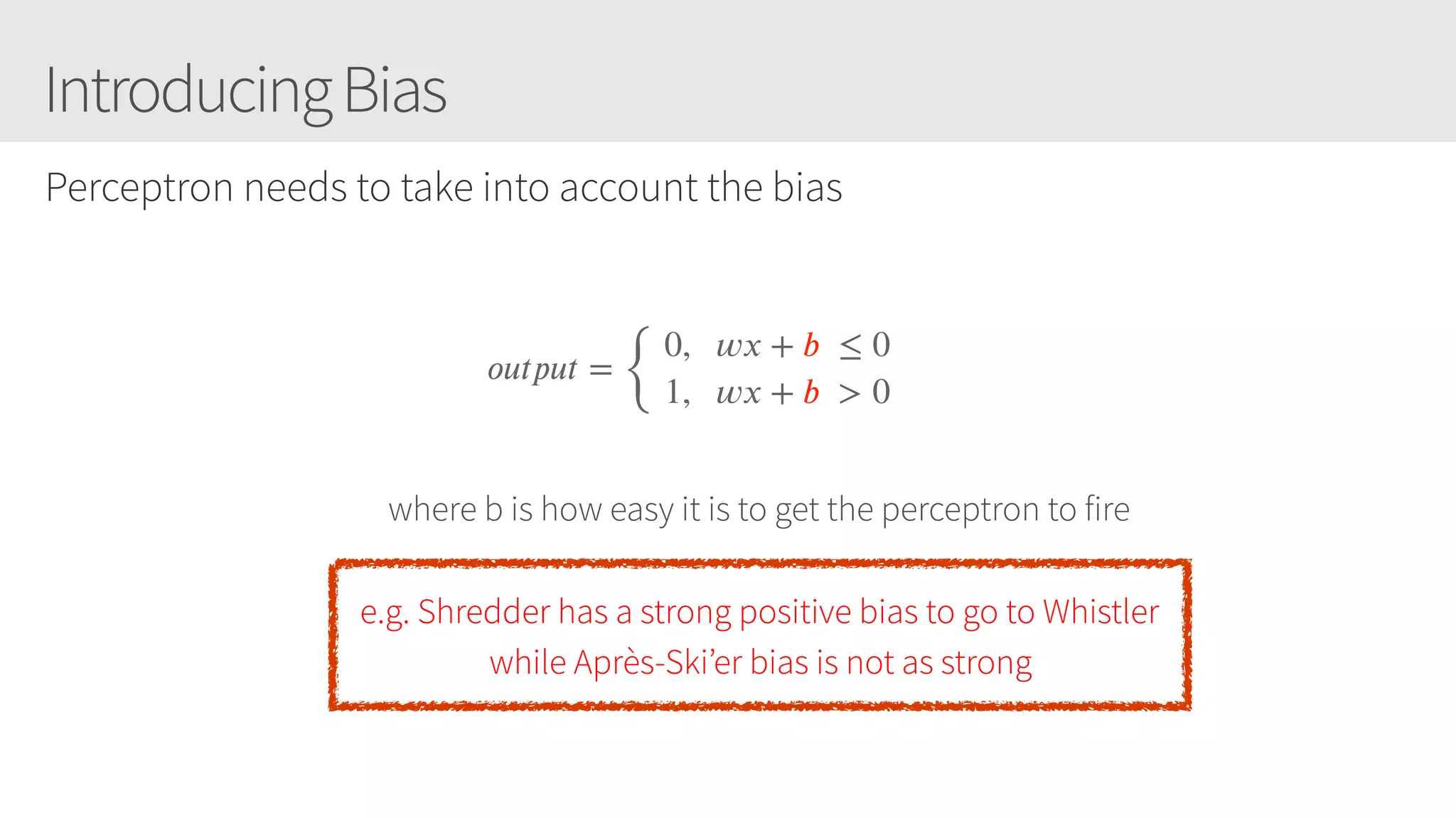

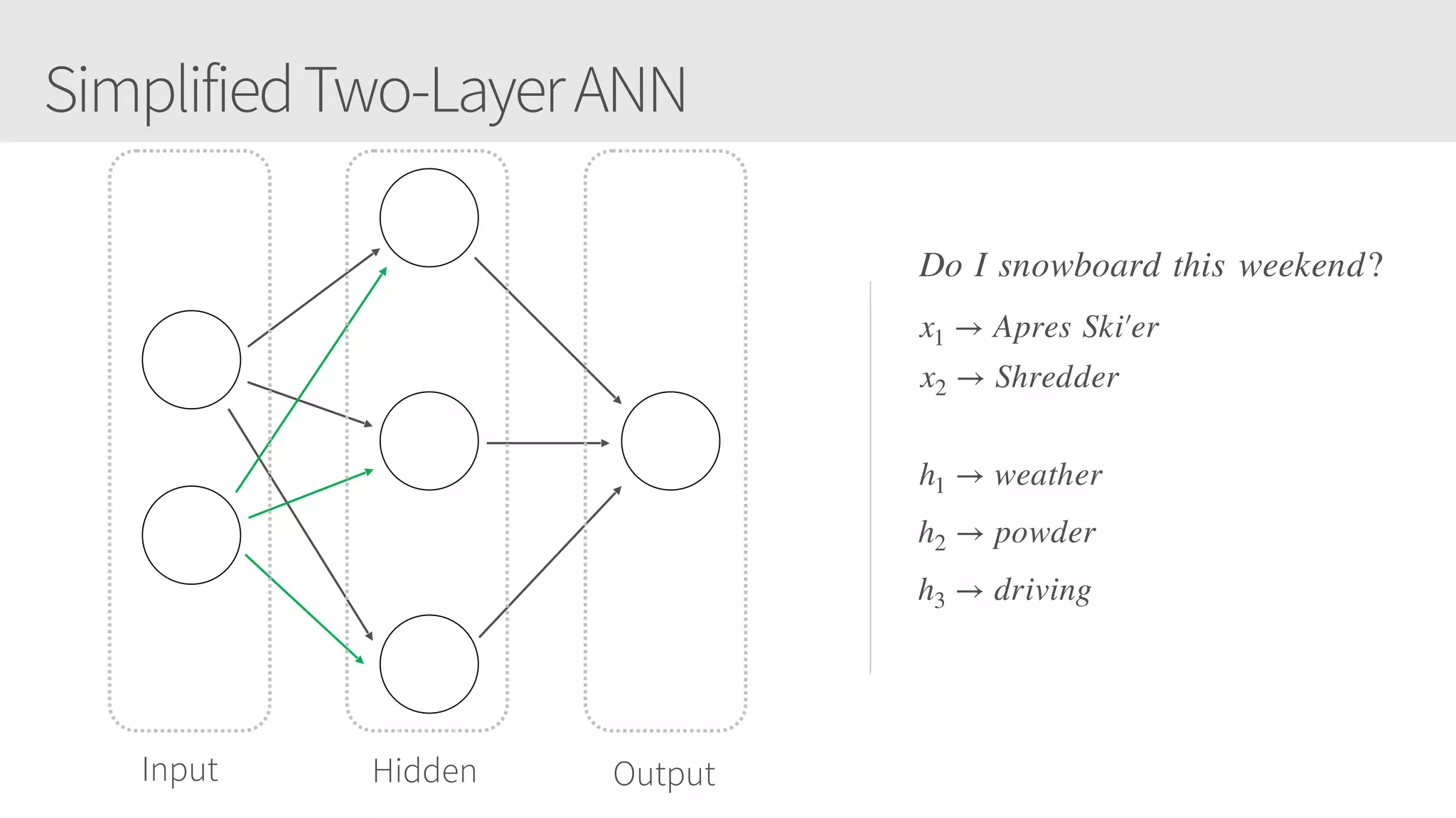

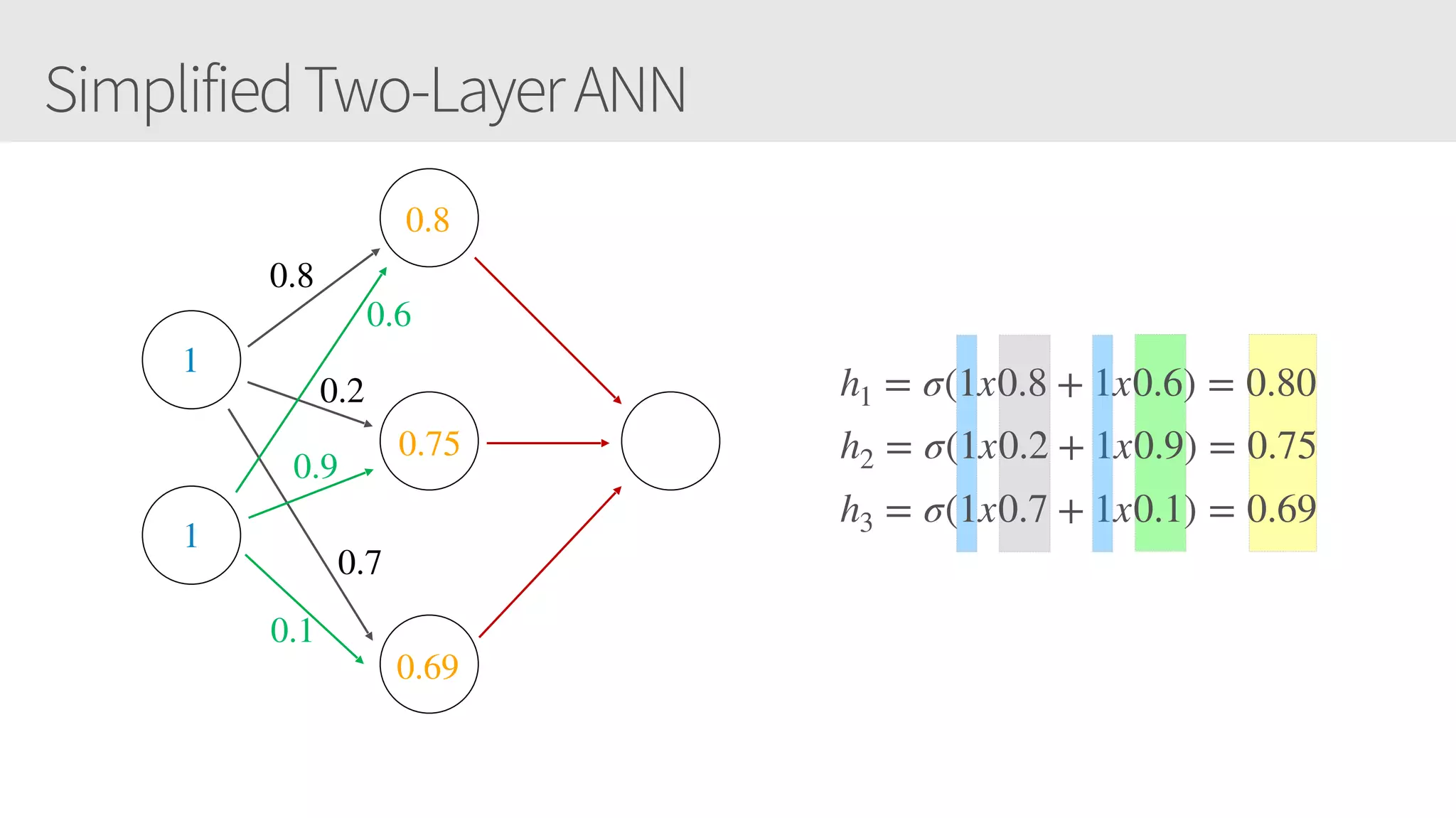

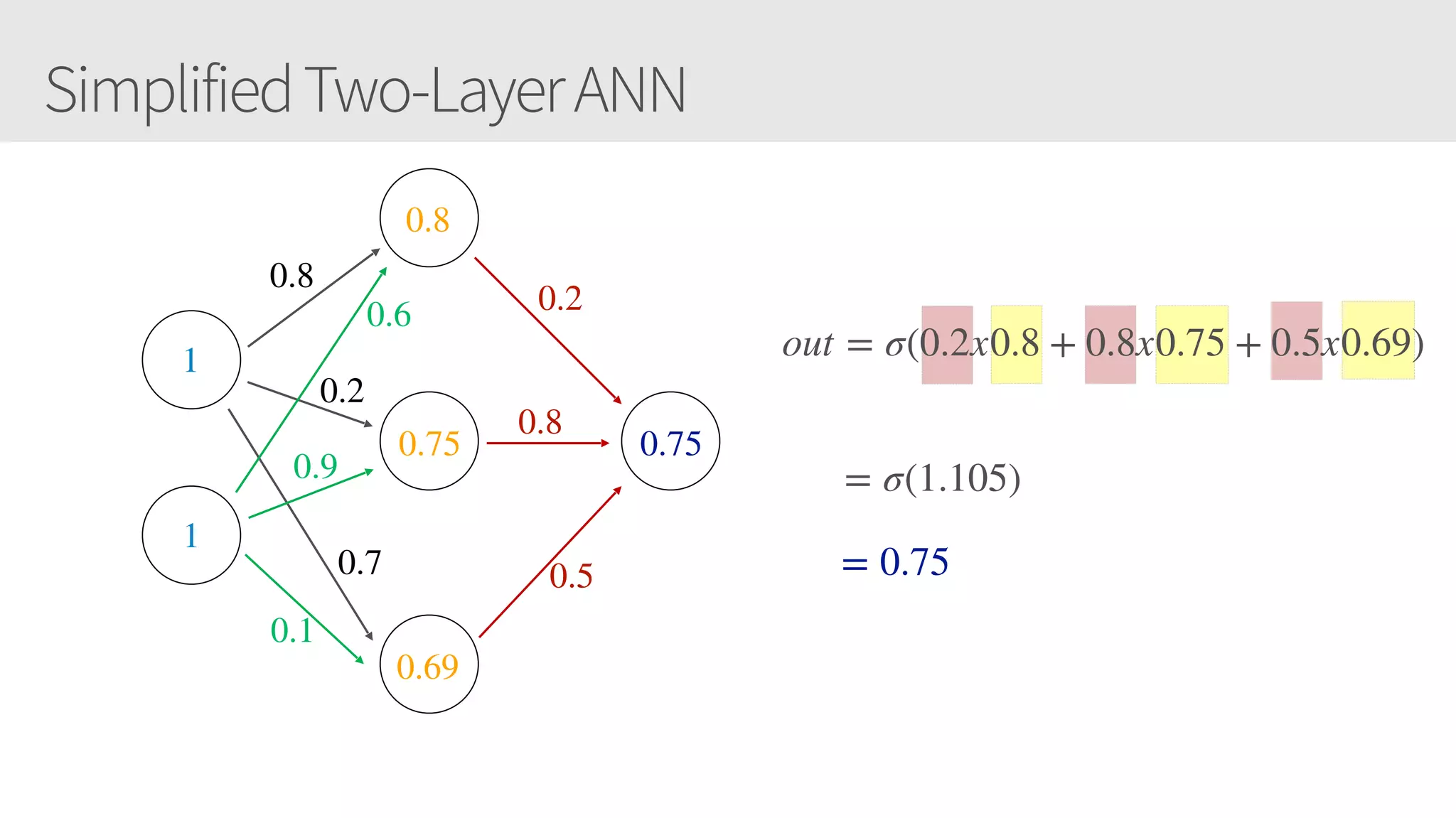

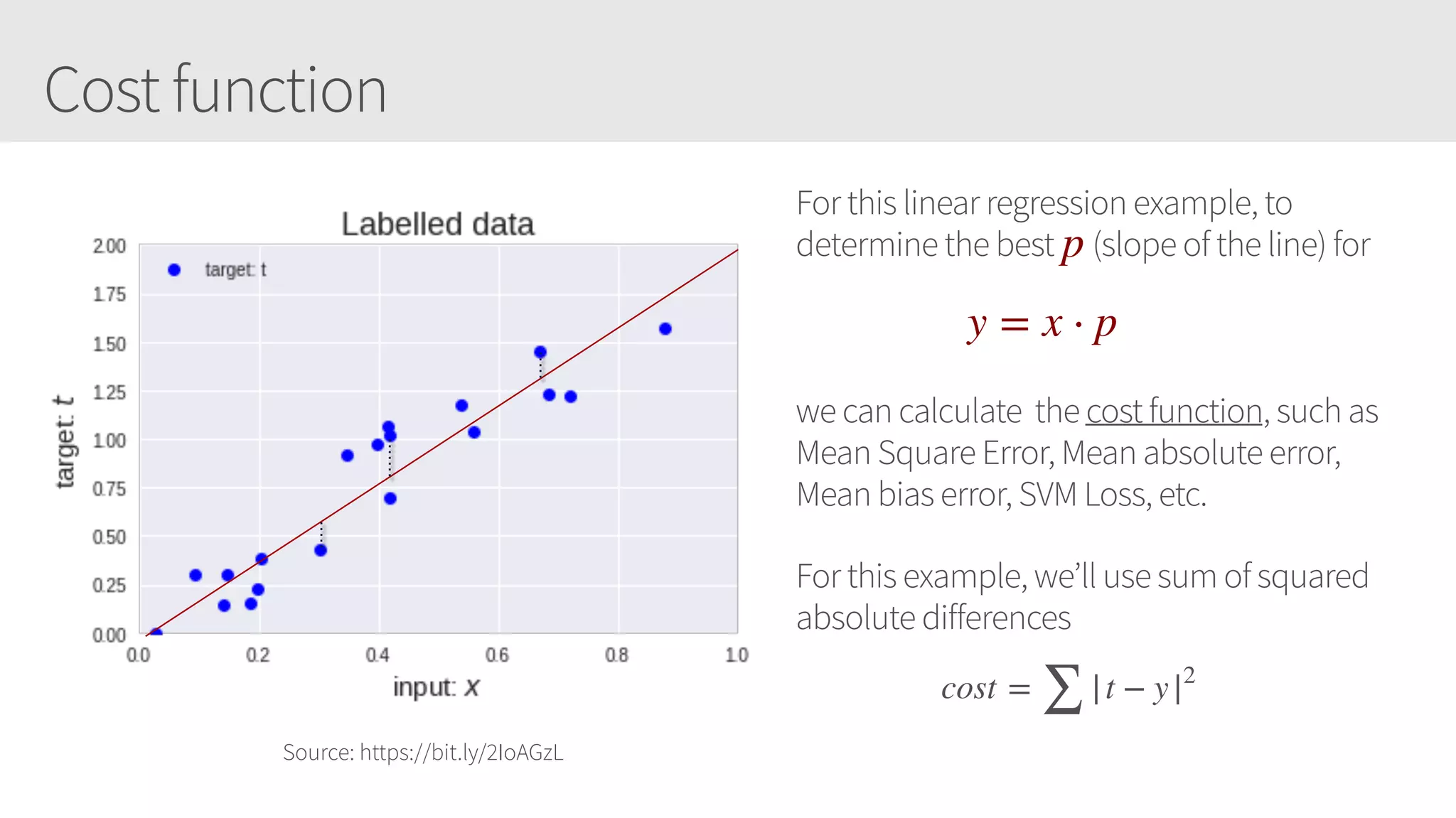

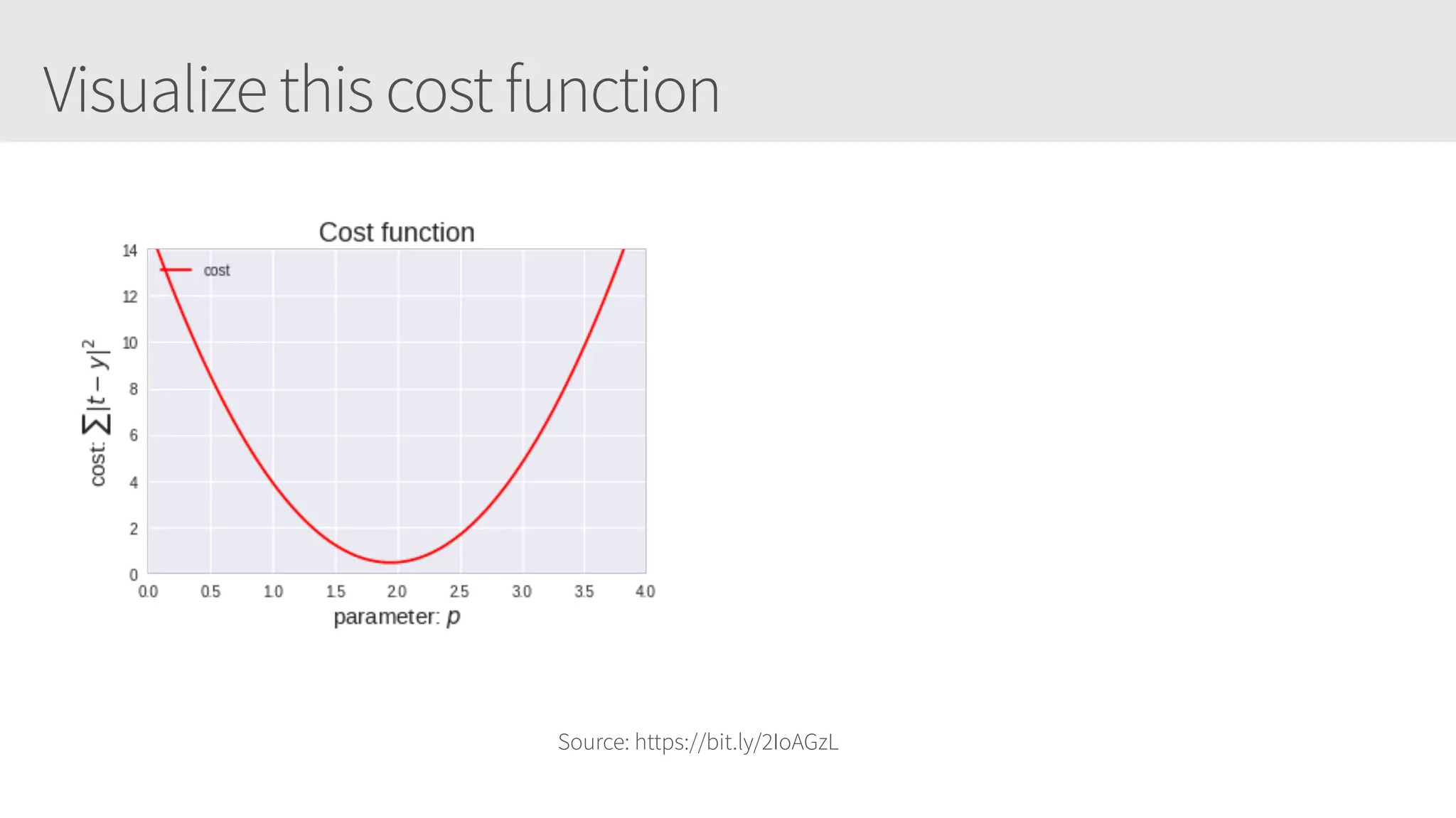

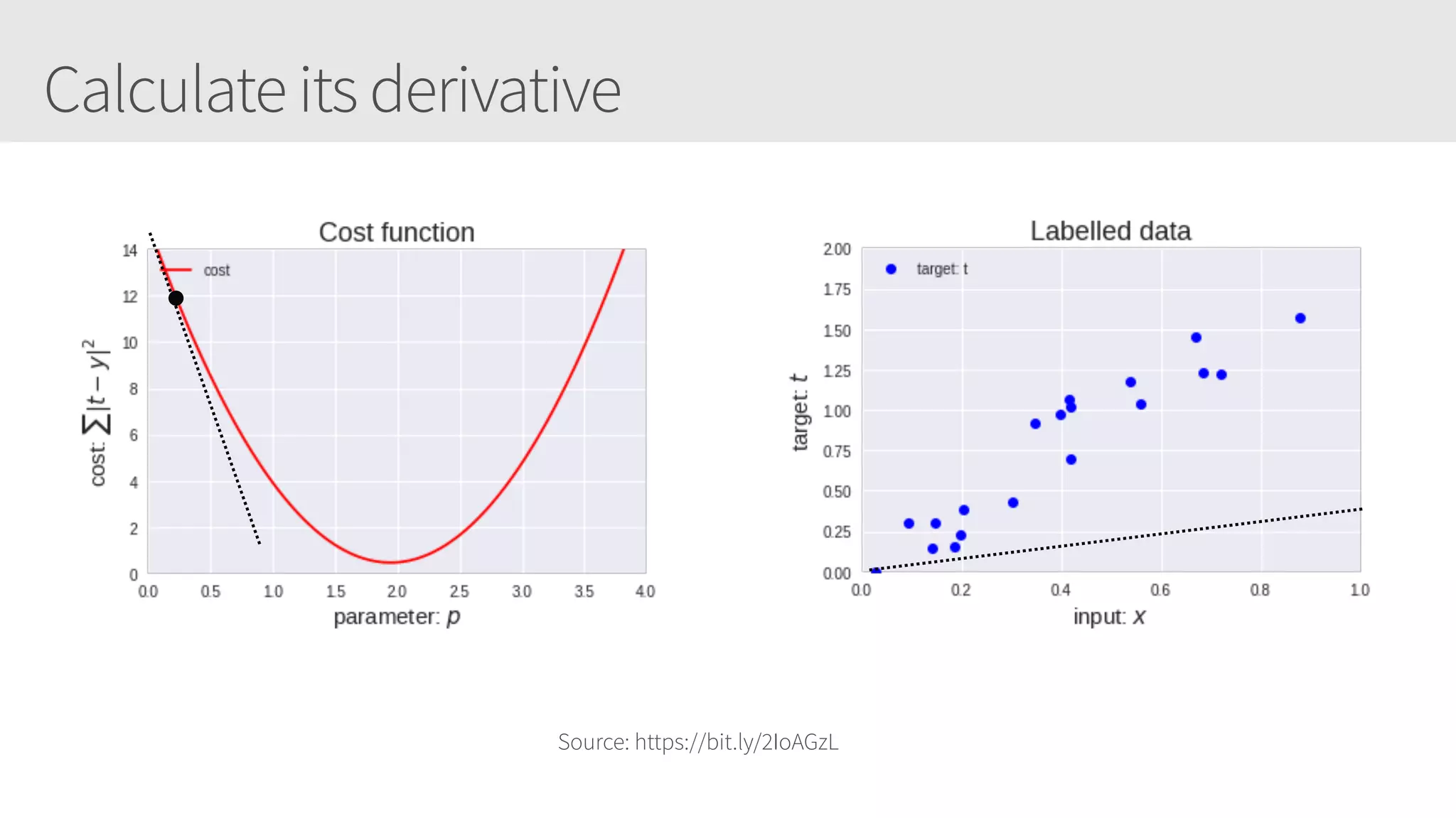

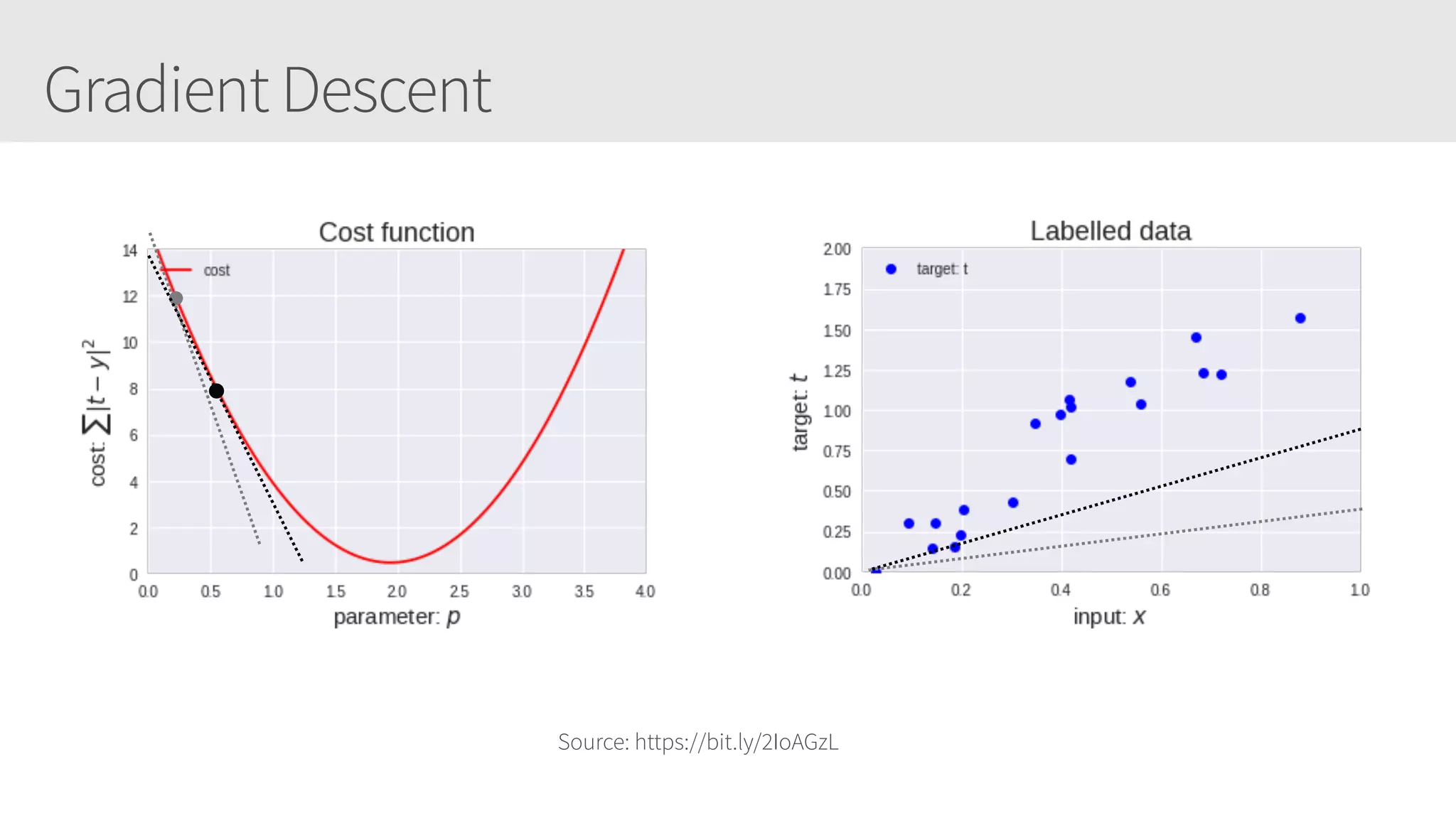

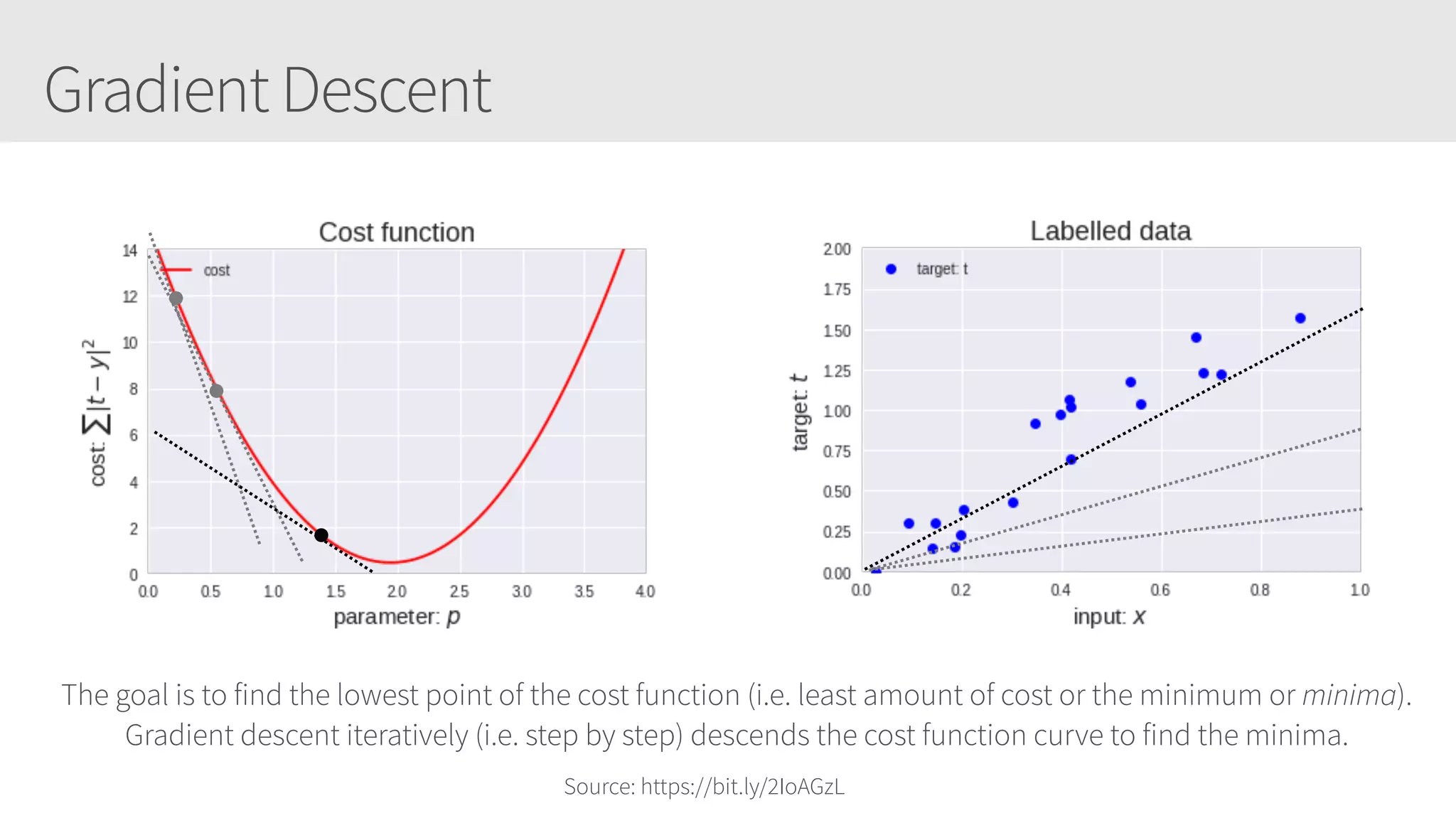

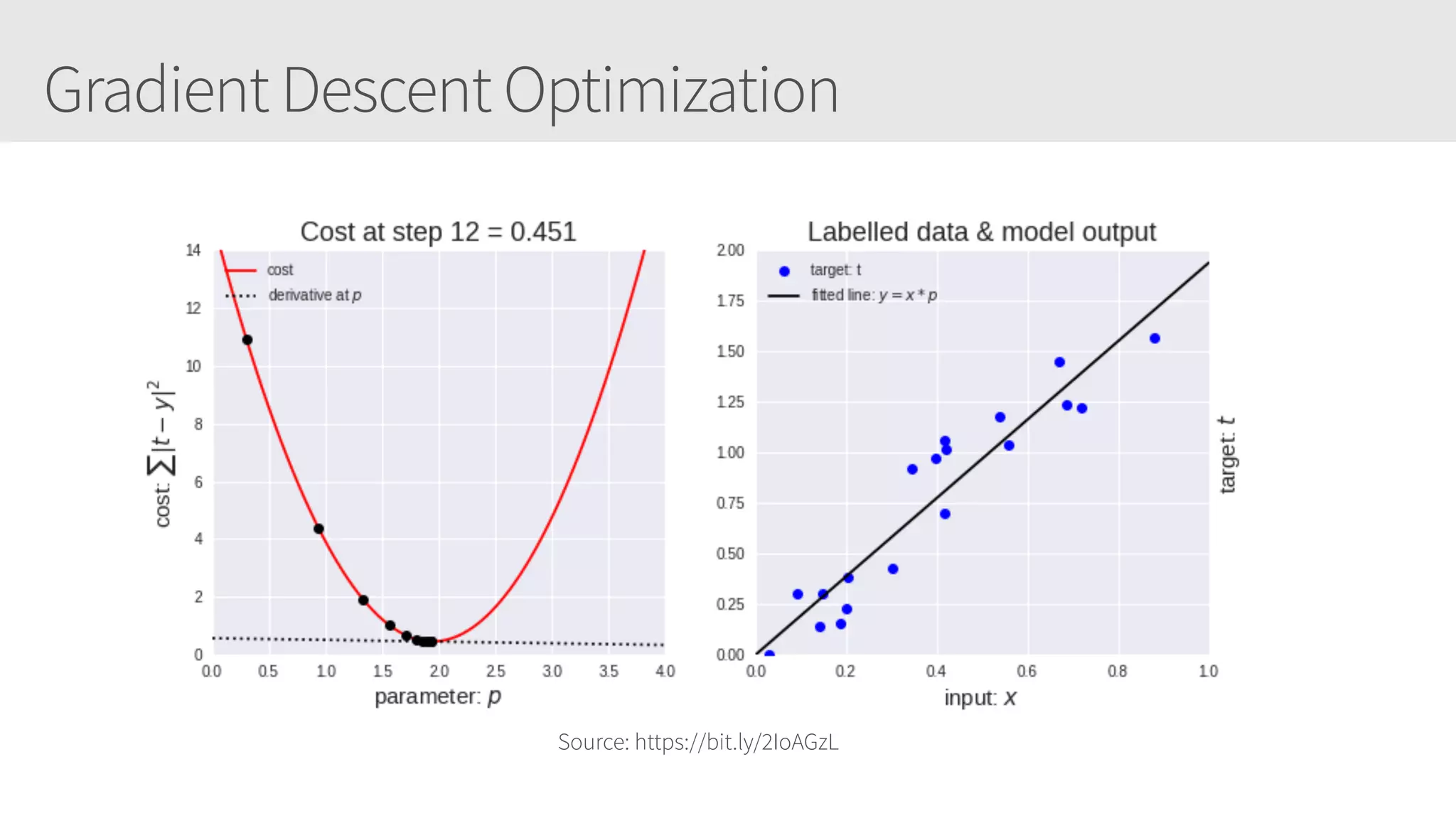

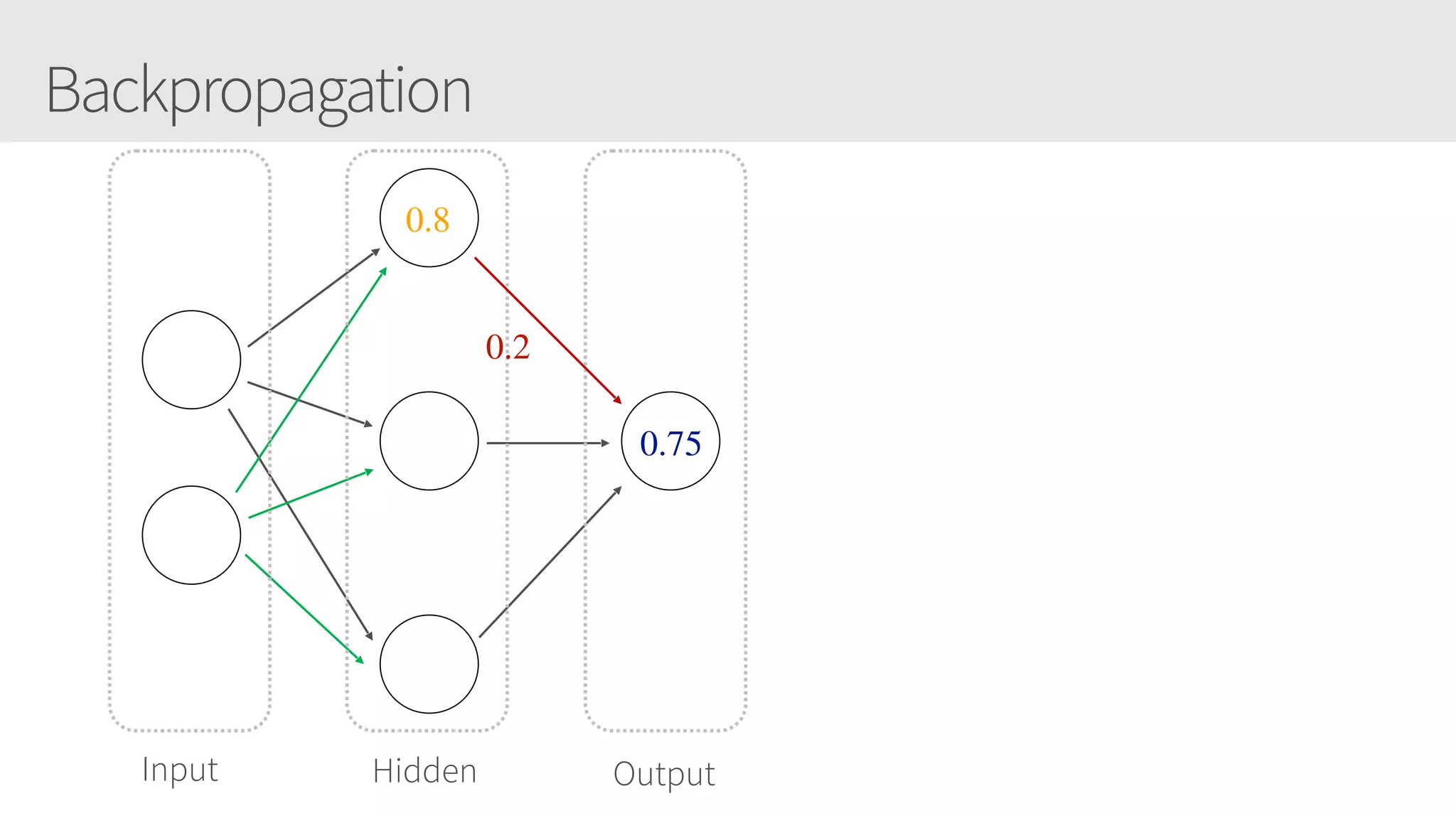

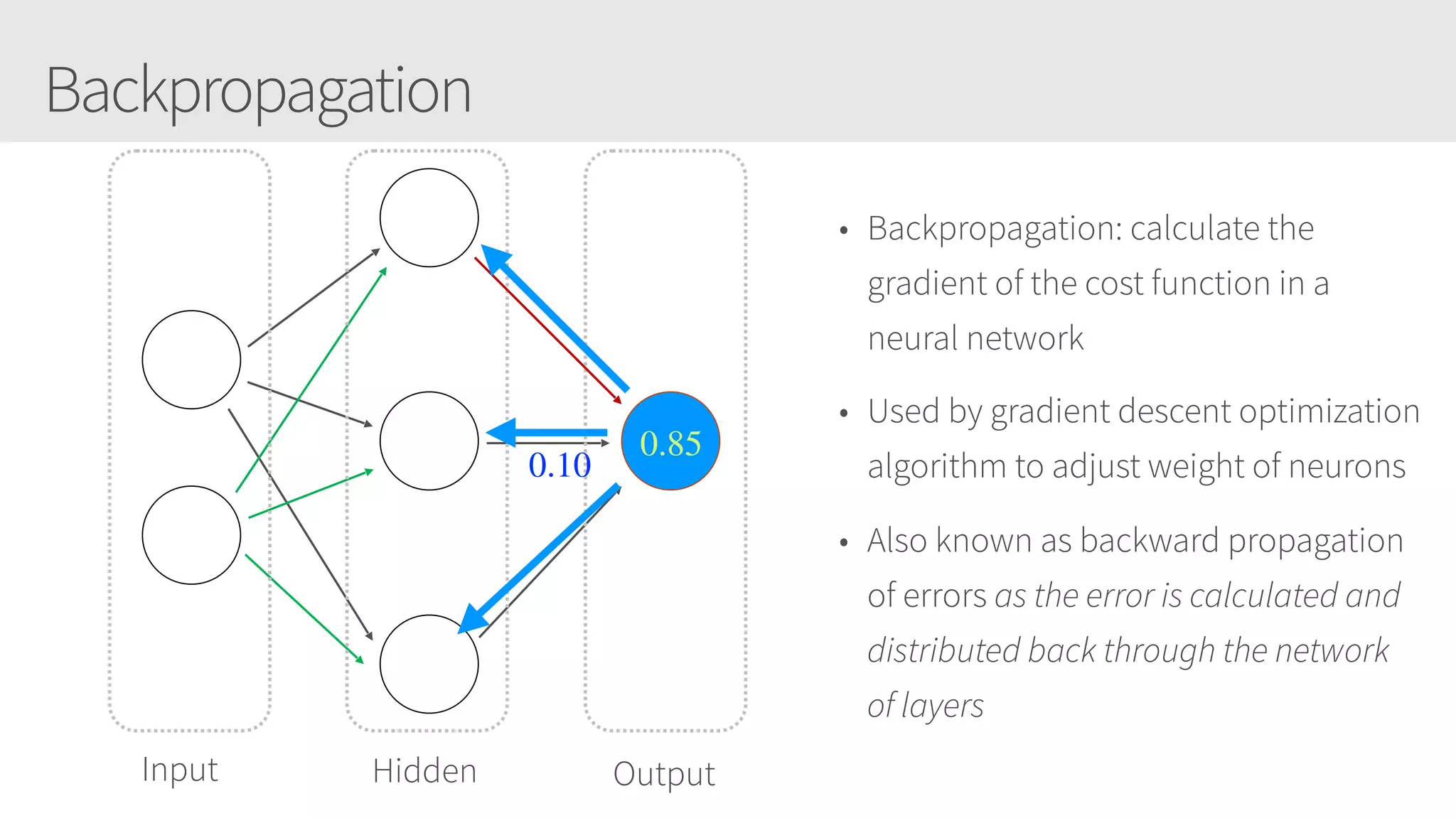

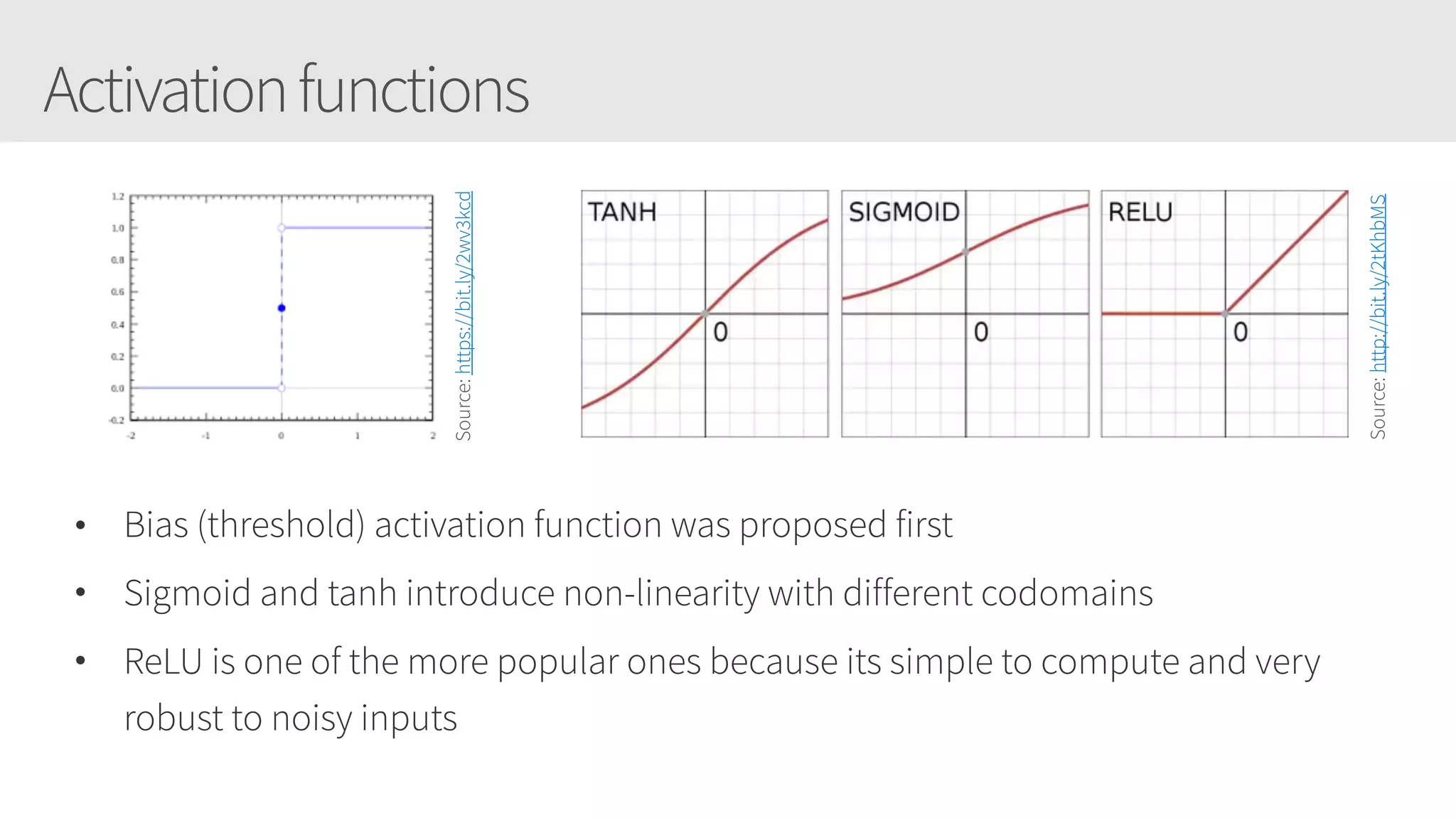

The document introduces a series on neural networks, focusing on deep learning fundamentals, including training and applying neural networks with Keras using TensorFlow. It outlines the structure and function of artificial neural networks compared to biological neurons, discussing concepts like activation functions, backpropagation, and optimization techniques. Upcoming sessions will cover topics such as convolutional neural networks and practical applications in various fields.

![SigmoidNeuron

𝑥1

𝑜𝑢𝑡𝑝𝑢𝑡?𝑥2

𝑥3

𝑤3

𝑤2

𝑤1

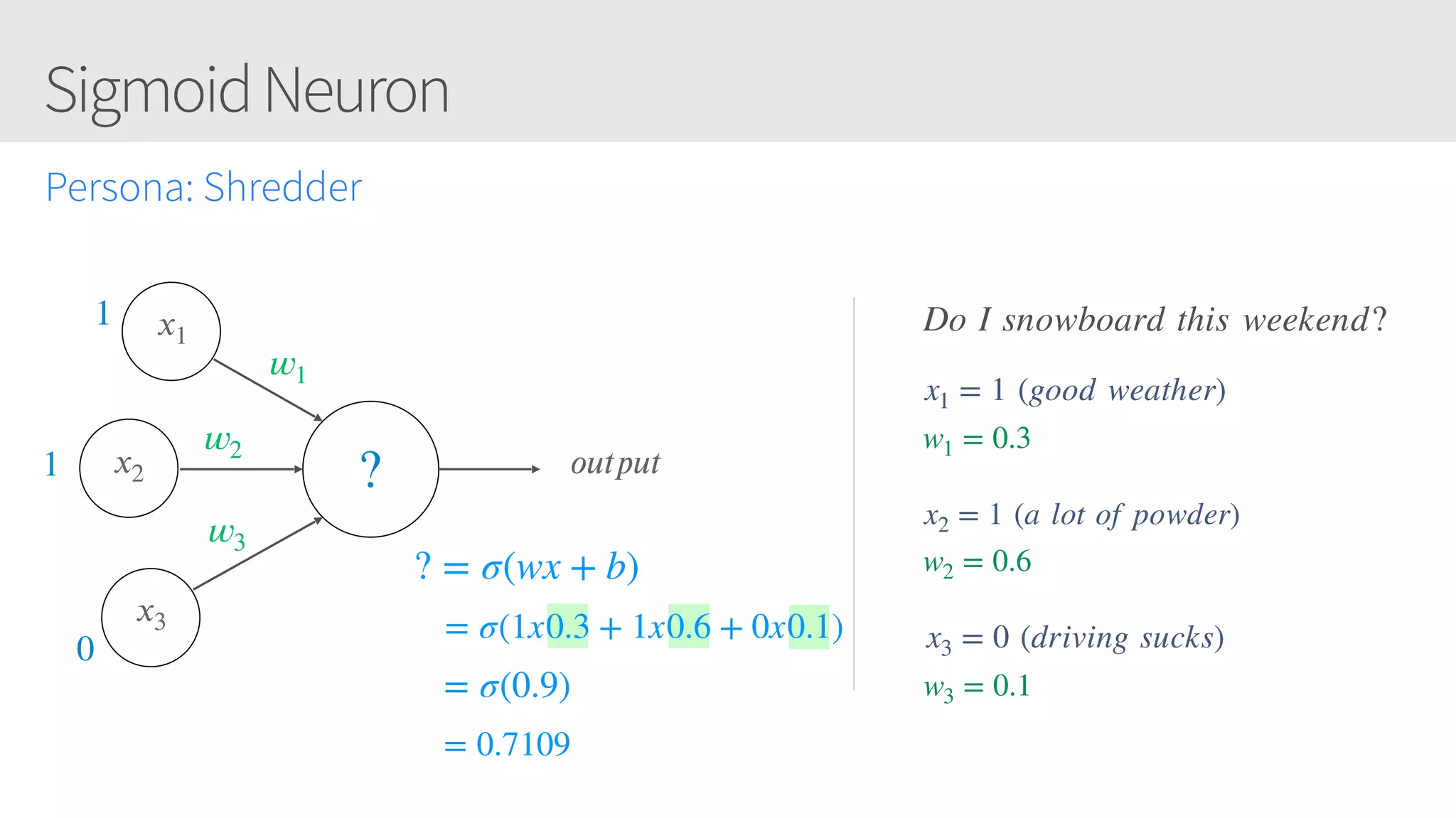

The more common artificial neuron

Instead of [0, 1], now (0…1)

Where output is defined by σ(wx + b)

σ(wx + b)](https://image.slidesharecdn.com/dlfs-introductiontoneuralnetworks-181106031340/75/Introduction-to-Neural-Networks-33-2048.jpg)
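As a minimal sketch of the neuron described above, the following computes σ(w·x + b) for three inputs using only the Python standard library. The function names `sigmoid` and `neuron_output`, and the example inputs, weights, and bias, are illustrative assumptions and do not come from the slides.

```python
import math


def sigmoid(z: float) -> float:
    """Logistic sigmoid: maps a real number z into the open interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron_output(x, w, b):
    """Output of a sigmoid neuron: sigmoid of the weighted sum w . x plus bias b."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return sigmoid(z)


# Hypothetical inputs x1..x3, weights w1..w3, and bias (illustrative values only).
x = [1.0, 0.0, 1.0]
w = [0.5, -0.25, 0.75]
b = -1.25

# Weighted sum: 0.5 + 0.0 + 0.75 - 1.25 = 0.0, so the output is sigmoid(0) = 0.5.
print(neuron_output(x, w, b))
```

Unlike a neuron with a hard threshold, a small change in a weight or the bias here produces a small change in the output, which is what makes gradient-based training workable.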

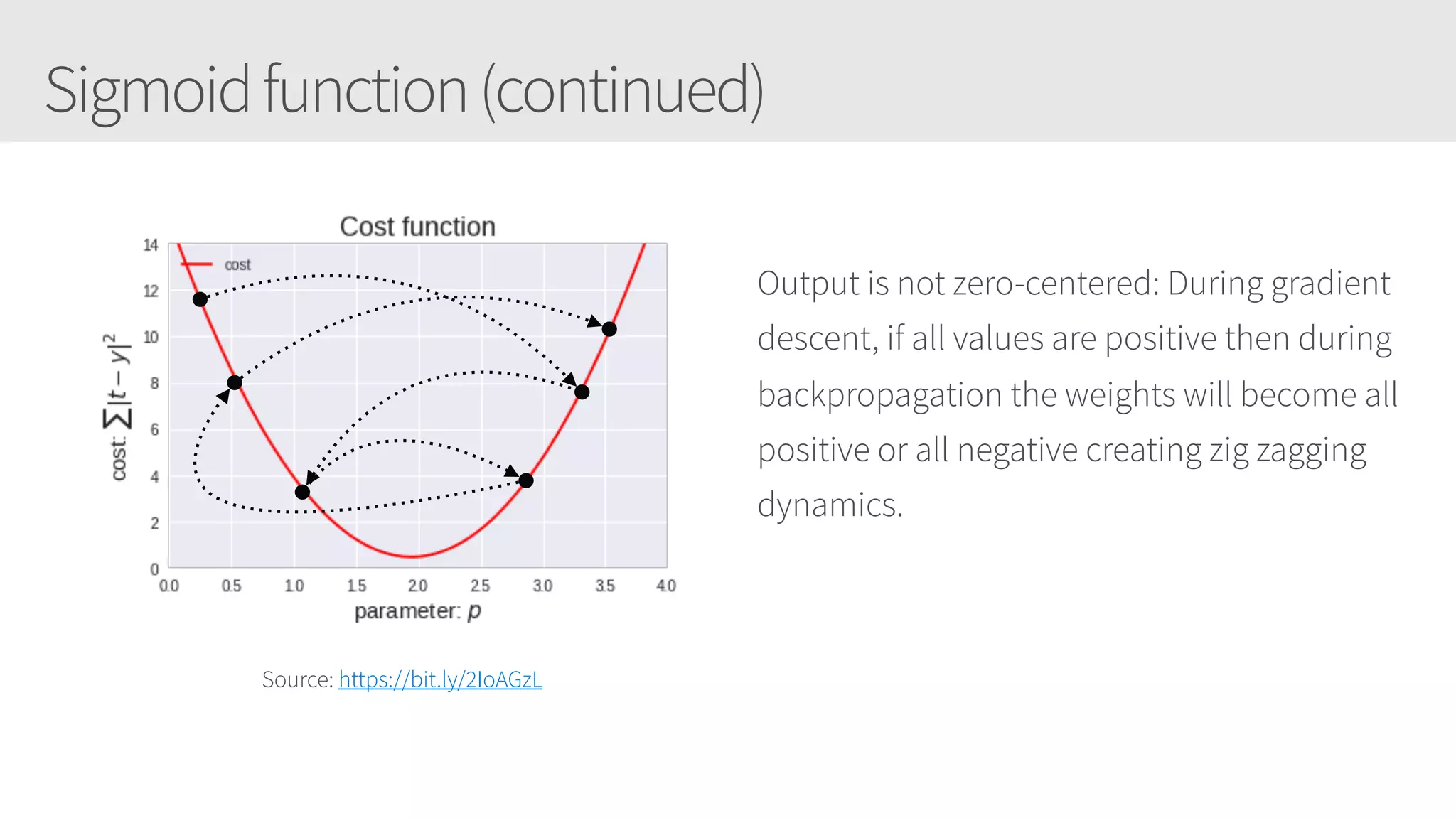

![Sigmoid function](https://image.slidesharecdn.com/dlfs-introductiontoneuralnetworks-181106031340/75/Introduction-to-Neural-Networks-50-2048.jpg)

Sigmoid function

• The sigmoid non-linearity squashes real numbers into the range between 0 and 1.

• Historically, it had a nice interpretation as a neuron's firing rate, from not firing at all (0) to fully saturated firing (1).

• Currently it is not used as much, because inputs of large magnitude push the output very close to 0 or 1, where the gradient is close to 0, which stalls backpropagation.

Source: http://bit.ly/2GgMbGW
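The saturation problem can be made concrete with the sigmoid's derivative, σ'(z) = σ(z)(1 − σ(z)). The sketch below, which assumes nothing beyond the standard library and uses the illustrative helper names `sigmoid` and `sigmoid_grad`, prints the gradient at a few sample inputs. It peaks at 0.25 for z = 0 and shrinks toward 0 as |z| grows.

```python
import math


def sigmoid(z: float) -> float:
    """Logistic sigmoid: maps a real number z into the open interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def sigmoid_grad(z: float) -> float:
    """Derivative of the sigmoid with respect to z: s * (1 - s), where s = sigmoid(z)."""
    s = sigmoid(z)
    return s * (1.0 - s)


# Sample inputs chosen for illustration; the gradient is largest at z = 0
# and becomes very small once the output saturates near 0 or 1.
for z in (-10.0, -2.0, 0.0, 2.0, 10.0):
    print(f"z={z:6.1f}  sigmoid={sigmoid(z):.6f}  gradient={sigmoid_grad(z):.6f}")
```

During backpropagation these local gradients are multiplied along the chain of layers, so several saturated units in a row can shrink the signal reaching earlier layers to almost nothing.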

![Tanh function](https://image.slidesharecdn.com/dlfs-introductiontoneuralnetworks-181106031340/75/Introduction-to-Neural-Networks-52-2048.jpg)

Tanh function

• The tanh function squashes real numbers into the range between -1 and 1.

• It has the same problem as the sigmoid: its activations saturate, which kills gradients.

• But its output is zero-centered, which reduces the zig-zagging dynamics during gradient descent.

• In practice, tanh is currently preferred over the sigmoid non-linearity.

Source: http://bit.ly/2C4y89z
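To illustrate both points about tanh, the following sketch compares it with the sigmoid on a small set of inputs that is symmetric around zero. The sample inputs and the helper names `sigmoid` and `tanh_grad` are assumptions made for illustration. It uses the identity tanh'(z) = 1 − tanh²(z) for the gradient.

```python
import math


def sigmoid(z: float) -> float:
    """Logistic sigmoid: maps a real number z into the open interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def tanh_grad(z: float) -> float:
    """Derivative of tanh with respect to z: 1 - tanh(z)^2."""
    return 1.0 - math.tanh(z) ** 2


# Illustrative inputs, symmetric around zero.
zs = [-3.0, -1.0, 0.0, 1.0, 3.0]

# Zero-centering: tanh outputs average out to about 0 on symmetric inputs,
# while sigmoid outputs are all positive and average out to about 0.5.
tanh_mean = sum(math.tanh(z) for z in zs) / len(zs)
sigmoid_mean = sum(sigmoid(z) for z in zs) / len(zs)
print(f"mean tanh output:    {tanh_mean:.6f}")
print(f"mean sigmoid output: {sigmoid_mean:.6f}")

# Saturation: the tanh gradient is 1 at z = 0 but close to 0 for large |z|.
for z in (0.0, 2.0, 5.0):
    print(f"z={z:4.1f}  tanh={math.tanh(z):.6f}  gradient={tanh_grad(z):.6f}")
```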