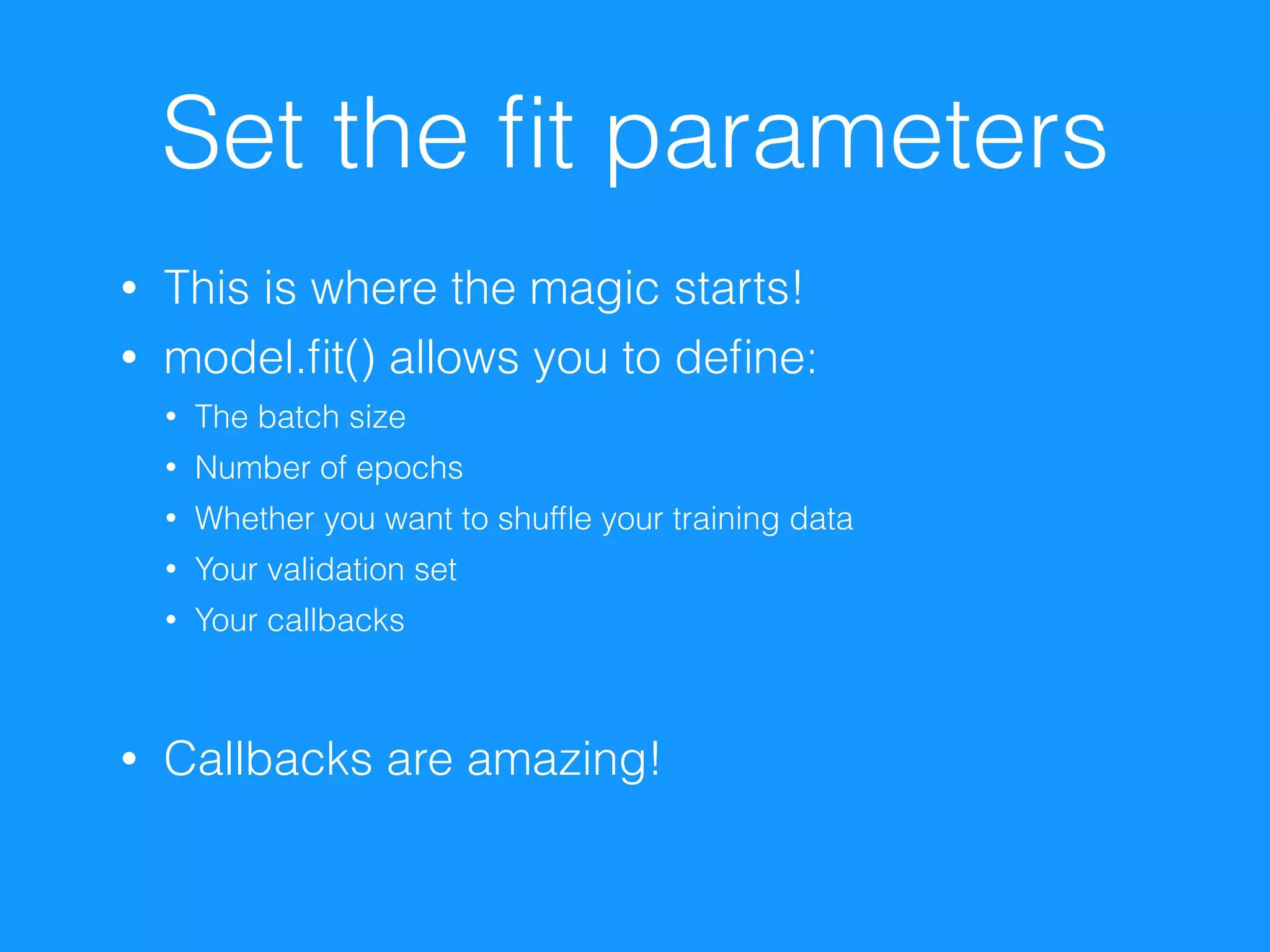

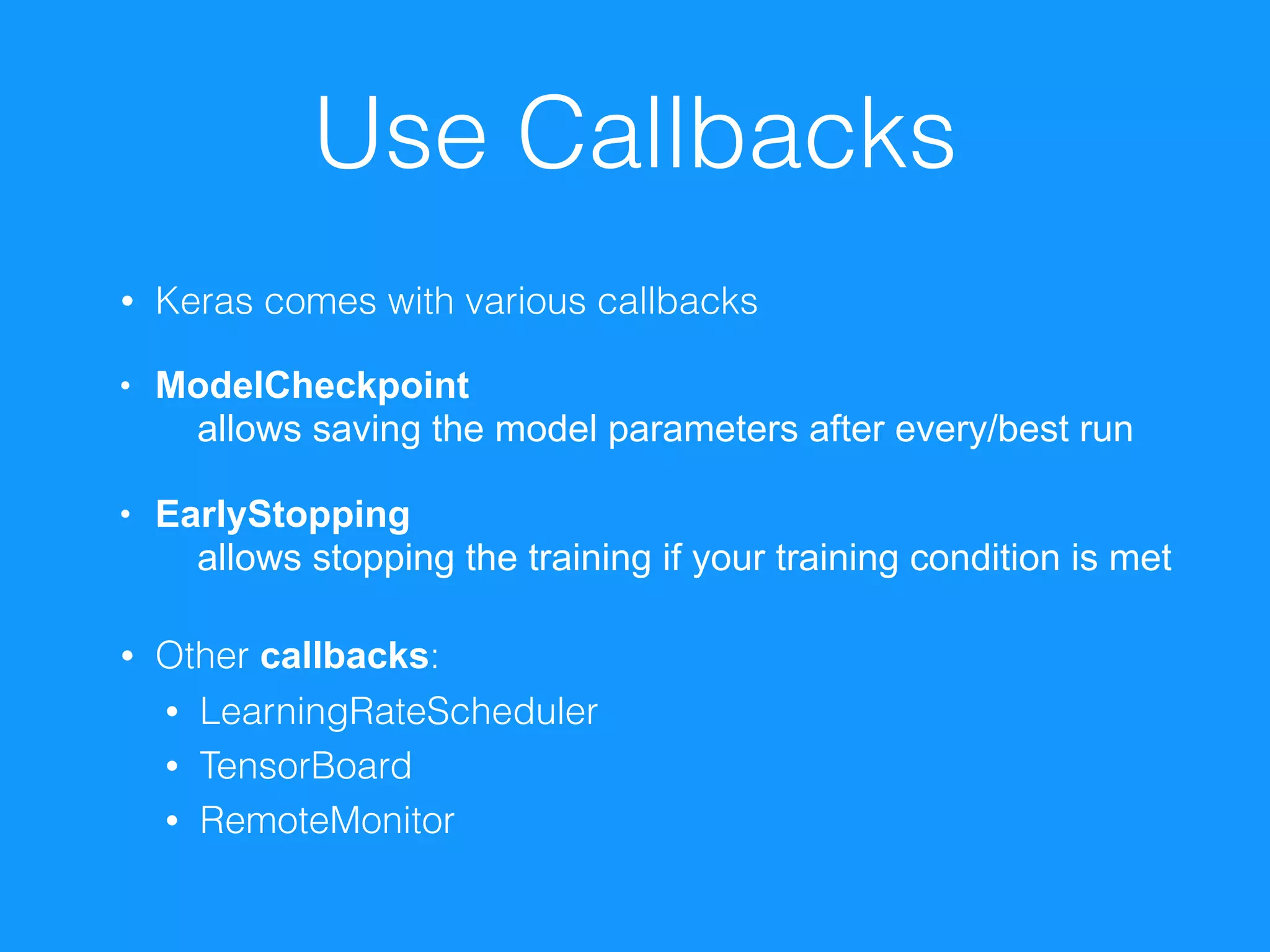

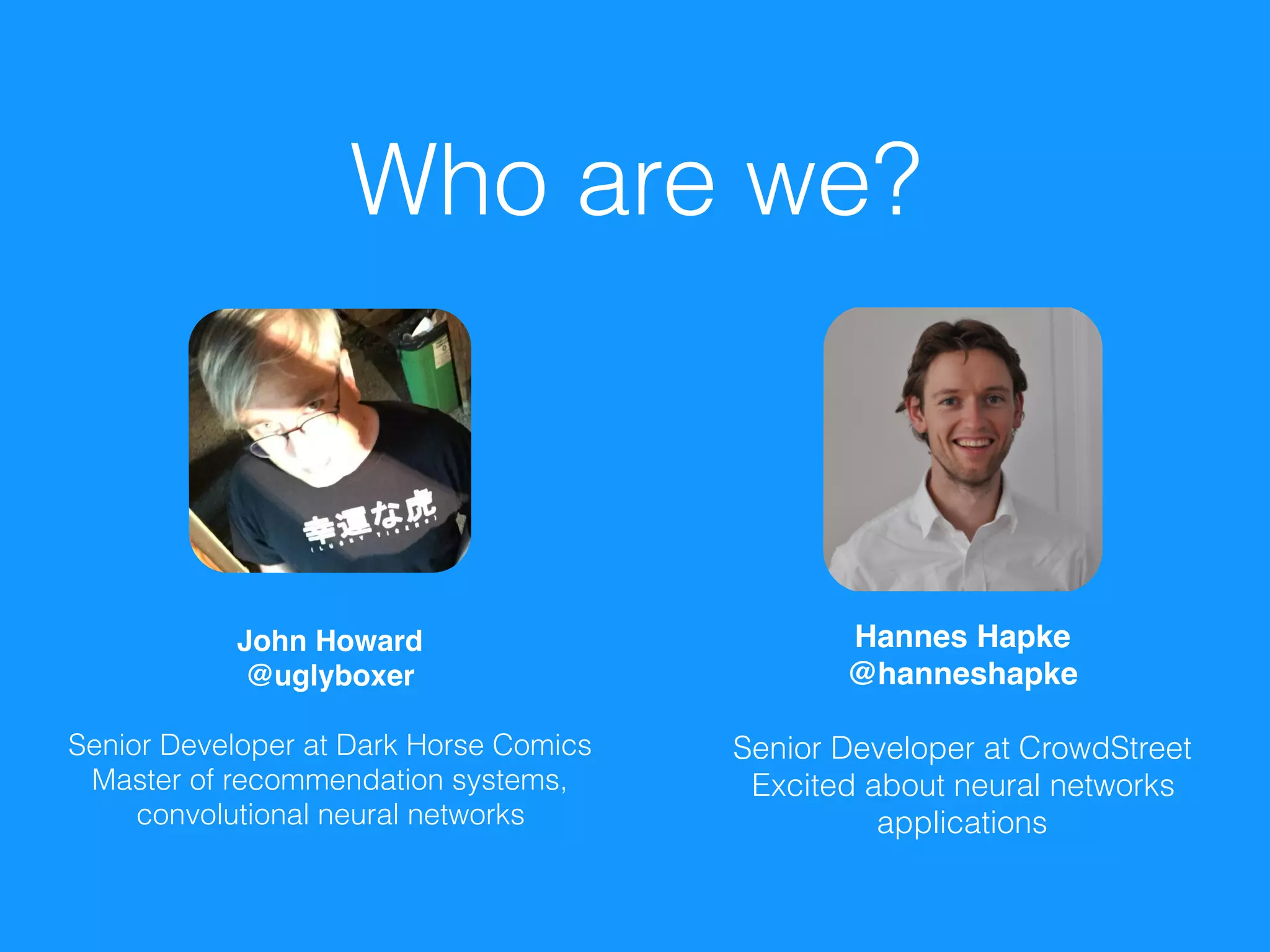

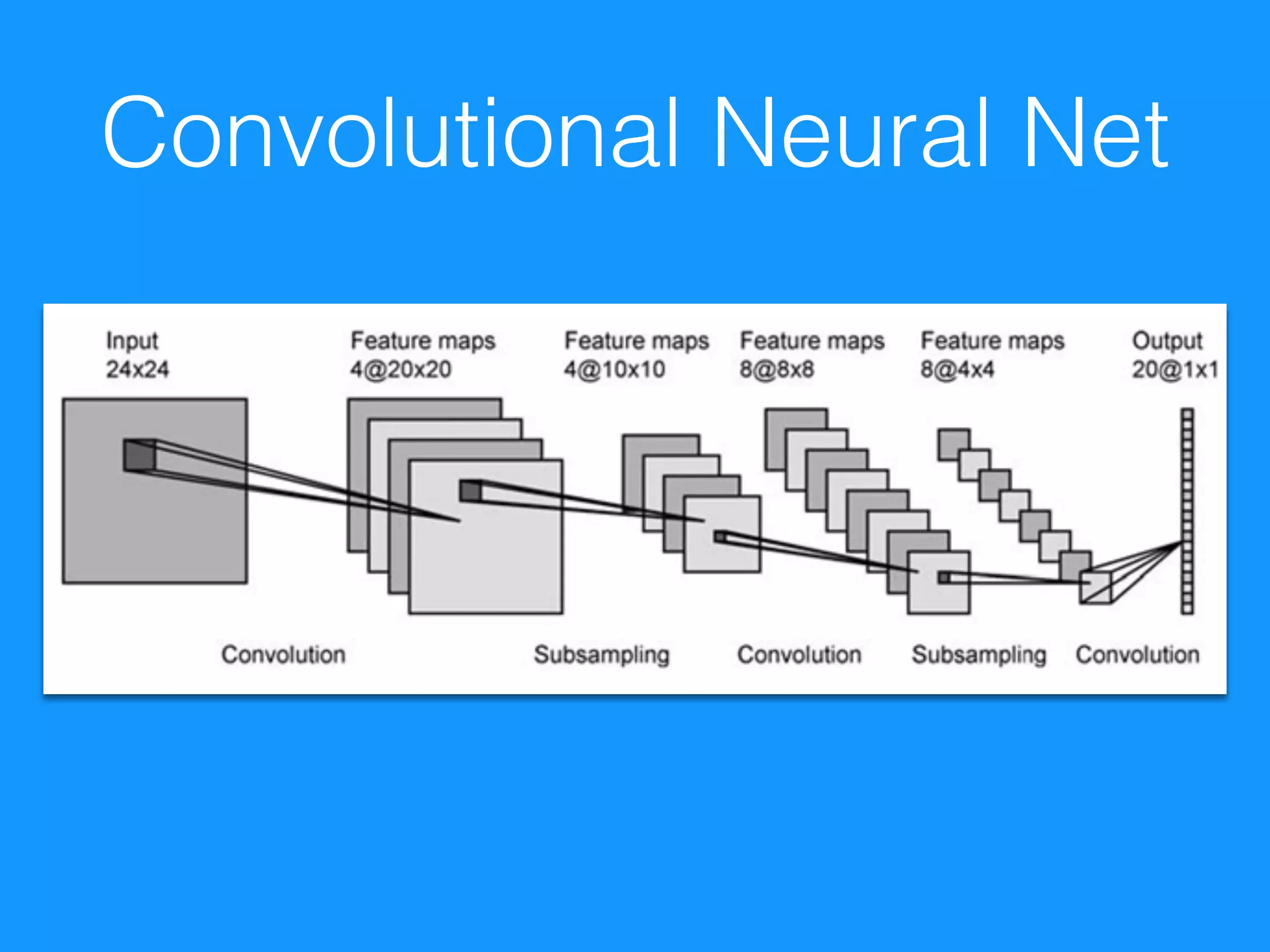

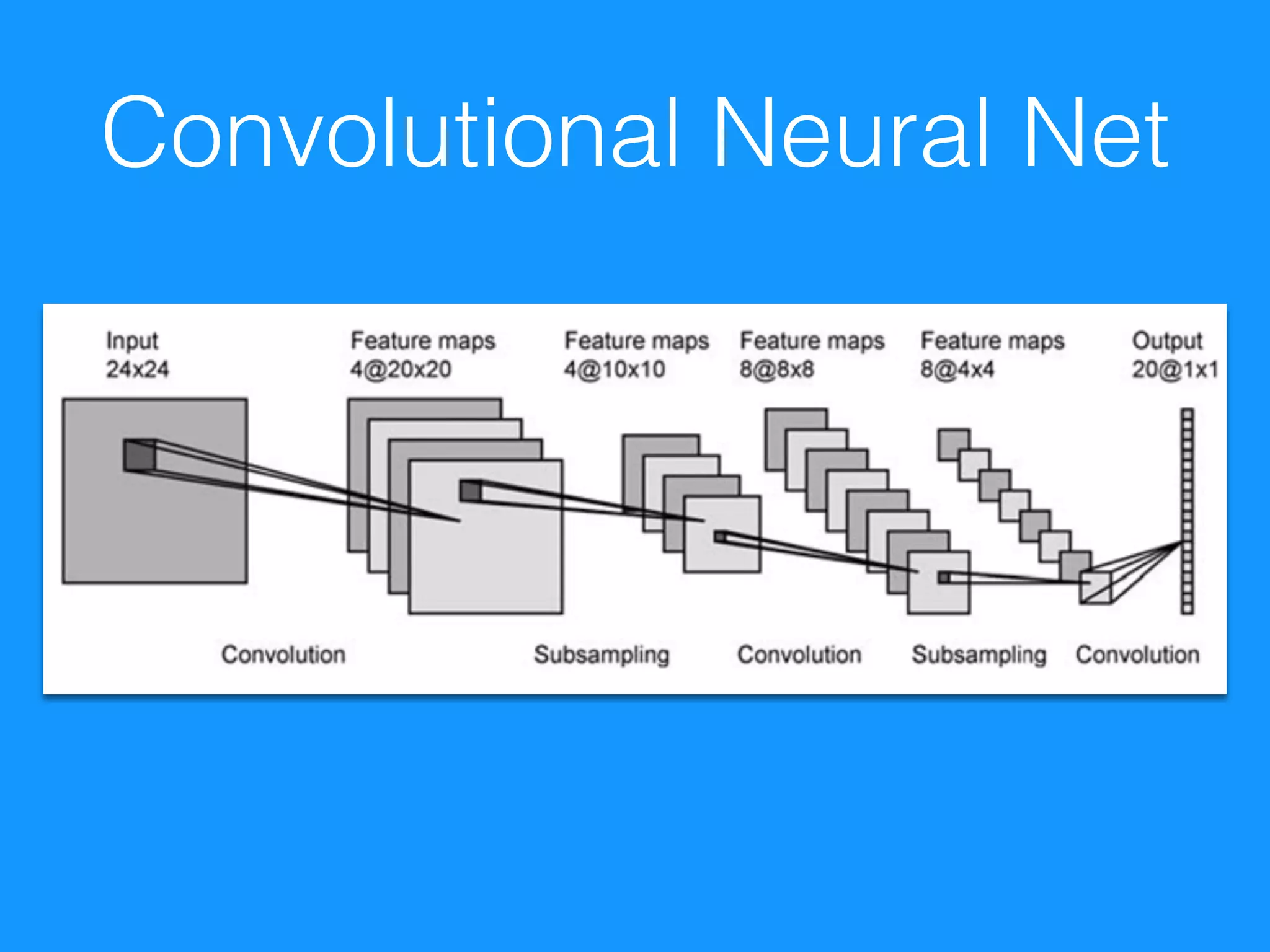

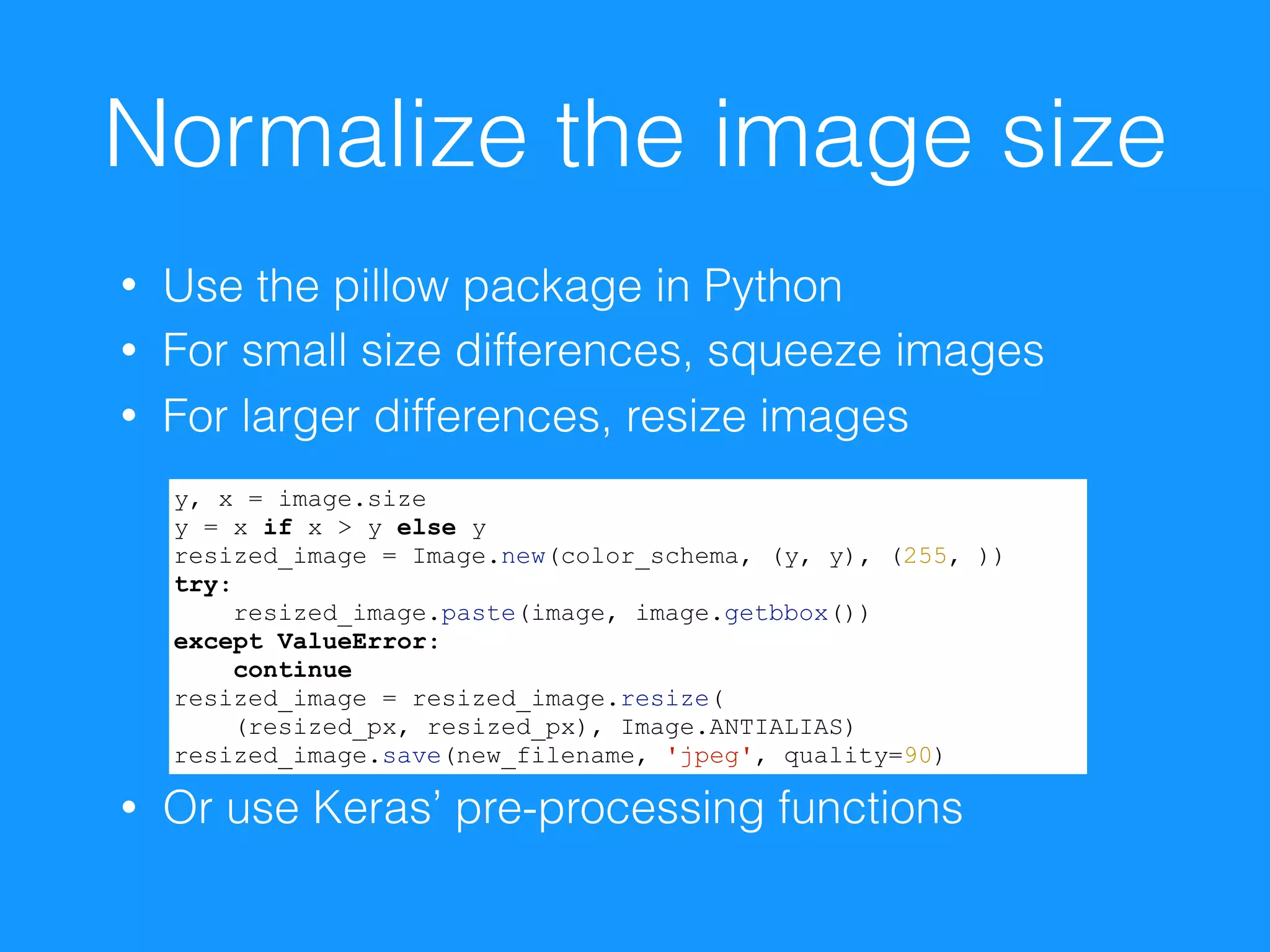

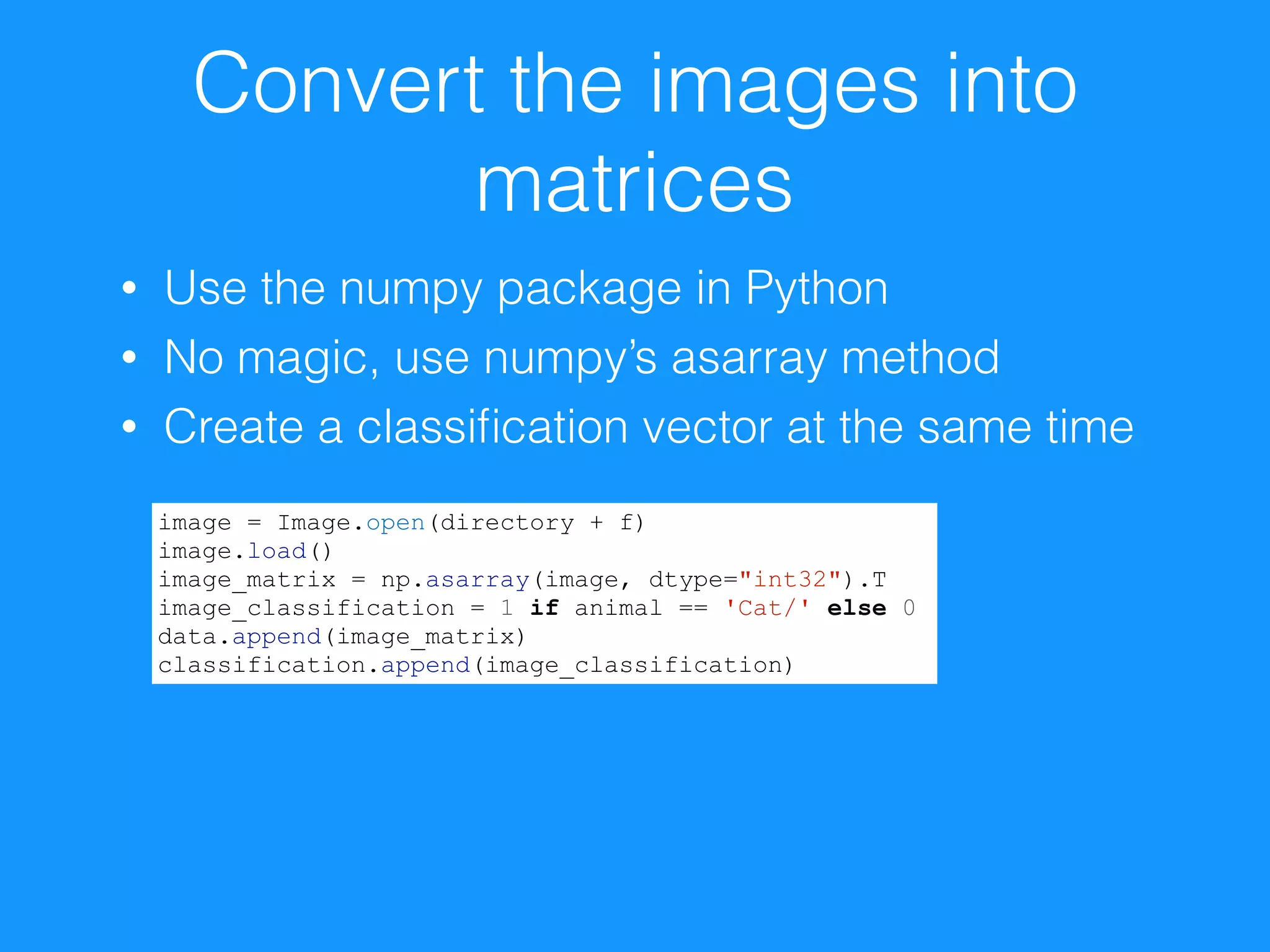

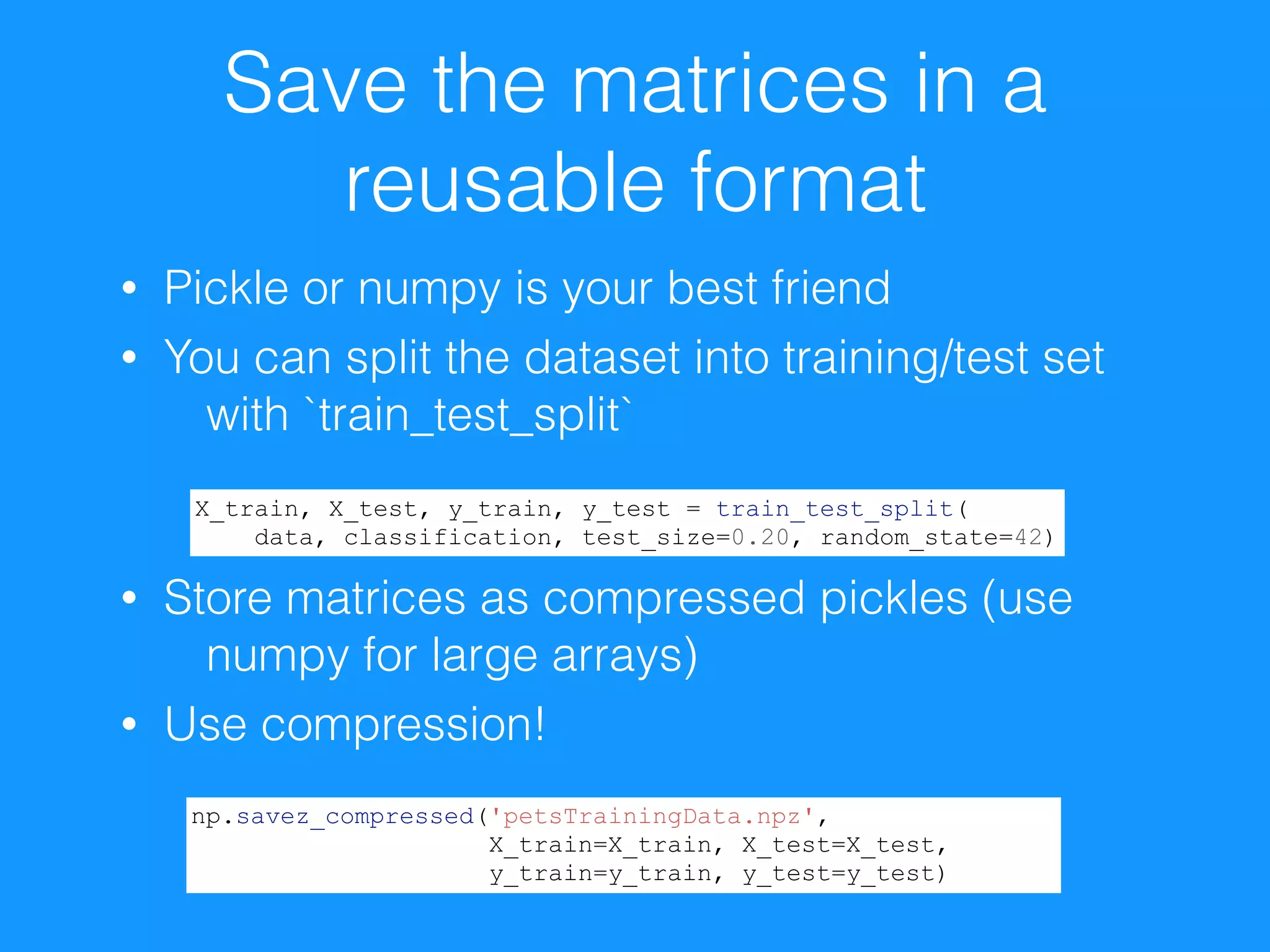

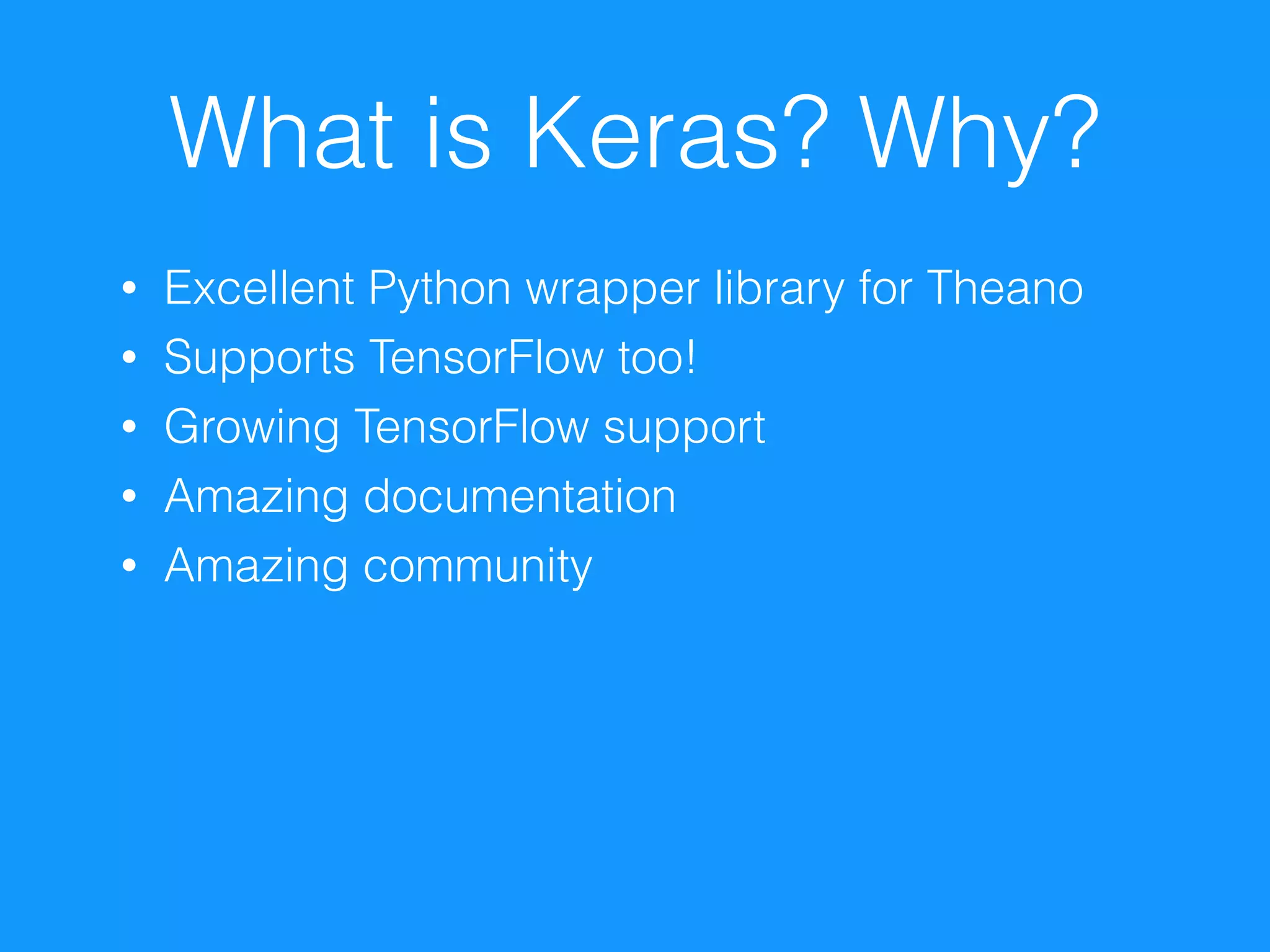

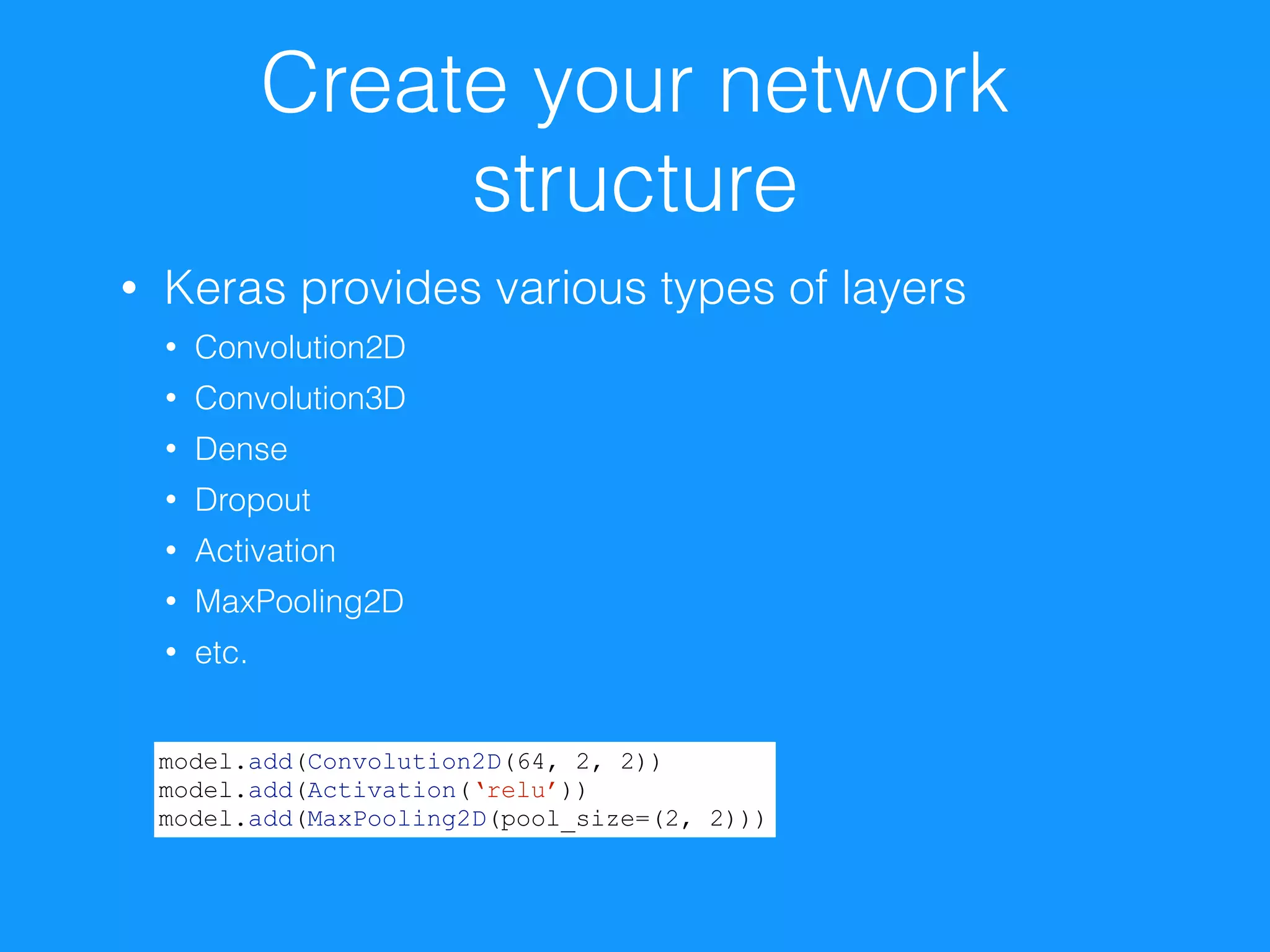

This document introduces machine learning with convolutional neural networks (CNNs) for image classification. It covers preparing image data, building and training a simple CNN with Keras, and speeding up training with GPUs. Data preparation means normalizing image sizes, converting images to matrices, and saving the data in a reusable format. Building the model means assembling a CNN in Keras: adding layers, compiling the model, and fitting it. The document also points to resources for learning more about CNNs and about deep learning frameworks such as Keras and TensorFlow.
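The data-preparation steps listed above (normalizing image sizes, converting images to matrices, saving the result) can be sketched in plain Python. This is an illustration under stated assumptions, not the deck's own code: images are taken to be grayscale lists of 0 to 255 intensities, resizing uses nearest-neighbor sampling, the 4x4 target size is arbitrary, and JSON stands in for whatever storage format you actually use (NumPy arrays or HDF5 files are more typical for Keras). The helper names `resize_nearest`, `to_matrix`, `prepare`, and `save_as_json` are hypothetical.

```python
import json
from typing import List

# Assumption for this sketch: a grayscale image is a list of rows of 0..255 intensities
Image = List[List[int]]
Matrix = List[List[float]]


def resize_nearest(image: Image, height: int, width: int) -> Image:
    """Normalize the image size to height x width by nearest-neighbor sampling."""
    src_h, src_w = len(image), len(image[0])
    return [
        [image[r * src_h // height][c * src_w // width] for c in range(width)]
        for r in range(height)
    ]


def to_matrix(image: Image) -> Matrix:
    """Convert pixel intensities into a float matrix scaled to [0, 1]."""
    return [[pixel / 255.0 for pixel in row] for row in image]


def prepare(images: List[Image], height: int = 4, width: int = 4) -> List[Matrix]:
    """Give every image the same shape, then convert it to a matrix."""
    return [to_matrix(resize_nearest(img, height, width)) for img in images]


def save_as_json(matrices: List[Matrix], labels: List[int]) -> str:
    """Serialize inputs and labels together (JSON stands in for a real format)."""
    return json.dumps({"x": matrices, "y": labels})
```

Nearest-neighbor sampling is used only because it fits in one expression; image libraries offer smoother interpolation when resizing photos.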

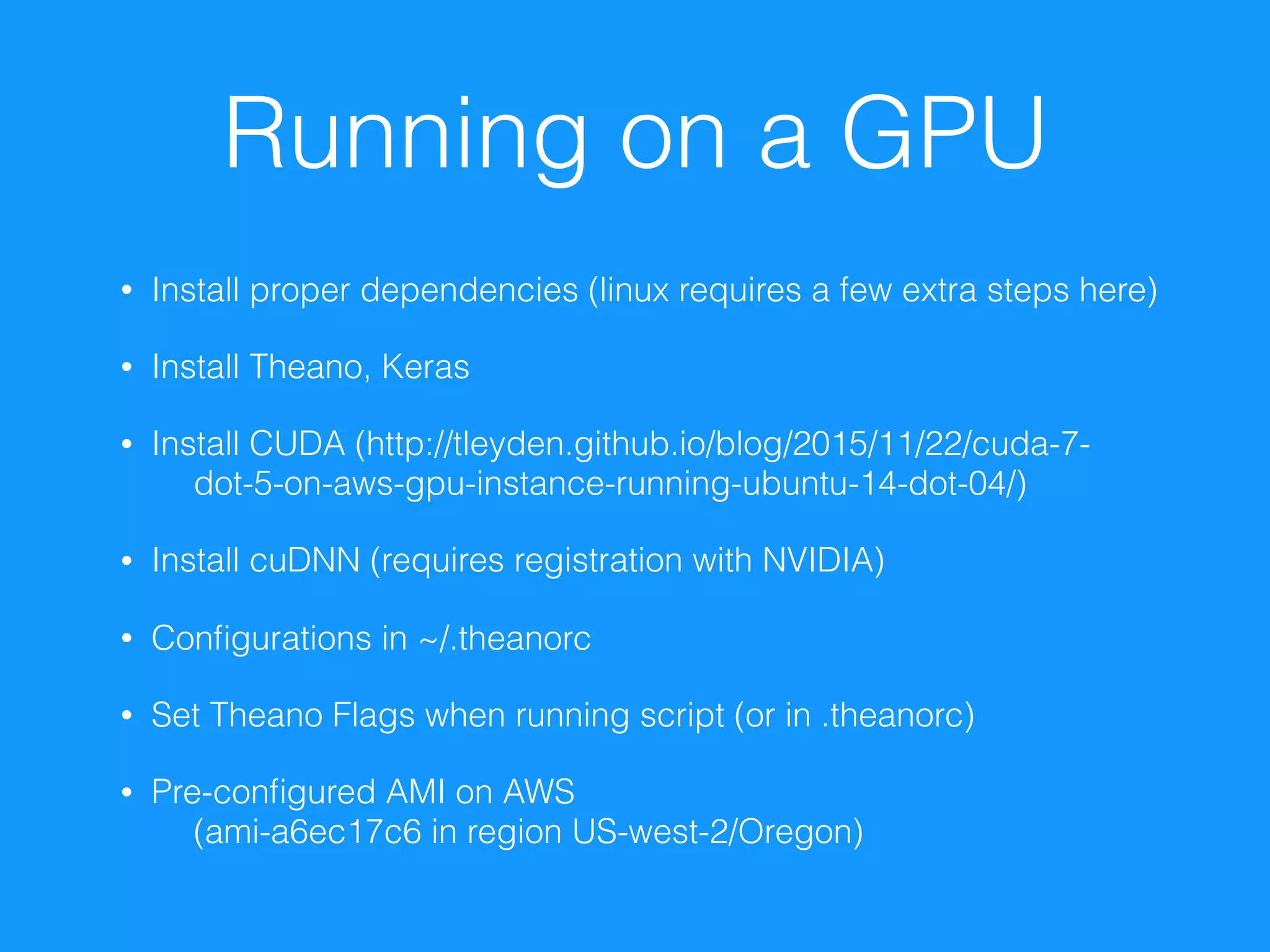

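![Theano](https://image.slidesharecdn.com/opensourcebridge-machinelearning101-160624030201/75/Introduction-to-Convolutional-Neural-Networks-13-2048.jpg)

**Theano**

- Developed at the University of Montreal
- A framework for symbolic computation
- Provides GPU support
- Python libraries built on Theano include Lasagne and PyLearn2, and Keras can use it as a backend

```python
import numpy
import theano.tensor as T
from theano import function

# Symbolic double-precision matrices: placeholders with no data yet
x = T.dmatrix('x')
y = T.dmatrix('y')
z = x + y  # builds an expression graph; nothing is computed here
f = function([x, y], z)  # compiles the graph into a callable

print(f(numpy.ones((2, 2)), numpy.ones((2, 2))))  # a 2x2 matrix of 2.0
```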
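In Theano, "symbolic computation" means you first describe a computation as a graph of variables and operations, then compile that graph into a callable; nothing is evaluated when `z = x + y` runs. The toy below imitates that two-phase workflow in plain Python so it runs without Theano installed. `Var`, `Add`, and `compile_function` are hypothetical names chosen for illustration. Real Theano also optimizes the graph and can generate GPU code, which this sketch does not attempt.

```python
class Node:
    """A node in a symbolic expression graph (a toy stand-in for Theano variables)."""

    def __add__(self, other):
        # Adding nodes builds a new graph node instead of computing a value
        return Add(self, other)

    def evaluate(self, env):
        raise NotImplementedError


class Var(Node):
    """A named input placeholder; its value is supplied only at call time."""

    def __init__(self, name):
        self.name = name

    def evaluate(self, env):
        return env[self]


class Add(Node):
    """Element-wise addition of two matrix-valued nodes."""

    def __init__(self, left, right):
        self.left, self.right = left, right

    def evaluate(self, env):
        a, b = self.left.evaluate(env), self.right.evaluate(env)
        return [[p + q for p, q in zip(row_a, row_b)] for row_a, row_b in zip(a, b)]


def compile_function(inputs, output):
    """'Compile' a graph: return a callable that binds arguments to the inputs."""
    def run(*args):
        return output.evaluate(dict(zip(inputs, args)))
    return run


x, y = Var("x"), Var("y")
z = x + y                      # only a graph: Add(x, y)
f = compile_function([x, y], z)
print(f([[1, 2]], [[3, 4]]))   # [[4, 6]]
```

Separating graph construction from evaluation is what lets a framework like Theano inspect and rewrite the whole computation before running it.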

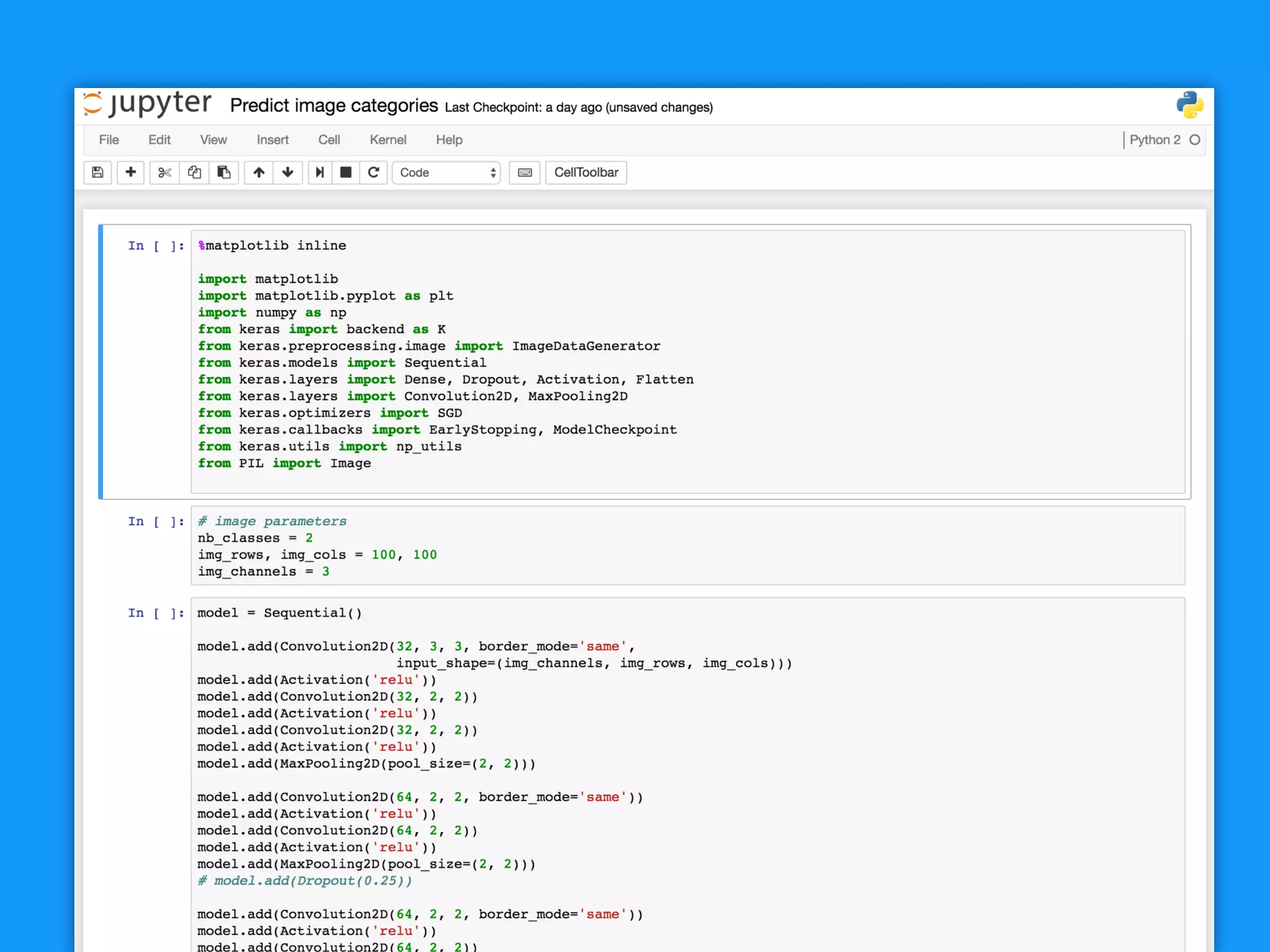

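![Set the “compile” parameters](https://image.slidesharecdn.com/opensourcebridge-machinelearning101-160624030201/75/Introduction-to-Convolutional-Neural-Networks-24-2048.jpg)

**Set the `compile` parameters**

- Keras provides several optimizers for training your network, including:
  - SGD
  - Adagrad
  - Adadelta
- Set the learning rate, momentum, and similar optimizer settings.
- Define your loss function and metrics.

```python
from keras.optimizers import SGD
from keras.optimizers.schedules import InverseTimeDecay

# Current spelling of the slide's SGD(lr=0.01, decay=1e-6, ...): lr / (1 + 1e-6 * step)
sgd = SGD(learning_rate=InverseTimeDecay(0.01, decay_steps=1, decay_rate=1e-6),
          momentum=0.9, nesterov=True)

# `model` is the CNN assembled in the earlier steps
model.compile(loss='categorical_crossentropy',
              optimizer=sgd, metrics=['accuracy'])
```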
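Each argument passed to `compile` corresponds to a small computation. The plain-Python sketch below spells them out: an SGD update with momentum, optional Nesterov look-ahead, and time-based decay `lr / (1 + decay * iterations)`, modeled on the older Keras `SGD` optimizer that the slide's `lr` and `decay` arguments belong to; categorical cross-entropy for one-hot labels; and accuracy as the fraction of samples whose highest-scoring class matches the label. `SGDSketch`, `categorical_crossentropy`, and `accuracy` here are hypothetical stand-ins written for illustration, not Keras's own implementations.

```python
import math


class SGDSketch:
    """SGD with momentum, optional Nesterov look-ahead, and time-based decay."""

    def __init__(self, lr=0.01, decay=1e-6, momentum=0.9, nesterov=True):
        self.lr, self.decay = lr, decay
        self.momentum, self.nesterov = momentum, nesterov
        self.iterations = 0
        self.velocity = []

    def step(self, params, grads):
        # Time-based decay: the learning rate shrinks as updates accumulate
        lr = self.lr / (1.0 + self.decay * self.iterations)
        if not self.velocity:
            self.velocity = [0.0] * len(params)
        new_params = []
        for i, (p, g) in enumerate(zip(params, grads)):
            v = self.momentum * self.velocity[i] - lr * g
            self.velocity[i] = v
            if self.nesterov:
                # Look ahead along the freshly updated velocity
                new_params.append(p + self.momentum * v - lr * g)
            else:
                new_params.append(p + v)
        self.iterations += 1
        return new_params


def categorical_crossentropy(y_true, y_pred, eps=1e-7):
    """Mean over samples of -sum(t * log(p)), with p clipped away from 0 and 1."""
    total = 0.0
    for t_row, p_row in zip(y_true, y_pred):
        total -= sum(t * math.log(min(max(p, eps), 1 - eps)) for t, p in zip(t_row, p_row))
    return total / len(y_true)


def accuracy(y_true, y_pred):
    """Fraction of samples whose argmax prediction equals the argmax label."""
    def argmax(row):
        return max(range(len(row)), key=row.__getitem__)
    hits = sum(argmax(t) == argmax(p) for t, p in zip(y_true, y_pred))
    return hits / len(y_true)
```

For example, one Nesterov step from `w = 1.0` on `f(w) = w**2` (gradient `2 * w`) with the slide's settings gives `w ≈ 0.962`: the velocity becomes `-0.02`, and the update adds `0.9 * -0.02 - 0.01 * 2`.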