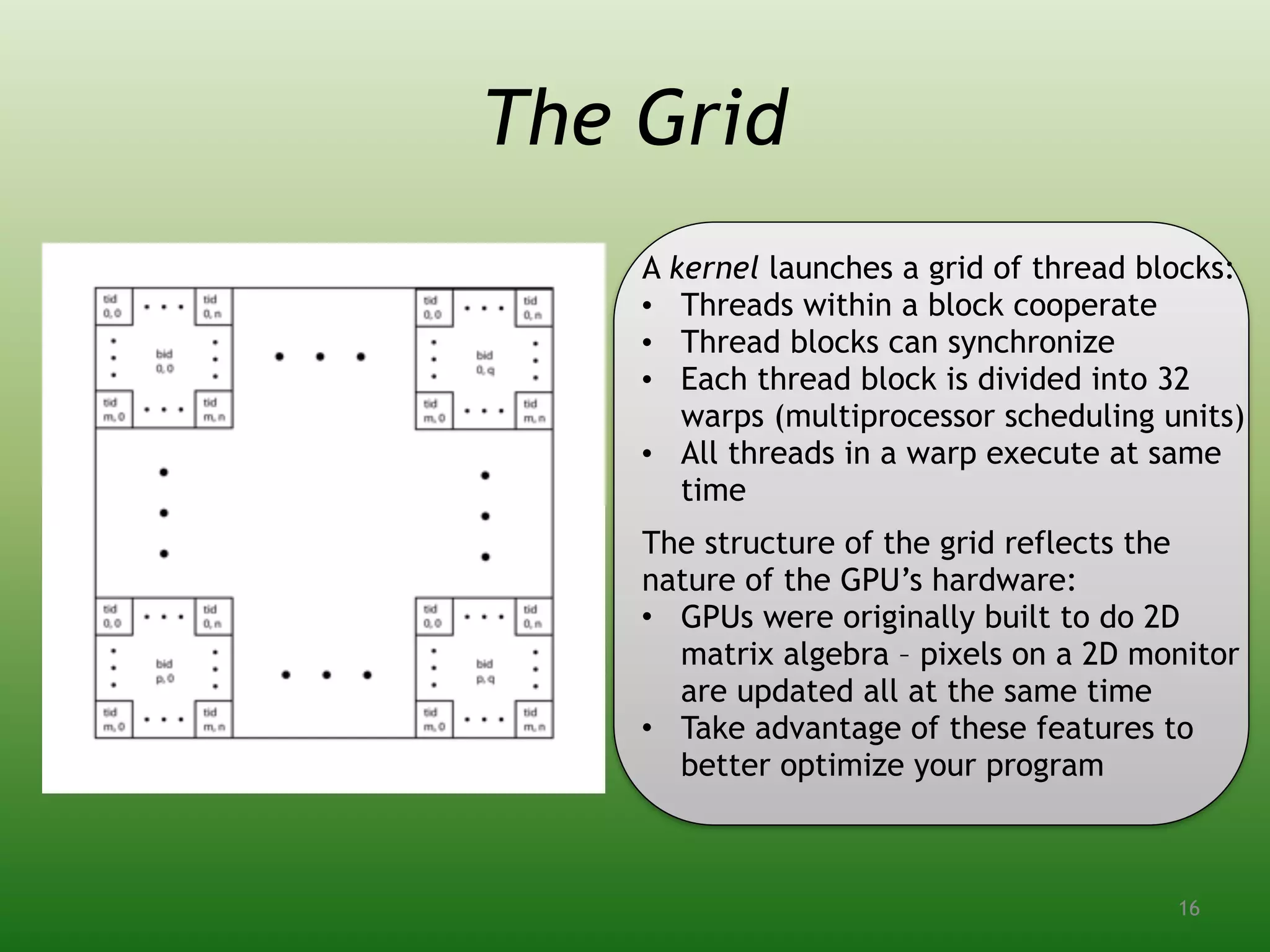

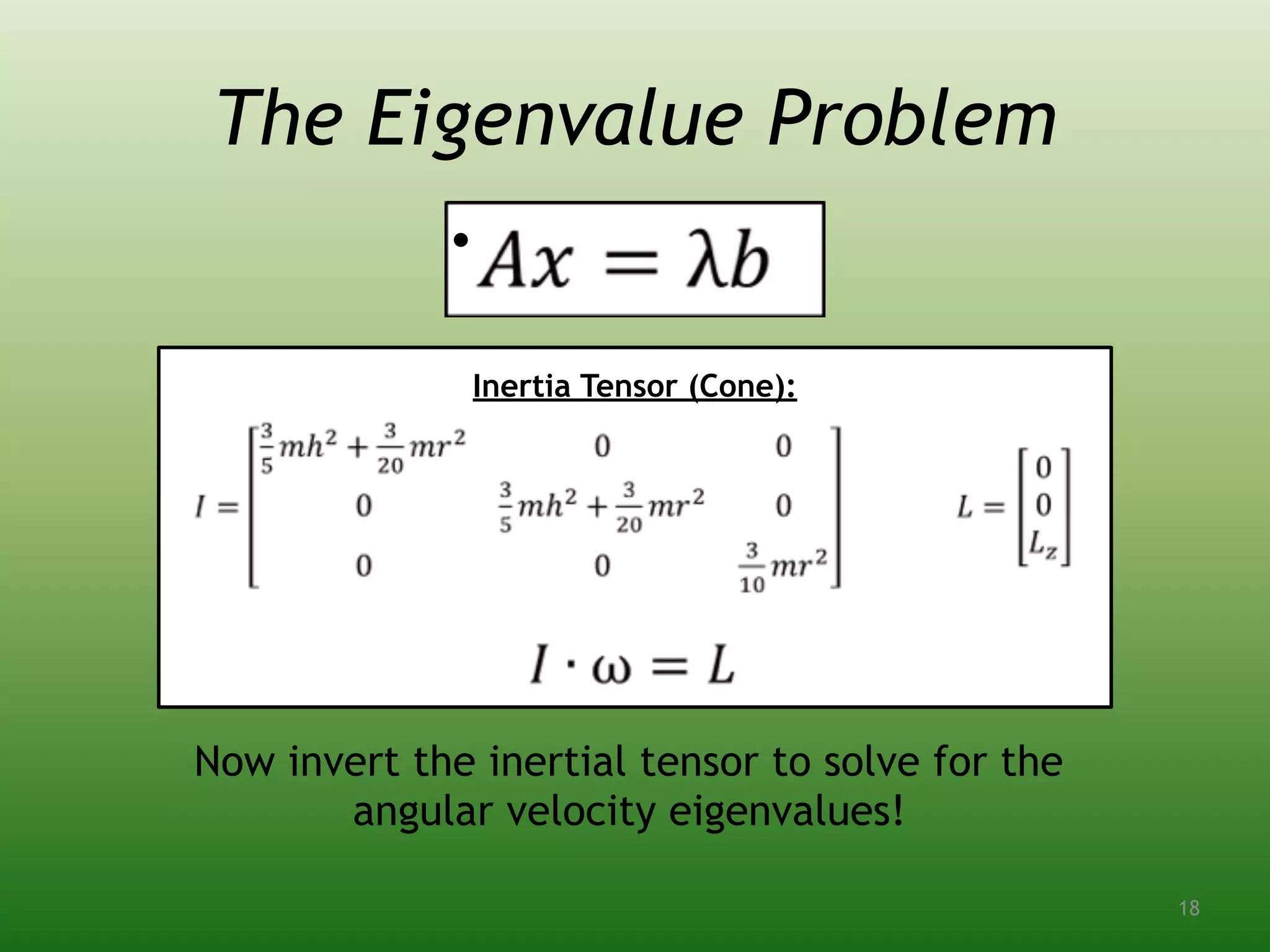

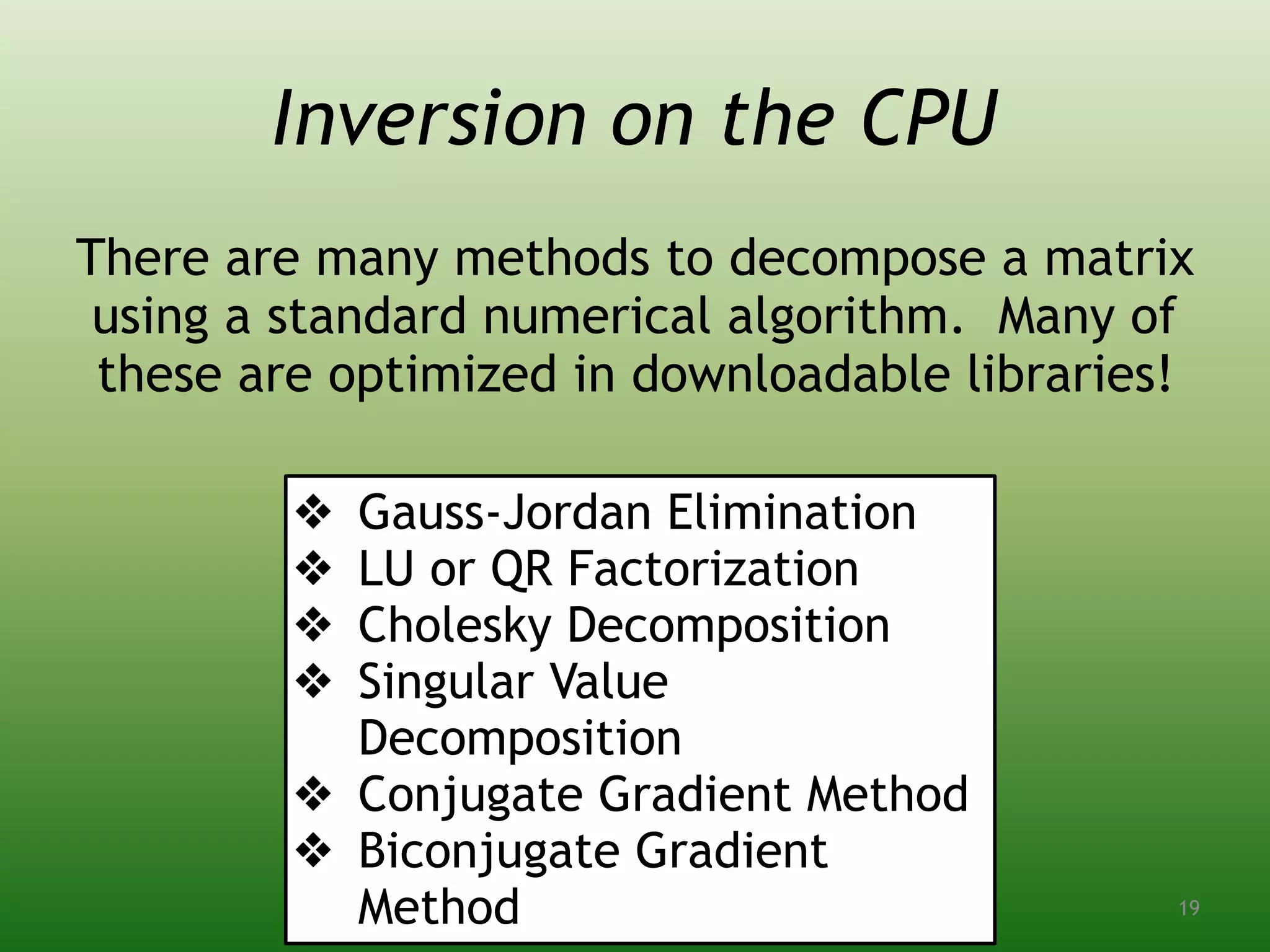

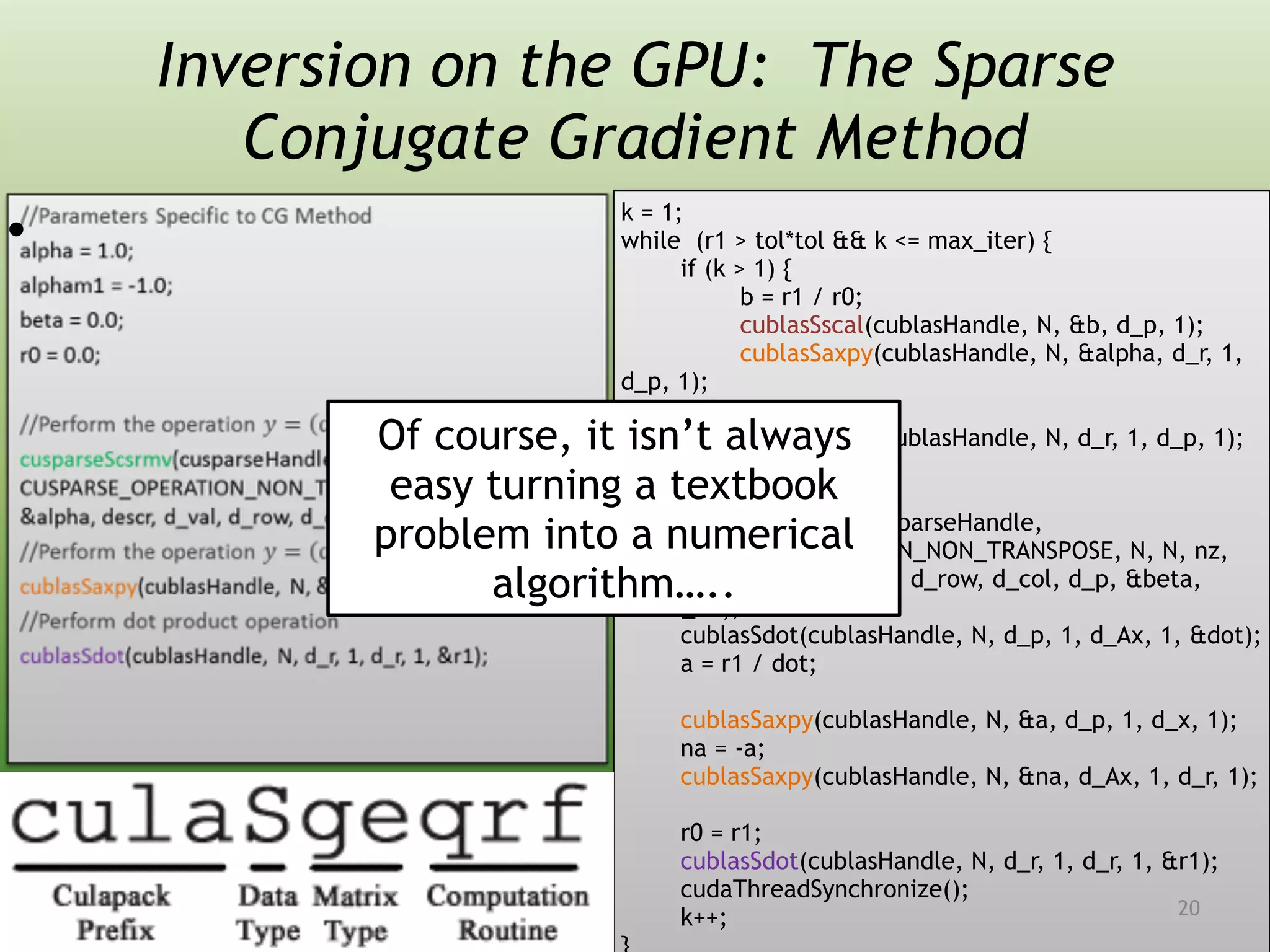

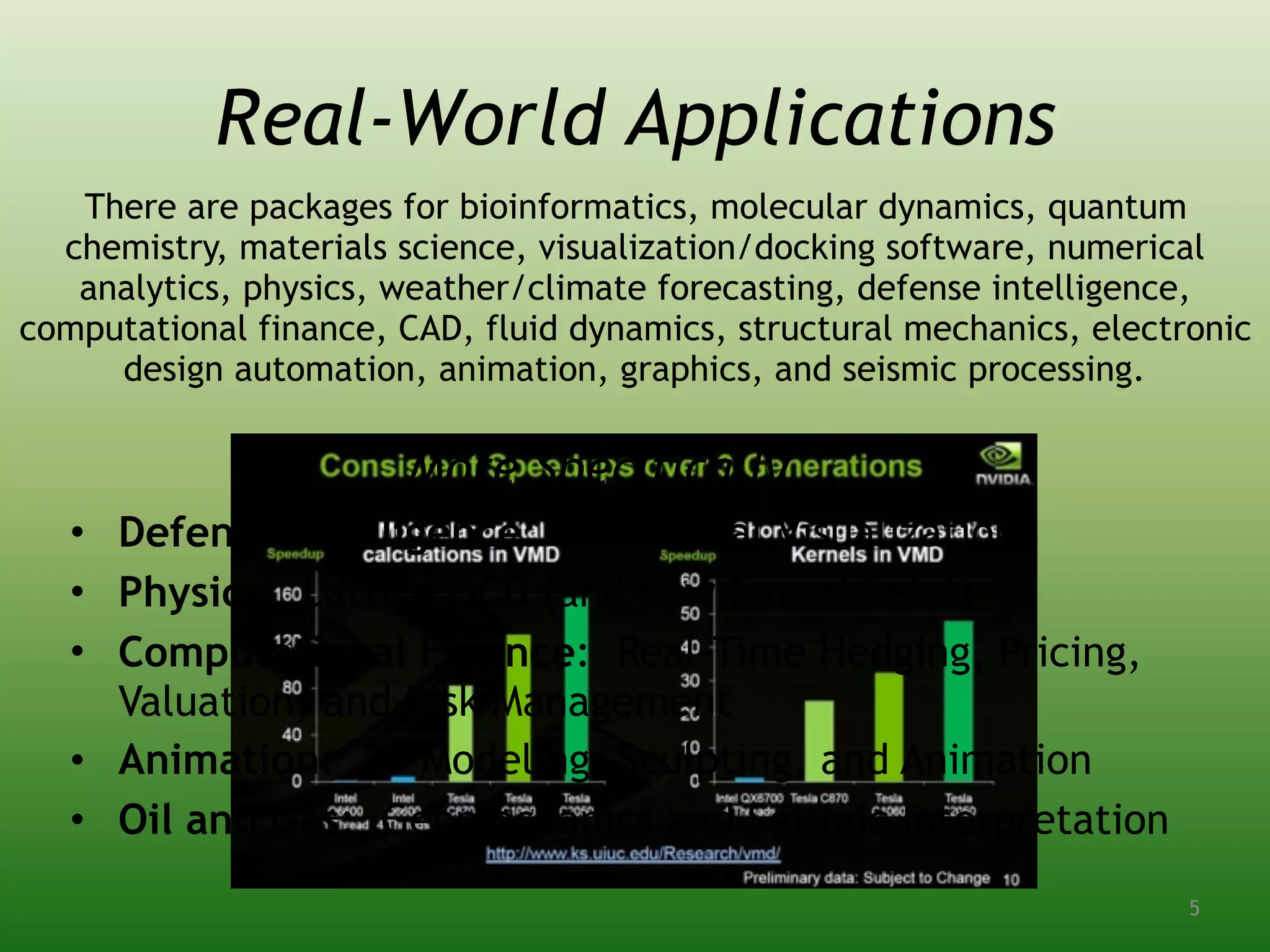

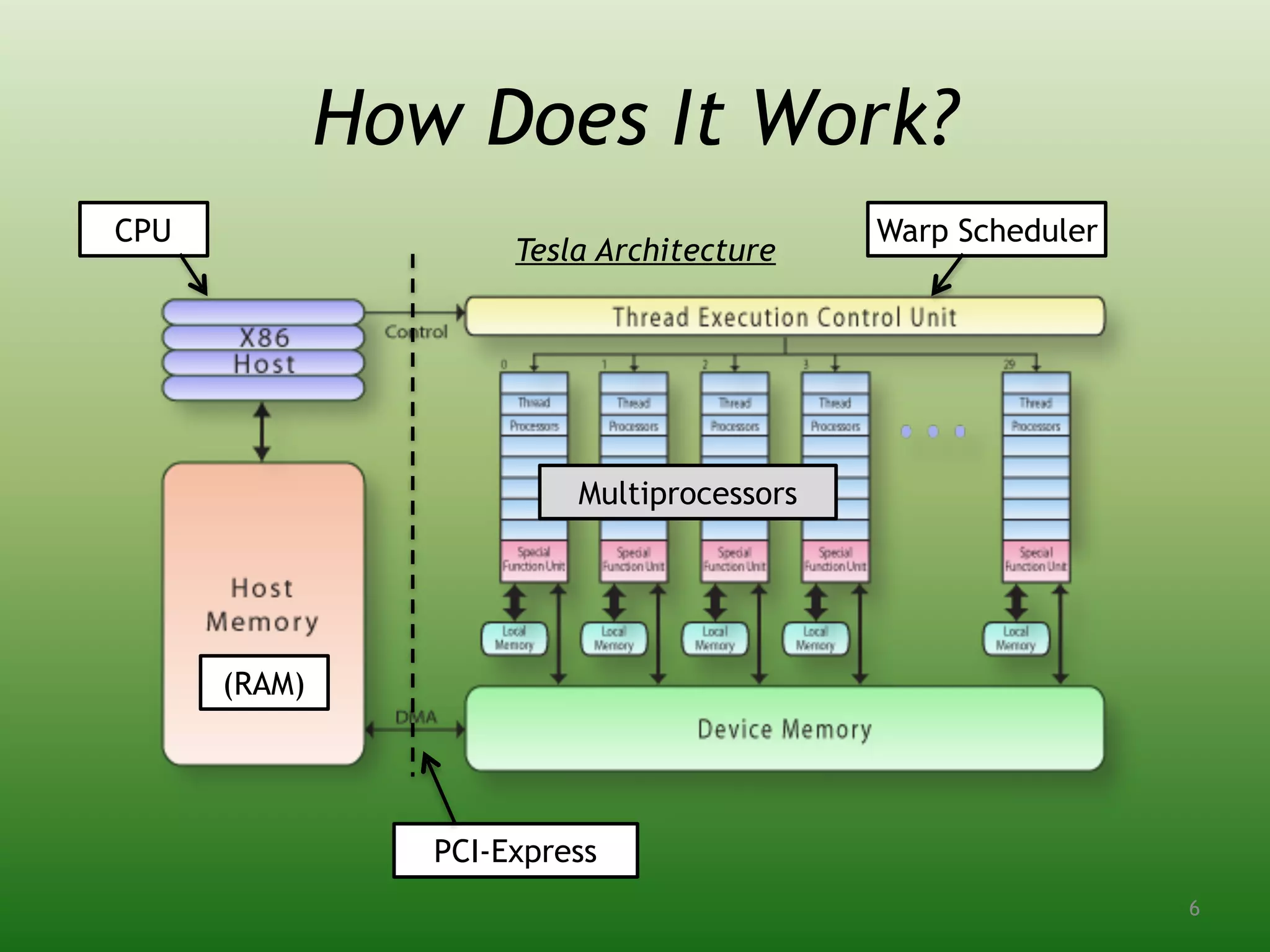

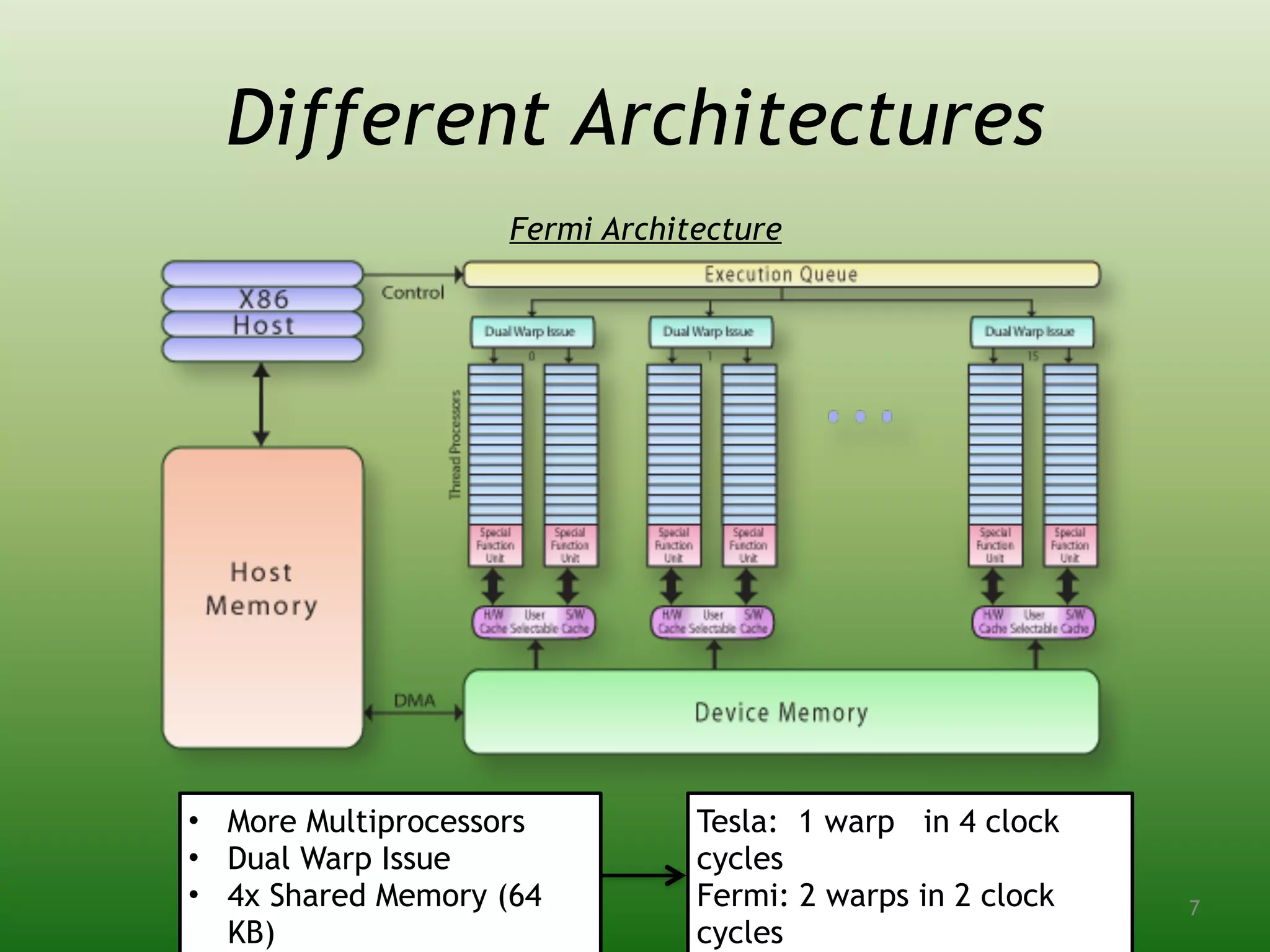

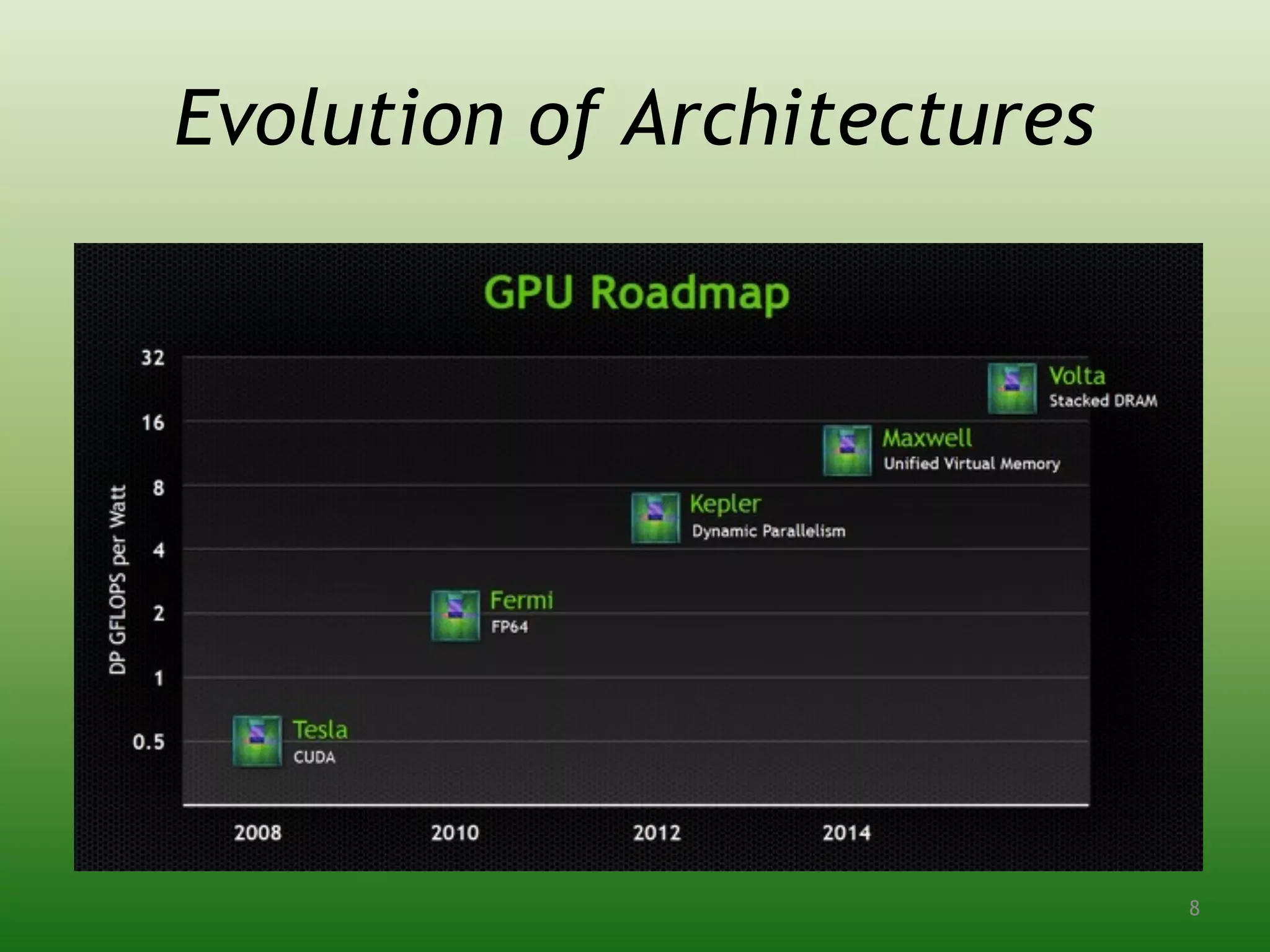

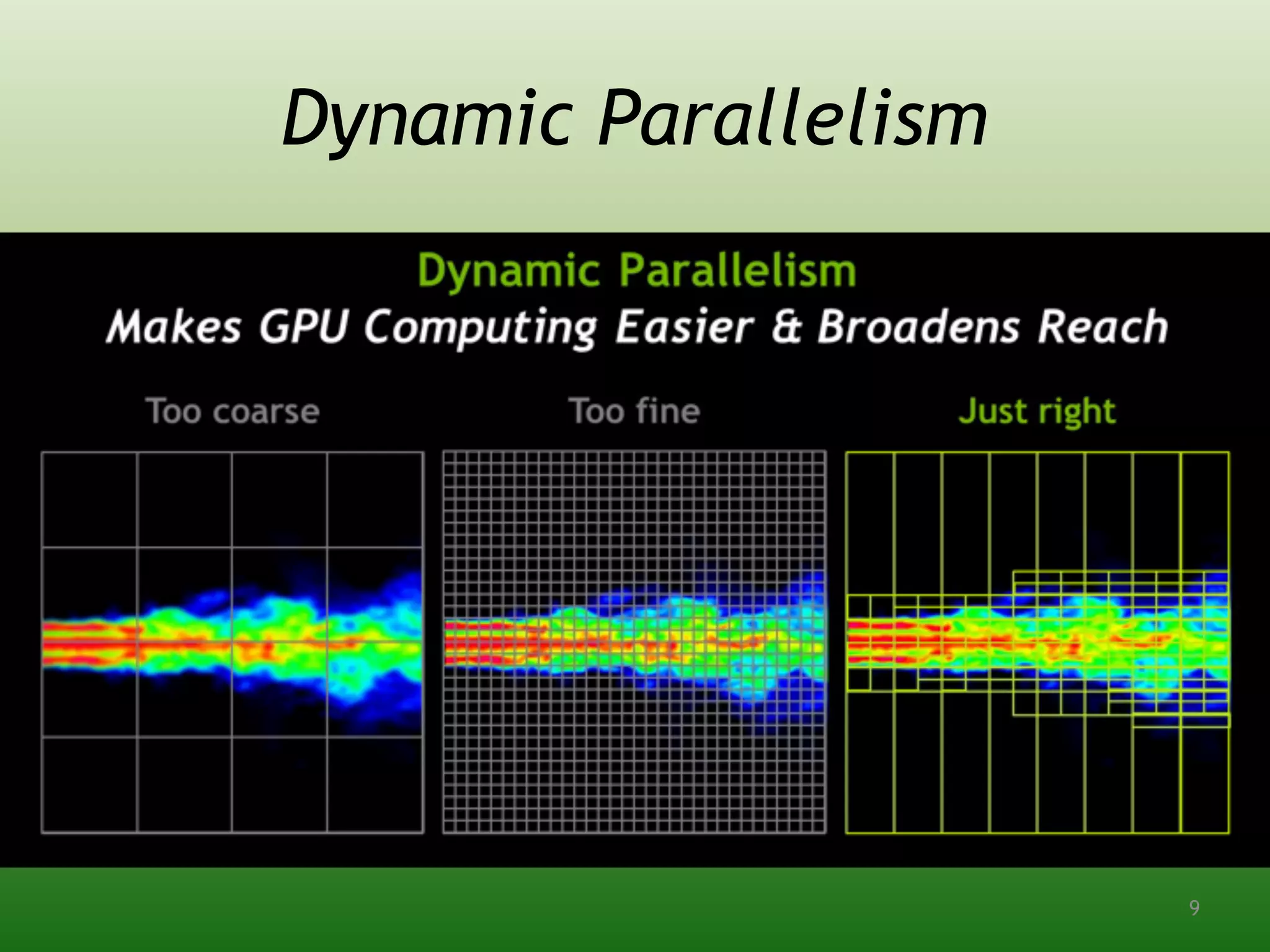

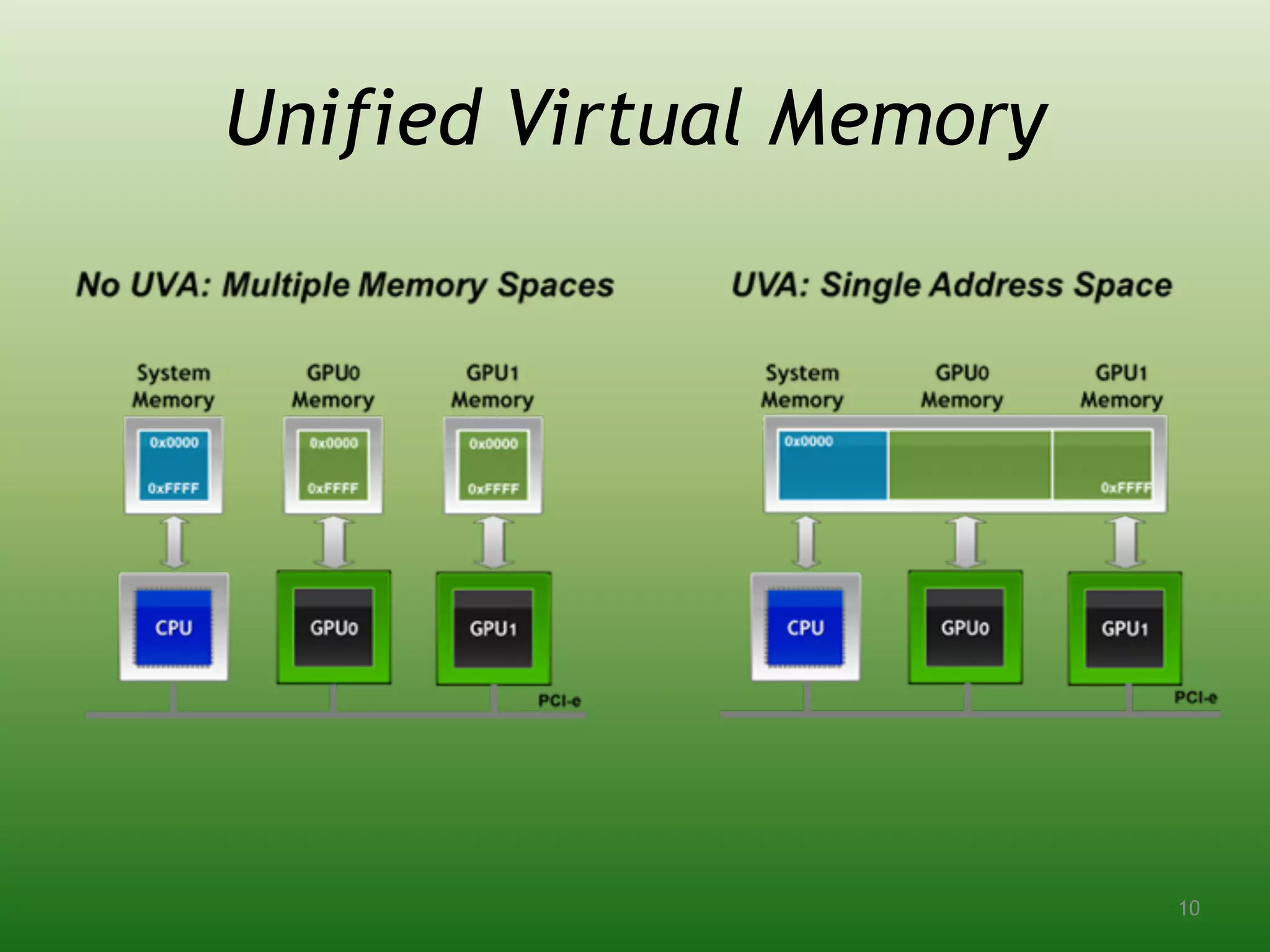

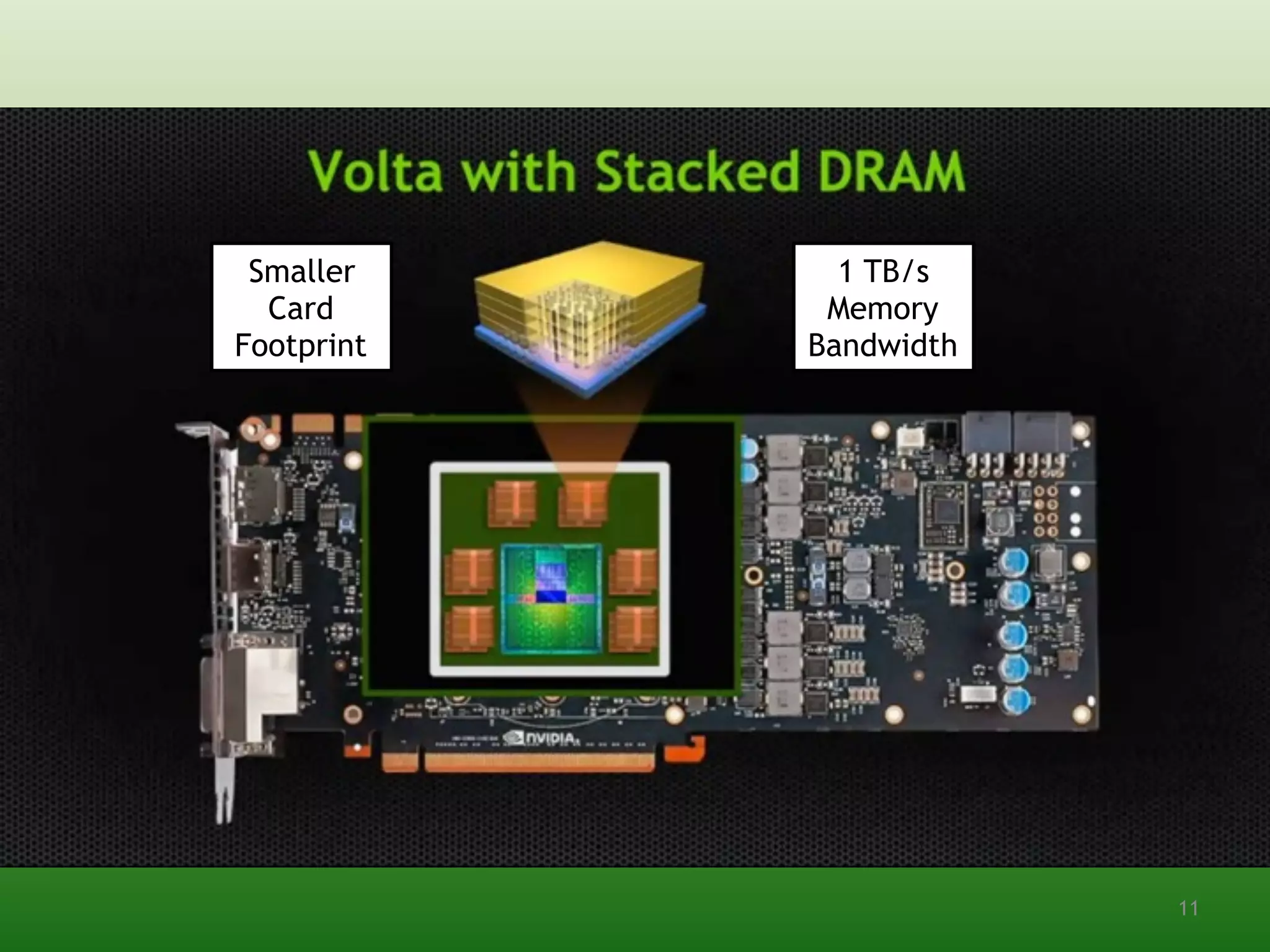

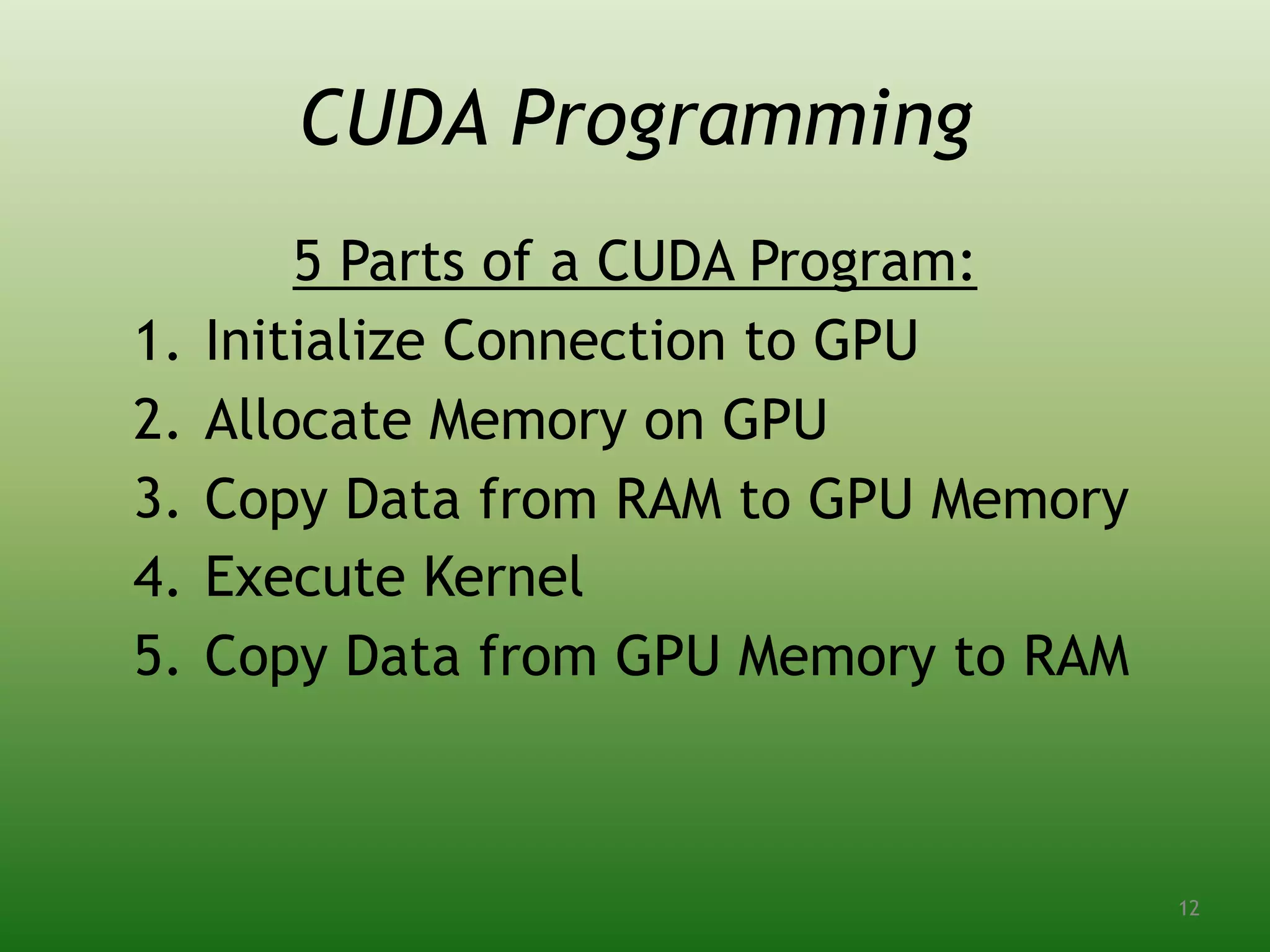

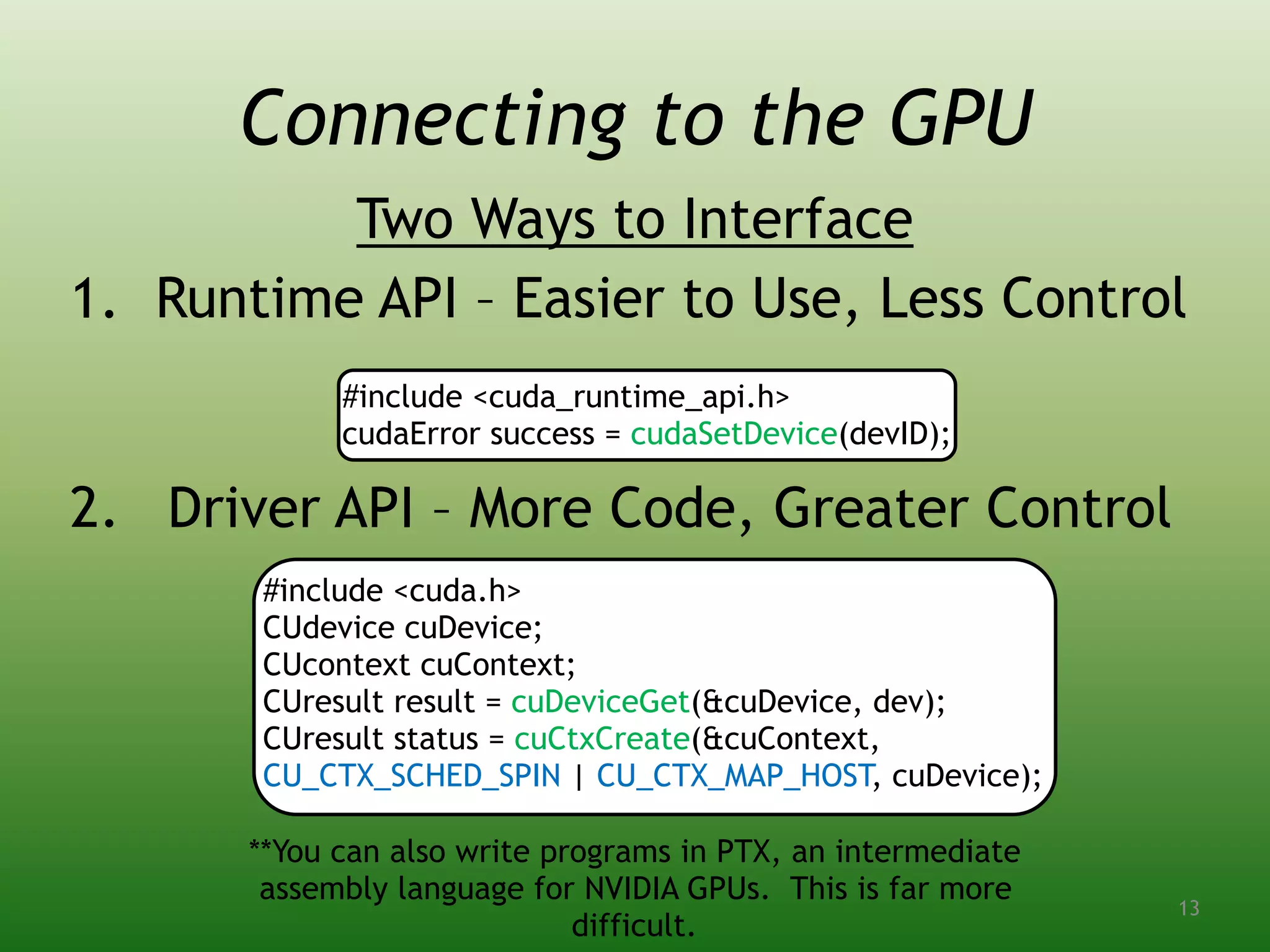

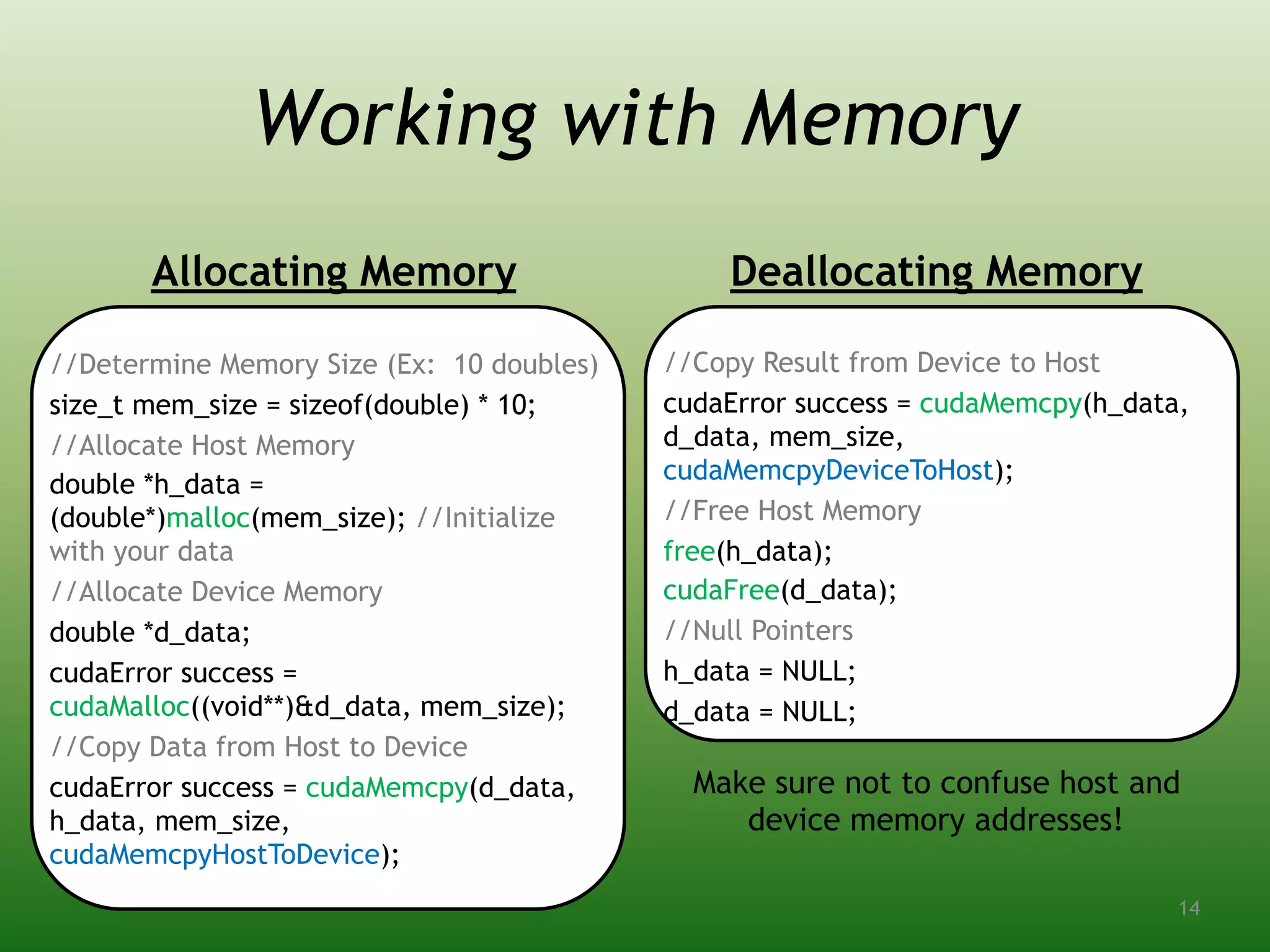

This document provides an introduction to parallel programming using GPUs. It outlines the hardware architecture of GPUs, which have hundreds of cores optimized for processing pixels in parallel. It then discusses CUDA programming, with examples of initializing the GPU, allocating and transferring memory, executing kernels, and common applications in physics, finance, and other fields. The document concludes by discussing the sparse conjugate gradient method for inverting matrices on the GPU as an example application in computational physics.

![Kernels are functions written to run on the

GPU:

Invocation on the host side executes the

kernel:

//This kernel adds the each value in d_data to its index

__global__ void myKernel(double *d_data)

{

unsigned int i = blockDim.x * blockIdx.x + threadIdx.x;

unsigned int j = blockDim.y * blockIdx.y + threadIdx.y;

d_data[(i*width)+j] = d_data[(i*width)+j] + (i*width) + j;

}

Kernels

//Kernel Parameters

dim3 threads(numthreads / blocksize, 1);

dim3 blocks(blocksize, 1);

//Execute Kernel

myKernel<<<blocks, threads>>>(d_data);

These

determine the

grid size

15](https://image.slidesharecdn.com/7aabf9b7-f6d8-4880-824a-d3647222d832-160429204201/75/GPU-Programming-15-2048.jpg)