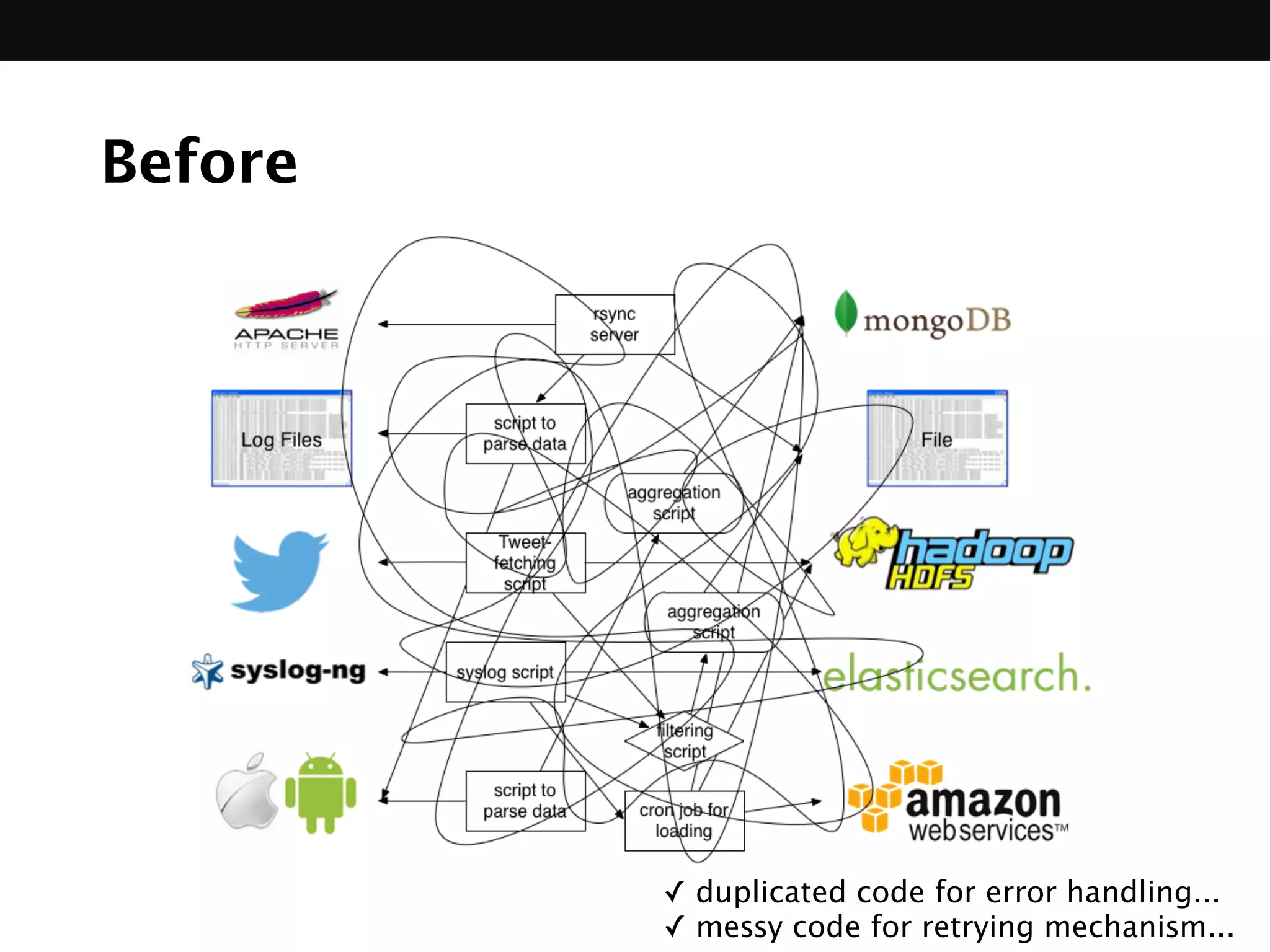

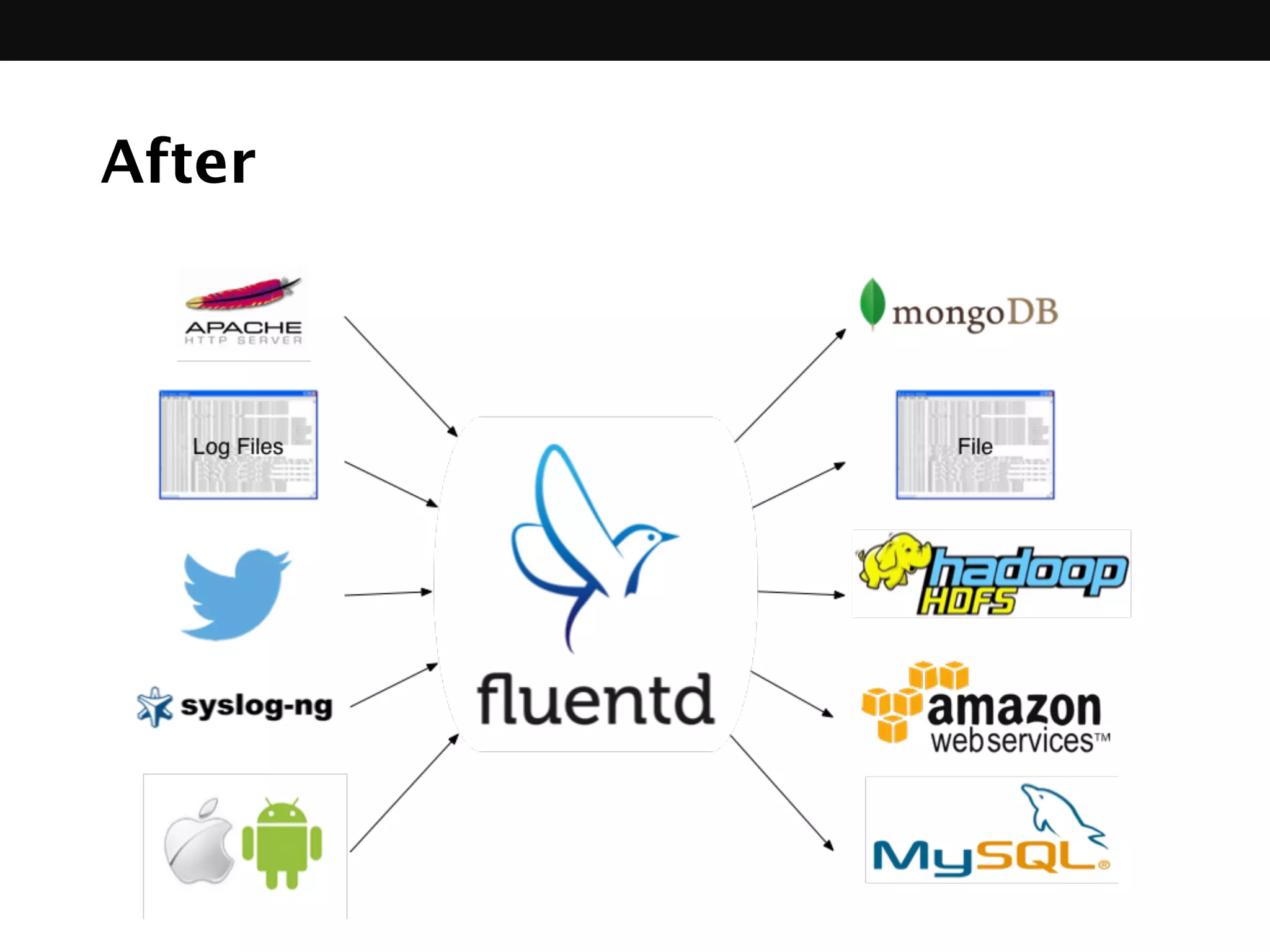

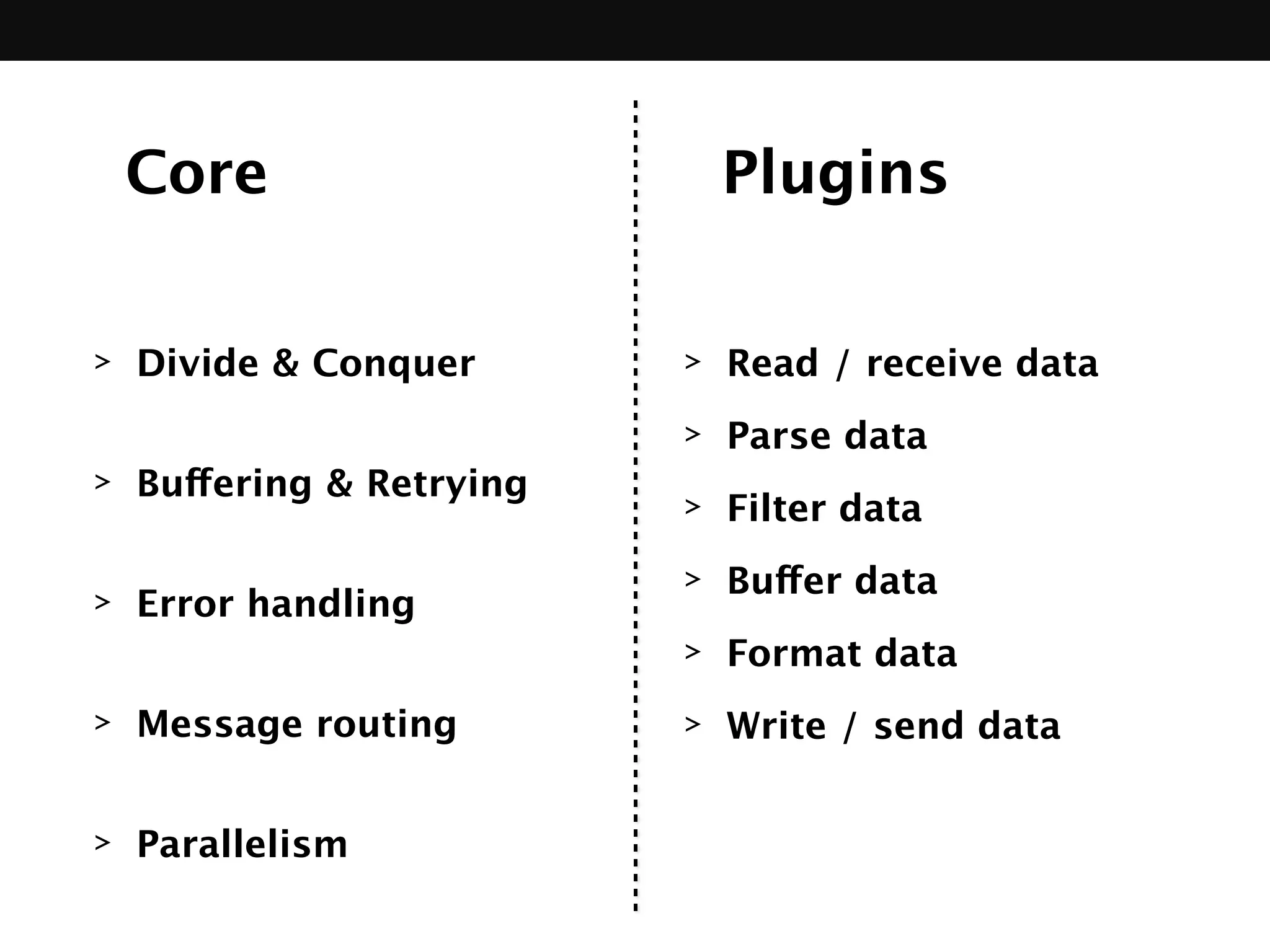

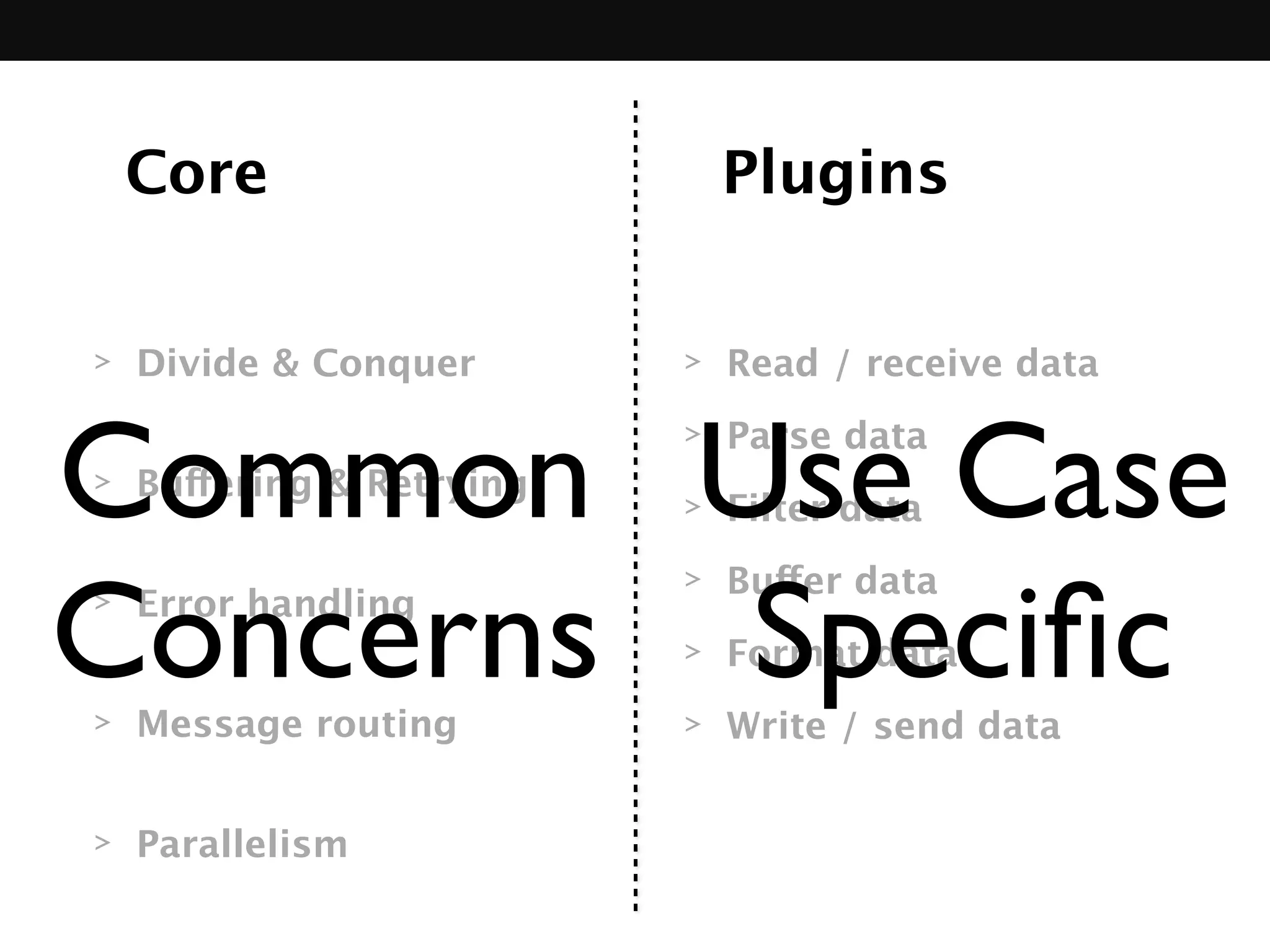

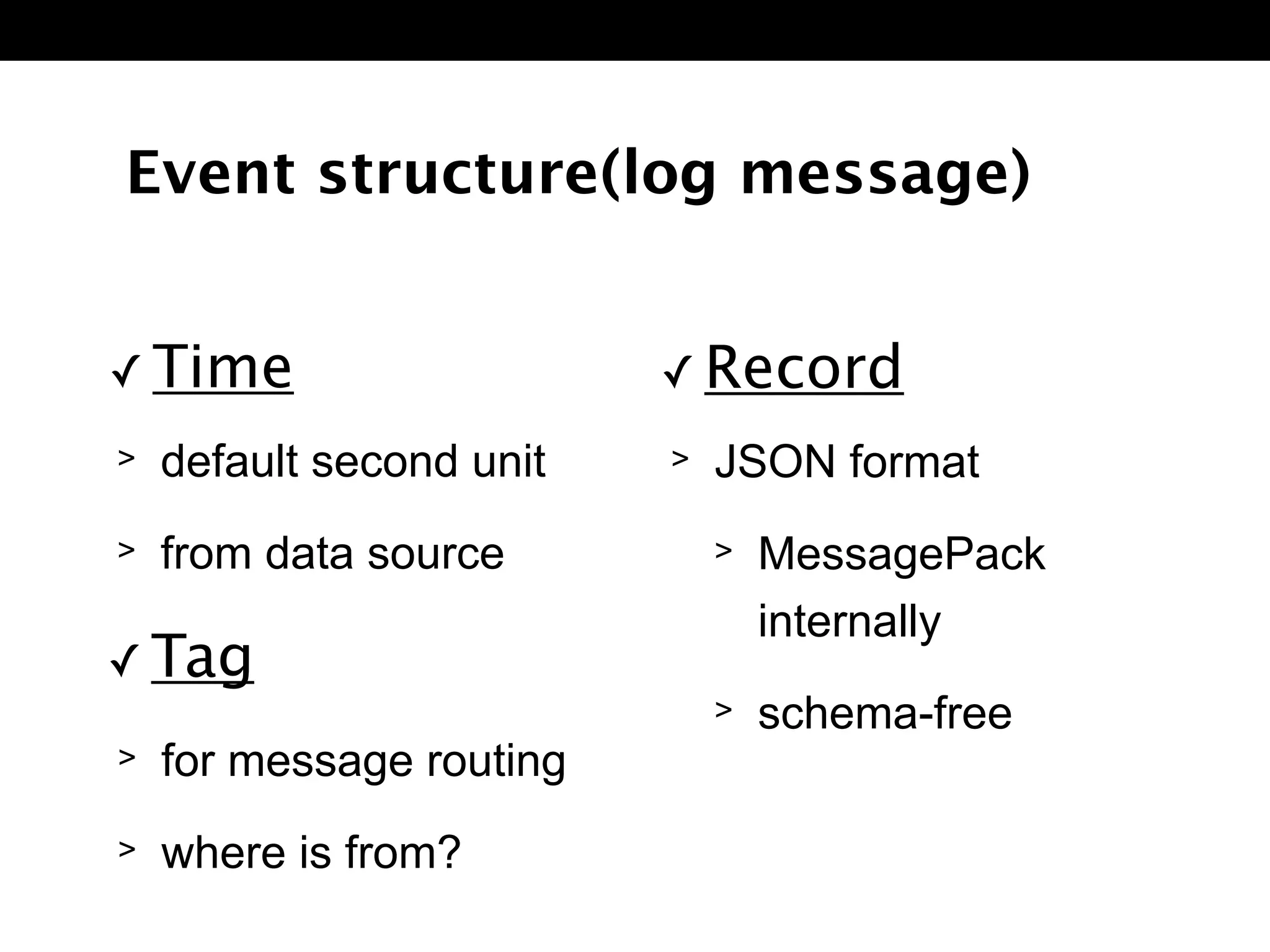

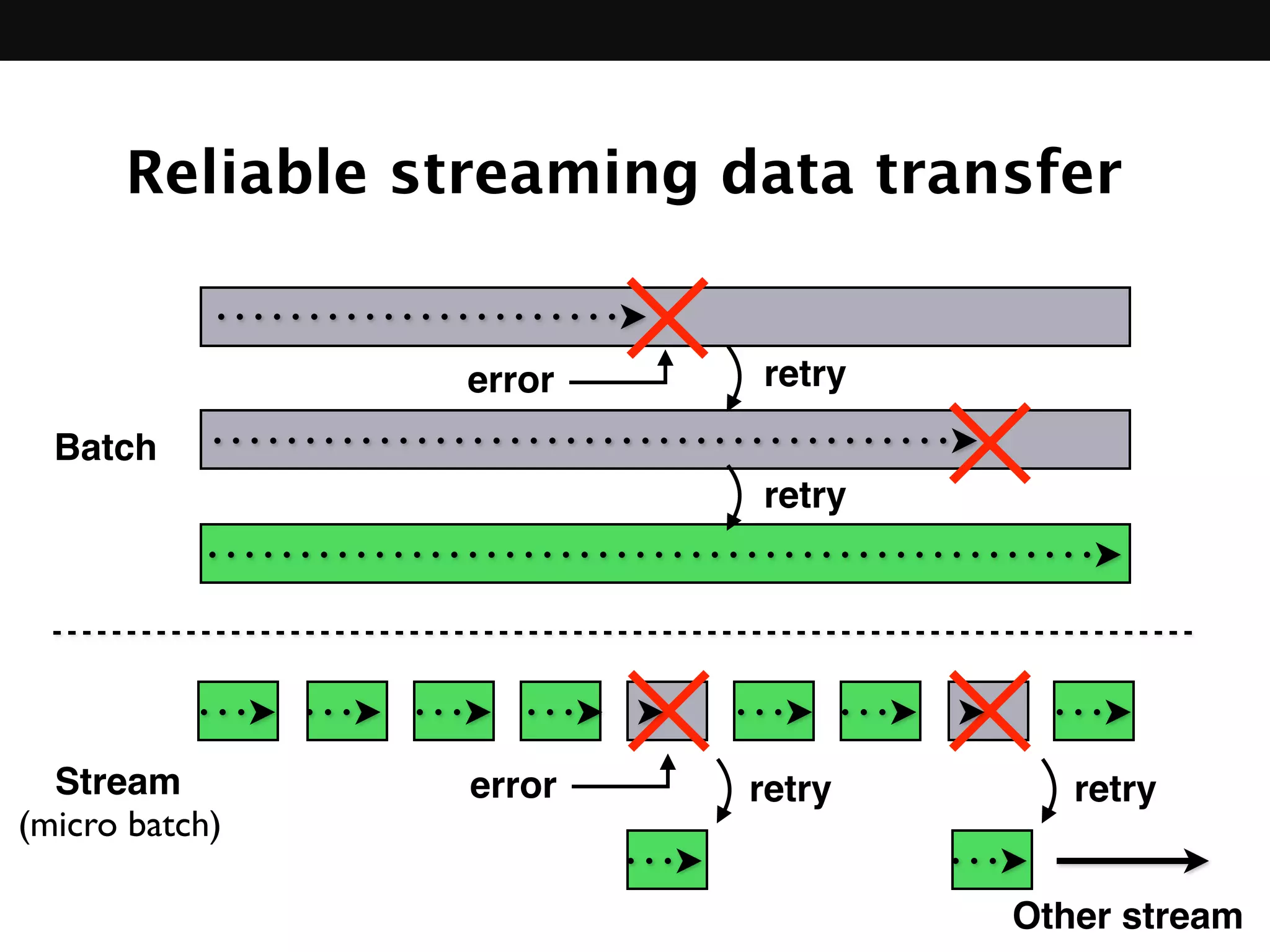

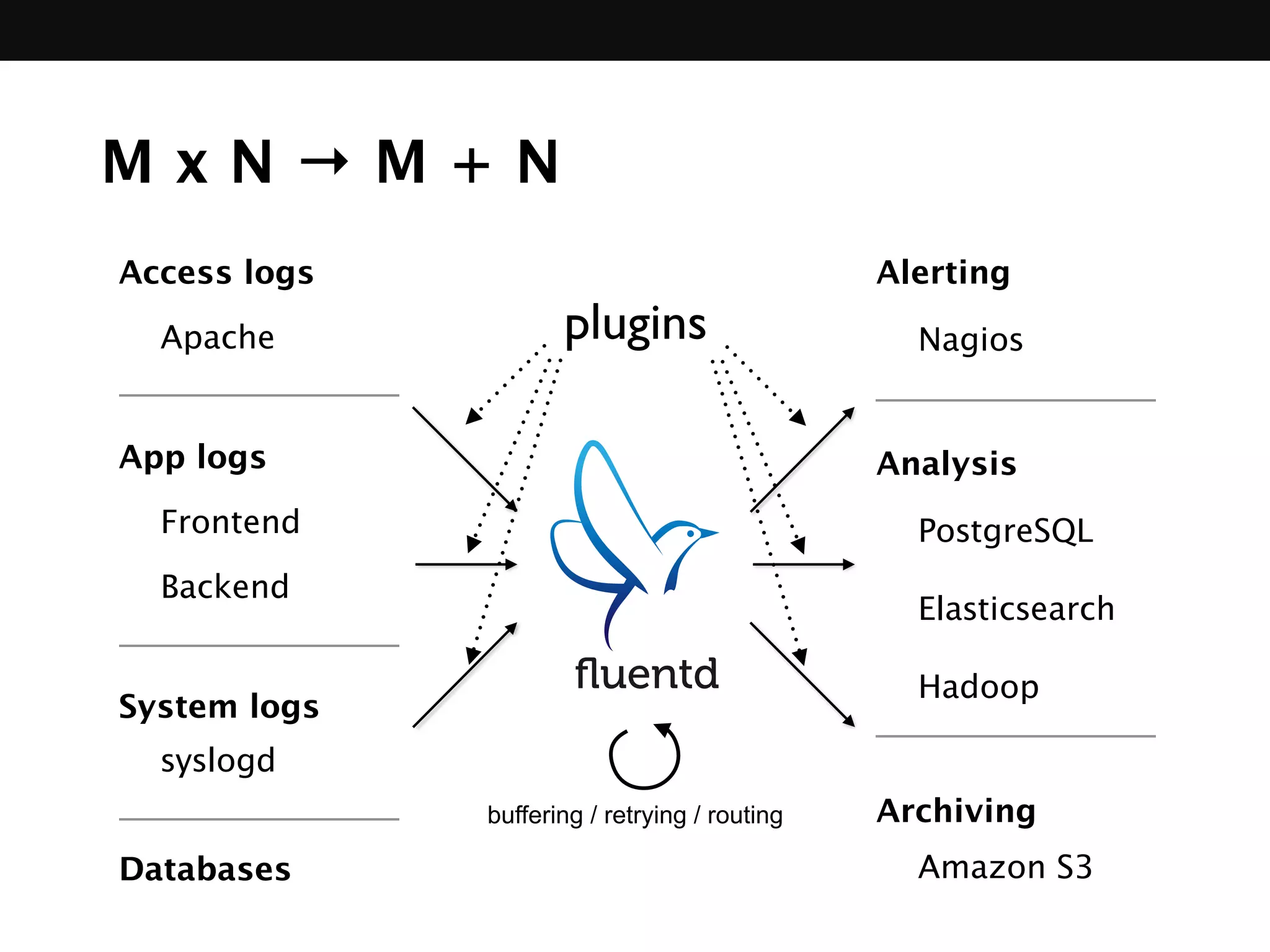

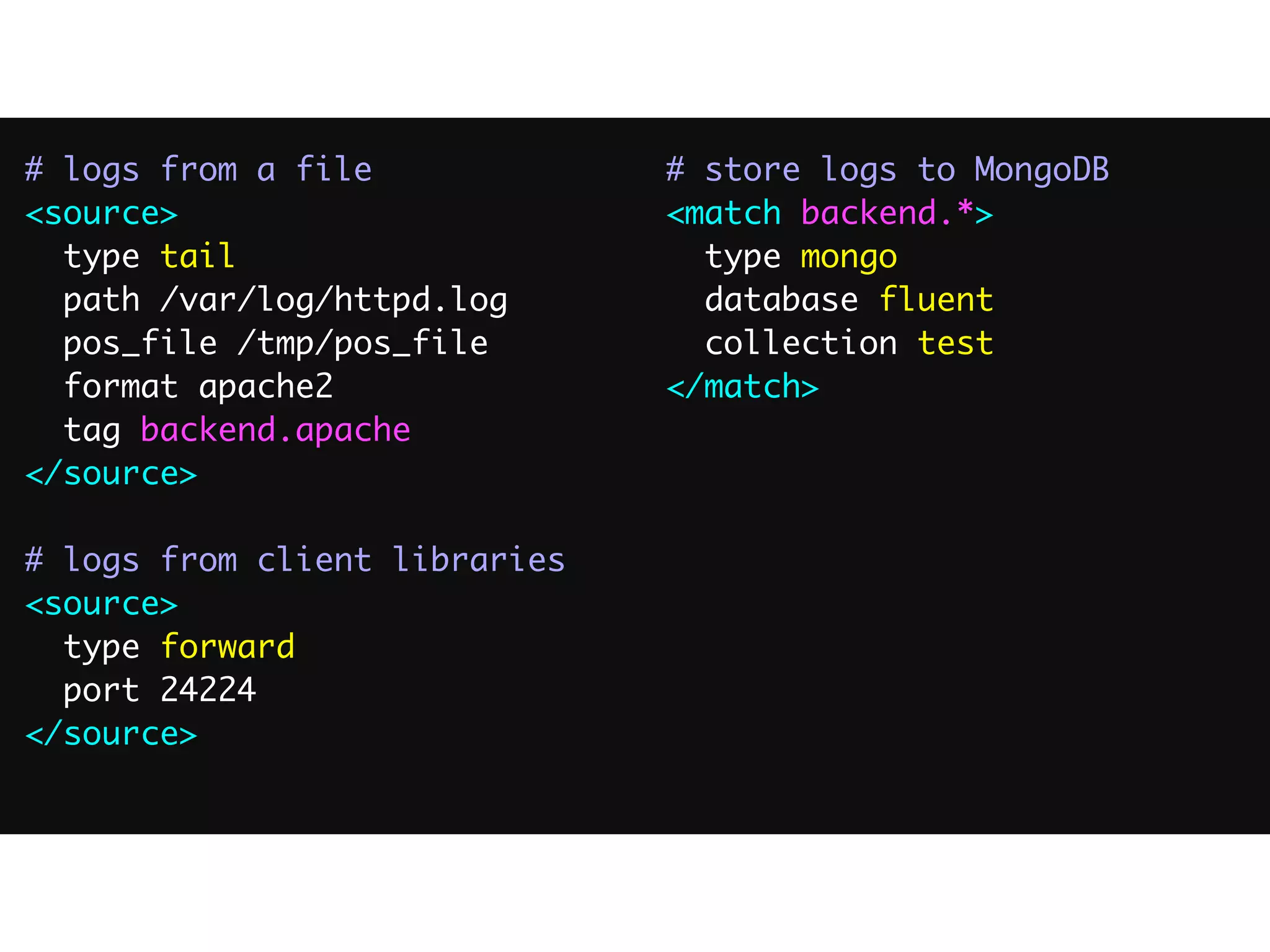

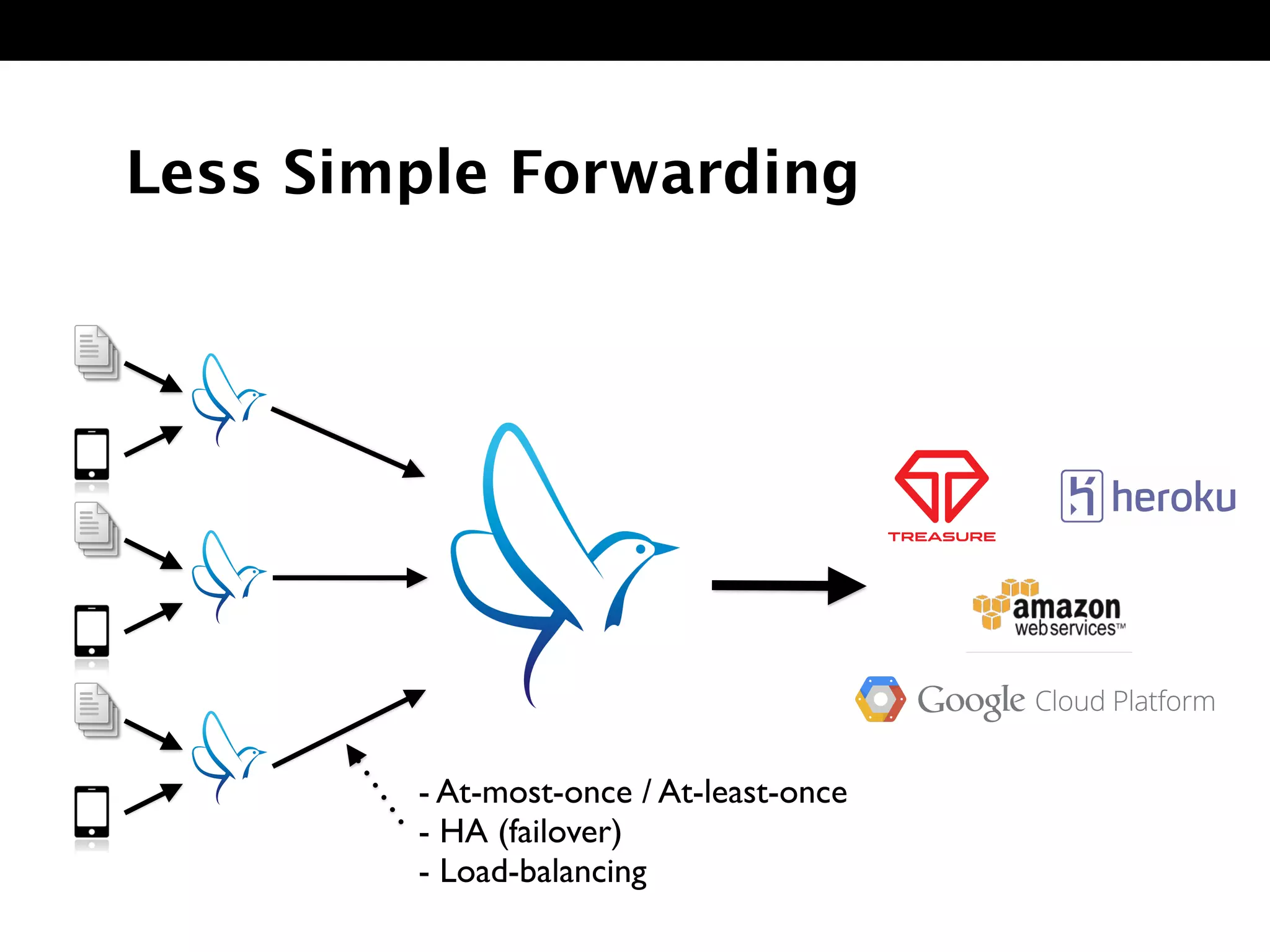

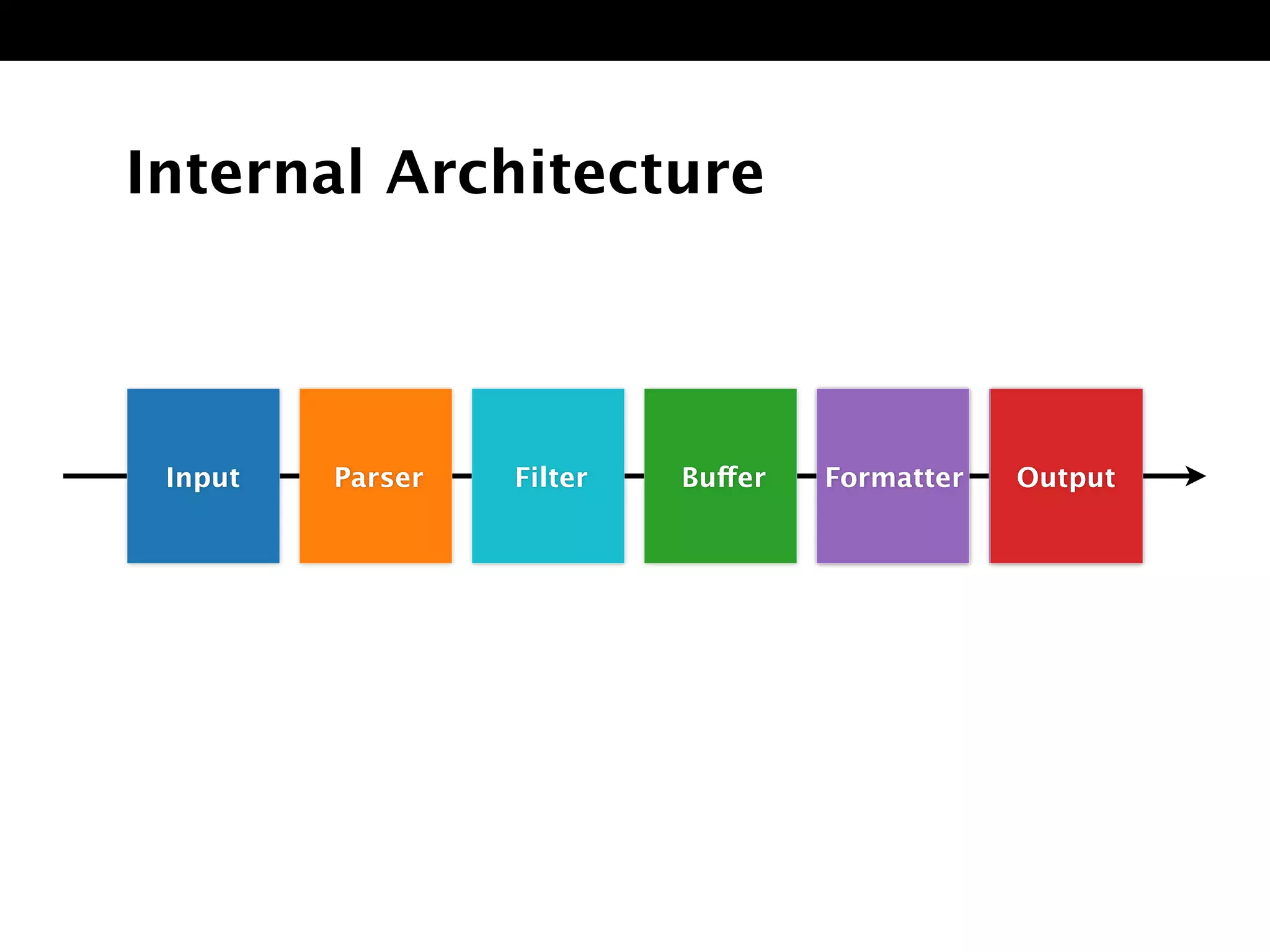

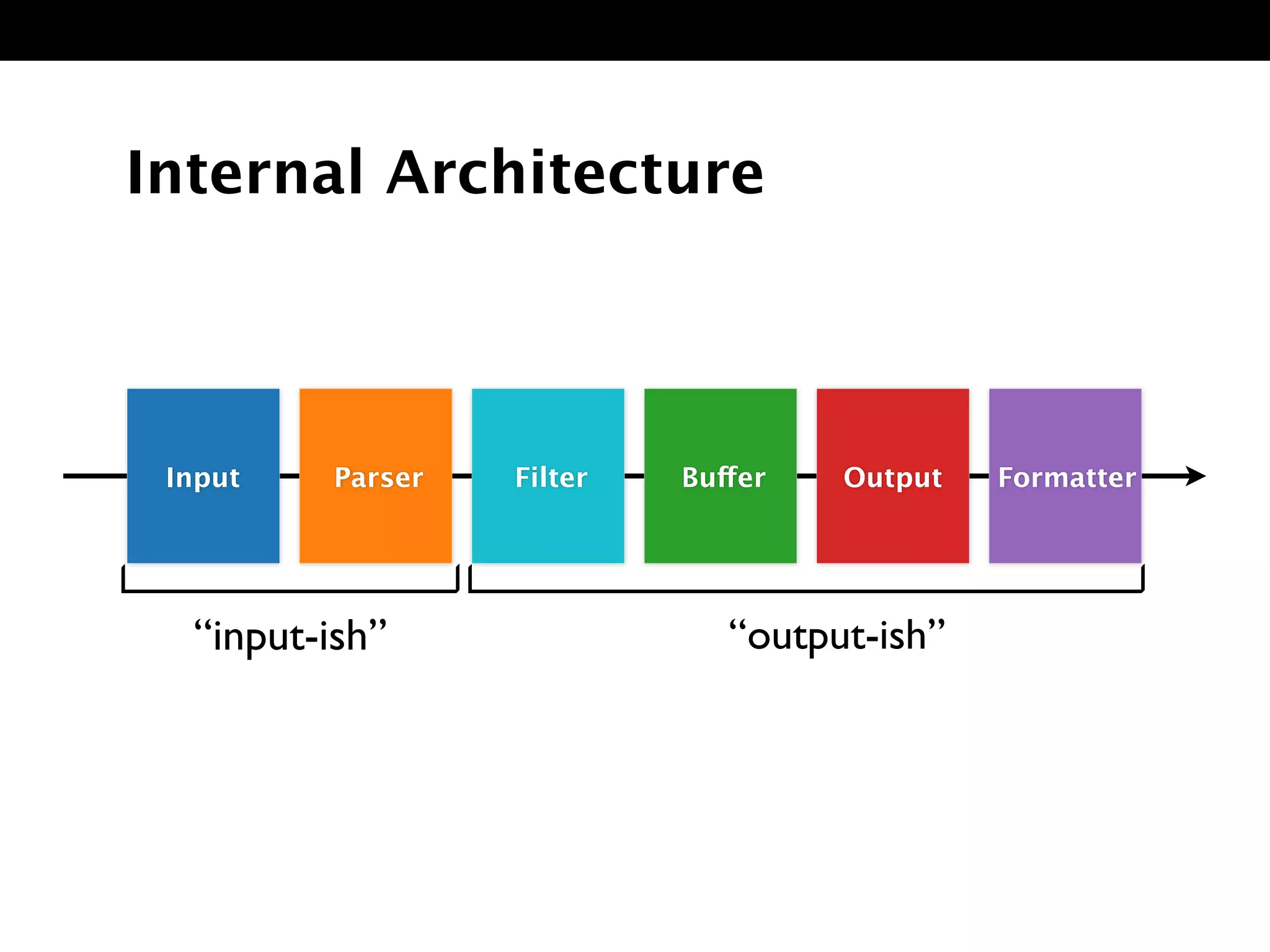

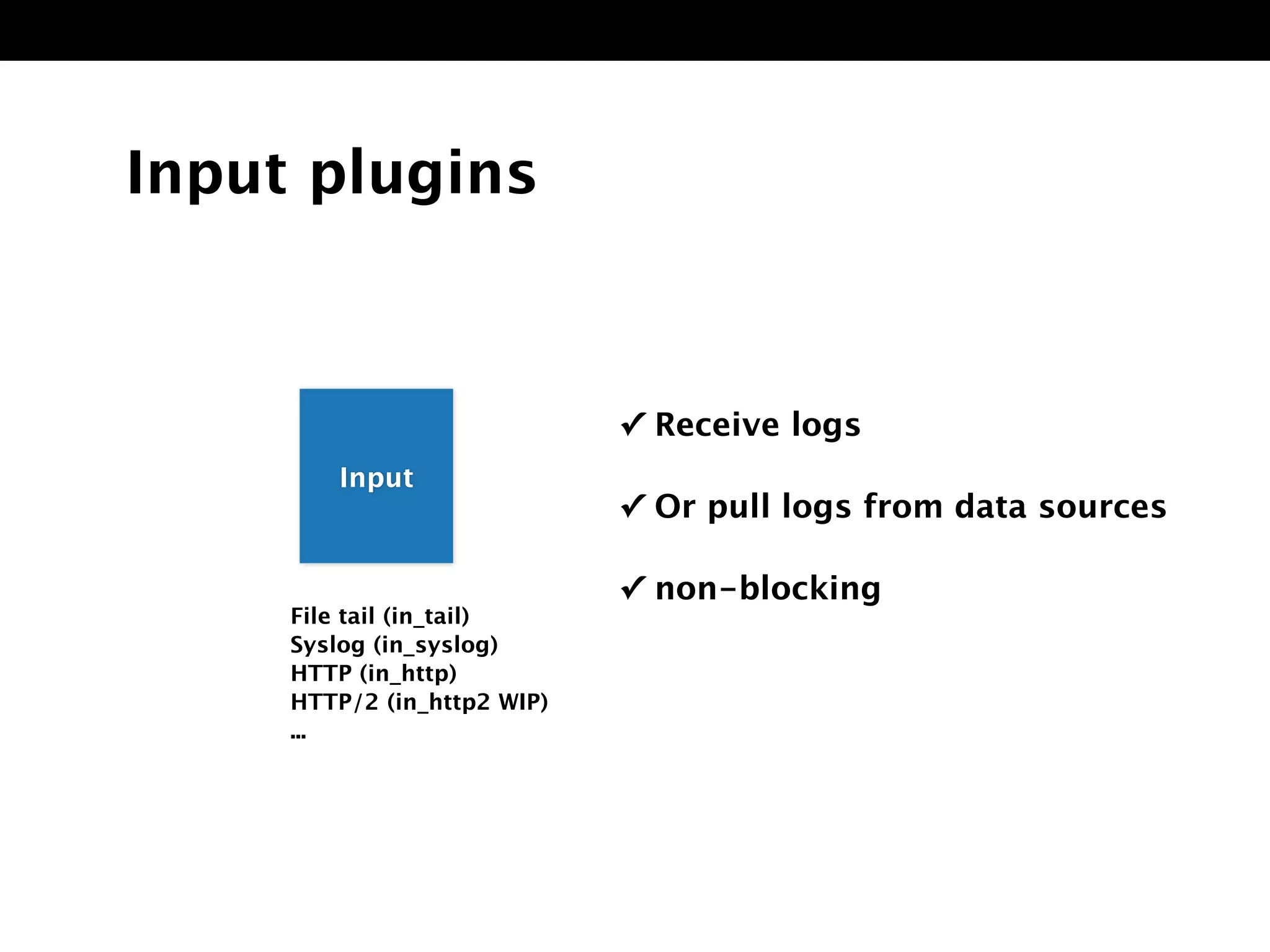

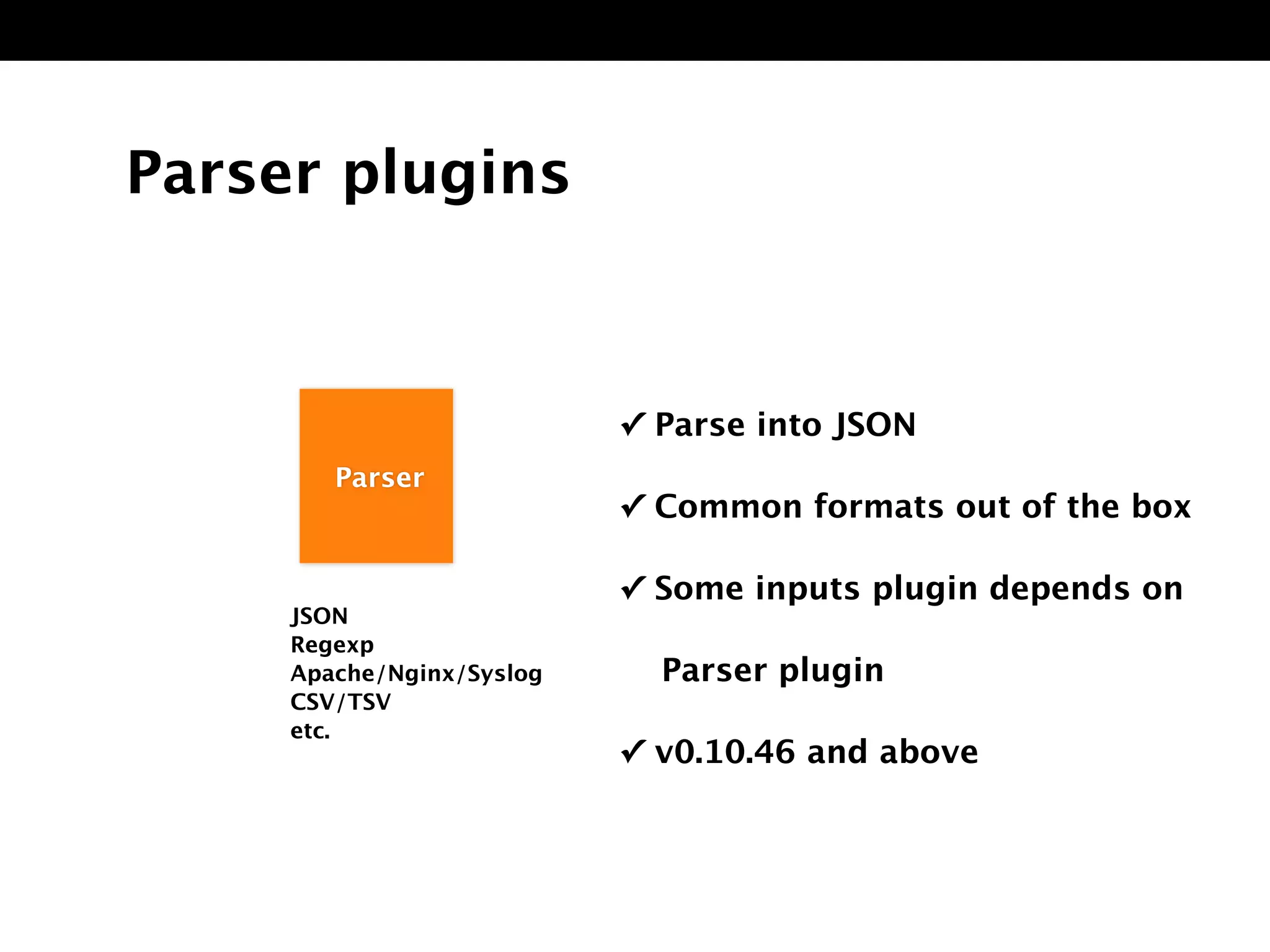

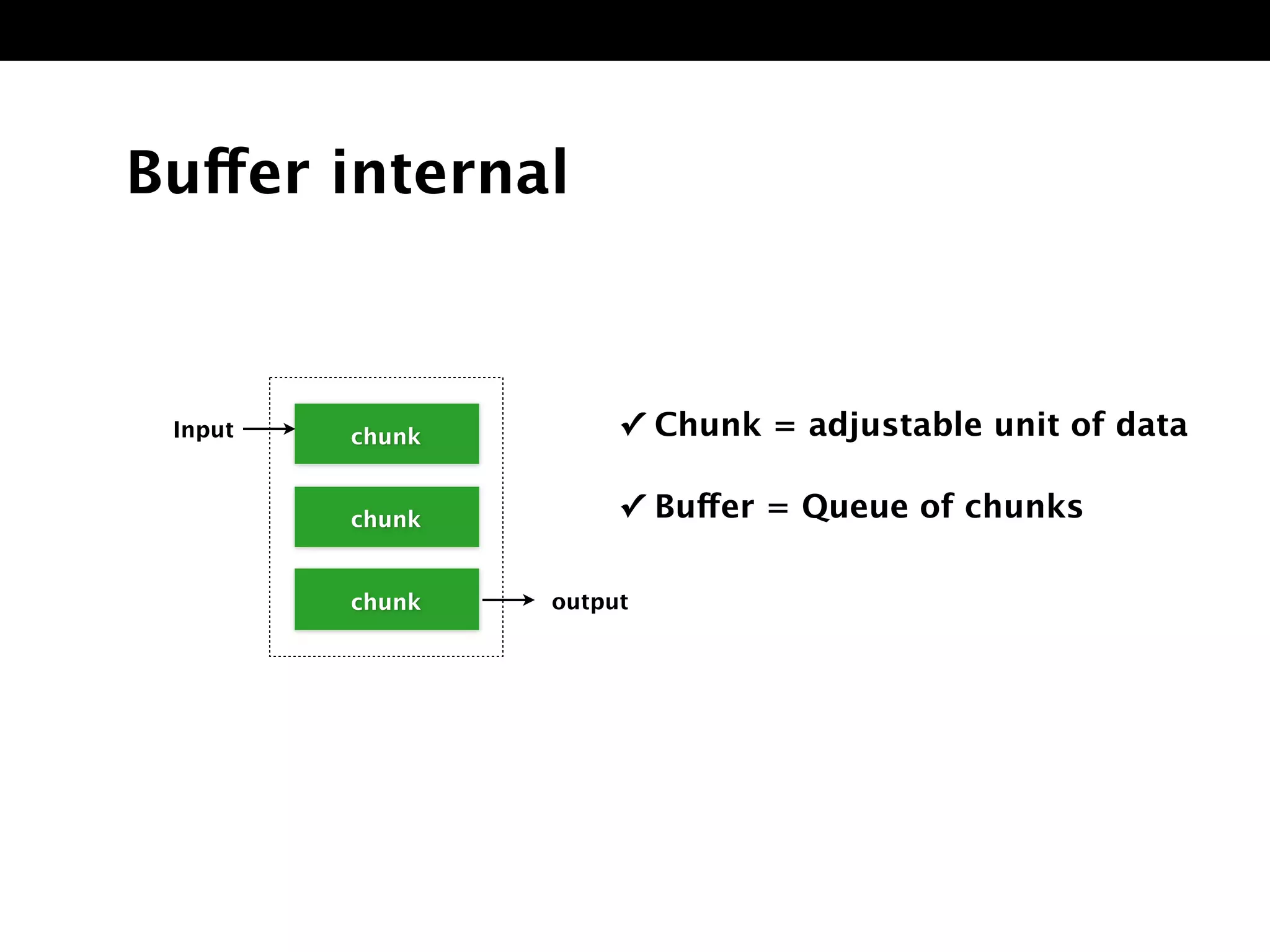

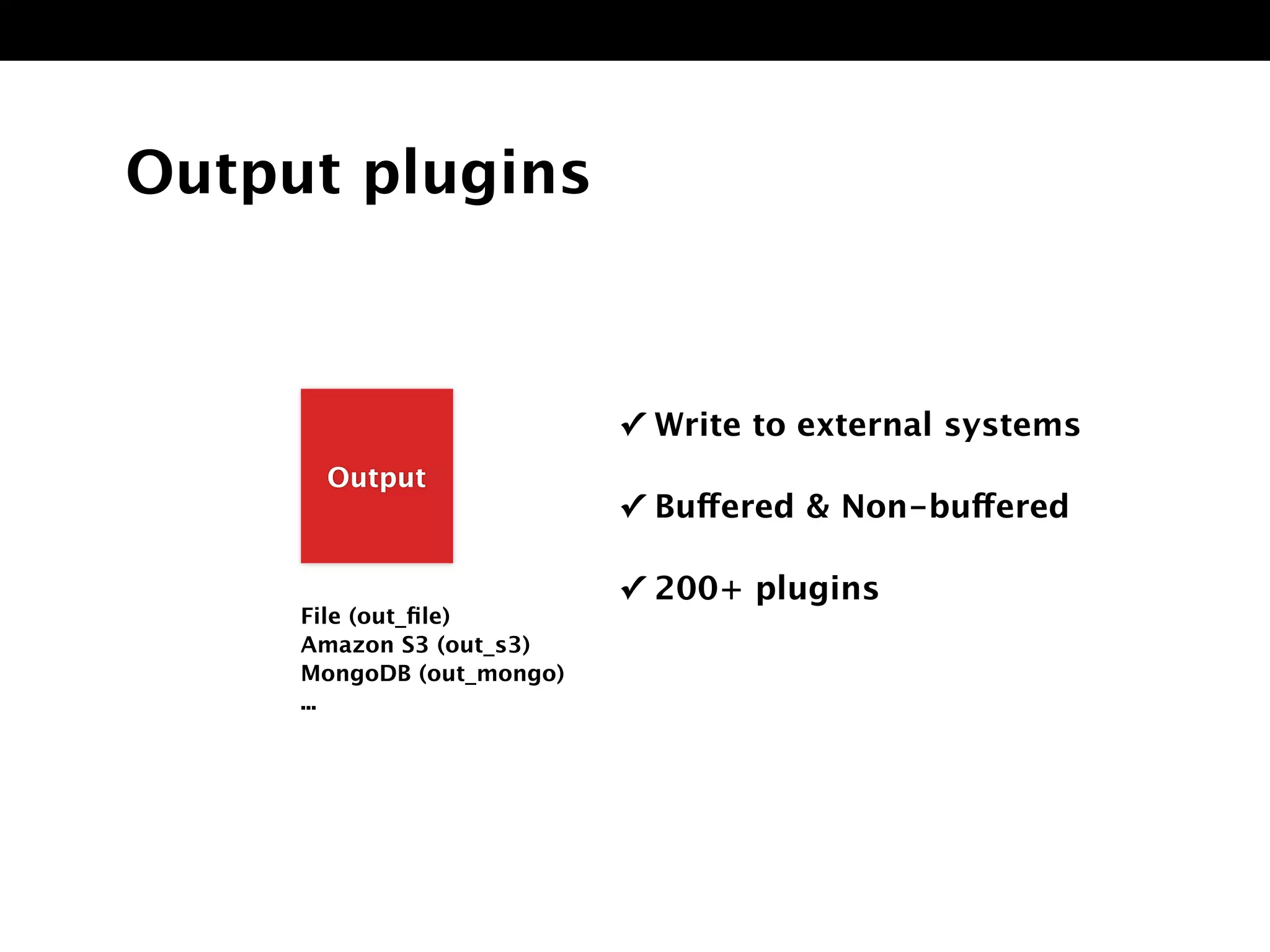

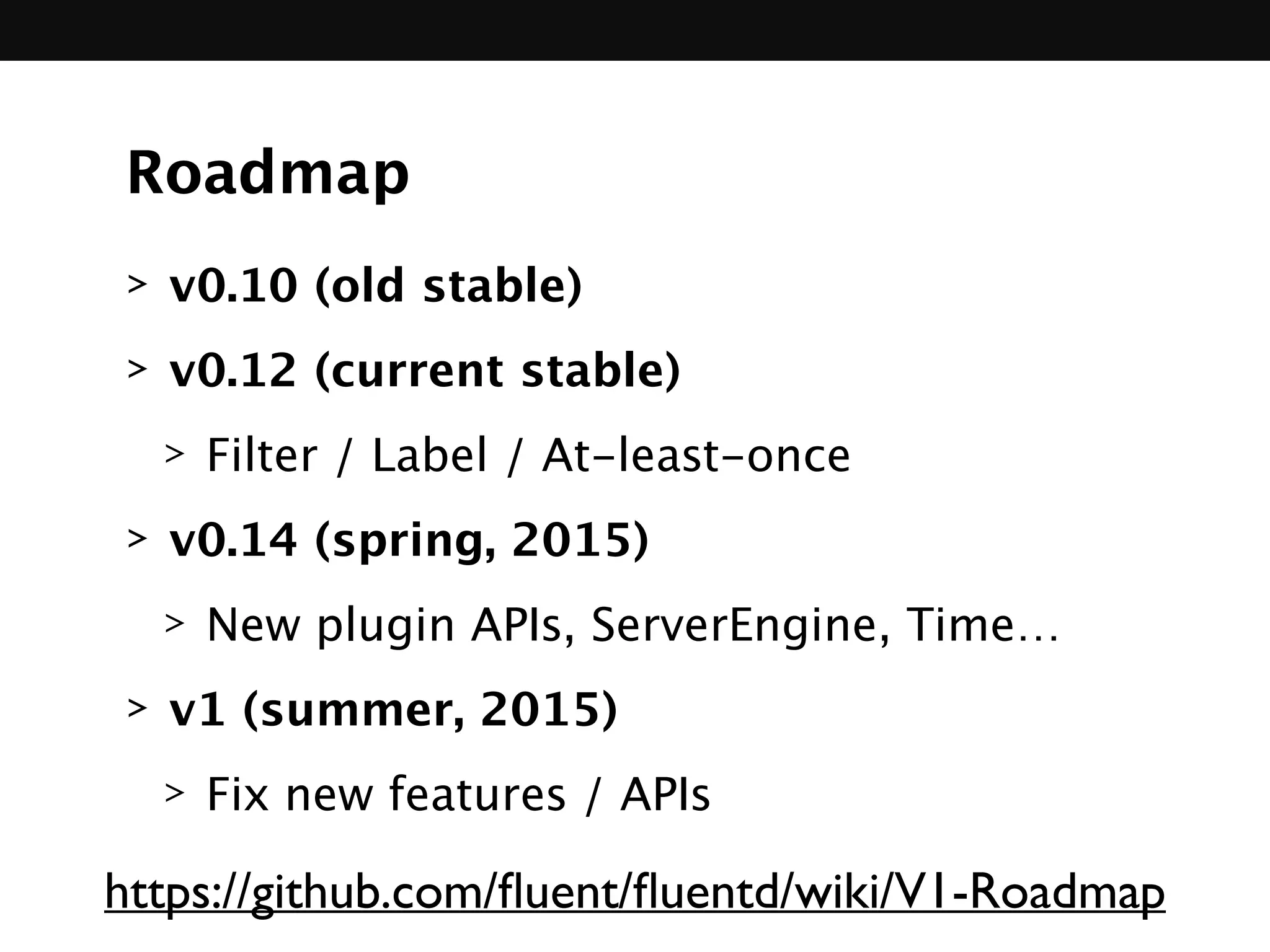

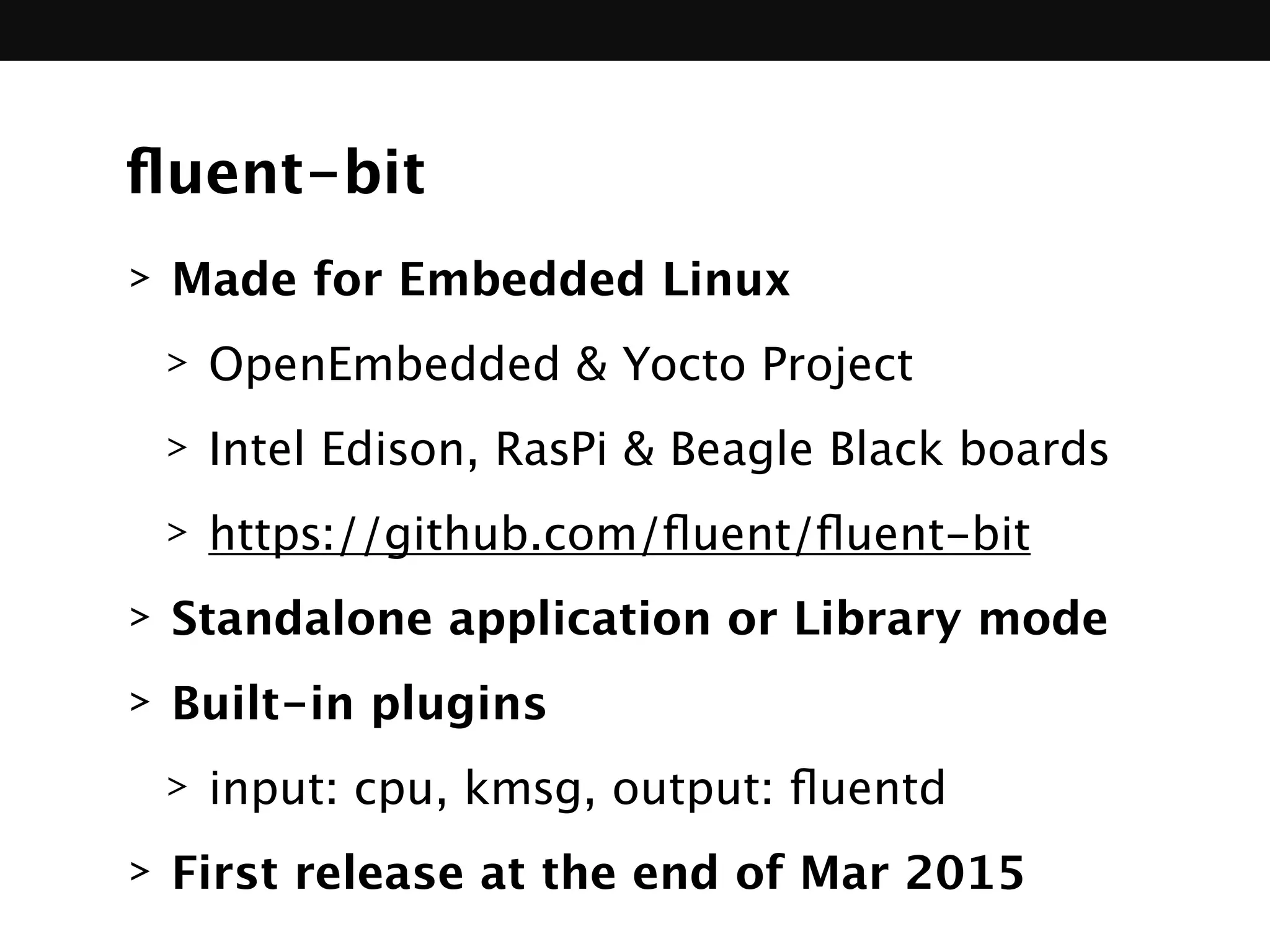

Masahiro Nakagawa is a senior software engineer at Treasure Data and the main maintainer of Fluentd. Fluentd is a data collector for unified logging that provides streaming data transfer based on JSON. It has a simple core, with plugins written in Ruby providing functionality such as input/output, buffering, parsing, filtering, and formatting of data.