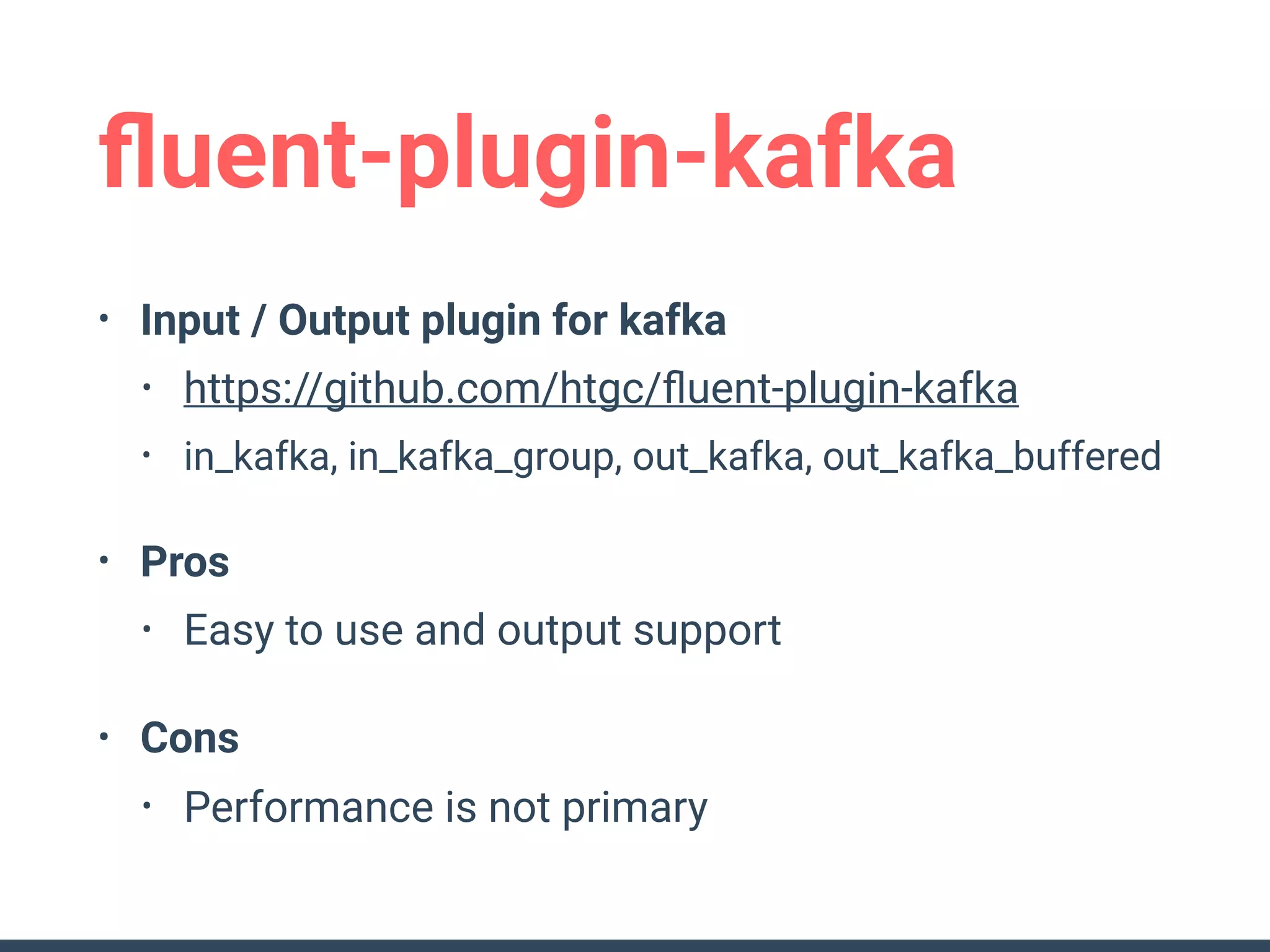

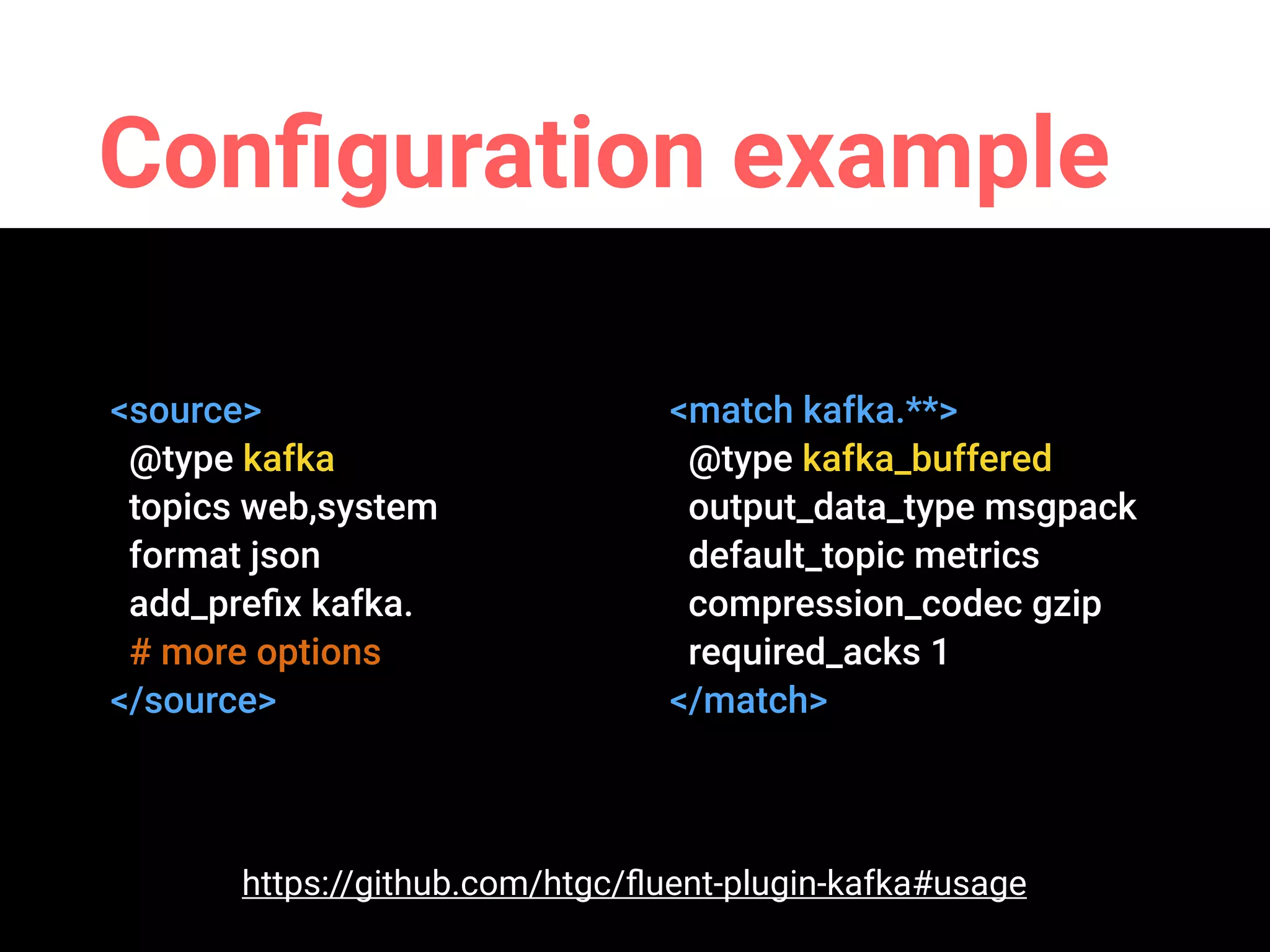

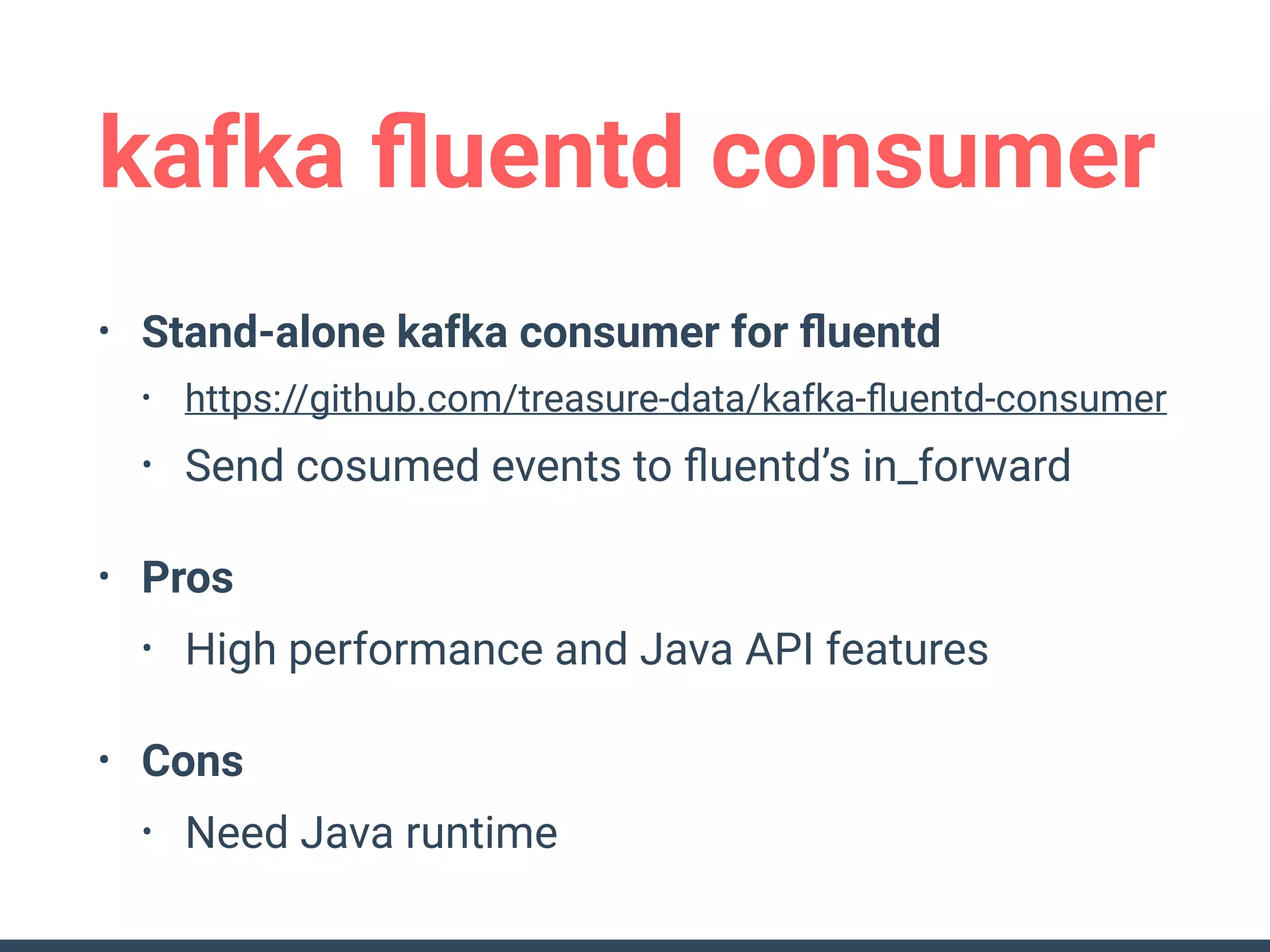

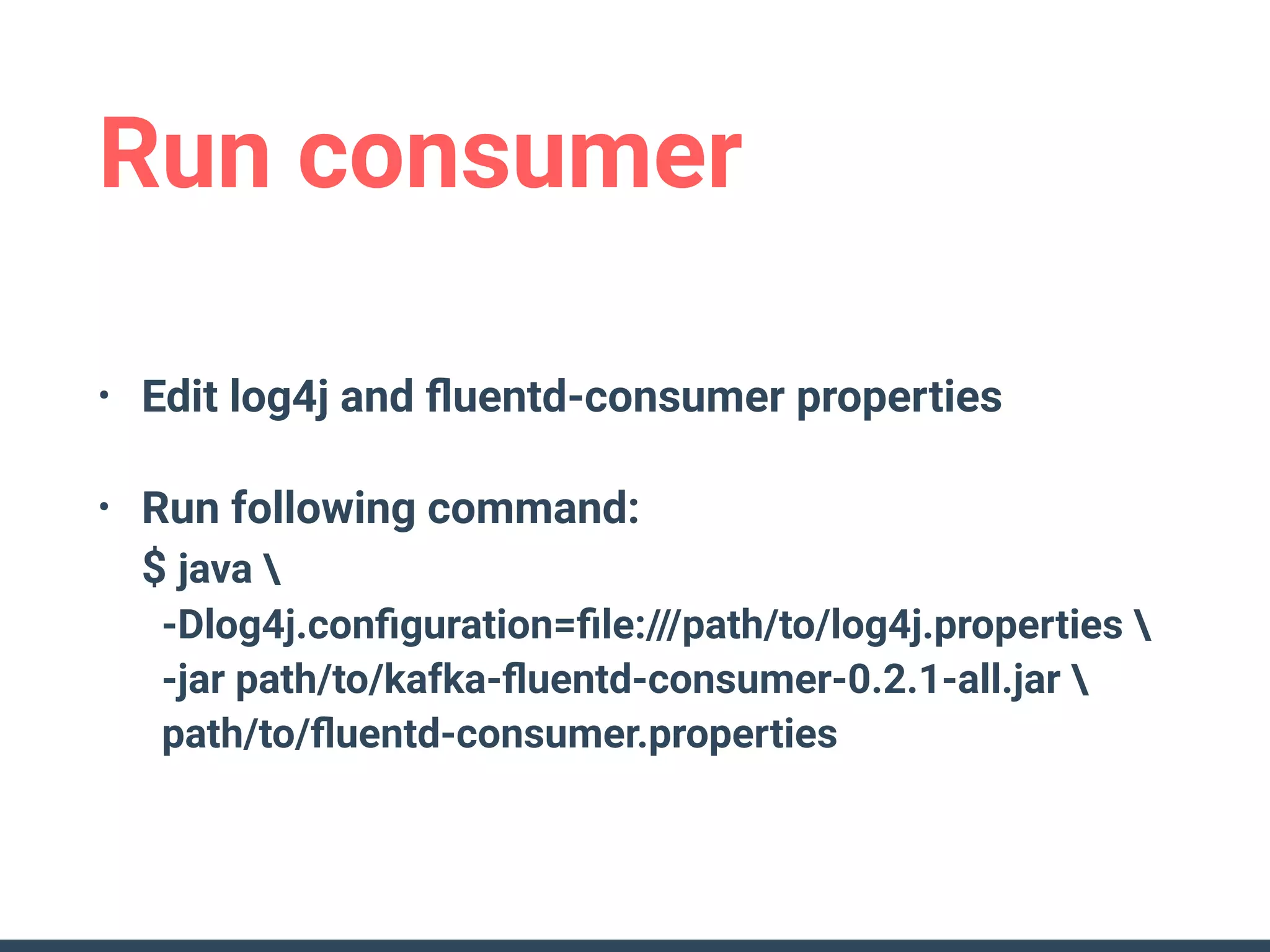

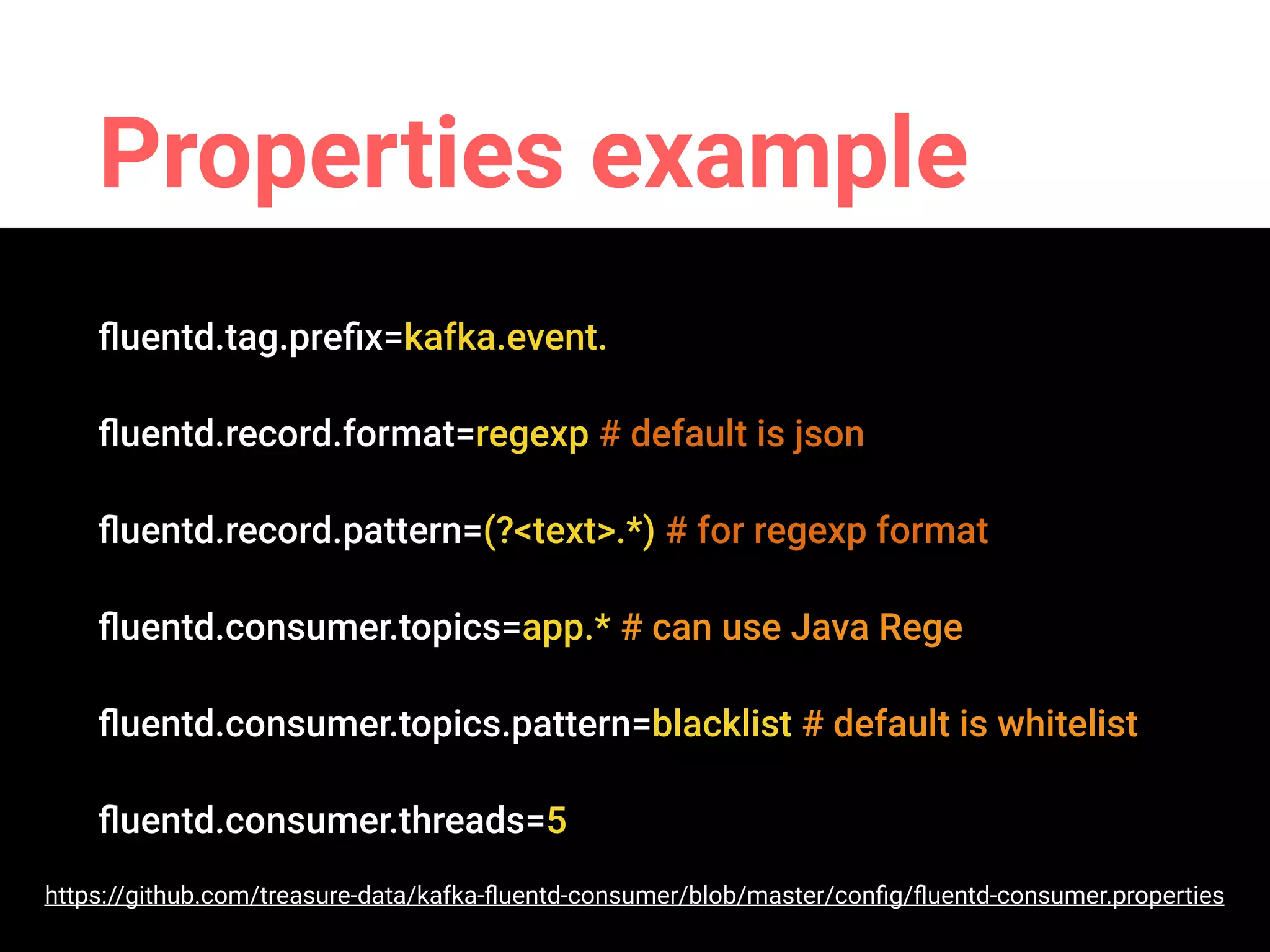

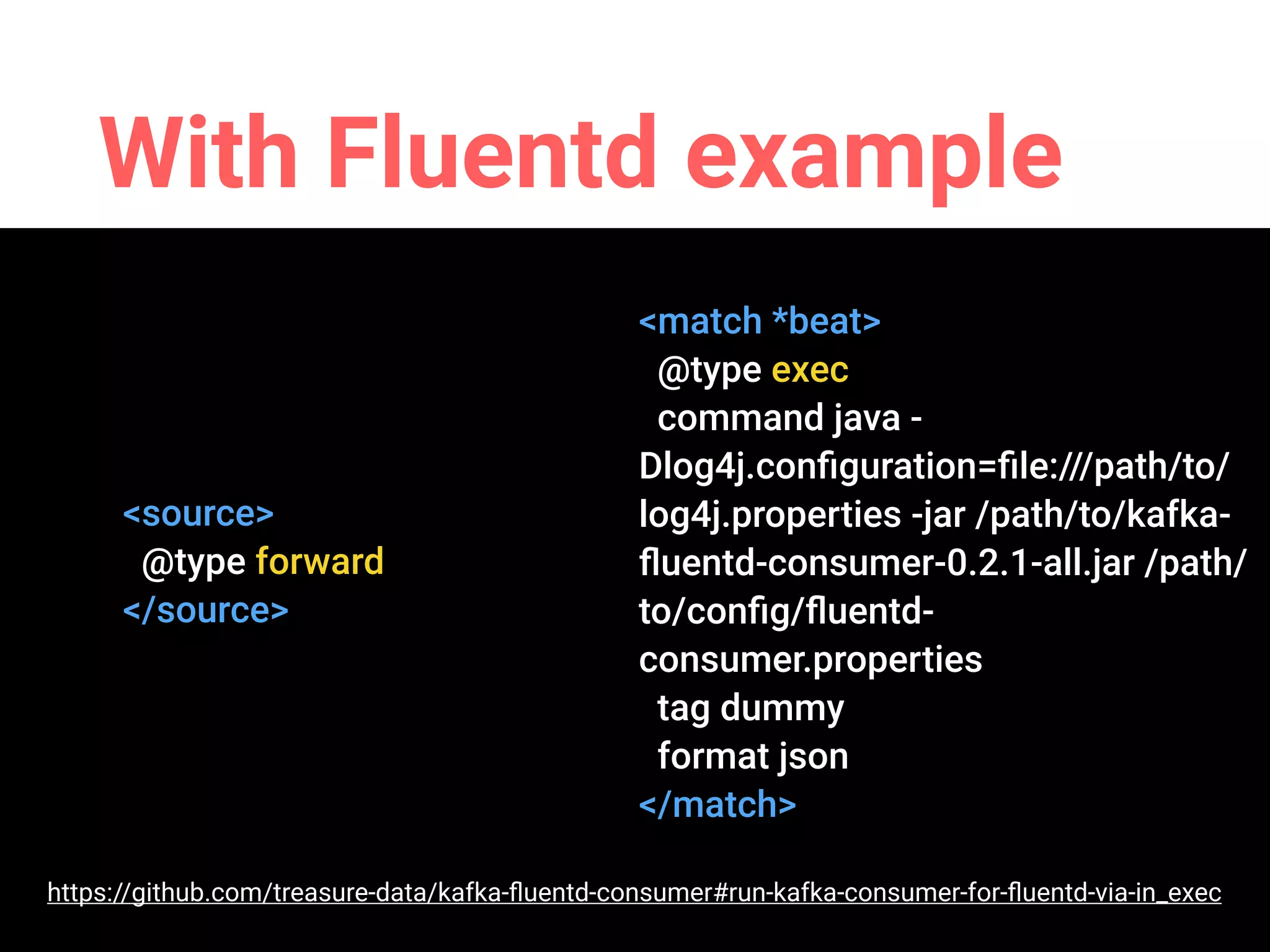

This document discusses using Fluentd to collect streaming data from Apache Kafka. It presents two approaches: 1) the fluent-plugin-kafka plugin, which lets Fluentd act as both a producer and a consumer of Kafka topics, and 2) the kafka-fluentd-consumer project, which runs a standalone Kafka consumer that sends events to Fluentd. Configuration examples are provided for both approaches. The document concludes that Fluentd and Kafka can be combined to build reliable and flexible data pipelines.
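The second approach, a standalone consumer that reads from Kafka and hands events to Fluentd, can be illustrated with a minimal Python sketch. This is not the kafka-fluentd-consumer implementation and it talks to neither Kafka nor Fluentd: `KafkaRecord`, `forward_to_fluentd`, the `emit` callback, and the `kafka.` tag prefix are all hypothetical names chosen for illustration, and it assumes that messages are JSON objects. It only shows the shape of the loop: decode each consumed message, derive a Fluentd tag from the topic, and pass a `(tag, time, record)` event downstream.

```python
import json
from typing import Callable, Iterable, NamedTuple


class KafkaRecord(NamedTuple):
    """Hypothetical stand-in for one message returned by a Kafka consumer."""
    topic: str
    value: bytes
    timestamp_ms: int


def forward_to_fluentd(
    records: Iterable[KafkaRecord],
    emit: Callable[[str, int, dict], None],
    tag_prefix: str = "kafka.",  # assumed tagging scheme, not taken from the source
) -> int:
    """Decode JSON messages and hand them to `emit` as (tag, time, record).

    Returns the number of events forwarded. Messages that are not valid
    JSON objects are skipped rather than forwarded.
    """
    forwarded = 0
    for rec in records:
        try:
            event = json.loads(rec.value)
        except ValueError:  # covers invalid JSON and invalid UTF-8
            continue
        if not isinstance(event, dict):
            continue
        # Fluentd event times are in seconds; Kafka timestamps are in milliseconds.
        emit(tag_prefix + rec.topic, rec.timestamp_ms // 1000, event)
        forwarded += 1
    return forwarded


# Usage with in-memory stand-ins for both the consumer and the Fluentd sender.
sent = []
records = [
    KafkaRecord("app_event", b'{"user": "a"}', 1700000000123),
    KafkaRecord("app_event", b"not json", 1700000000456),
]
count = forward_to_fluentd(records, lambda tag, ts, ev: sent.append((tag, ts, ev)))
```

In a real deployment, `records` would come from a Kafka consumer client and `emit` would send to Fluentd's forward input. Keeping both as parameters lets the decode-and-tag logic be tested without either service running.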