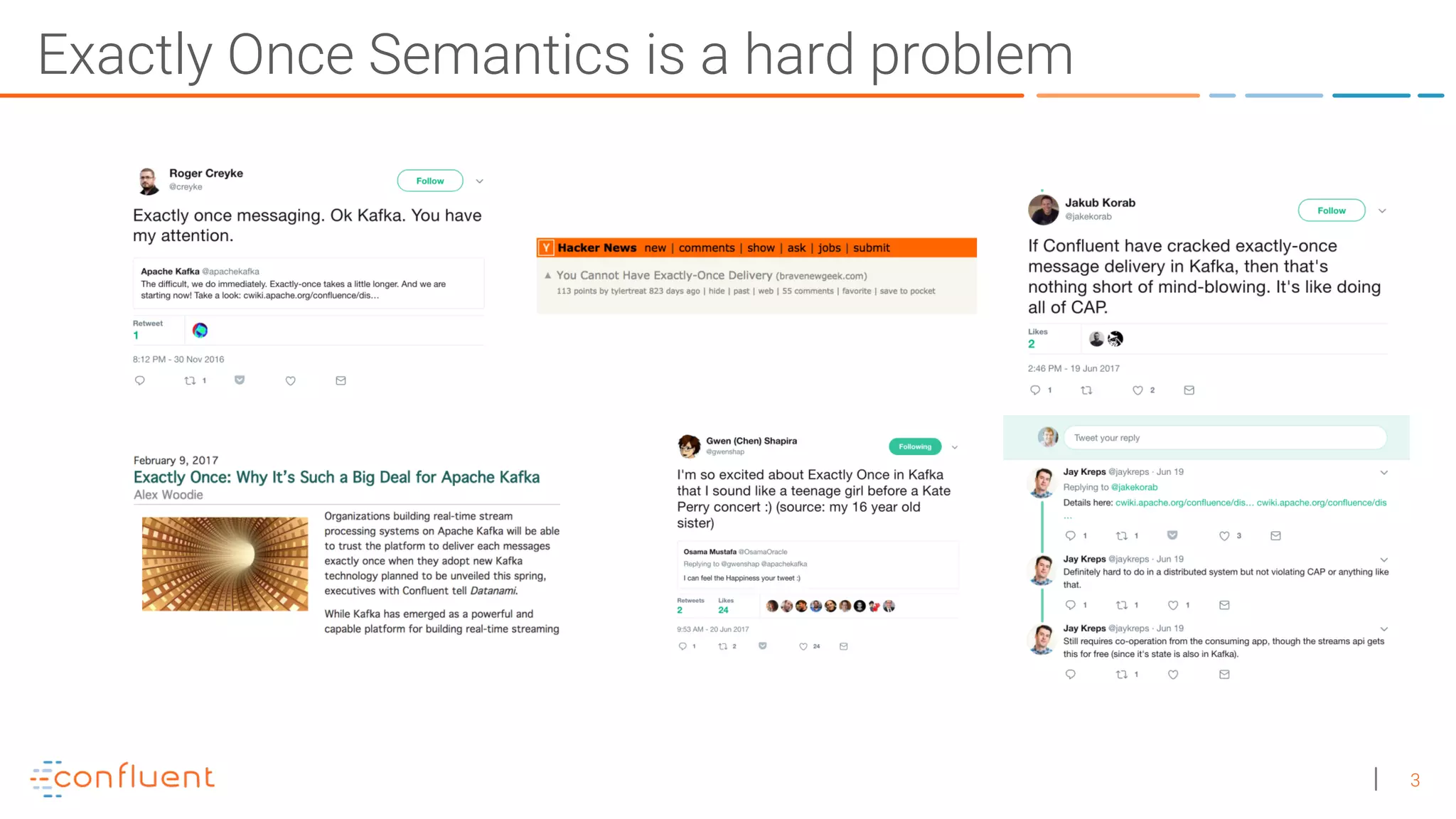

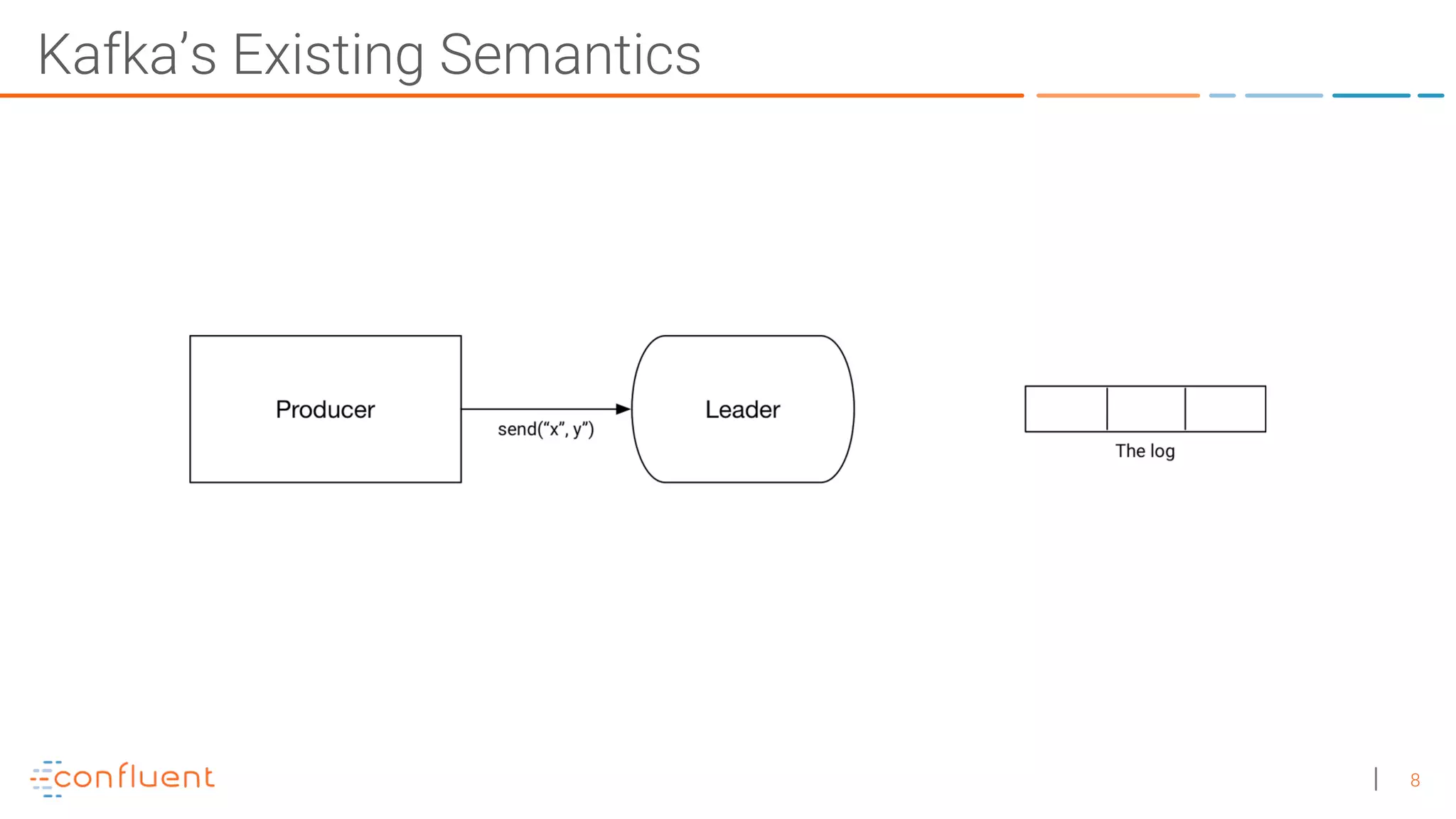

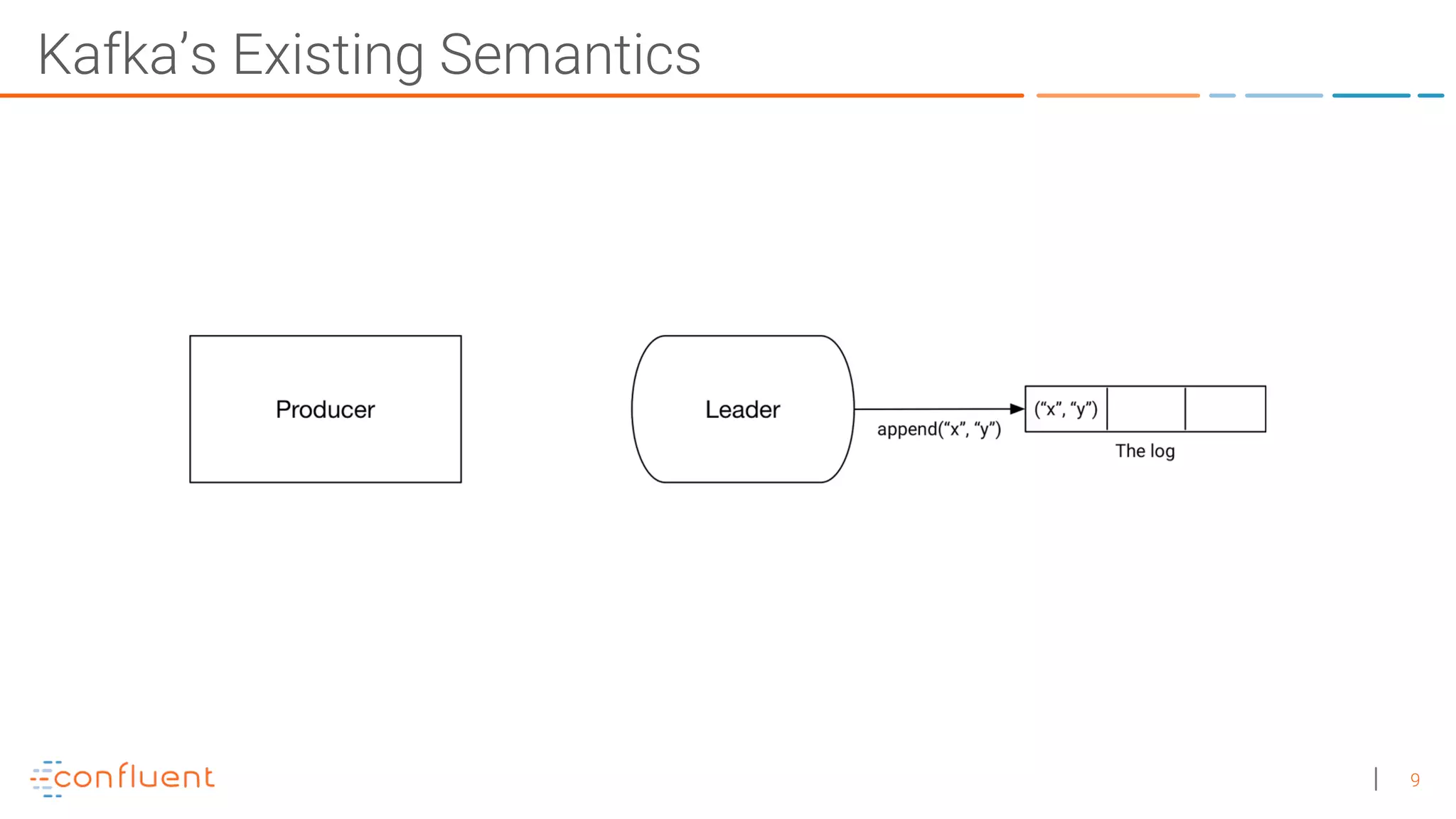

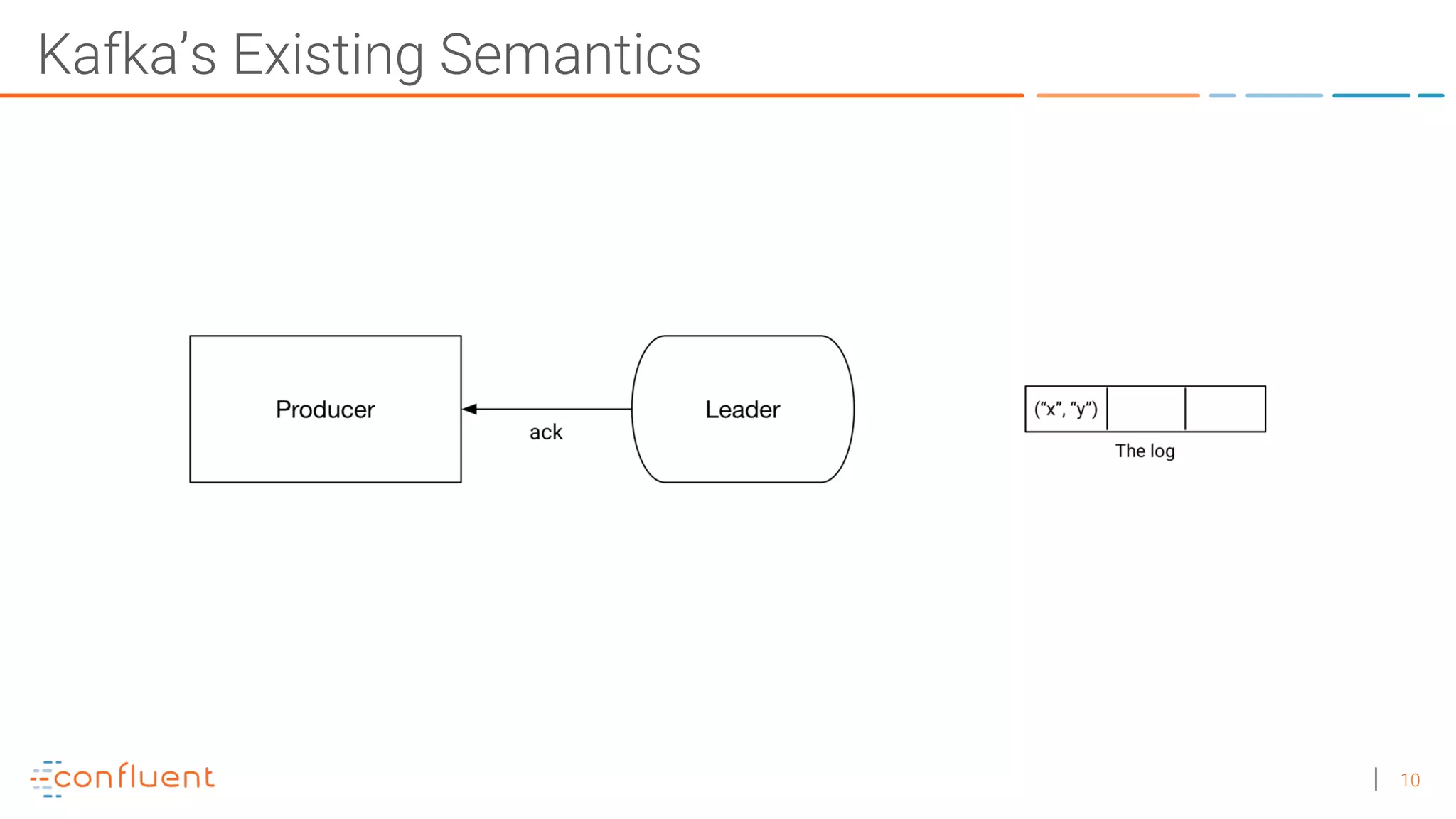

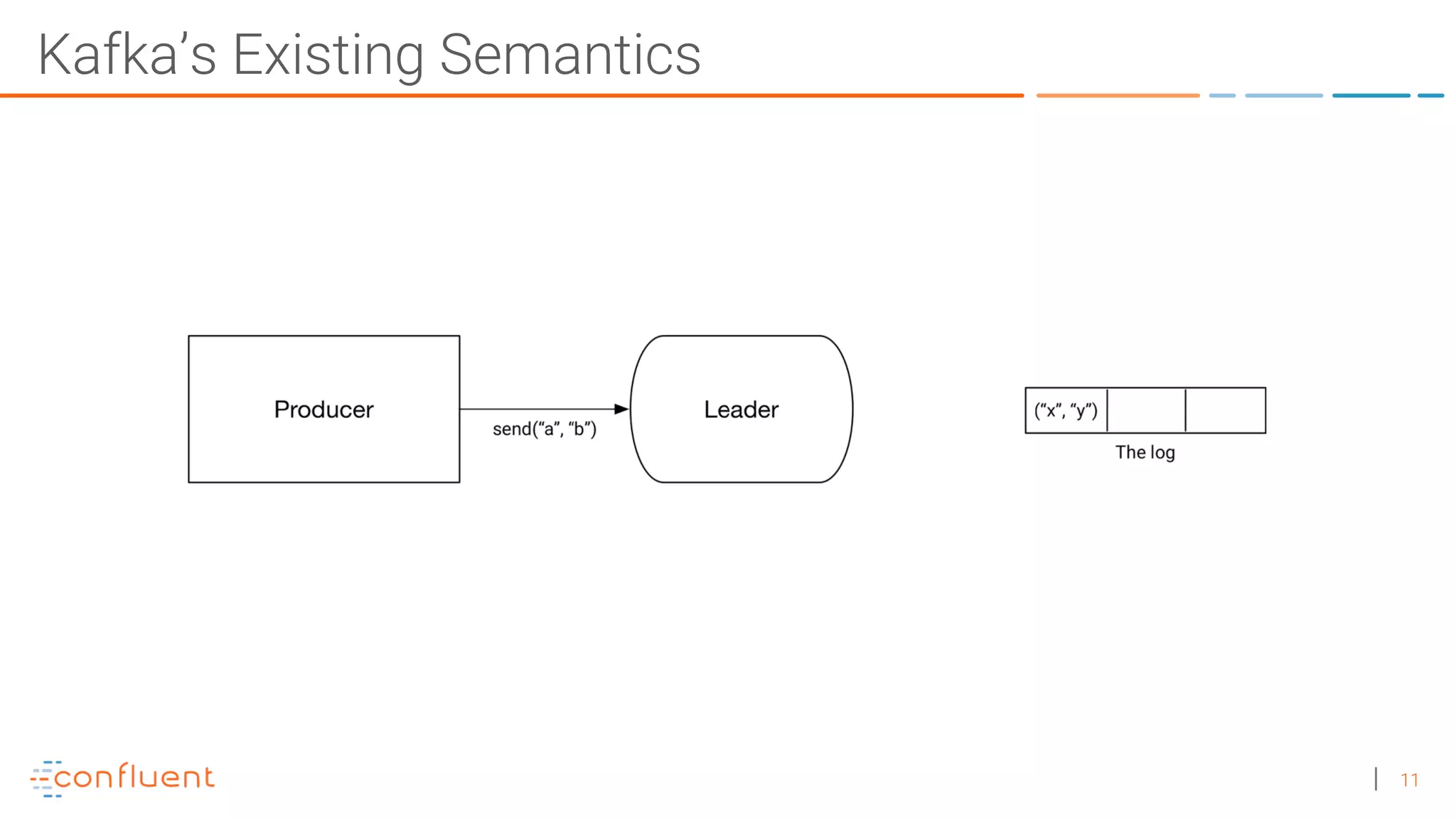

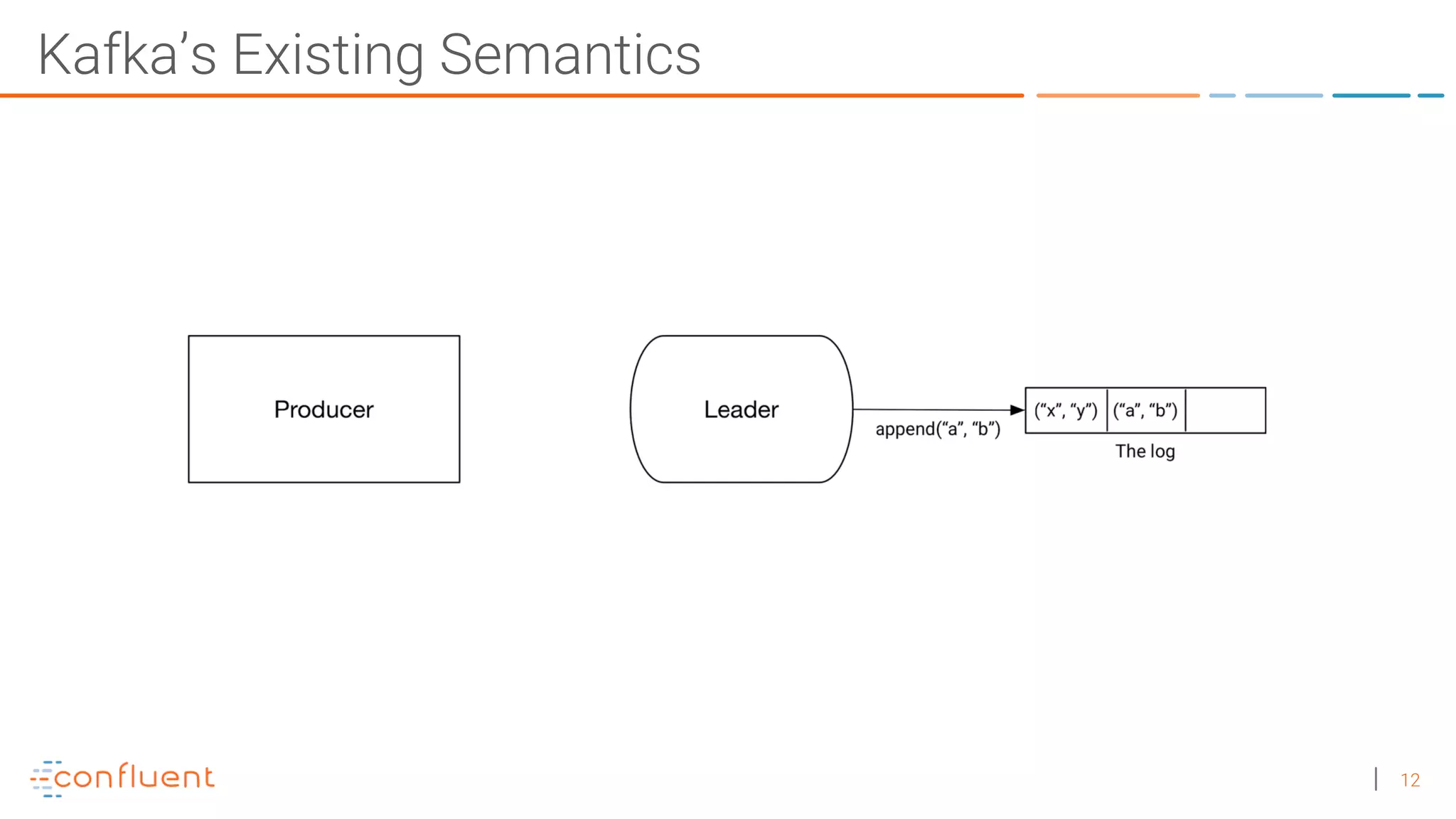

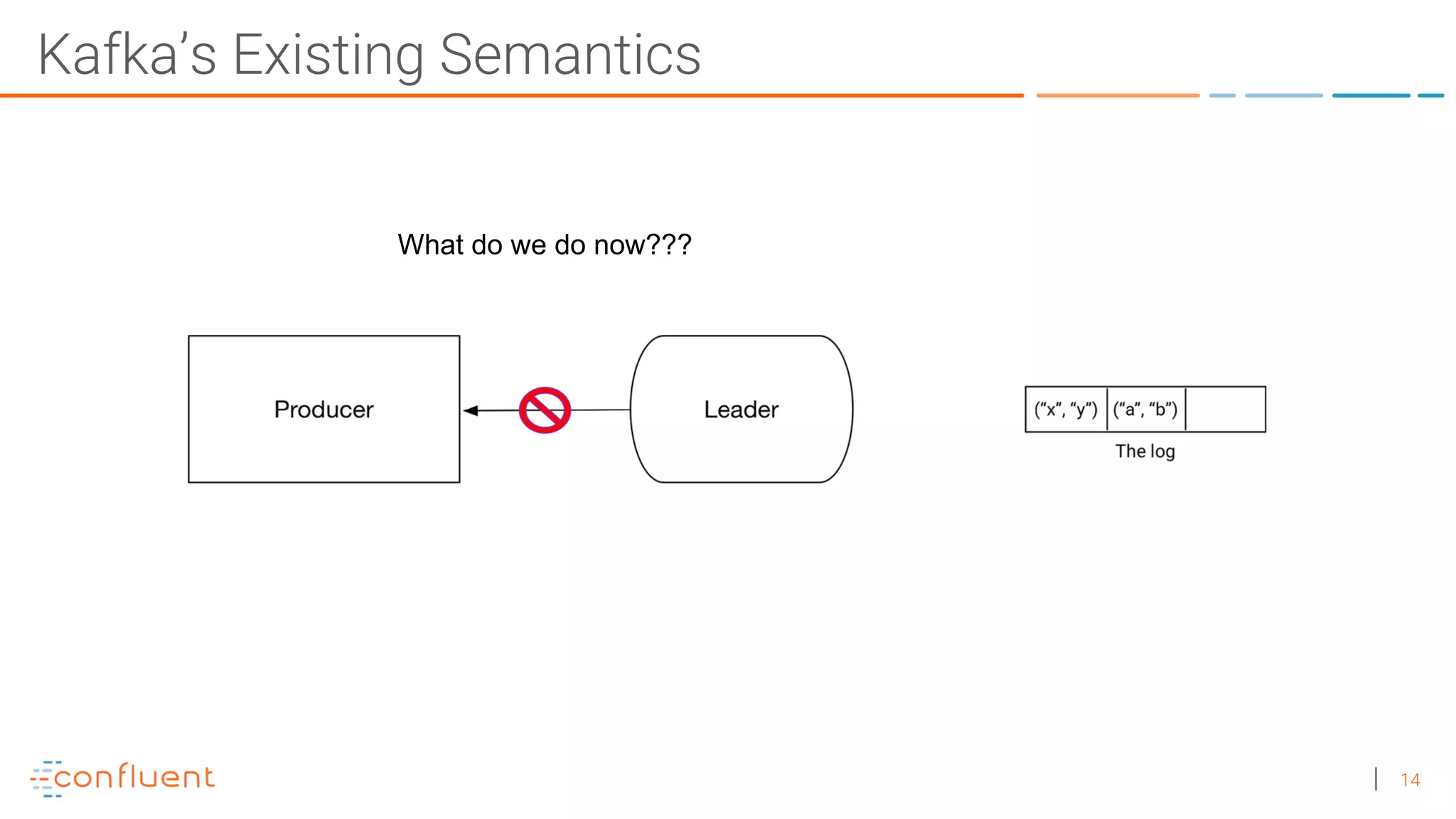

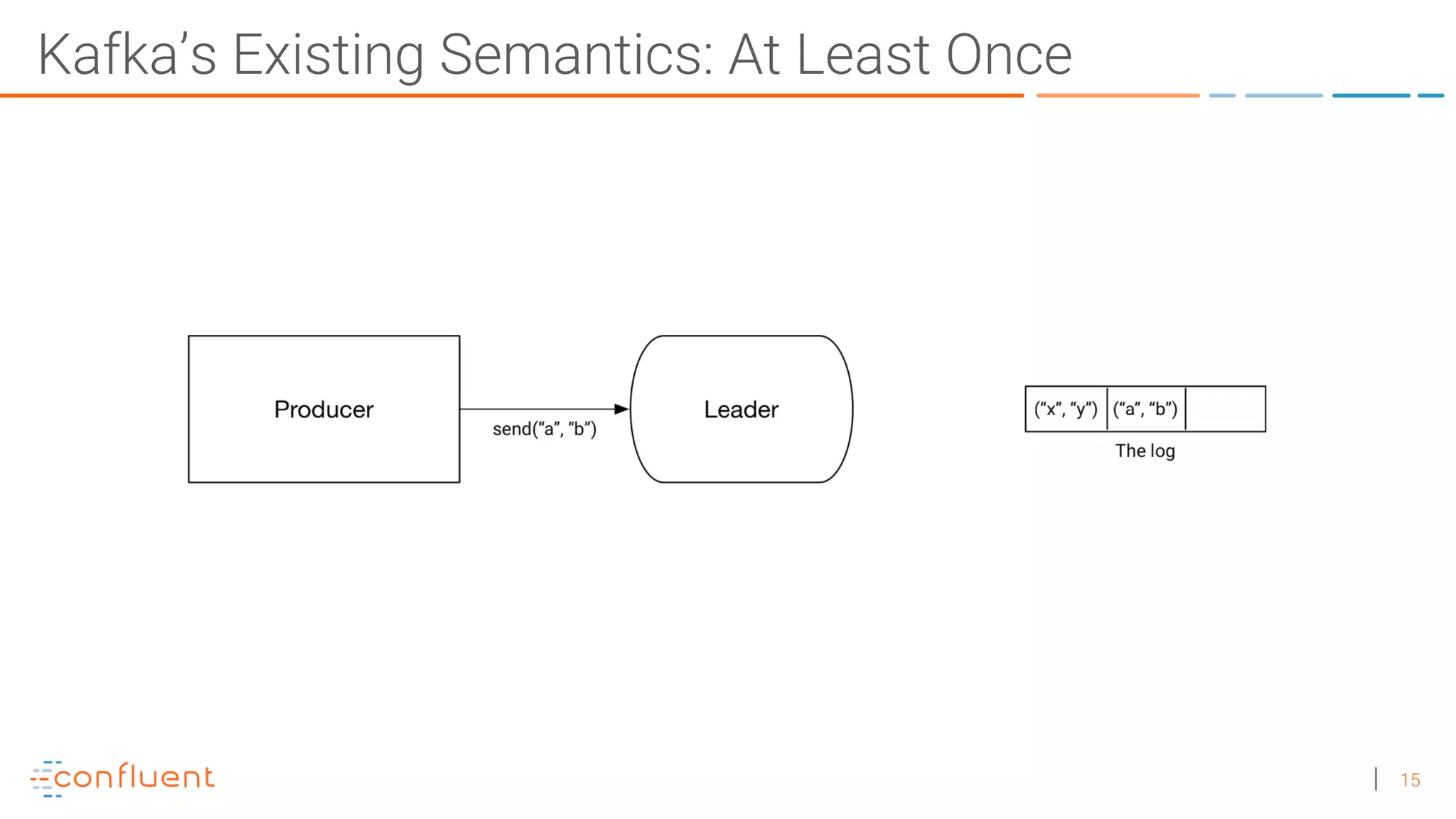

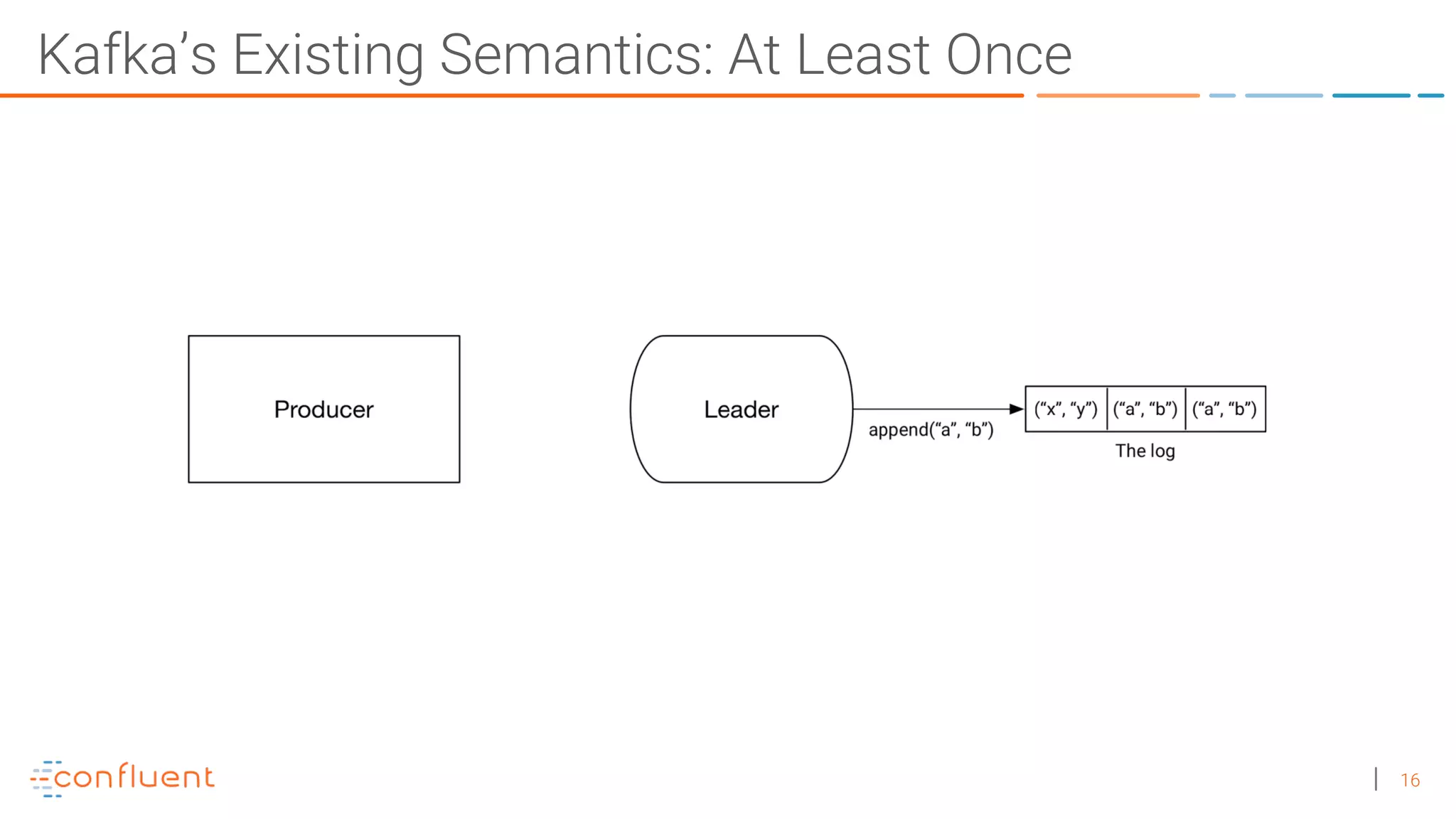

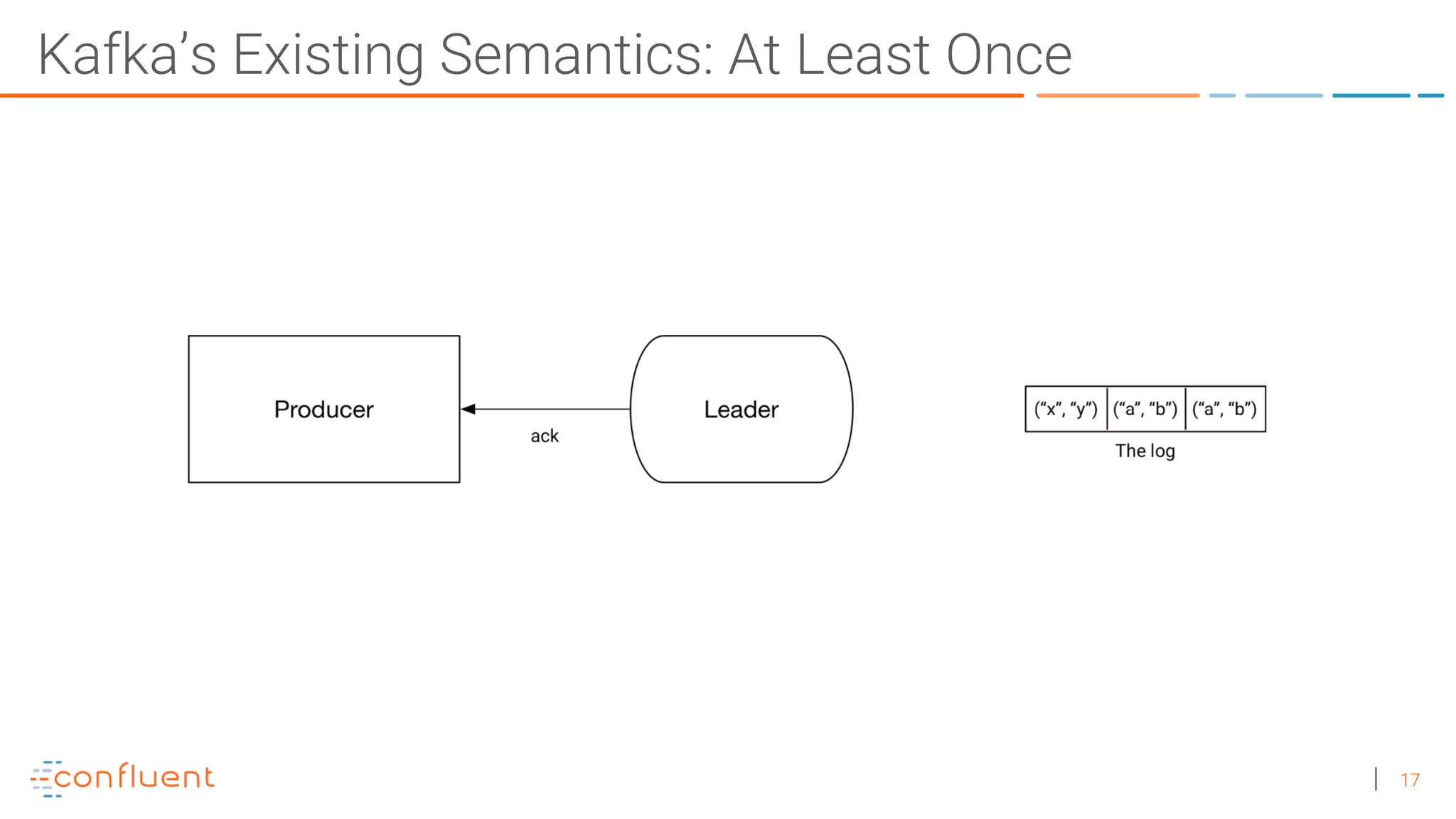

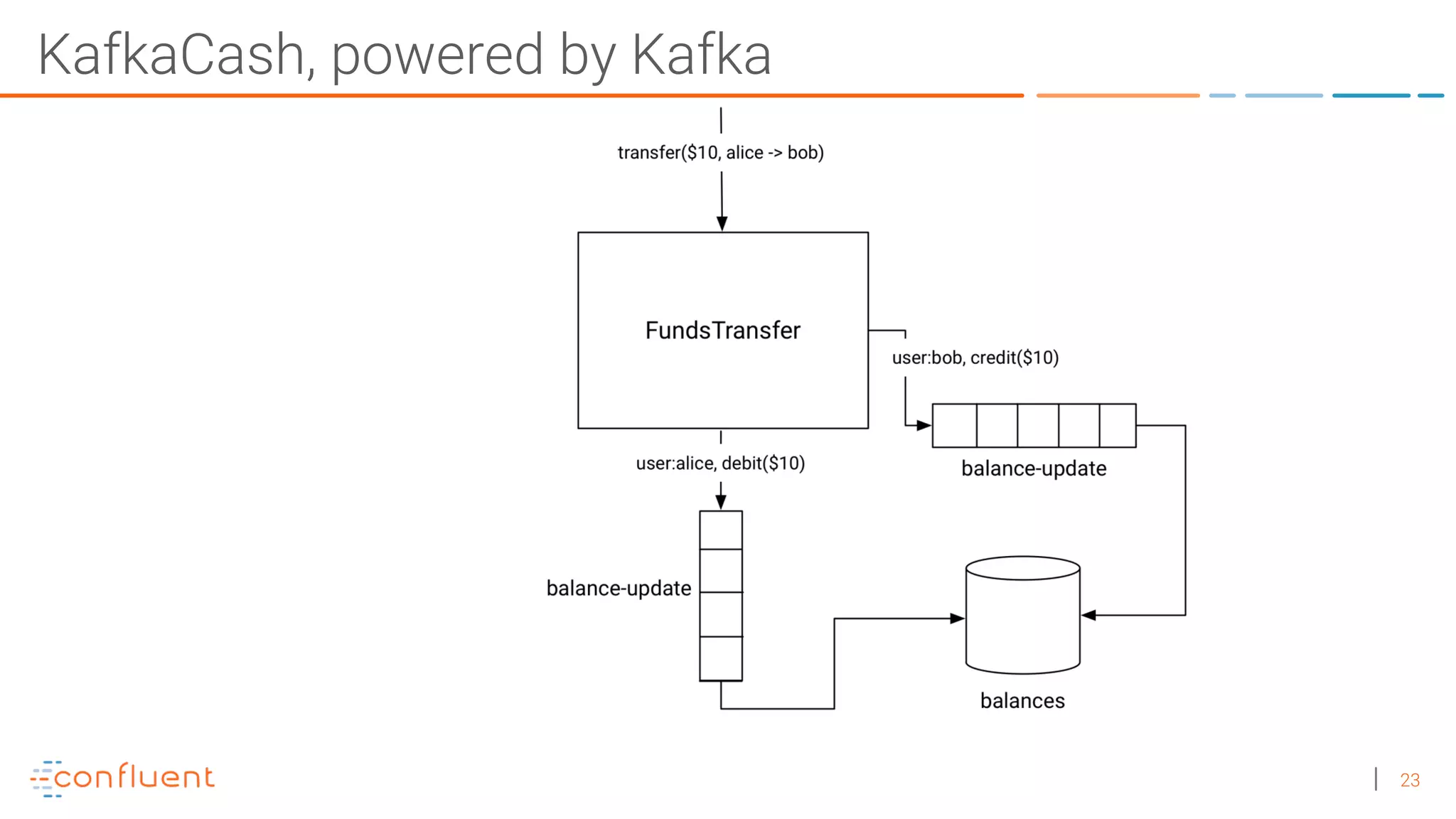

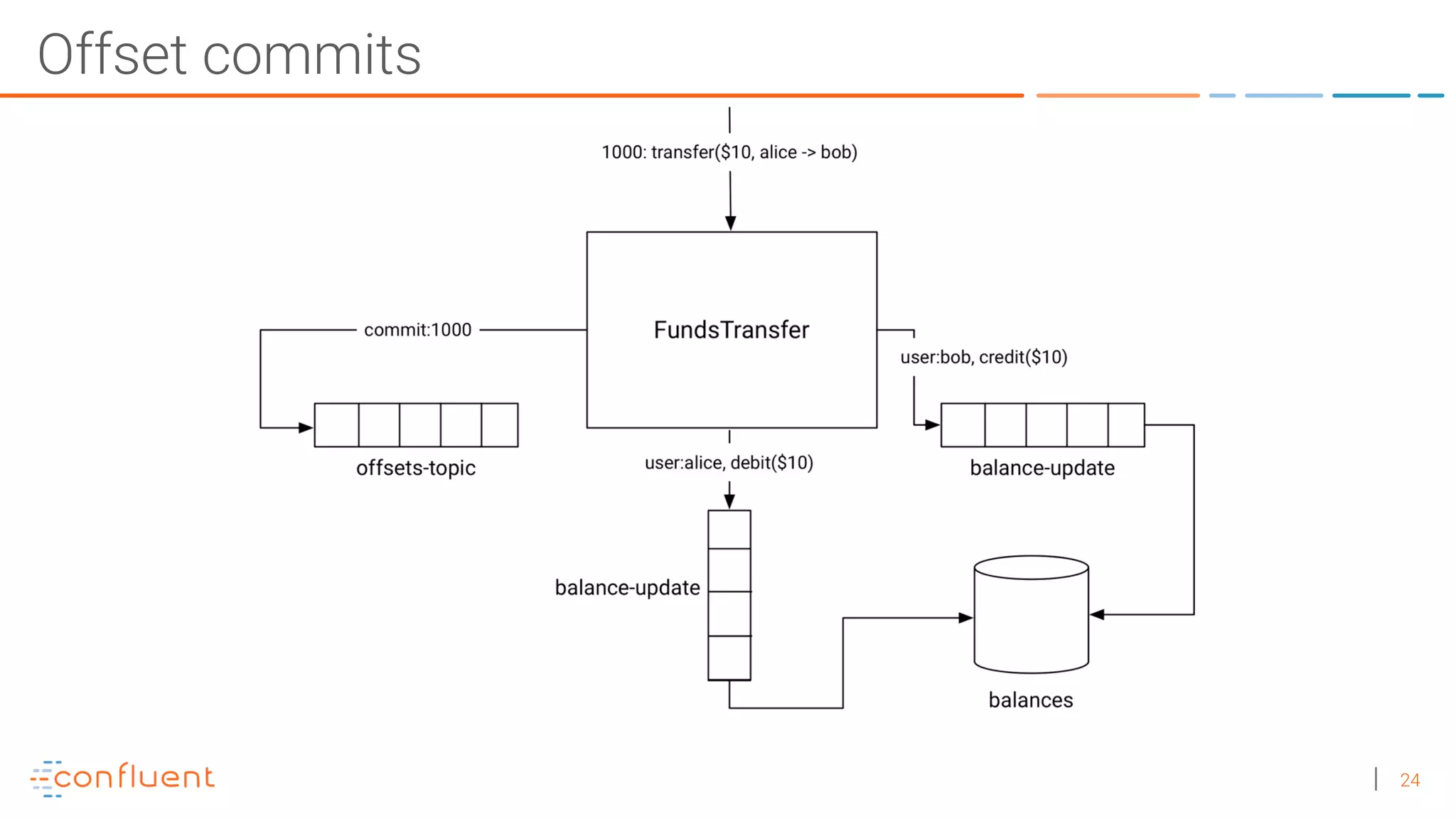

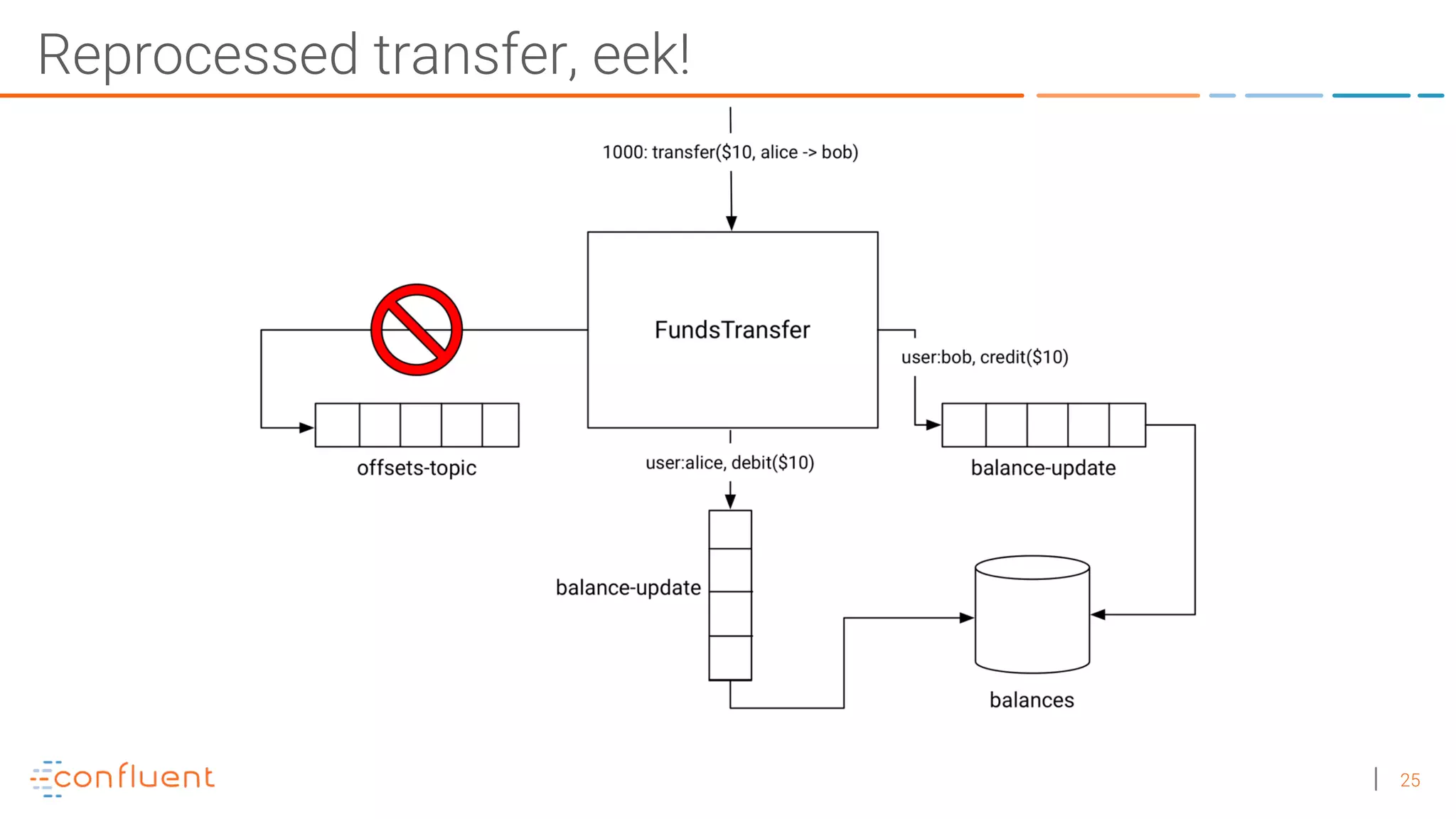

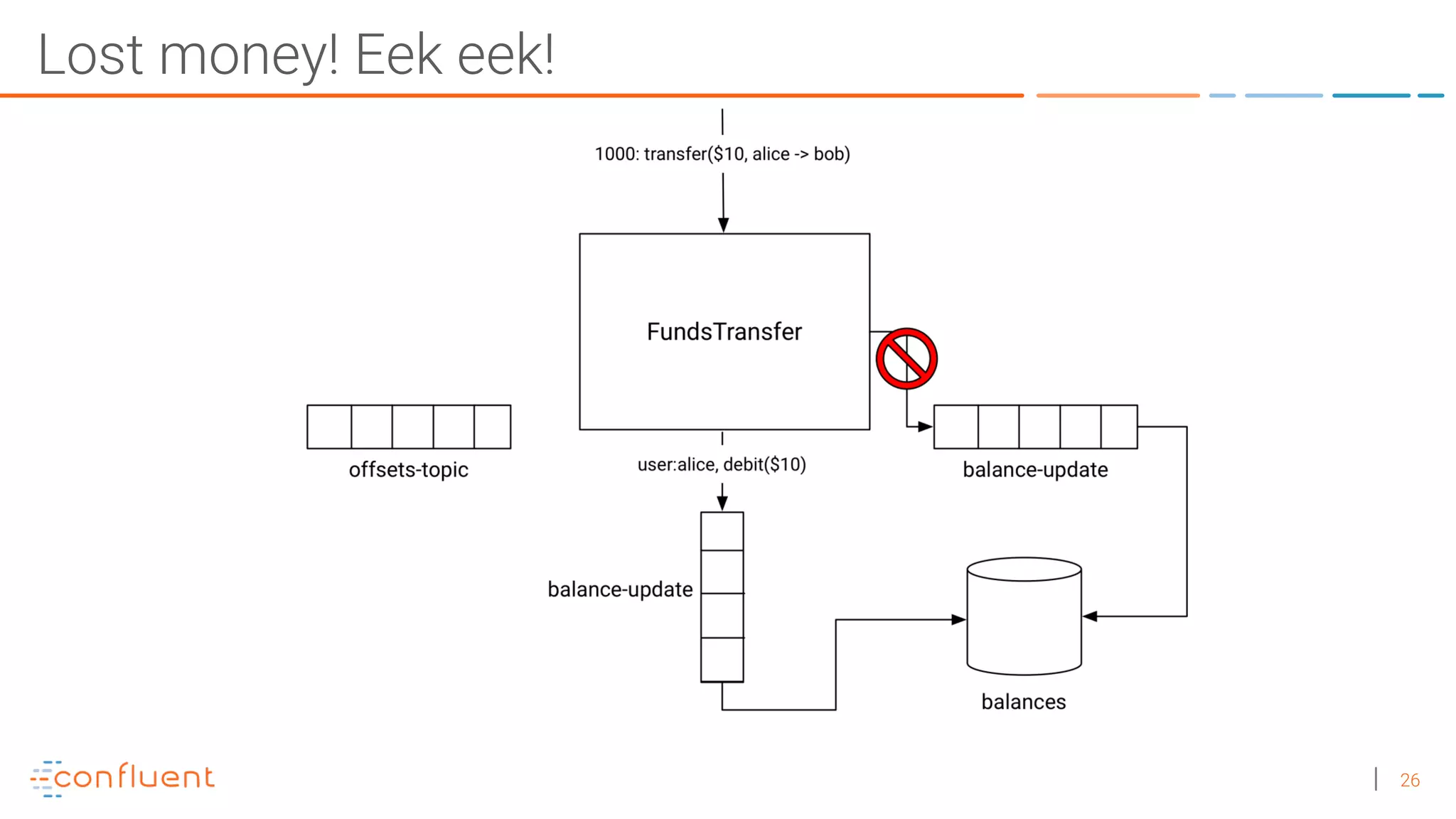

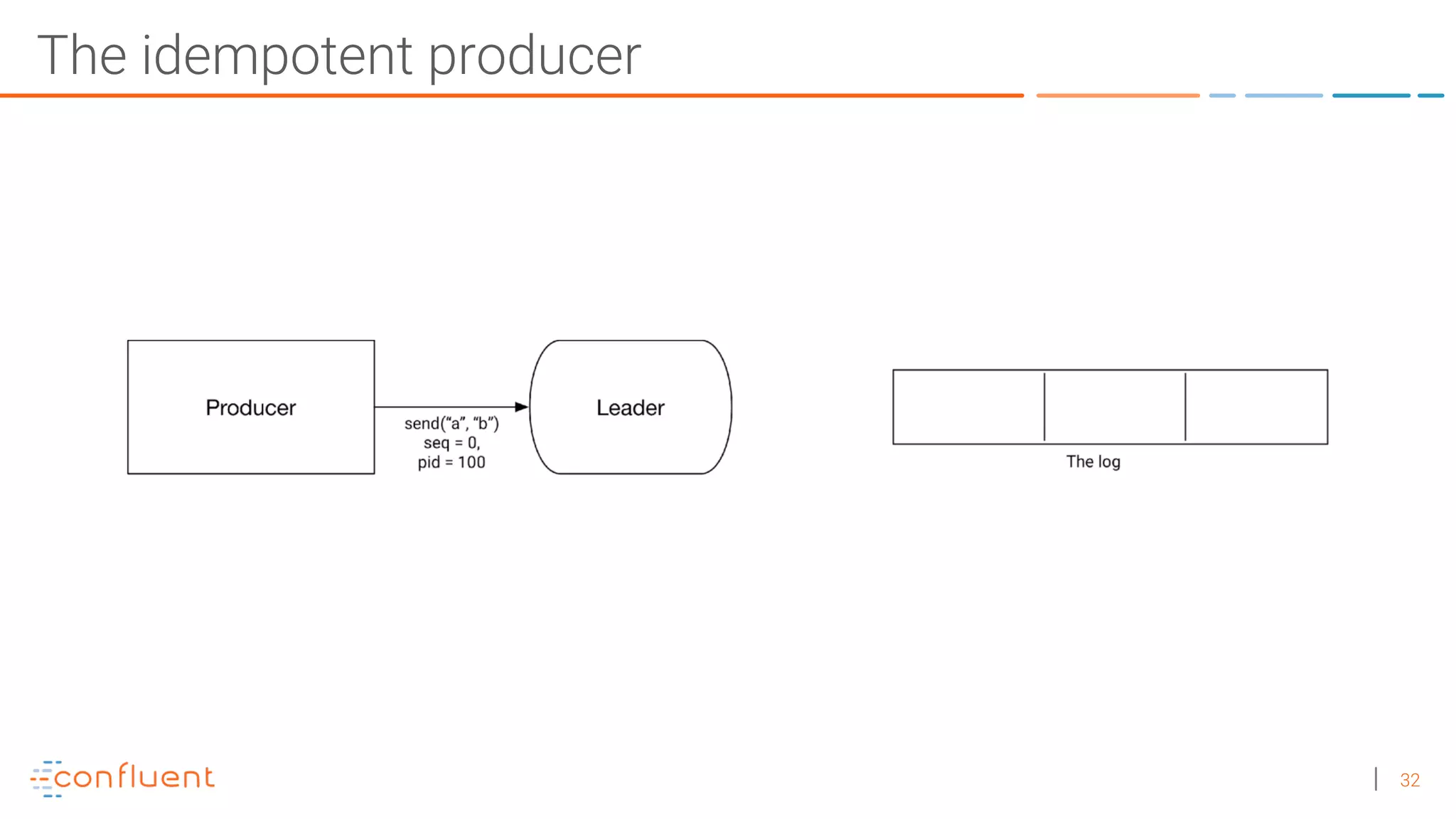

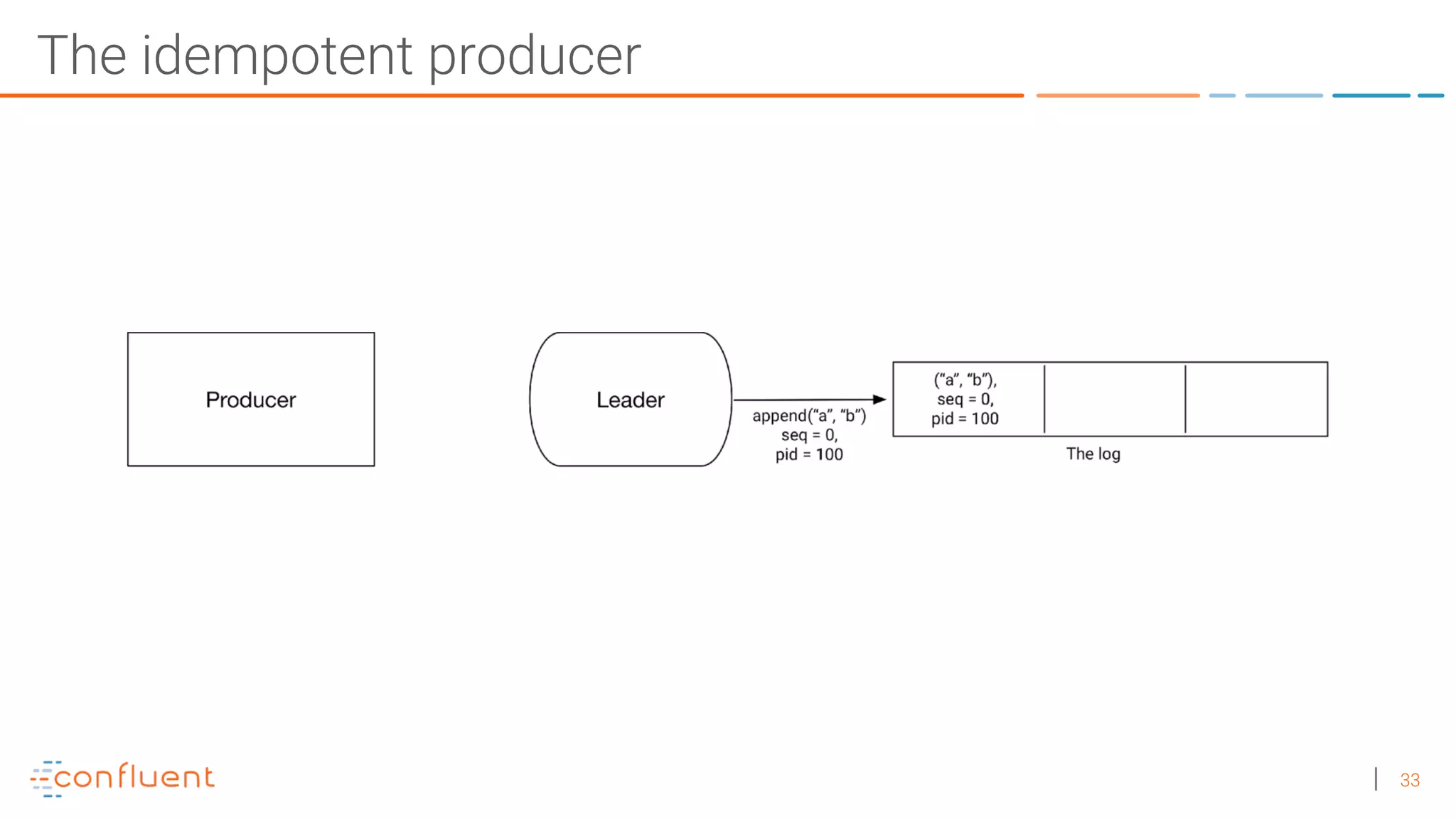

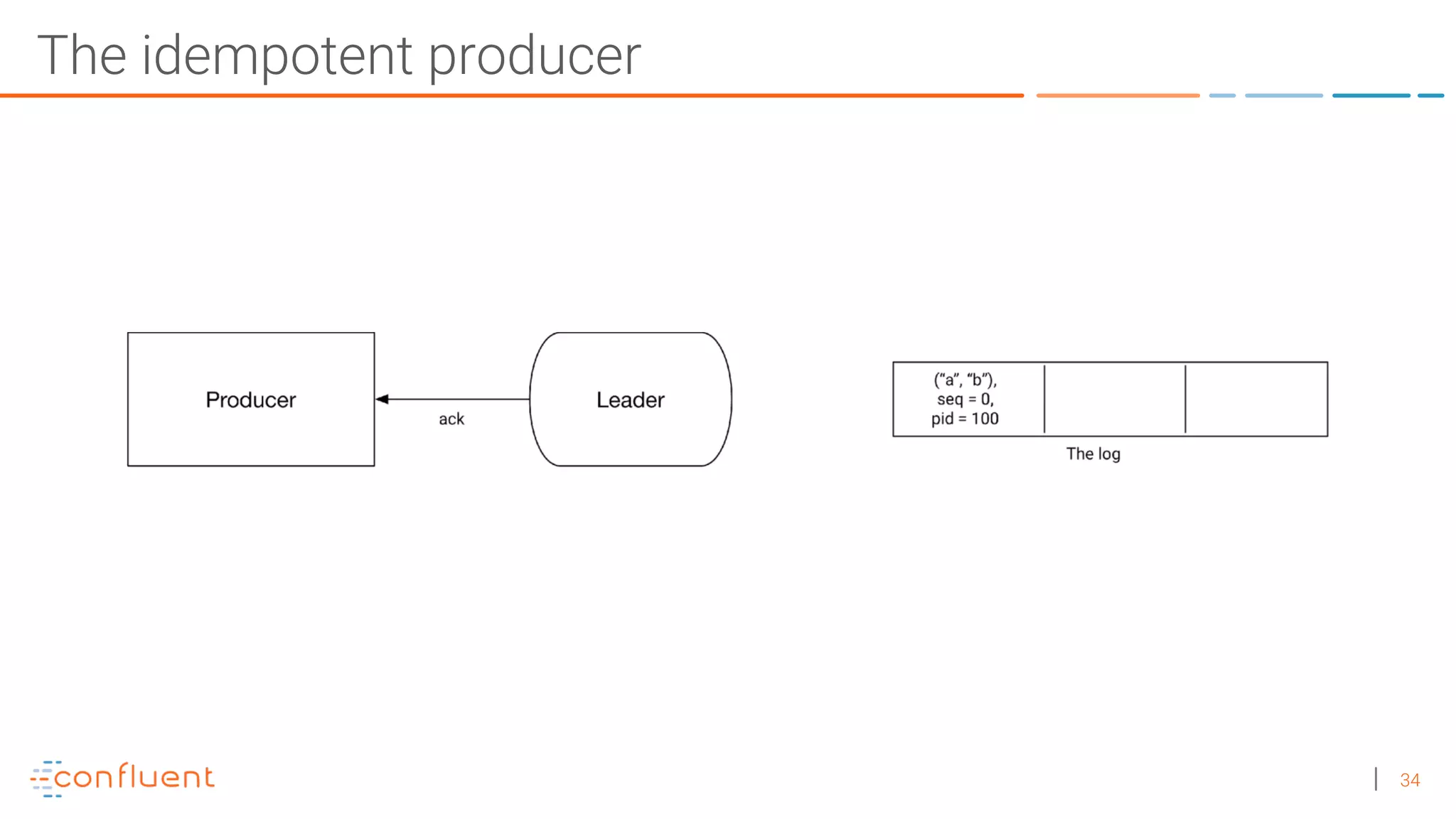

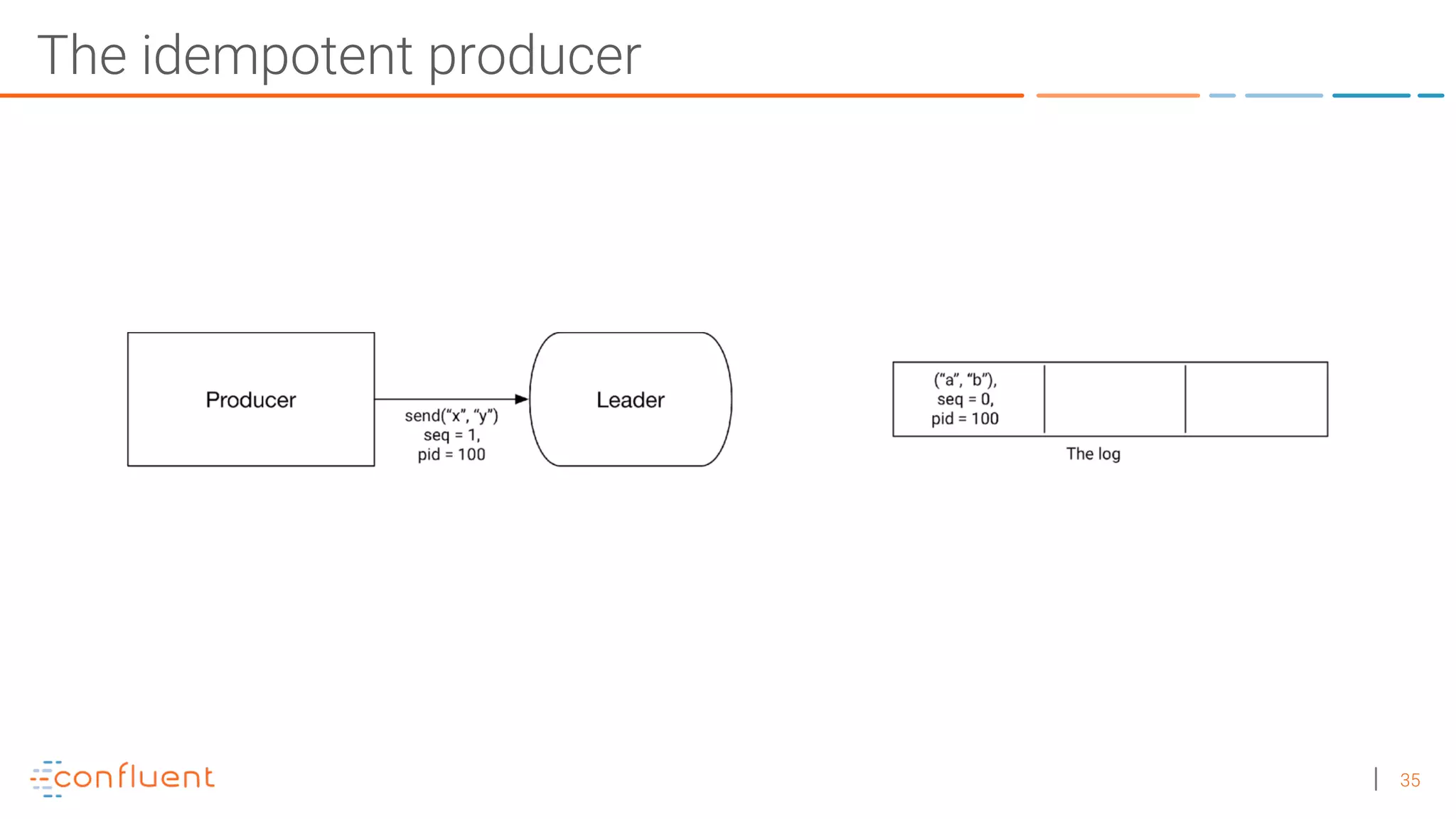

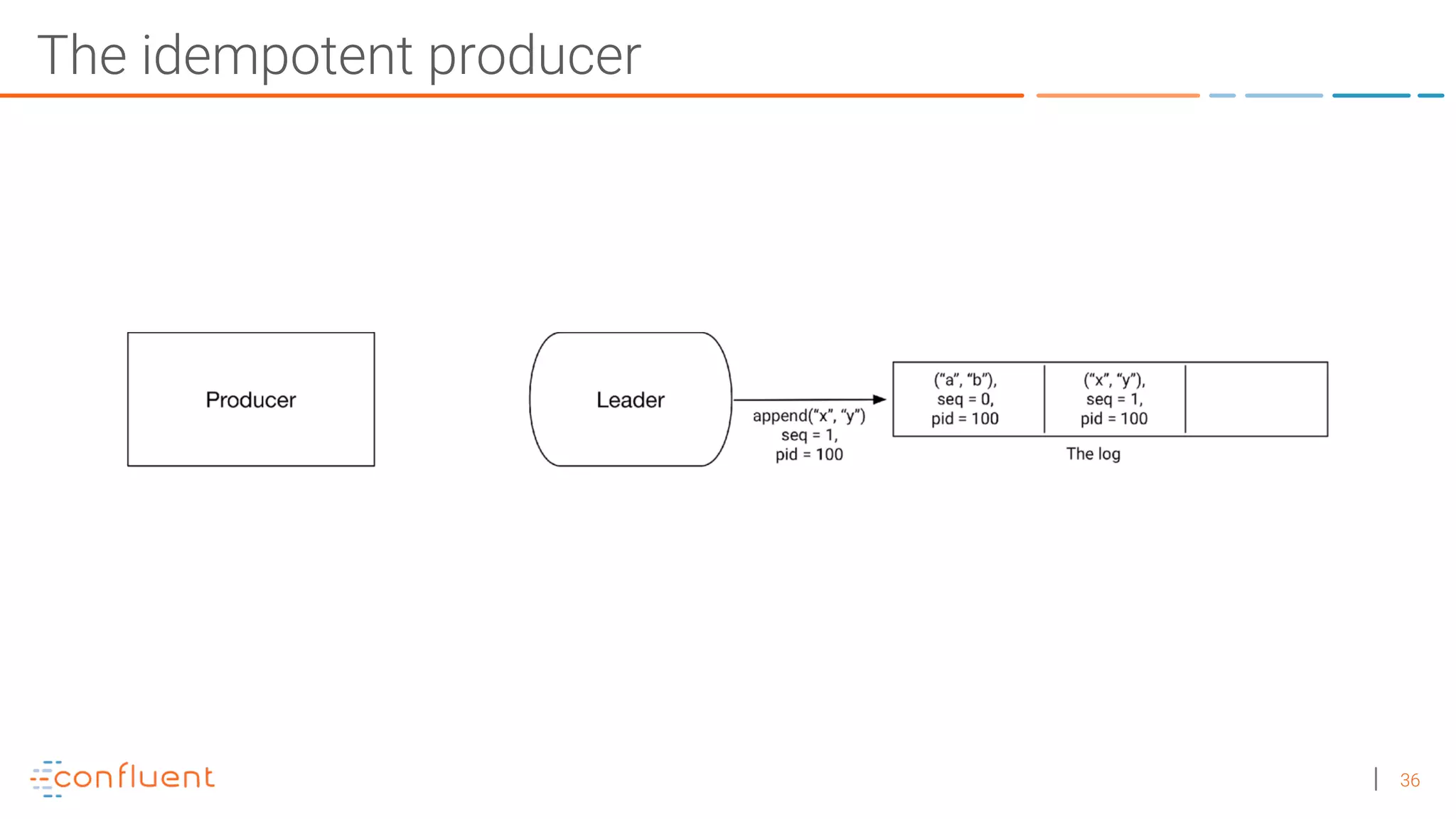

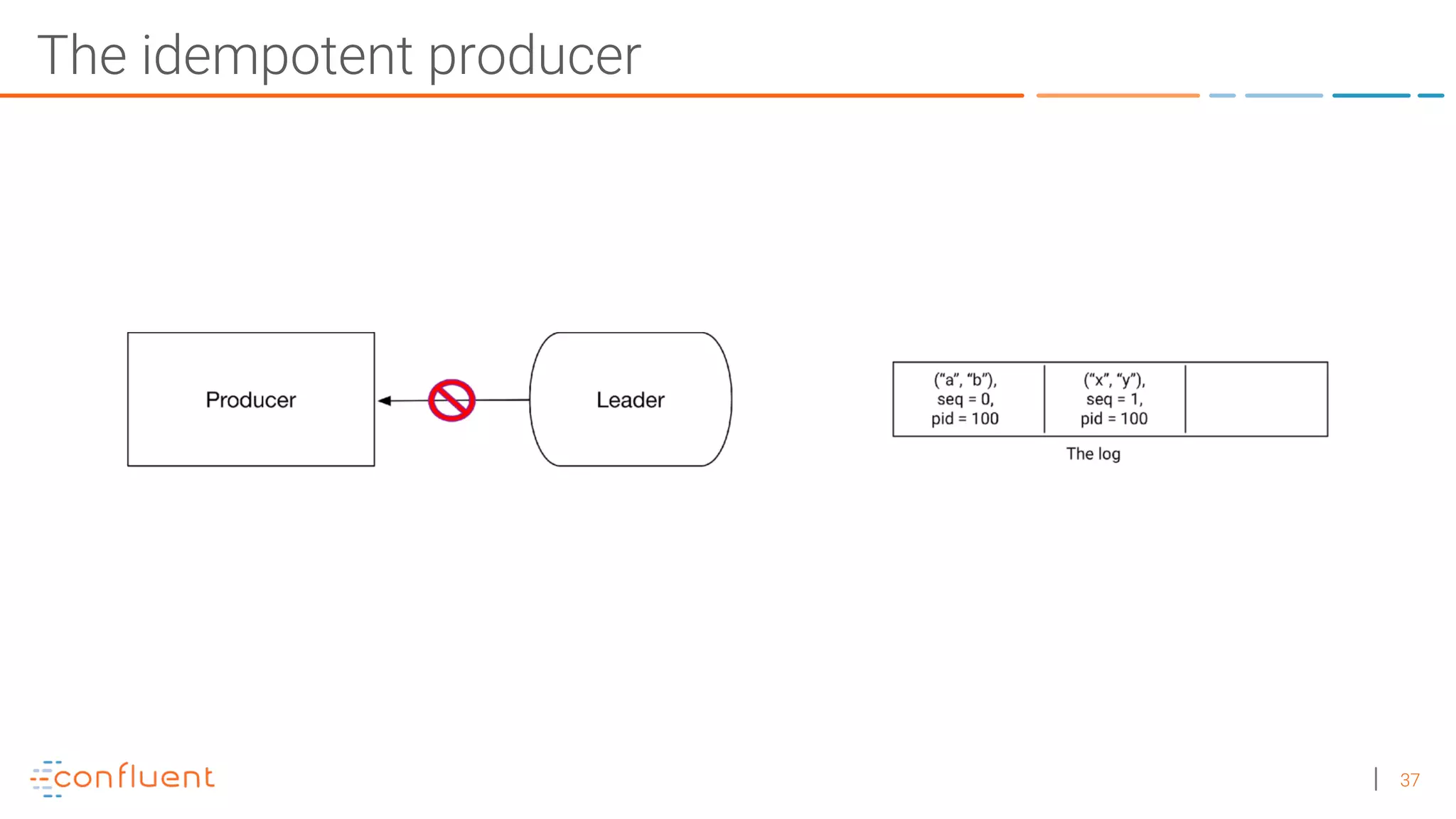

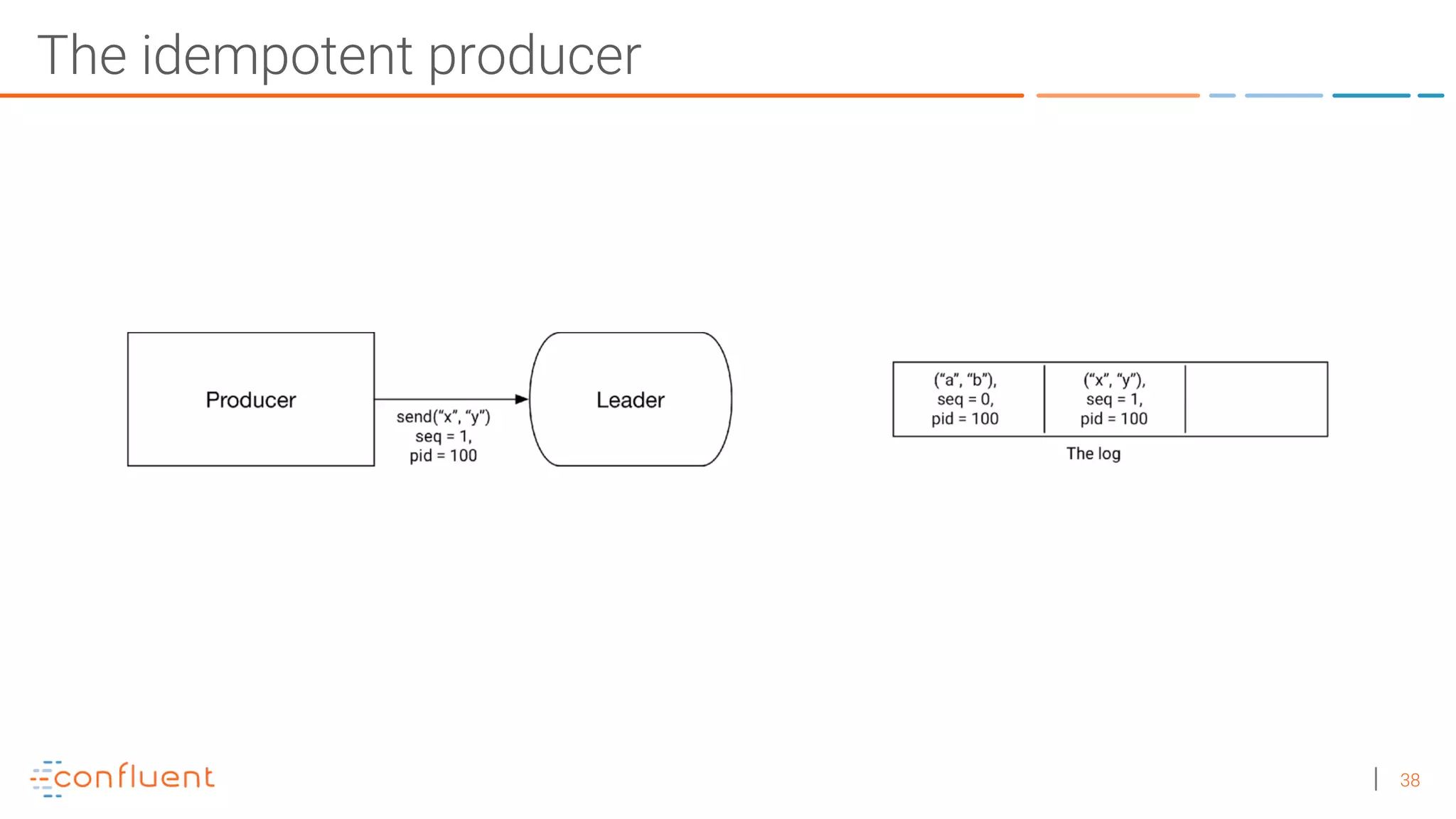

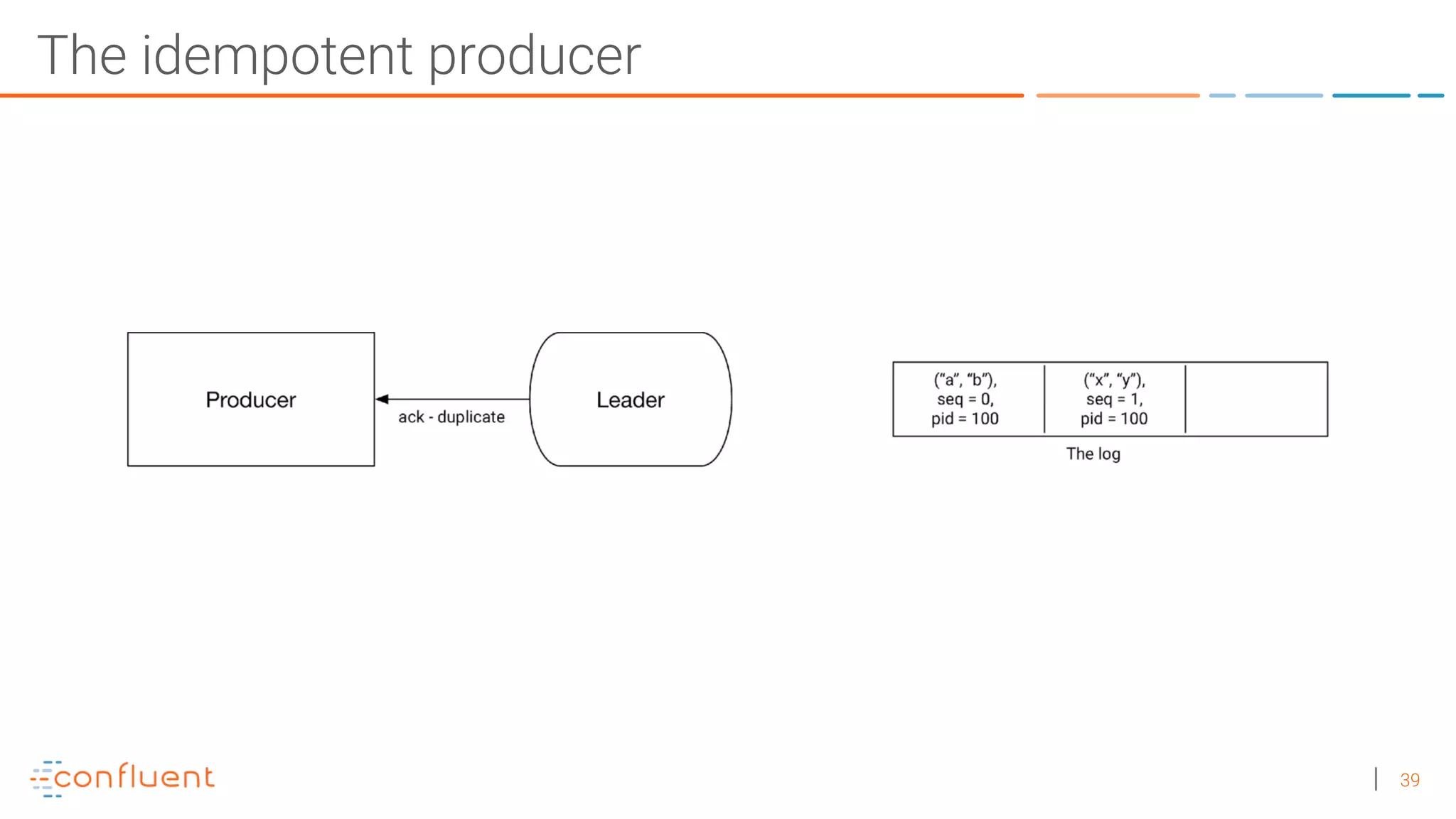

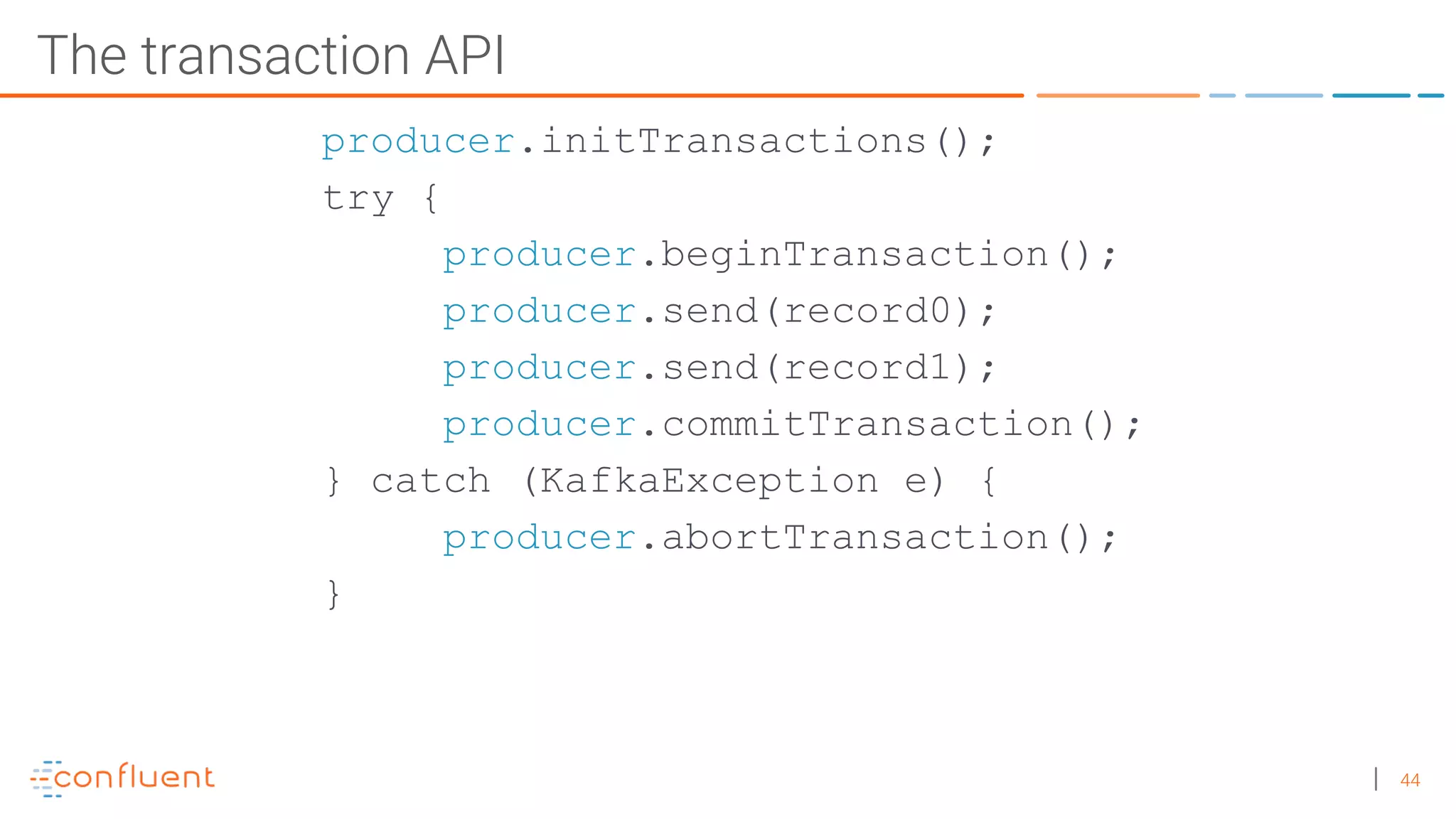

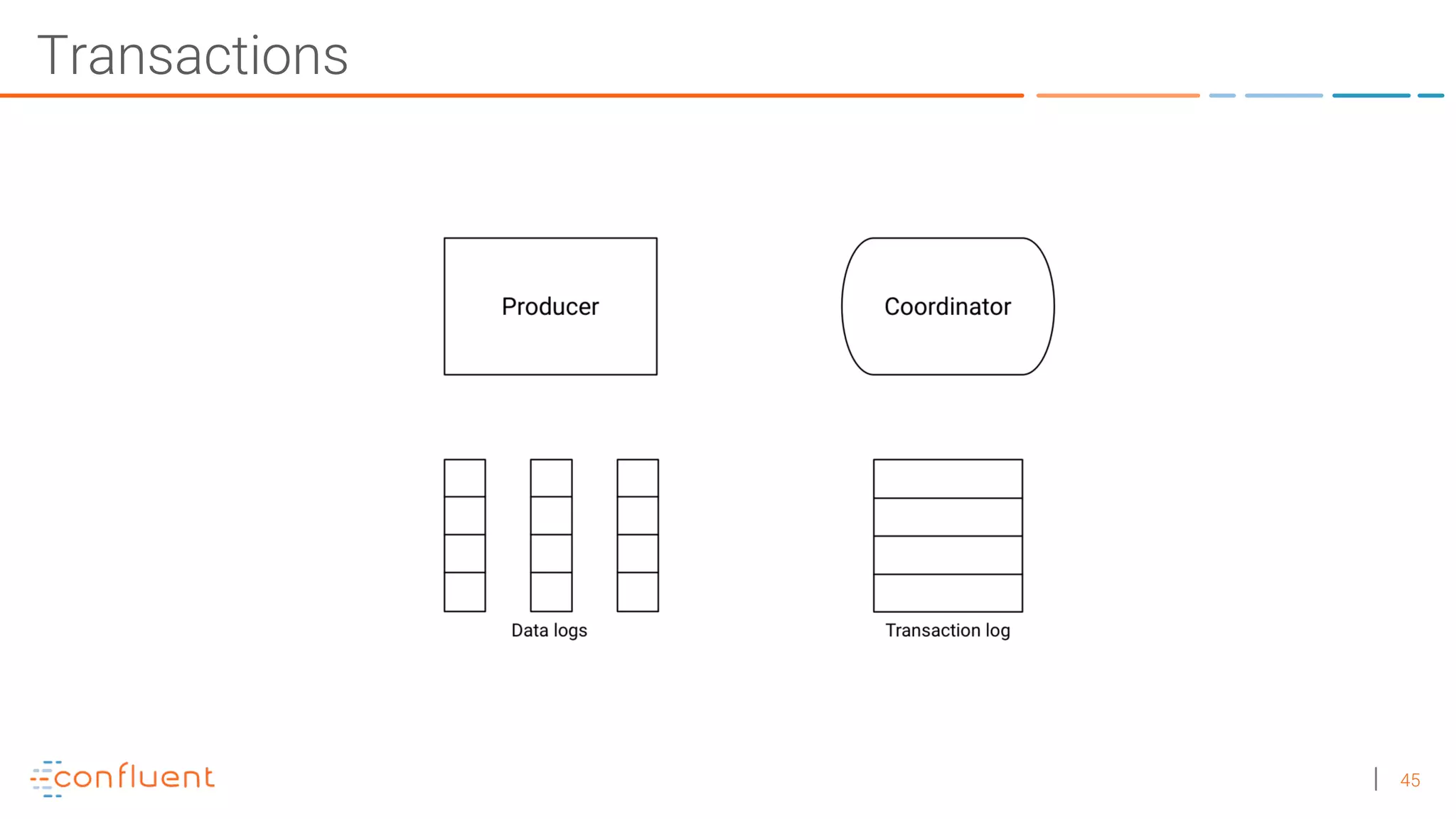

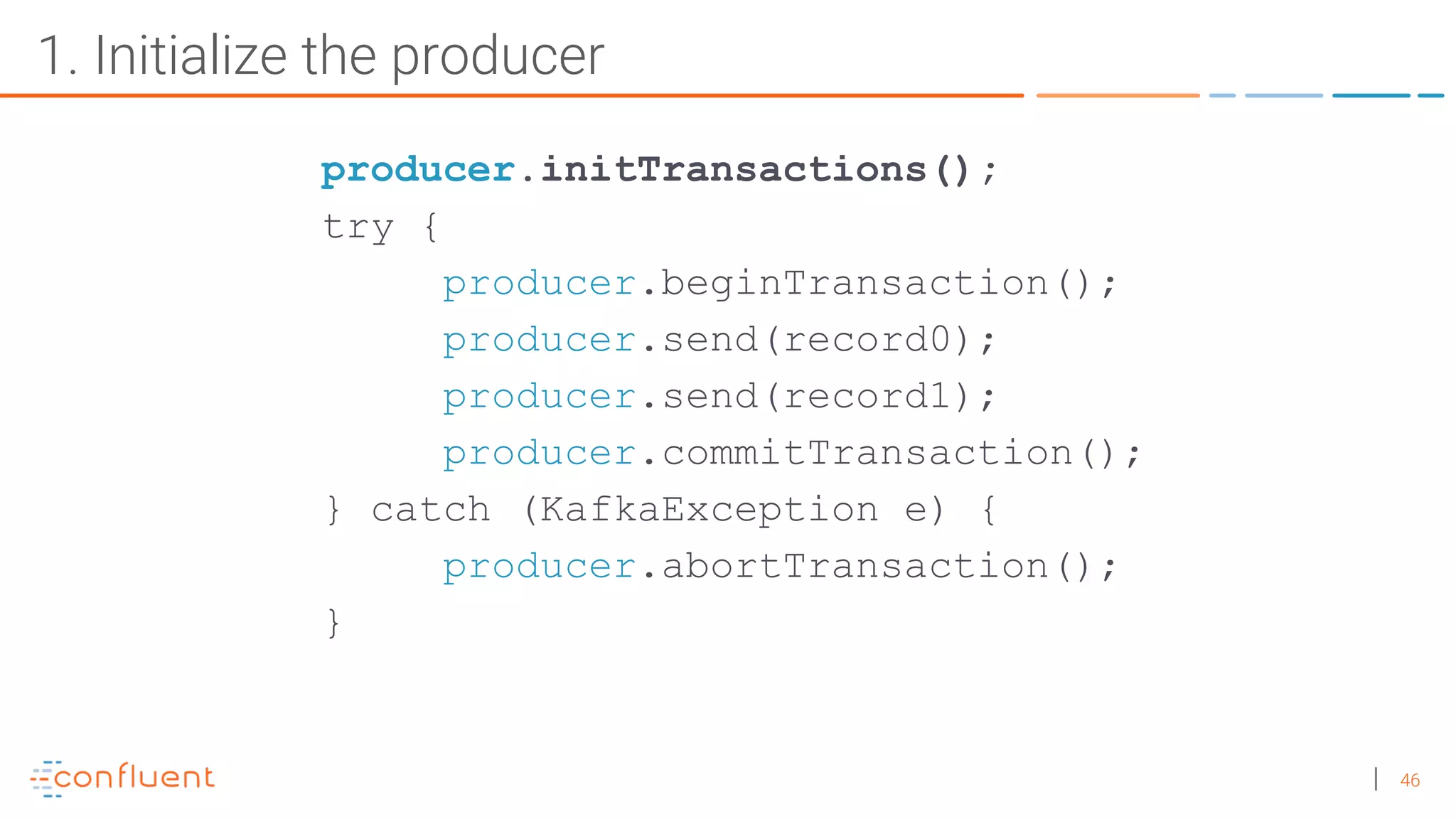

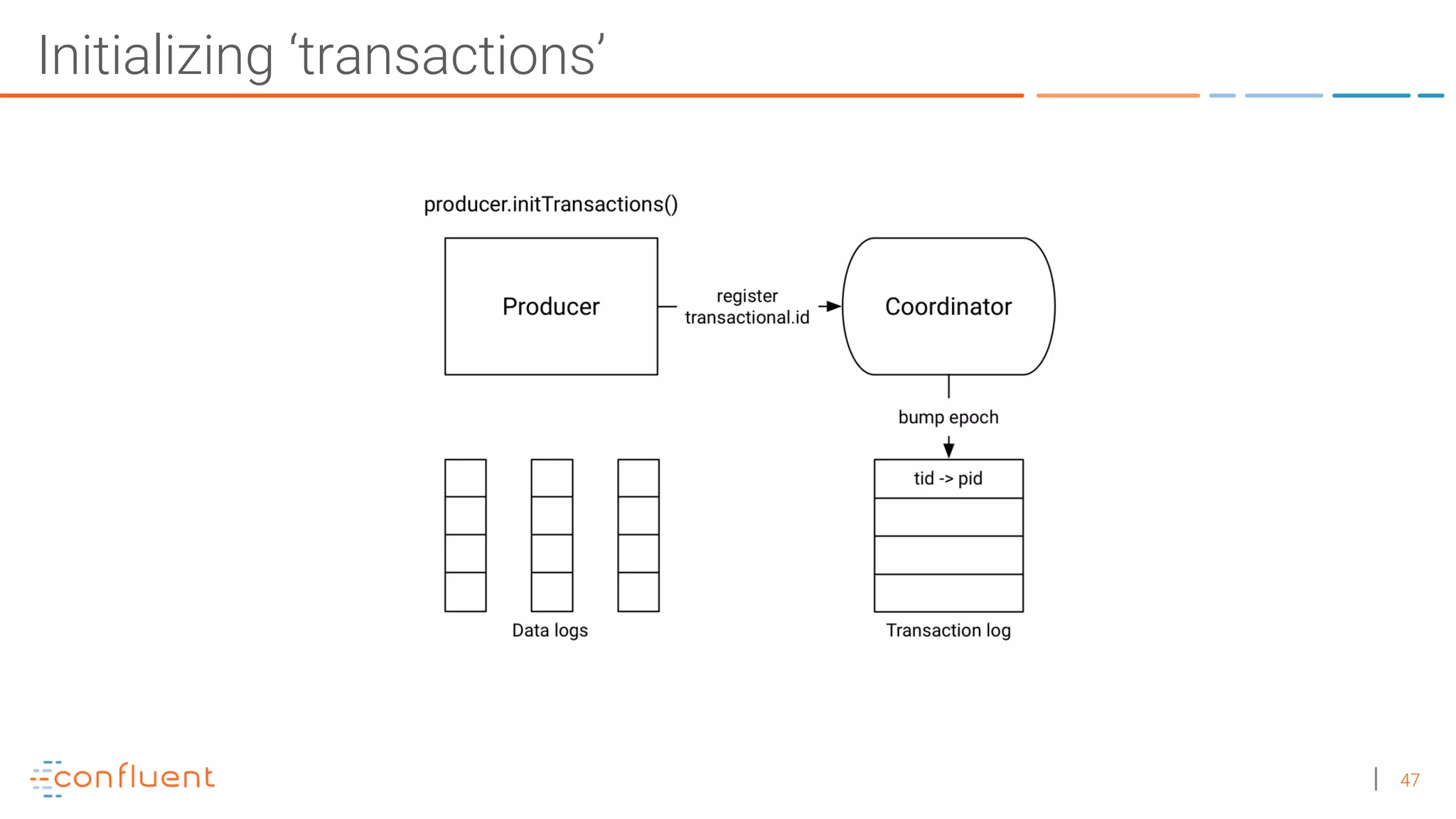

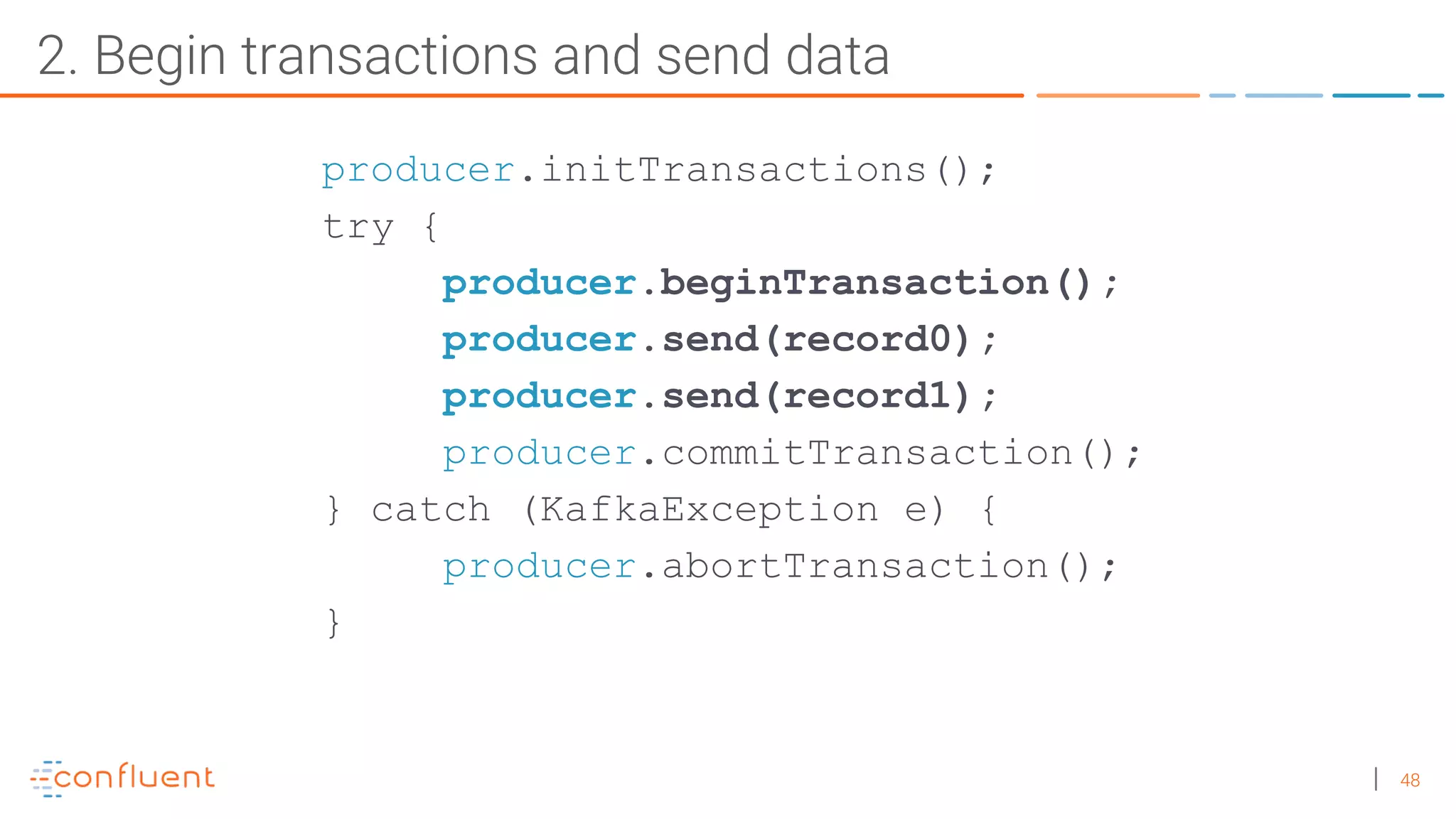

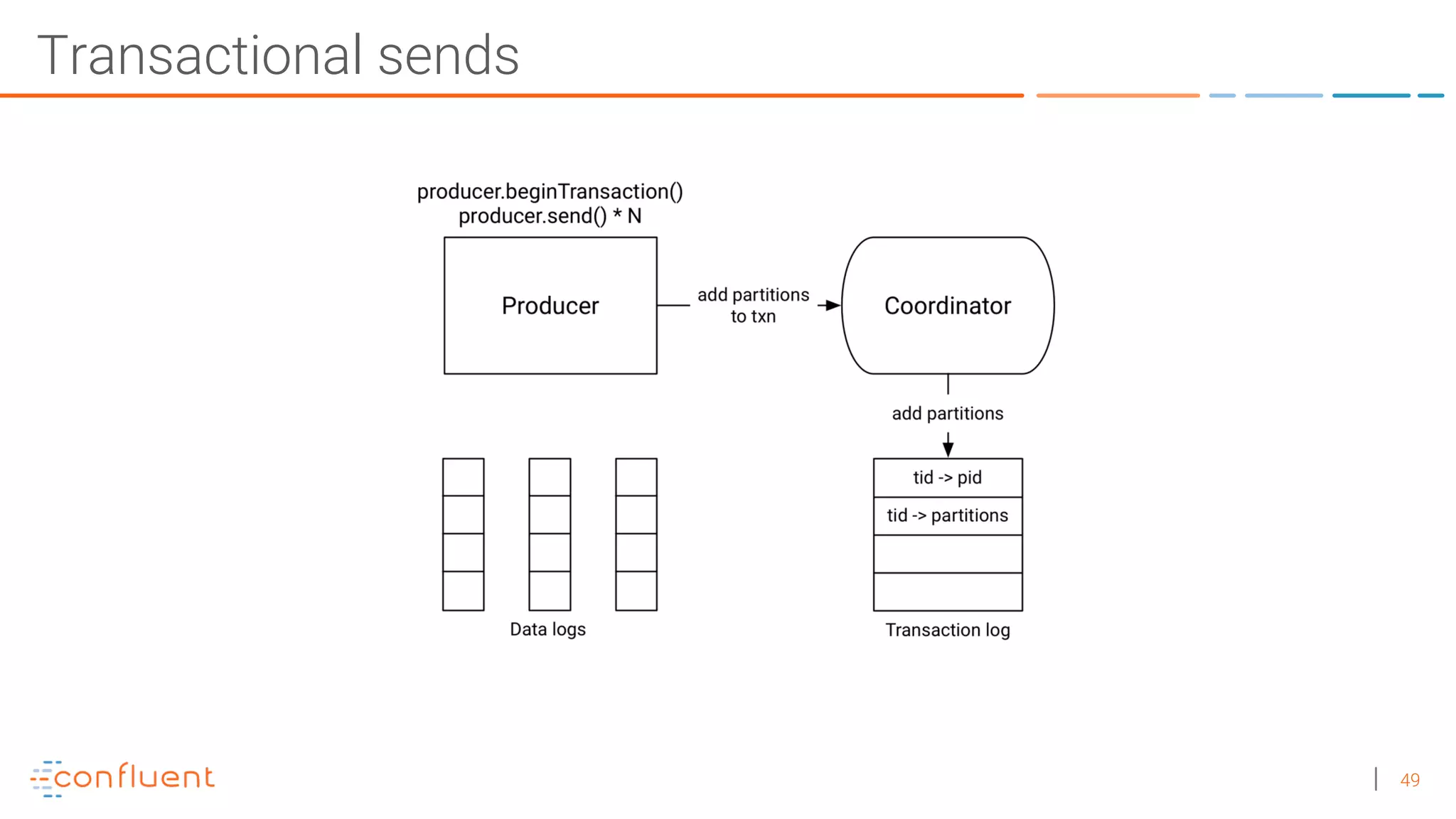

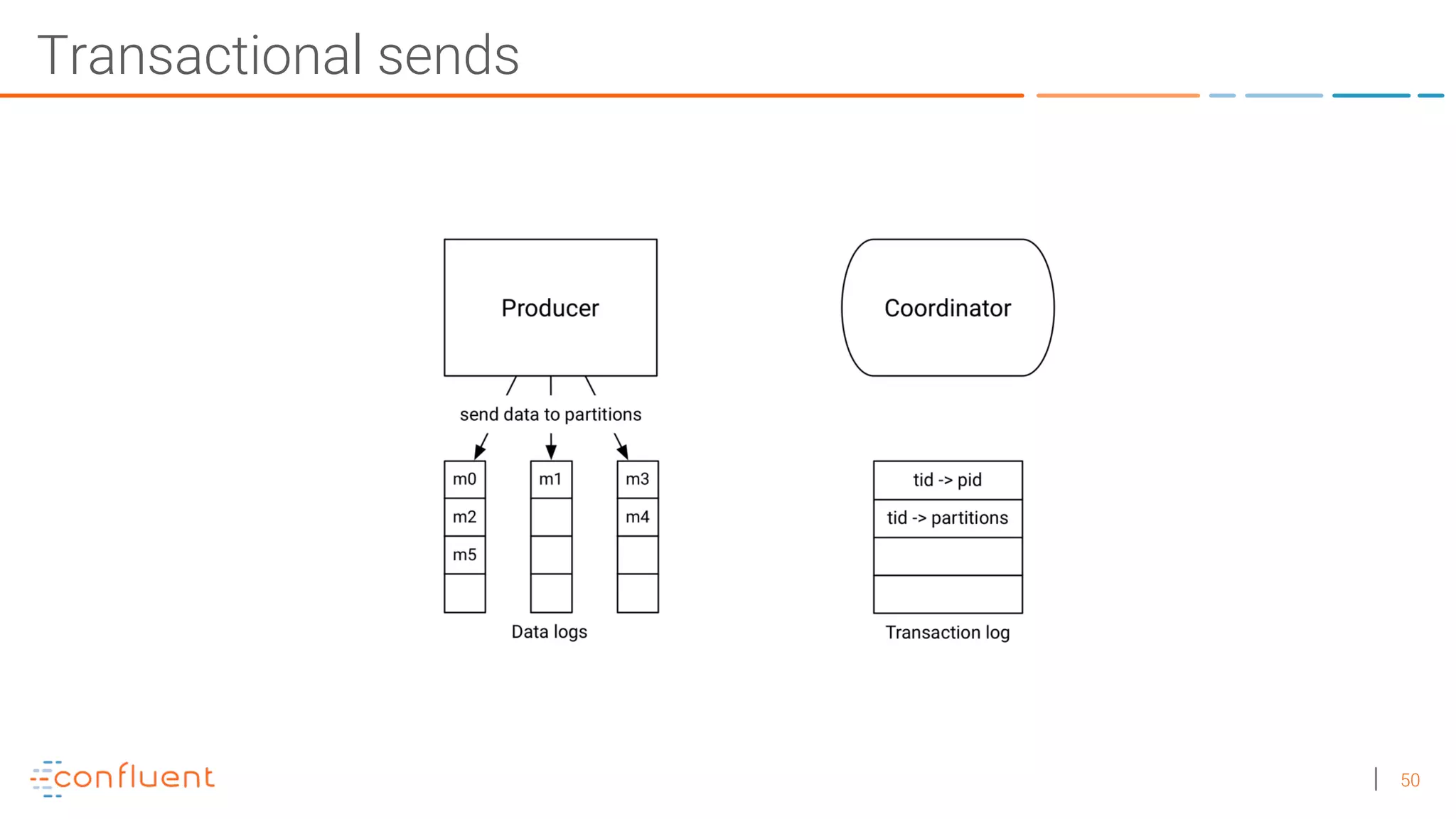

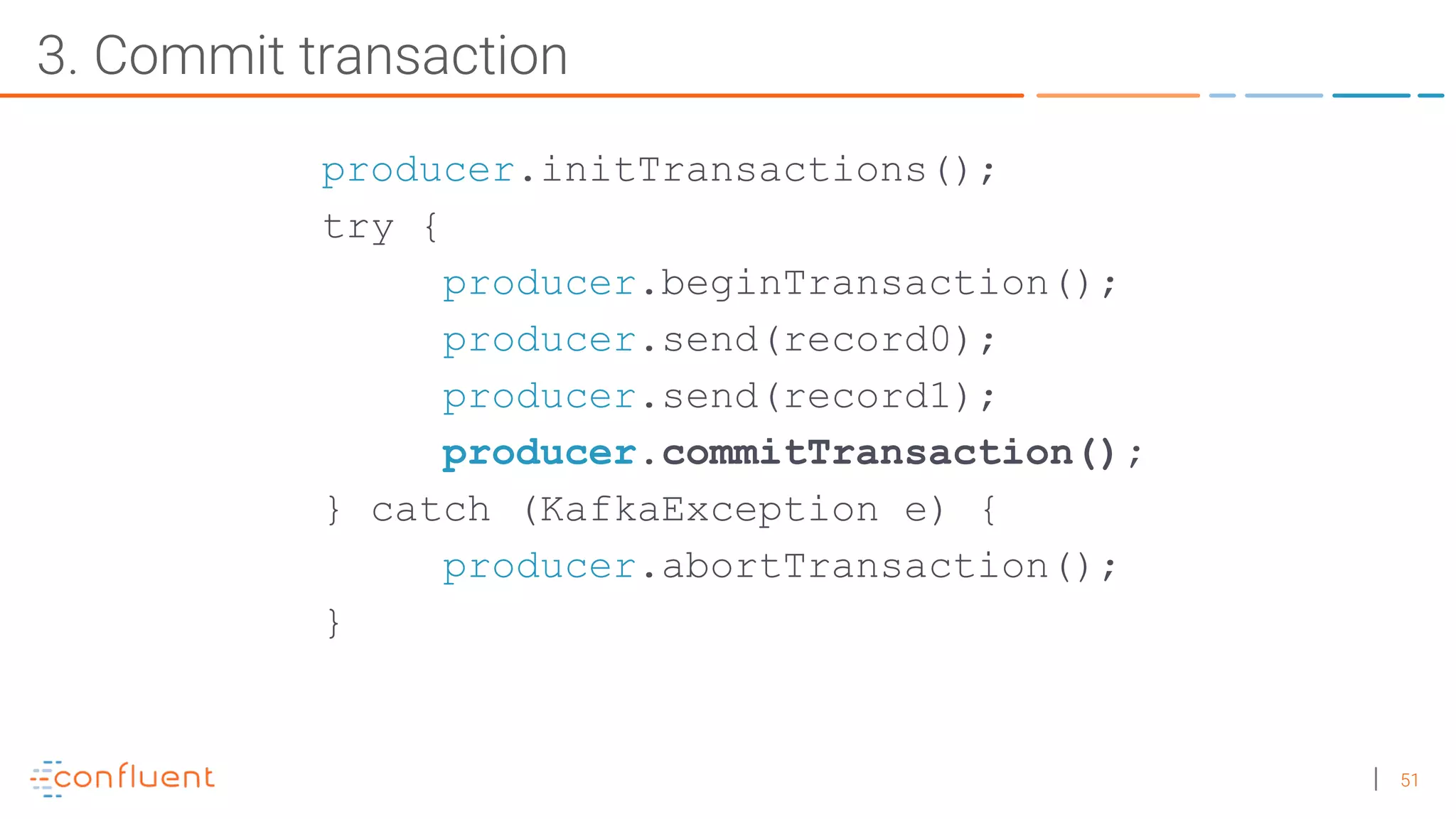

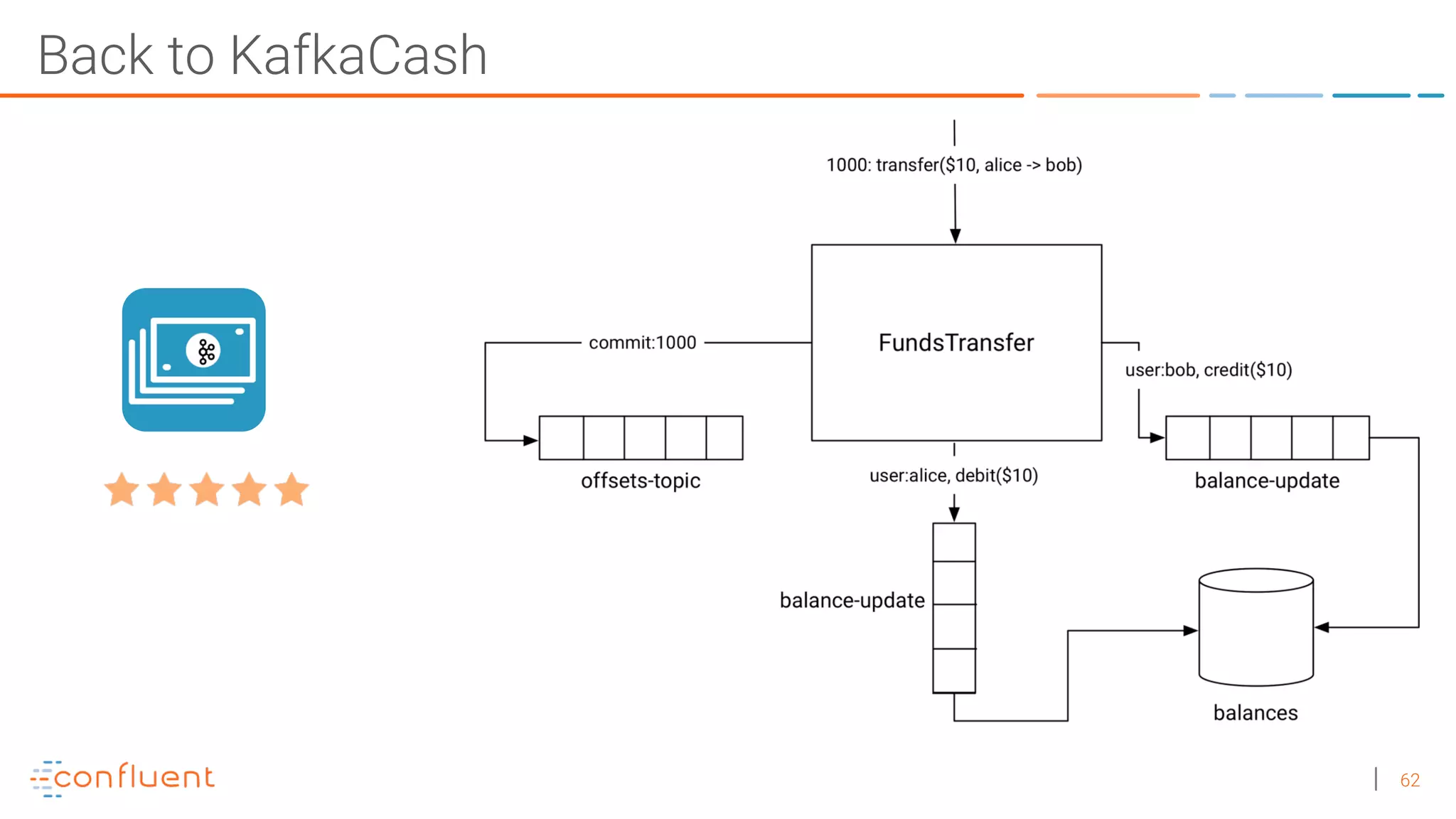

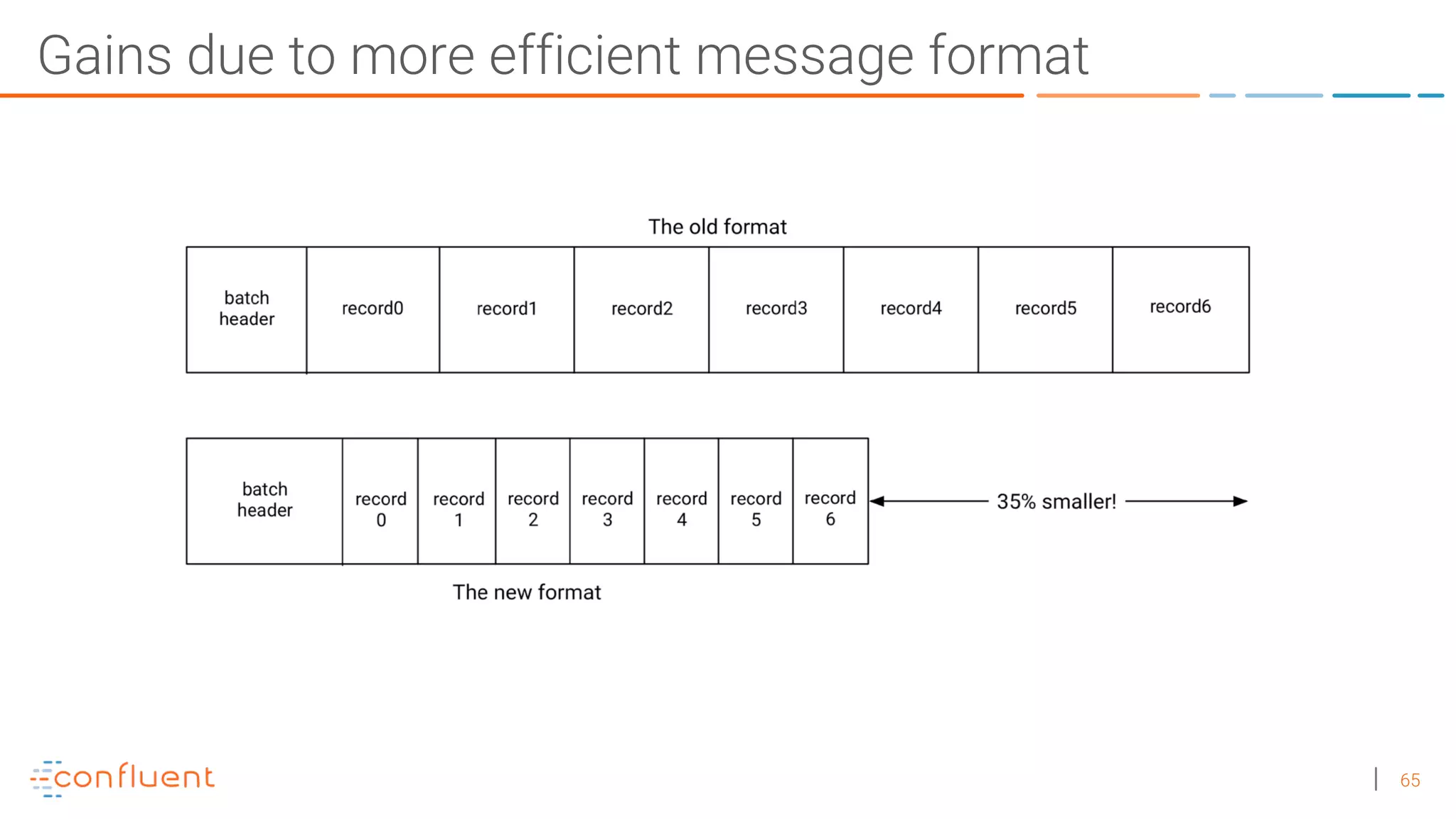

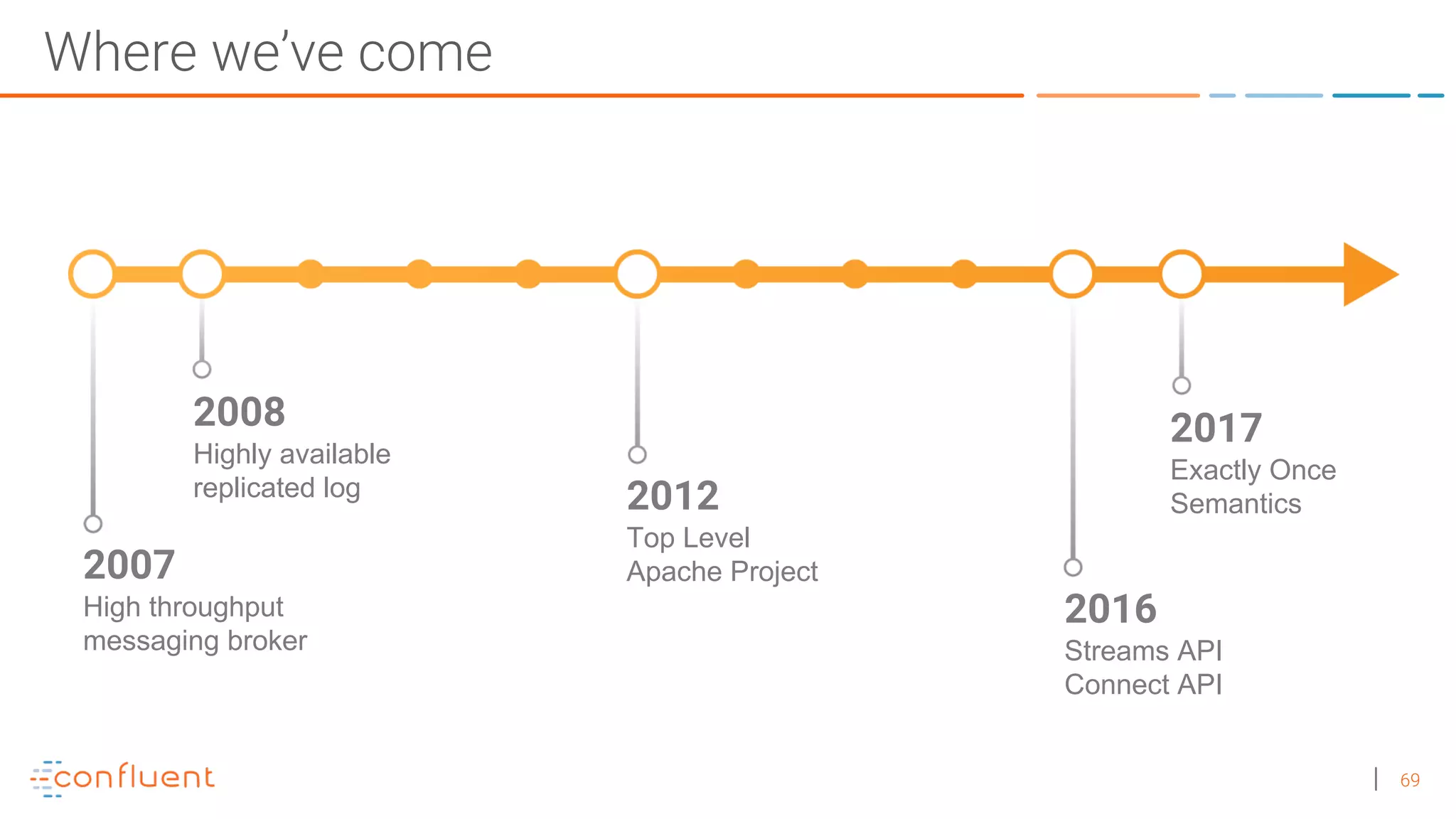

This presentation introduces exactly-once semantics in Apache Kafka and explains why it matters for reliable stream processing. It reviews the challenges and the delivery semantics Kafka already offered, then shows how idempotent producers and transactional writes improve on them. It concludes with notes on performance gains and on future developments in Kafka's capabilities.
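
As a rough illustration of the idempotent-producer idea mentioned above, the sketch below models a single partition log that drops retried writes by tracking a sequence number per producer. It is a toy model under stated assumptions, not Kafka's client or broker API: `ToyPartitionLog`, `send_with_retry`, and the `lose_first_ack` flag are hypothetical names invented for this example, and real brokers handle batching, epochs, and persistence that are omitted here.

```python
from dataclasses import dataclass, field


@dataclass
class ToyPartitionLog:
    """Toy stand-in for one partition log (hypothetical, not Kafka's real API)."""
    records: list = field(default_factory=list)
    # Highest sequence number appended so far, keyed by producer id.
    last_seq: dict = field(default_factory=dict)

    def append(self, producer_id: int, seq: int, value: str) -> bool:
        """Append value unless this (producer_id, seq) was already written.

        Returns True if the record was appended, False if it was a duplicate.
        """
        last = self.last_seq.get(producer_id, -1)
        if seq <= last:
            return False  # retry of an already-appended record: drop it
        if seq != last + 1:
            raise ValueError(f"out-of-order sequence {seq}, expected {last + 1}")
        self.records.append(value)
        self.last_seq[producer_id] = seq
        return True


def send_with_retry(log, producer_id, seq, value, lose_first_ack=False):
    """Send one record; if the ack is 'lost', resend the same (producer_id, seq)."""
    appended = log.append(producer_id, seq, value)
    if lose_first_ack:
        # The producer never saw the ack, so it retries with the same sequence number.
        log.append(producer_id, seq, value)
    return appended


log = ToyPartitionLog()
send_with_retry(log, producer_id=1, seq=0, value="a")
send_with_retry(log, producer_id=1, seq=1, value="b", lose_first_ack=True)
send_with_retry(log, producer_id=1, seq=2, value="c")
print(log.records)  # ['a', 'b', 'c']
```

Even though record `"b"` is sent twice, it appears once in the log, because the retry carries the same sequence number and is recognized as a duplicate. Transactional writes build on this by making a group of writes visible atomically, which this sketch does not attempt to model.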