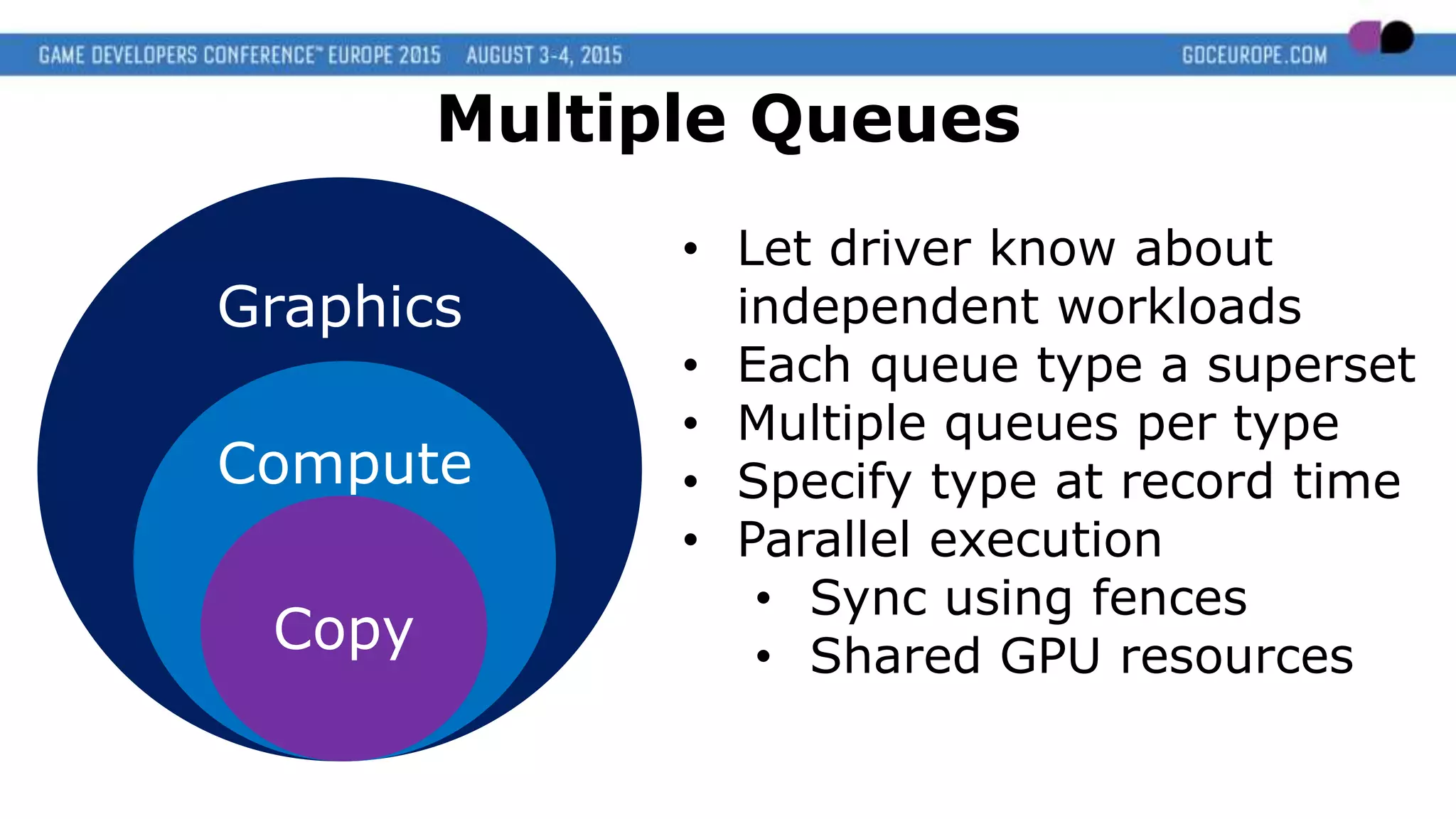

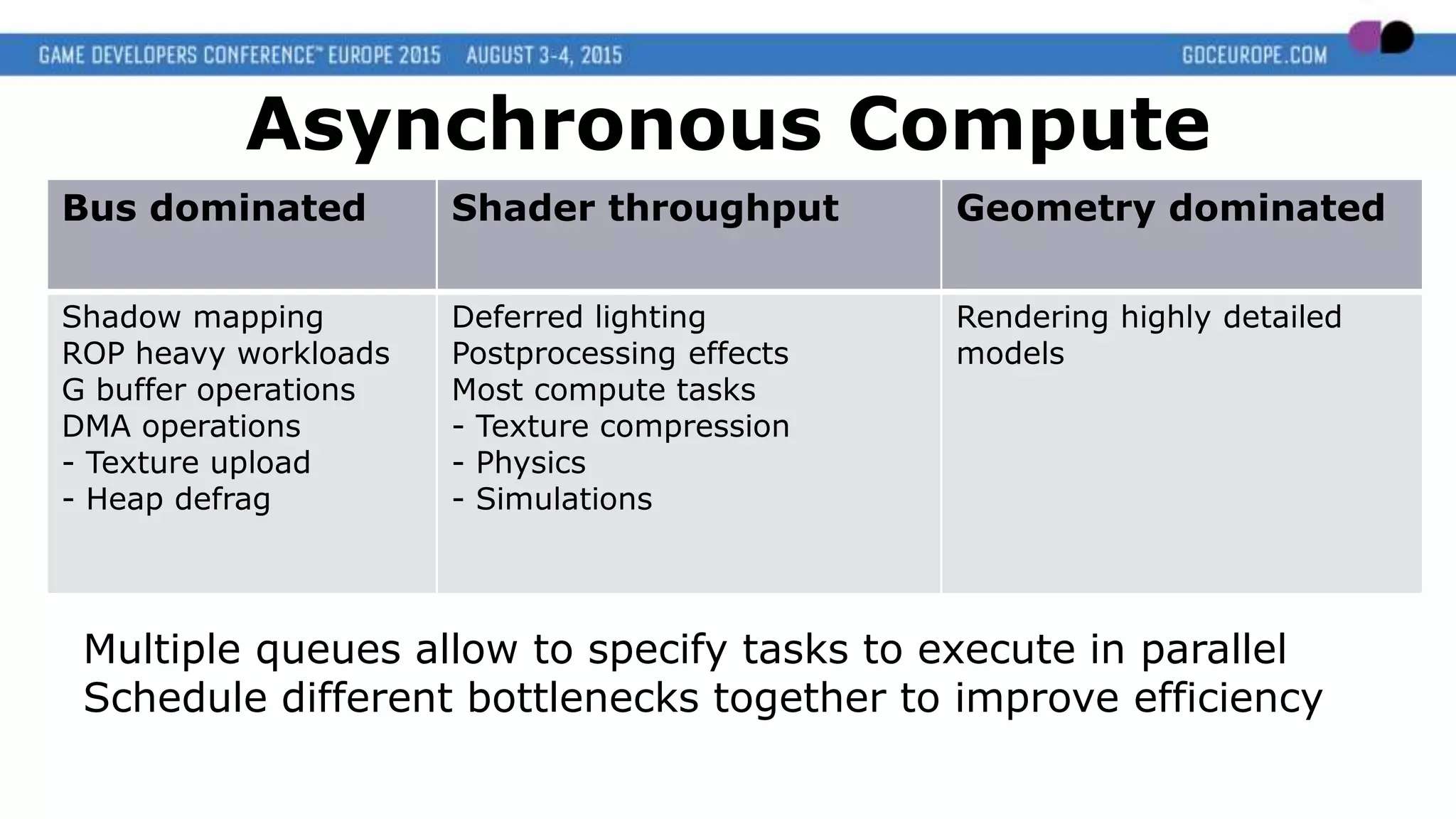

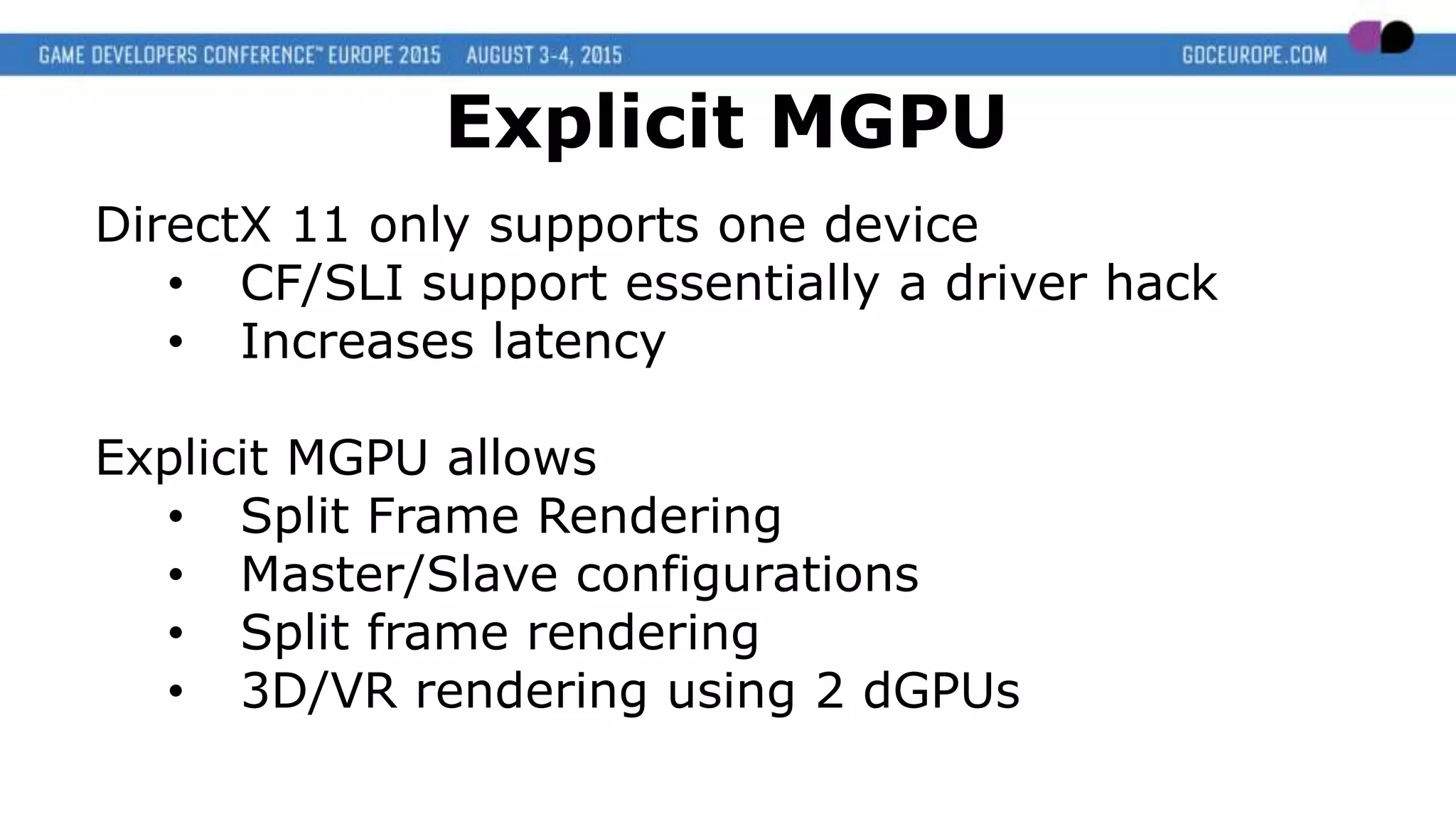

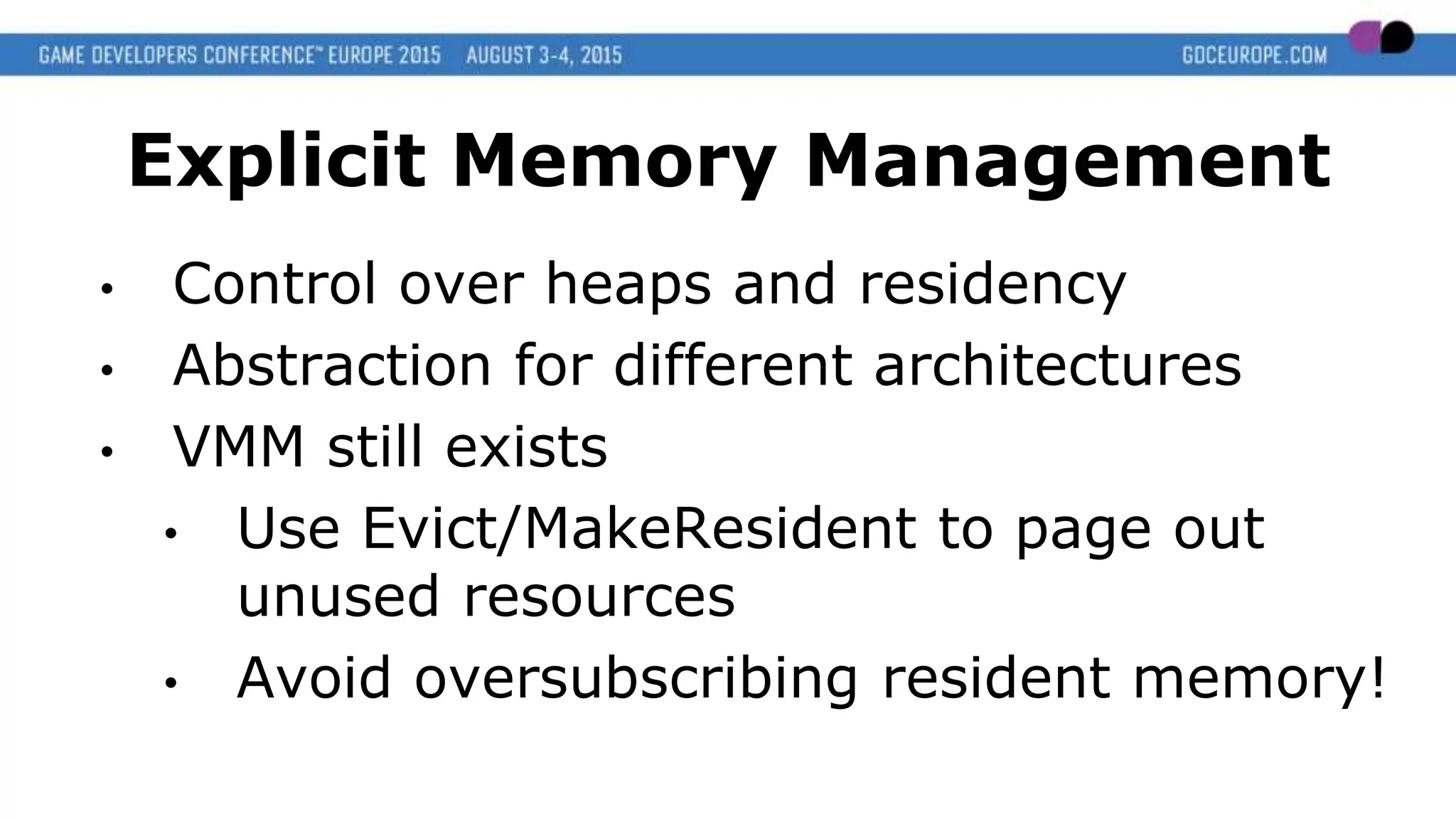

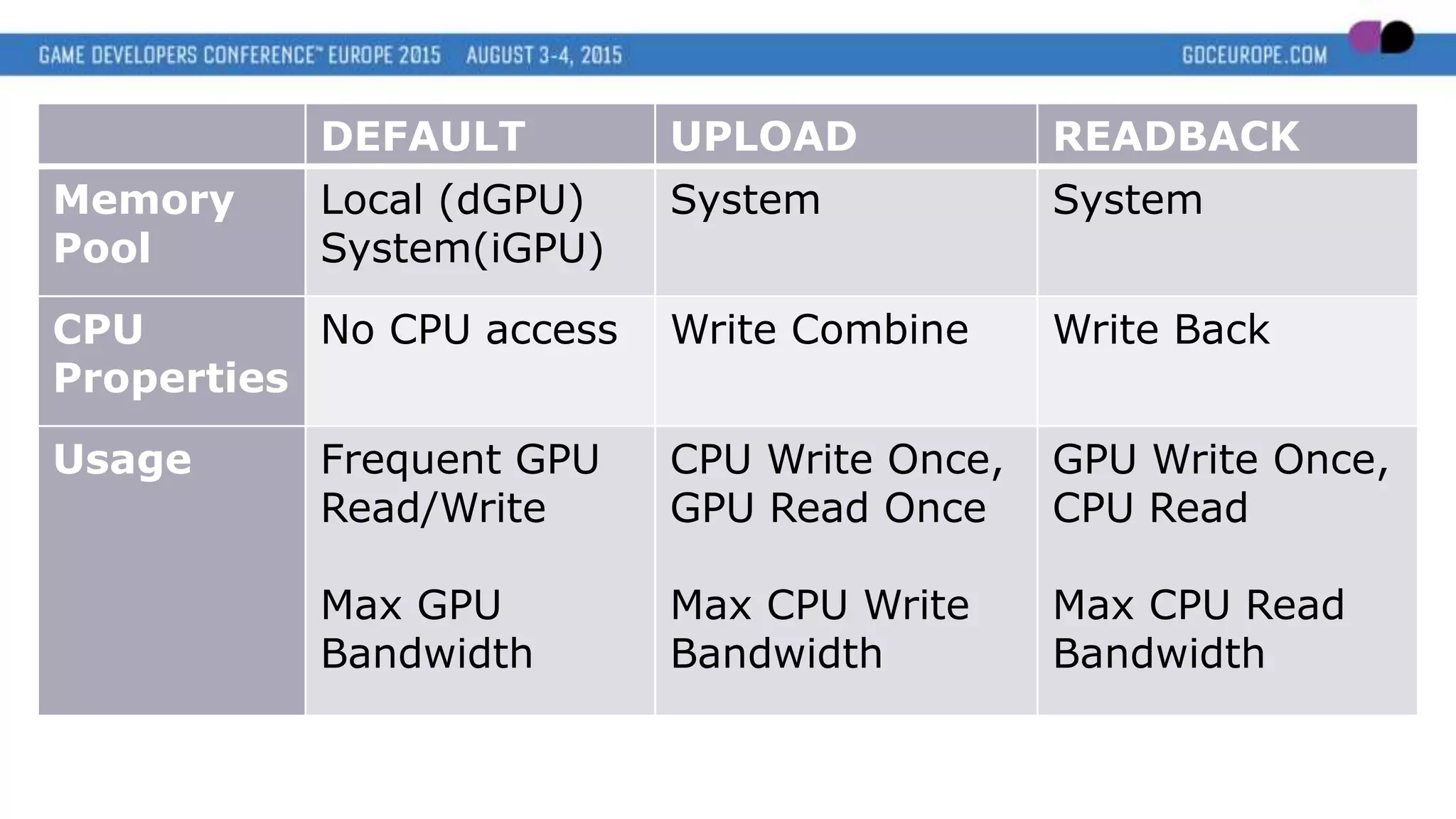

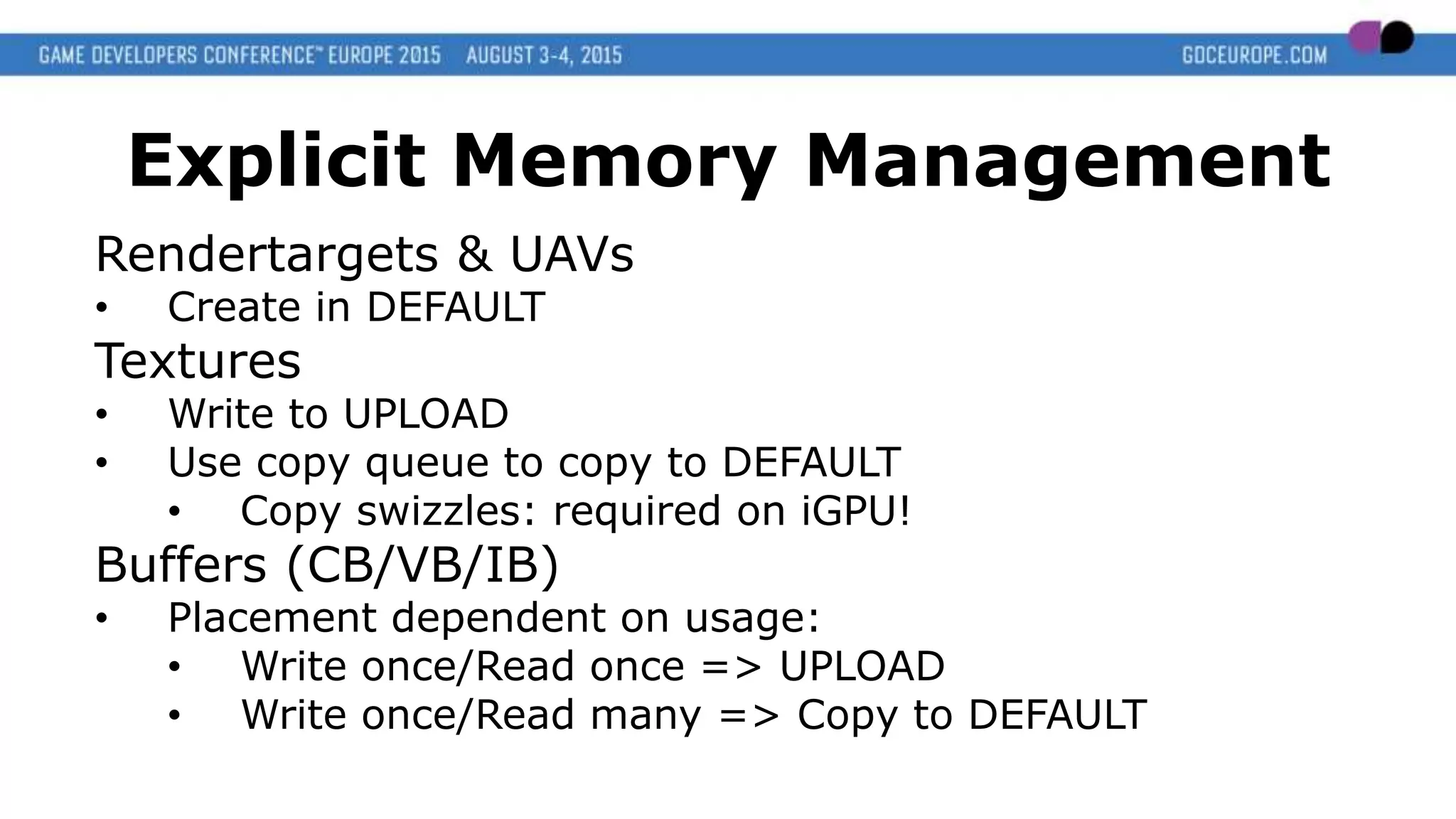

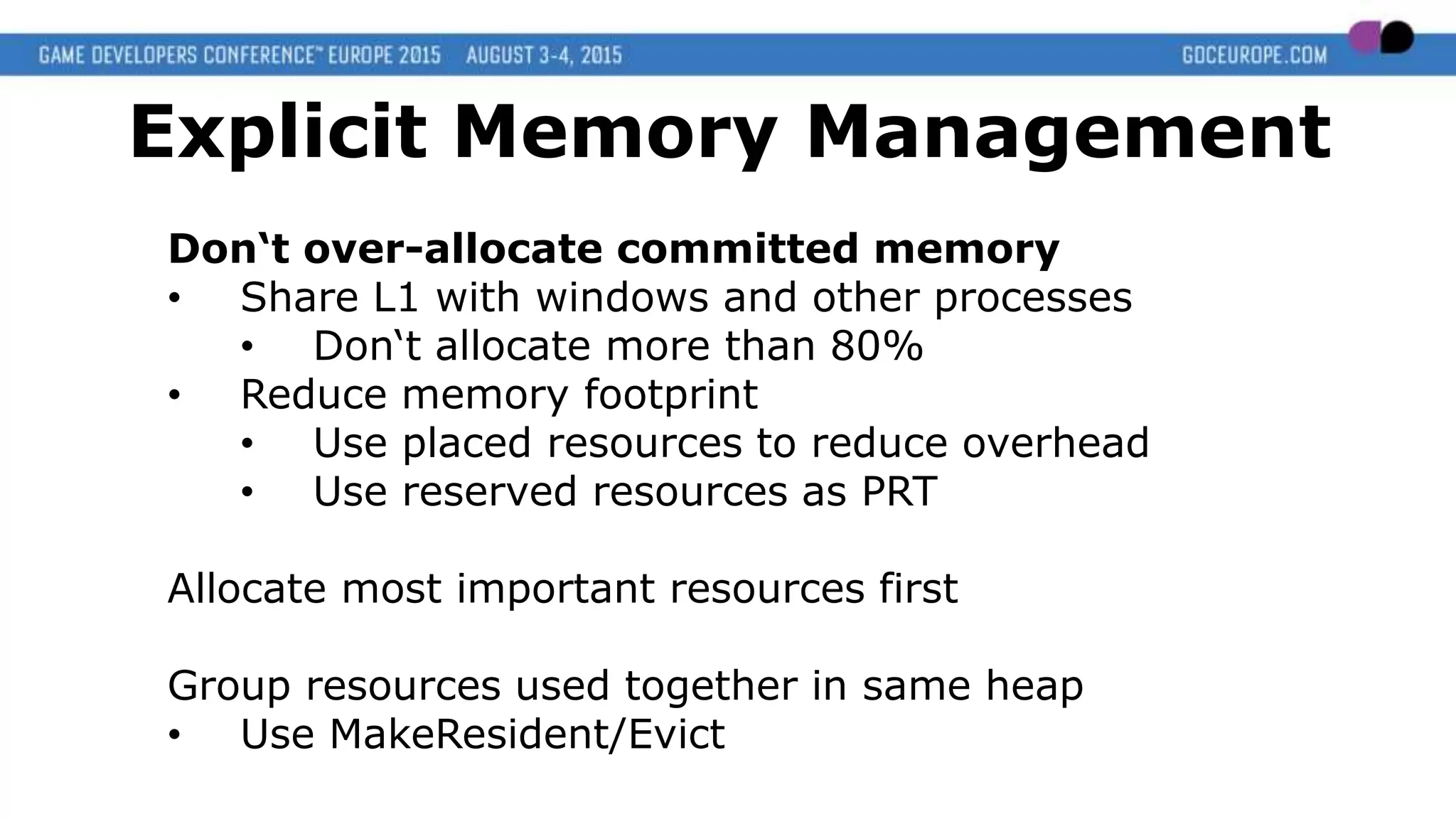

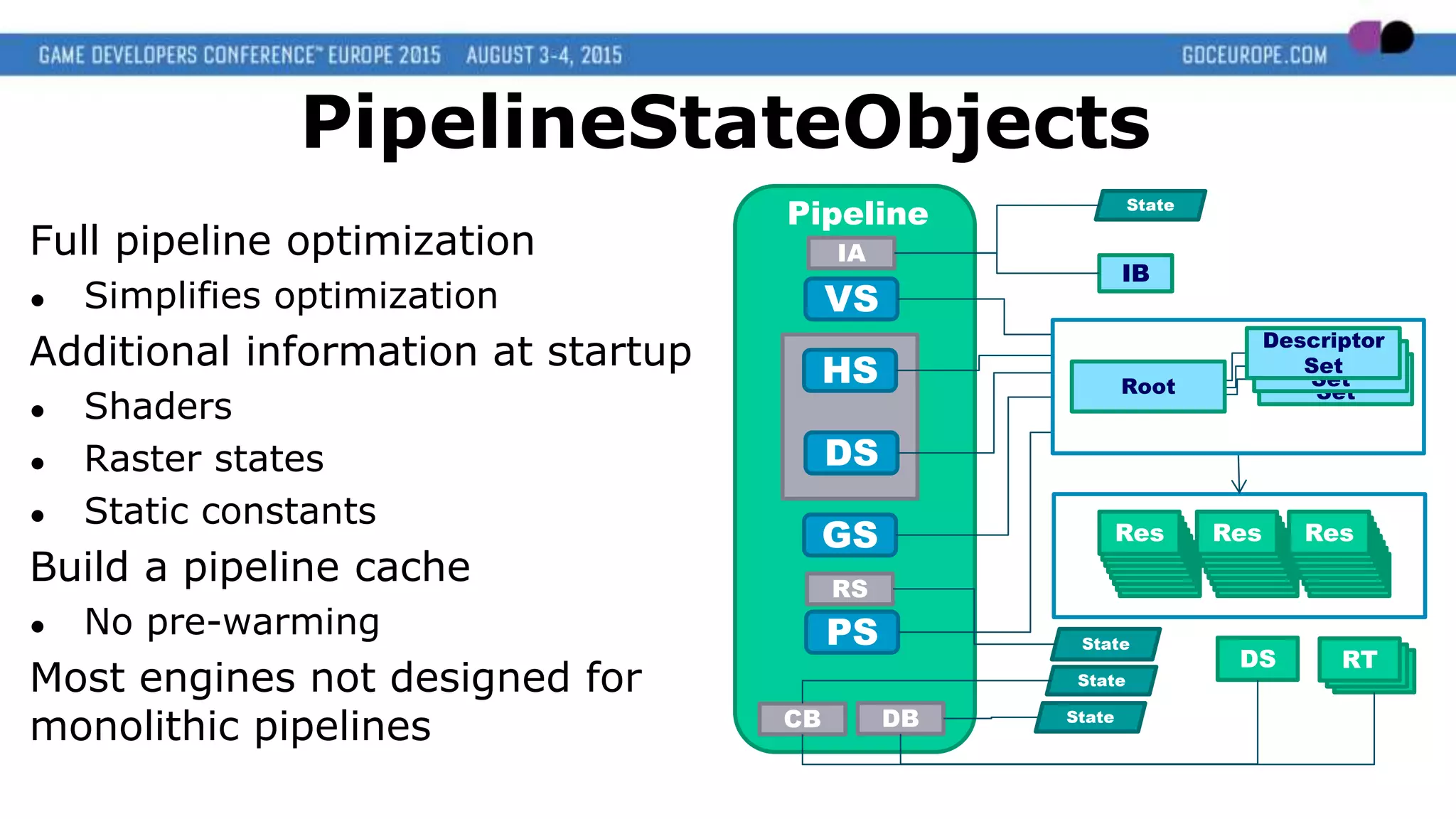

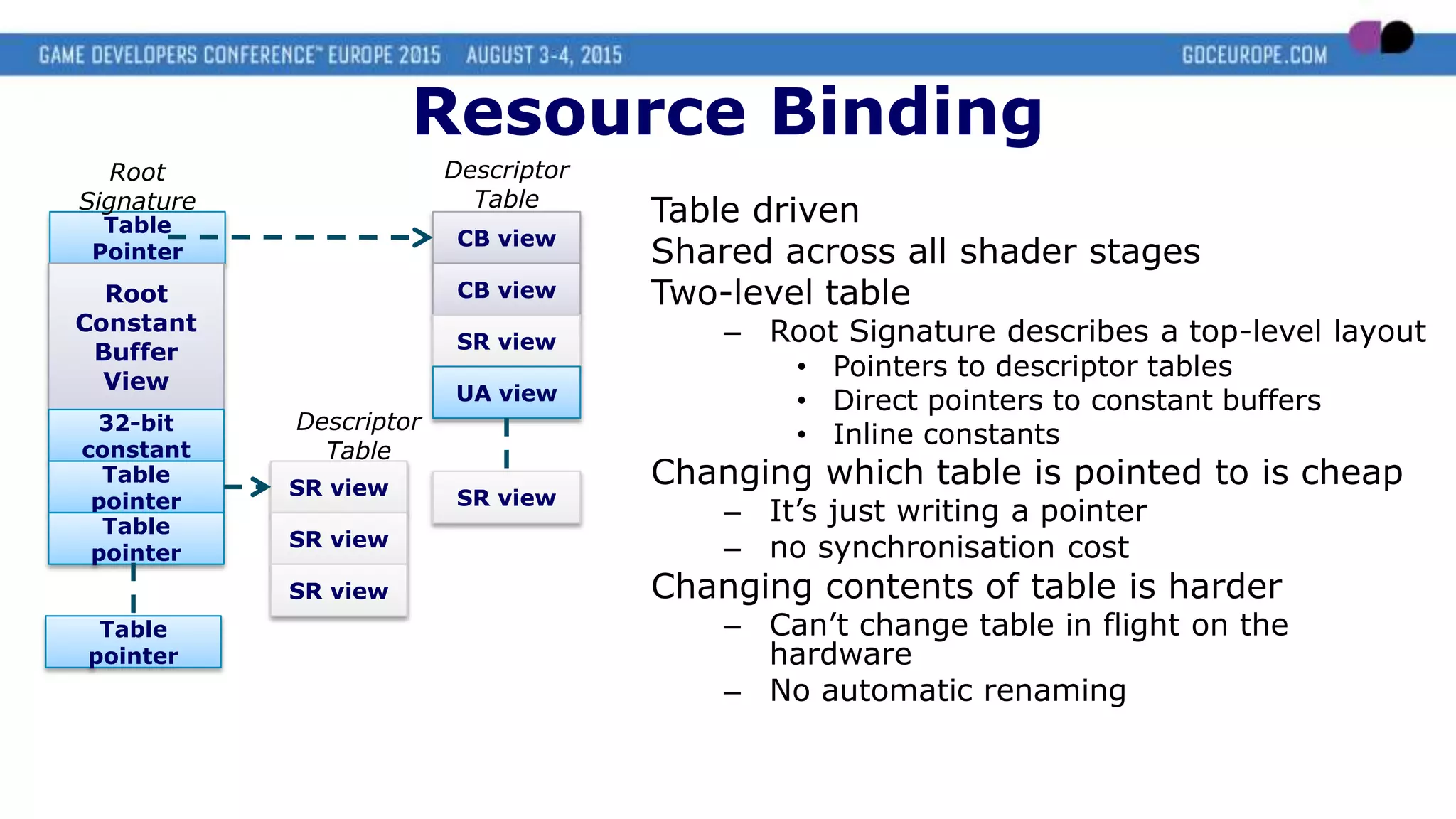

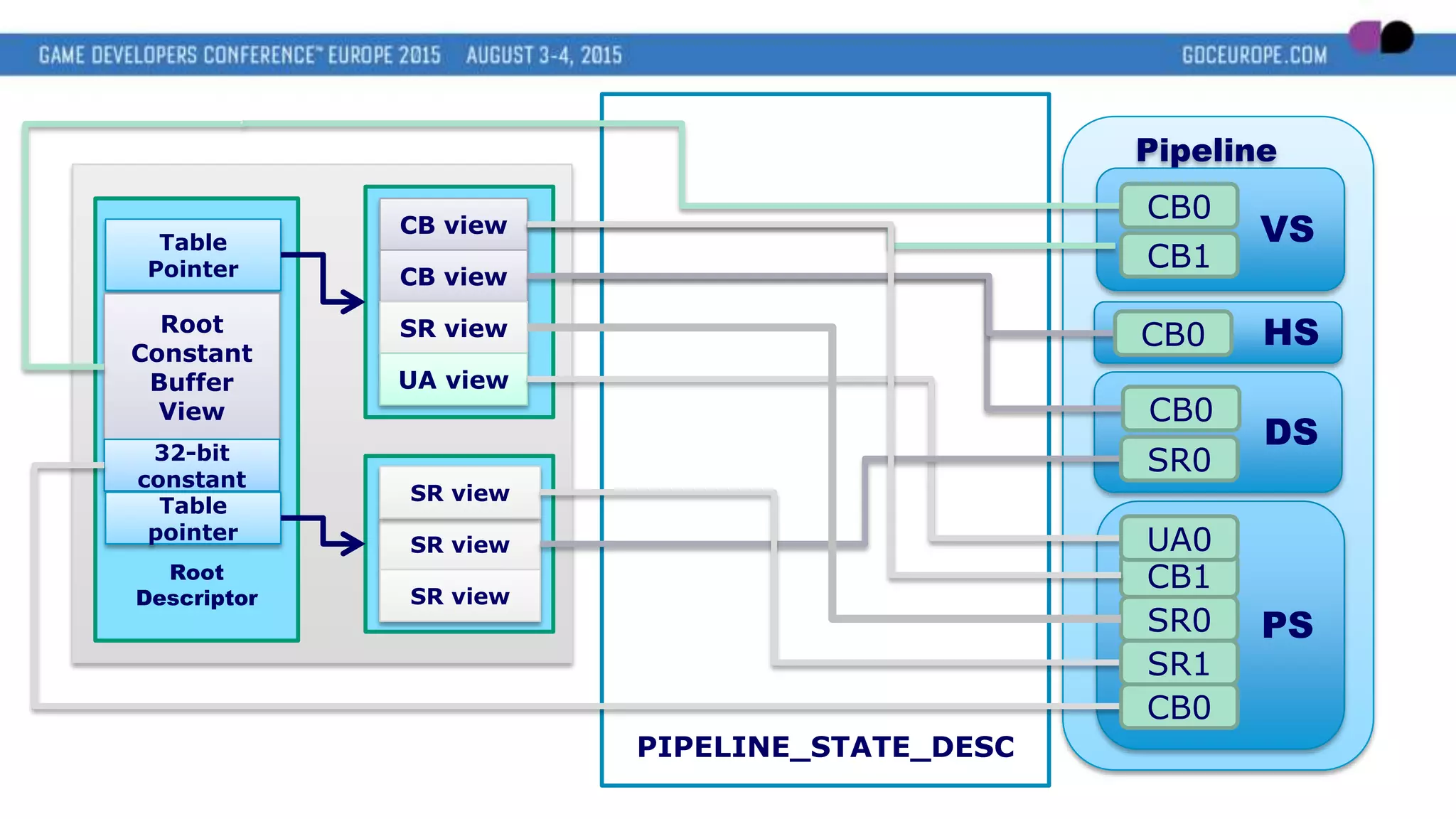

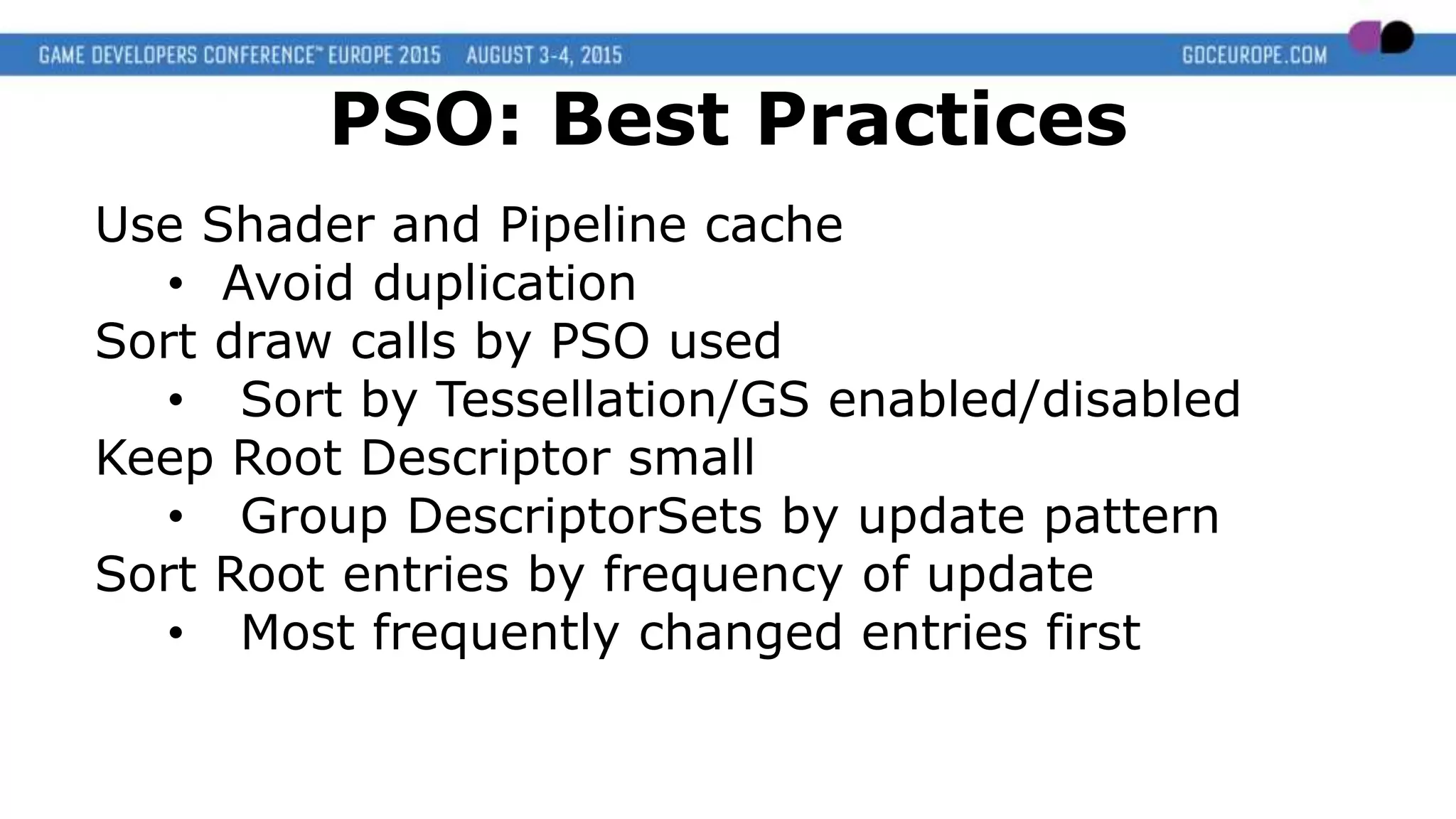

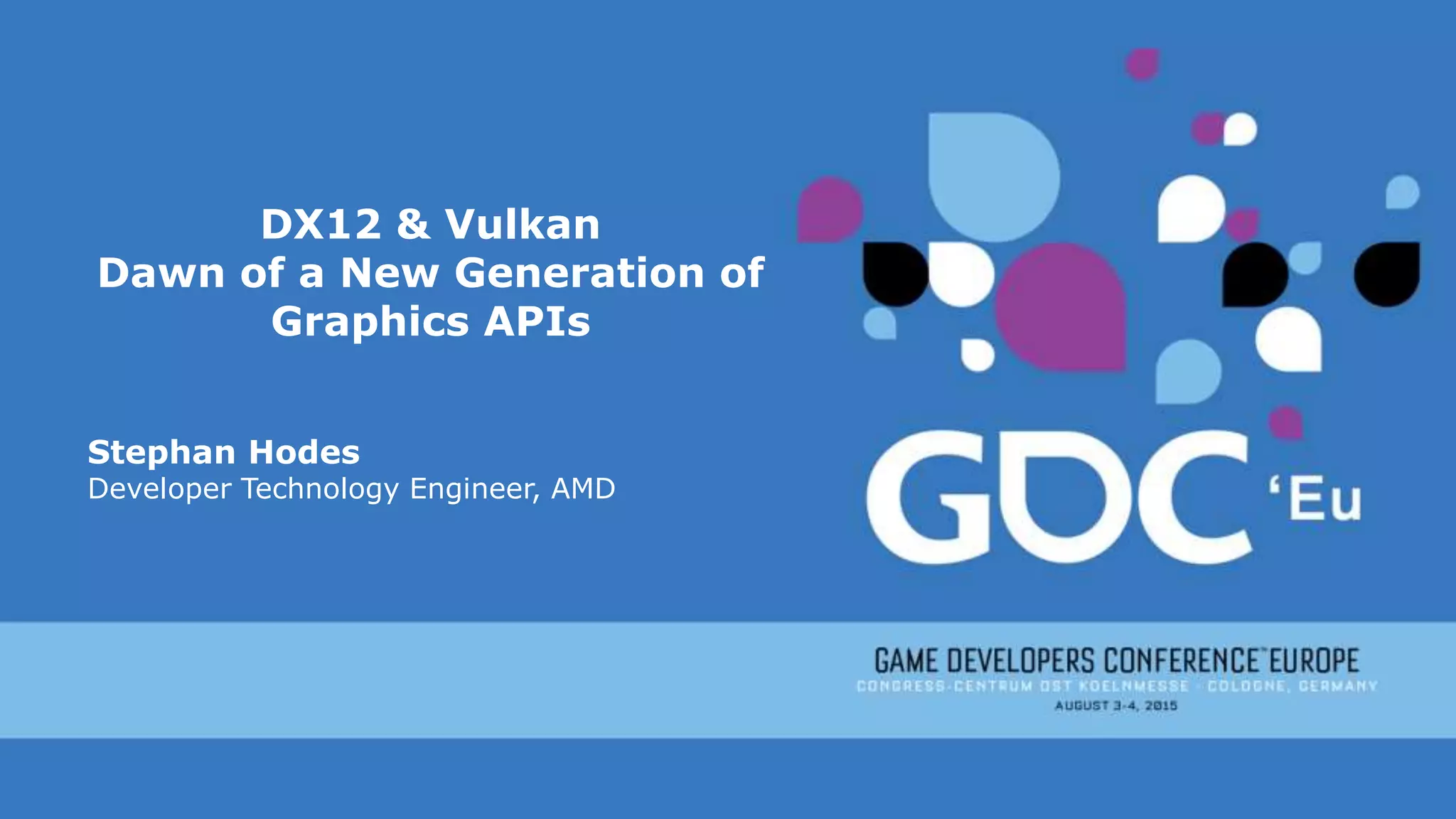

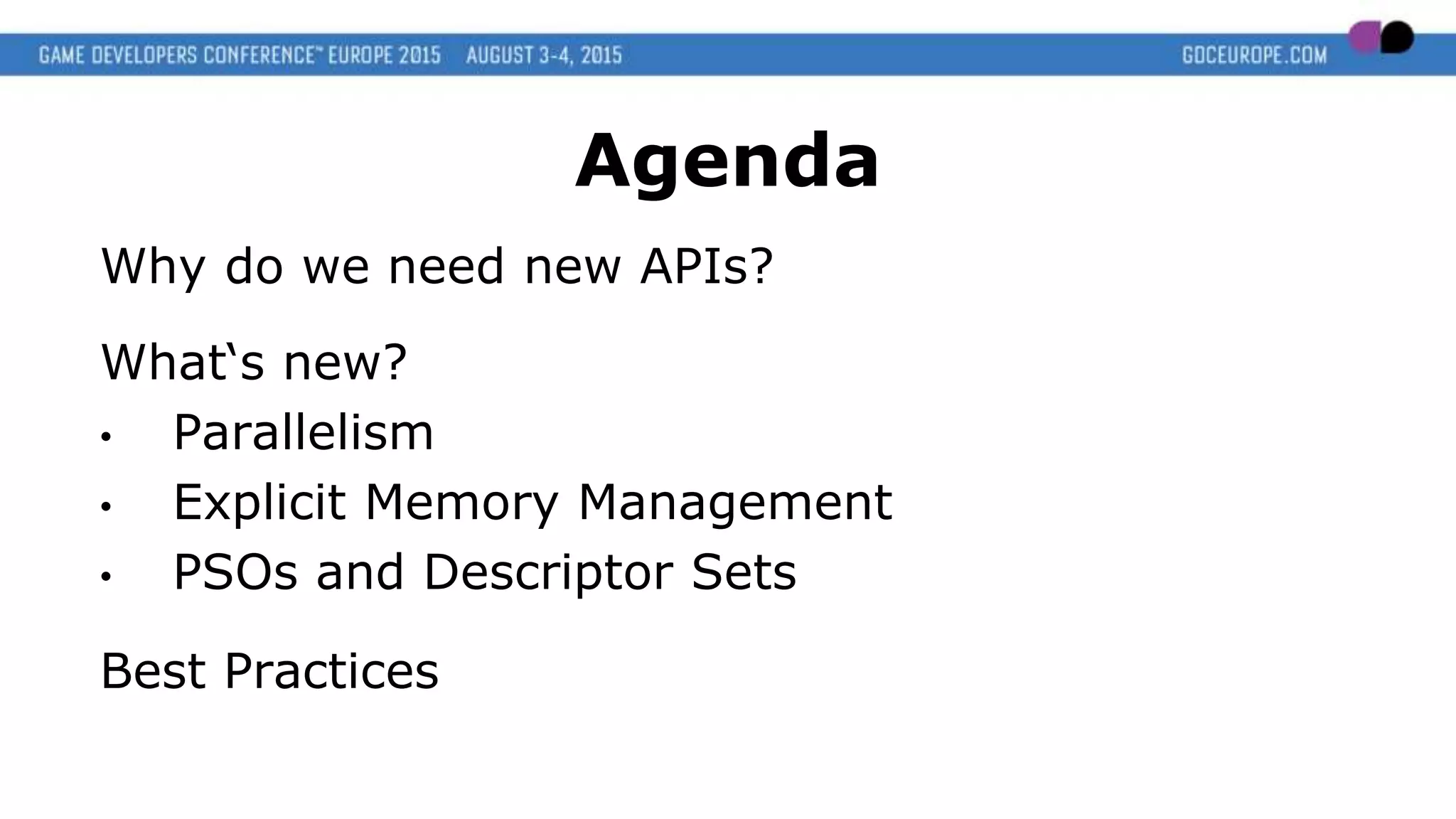

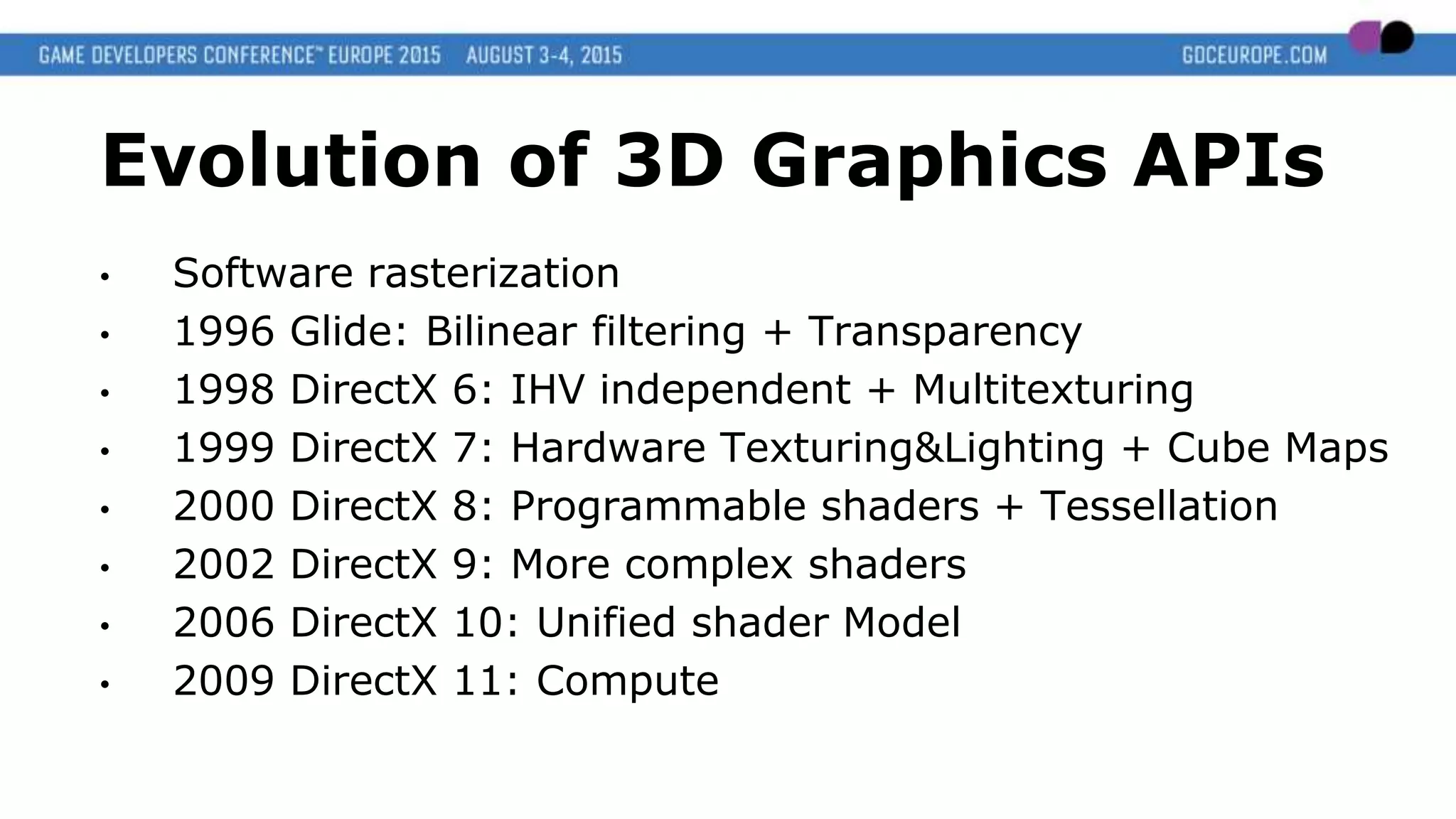

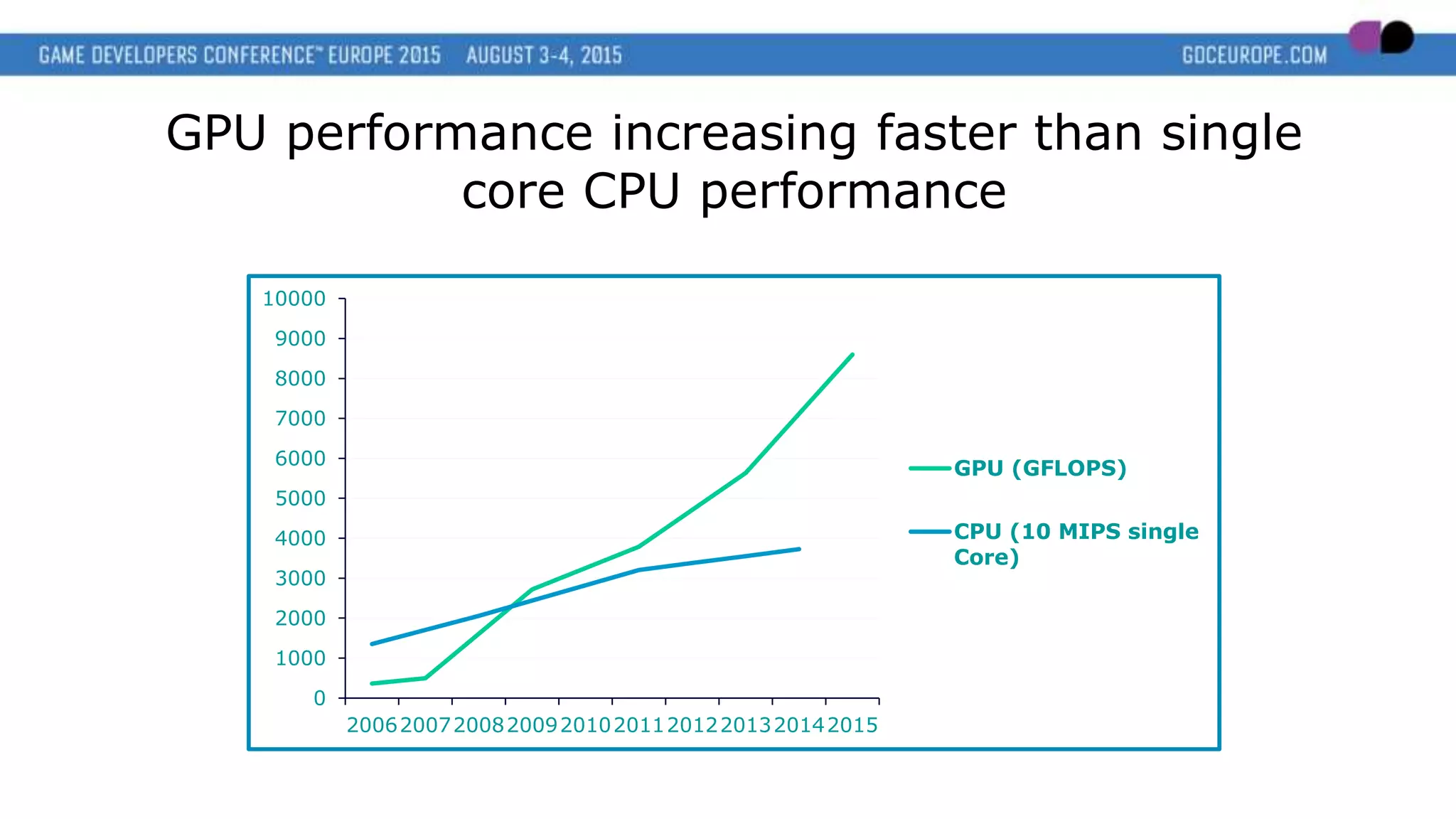

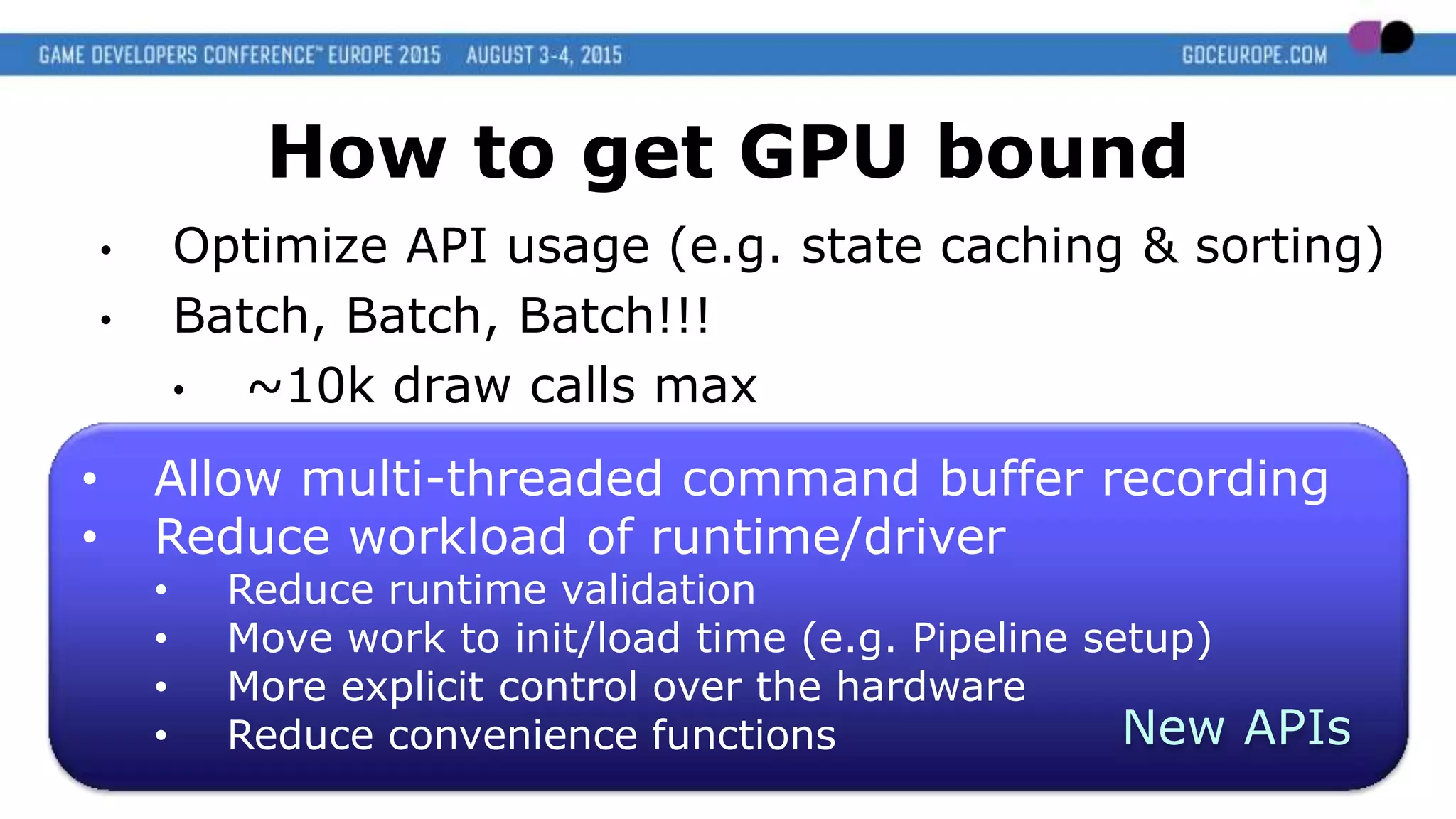

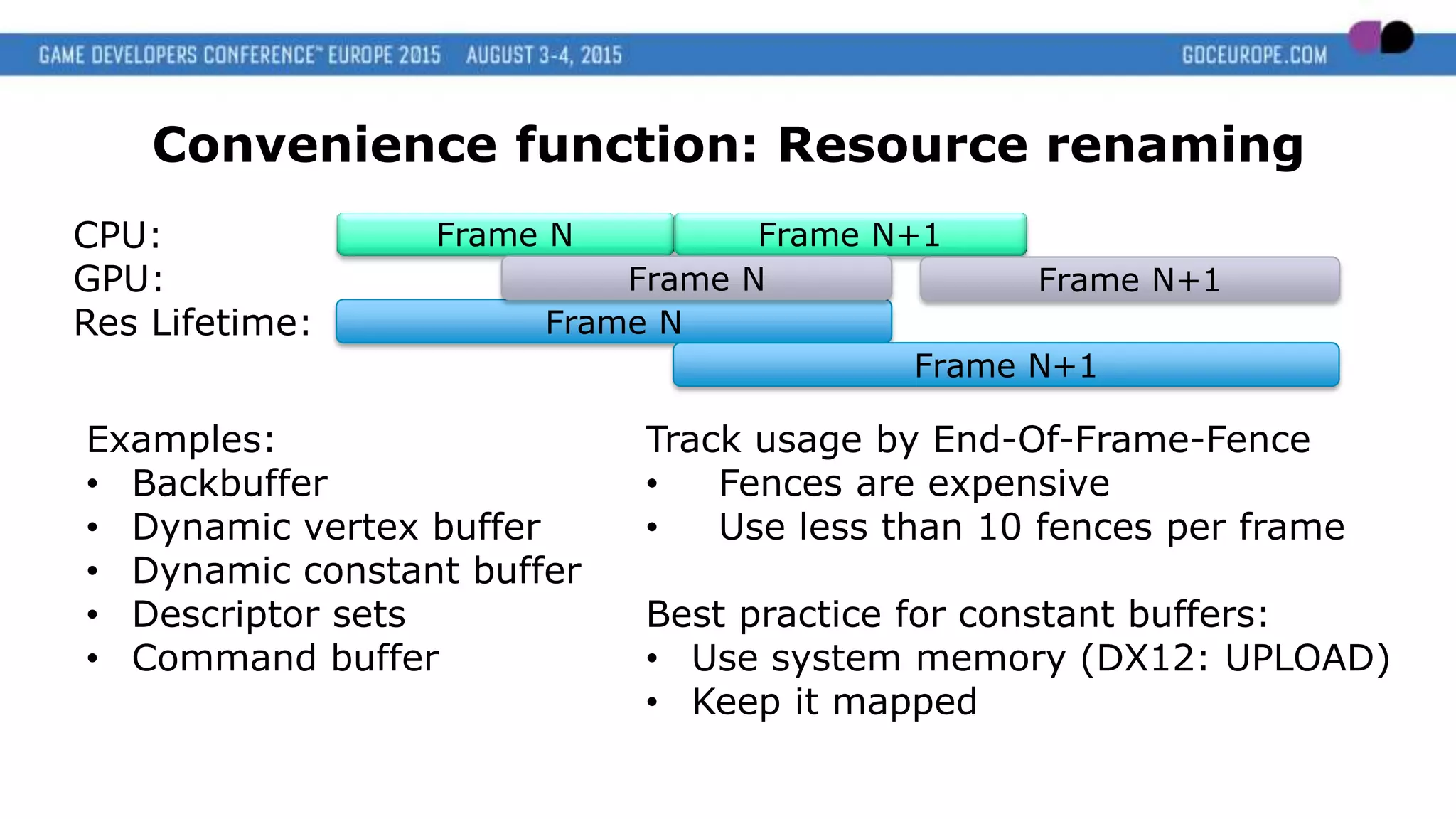

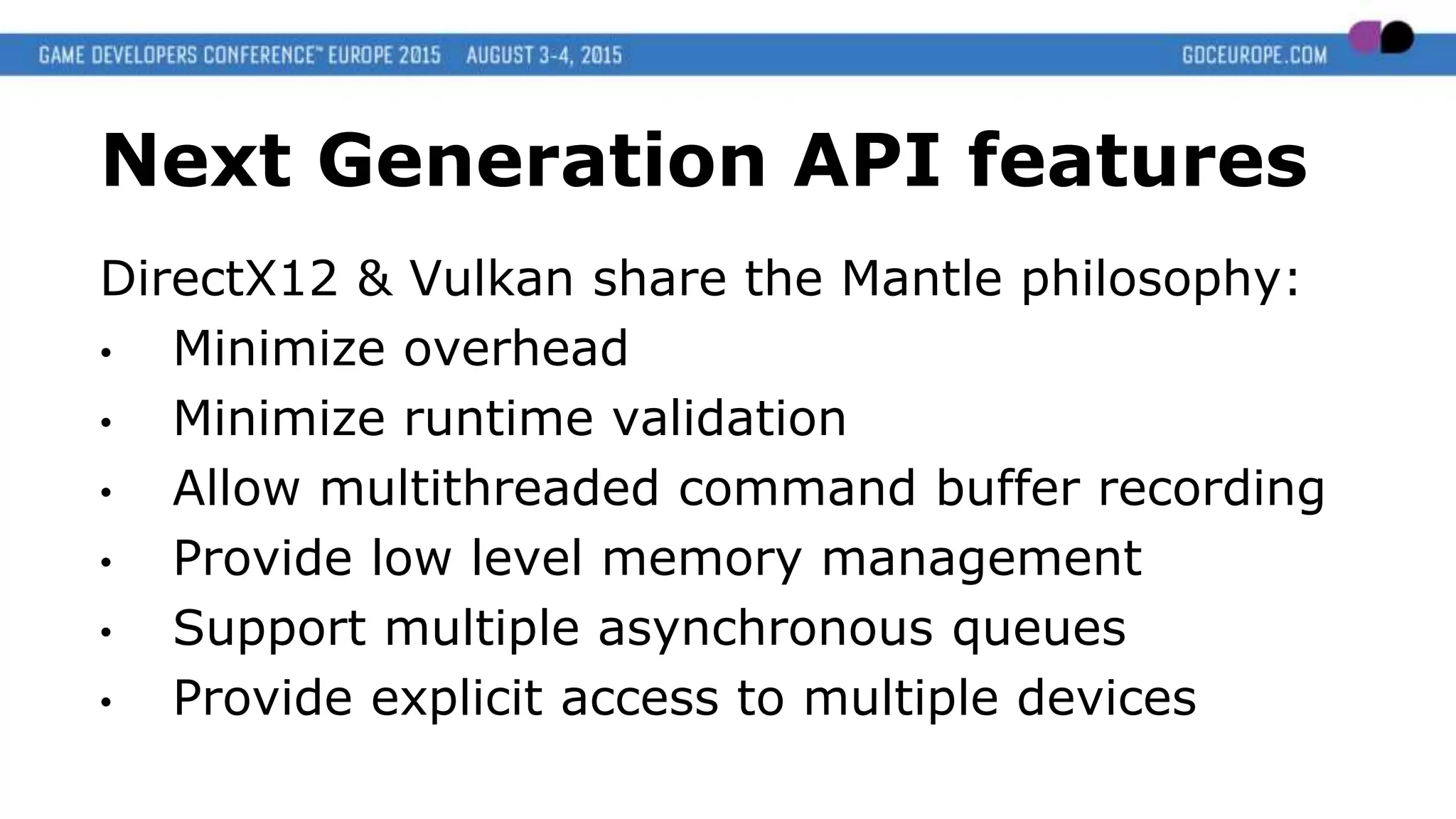

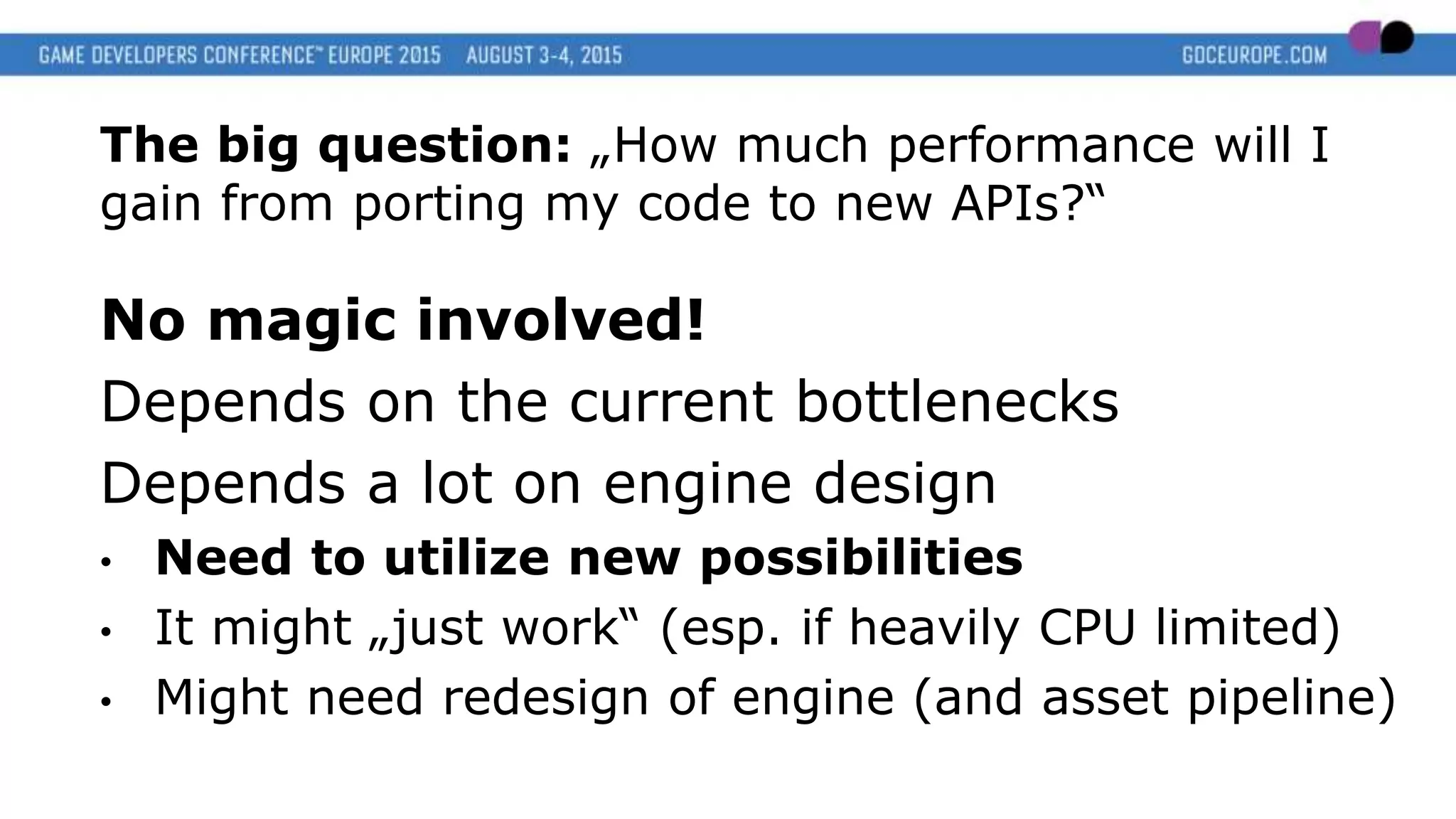

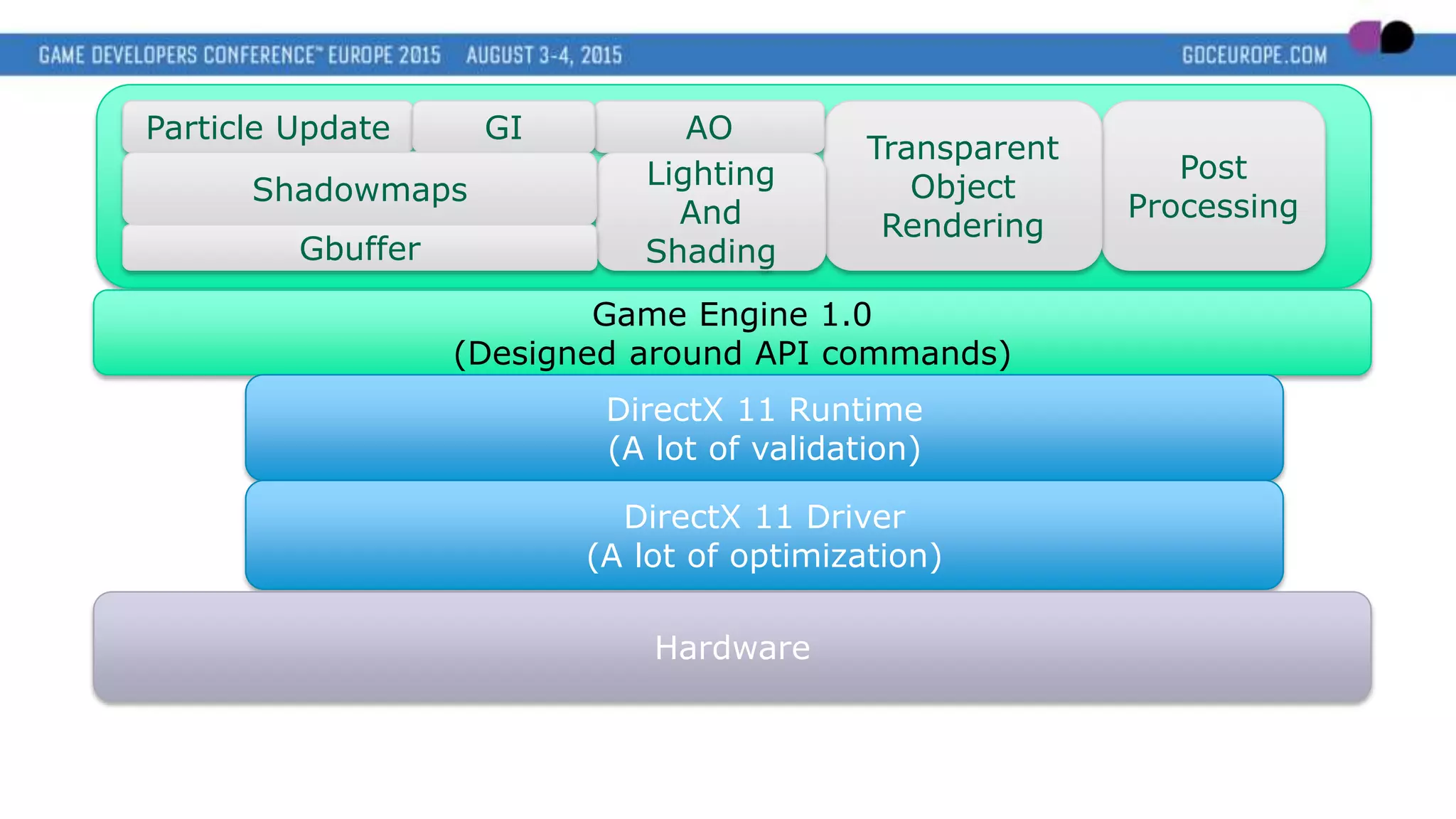

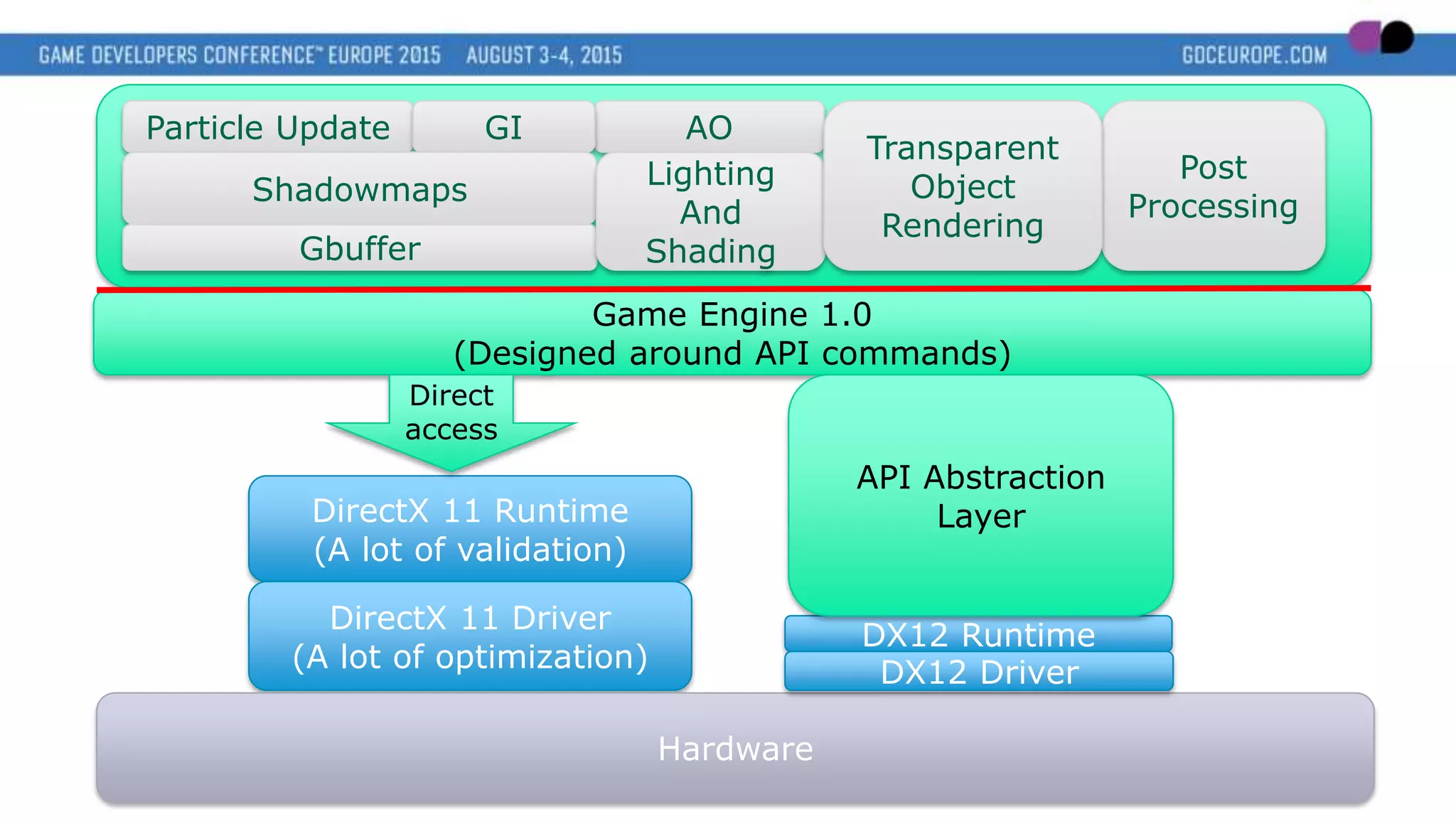

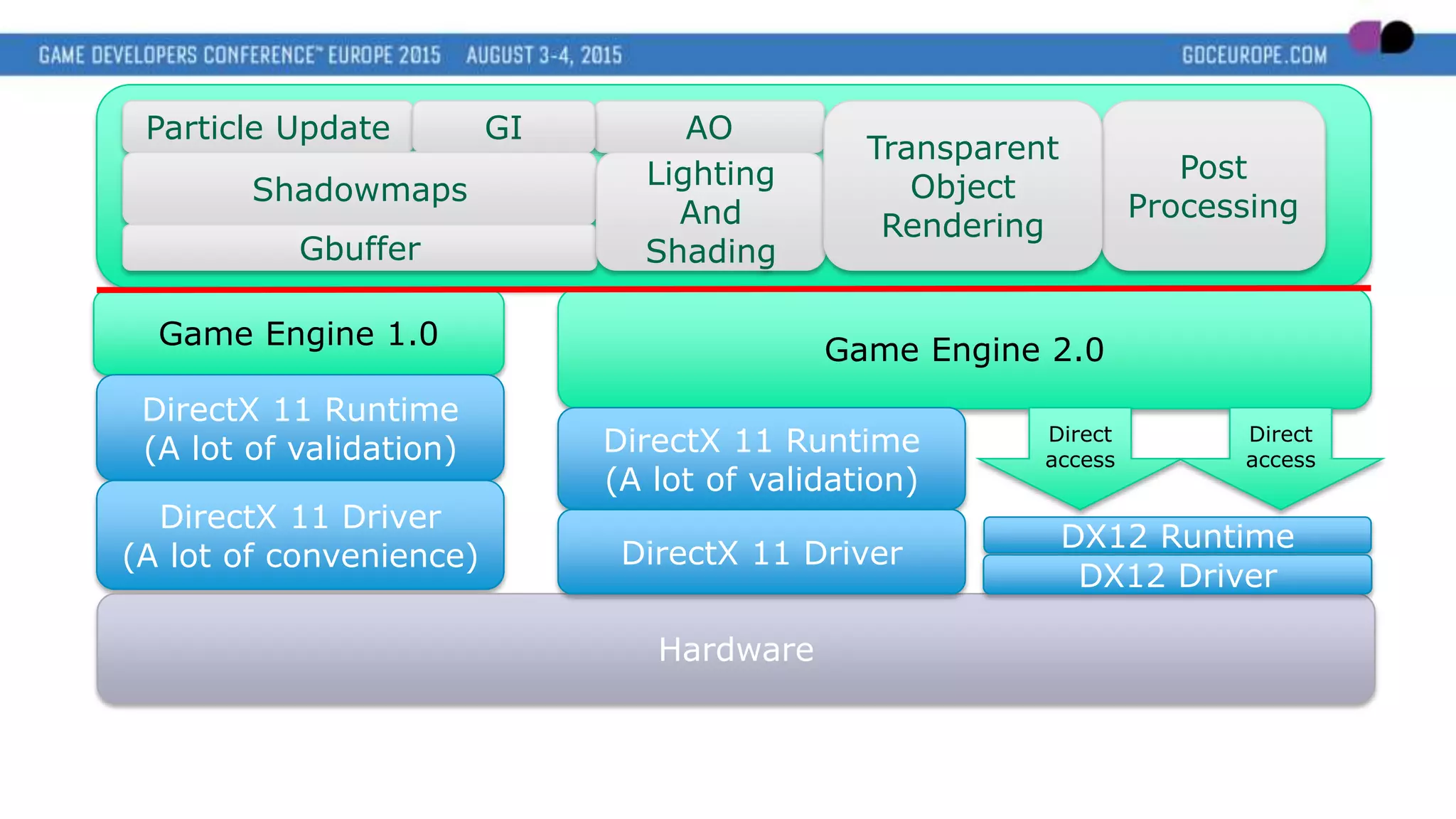

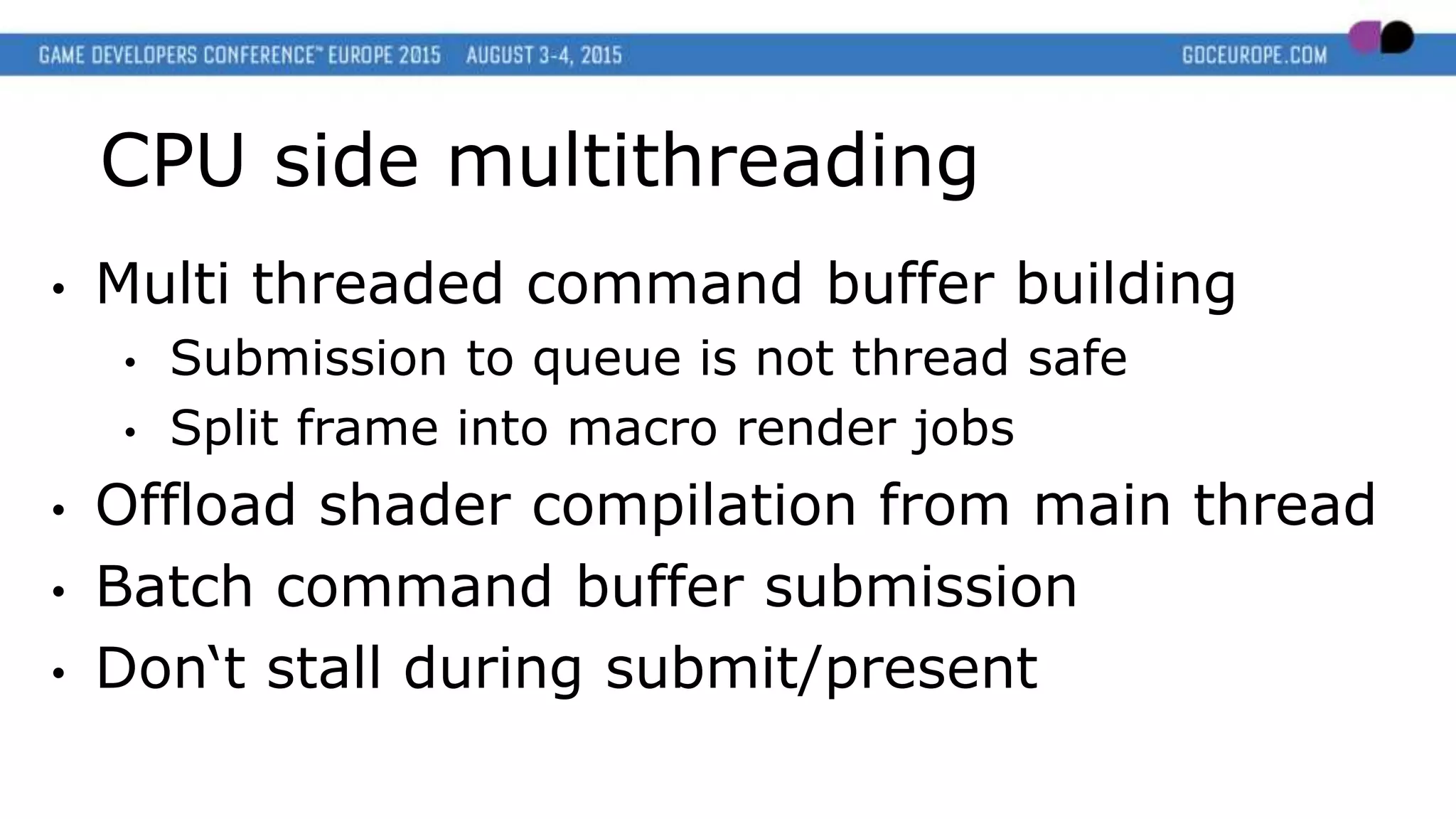

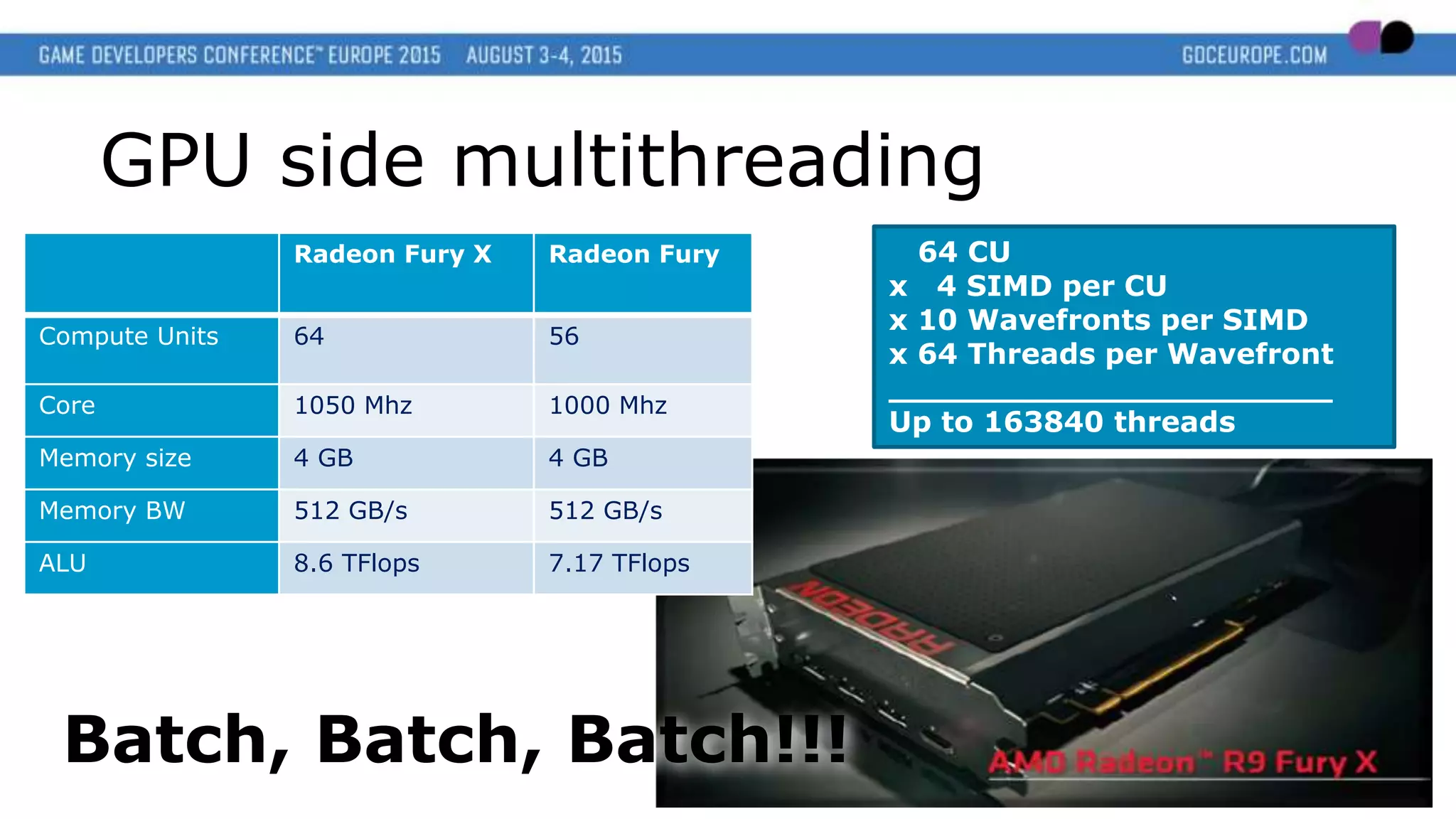

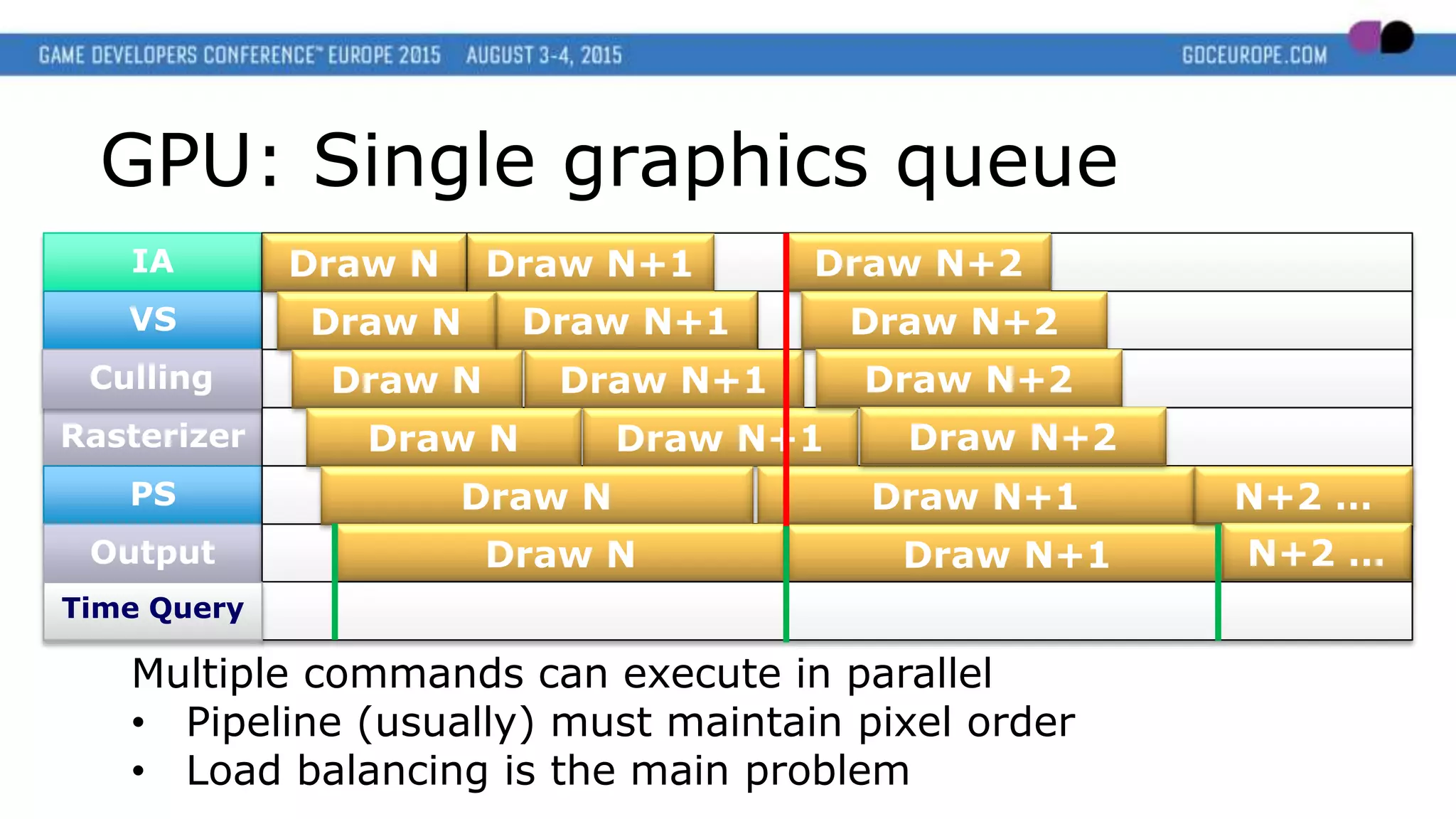

This document discusses the new low-level graphics APIs, DX12 and Vulkan, which aim to provide lower CPU overhead and more direct hardware access than earlier APIs. It covers increased parallelism, explicit memory management, resource binding through descriptor sets and pipeline state objects, and best practices such as batching draw calls and barriers and using multiple asynchronous queues. Overall, the new APIs give applications more explicit control over the GPU, but realizing the performance gains depends on following best practices for parallelism, memory usage, and command batching.
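The parallel recording and batched submission described above can be illustrated with a minimal Python model. It does not call DX12 or Vulkan: `CommandList`, `Queue`, `record_chunk`, and `record_frame` are hypothetical stand-ins, and the threads only illustrate the ownership pattern. Each worker records its own command list, which needs no locking because no list is shared, and the finished lists reach the queue in a single `submit` call. This is analogous to passing an array of command lists to `ID3D12CommandQueue::ExecuteCommandLists` or an array of submit infos to `vkQueueSubmit`.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass, field


@dataclass
class CommandList:
    """Hypothetical stand-in for an ID3D12GraphicsCommandList or VkCommandBuffer."""
    name: str
    commands: list = field(default_factory=list)

    def draw(self, mesh_id: int) -> None:
        # Recording only appends to this list's own storage, so different
        # threads can record different lists without locking.
        self.commands.append(("draw", mesh_id))


@dataclass
class Queue:
    """Hypothetical stand-in for a GPU command queue."""
    kind: str
    submissions: list = field(default_factory=list)

    def submit(self, lists: list) -> None:
        # One call hands over the whole batch, like passing an array of
        # command lists to ExecuteCommandLists / vkQueueSubmit.
        self.submissions.append([cl.name for cl in lists])


def record_chunk(index: int, mesh_ids: list) -> CommandList:
    """Record one contiguous chunk of draws into its own command list."""
    cl = CommandList(name=f"chunk{index}")
    for mesh_id in mesh_ids:
        cl.draw(mesh_id)
    return cl


def record_frame(mesh_ids: list, workers: int = 4) -> list:
    """Split the draws into contiguous chunks and record them on worker threads."""
    size = max(1, -(-len(mesh_ids) // workers))  # ceiling division
    chunks = [mesh_ids[i:i + size] for i in range(0, len(mesh_ids), size)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() yields results in input order, so draw order is preserved.
        return list(pool.map(record_chunk, range(len(chunks)), chunks))


if __name__ == "__main__":
    graphics = Queue("graphics")
    lists = record_frame(list(range(10)), workers=4)
    graphics.submit(lists)  # one submission for the whole frame
    print(graphics.submissions)  # [['chunk0', 'chunk1', 'chunk2', 'chunk3']]
```

In a real renderer each recording thread would also need its own command allocator (DX12) or command pool (Vulkan), and work spread across multiple queues would be synchronized with fences or semaphores; the model leaves all of that out.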

![IA

VS

Rasterizer

PS

Output

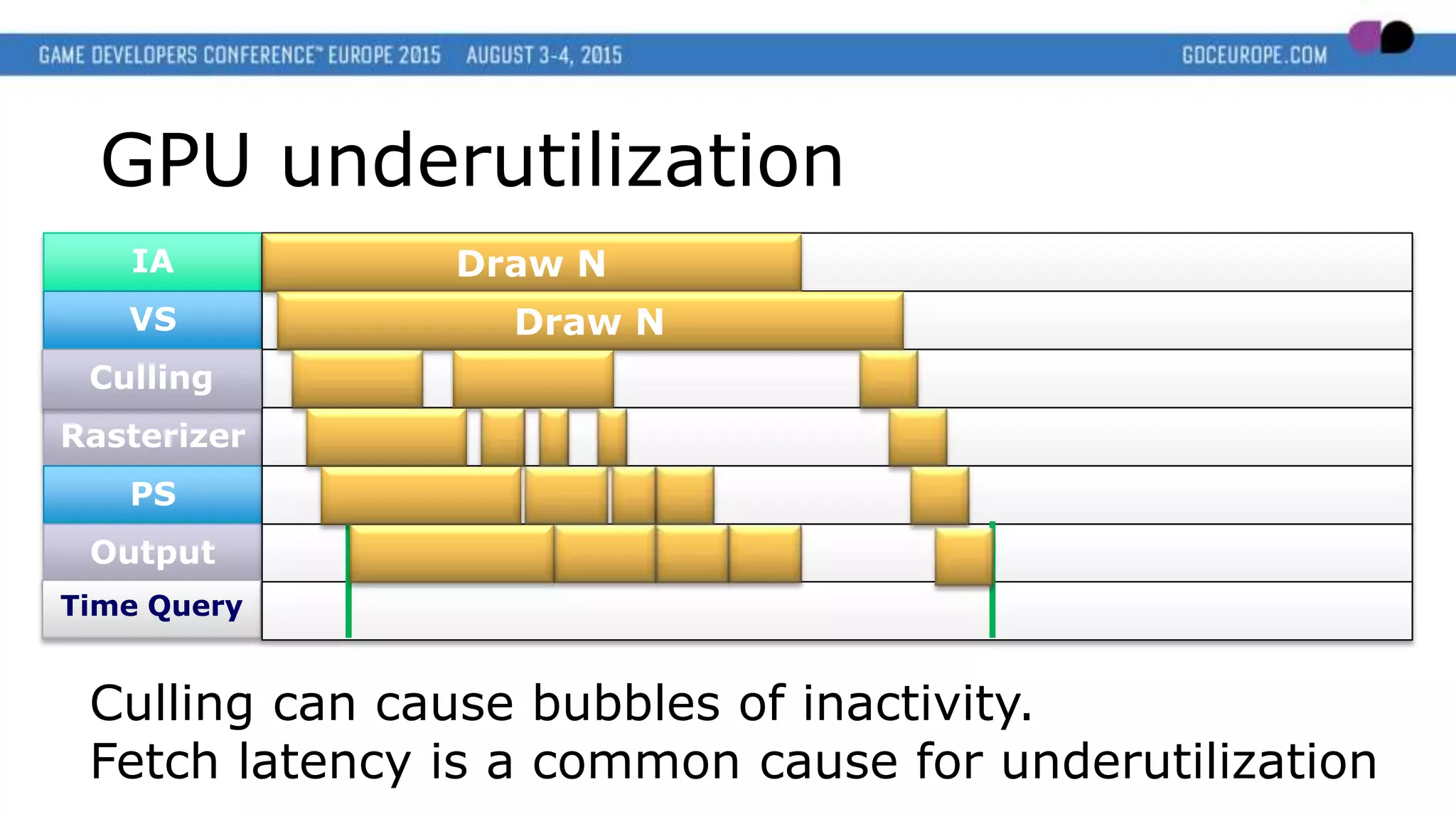

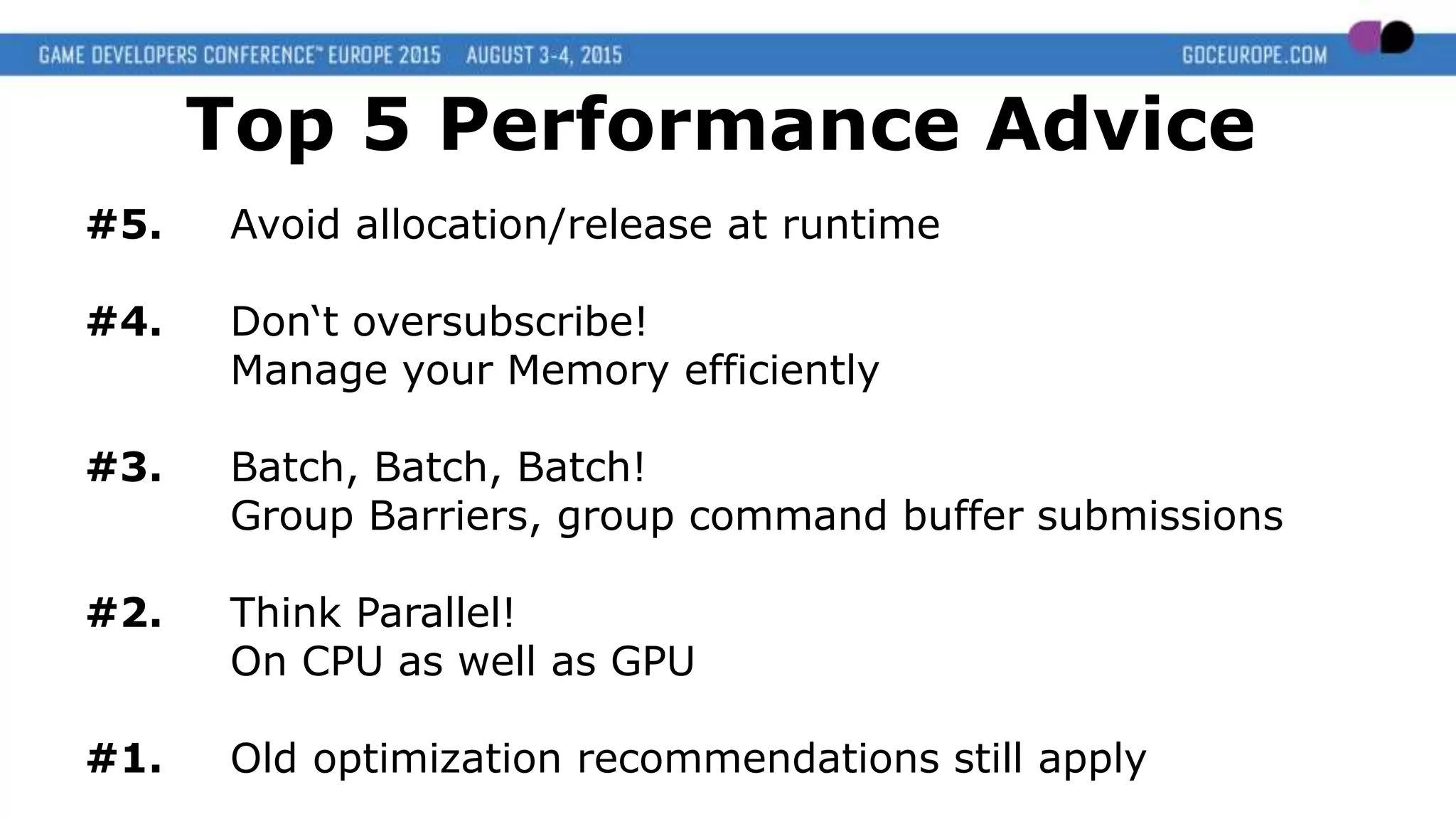

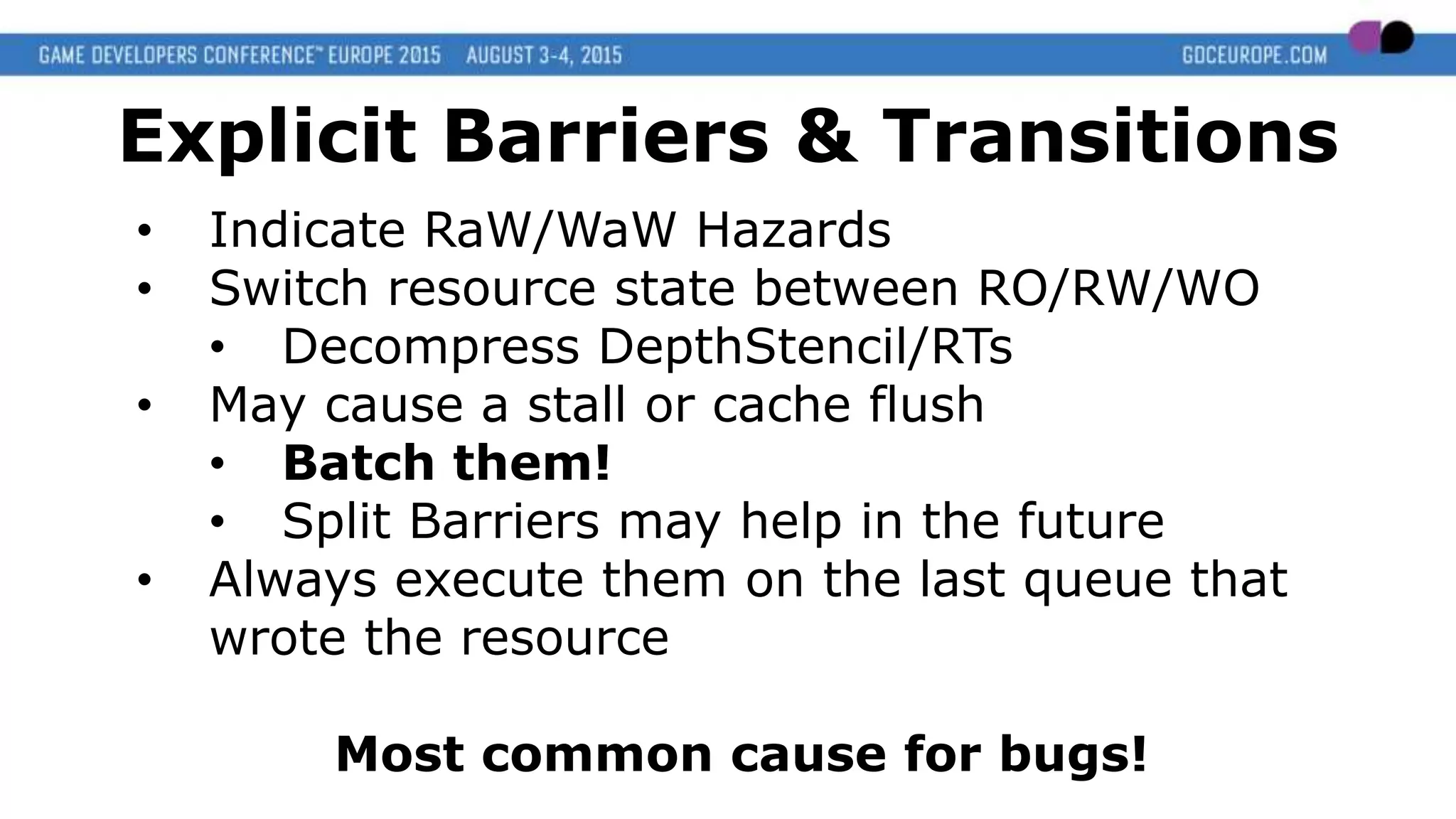

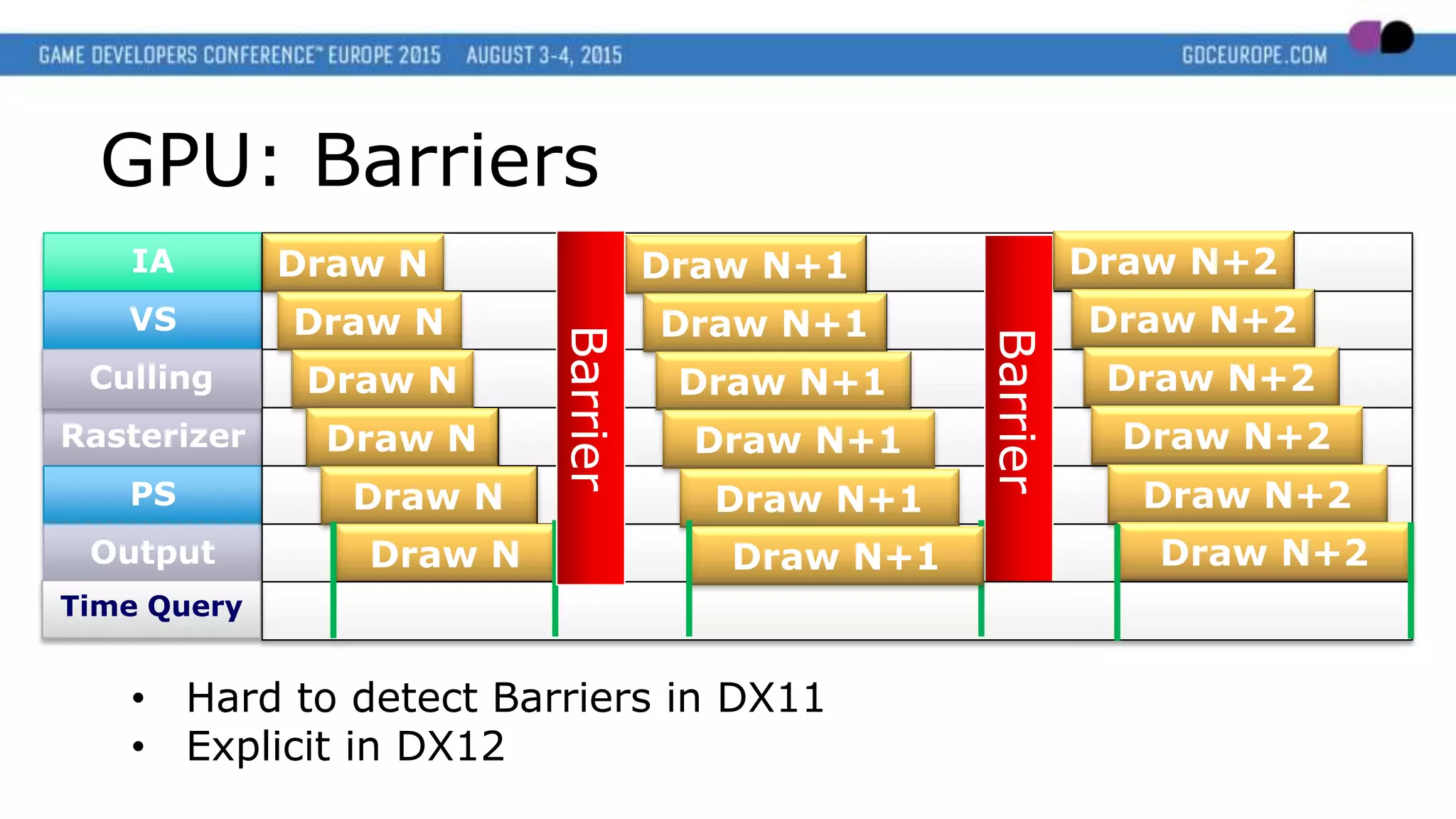

GPU: Barriers

• Batch them!

• [DX12] In the future split barriers may help

Culling

Draw N

Draw N

Draw N

Draw N

Draw N

Draw N

Time Query

Draw N+2

Draw N+2

Draw N+2

Draw N+2

Draw N+2

Draw N+2DoubleBarrier

Draw N+1

Draw N+1

Draw N+1

Draw N+1

Draw N+1

Draw N+1](https://image.slidesharecdn.com/hodesstephandirectx12andvulkan-150820145027-lva1-app6892/75/DX12-Vulkan-Dawn-of-a-New-Generation-of-Graphics-APIs-20-2048.jpg)
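The "Batch them!" advice for barriers can be sketched with a small Python model. Again the names (`Transition`, `CommandRecorder`) and the string resource states are hypothetical, not real D3D12 or Vulkan types. Transitions are queued and flushed in a single call right before the next draw, mirroring how `ID3D12GraphicsCommandList::ResourceBarrier` and `vkCmdPipelineBarrier` both accept arrays of barriers. The `begin_split`/`end_split` pair models DX12 split barriers (`D3D12_RESOURCE_BARRIER_FLAG_BEGIN_ONLY` and `D3D12_RESOURCE_BARRIER_FLAG_END_ONLY`): the transition starts early, unrelated work runs in between, and the resource may only be used after the end half.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Transition:
    """Hypothetical resource-state transition, e.g. render target -> shader read."""
    resource: str
    before: str
    after: str
    flag: str = "none"  # "none", "begin_only" or "end_only"


@dataclass
class CommandRecorder:
    """Hypothetical recorder that logs barrier calls instead of issuing them."""
    pending: list = field(default_factory=list)
    in_flight: dict = field(default_factory=dict)
    barrier_calls: list = field(default_factory=list)
    draws: list = field(default_factory=list)

    def transition(self, resource: str, before: str, after: str) -> None:
        # Queue the barrier instead of issuing it immediately.
        self.pending.append(Transition(resource, before, after))

    def begin_split(self, resource: str, before: str, after: str) -> None:
        # Models the BEGIN_ONLY half of a DX12 split barrier: the transition
        # may start while unrelated work keeps running.
        t = Transition(resource, before, after, "begin_only")
        self.in_flight[resource] = t
        self.pending.append(t)

    def end_split(self, resource: str) -> None:
        # Models the END_ONLY half; it must be recorded before the resource
        # is used in its new state.
        t = self.in_flight.pop(resource)
        self.pending.append(Transition(t.resource, t.before, t.after, "end_only"))

    def flush_barriers(self) -> None:
        # Issue every pending barrier in one call, like passing an array to
        # ResourceBarrier / vkCmdPipelineBarrier.
        if self.pending:
            self.barrier_calls.append(tuple(self.pending))
            self.pending.clear()

    def draw(self, name: str, reads: tuple = ()) -> None:
        # Reading a resource whose split barrier has not ended is an error.
        for resource in reads:
            if resource in self.in_flight:
                raise RuntimeError(f"{resource} is still mid-transition")
        self.flush_barriers()  # barriers must land before dependent work
        self.draws.append(name)


if __name__ == "__main__":
    rec = CommandRecorder()
    rec.transition("albedo", "render_target", "shader_read")
    rec.transition("normal", "render_target", "shader_read")
    rec.draw("lighting", reads=("albedo", "normal"))  # both barriers, one call
    rec.begin_split("shadow_map", "depth_write", "shader_read")
    rec.draw("particles")  # unrelated work overlaps the transition
    rec.end_split("shadow_map")
    rec.draw("shadowed", reads=("shadow_map",))
    print(len(rec.barrier_calls))  # 3
```

The usual rationale for this design is that each flush turns many transitions into one call, so the driver can resolve them together (for example, with one cache flush or wait) rather than paying that cost once per barrier.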