This document summarizes key aspects of deep neural networks, including:

1. The number of parameters in a neural network is determined by the number and size of layers and weights.

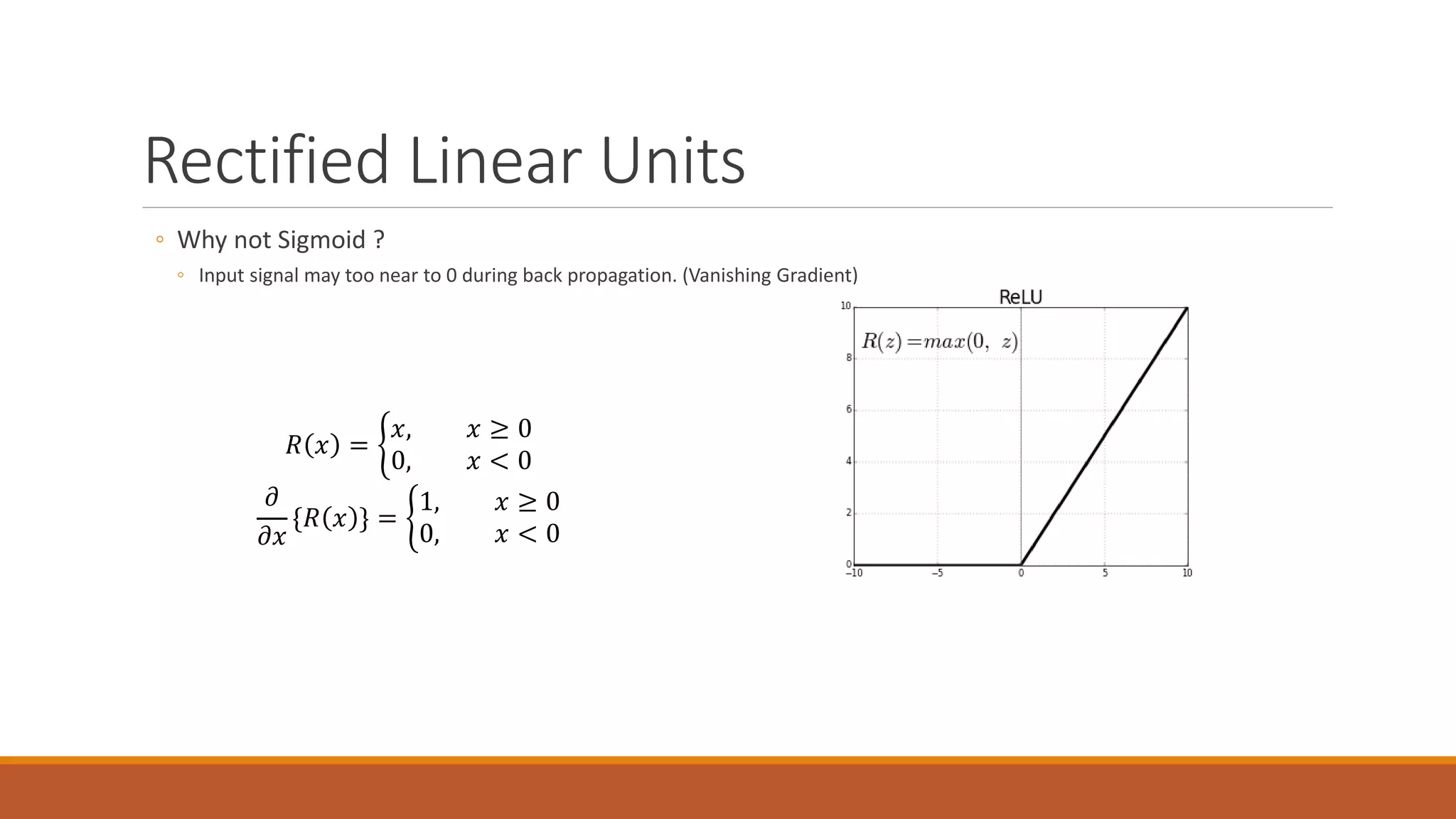

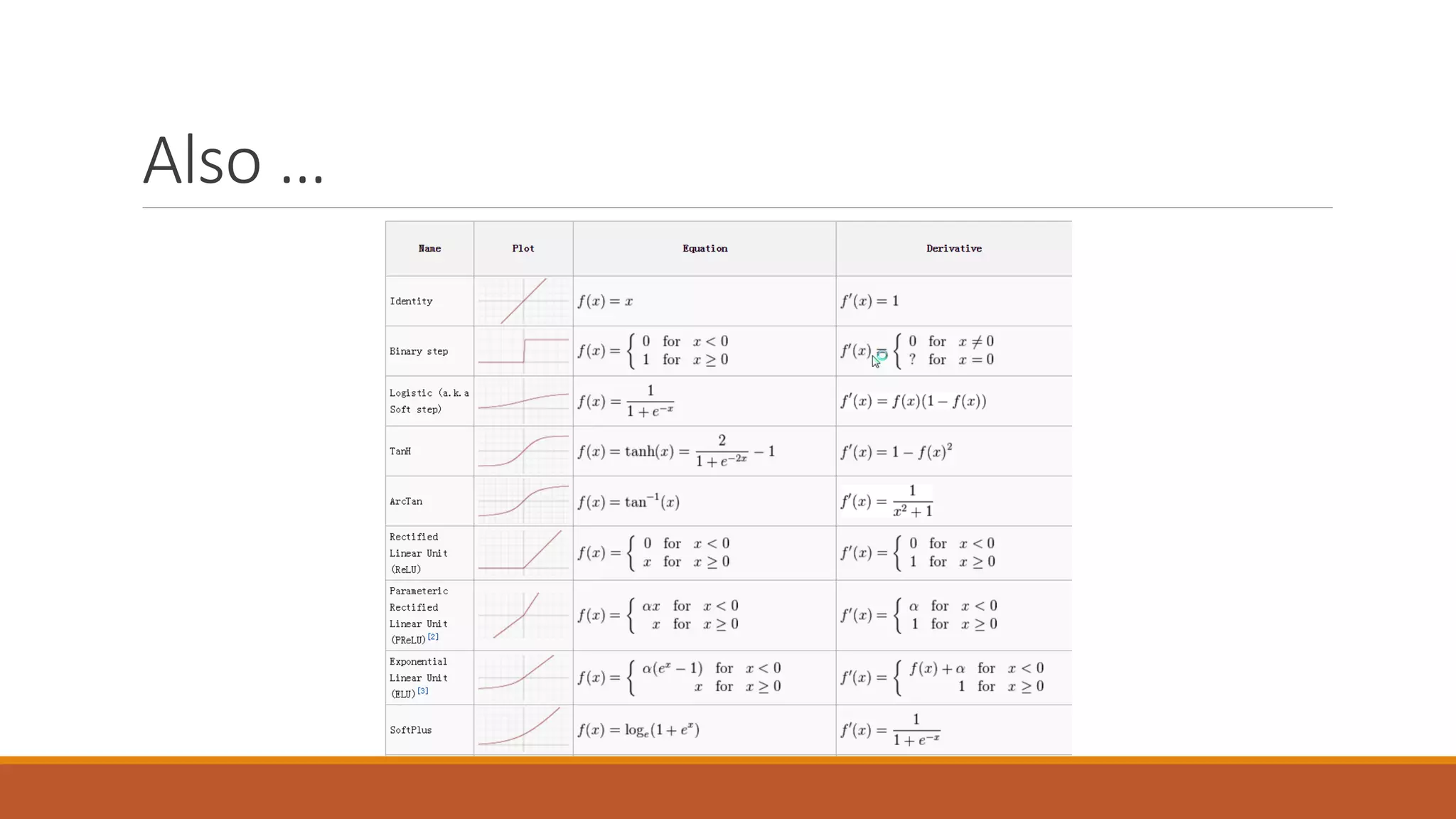

2. Rectified linear units (ReLU) are used instead of sigmoid to avoid vanishing gradients, where ReLU keeps inputs above 0 and sets others to 0.

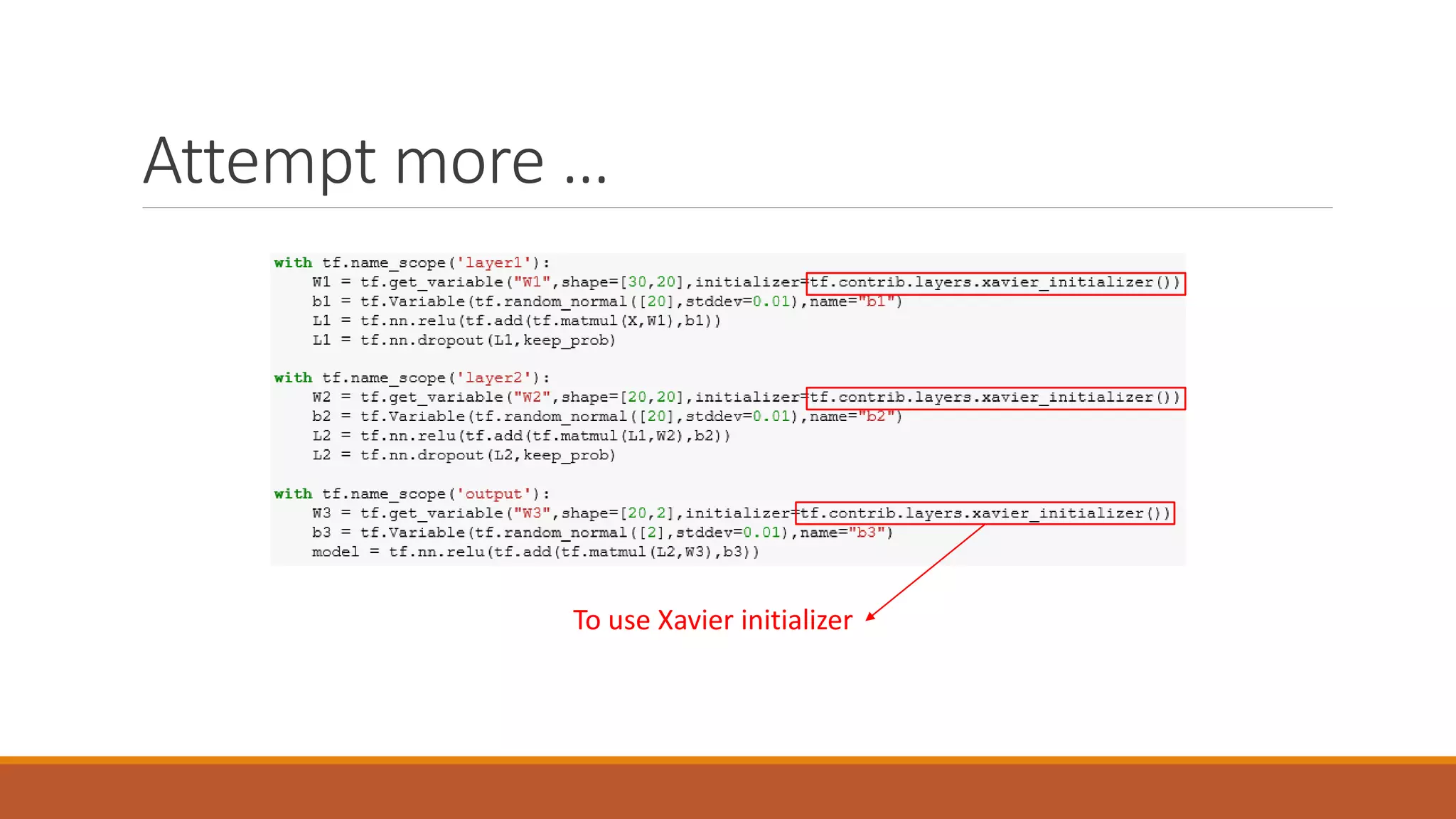

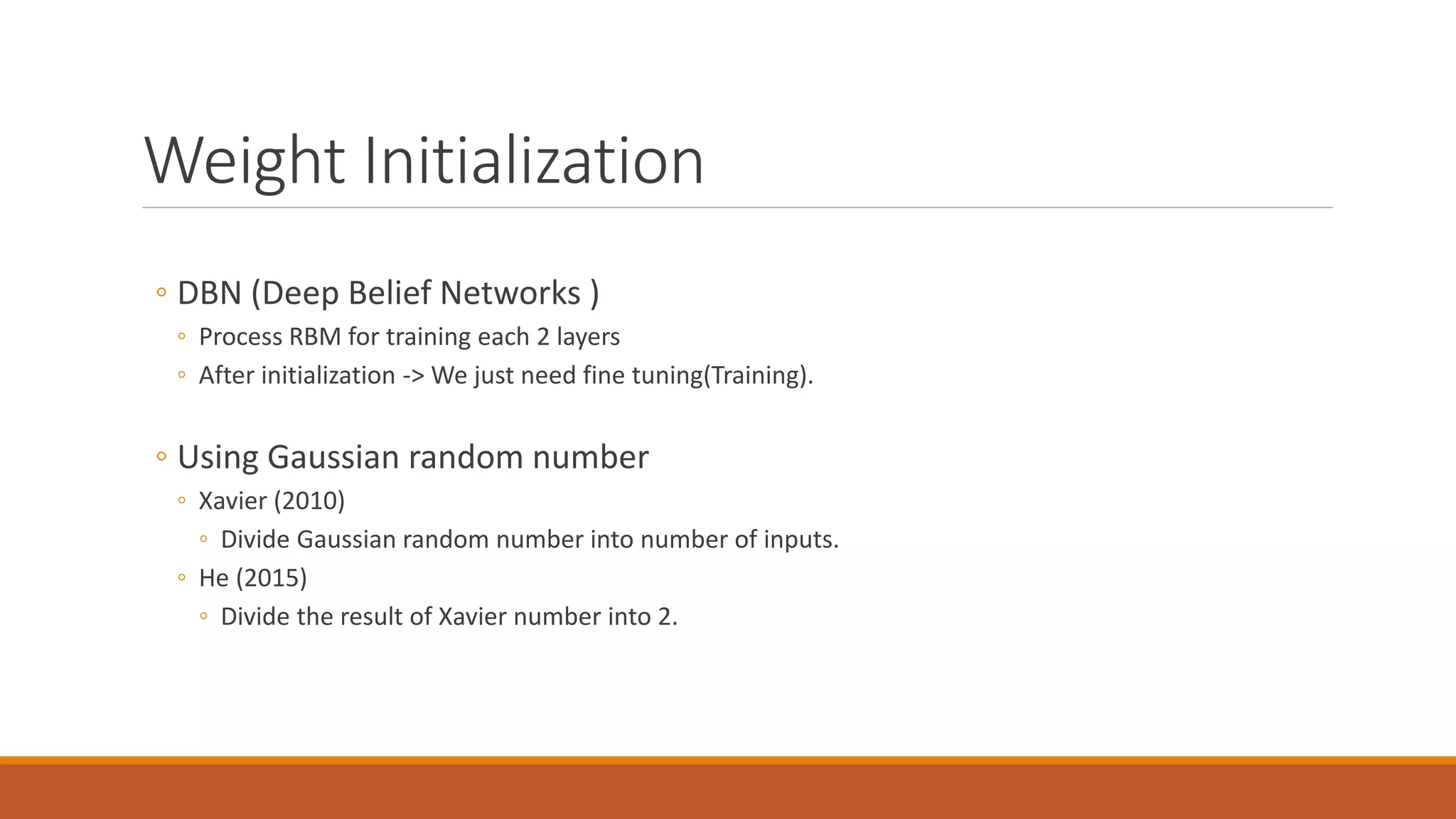

3. Weight initialization, such as Xavier and He initialization, helps training by setting initial weights to small random numbers.

![Number of Parameters

From the last presentation …

How many parameters in this linear model ?

X W b S(Y)Y

0

1

0

0

0

Dog !

x

Test data (Image)

[1024x768] image

5 Classes

𝑆𝑆𝑆𝑆𝑆𝑆𝑆𝑆 𝑊𝑊 + 𝑆𝑆𝑆𝑆𝑆𝑆𝑆𝑆 𝐵𝐵 = 𝐼𝐼 𝐼𝐼𝐼𝐼𝐼𝐼𝐼𝐼_𝑠𝑠𝑠𝑠𝑠𝑠𝑠𝑠 ∗ 𝐶𝐶𝐶𝐶𝐶𝐶𝐶𝐶𝐶𝐶𝐶𝐶𝐶𝐶 + 𝐶𝐶𝐶𝐶𝐶𝐶𝐶𝐶𝐶𝐶𝐶𝐶𝐶𝐶 = 3,932,165](https://image.slidesharecdn.com/2deepneuralnetwork-180706073057/75/Deep-Neural-Network-3-2048.jpg)

![Go Deep & Wide !

W1 W2 W3 ?

[784, 256] [256, 256] [256, 10]

Hidden Layer

[10]32

32

X Y

Invisible from the input/output.](https://image.slidesharecdn.com/2deepneuralnetwork-180706073057/75/Deep-Neural-Network-4-2048.jpg)

![One-Hot Encoding

‘M’

[1, 0]

[0, 1]

Malignant

Benign](https://image.slidesharecdn.com/2deepneuralnetwork-180706073057/75/Deep-Neural-Network-16-2048.jpg)