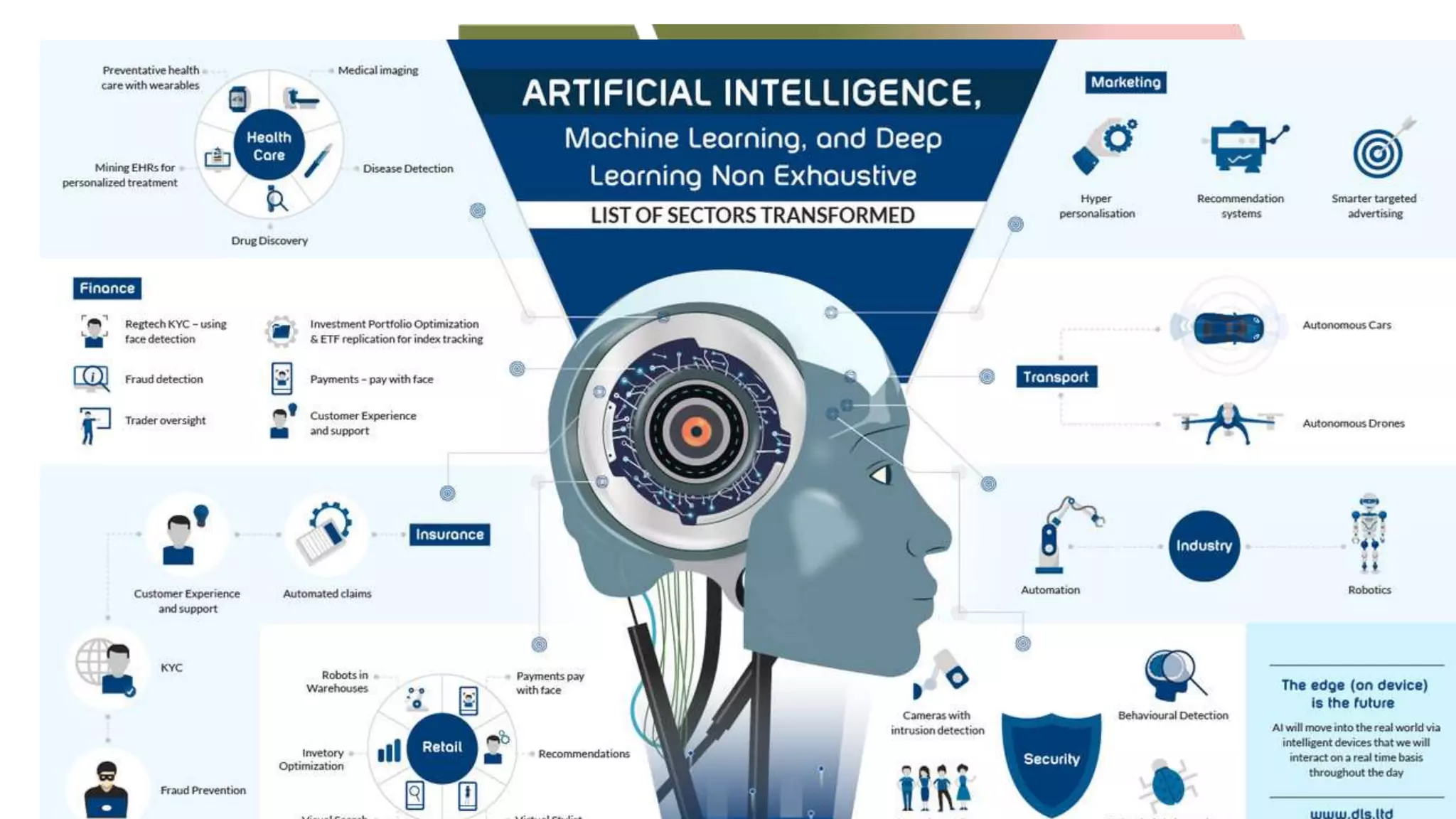

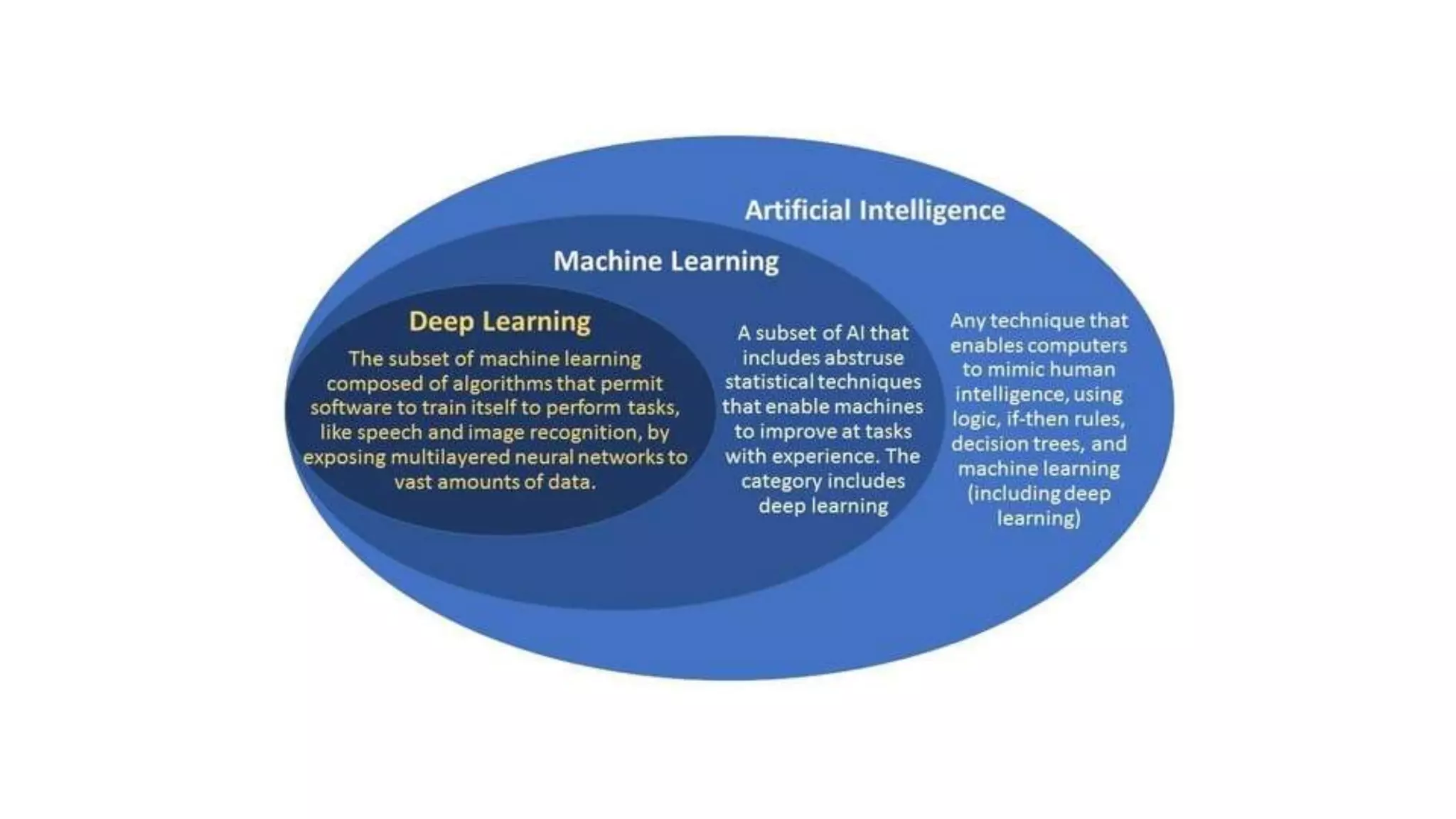

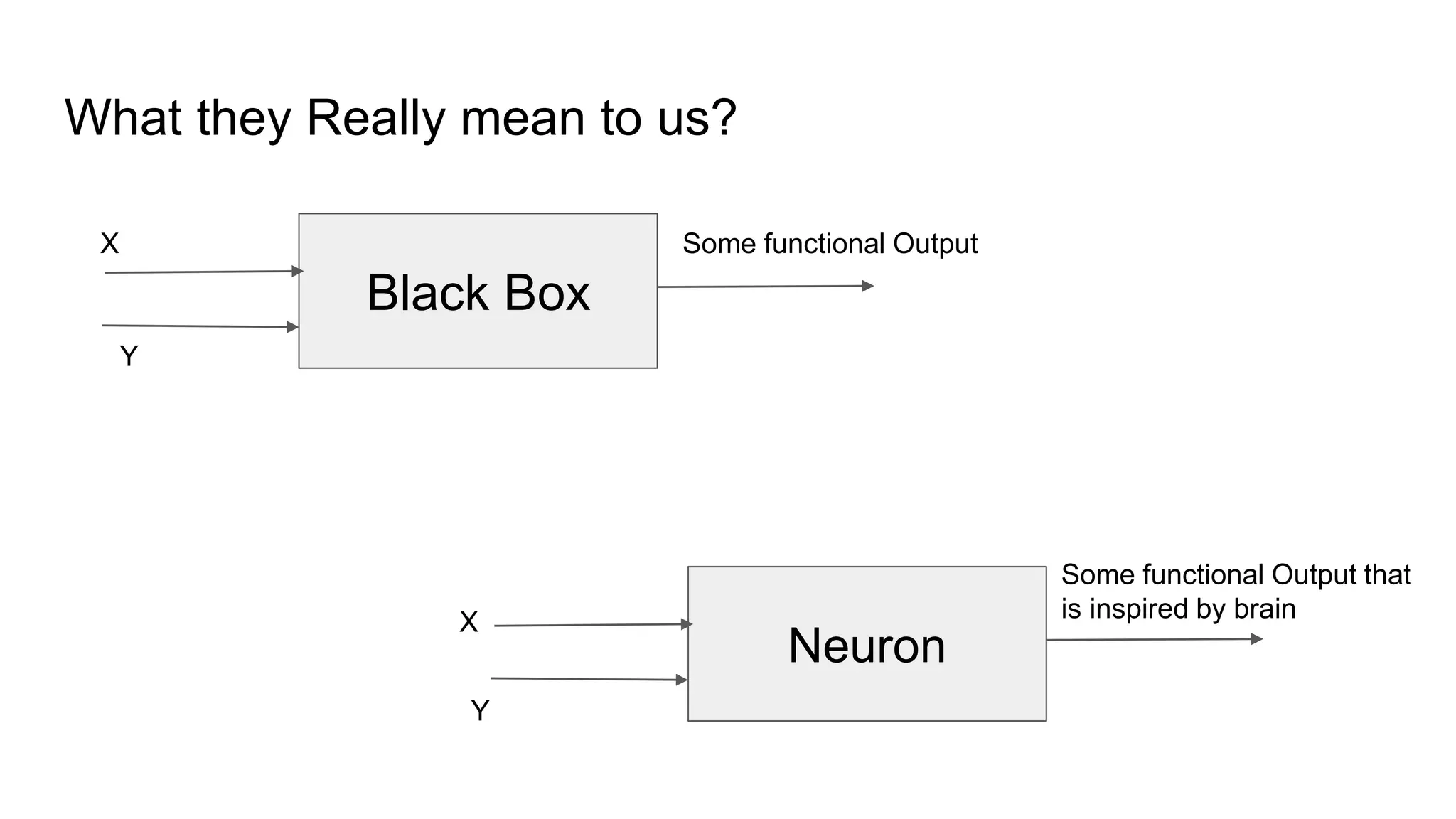

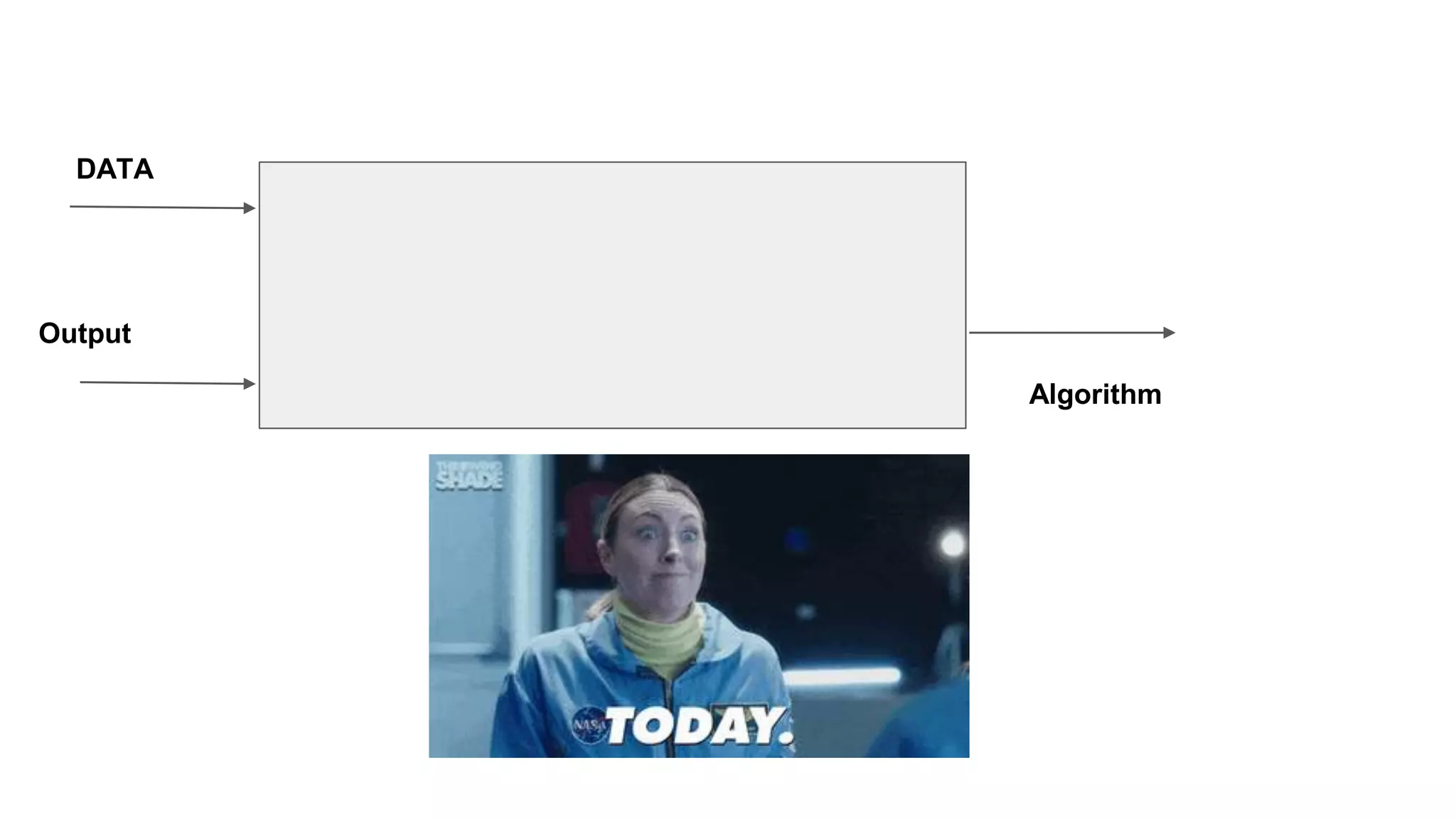

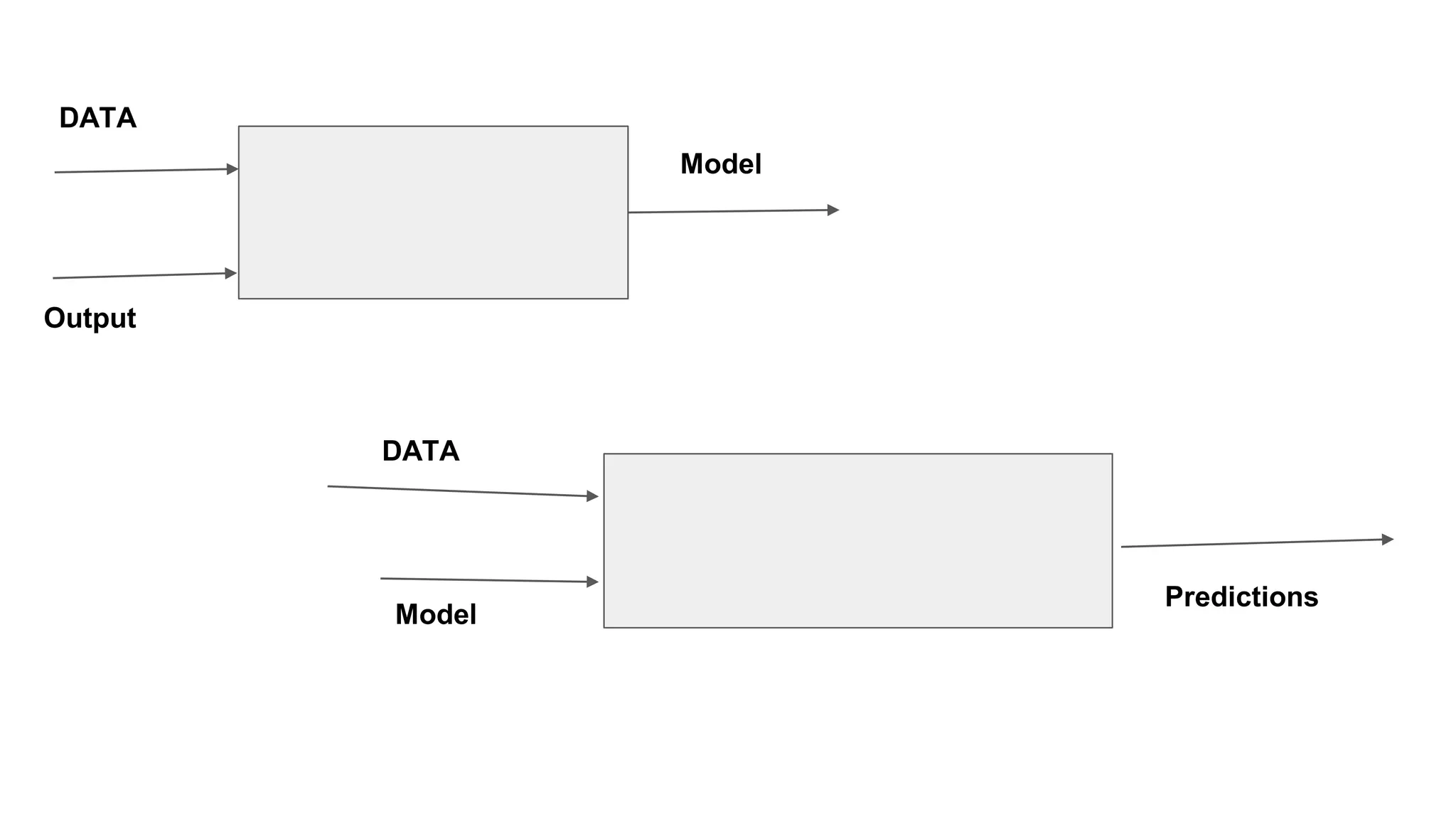

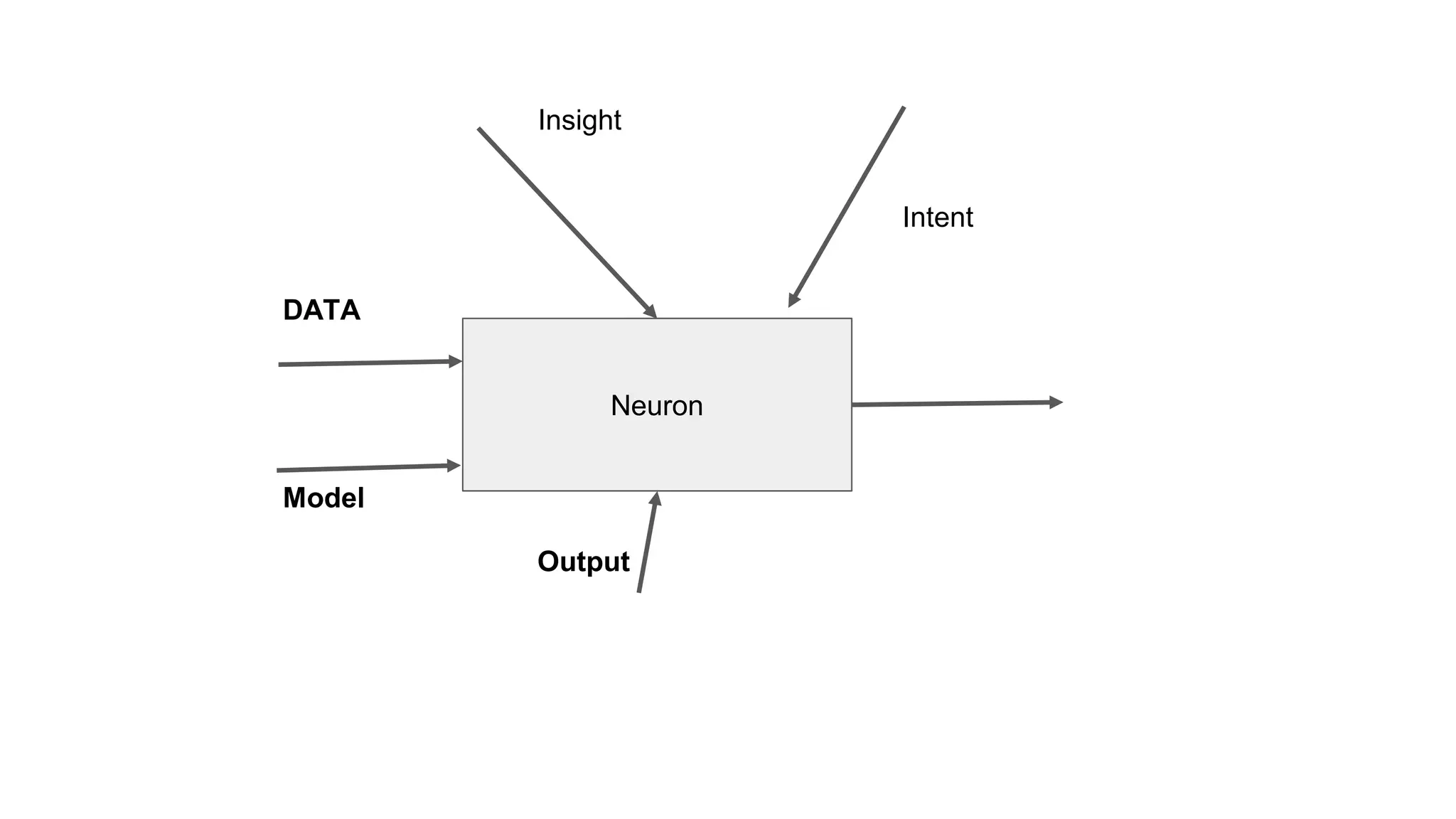

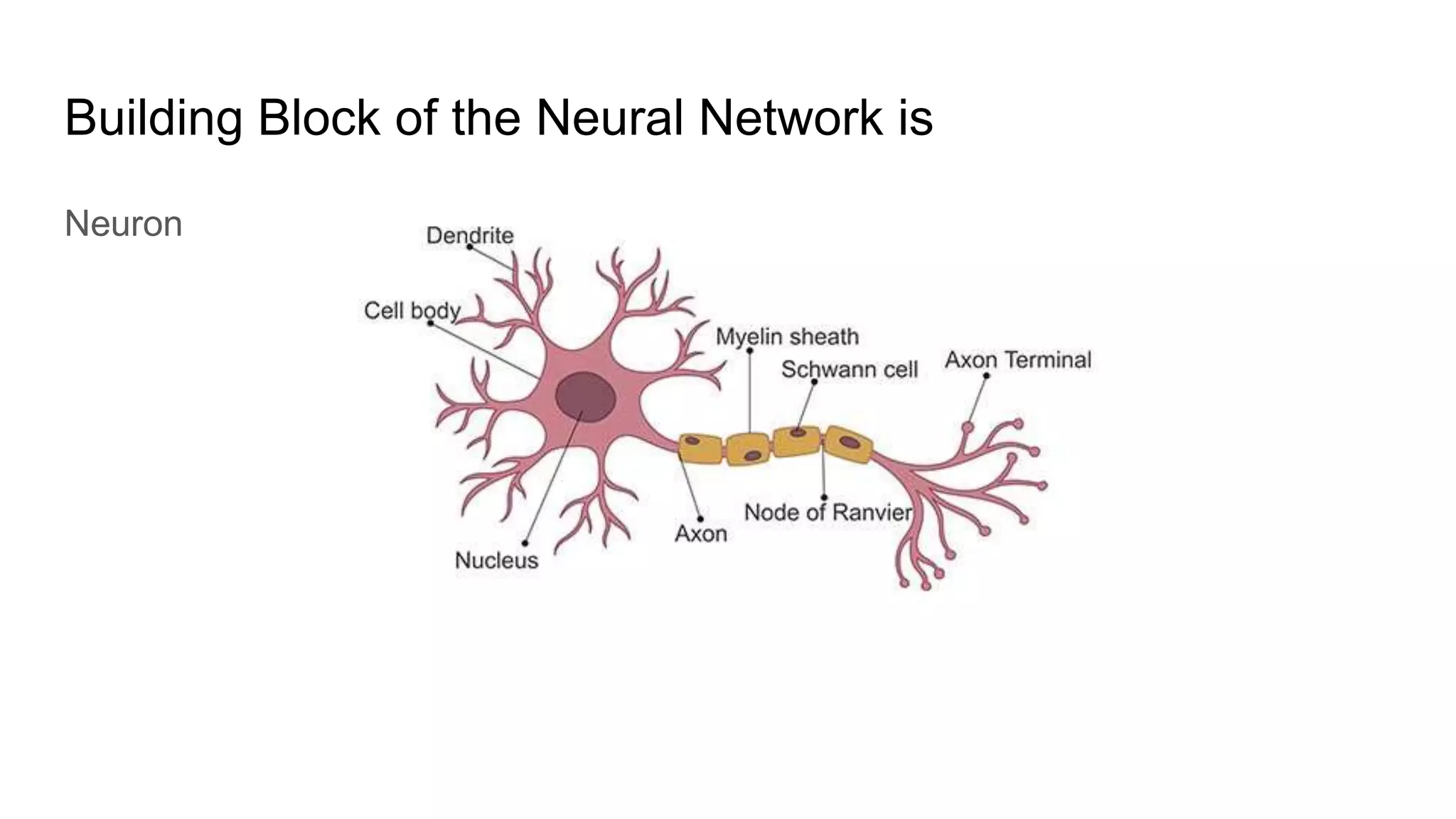

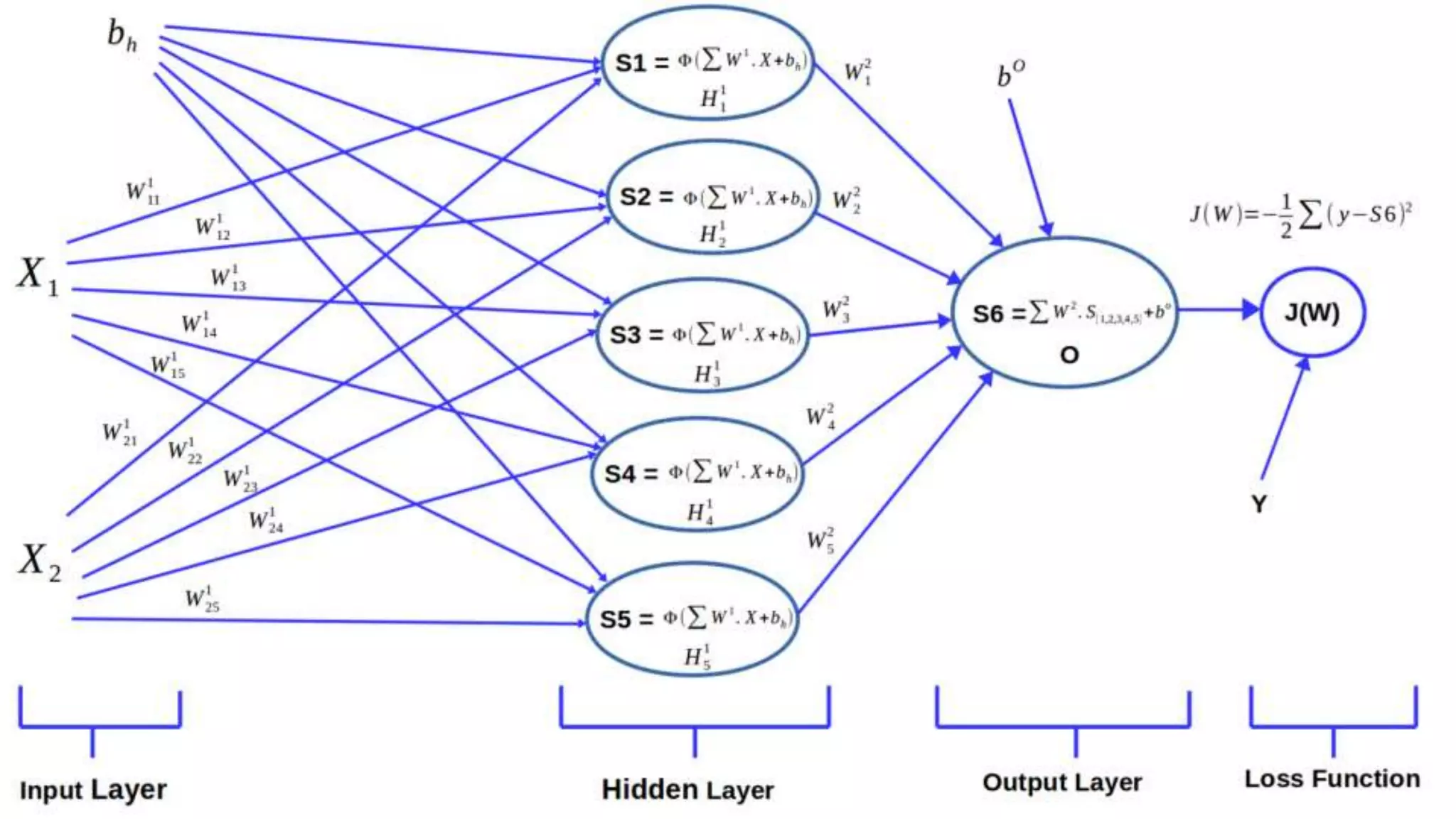

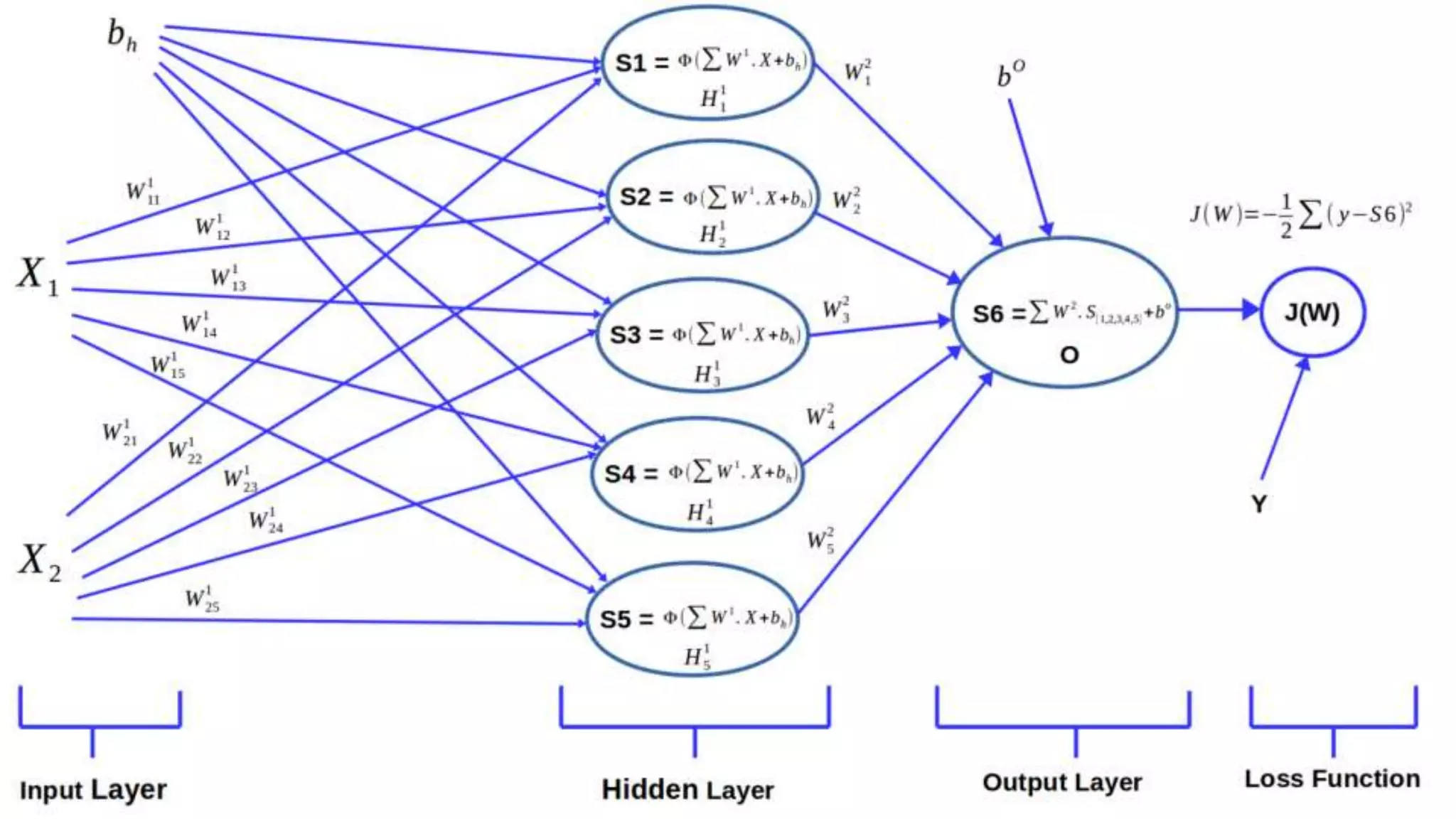

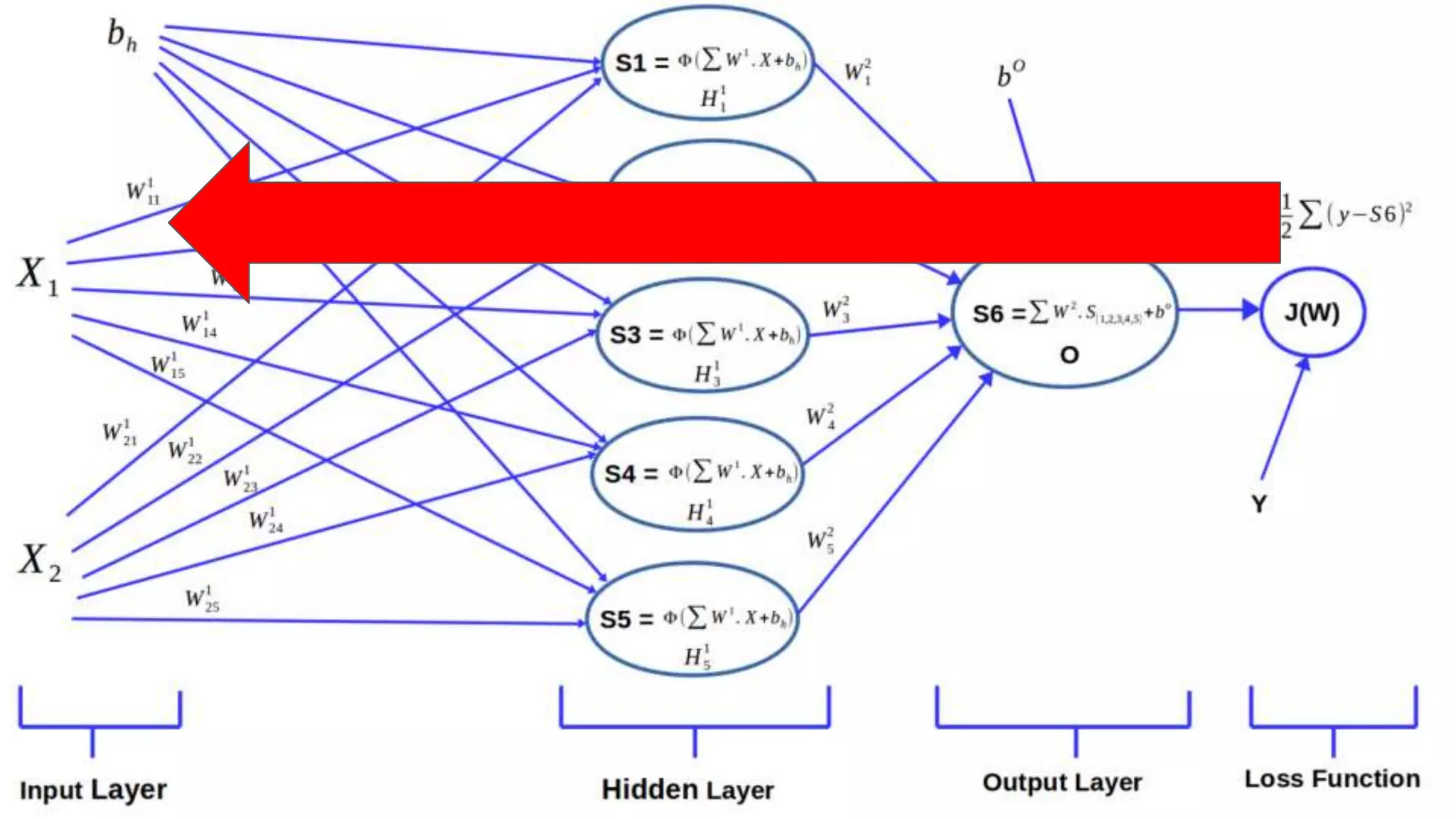

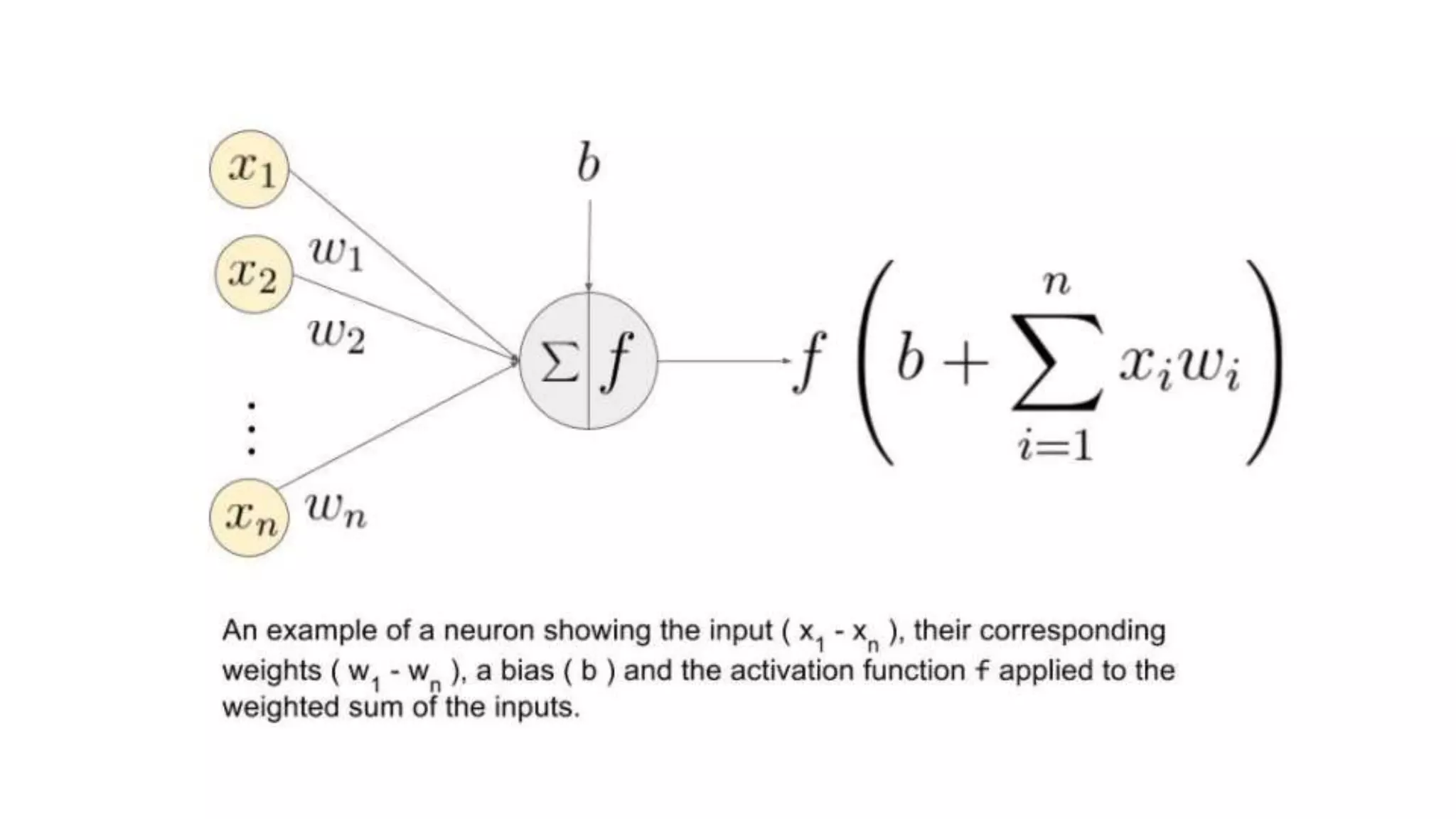

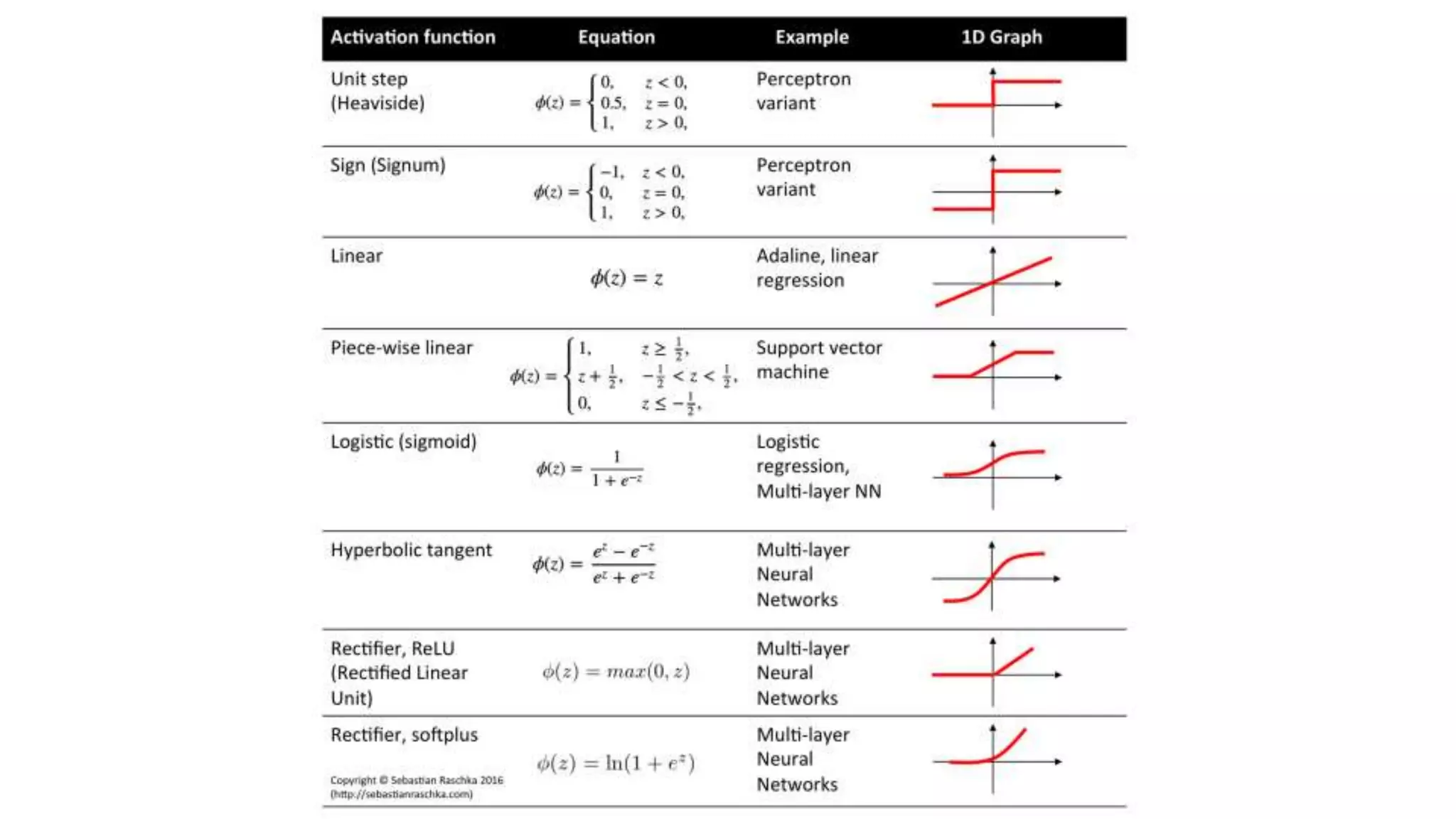

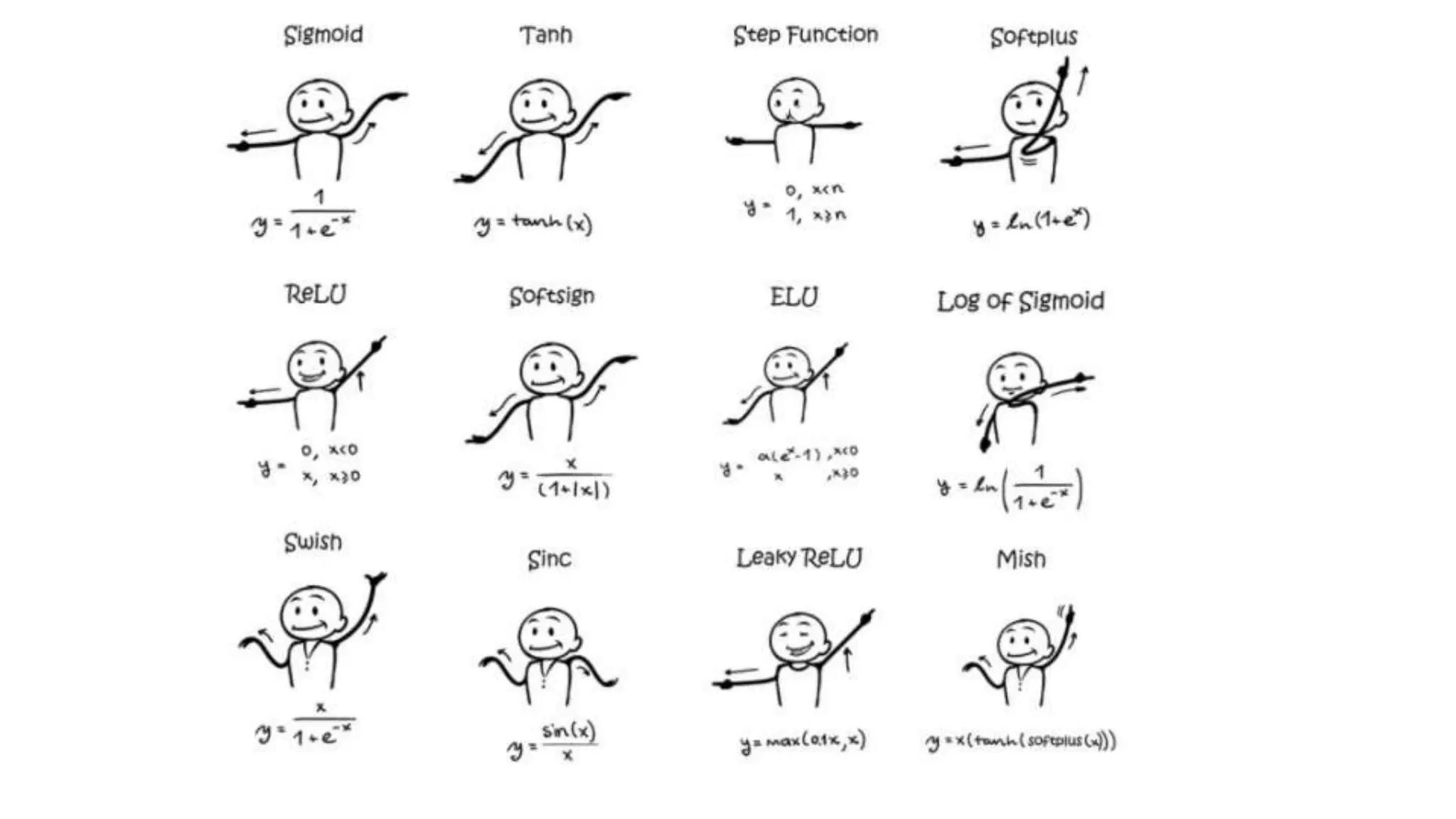

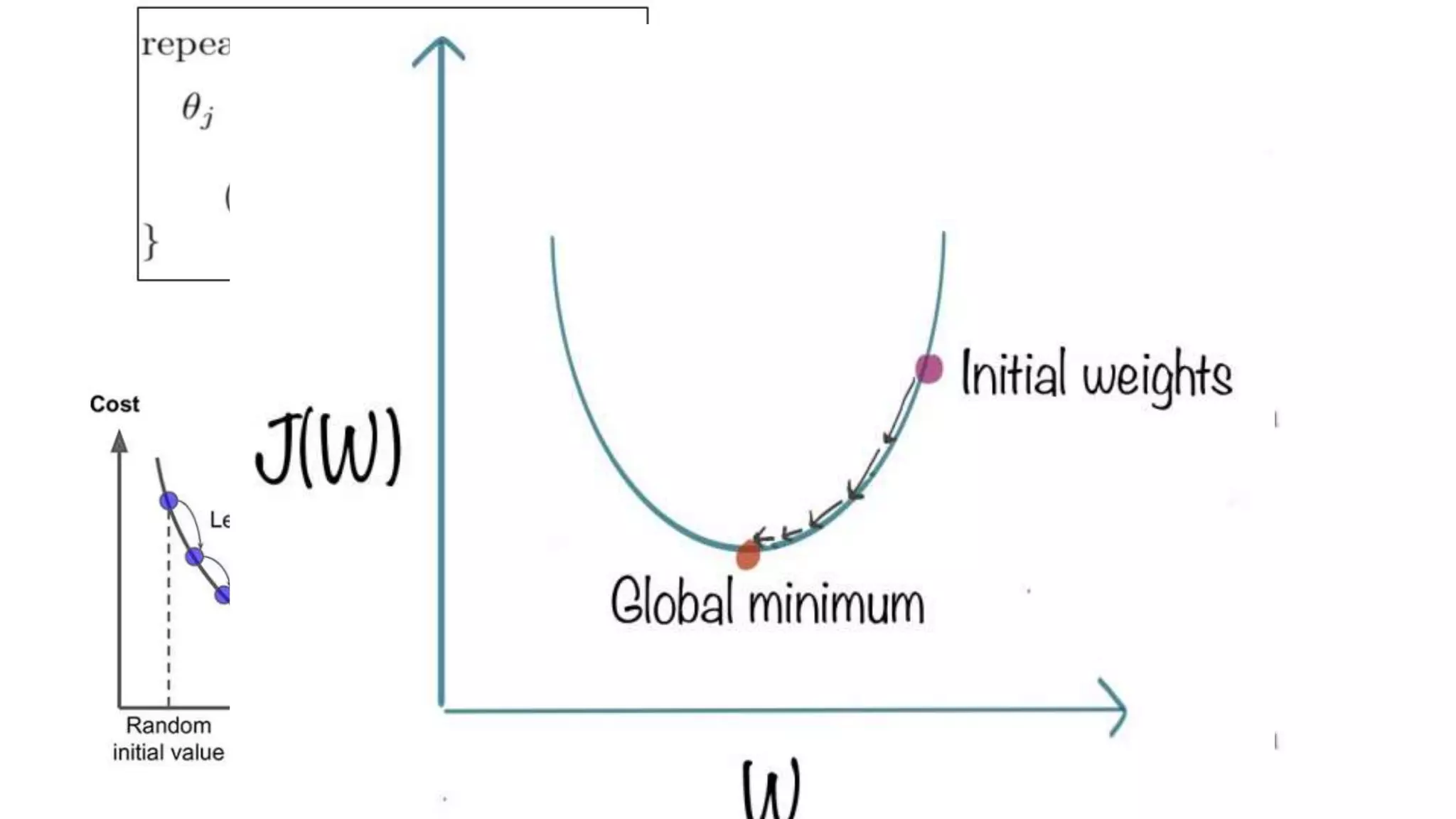

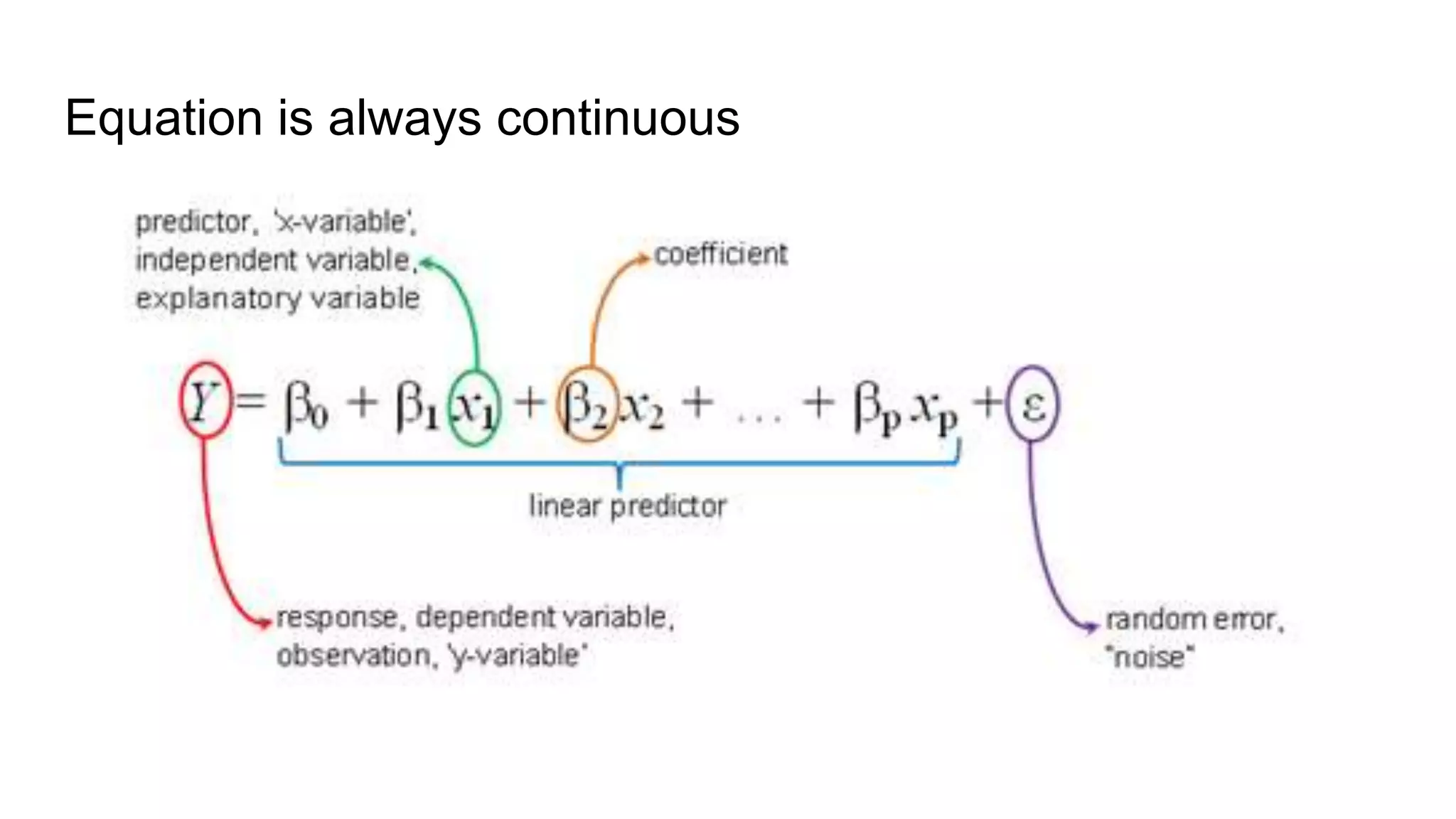

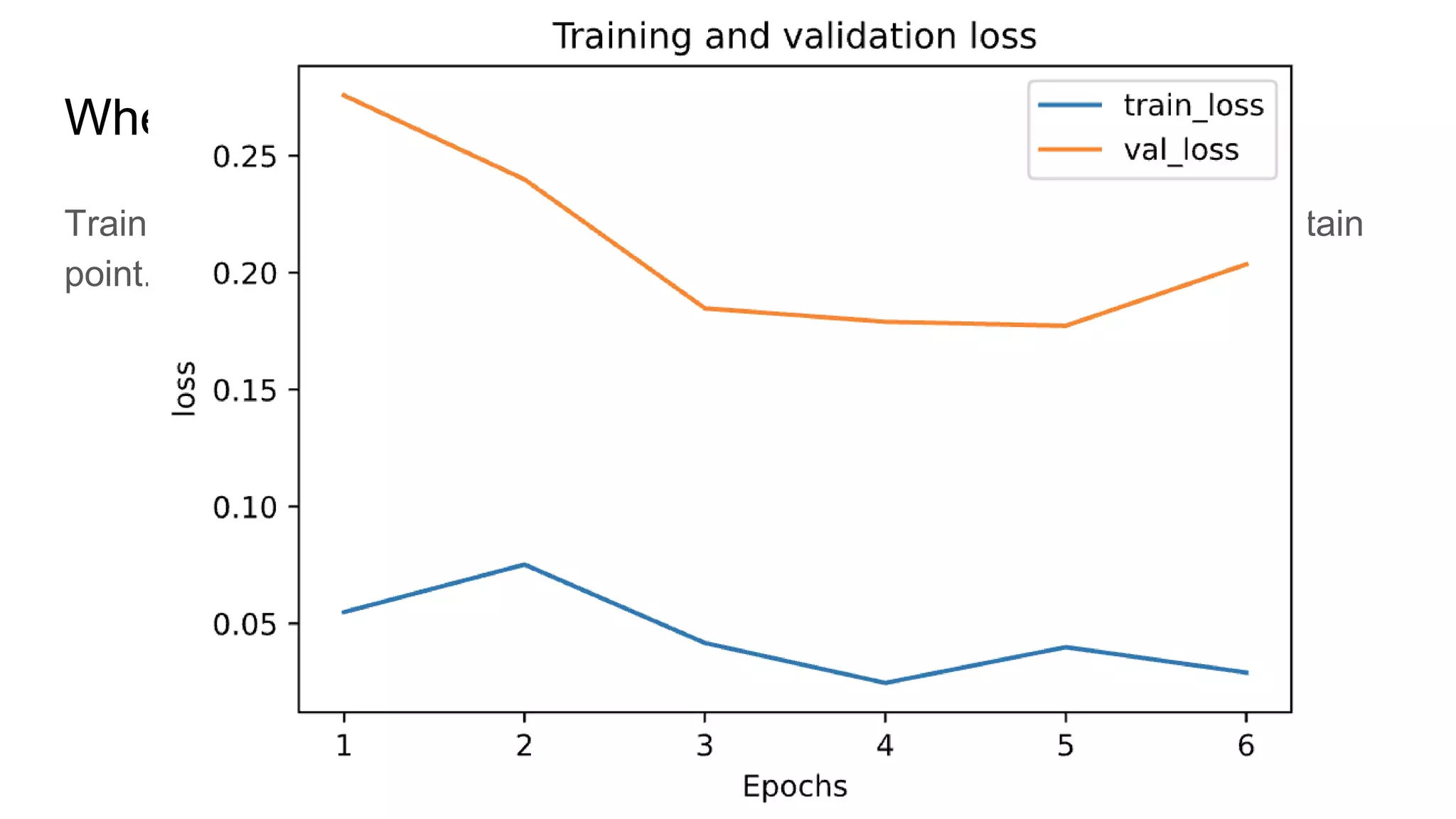

This document provides an overview of deep learning concepts including neural networks, supervised and unsupervised learning, and key terms. It explains that deep learning uses neural networks with many hidden layers to learn features directly from raw data. Supervised learning algorithms learn from labeled examples to perform classification or regression on unseen data. Unsupervised learning finds patterns in unlabeled data. Key terms defined include neurons, activation functions, loss functions, optimizers, epochs, batches, and hyperparameters.
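The key terms listed above can be seen together in one small training loop. The following is a minimal sketch, not code from the slides: it trains a single neuron on the logical OR function using only the Python standard library. The dataset, the hyperparameter values, and every function name (`sigmoid`, `predict`, `bce_loss`, `train`) are assumptions chosen for illustration.

```python
import math
import random

# Hyperparameters: set before training, not learned (illustrative values).
LEARNING_RATE = 0.5
EPOCHS = 200
BATCH_SIZE = 2


def sigmoid(z):
    """Activation function: squashes any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights, bias, x):
    """A single neuron: weighted sum of the inputs plus a bias, then activation."""
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return sigmoid(z)


def bce_loss(weights, bias, data):
    """Loss function: mean binary cross-entropy over the labeled examples."""
    eps = 1e-12  # avoids log(0)
    total = 0.0
    for x, y in data:
        p = predict(weights, bias, x)
        total -= y * math.log(p + eps) + (1 - y) * math.log(1 - p + eps)
    return total / len(data)


def train(data, seed=0):
    """Mini-batch stochastic gradient descent; returns weights, bias, loss per epoch."""
    rng = random.Random(seed)
    weights = [0.0, 0.0]
    bias = 0.0
    history = []
    for _ in range(EPOCHS):  # one epoch = one full pass over the data
        shuffled = data[:]
        rng.shuffle(shuffled)
        for start in range(0, len(shuffled), BATCH_SIZE):
            batch = shuffled[start:start + BATCH_SIZE]  # one batch of examples
            grad_w = [0.0] * len(weights)
            grad_b = 0.0
            for x, y in batch:
                # For sigmoid + cross-entropy, the gradient w.r.t. the
                # pre-activation is simply (prediction - label).
                err = predict(weights, bias, x) - y
                for i, xi in enumerate(x):
                    grad_w[i] += err * xi
                grad_b += err
            # Optimizer step: plain SGD on the batch-averaged gradient.
            for i in range(len(weights)):
                weights[i] -= LEARNING_RATE * grad_w[i] / len(batch)
            bias -= LEARNING_RATE * grad_b / len(batch)
        history.append(bce_loss(weights, bias, data))
    return weights, bias, history


# Labeled examples (supervised learning): inputs paired with the OR of the inputs.
DATA = [([0.0, 0.0], 0), ([0.0, 1.0], 1), ([1.0, 0.0], 1), ([1.0, 1.0], 1)]

weights, bias, history = train(DATA)
```

After training, `predict(weights, bias, x)` returns a probability that can be thresholded at 0.5 for classification, and `history` holds the loss after each epoch. A deep network stacks many such neurons into hidden layers, but the roles of the loss function, optimizer, epochs, batches, and hyperparameters stay the same.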