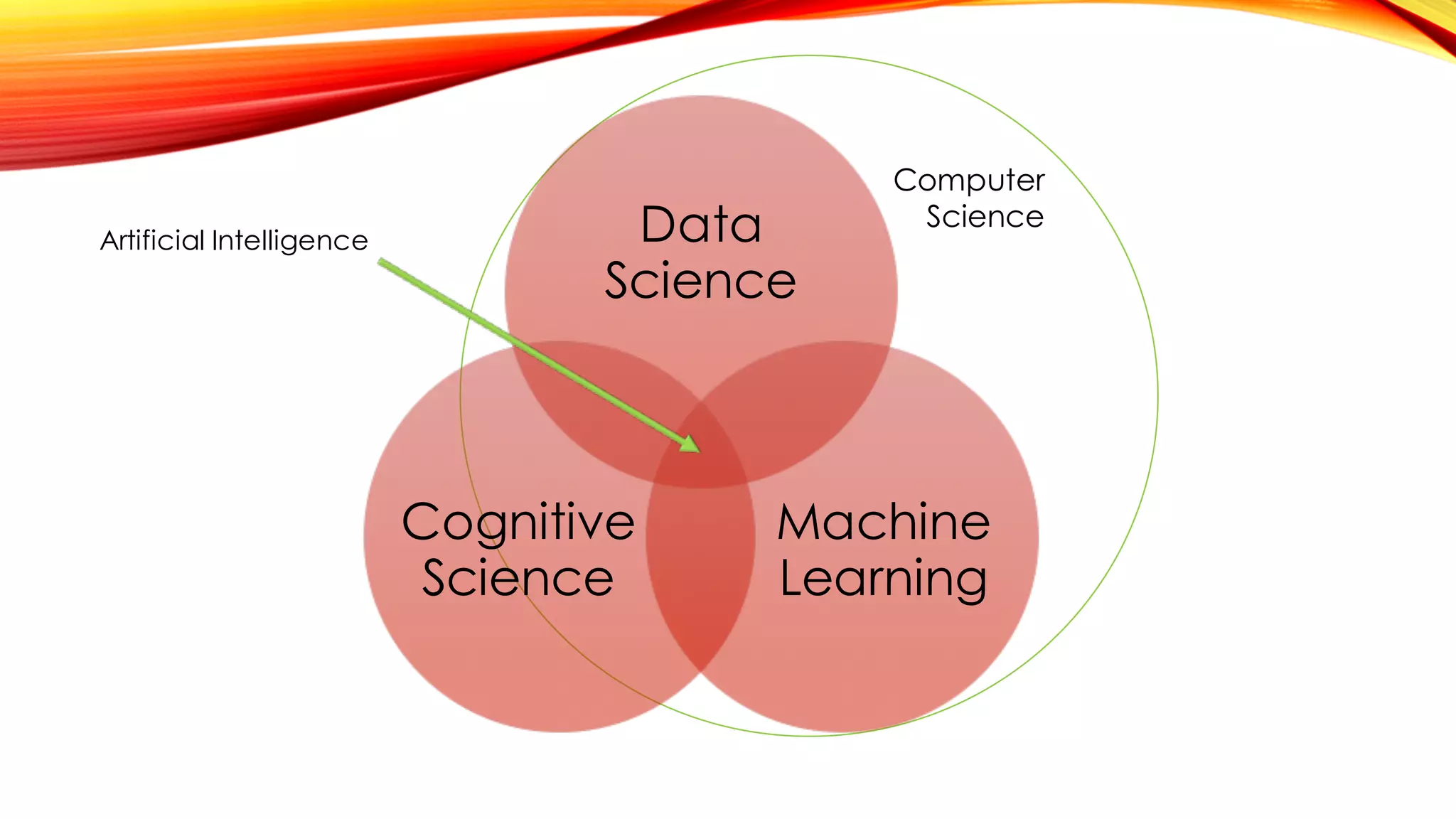

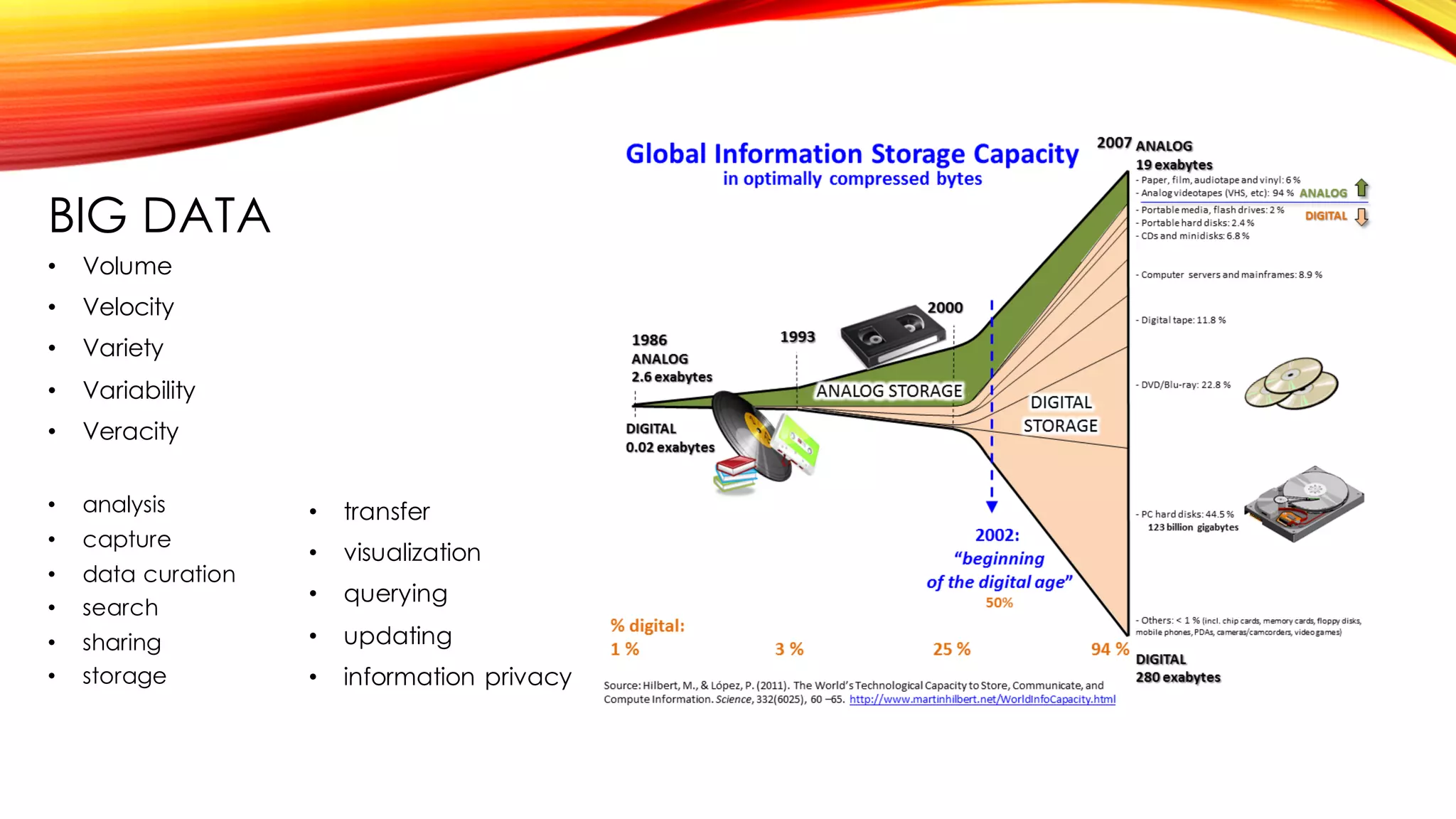

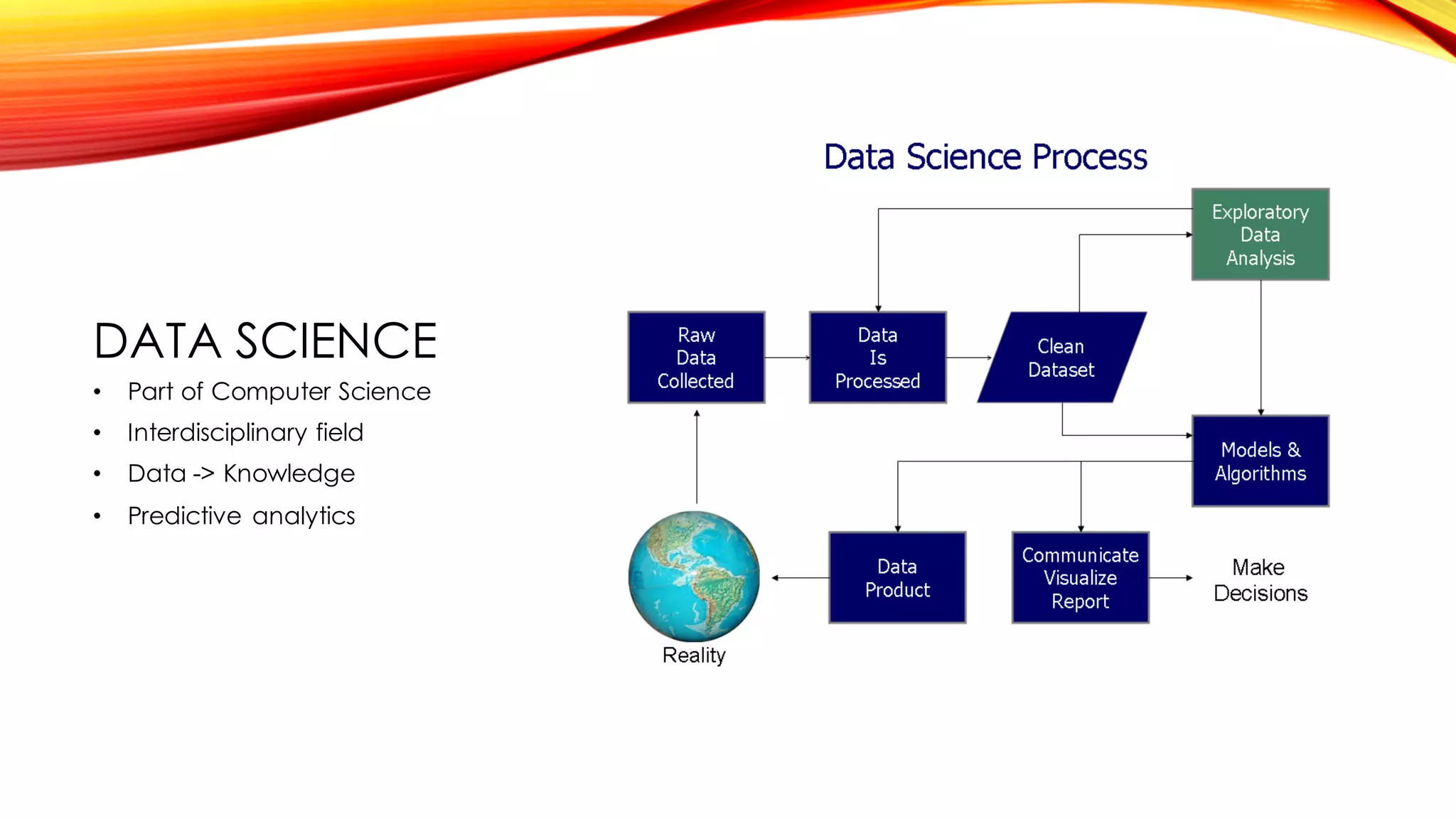

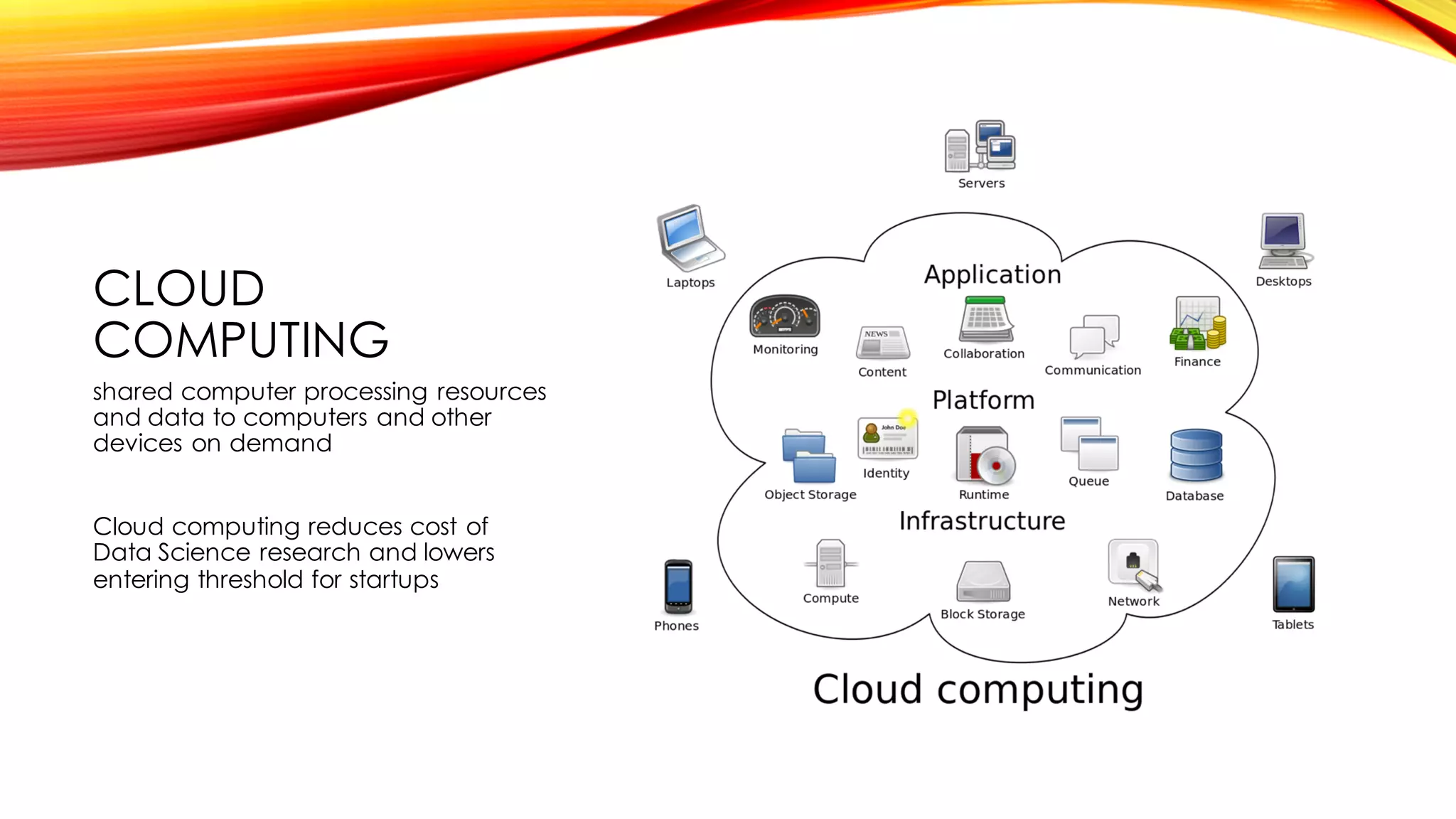

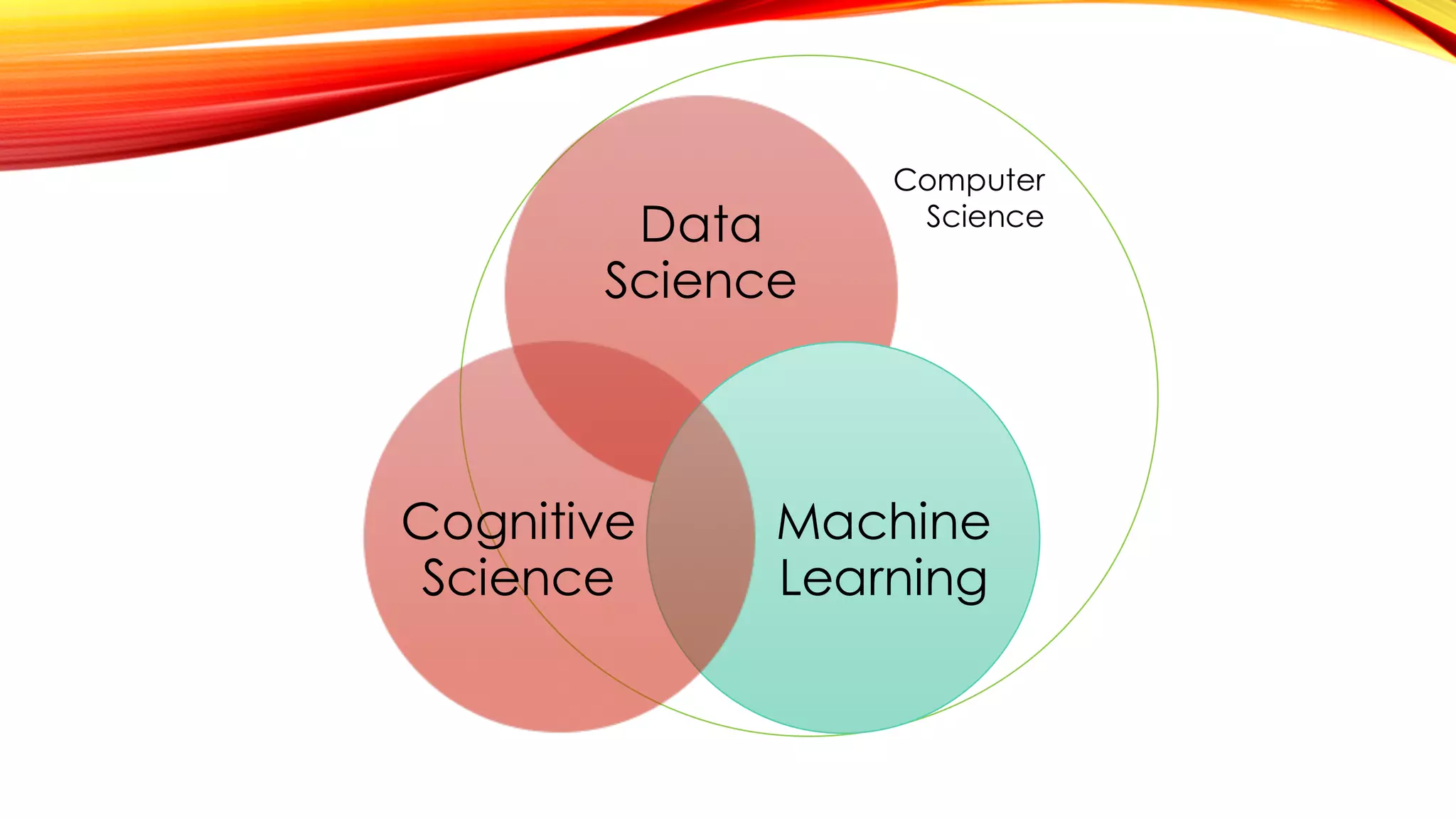

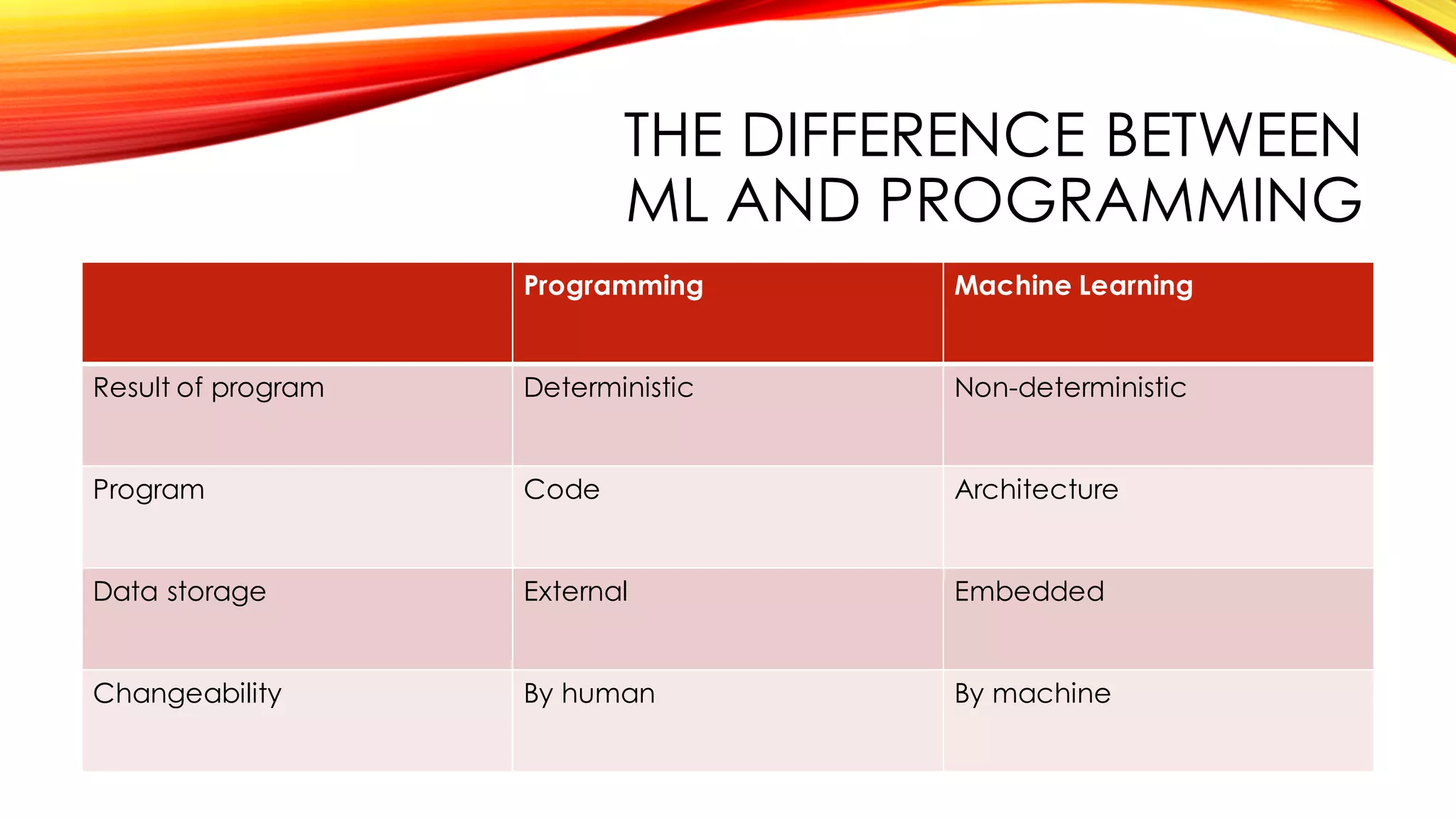

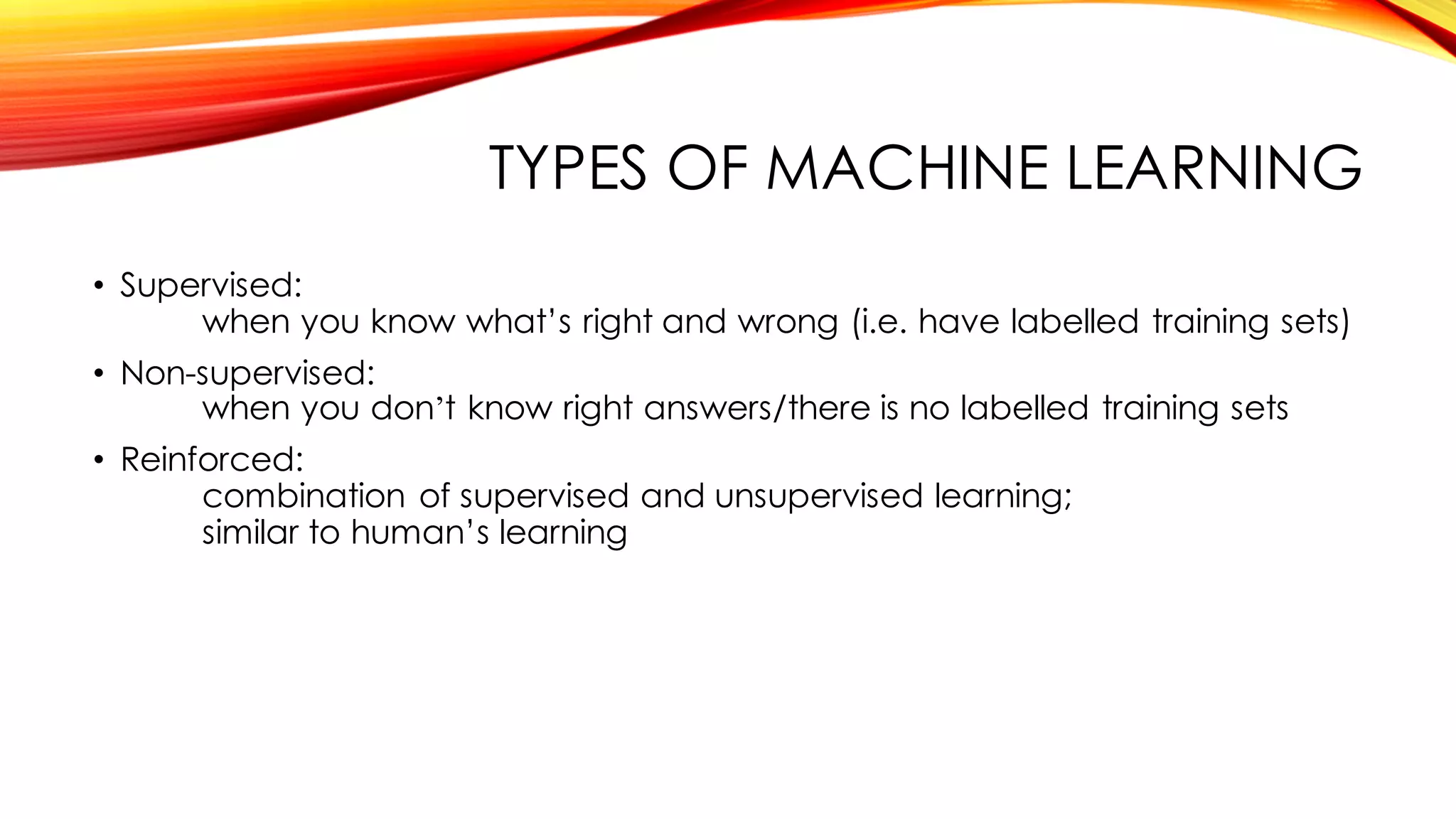

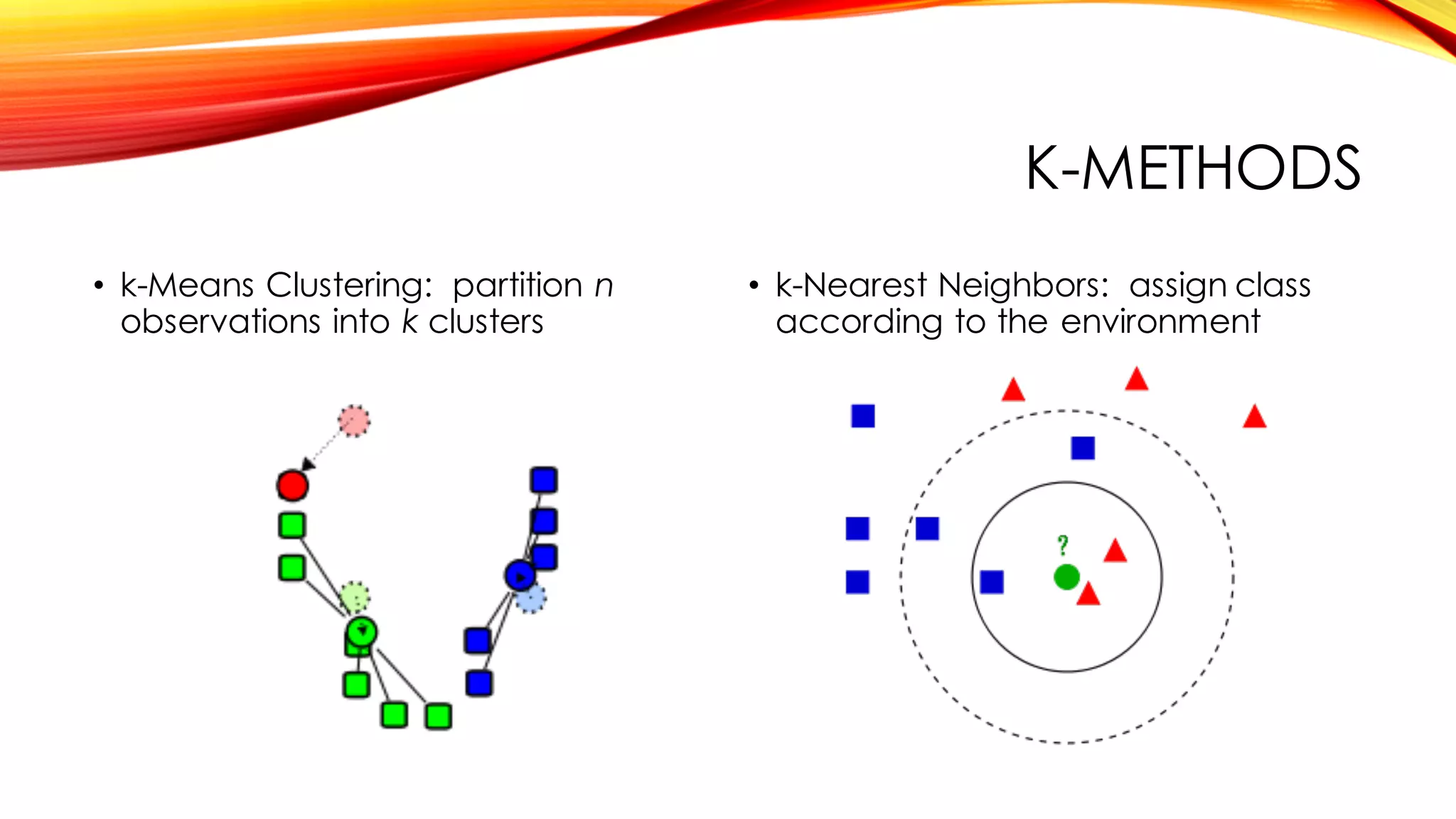

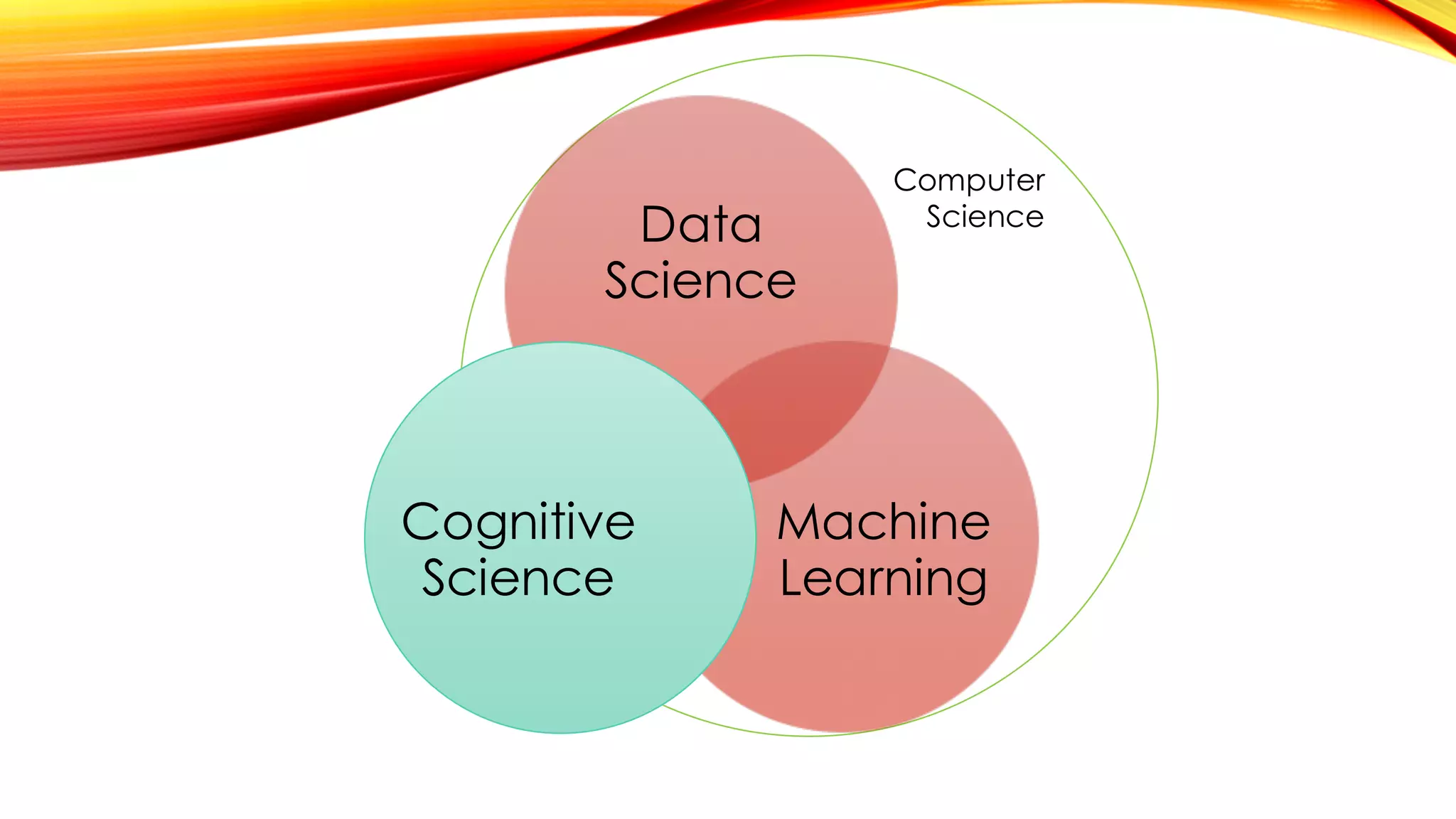

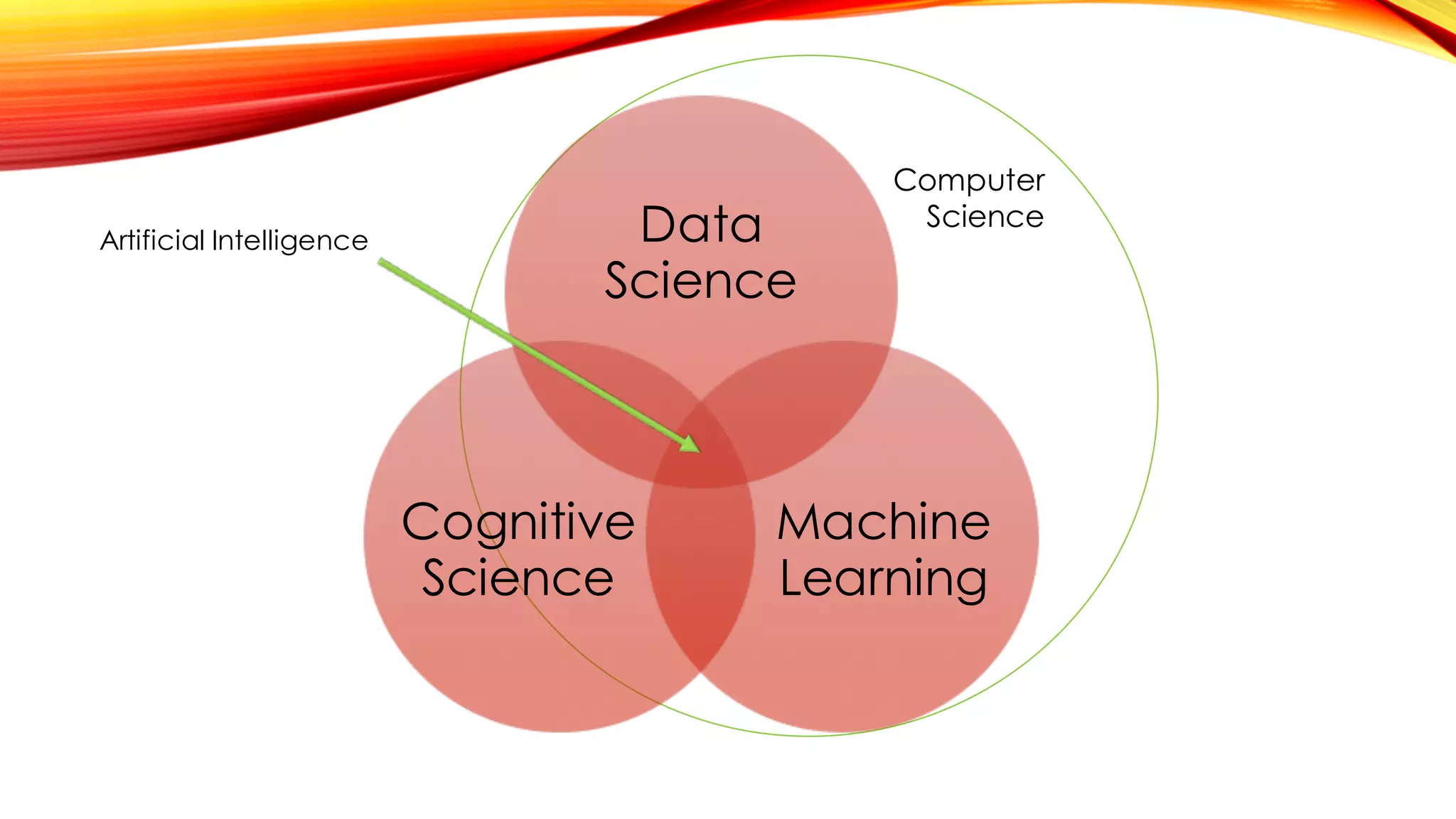

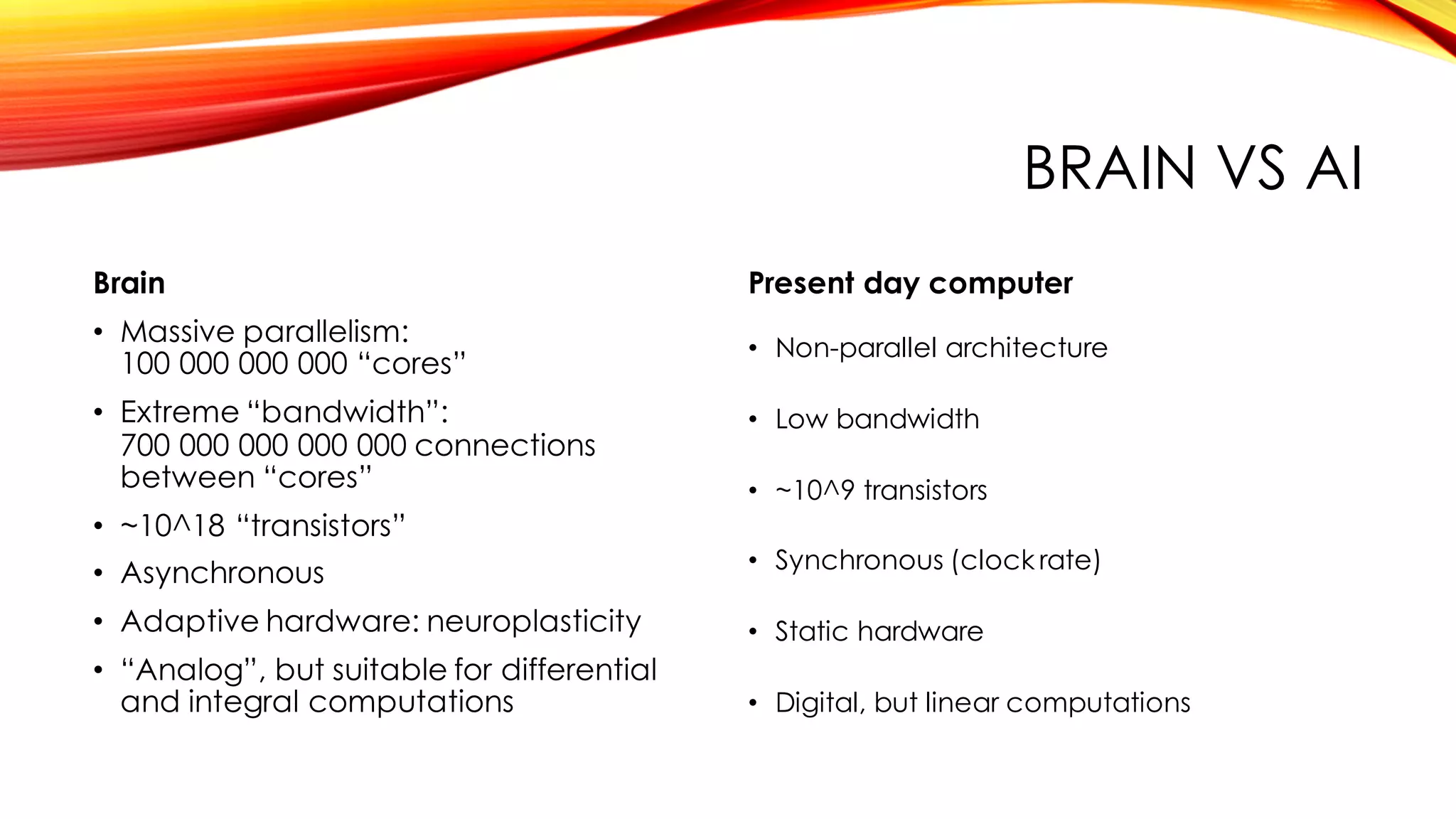

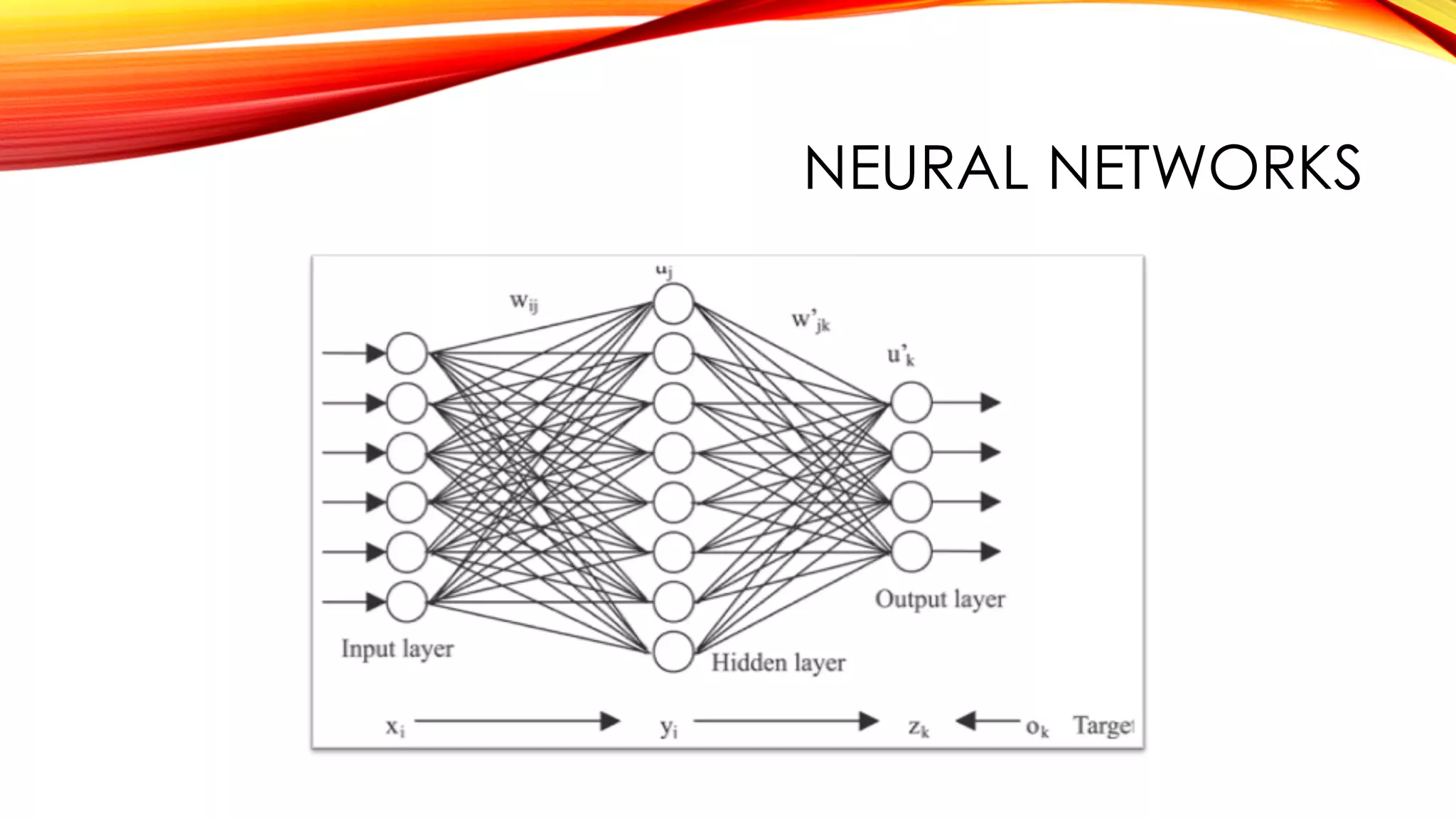

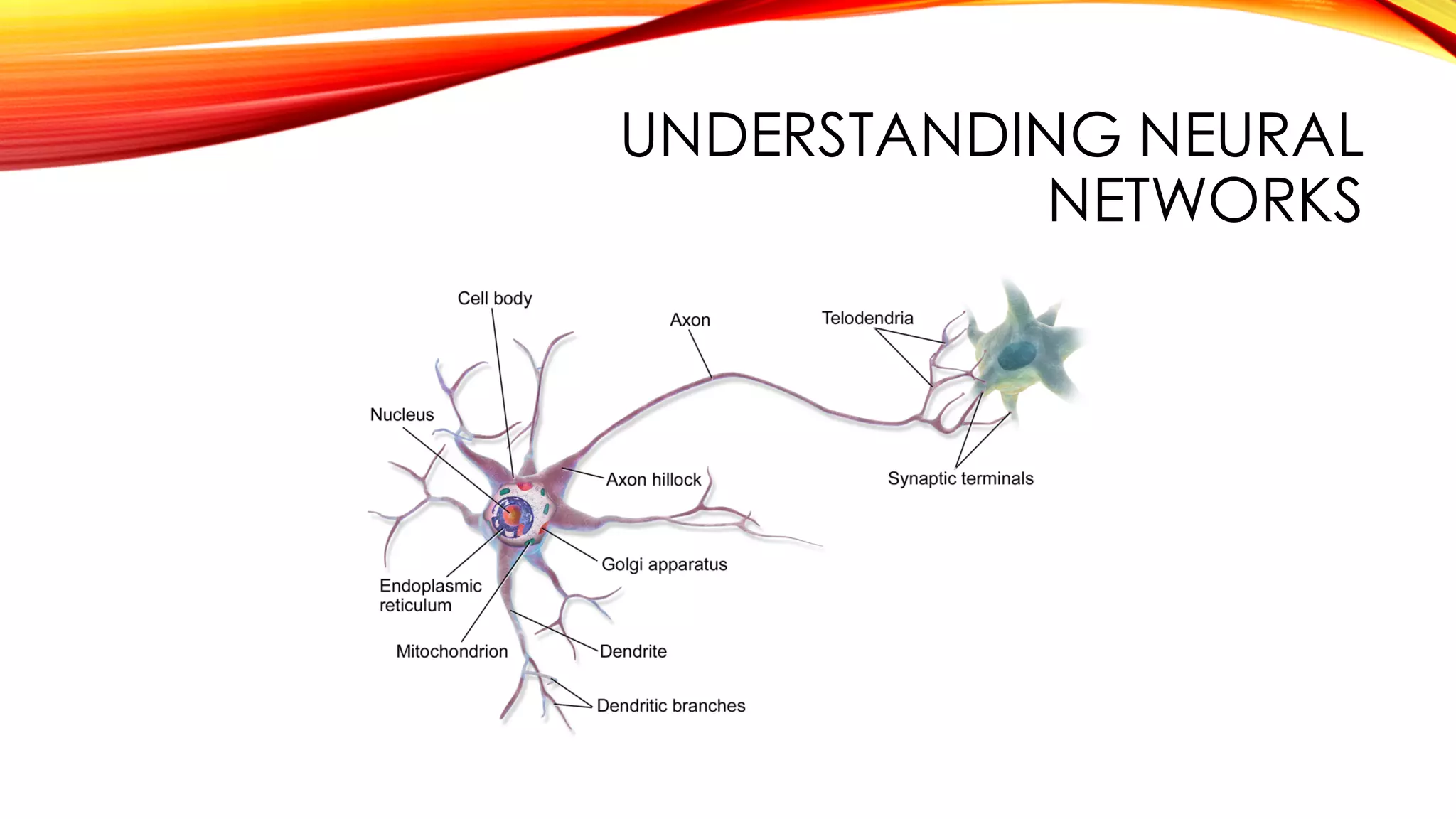

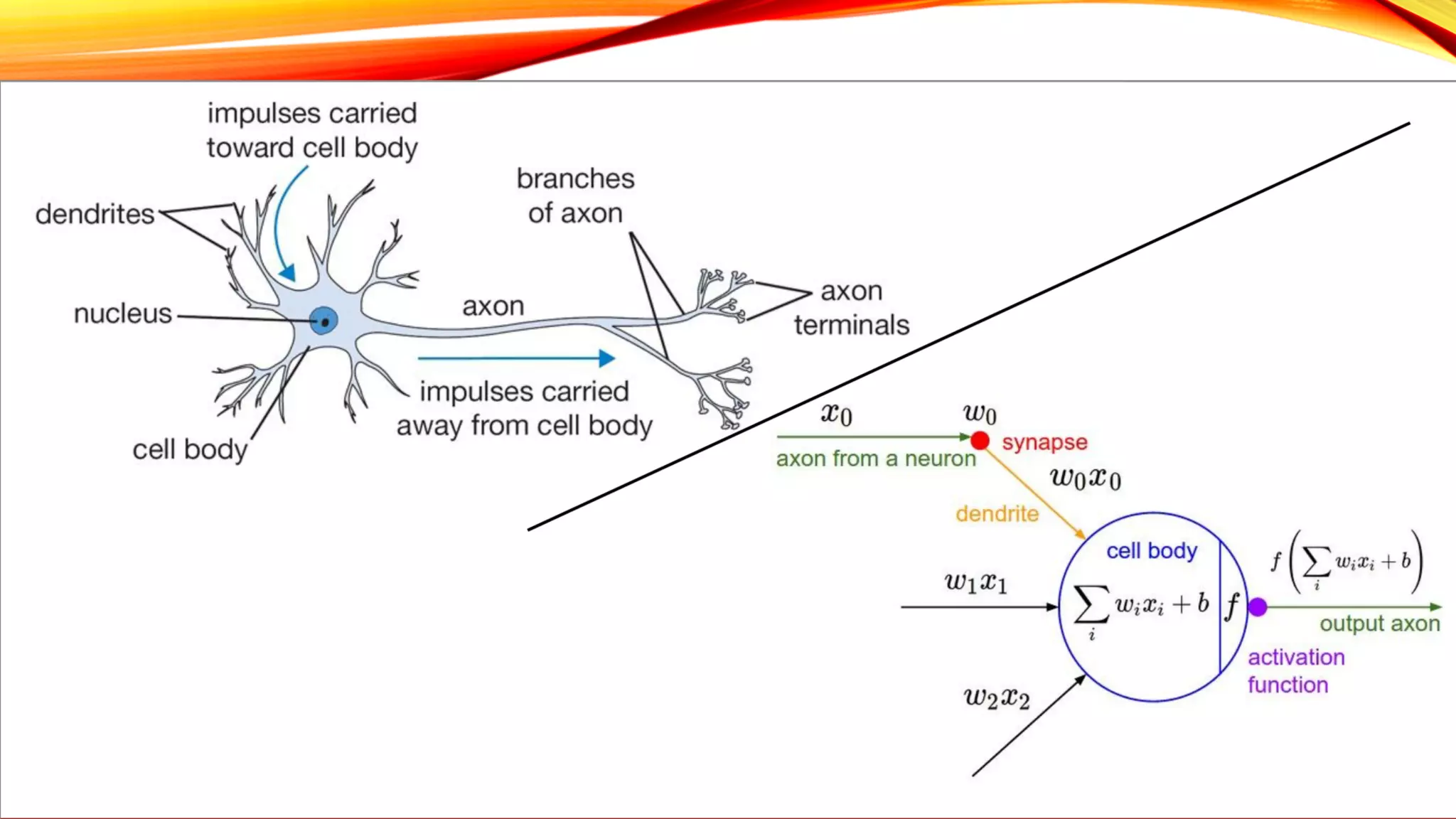

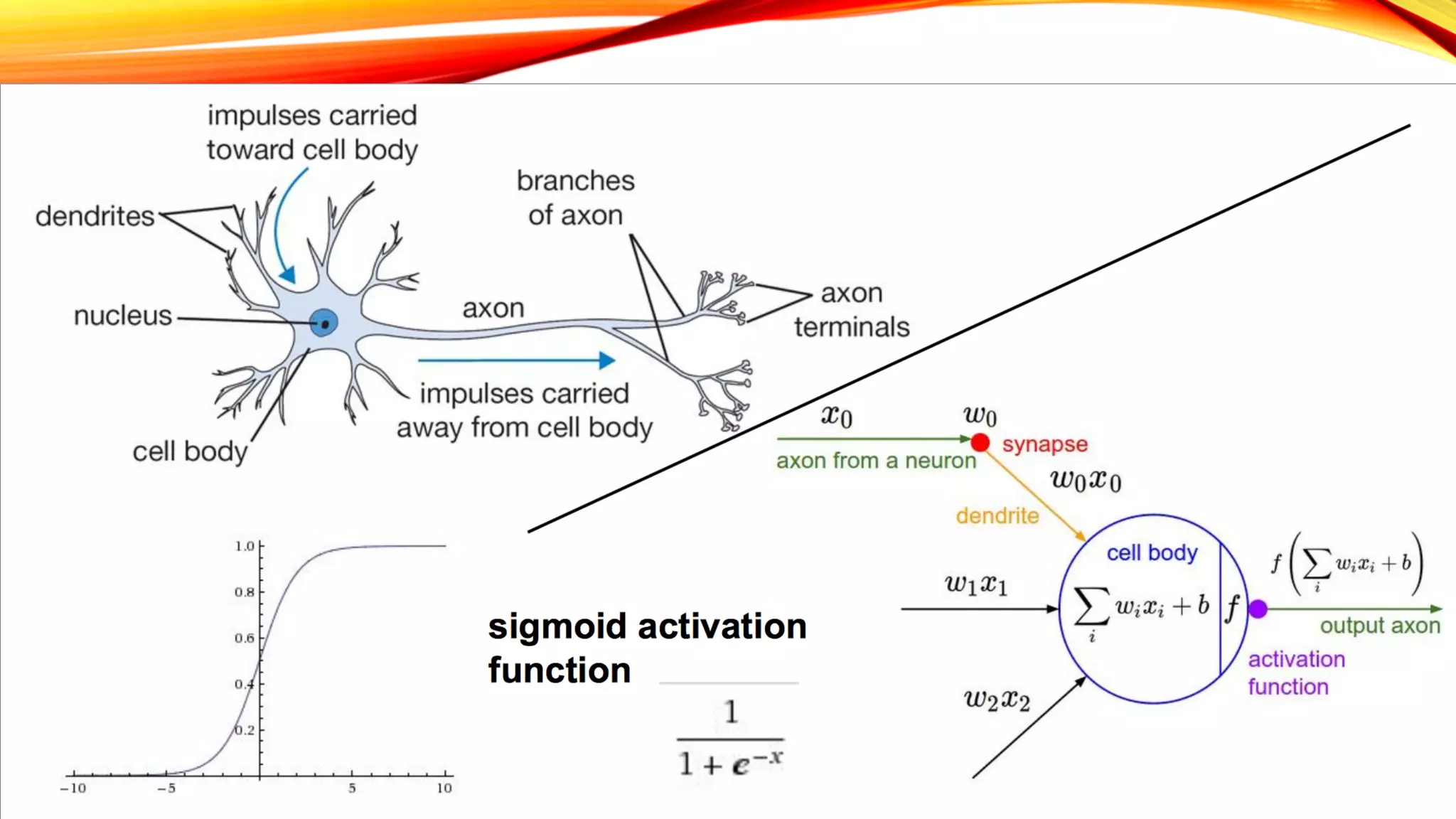

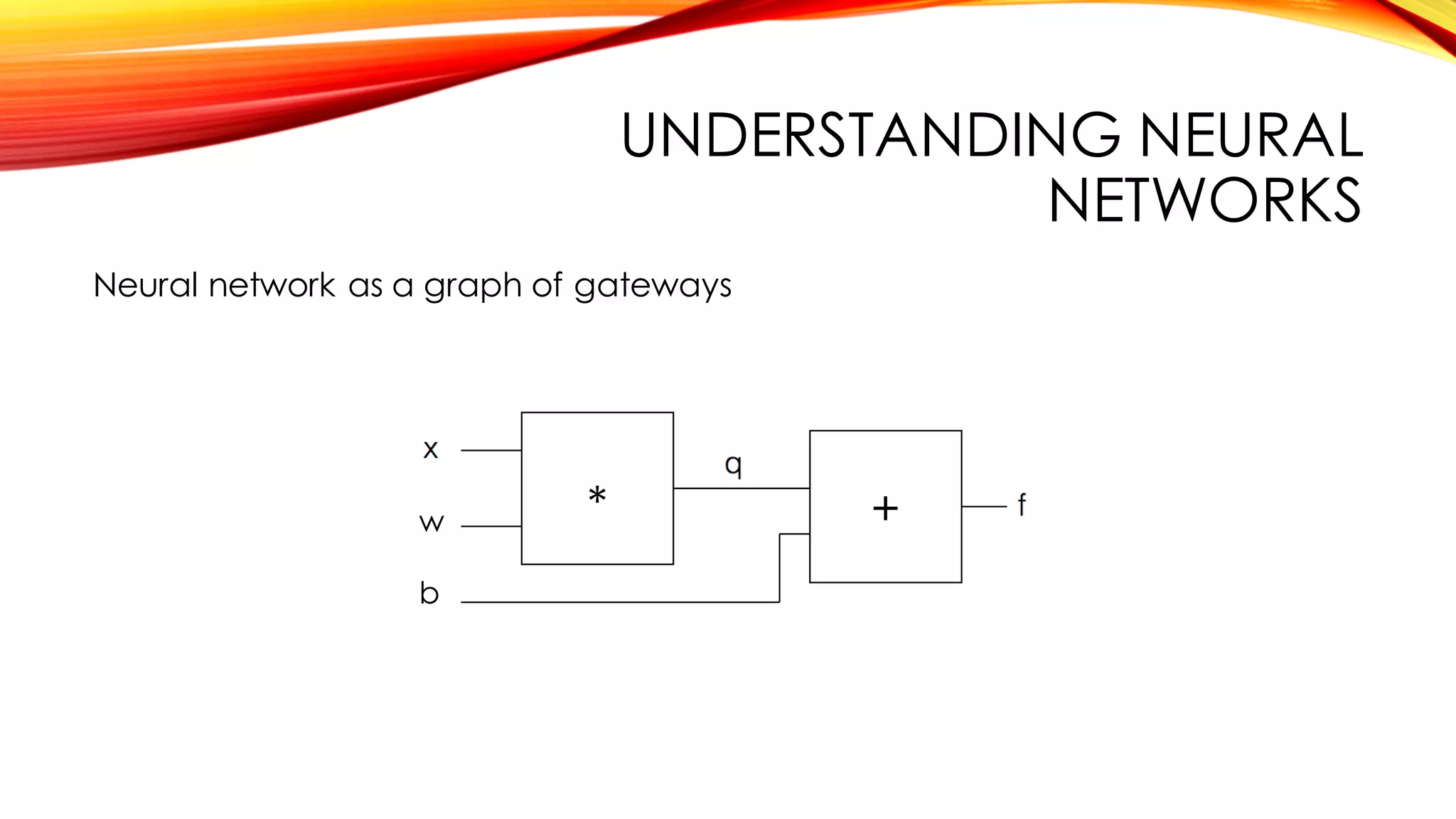

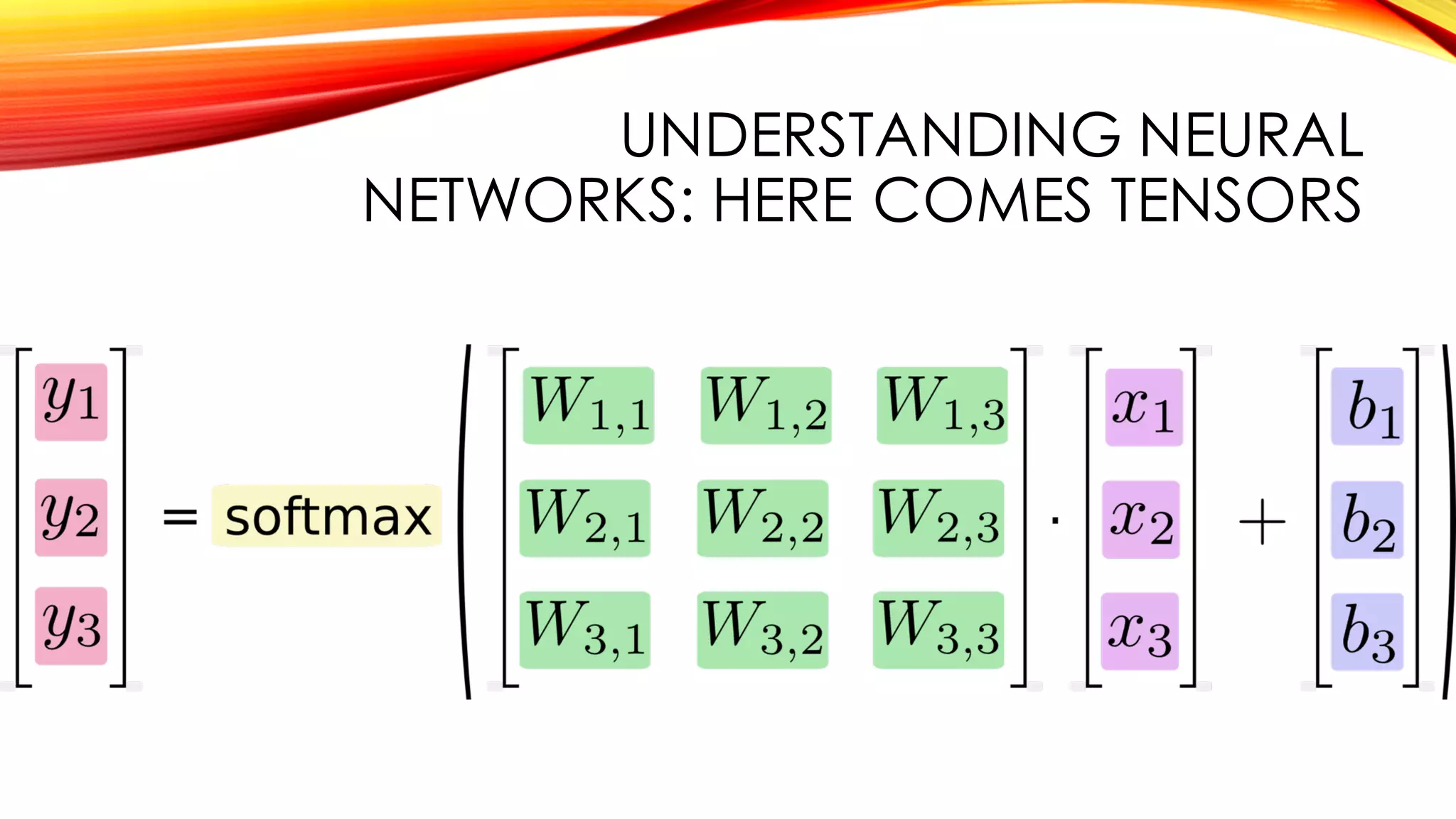

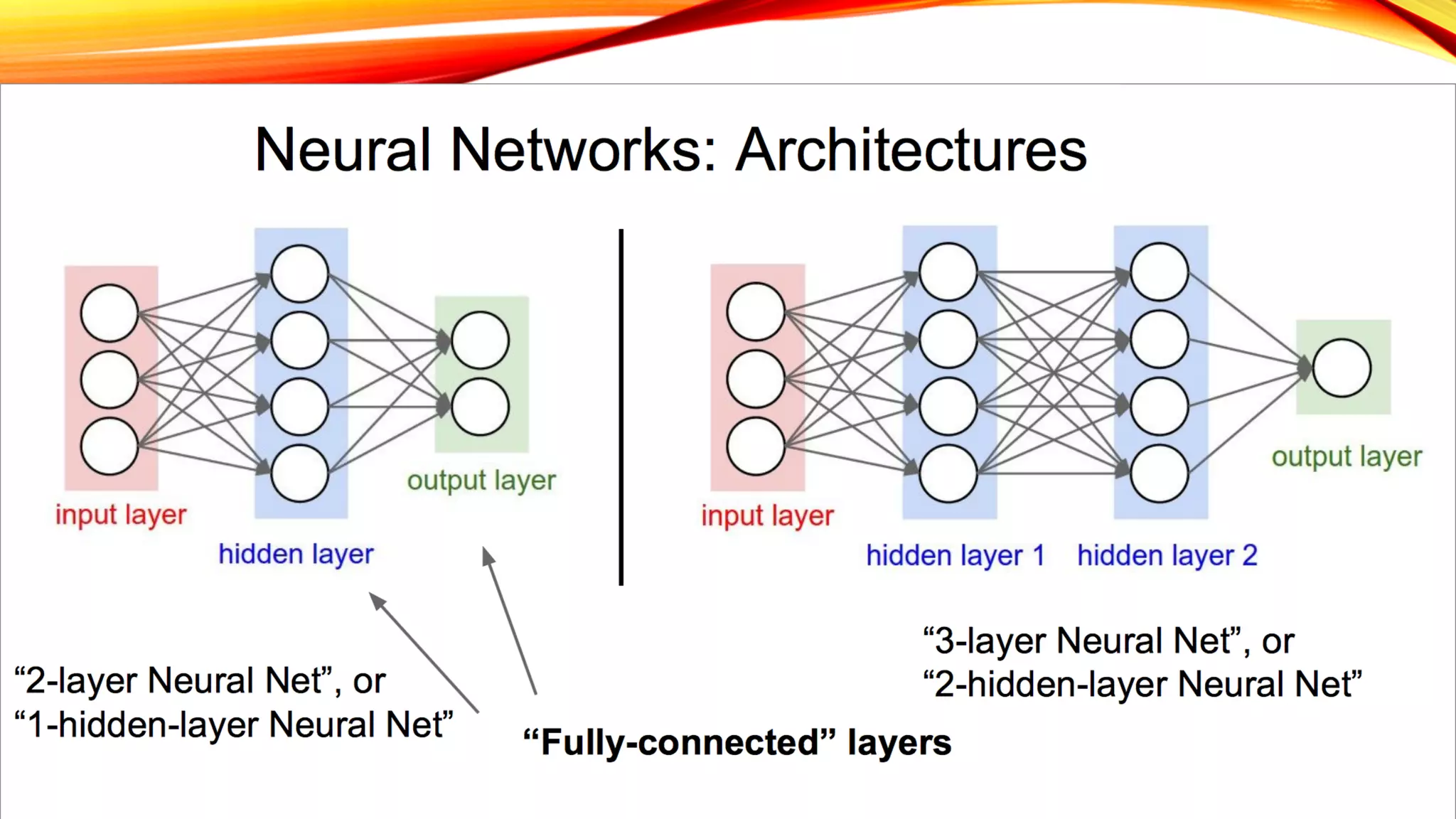

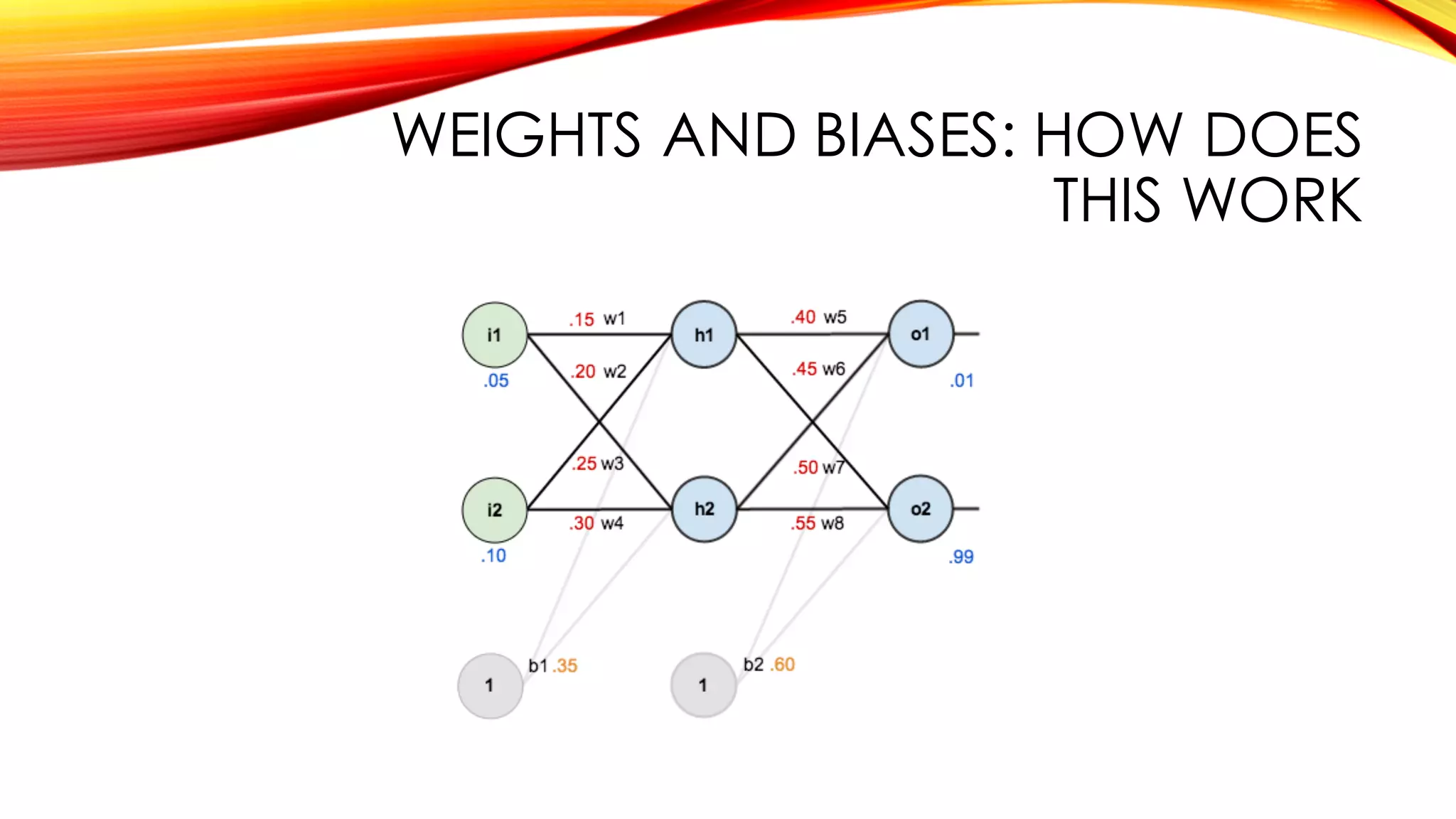

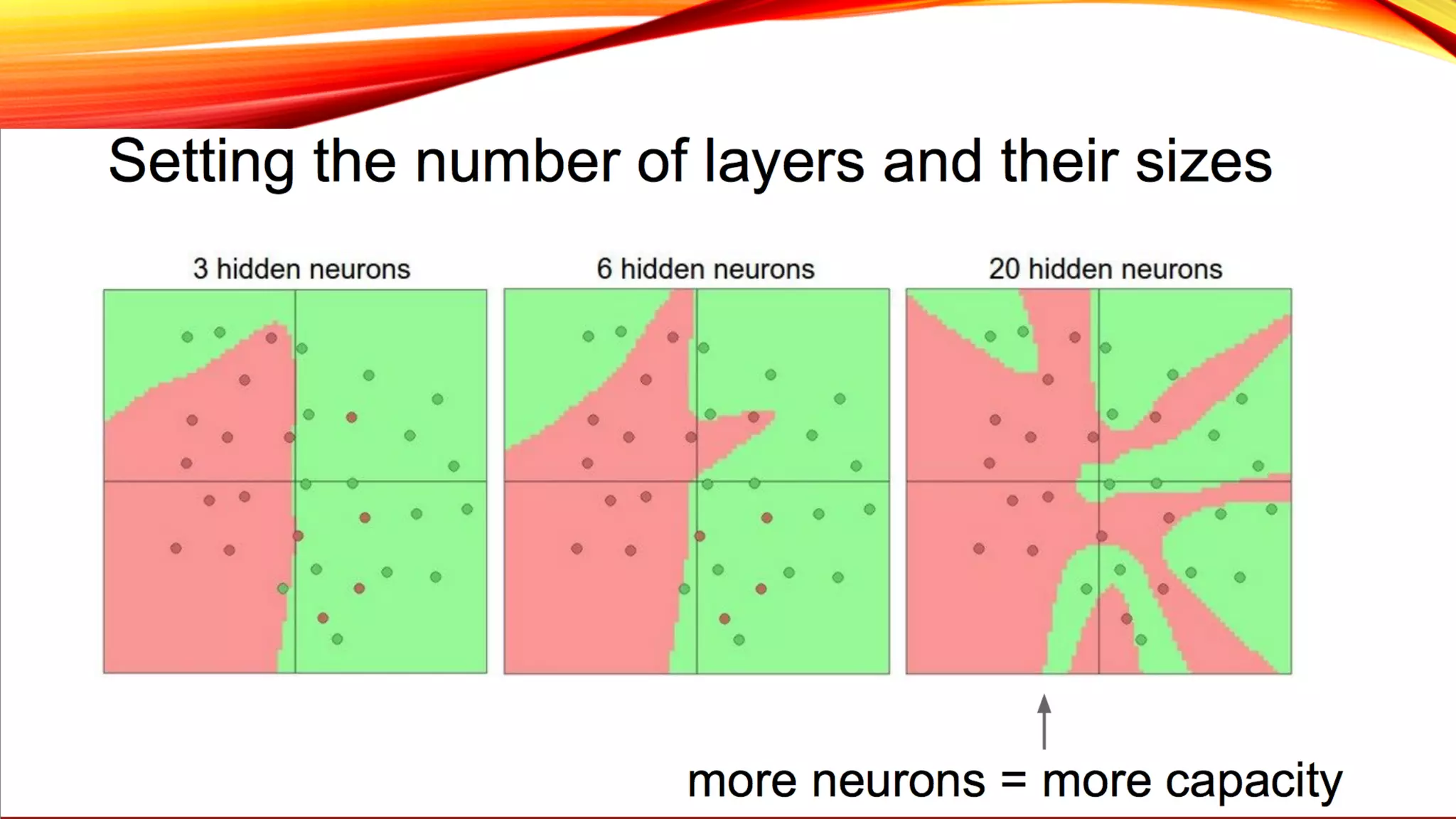

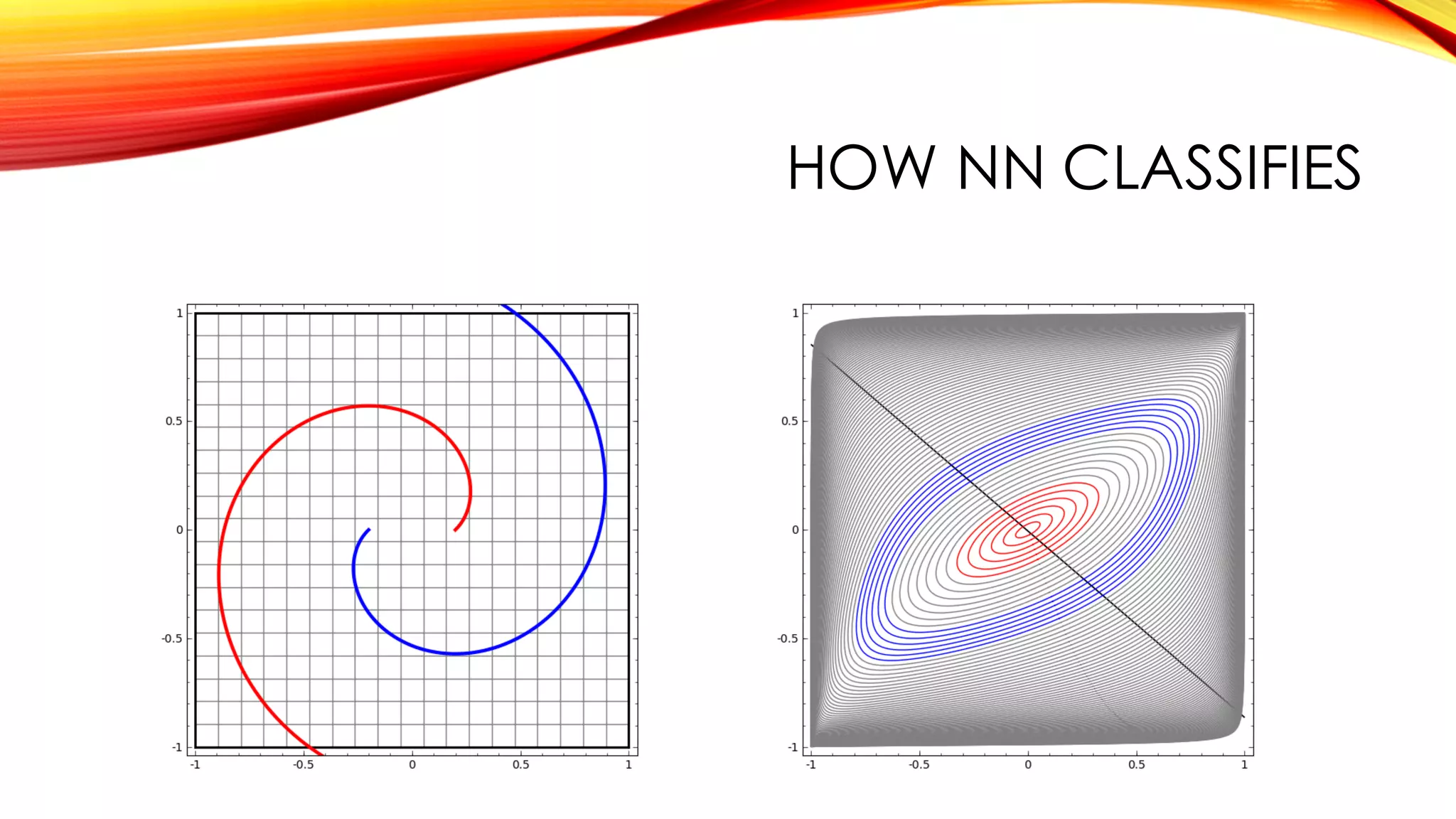

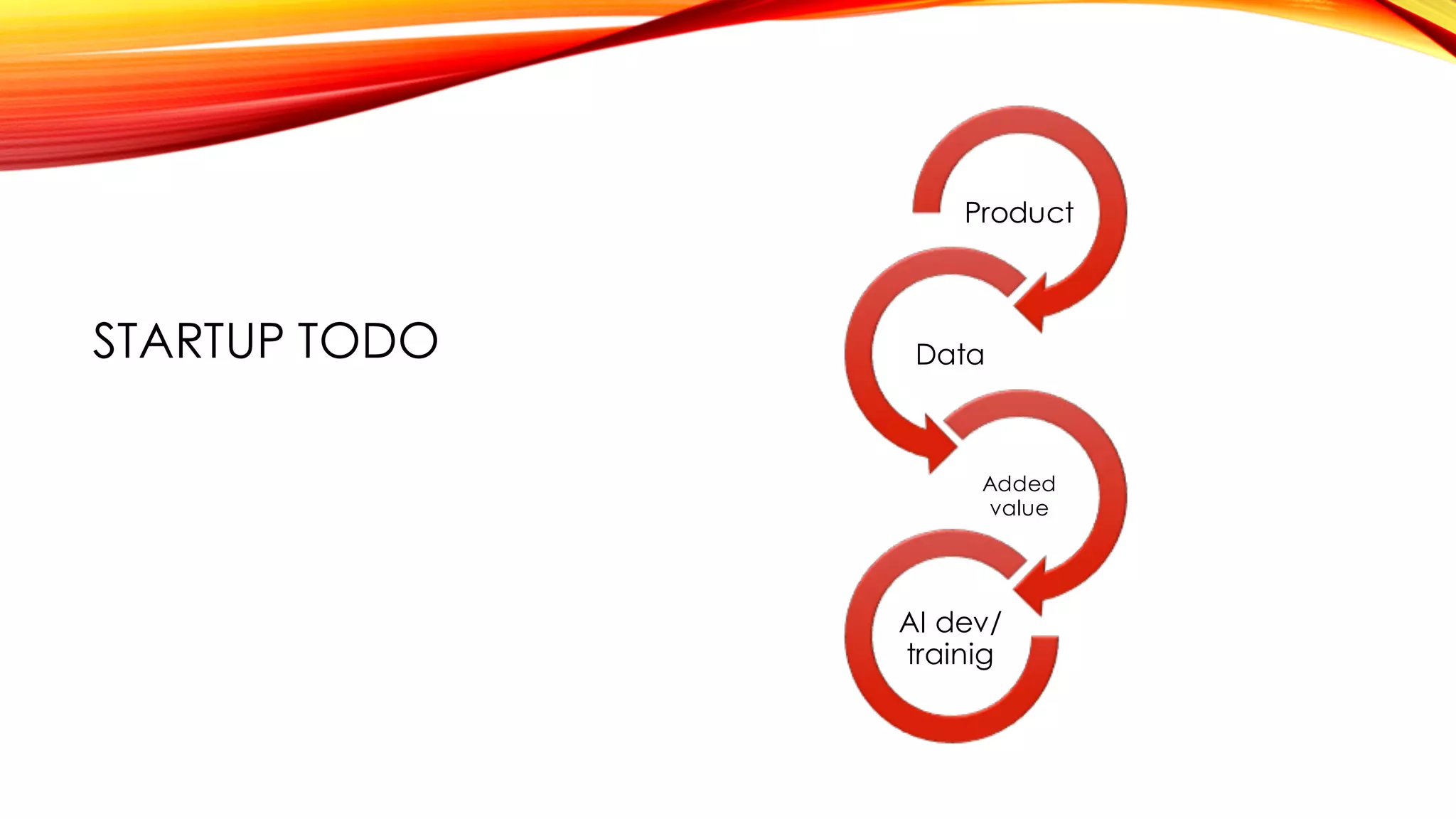

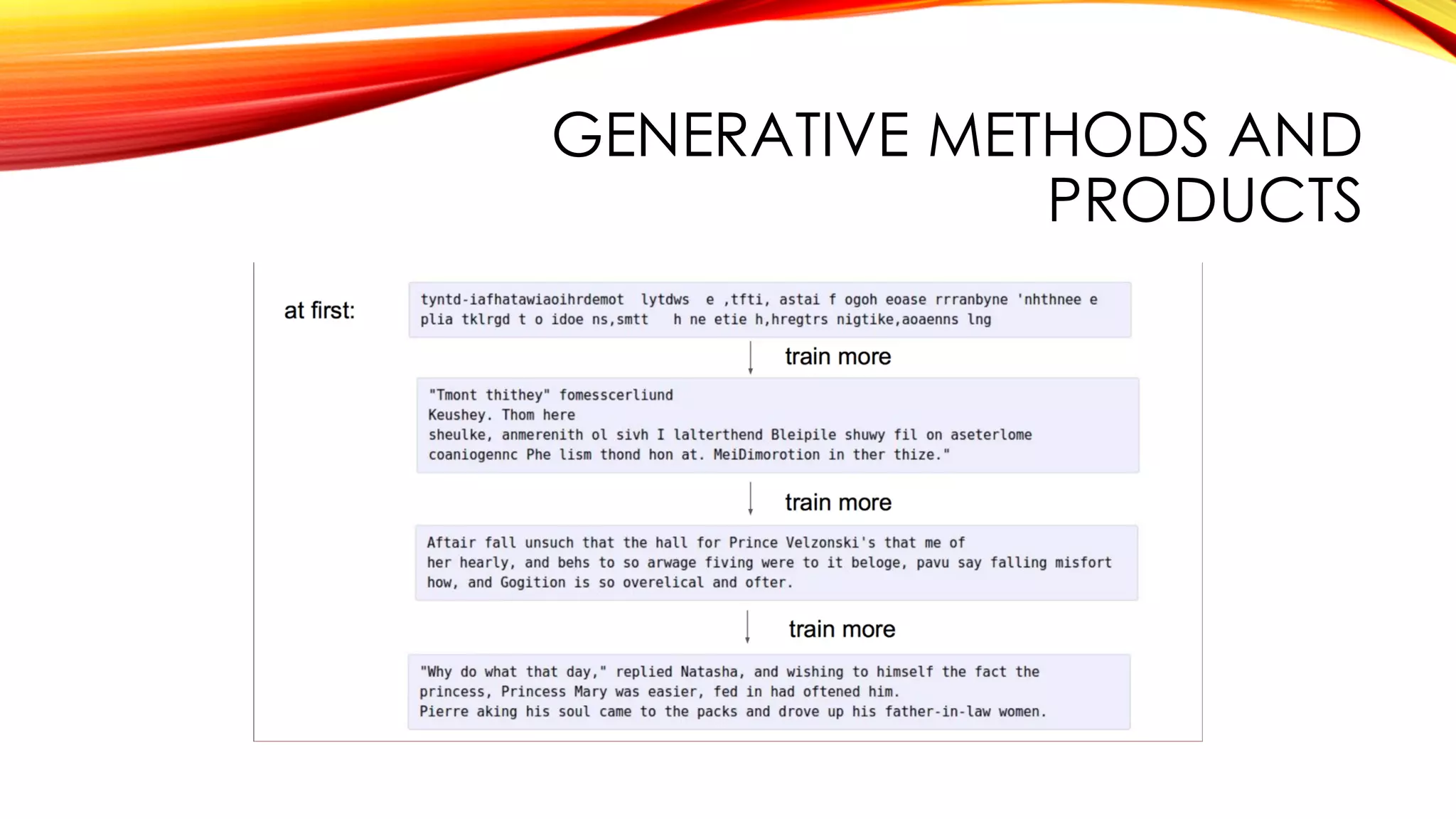

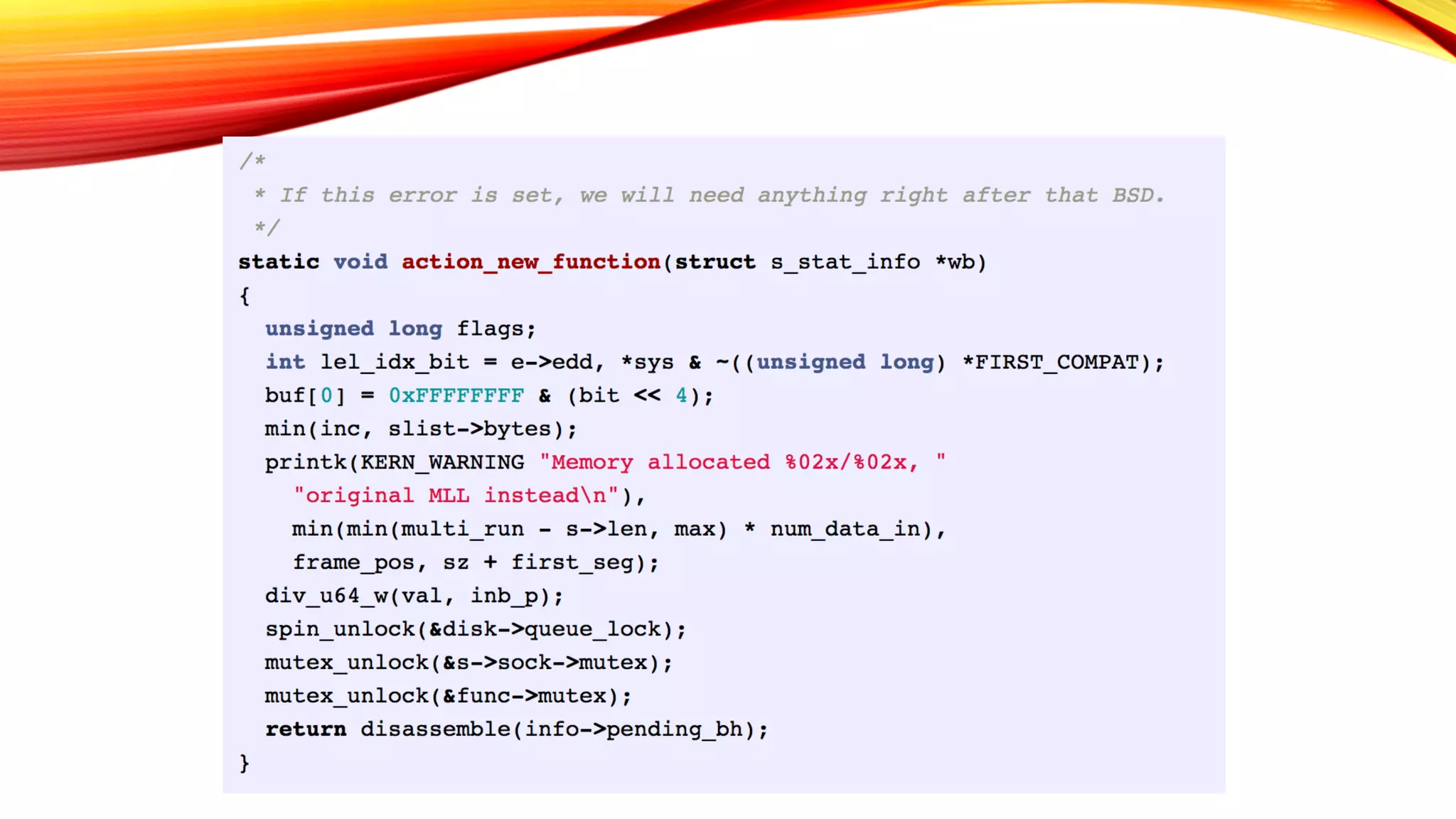

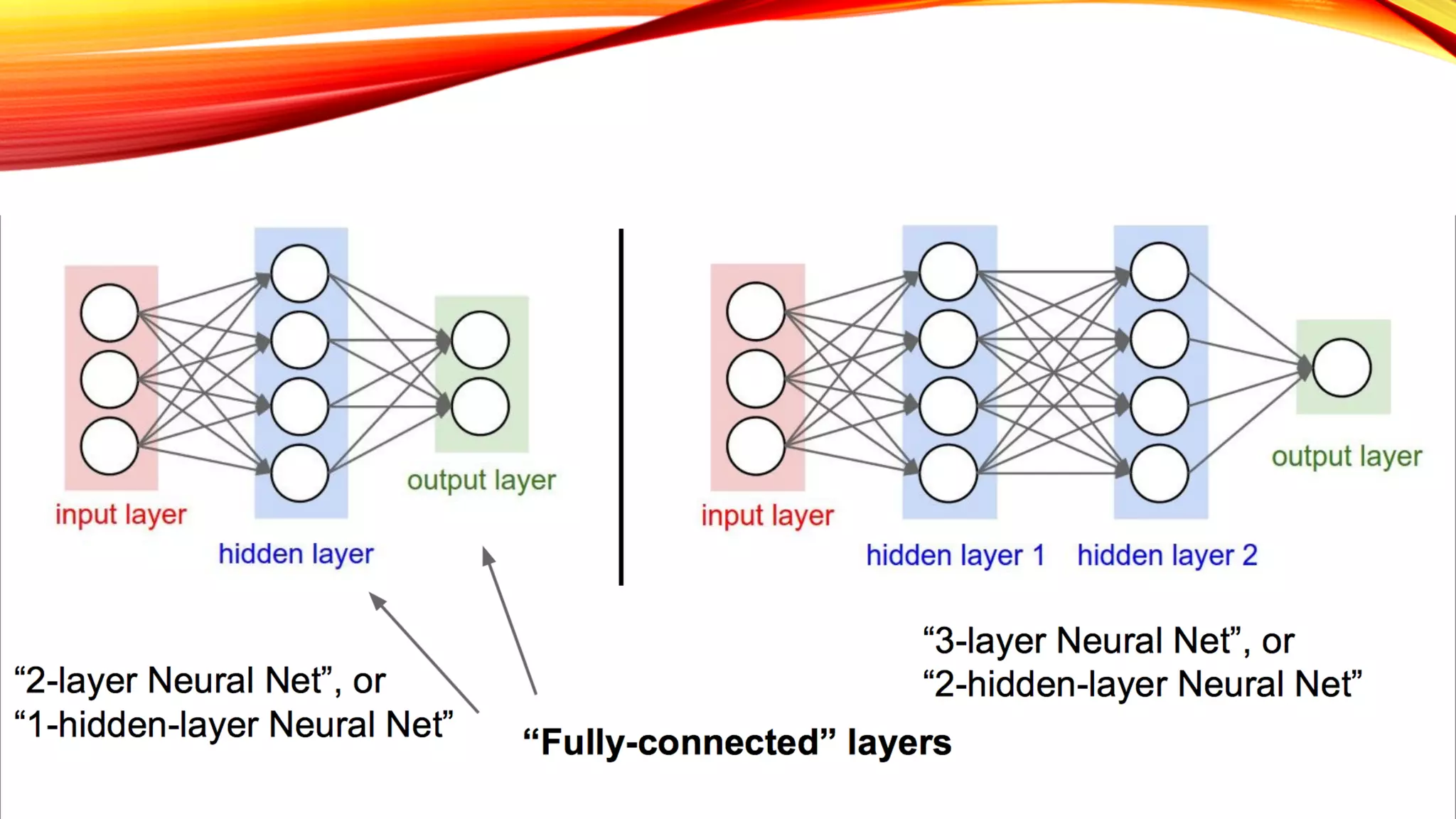

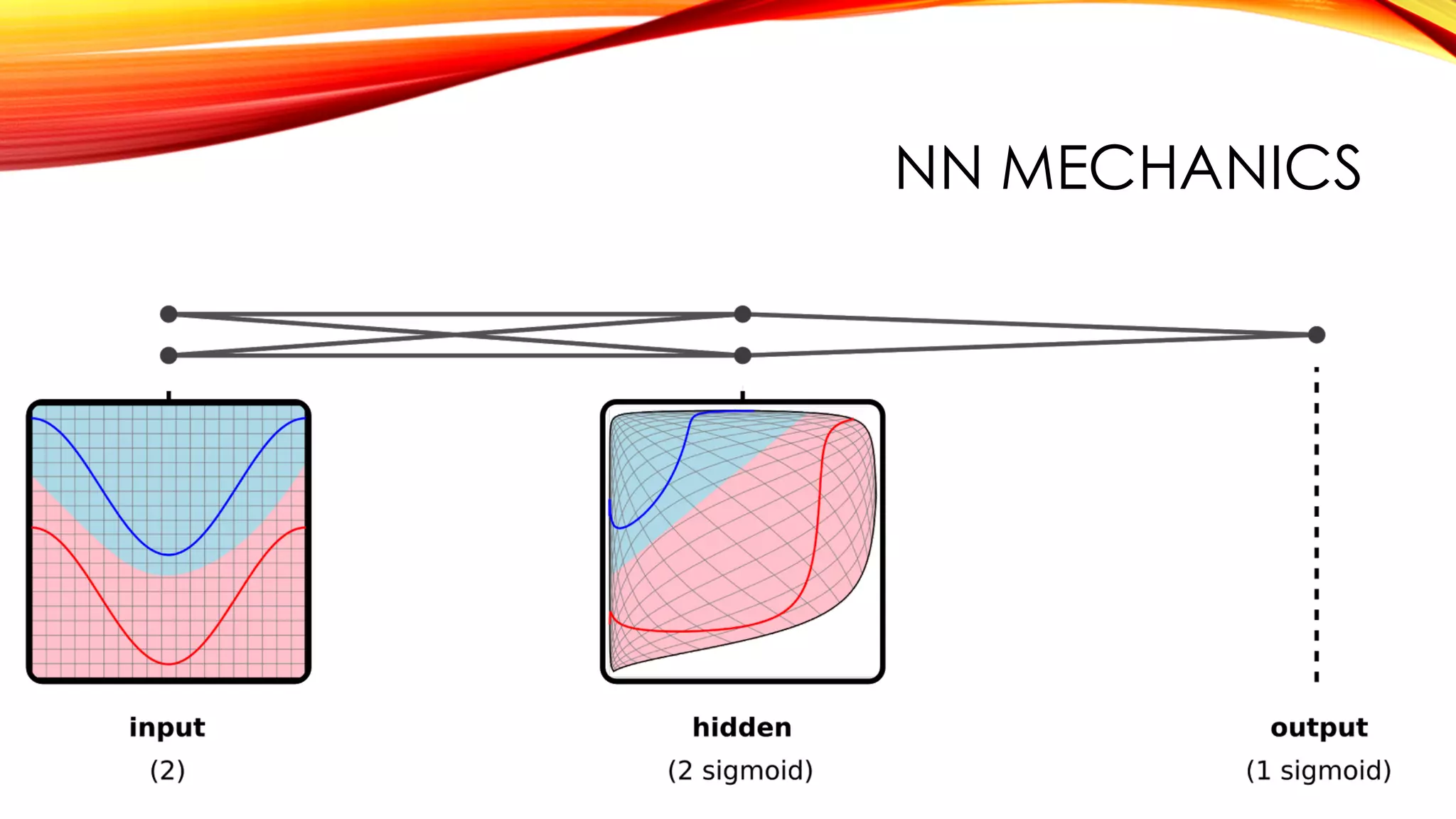

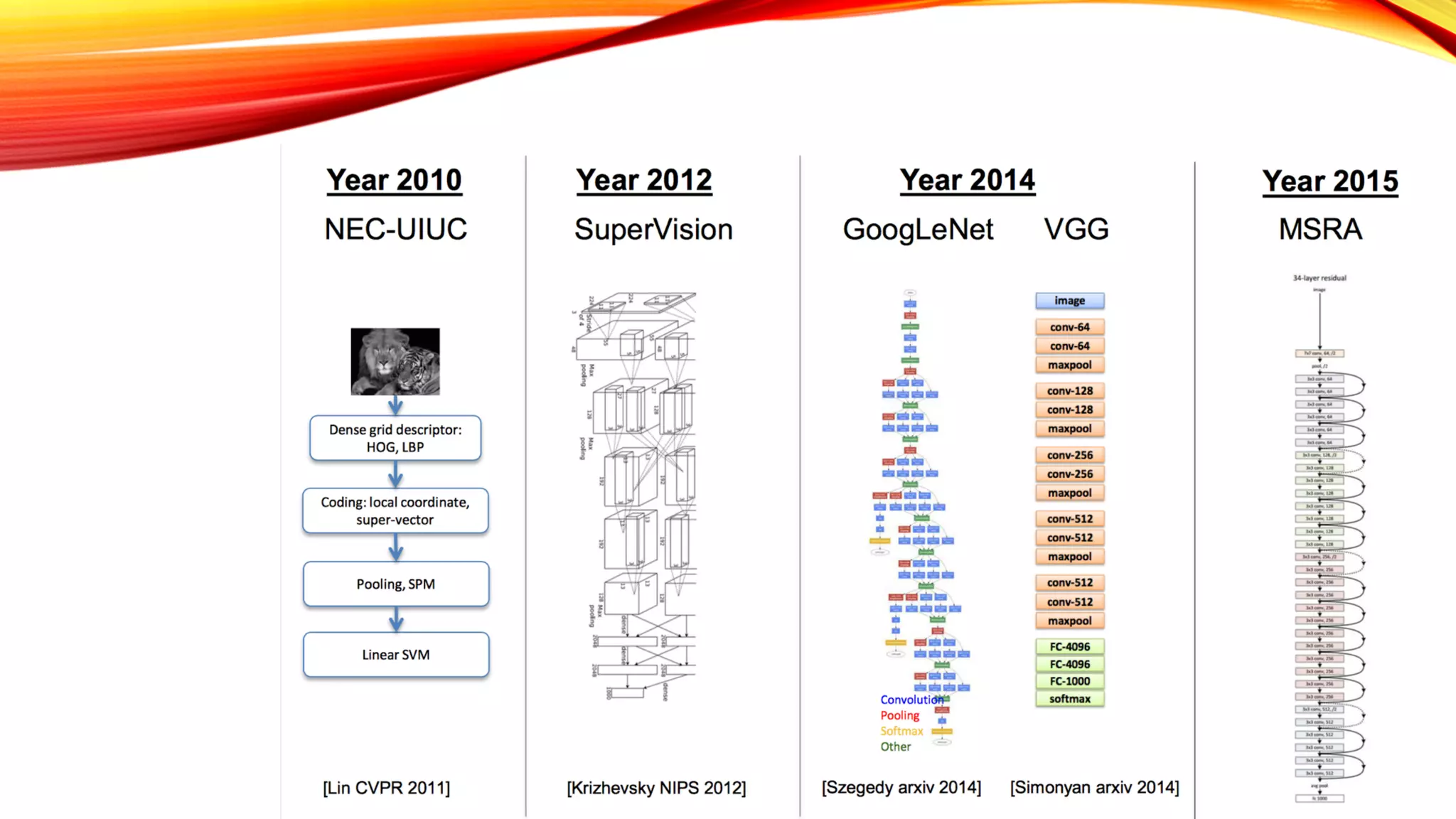

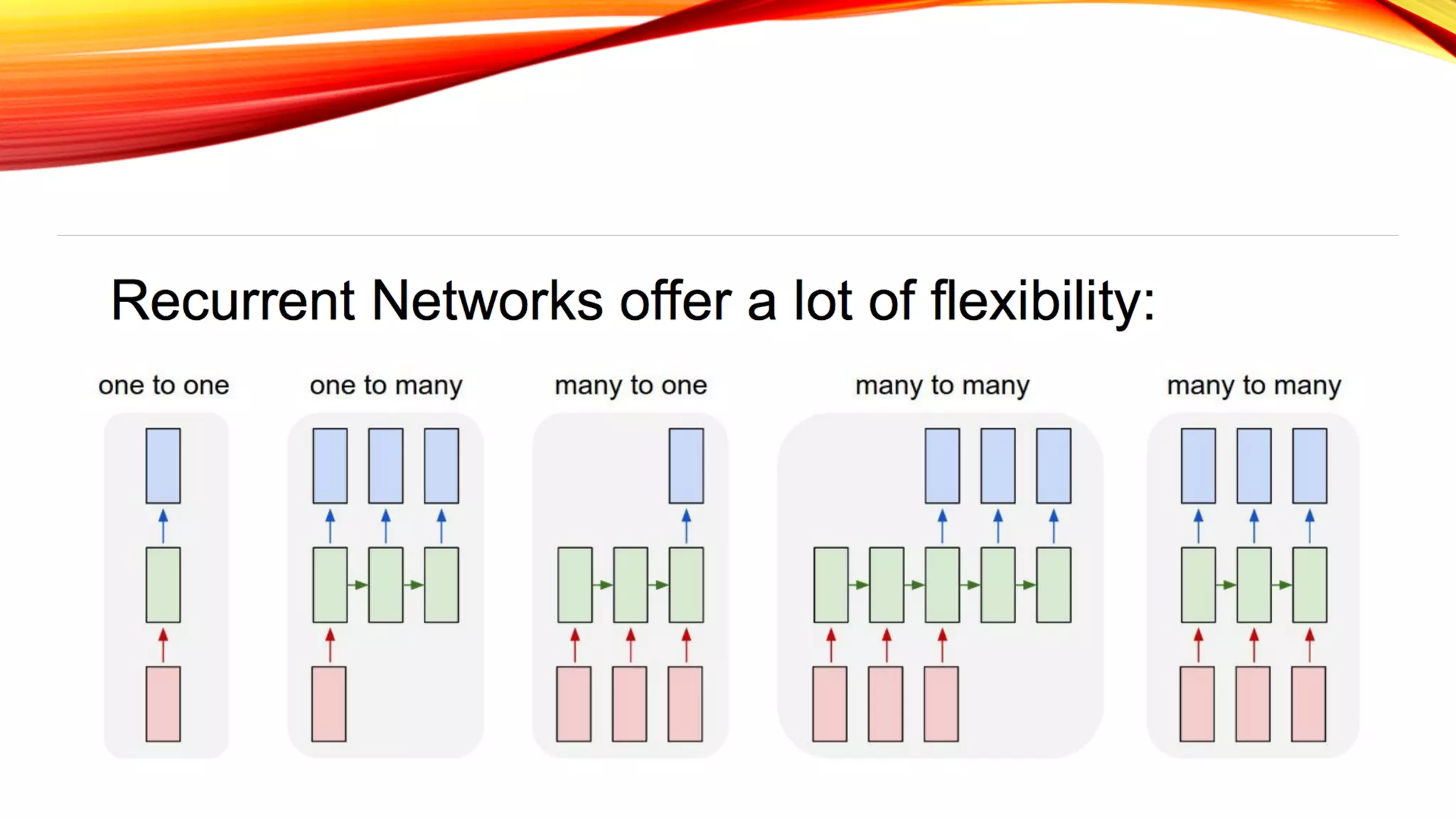

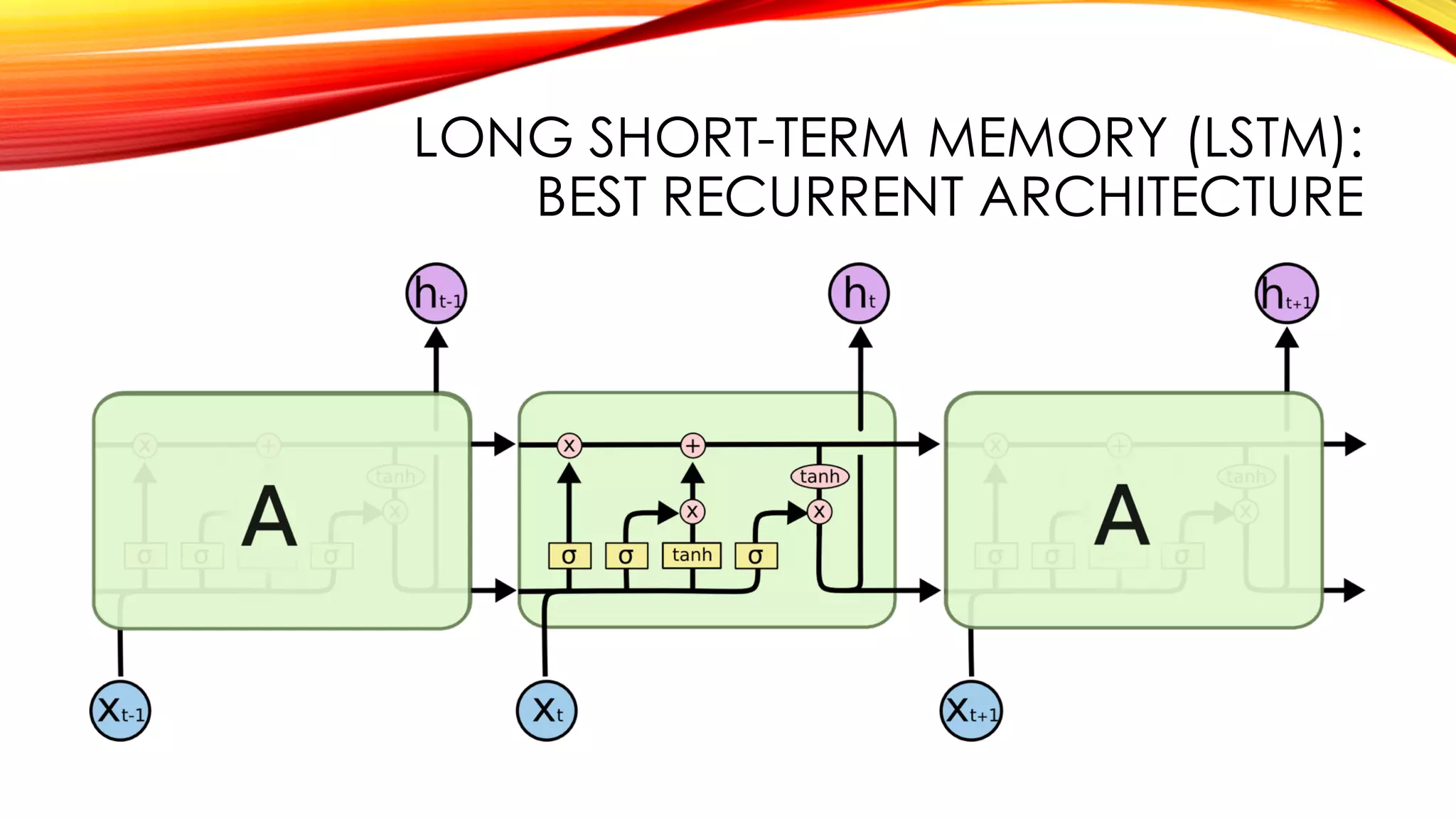

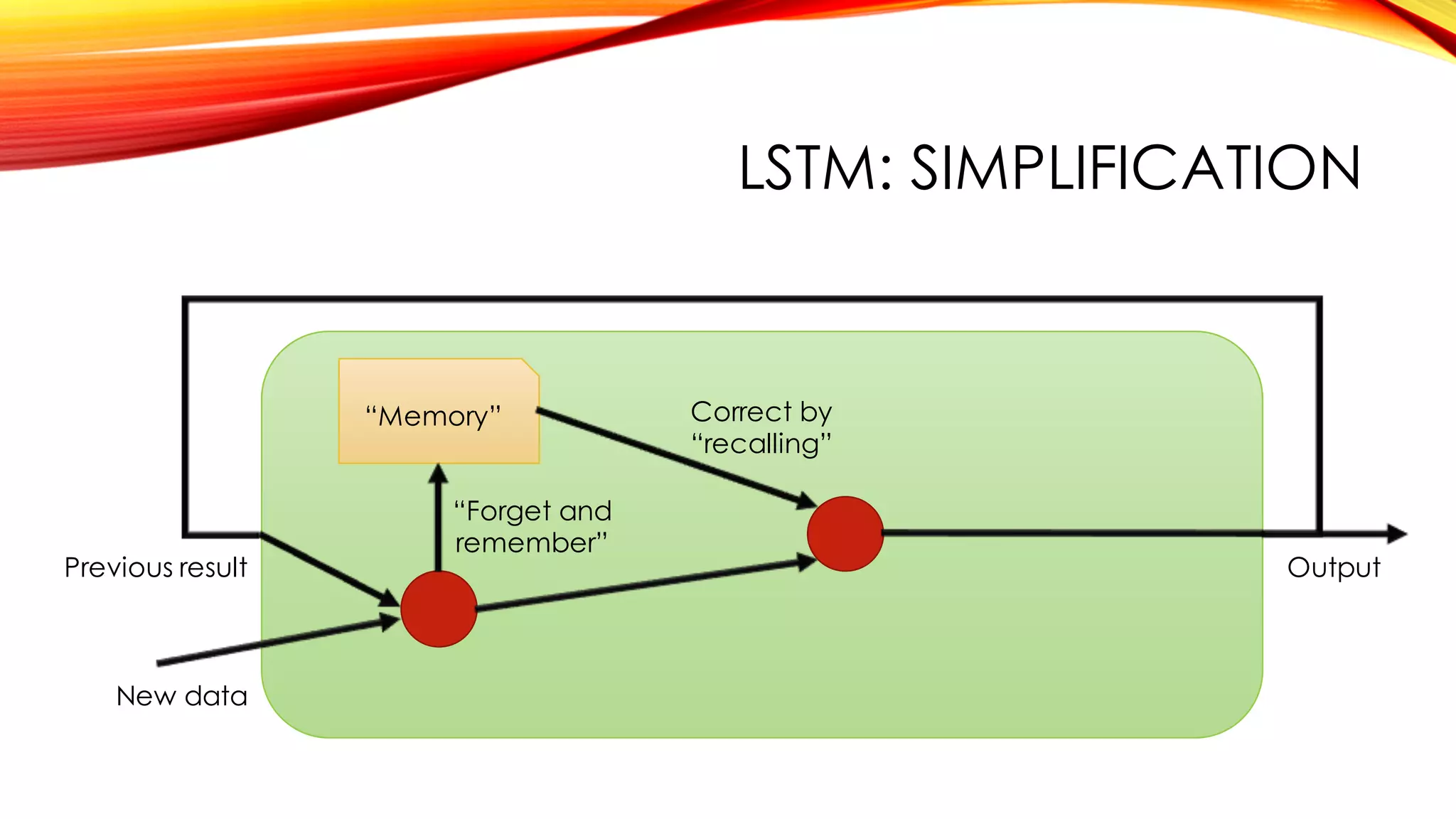

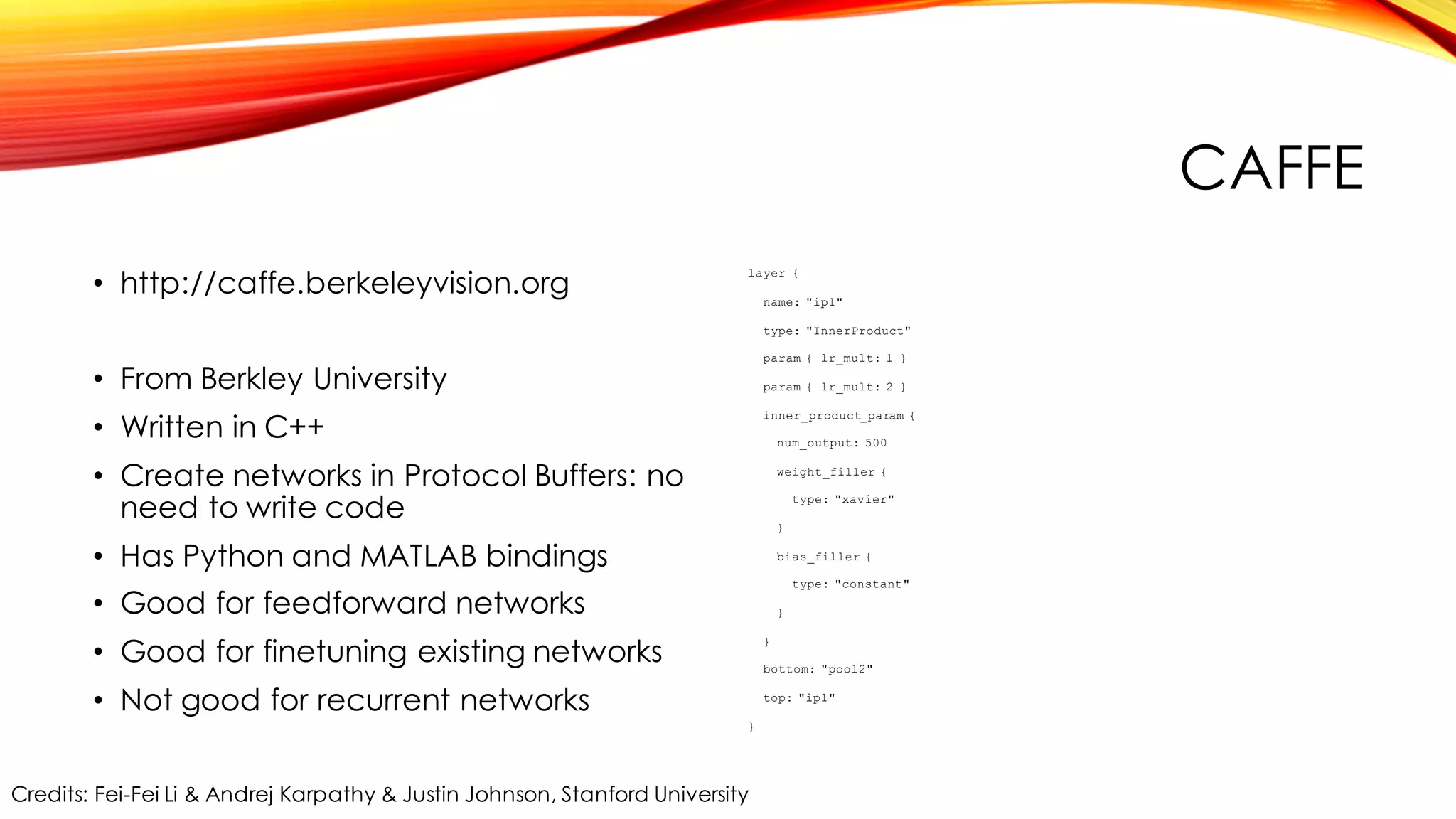

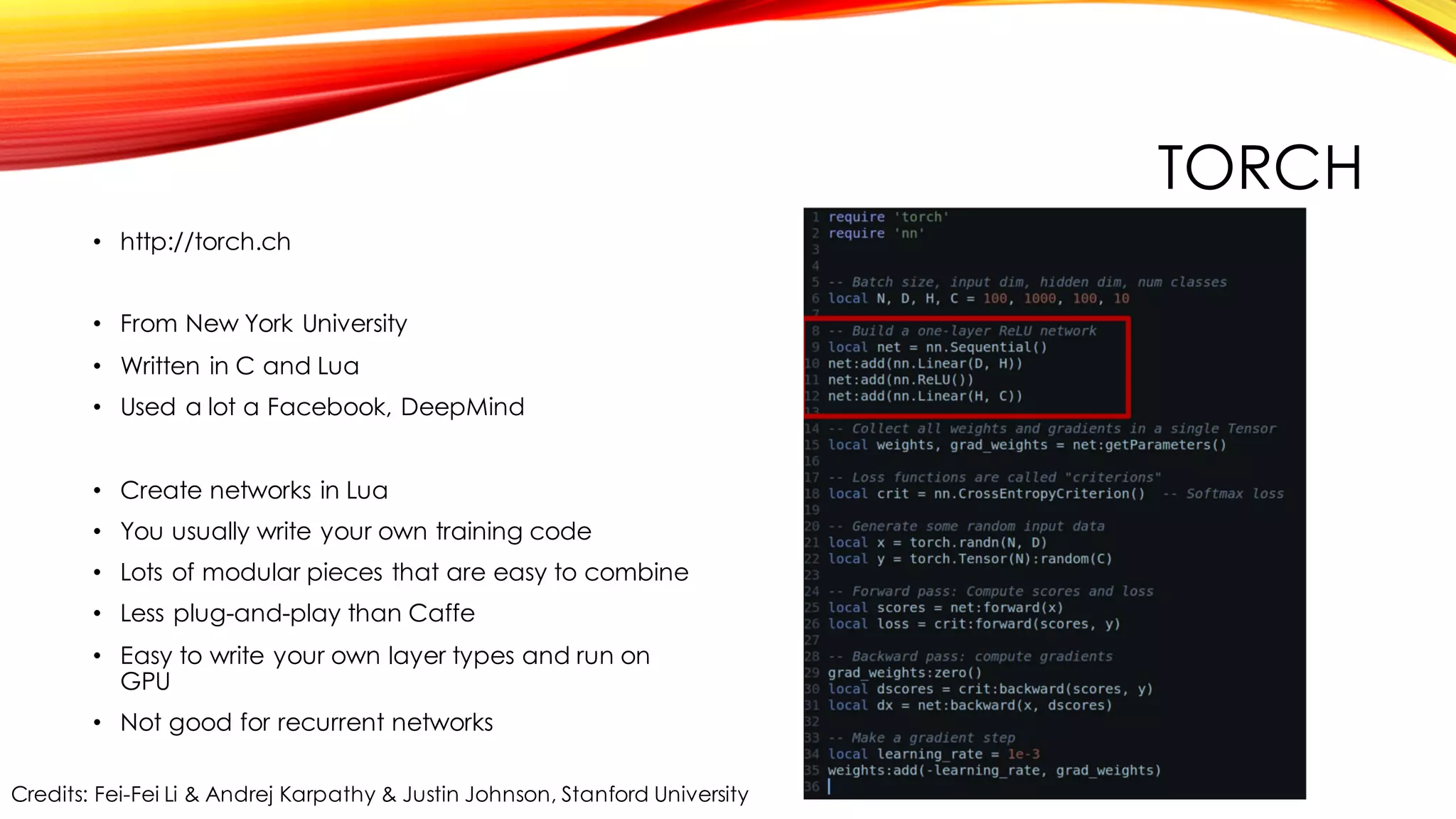

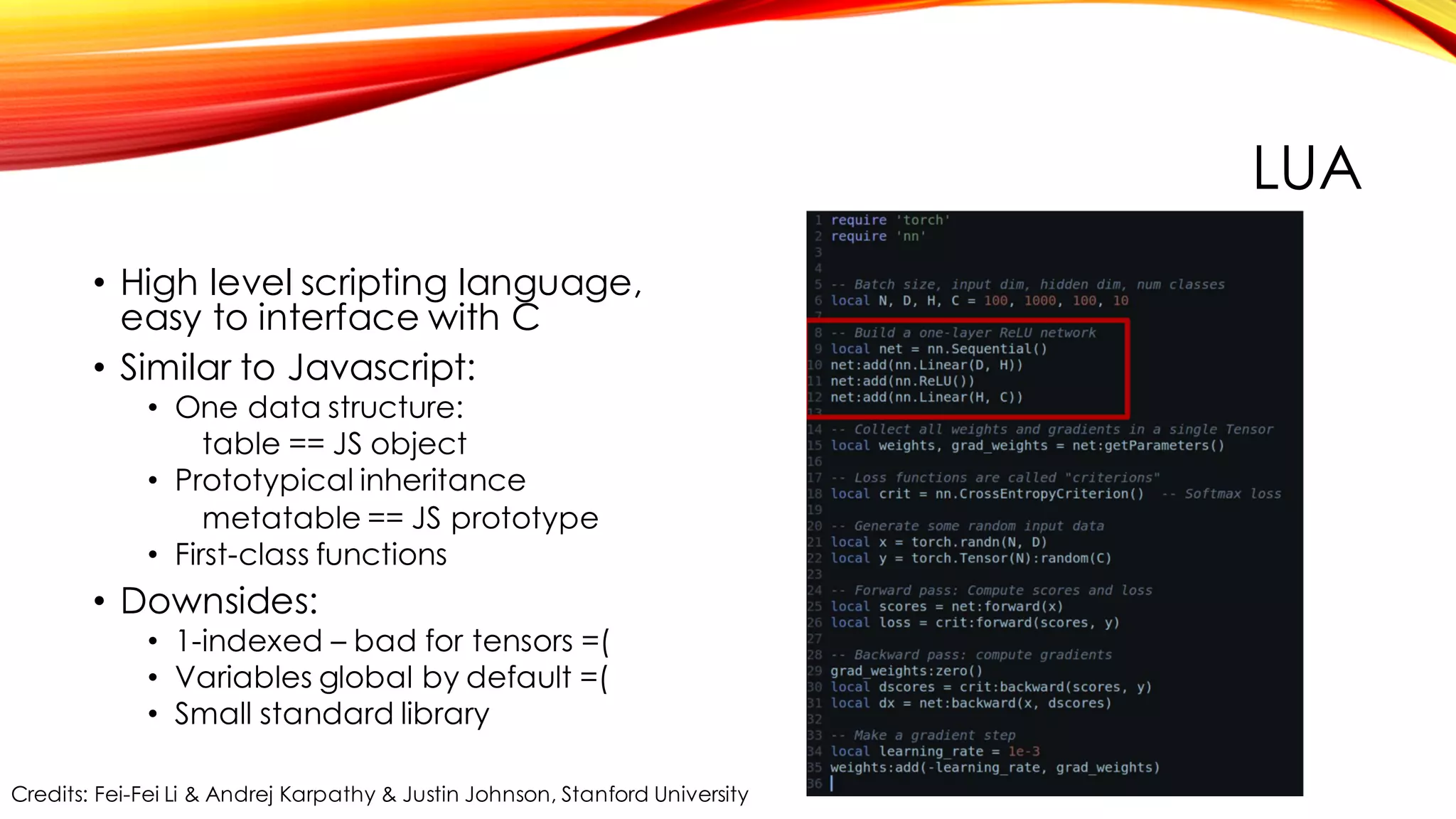

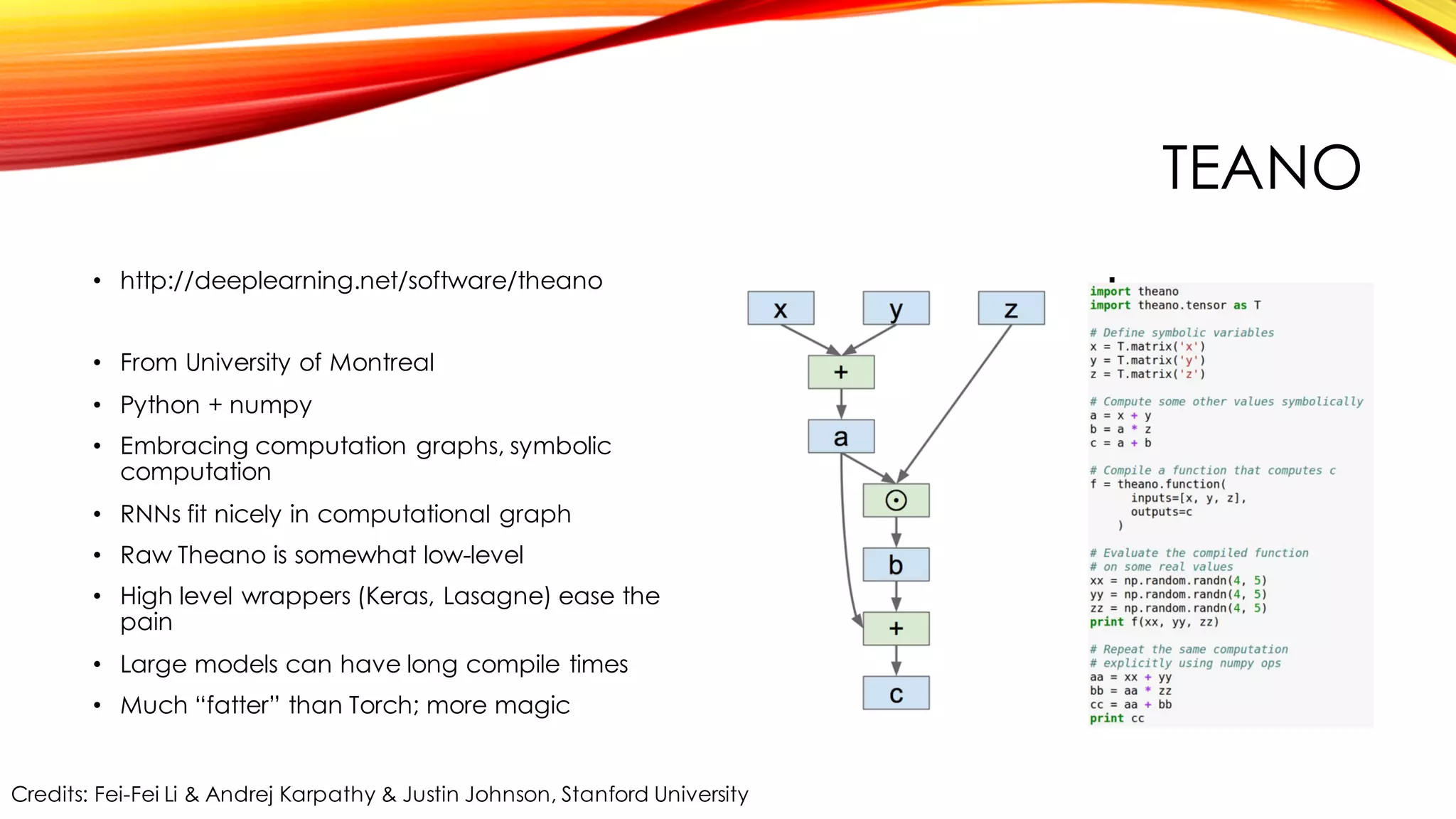

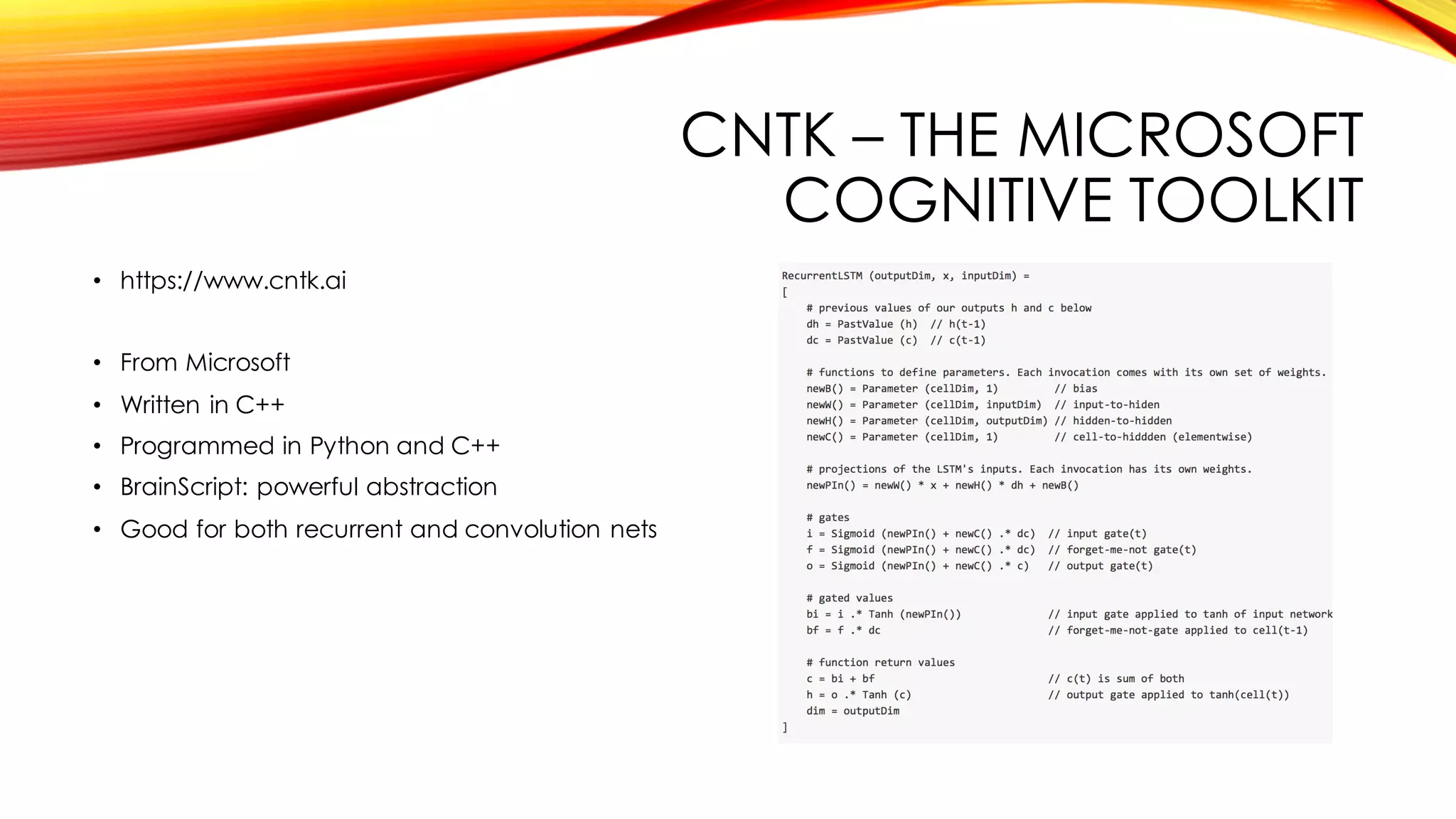

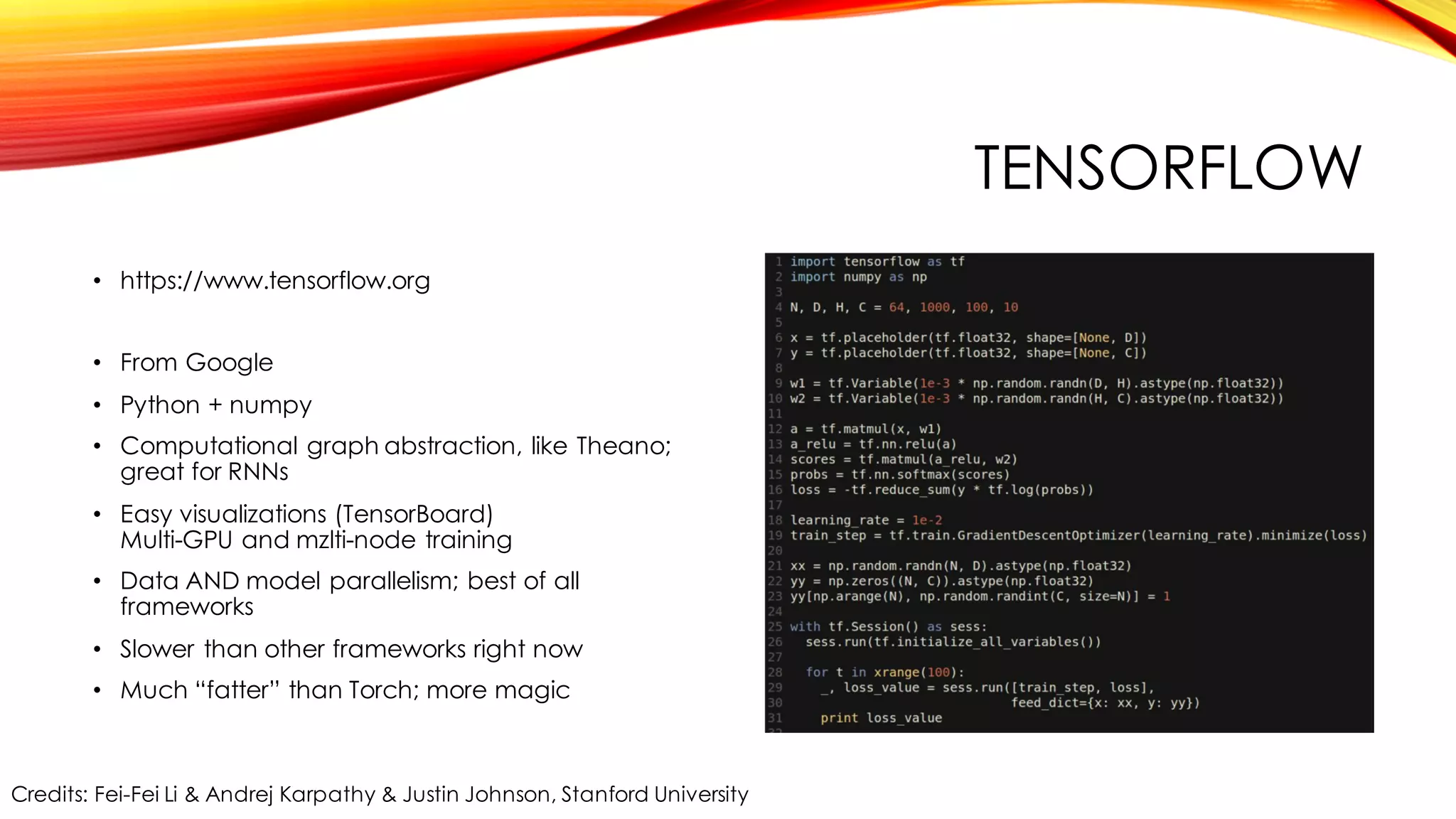

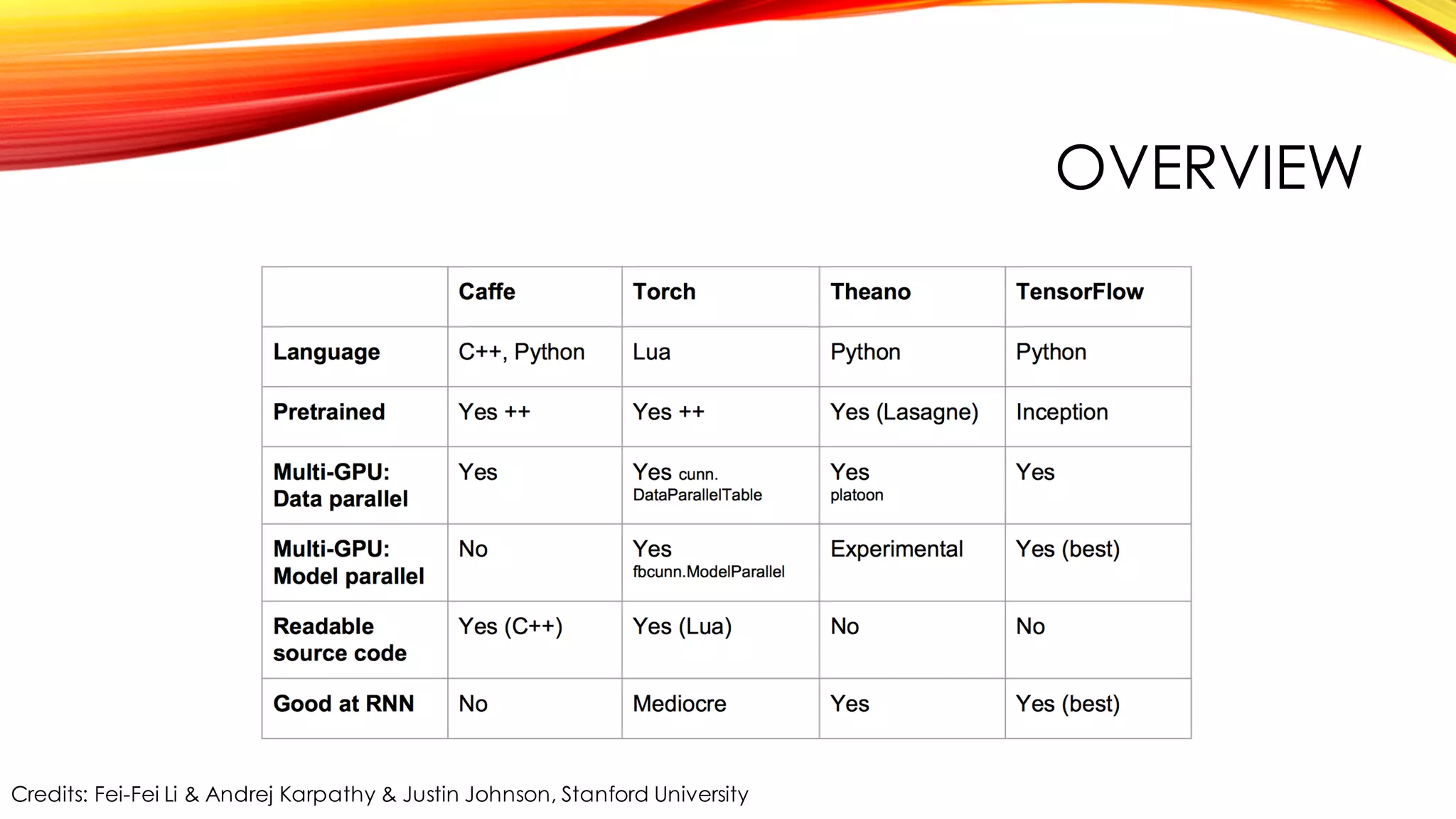

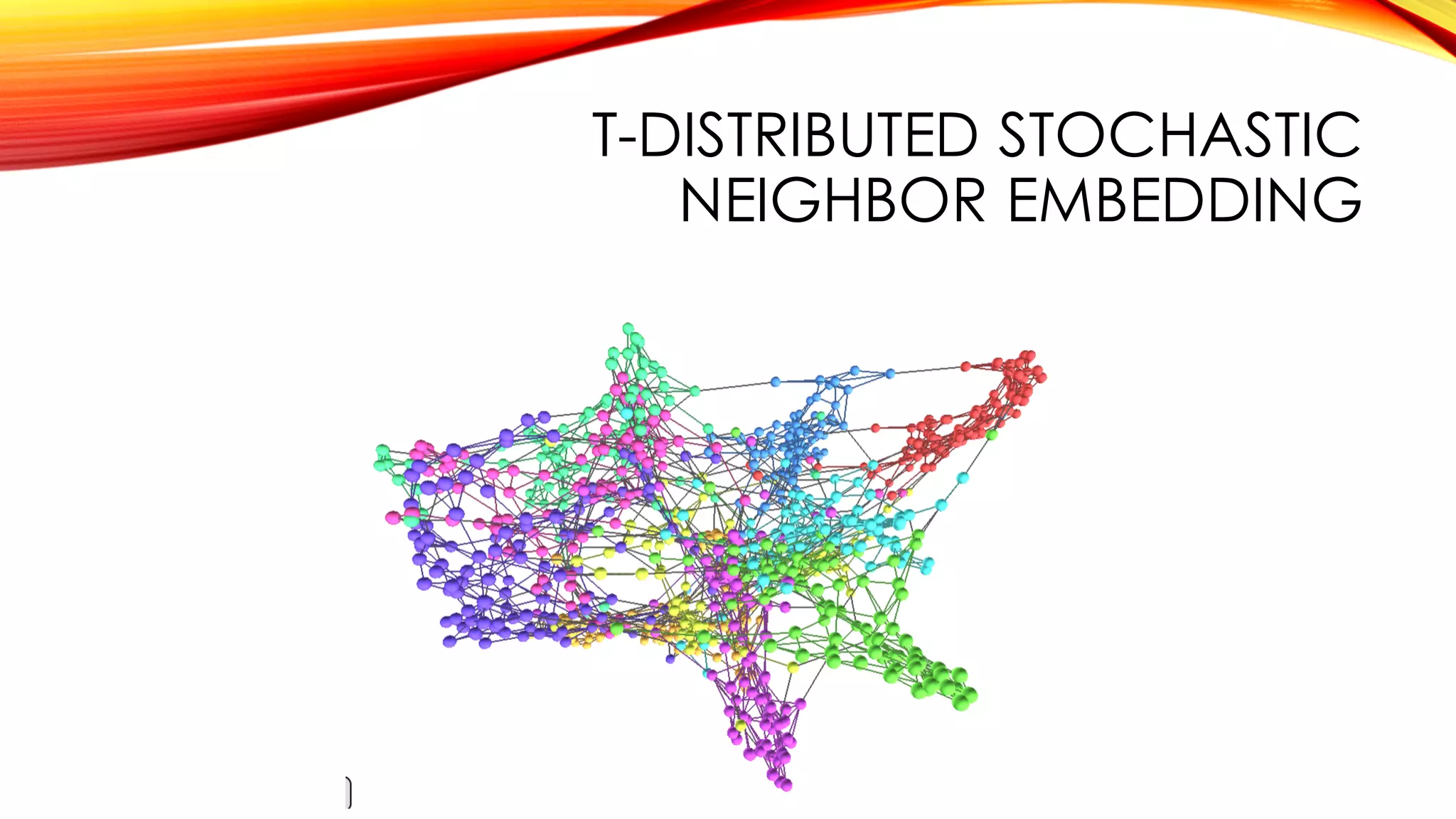

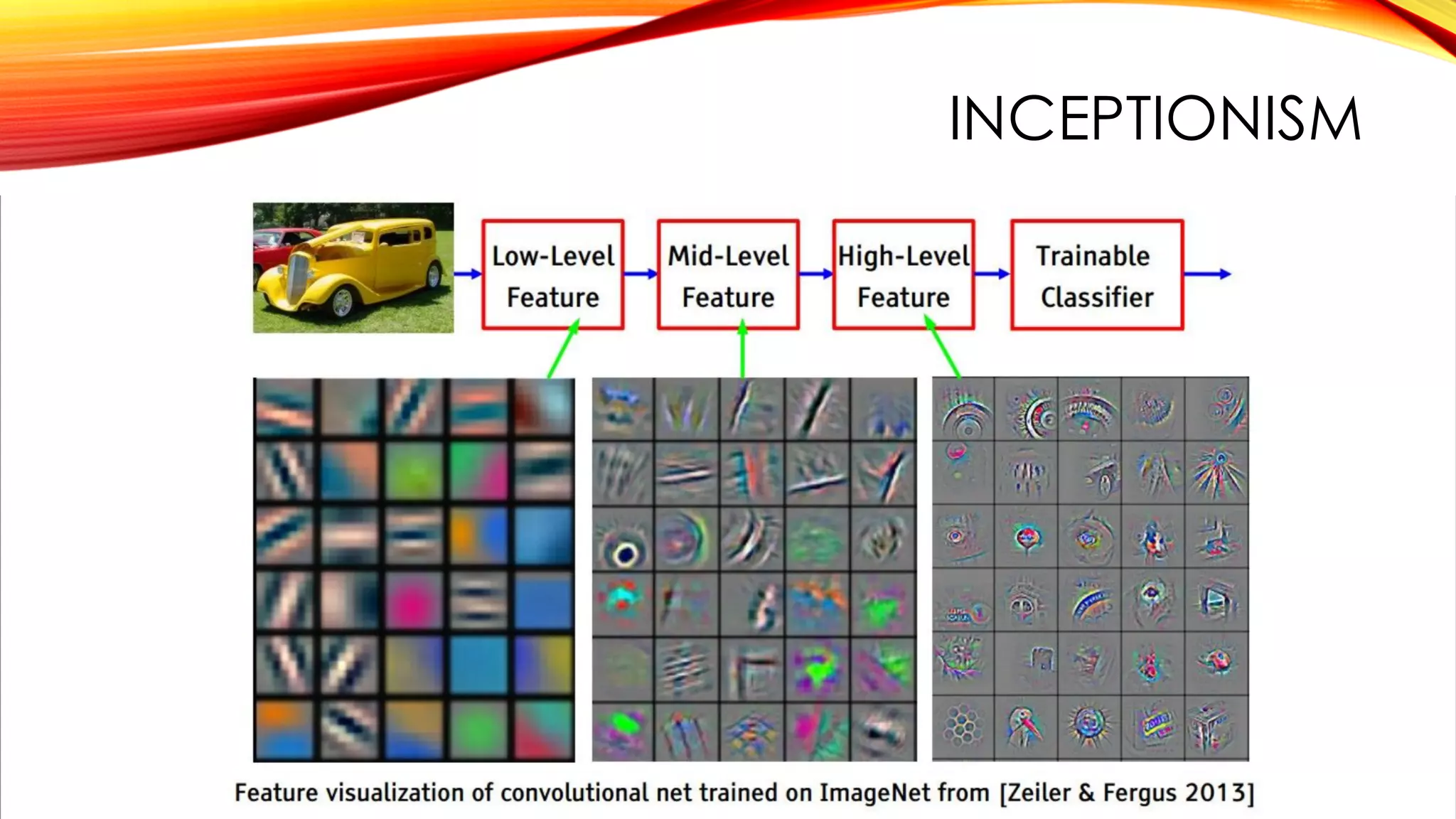

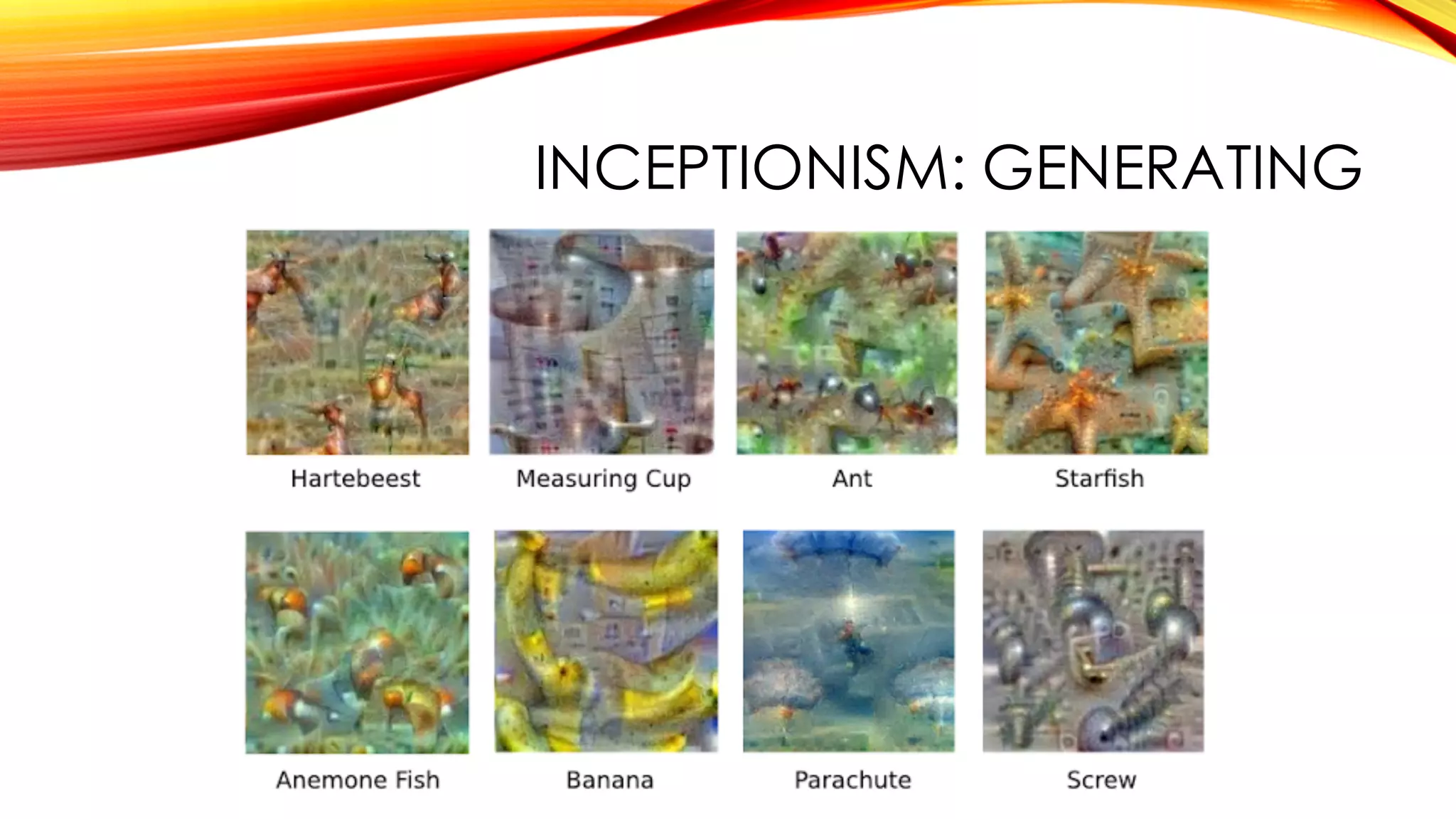

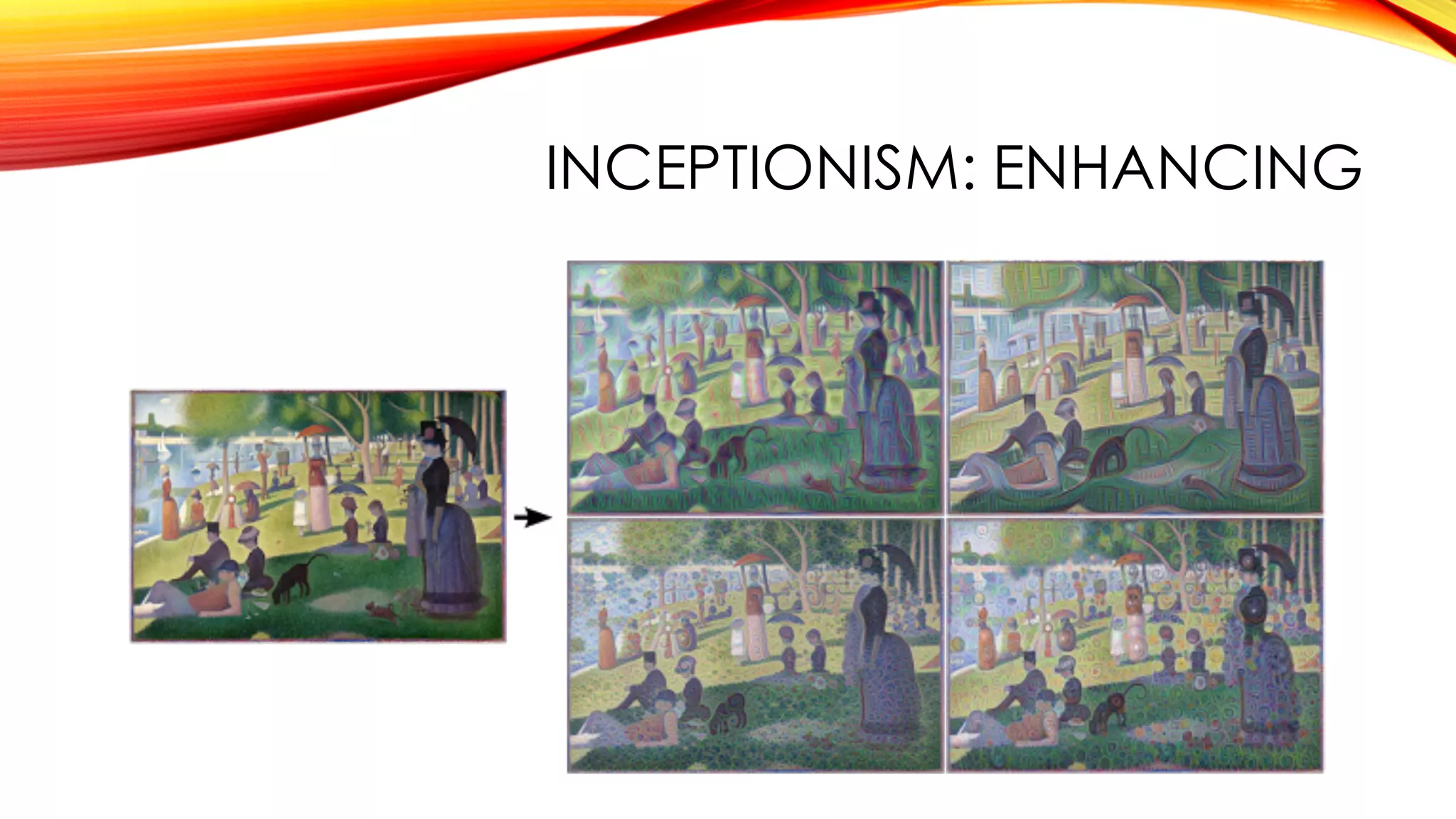

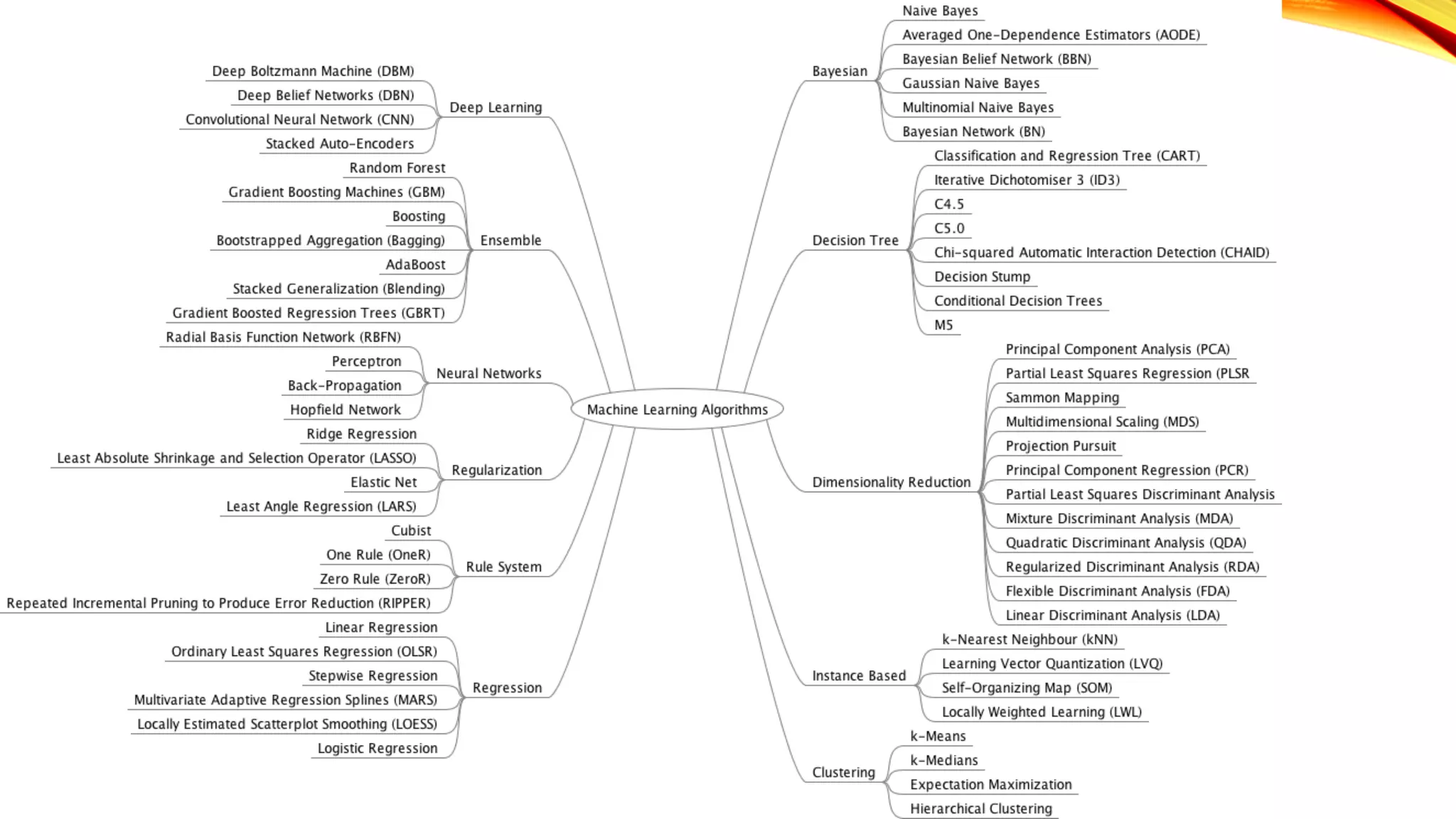

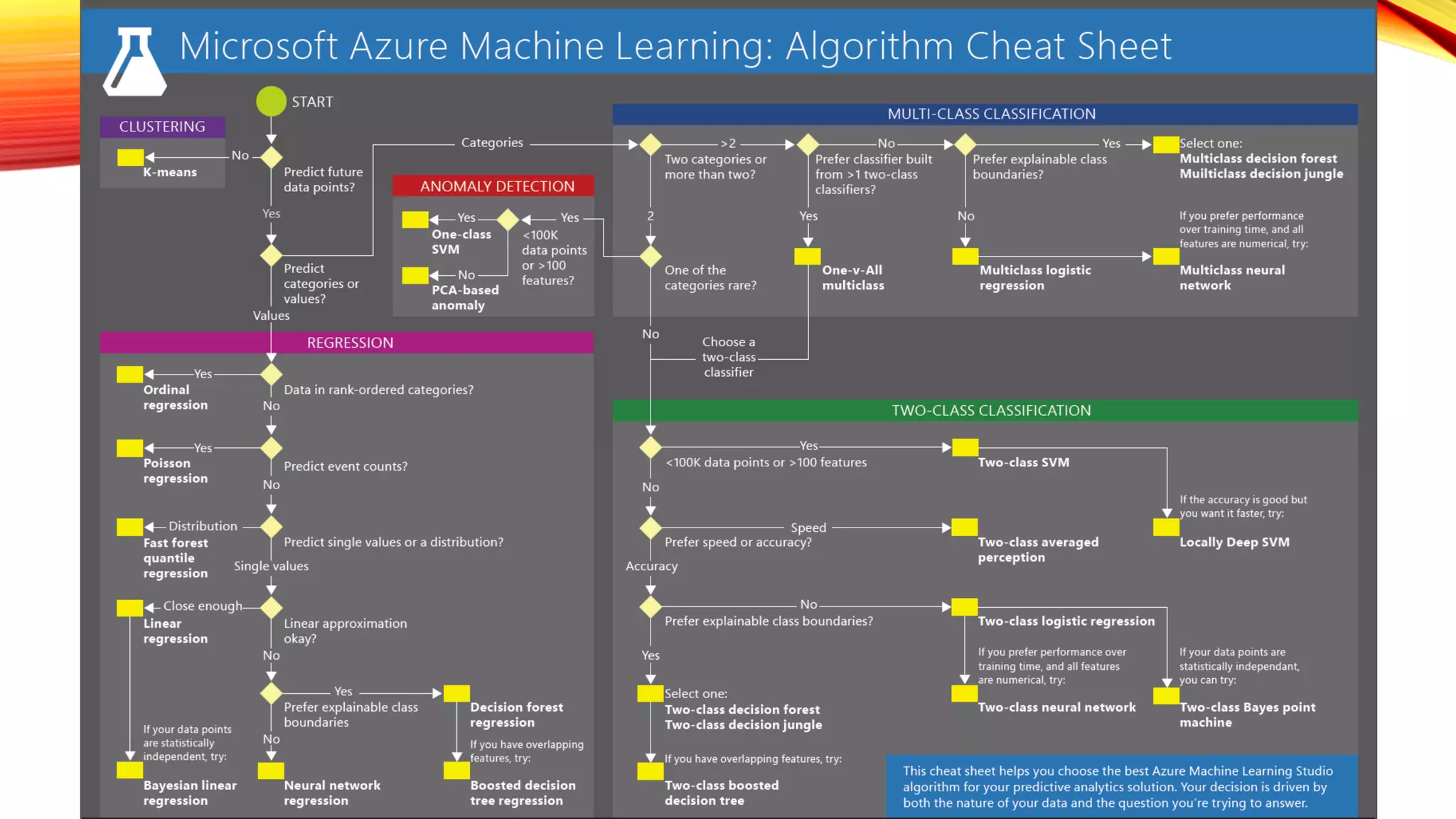

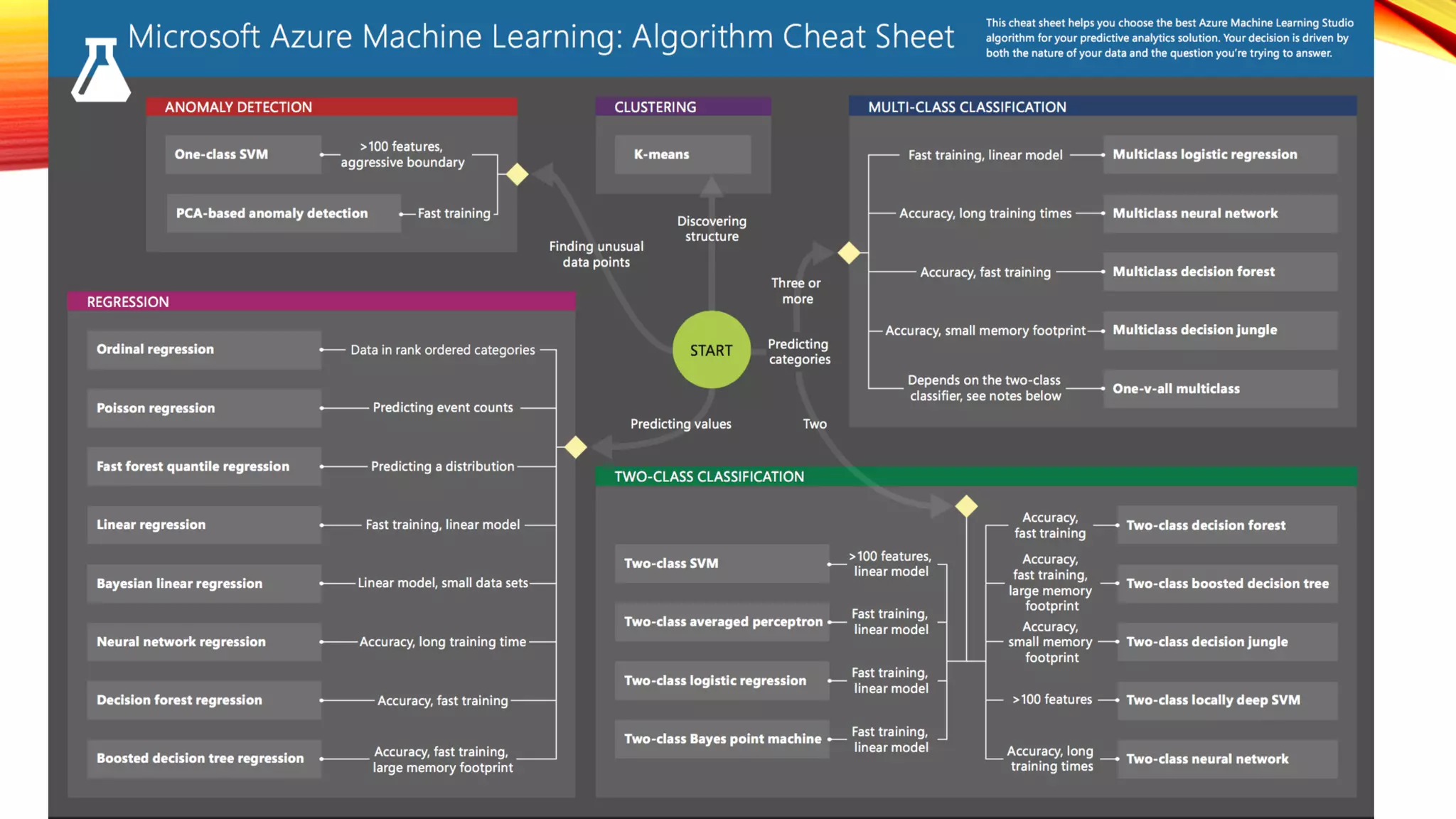

The document provides an overview of data science, machine learning, neural networks, and artificial intelligence, emphasizing their interdisciplinary nature and their methods. It outlines concepts such as cloud computing, the types of machine learning, and the architecture of neural networks. It also describes their applications in various fields and the frameworks used to implement these technologies. It further discusses the significance of big data and offers guidance for startups on designing AI products and making use of available resources.