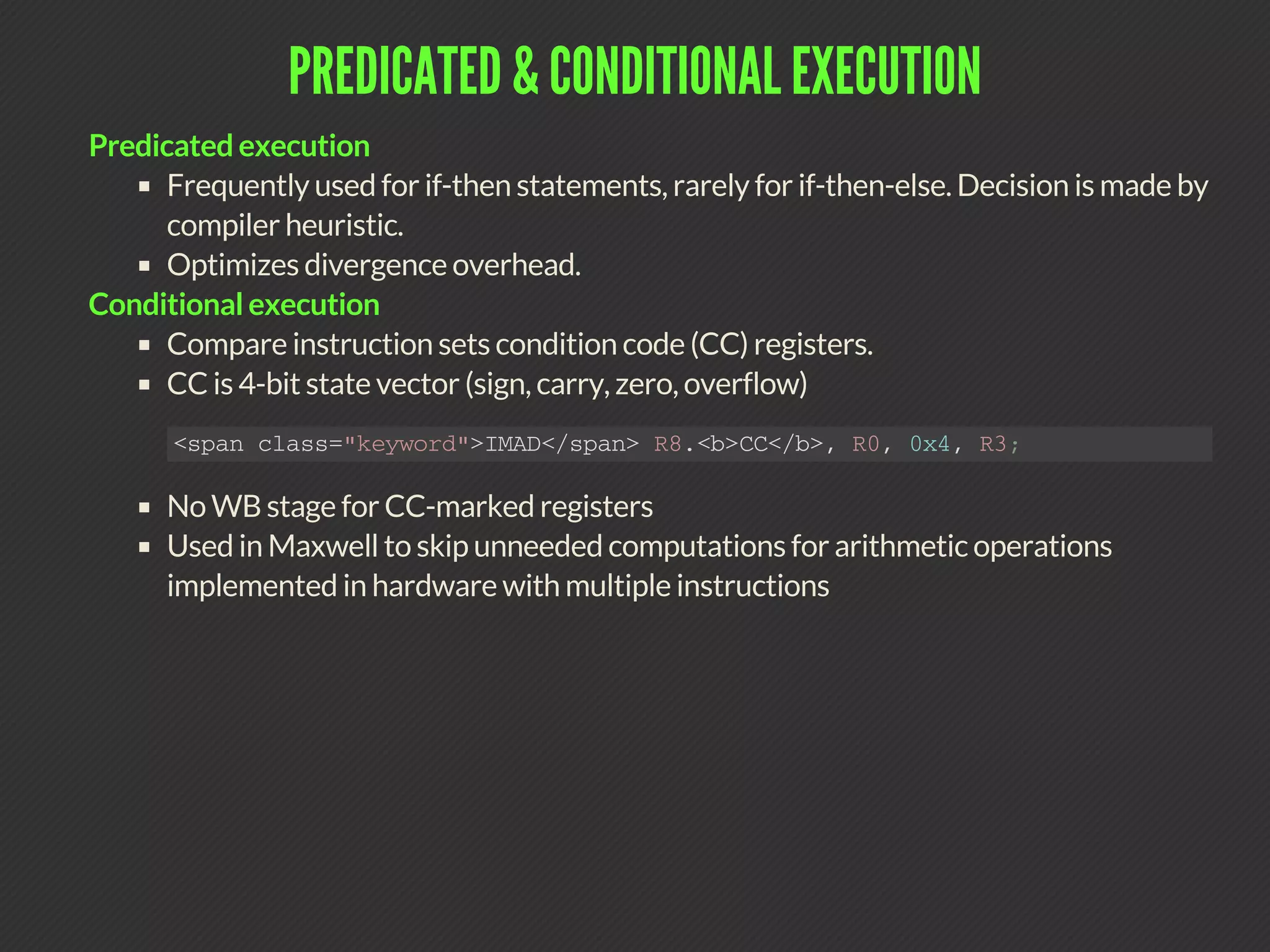

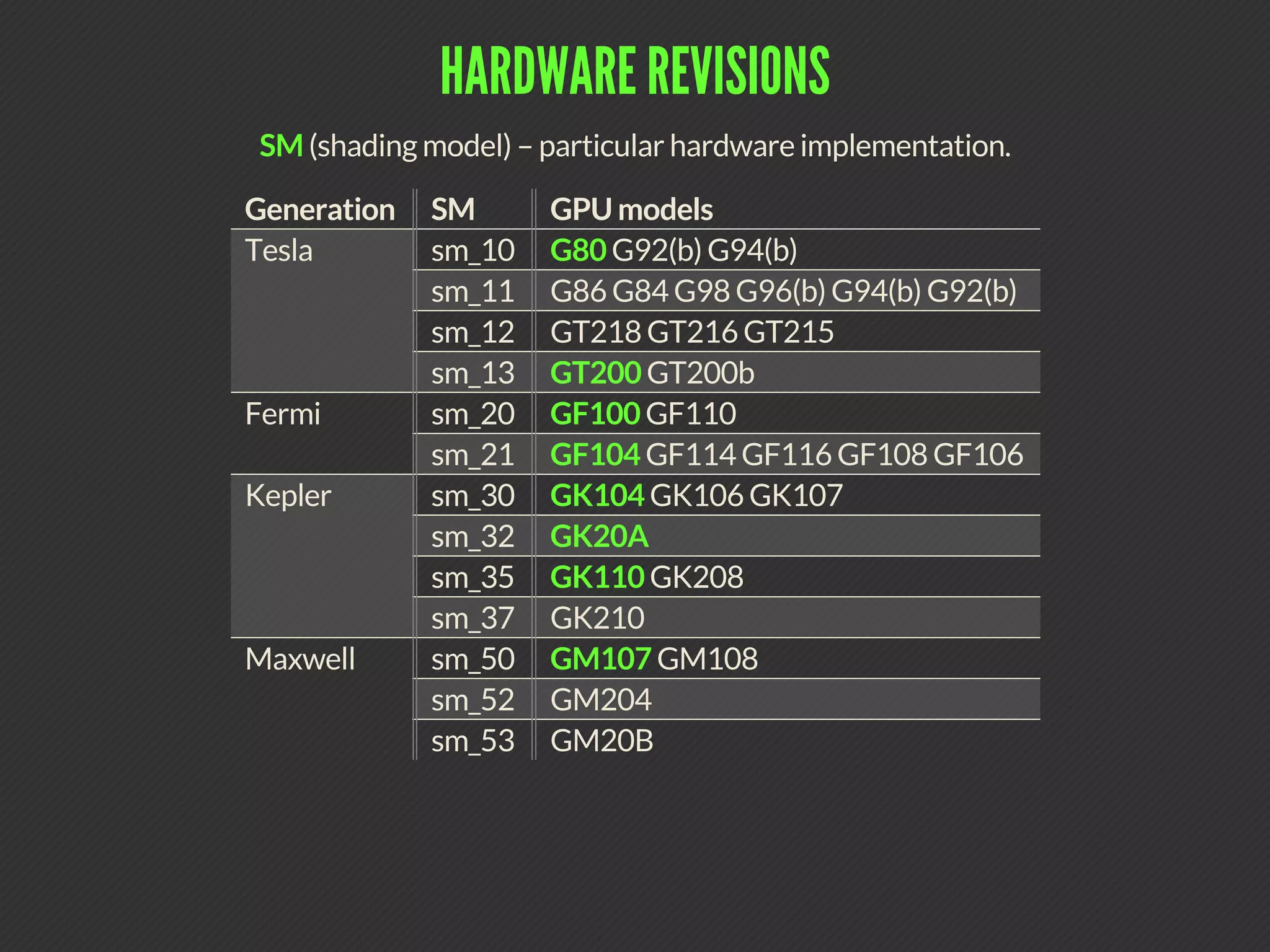

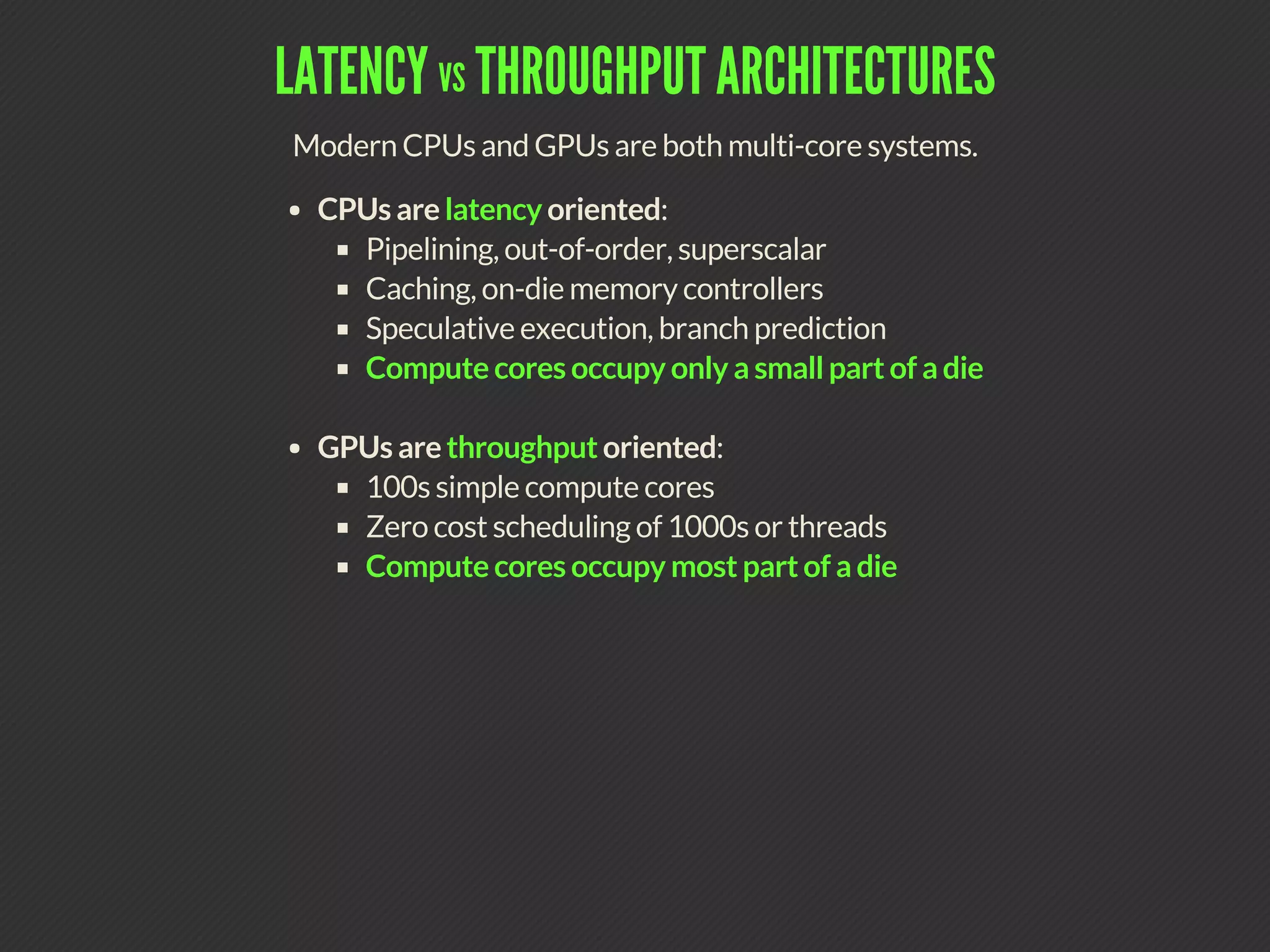

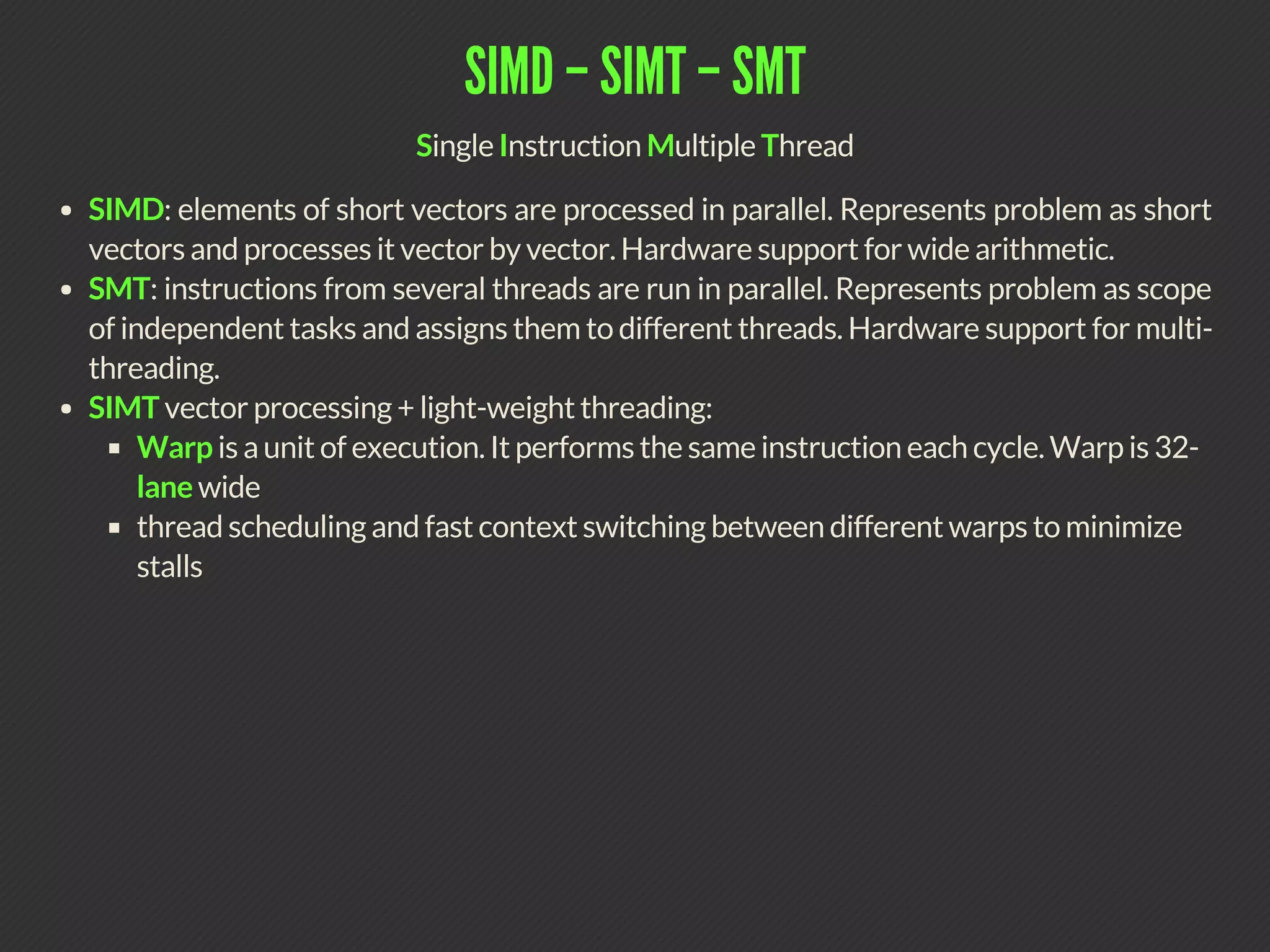

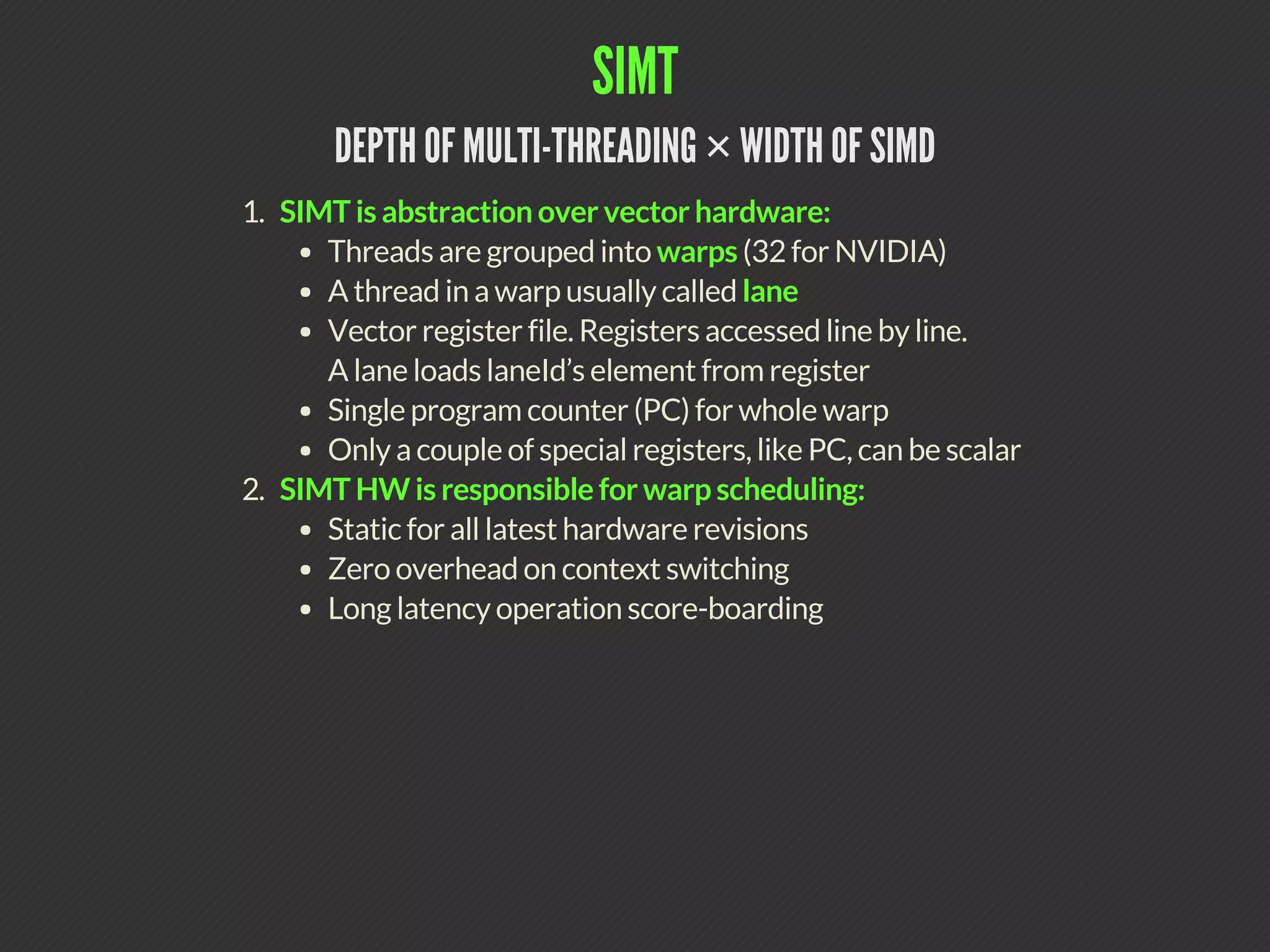

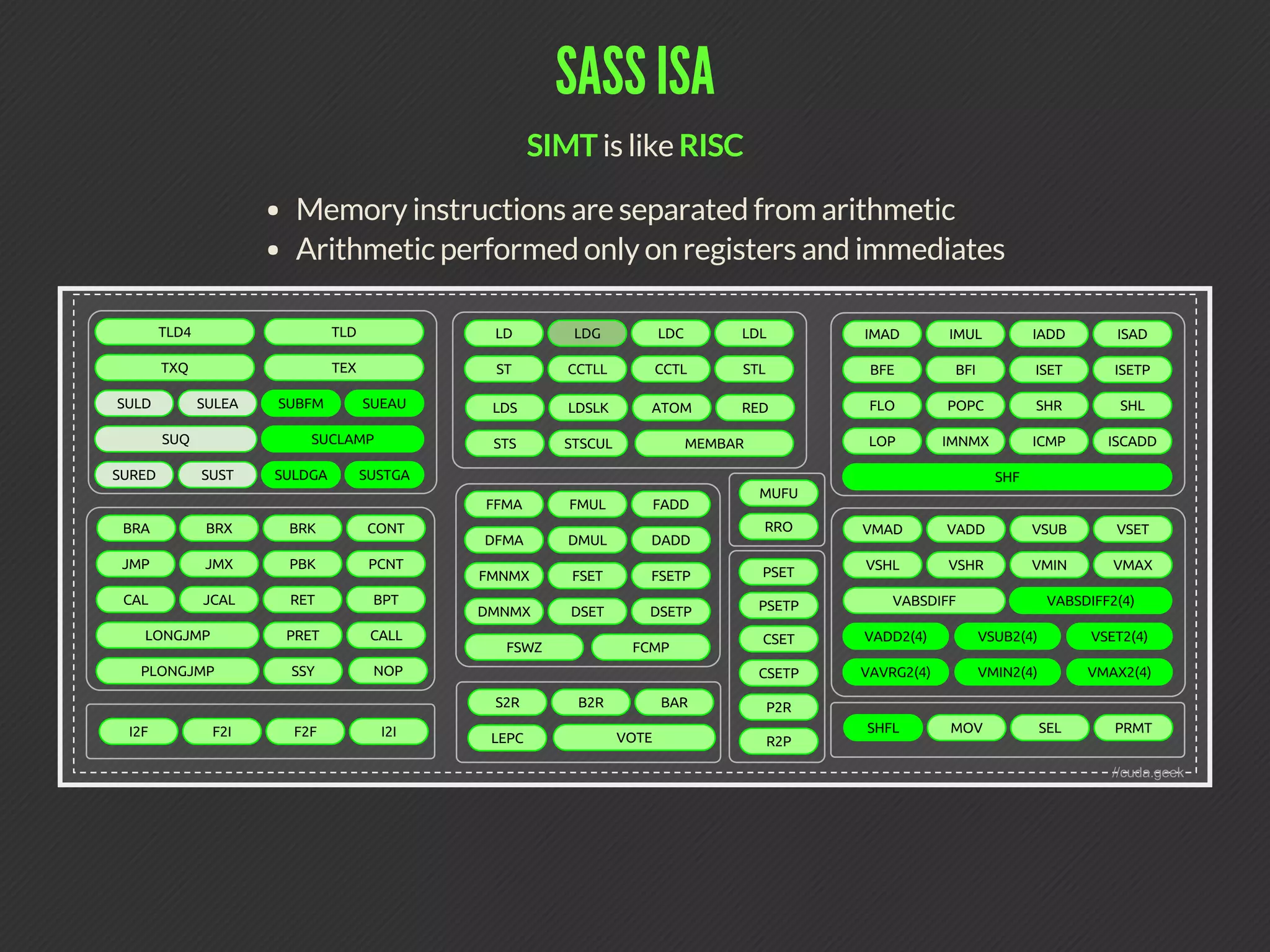

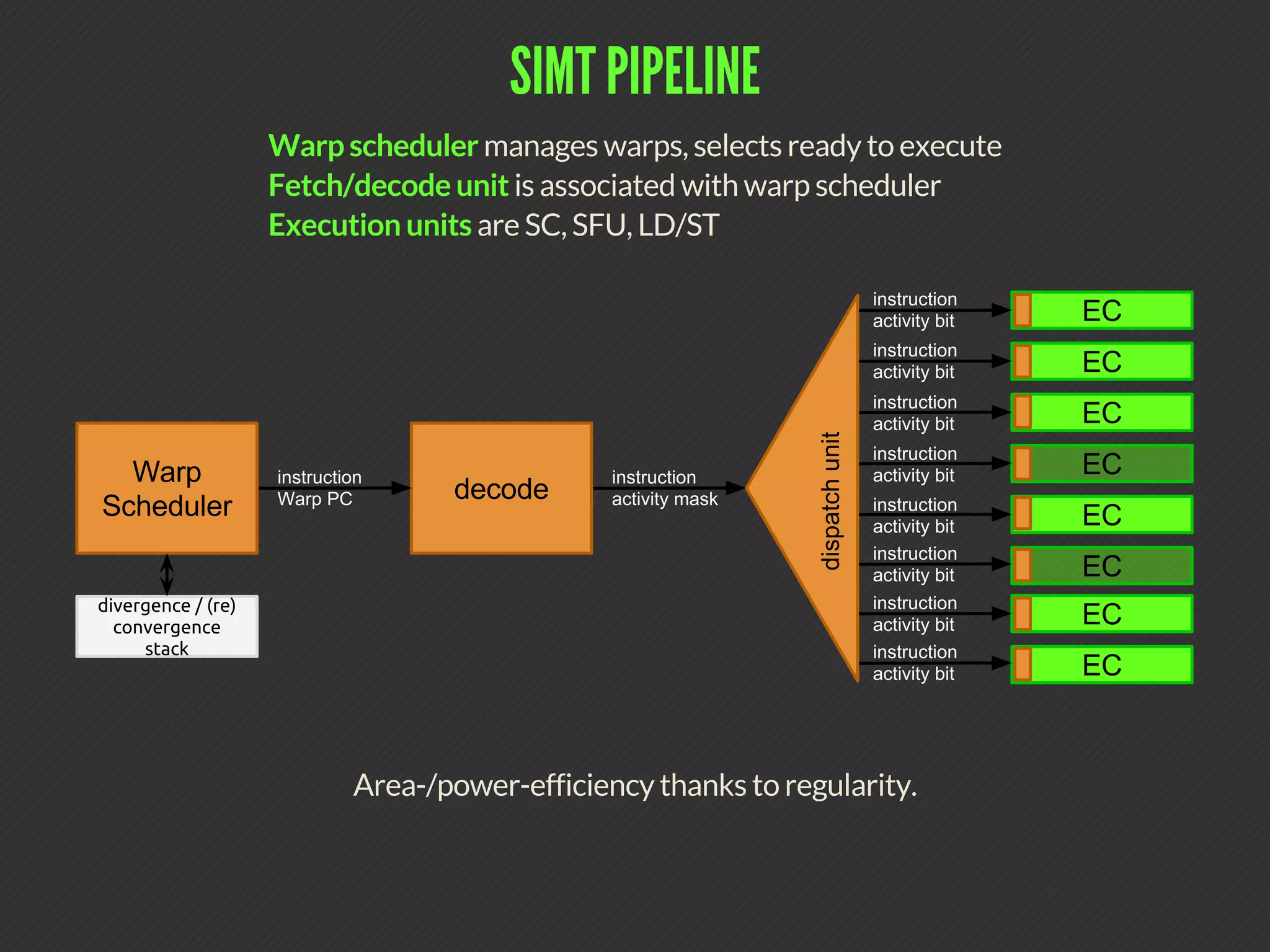

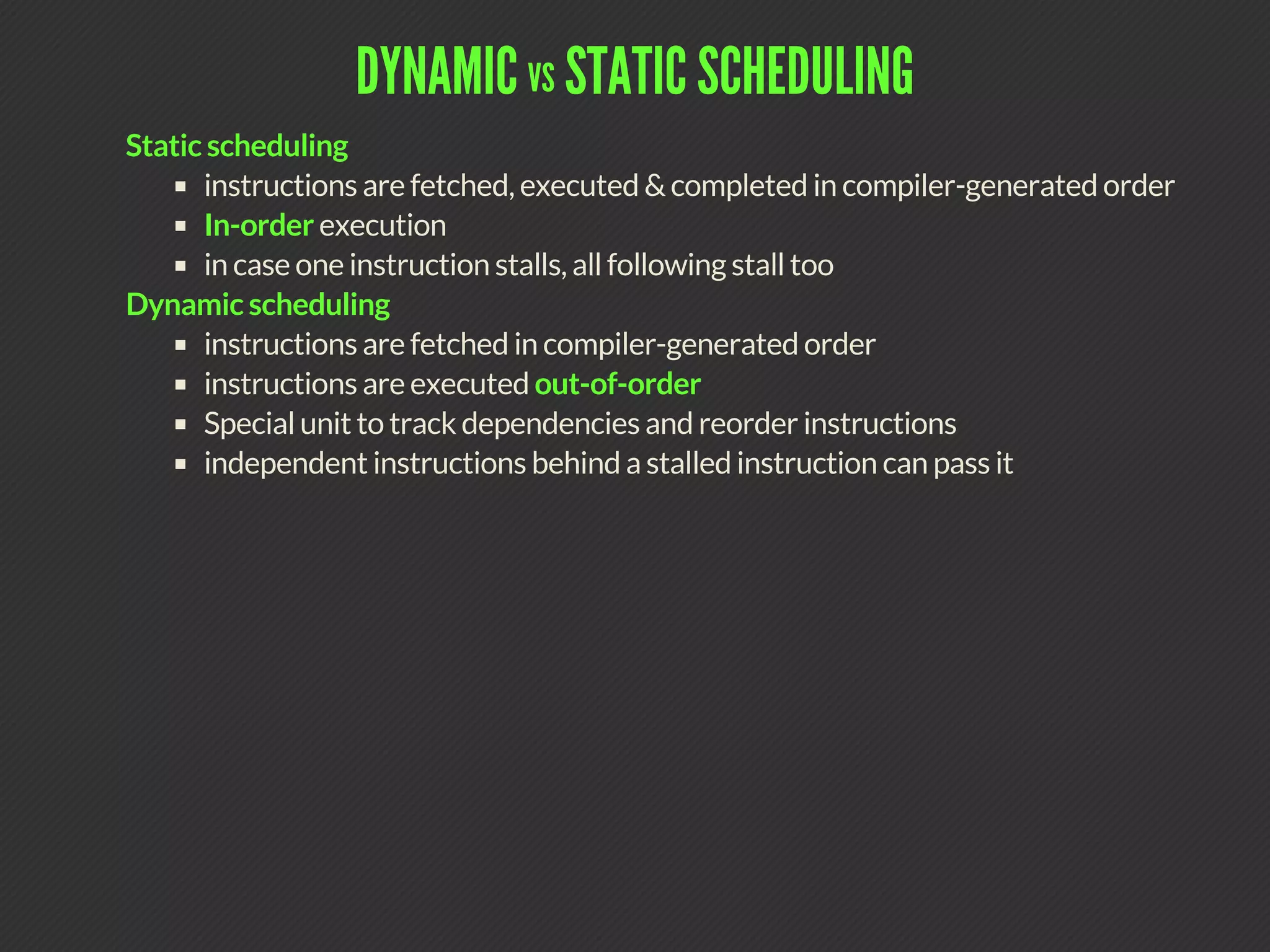

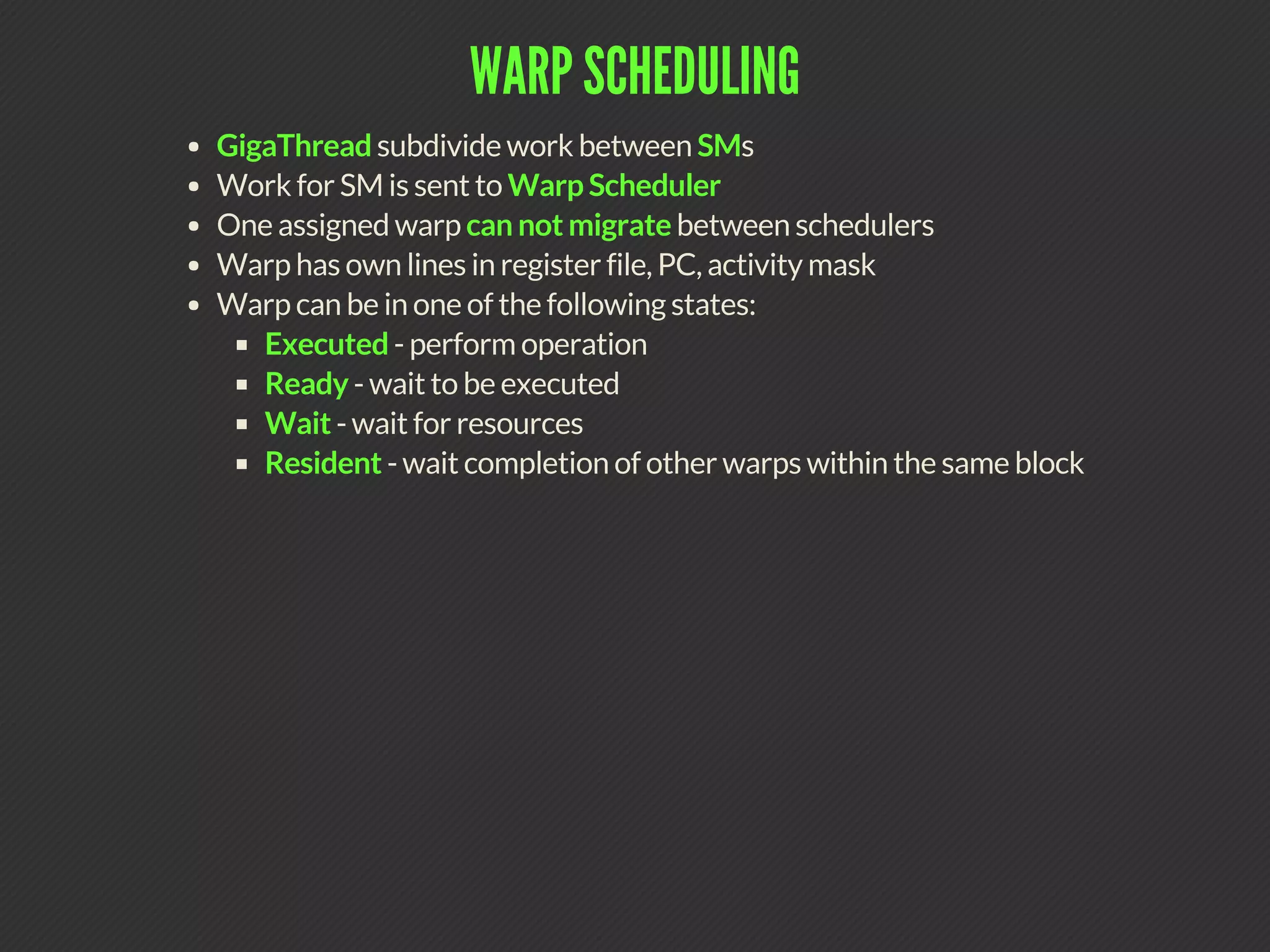

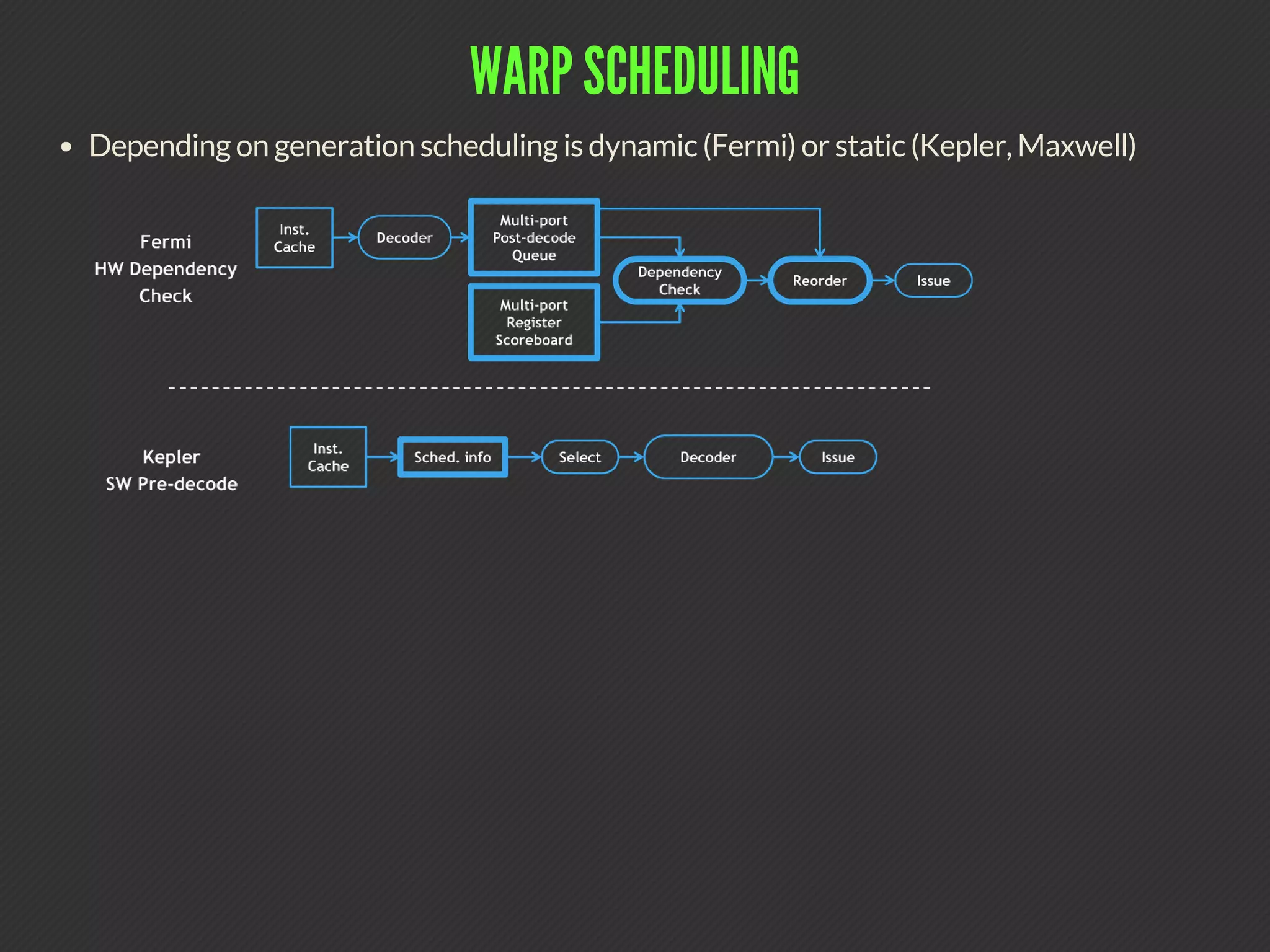

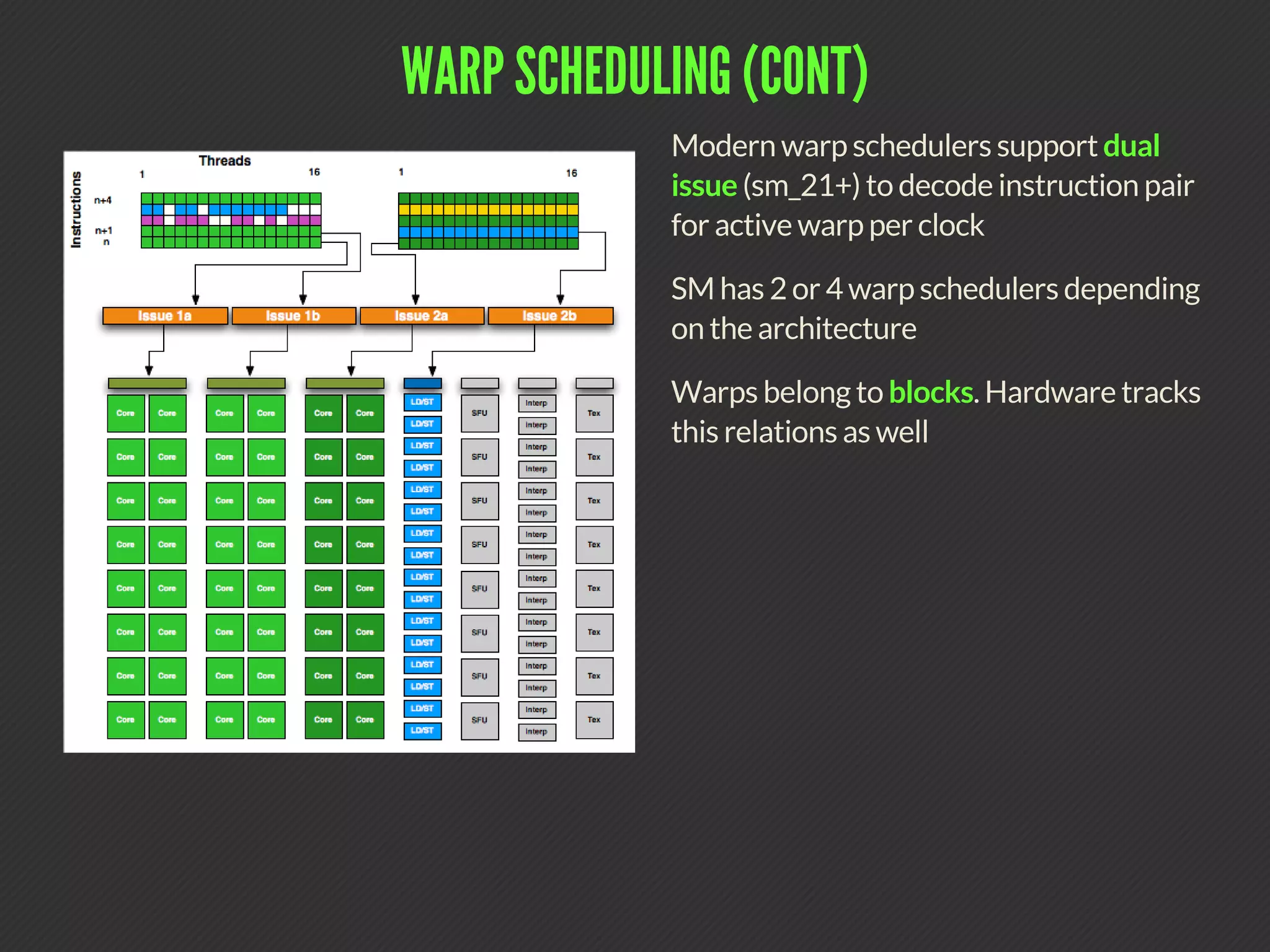

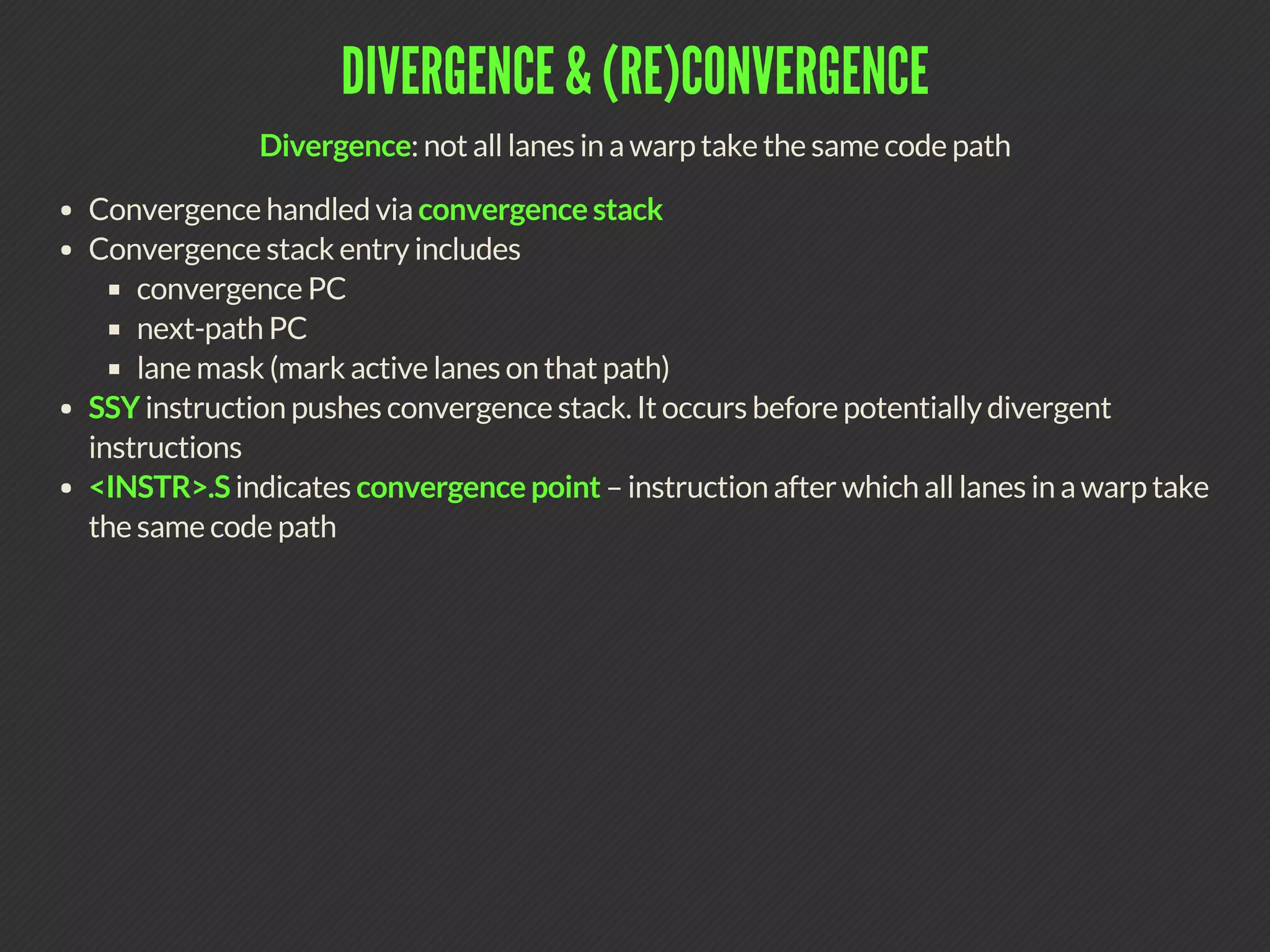

This document summarizes key aspects of GPU hardware and the SIMT (Single Instruction Multiple Thread) architecture used in NVIDIA GPUs. It describes the evolution of NVIDIA GPU hardware, the differences between latency-oriented CPUs and throughput-oriented GPUs, how SIMT combines SIMD and threading, warp scheduling, divergence and convergence, predicated and conditional execution.

![DIVERGENT CODE EXAMPLE

( v o i d ) a t o m i c A d d ( & s m e m [ 0 ] , s r c [ t h r e a d I d x . x ] ) ;

/ * 0 0 5 0 * / S S Y 0 x 8 0 ;

/ * 0 0 5 8 * / L D S L K P 0 , R 3 , [ R Z ] ;

/ * 0 0 6 0 * / @ P 0 I A D D R 3 , R 3 , R 0 ;

/ * 0 0 6 8 * / @ P 0 S T S U L [ R Z ] , R 3 ;

/ * 0 0 7 0 * / @ ! P 0 B R A 0 x 5 8 ;

/ * 0 0 7 8 * / N O P . S ;

Assume warp size == 4](https://image.slidesharecdn.com/code-gpu-with-cuda-0-150915004041-lva1-app6891/75/Code-GPU-with-CUDA-SIMT-16-2048.jpg)