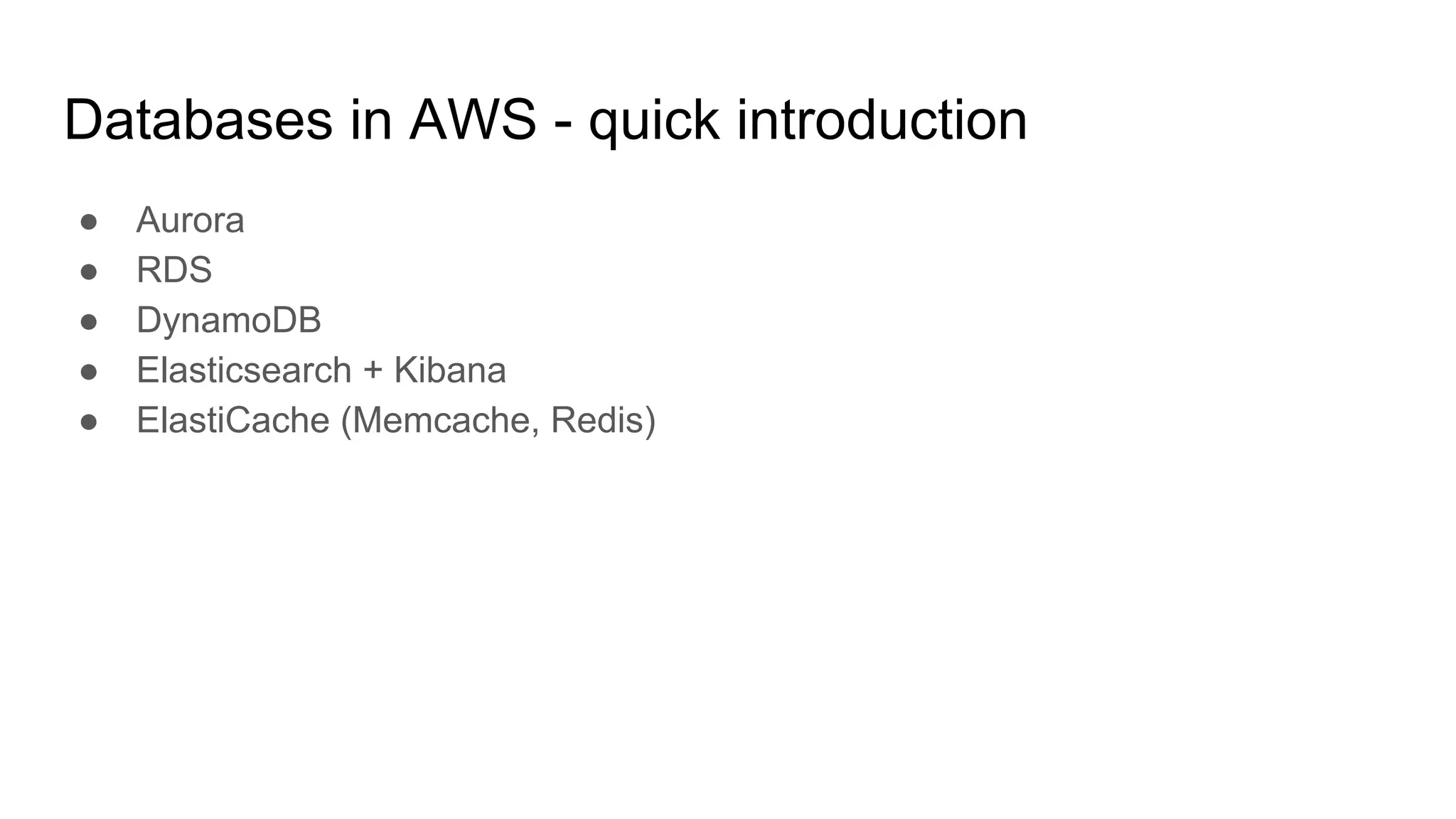

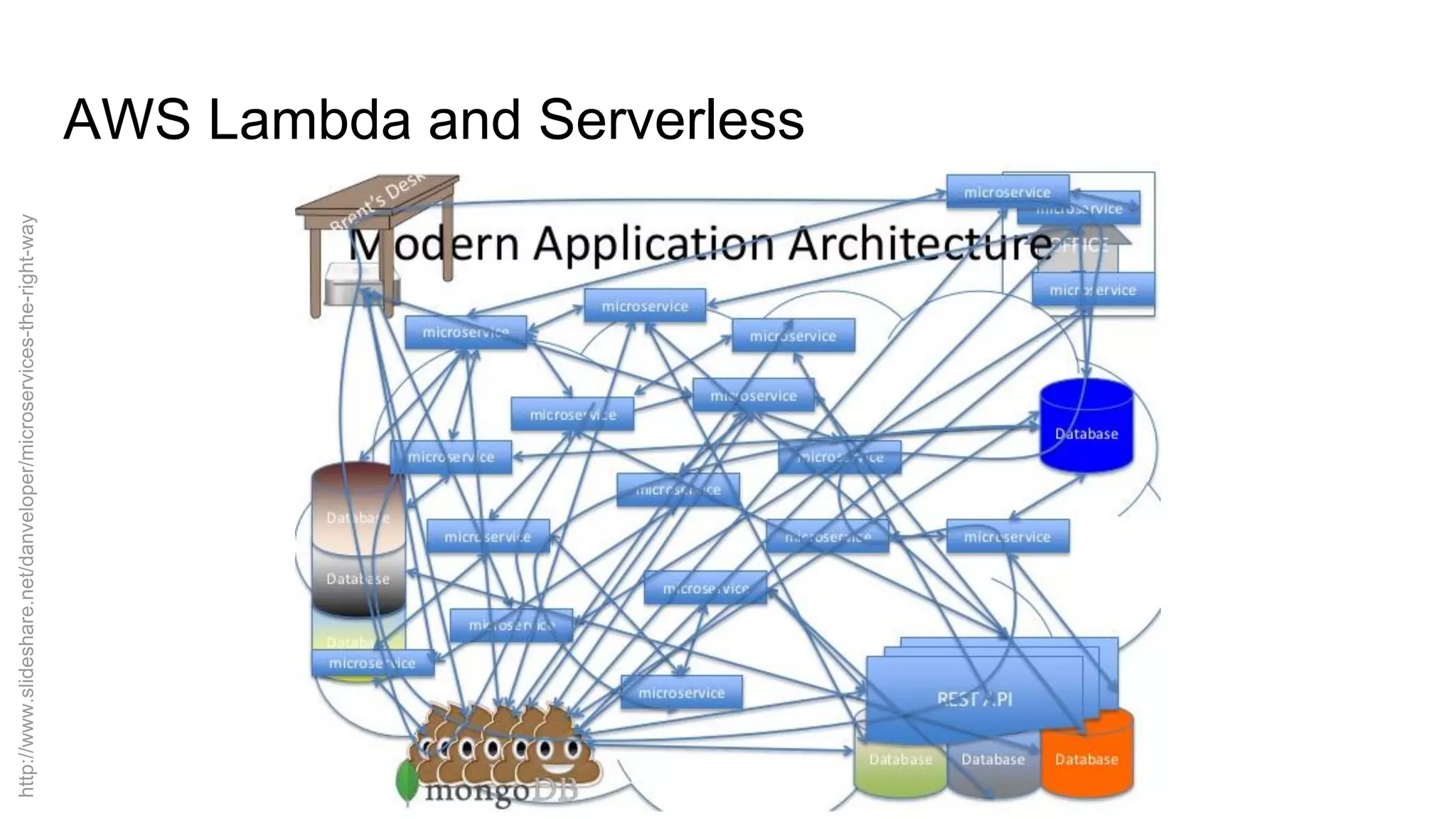

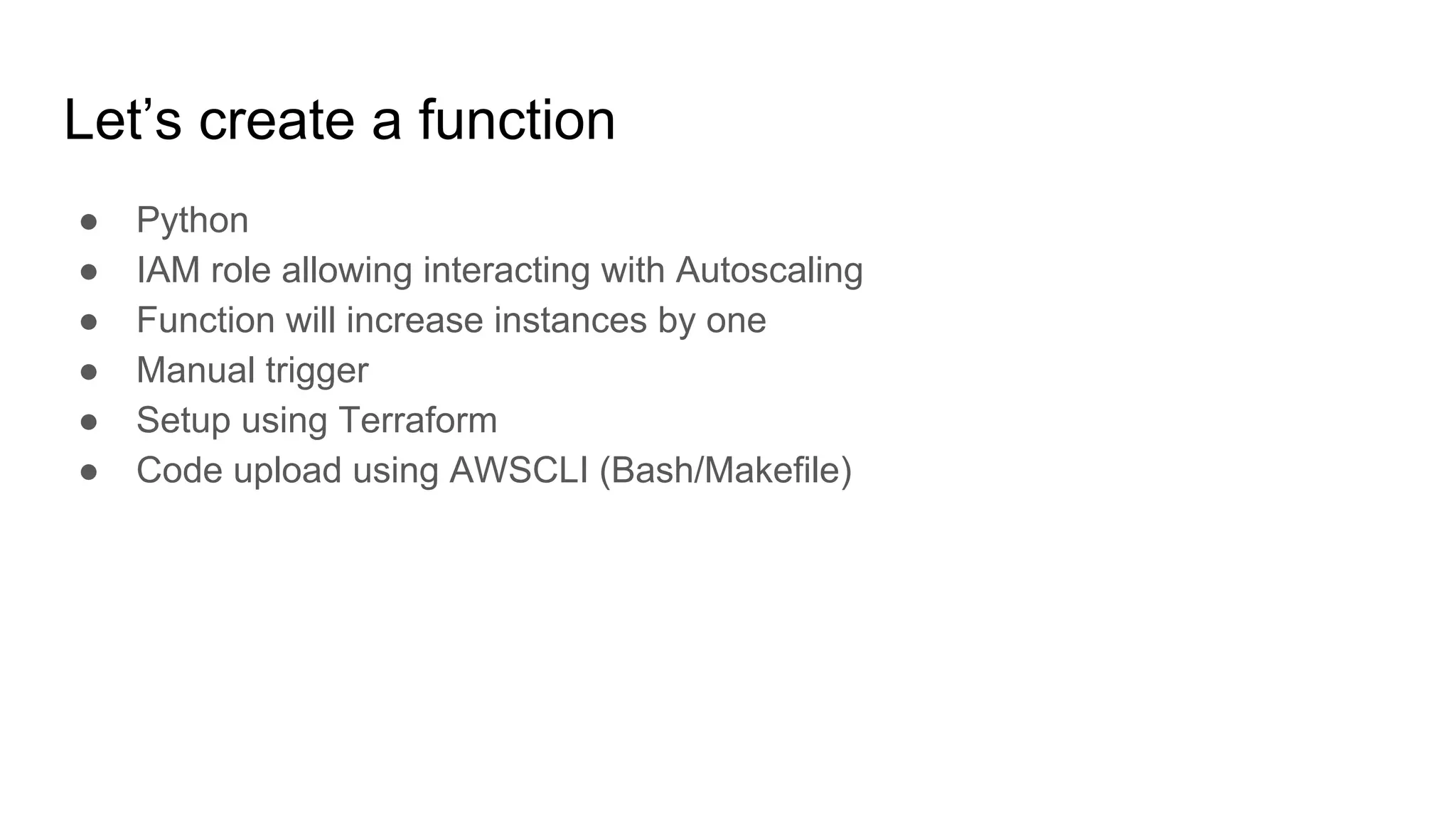

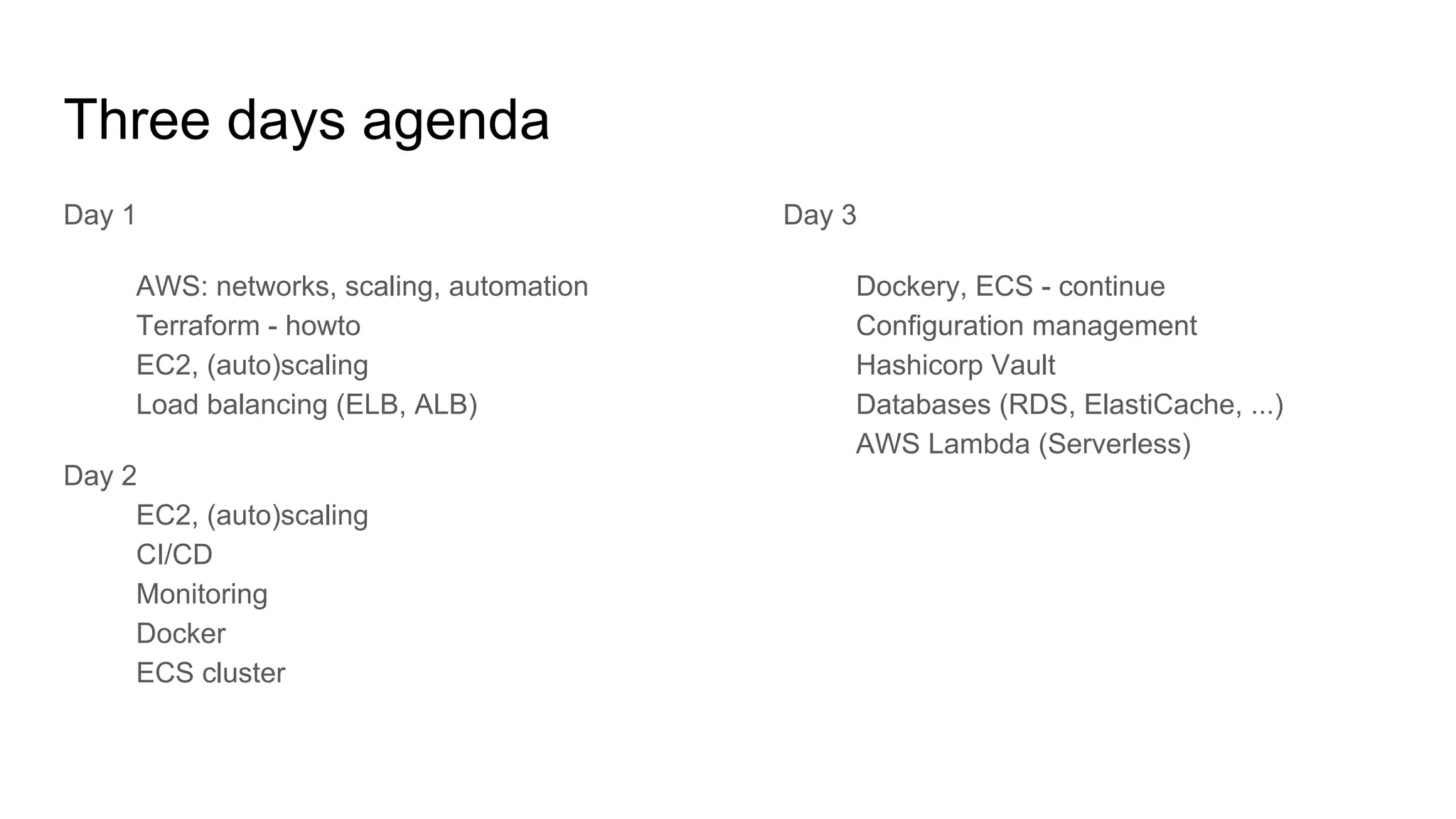

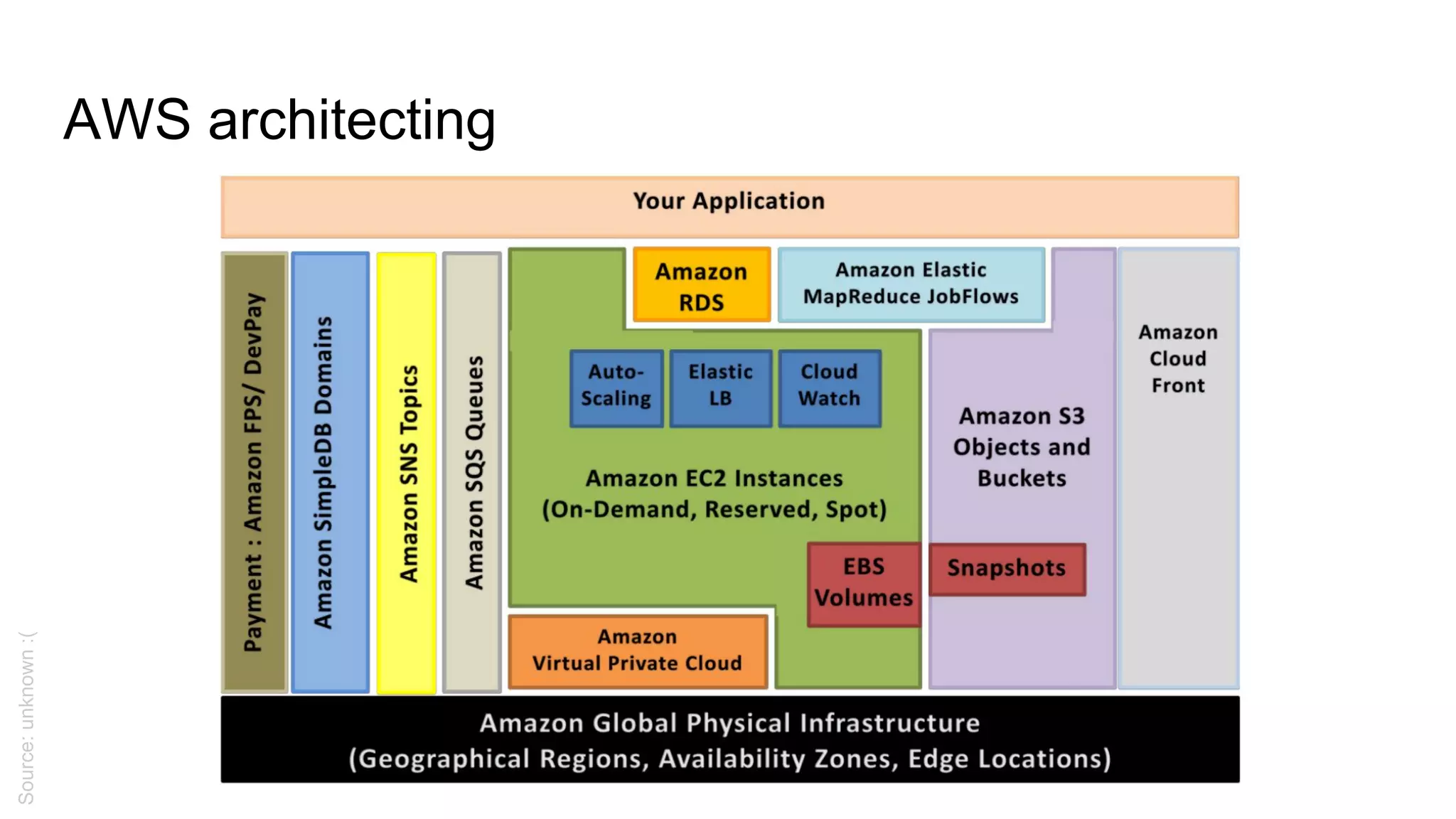

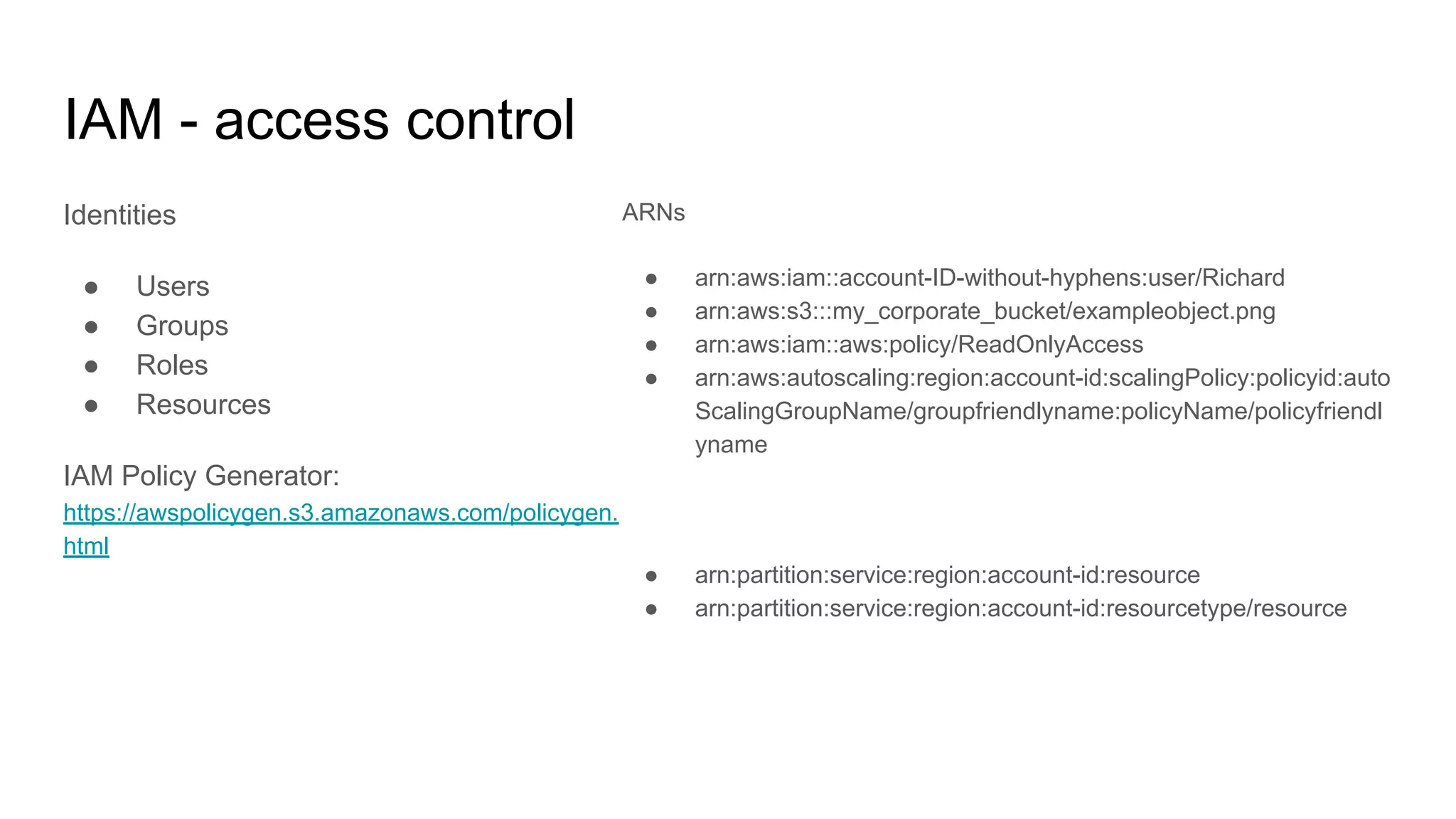

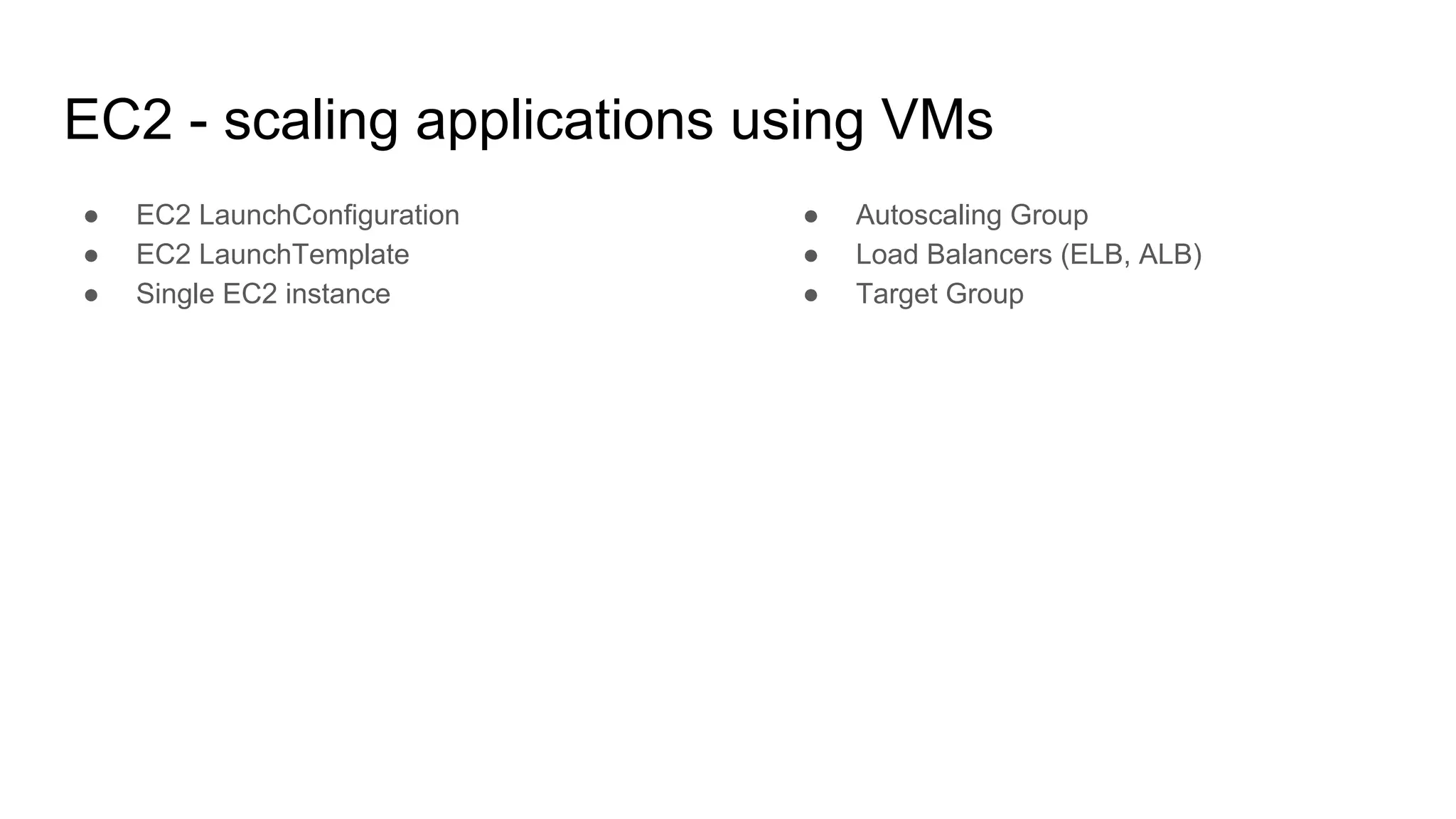

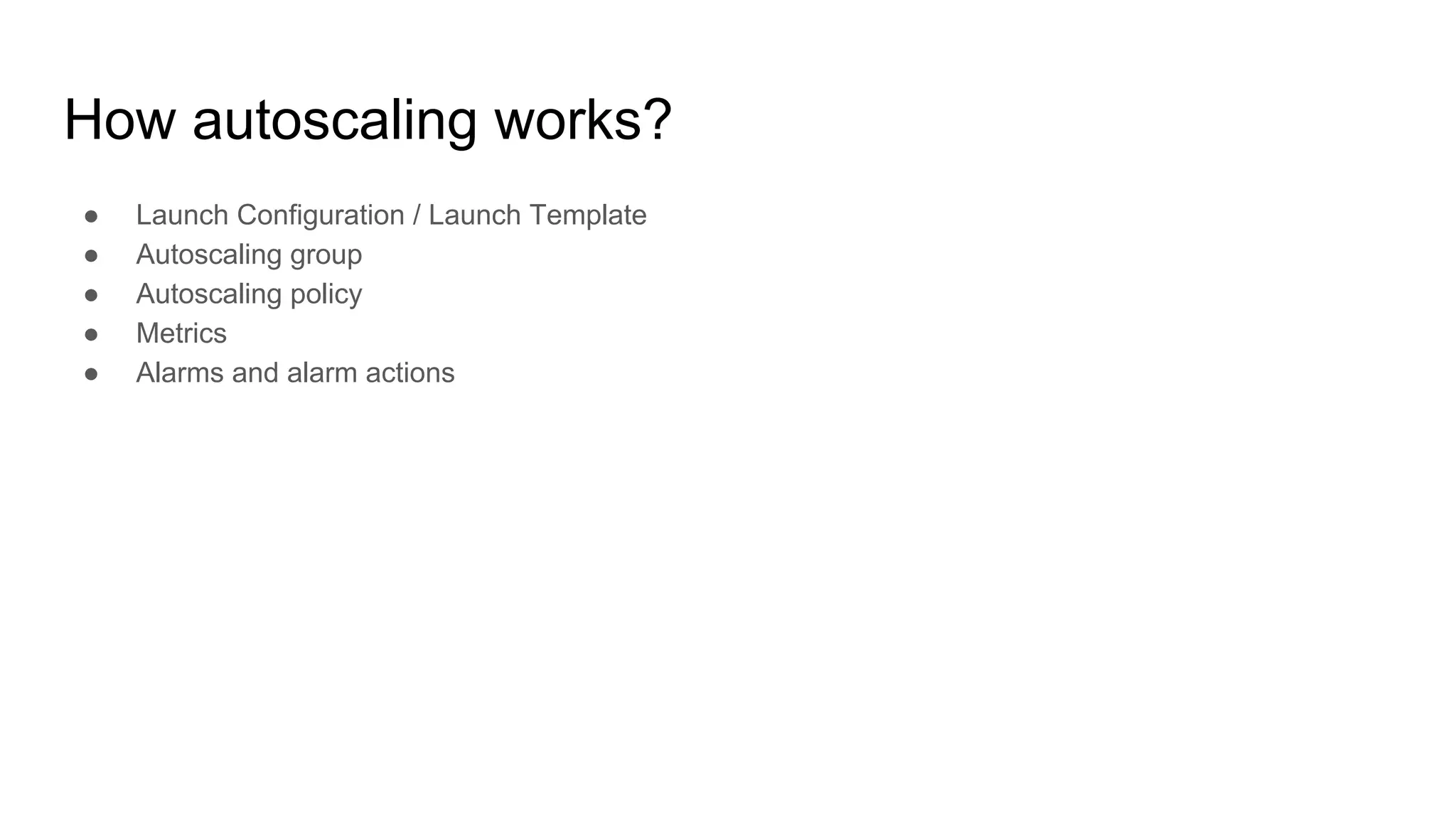

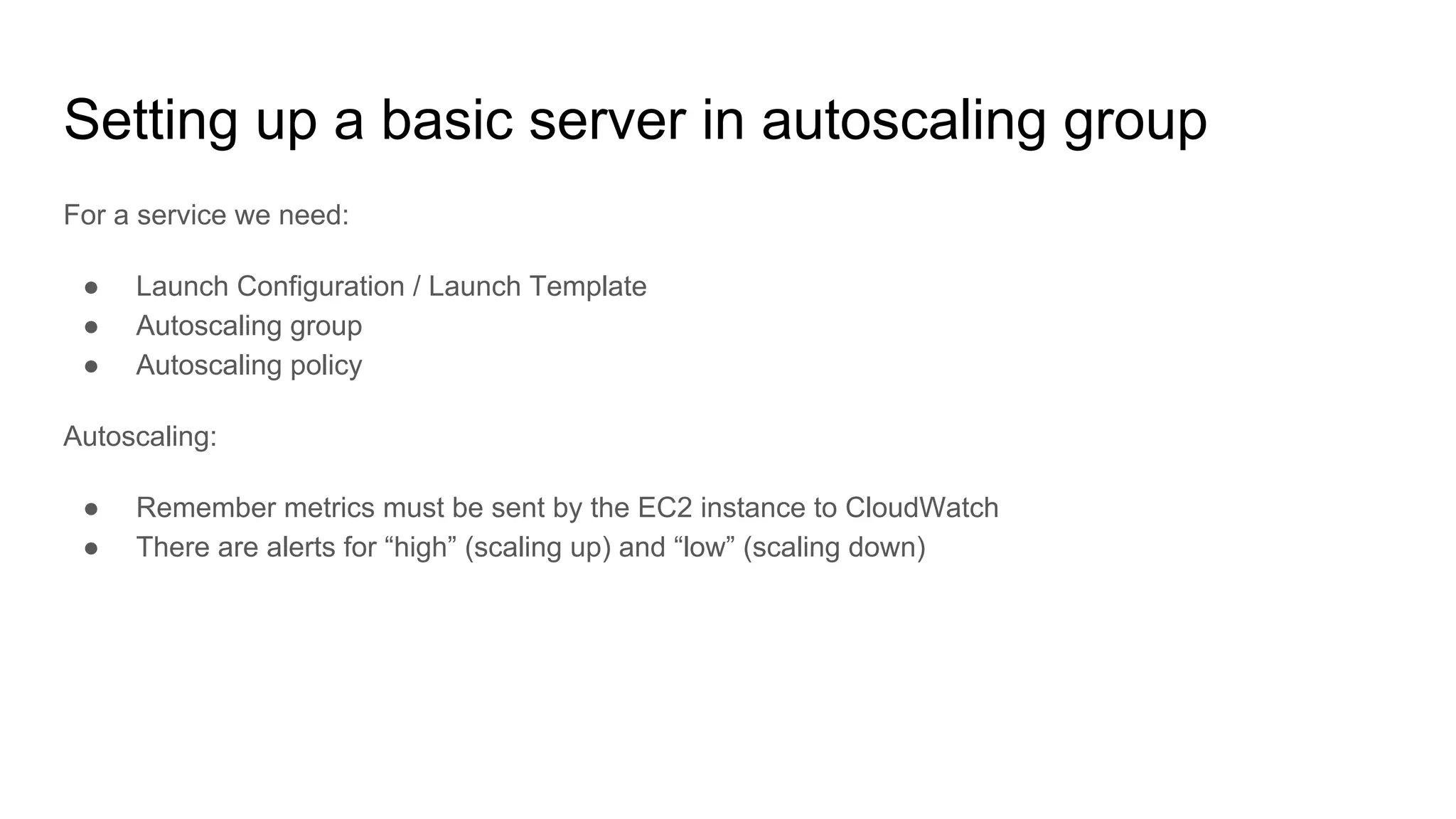

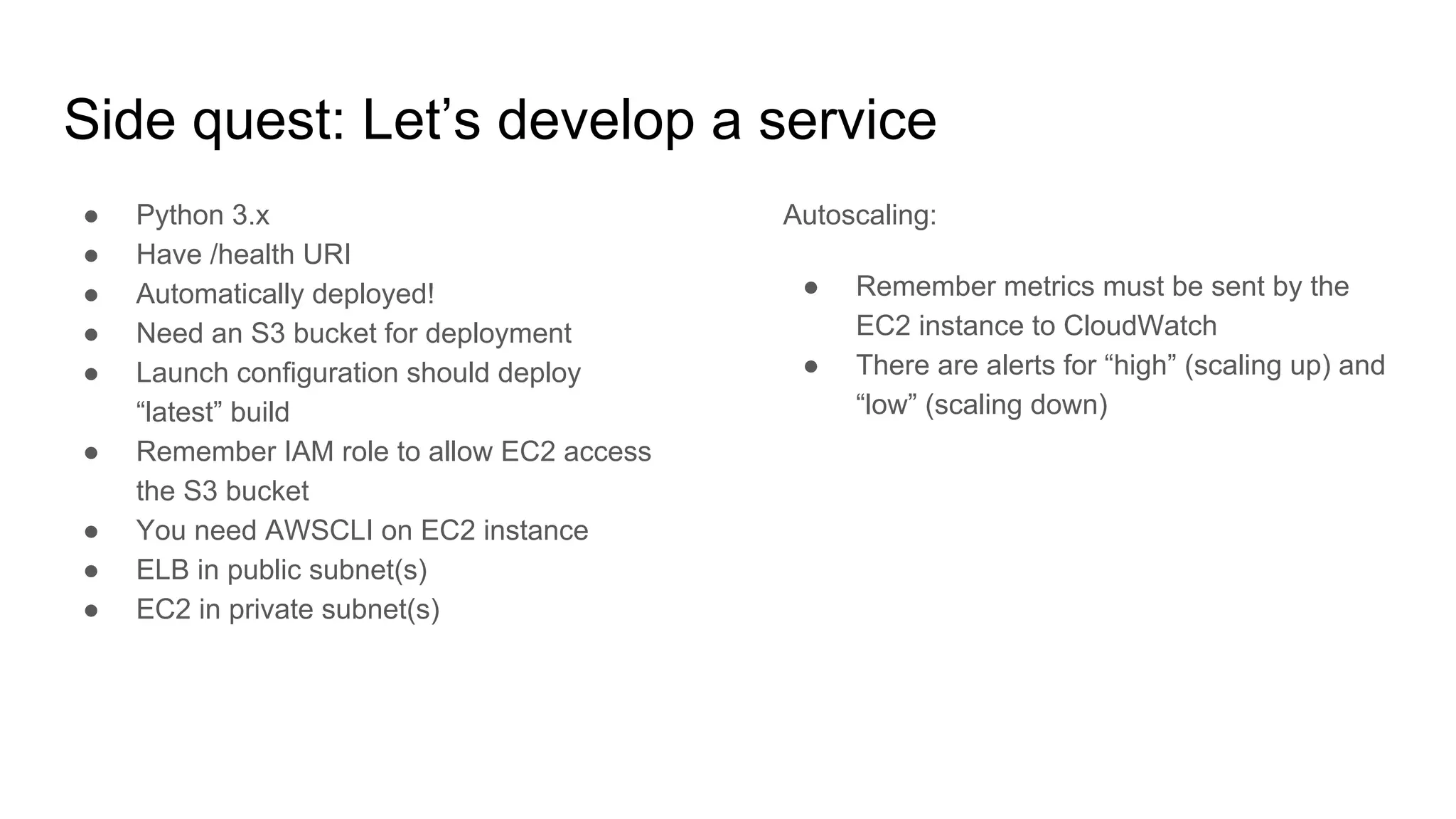

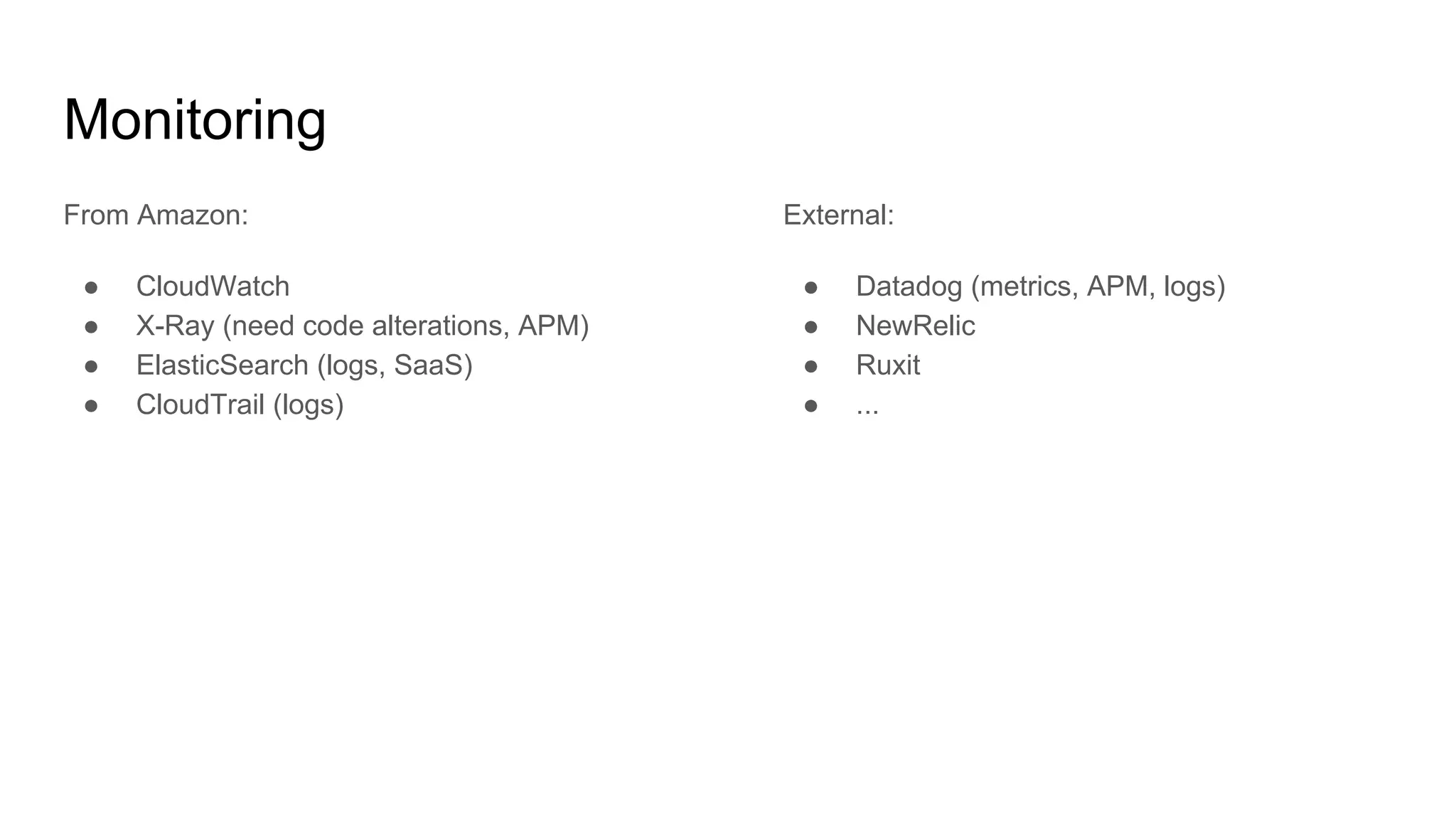

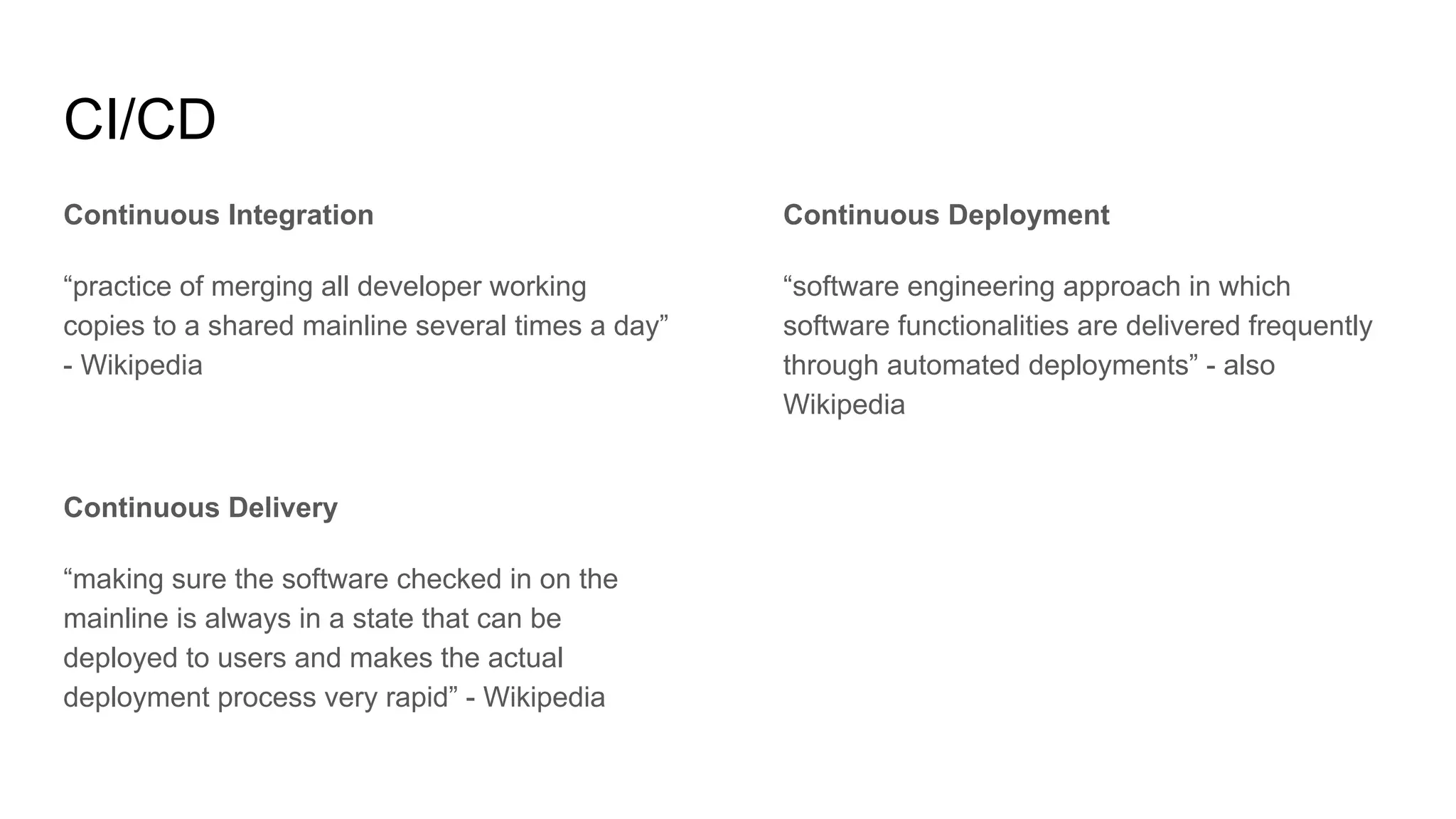

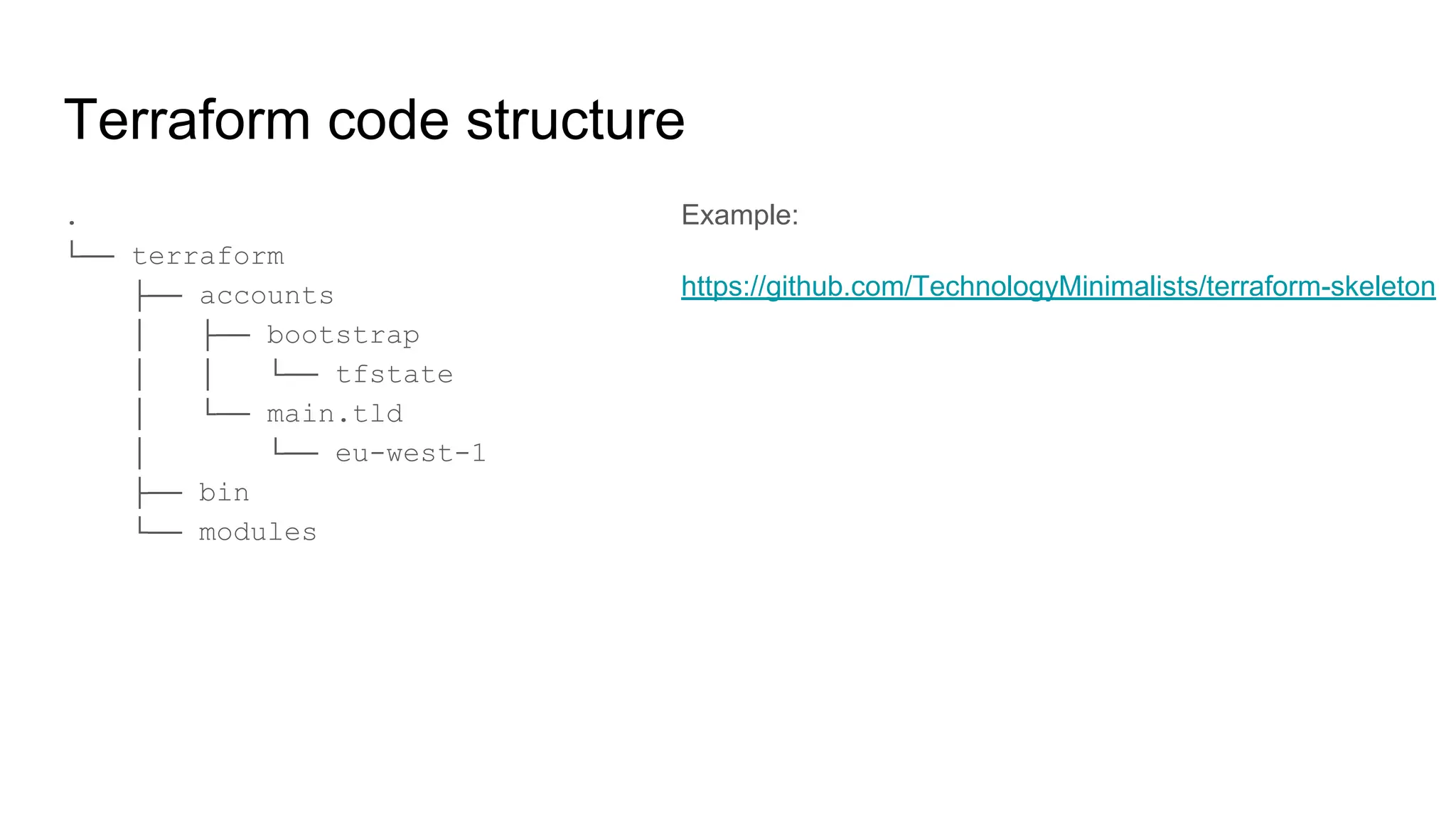

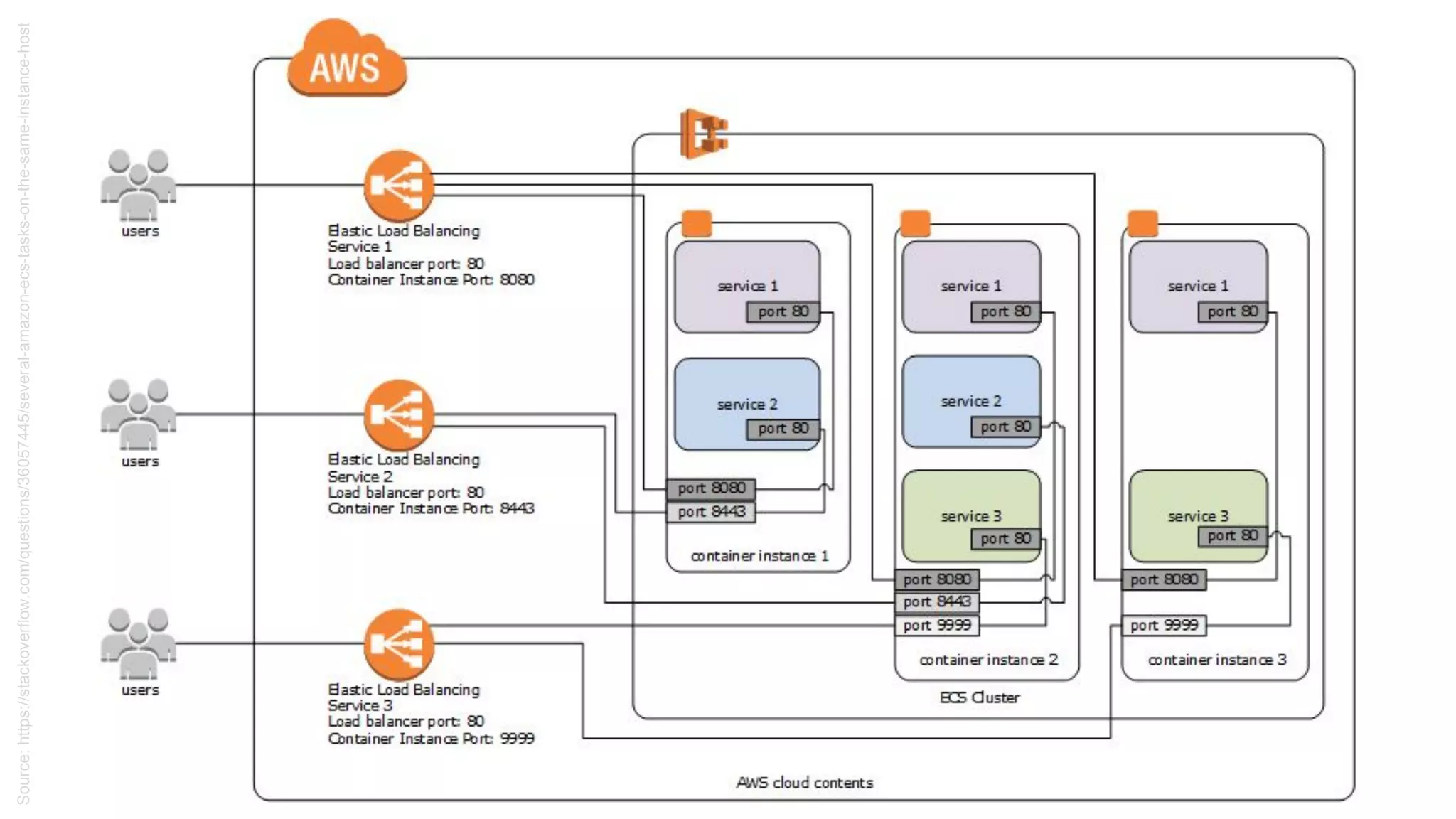

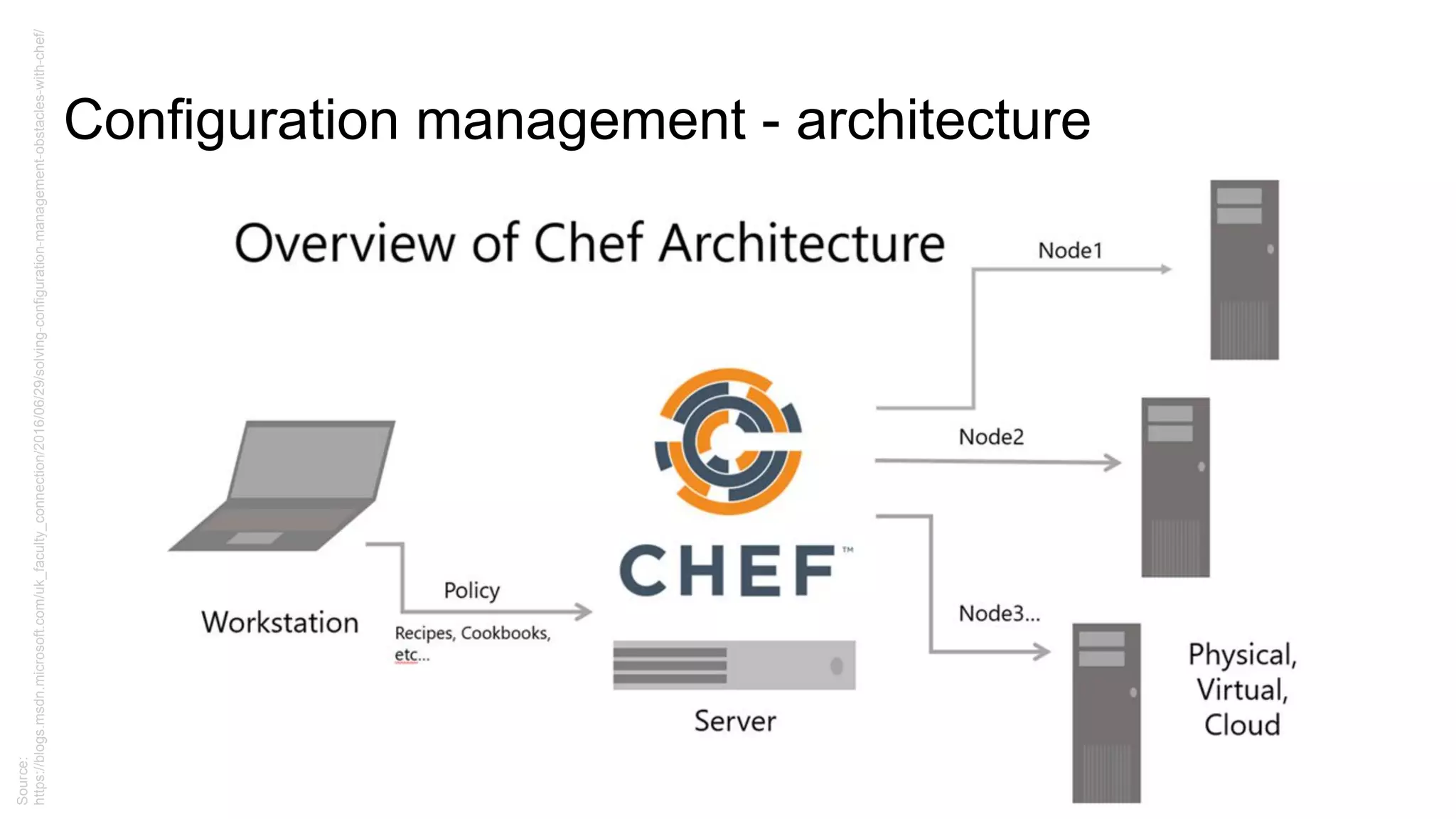

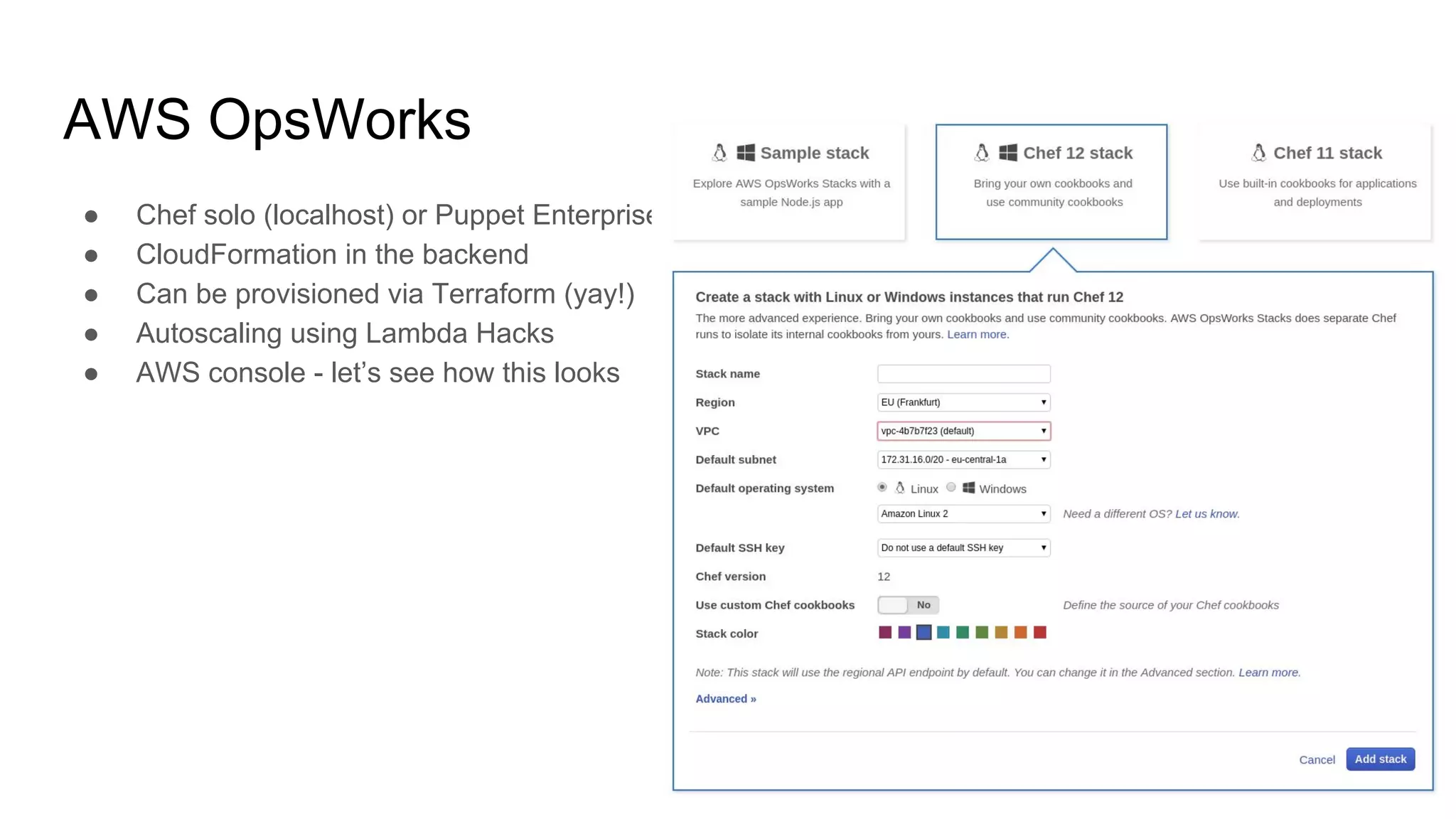

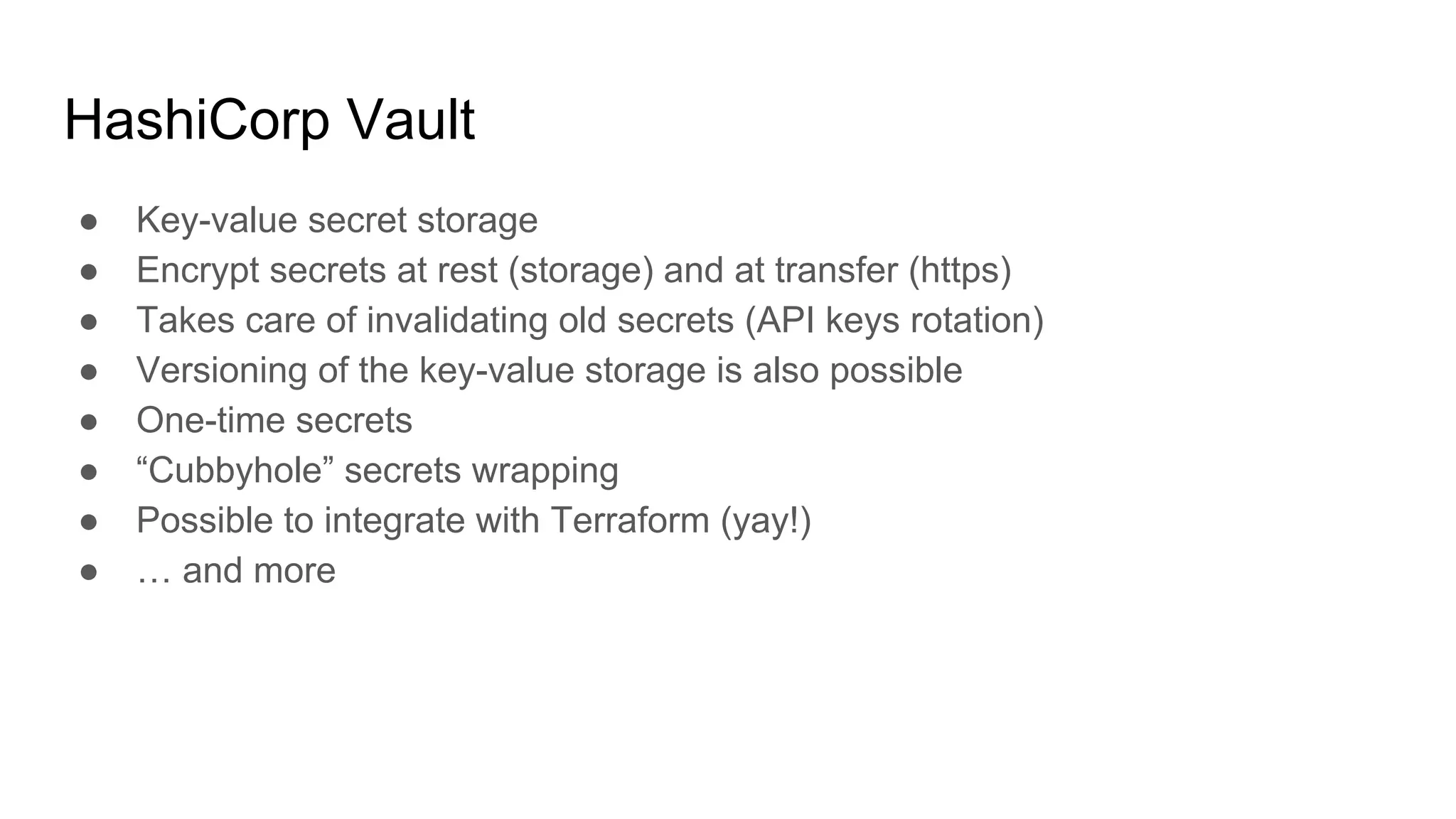

This document provides an agenda and notes for a 3-day training on AWS, Terraform, and advanced techniques. Day 1 covers AWS networking, scaling techniques, and automation with Terraform, including setting up EC2 instances, autoscaling groups, and load balancers. Day 2 continues with EC2 autoscaling and introduces Docker, ECS, monitoring, and continuous integration/delivery. Topics include IAM, VPC networking, NAT gateways, EC2, autoscaling policies, ECS clusters, Docker antipatterns, monitoring of servers, applications, and logs, and Terraform code structure. Day 3 covers Docker, ECS, configuration management, Vault, databases, Lambda, and other advanced AWS and DevOps topics.

![IAM - examples

{

"Version": "2012-10-17",

"Statement": [

{

"Effect": "Allow",

"Action": [

"ec2:AttachVolume",

"ec2:DetachVolume"

],

"Resource": [

"arn:aws:ec2:*:*:volume/*",

"arn:aws:ec2:*:*:instance/*"

],

"Condition": {

"ArnEquals":

{"ec2:SourceInstanceARN":

"arn:aws:ec2:*:*:instance/<INSTANCE-ID>"}

}

}

]

}

{

"Version": "2012-10-17",

"Statement": {

"Effect": "Allow",

"Action":

"<SERVICE-NAME>:<ACTION-NAME>",

"Resource": "*",

"Condition": {

"DateGreaterThan":

{"aws:CurrentTime": "2017-07-01T00:00:00Z"},

"DateLessThan": {"aws:CurrentTime":

"2017-12-31T23:59:59Z"}

}

}

}](https://image.slidesharecdn.com/00-190625102242/75/AWS-DevOps-Terraform-Docker-HashiCorp-Vault-16-2048.jpg)
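The second policy above limits an action to a time window by combining `DateGreaterThan` and `DateLessThan` on `aws:CurrentTime`. As a rough illustration only (this is not how AWS evaluates policies internally, and the helper name `in_policy_window` is made up for this sketch), the check amounts to the following:

```python
from datetime import datetime, timezone

# Bounds copied from the Condition block of the second policy.
NOT_BEFORE = datetime(2017, 7, 1, 0, 0, 0, tzinfo=timezone.utc)
NOT_AFTER = datetime(2017, 12, 31, 23, 59, 59, tzinfo=timezone.utc)


def in_policy_window(now: datetime) -> bool:
    """Return True when `now` satisfies both date conditions.

    Hypothetical helper: every operator inside one Condition block has to
    match, so the two comparisons are combined with a logical AND.
    """
    return NOT_BEFORE < now < NOT_AFTER


if __name__ == "__main__":
    # A date inside the window, then one after it.
    print(in_policy_window(datetime(2017, 8, 15, tzinfo=timezone.utc)))
    print(in_policy_window(datetime(2018, 1, 1, tzinfo=timezone.utc)))
```

Outside the window the `Allow` statement simply does not apply, so the request falls back to the implicit deny unless another statement allows it.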

![Docker

● Single process

● No logs inside container

● No IP address for container

● Small images

● Use Dockerfile or Packer

● NO security credentials in container

● … but put your code in there

● Don’t use “latest” tag

● Don’t run as root user

● Stateless services - no dependencies across containers

FROM ubuntu:18.04

RUN apt-get update && apt-get -y upgrade && DEBIAN_FRONTEND=noninteractive apt-get -y install apache2 php7.2 php7.2-mysql libapache2-mod-php7.2 curl lynx

EXPOSE 80

ENTRYPOINT ["/bin/sh"]

CMD ["/usr/sbin/apache2ctl", "-D", "FOREGROUND"]](https://image.slidesharecdn.com/00-190625102242/75/AWS-DevOps-Terraform-Docker-HashiCorp-Vault-21-2048.jpg)
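Two of the rules on this slide ("Don't use latest tag" and "Don't run as root user") are easy to check mechanically. The following is a minimal sketch, assuming a simplified Dockerfile syntax (no `--platform` flags on `FROM`, no multi-line instructions); `lint_dockerfile` is a hypothetical helper, not part of Docker or any linter.

```python
from typing import List


def lint_dockerfile(text: str) -> List[str]:
    """Return warnings for an unpinned base image and a missing non-root USER."""
    warnings = []
    has_non_root_user = False
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        parts = line.split()
        instruction = parts[0].upper()
        if instruction == "FROM" and len(parts) > 1:
            image = parts[1]
            # Look for the tag only in the last path component, so a
            # registry port such as host:5000/app is not mistaken for a tag.
            name = image.rsplit("/", 1)[-1]
            tag = name.split(":", 1)[1] if ":" in name else "latest"
            if tag == "latest":
                warnings.append(f"{image}: pin a version instead of 'latest'")
        elif instruction == "USER" and len(parts) > 1:
            if parts[1] not in ("root", "0"):
                has_non_root_user = True
    if not has_non_root_user:
        warnings.append("no non-root USER instruction: container runs as root")
    return warnings


# Shortened version of the Dockerfile from the slide.
SAMPLE = """\
FROM ubuntu:18.04
EXPOSE 80
CMD ["/usr/sbin/apache2ctl", "-D", "FOREGROUND"]
"""

if __name__ == "__main__":
    for warning in lint_dockerfile(SAMPLE):
        print(warning)
```

Run against the slide's Dockerfile, the sketch accepts the pinned `ubuntu:18.04` tag but reports the missing `USER` instruction.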

![Terraform - basic EC2 instance

● Single EC2 instance in a public subnet

● t2.micro

● SSH open

● Must create SSH key in AWS

resource "aws_instance" "ssh_host" {

ami = "ami-0bdf93799014acdc4"

instance_type = "t2.micro"

key_name = "${aws_key_pair.admin.key_name}"

subnet_id = "${aws_subnet.public.id}"

vpc_security_group_ids = [

"${aws_security_group.allow_ssh.id}",

"${aws_security_group.allow_all_outbound.id}",

]

tags {

Name = "SSH bastion"

}

}](https://image.slidesharecdn.com/00-190625102242/75/AWS-DevOps-Terraform-Docker-HashiCorp-Vault-32-2048.jpg)

![NAT gateway and private subnets

resource "aws_subnet" "private_a" {

vpc_id = "${aws_vpc.main.id}"

cidr_block = "10.100.10.0/24"

map_public_ip_on_launch = false

availability_zone = "eu-central-1a"

tags {

Name = "Terraform main VPC, private subnet zone A"

}

}

resource "aws_nat_gateway" "natgw_a" {

allocation_id = "${element(aws_eip.nateip.*.id, 0)}"

subnet_id = "${aws_subnet.public_a.id}"

depends_on = ["aws_internet_gateway.default"]

}](https://image.slidesharecdn.com/00-190625102242/75/AWS-DevOps-Terraform-Docker-HashiCorp-Vault-36-2048.jpg)
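When planning private subnets such as `10.100.10.0/24`, it helps to confirm that each block fits inside the VPC range and does not overlap its neighbours. The sketch below uses Python's standard `ipaddress` module. The VPC CIDR `10.100.0.0/16` is an assumption, since the slides do not show it, and both helper names are hypothetical.

```python
import ipaddress

# Assumption: the VPC CIDR is not shown on the slides; 10.100.0.0/16 is a guess.
VPC_CIDR = ipaddress.ip_network("10.100.0.0/16")
# Taken from the aws_subnet.private_a resource on the slide.
PRIVATE_A = ipaddress.ip_network("10.100.10.0/24")


def fits_in_vpc(subnet, vpc) -> bool:
    """True when every address of `subnet` lies inside `vpc`."""
    return subnet.subnet_of(vpc)


def overlaps_any(candidate, existing) -> bool:
    """True when `candidate` shares addresses with any network in `existing`."""
    return any(candidate.overlaps(net) for net in existing)


if __name__ == "__main__":
    print(fits_in_vpc(PRIVATE_A, VPC_CIDR))
    # A candidate block for a second private subnet (hypothetical value).
    candidate = ipaddress.ip_network("10.100.11.0/24")
    print(overlaps_any(candidate, [PRIVATE_A]))
```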

![EC2 instance, security group, ssh key (bastion host)

resource "aws_security_group" "allow_ssh" {

name = "allow_ssh"

description = "Allow inbound SSH traffic"

vpc_id = "${aws_vpc.main.id}"

ingress {

from_port = 22

to_port = 22

protocol = "tcp"

cidr_blocks = ["0.0.0.0/0"]

}

egress {

from_port = 0

to_port = 0

protocol = "-1"

cidr_blocks = ["0.0.0.0/0"]

}

}

resource "aws_key_pair" "admin" {

key_name = "admin-key"

public_key = "${var.ssh_key}"

}

resource "aws_instance" "ssh_host" {

ami = "ami-0bdf93799014acdc4"

instance_type = "t2.micro"

key_name = "${aws_key_pair.admin.key_name}"

subnet_id = "${aws_subnet.public_a.id}"

vpc_security_group_ids = [

"${aws_security_group.allow_ssh.id}",

"${aws_security_group.allow_all_outbound.id}",

]

tags {

Name = "SSH bastion"

}

}](https://image.slidesharecdn.com/00-190625102242/75/AWS-DevOps-Terraform-Docker-HashiCorp-Vault-37-2048.jpg)

![Launch configuration

resource "aws_launch_configuration" "as_conf" {

image_id = "${data.aws_ami.ubuntu.id}"

instance_type = "${var.instance_type}"

key_name = "${aws_key_pair.admin.key_name}"

user_data = "${data.template_file.init.rendered}"

security_groups = [

"${aws_security_group.http_server_public.id}",

"${aws_security_group.allow_ssh_ip.id}",

"${aws_security_group.allow_all_outbound.id}",

]

iam_instance_profile = "${aws_iam_instance_profile.ec2_default.name}"

associate_public_ip_address = "${var.associate_public_ip_address}"

}](https://image.slidesharecdn.com/00-190625102242/75/AWS-DevOps-Terraform-Docker-HashiCorp-Vault-39-2048.jpg)

![Autoscaling group

resource "aws_autoscaling_group" "application" {

name = "ASG"

launch_configuration = "${aws_launch_configuration.as_conf.name}"

vpc_zone_identifier = [

"${aws_subnet.private_a.id}",

"${aws_subnet.private_b.id}",

"${aws_subnet.private_c.id}"

]

min_size = "${var.min_size}"

max_size = "${var.max_size}"

load_balancers = ["${aws_elb.default-elb.name}"]

termination_policies = ["OldestInstance"]

tag {

key = "Name"

value = "EC2-sample-service"

propagate_at_launch = true

}

}](https://image.slidesharecdn.com/00-190625102242/75/AWS-DevOps-Terraform-Docker-HashiCorp-Vault-40-2048.jpg)

![Metric and alarm action

resource "aws_cloudwatch_metric_alarm" "cpu_utilization_high" {

alarm_name = "cpu-utilization"

comparison_operator = "GreaterThanOrEqualToThreshold"

evaluation_periods = "2"

metric_name = "CPUUtilization"

namespace = "AWS/EC2"

period = "60"

statistic = "Average"

threshold = "80"

dimensions {

AutoScalingGroupName = "${aws_autoscaling_group.application.name}"

}

alarm_description = "CPU Utilization high"

alarm_actions = ["${aws_autoscaling_policy.scale_up.arn}"]

}](https://image.slidesharecdn.com/00-190625102242/75/AWS-DevOps-Terraform-Docker-HashiCorp-Vault-42-2048.jpg)
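The alarm above fires when the average CPU utilization is at or above 80 for 2 consecutive 60-second periods. A simplified sketch of that rule follows; it ignores CloudWatch details such as missing-data handling, and `alarm_fires` is a hypothetical helper rather than an AWS API.

```python
from typing import Sequence


def alarm_fires(
    datapoints: Sequence[float],
    threshold: float = 80.0,
    evaluation_periods: int = 2,
) -> bool:
    """Mimic GreaterThanOrEqualToThreshold over the most recent periods.

    `datapoints` holds one average per period, oldest first. The alarm
    fires only when each of the last `evaluation_periods` values is at or
    above `threshold`.
    """
    if len(datapoints) < evaluation_periods:
        return False
    recent = datapoints[-evaluation_periods:]
    return all(value >= threshold for value in recent)


if __name__ == "__main__":
    print(alarm_fires([50.0, 85.0, 90.0]))  # last two periods are both high
    print(alarm_fires([85.0, 90.0, 70.0]))  # the latest period dropped below 80
```

Requiring two periods rather than one keeps a single short CPU spike from triggering the `scale_up` policy.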

![Jenkins - Jenkinsfile example

node("master") {

stage("Prep") {

deleteDir() // Clean up the workspace

checkout scm

withCredentials([file(credentialsId: 'tfvars', variable: 'tfvars')]) {

sh "cp $tfvars terraform.tfvars"

}

sh "terraform init --get=true"

}

stage("Plan") {

sh "terraform plan -out=plan.out -no-color"

}

if (env.BRANCH_NAME == "master") {

stage("Apply") {

input 'Do you want to apply this plan?'

sh "terraform apply -no-color plan.out"

}

}

}](https://image.slidesharecdn.com/00-190625102242/75/AWS-DevOps-Terraform-Docker-HashiCorp-Vault-56-2048.jpg)

![More on modules

ECS cluster

module "ecs-cluster" {

source = "azavea/ecs-cluster/aws"

version = "2.0.0"

vpc_id = "${aws_vpc.main.id}"

instance_type = "t2.small"

key_name = "blah"

root_block_device_type = "gp2"

root_block_device_size = "10"

health_check_grace_period = "600"

desired_capacity = "1"

min_size = "0"

max_size = "2"

enabled_metrics = [...]

private_subnet_ids = [...]

project = "Something"

environment = "Staging"

lookup_latest_ami = "true"

}

● It’s worth investing time in modules tailored to your needs, but there are great ones ready to use

● It takes time to understand how a module works

● … but less time than creating your own

● Not everything should be a module (do NOT build a securityGroupModuleFactory)

● Group important things together](https://image.slidesharecdn.com/00-190625102242/75/AWS-DevOps-Terraform-Docker-HashiCorp-Vault-59-2048.jpg)

![ECS task definition

resource "aws_ecs_task_definition" "main" {

family = "some-name"

container_definitions = "${var.task_definition}"

task_role_arn = "${var.task_role_arn}"

network_mode = "${var.task_network_mode}"

cpu = "${var.task_cpu}"

memory = "${var.task_memory}"

requires_compatibilities = ["${var.service_launch_type}"]

execution_role_arn = "${var.execution_role_arn}"

}

https://github.com/TechnologyMinimalists/aws-containers-task-definitions

[{

"environment": [{

"name": "SECRET",

"value": "KEY"

}],

"essential": true,

"memoryReservation": 128,

"cpu": 10,

"image": "nginx:latest",

"name": "nginx",

"portMappings": [

{

"hostPort": 80,

"protocol": "tcp",

"containerPort": 80

}

]

}]](https://image.slidesharecdn.com/00-190625102242/75/AWS-DevOps-Terraform-Docker-HashiCorp-Vault-63-2048.jpg)
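Because `container_definitions` is passed to Terraform as a JSON string, a stray brace only surfaces at apply time. A quick local check can catch it earlier. This is a minimal sketch, assuming the container definition shown above with balanced braces; `check_container_definitions` and the `REQUIRED_KEYS` set are illustrative choices, not an ECS or Terraform API.

```python
import json

# The container definition from the slide, written with balanced braces.
CONTAINER_DEFINITIONS = """
[{
  "environment": [{"name": "SECRET", "value": "KEY"}],
  "essential": true,
  "memoryReservation": 128,
  "cpu": 10,
  "image": "nginx:latest",
  "name": "nginx",
  "portMappings": [
    {"hostPort": 80, "protocol": "tcp", "containerPort": 80}
  ]
}]
"""

# Keys this sketch insists on; a choice made for illustration only.
REQUIRED_KEYS = {"name", "image"}


def check_container_definitions(raw: str) -> list:
    """Parse the JSON and make sure each container has the required keys."""
    definitions = json.loads(raw)  # raises json.JSONDecodeError on bad syntax
    if not isinstance(definitions, list):
        raise ValueError("container definitions must be a JSON array")
    for definition in definitions:
        missing = REQUIRED_KEYS - definition.keys()
        if missing:
            raise ValueError(f"missing keys: {sorted(missing)}")
    return definitions


if __name__ == "__main__":
    for container in check_container_definitions(CONTAINER_DEFINITIONS):
        print(container["name"], container["image"])
```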

![ECS - service

resource "aws_ecs_service" "awsvpc_alb" {

name = "service_name"

cluster = "ecs_cluster_id"

task_definition = "aws_ecs_task_definition"

desired_count = "1"

load_balancer {

target_group_arn = "${aws_alb_target_group}"

container_name = "${thename}"

container_port = "80"

}

launch_type = "${var.service_launch_type}"

network_configuration {

security_groups = ["${security_groups}"]

subnets = ["${subnets}"]

}

}](https://image.slidesharecdn.com/00-190625102242/75/AWS-DevOps-Terraform-Docker-HashiCorp-Vault-64-2048.jpg)

![HashiCorp Vault + Consul - setting up Consul

{

"acl_datacenter": "dev1",

"server": true,

"datacenter": "dev1",

"data_dir": "/var/lib/consul",

"disable_anonymous_signature": true,

"disable_remote_exec": true,

"encrypt": "Owpx3FUSQPGswEAeIhcrFQ==",

"log_level": "DEBUG",

"enable_syslog": true,

"start_join": ["192.168.33.10",

"192.168.33.20", "192.168.33.30"],

"services": []

}

# consul agent -server -bootstrap-expect=1 -data-dir /var/lib/consul/data -bind=192.168.33.10 -enable-script-checks=true -config-dir=/etc/consul/bootstrap

CTRL+C when done

# systemctl start consul](https://image.slidesharecdn.com/00-190625102242/75/AWS-DevOps-Terraform-Docker-HashiCorp-Vault-81-2048.jpg)

![HashiCorp Vault - first secret

[vagrant@vault-01 ~]$ vault kv put secret/hello foo=world

Key Value

--- -----

created_time 2018-12-12T11:50:21.722423496Z

deletion_time n/a

destroyed false

version 1](https://image.slidesharecdn.com/00-190625102242/75/AWS-DevOps-Terraform-Docker-HashiCorp-Vault-85-2048.jpg)

![HashiCorp Vault - get secret

[vagrant@vault-01 ~]$ vault kv get secret/hello

====== Metadata ======

Key Value

--- -----

created_time 2018-12-12T11:50:21.722423496Z

deletion_time n/a

destroyed false

version 1

=== Data ===

Key Value

--- -----

foo world

[vagrant@vault-01 ~]$ vault kv get -format=json secret/hello](https://image.slidesharecdn.com/00-190625102242/75/AWS-DevOps-Terraform-Docker-HashiCorp-Vault-86-2048.jpg)
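The `-format=json` variant is the one to use from scripts, since the table output is meant for people. The sketch below parses such output with the standard `json` module. The embedded sample is an assumed, trimmed shape for a versioned (KV v2) mount, where the secret sits under `data.data` and the version details under `data.metadata`; verify it against your own Vault output before relying on it. The function names are hypothetical.

```python
import json

# Assumed, trimmed shape of `vault kv get -format=json secret/hello` on a
# KV v2 mount; real output carries more fields (request_id, warnings, ...).
SAMPLE_OUTPUT = """
{
  "data": {
    "data": {"foo": "world"},
    "metadata": {
      "created_time": "2018-12-12T11:50:21.722423496Z",
      "deletion_time": "",
      "destroyed": false,
      "version": 1
    }
  }
}
"""


def read_secret(raw: str) -> dict:
    """Return the key/value pairs of the secret from the JSON output."""
    payload = json.loads(raw)
    return payload["data"]["data"]


def secret_version(raw: str) -> int:
    """Return the version number recorded in the metadata block."""
    payload = json.loads(raw)
    return payload["data"]["metadata"]["version"]


if __name__ == "__main__":
    print(read_secret(SAMPLE_OUTPUT))
    print(secret_version(SAMPLE_OUTPUT))
```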

![HashiCorp Vault - token create

[vagrant@vault-01 ~]$ vault token create

Key Value

--- -----

token s.4fQYZpivxLRZVYGhjpTQm1Ob

token_accessor XYOqtACs0aatIkUBgAcI6qID

token_duration ∞

token_renewable false

token_policies ["root"]

identity_policies []

policies ["root"]](https://image.slidesharecdn.com/00-190625102242/75/AWS-DevOps-Terraform-Docker-HashiCorp-Vault-87-2048.jpg)

![HashiCorp Vault - login using token

[vagrant@vault-01 ~]$ vault login s.hAnm1Oj9YYoDtxkqQVkLyxr7

Success! You are now authenticated. The token information displayed below is already stored

in the token helper. You do NOT need to run "vault login" again. Future Vault requests will

automatically use this token.

Key Value

--- -----

token s.hAnm1Oj9YYoDtxkqQVkLyxr7

token_accessor 6bPASelFhdZ2ClSzwfq31Ucr

token_duration ∞

token_renewable false

token_policies ["root"]

identity_policies []

policies ["root"]](https://image.slidesharecdn.com/00-190625102242/75/AWS-DevOps-Terraform-Docker-HashiCorp-Vault-88-2048.jpg)

![HashiCorp Vault - token revoke

[vagrant@vault-01 ~]$ vault token revoke s.6WYXXVRPNmEKfaXfnyAjcMsR

Success! Revoked token (if it existed)

See more on auth: https://learn.hashicorp.com/vault/getting-started/authentication](https://image.slidesharecdn.com/00-190625102242/75/AWS-DevOps-Terraform-Docker-HashiCorp-Vault-89-2048.jpg)