This document describes extending the Parallel Fortran Unit Test framework (pFUnit) to support unit testing of Coarray Fortran (CAF) code. It introduces new CAF classes in pFUnit for parameterized CAF tests, setting test parameters like number of images, and collective communication routines. An example is given of a simple CAF code to distribute workload across images and its corresponding unit test using the extended pFUnit framework. The extension allows leveraging benefits of unit testing and test-driven development for scientific software written in CAF.

![Abdullahi Hassan, Cardellini, and Filippone

MPI, the parallel features are easier to follow, the code tends to be far shorter, and the impact of the parallel

statements is much smaller.

CAF started as an extension of Fortran for parallel processing and is now part of the Fortran 2008 standard.

CAF adopts the PGAS model for SPMD parallel programming, where multiple “images” share their address

space; the shared space is partitioned, and each image has a local portion. The standard is very careful not

to constrain what an “image” should be, so as to allow different implementations to make use of underlying

threads and/or processes. From a logical, user-centered point of view, each CAF program is replicated across

images, and the images execute independently until they reach a synchronization point, either explicit or

implicit. The number of images is not specified in the source code, and can be chosen at compile, link or

run-time, depending on the particular implementation of the language. Images are assigned unique indices

through which the user can control the flow of the program by conditional statements, similar to common

usage in MPI. Coherently with the language default rules, image indices range from 1 to a maximum index

which can be retrieved at runtime through an appropriate intrinsic function num_images(). Each image

has its own set of data objects; some of these objects may also be declared as coarray objects, meaning that

they can be accessed by other images as well. Coarrays are declared with a so-called codimension, indicated

by square brackets. The codimension spans the space of all the images:

i n t e g e r : : i [ ∗ ]

real : : a ( 1 0 ) [ ∗ ]

real : : b ( 0 : 9 ) [ 0 : 4 , ∗ ]

As already mentioned, one of the main advantages of CAF over MPI is its simplicity. Much of the parallel

bookkeeping is handled behind the scenes by the compiler, and the resulting parallel code is shorter and

more readable; this reduces the chances of injecting defects in the code. As an example, let us consider the

burden of passing a non-contiguous array (for example, a matrix row in Fortran) using MPI_DATATYPE:

! Define v e c t o r

c a l l MPI_Type_vector ( n , nb , n , MPI_REAL , myvector , i e r r )

c a l l MPI_Type_commit ( myvector , i e r r )

! Communicate

i f ( n_rank == 0) then

c a l l MPI_Send ( a , 1 , myvector ,1 ,100 , MPI_COMM_WORLD, i e r r )

endif

i f ( n_rank == 1) then

c a l l MPI_Recv ( a , 1 , myvector ,0 ,100 , MPI_COMM_WORLD, mystatus , i e r r )

endif

c a l l MPI_Type_free ( myvector , i e r r )

All of the above code is equivalent to just a single CAF statement:

a ( 1 , : ) [ 2 ] = a ( 1 , : ) [ 1 ]

with no need to write separate code for sending and receiving a message.

Currently, the number of compilers implementing CAF has increased: the Intel and Cray compilers sup-

port it. The GCC compiler provides CAF support via a communication library; the base GCC distribution

only handles a single image, but the OpenCoarrays project provides an MPI-based and a GASNet-based

communication library (Fanfarillo, Burnus, Cardellini, Filippone, Nagle, and Rouson 2014).

2.2 Unit Test and Test-Driven Development

Software testing can be applied at different levels: unit tests are fine-grained tests, focusing on small portions

of the code (a single module, function or subroutine) (Osherove 2015), while regression tests are coarse-](https://image.slidesharecdn.com/aframeworkforunittestingwithcoarrayfortran-230806142249-ff3a5e9b/75/A-Framework-For-Unit-Testing-With-Coarray-Fortran-3-2048.jpg)

![Abdullahi Hassan, Cardellini, and Filippone

grained tests, that encompass a large portion of the implementation and are used to verify that the software

still performs correctly after a modification.

Many scientific softwares rely on regression, disregarding unit tests. This choice presents several disadvan-

tages, spanning from the impossibility to perform verification in the early stages of software’s lifecycle, to

difficulties in locating the error responsible for failure, and to a long time required to run tests.

Additionally, the search for performance through parallelism increases complexity of the code and may

cause new classes of errors: race conditions and deadlocks are two such examples.

Race conditions occur when multiple images try to access concurrently the same resource. For example, in

the code fragment:

count [1]=0

do i =1 , n

count [ 1 ] = count [ 1 ] + 1

enddo

the final value of the variable count[1] is not uniquely determined by the code, and depends upon the

order in which the various images execute the statements.

A deadlock occurs when an image is waiting for an event that cannot possibly occur, e.g., when two images

wait for each other; as a consequence, the program makes no further progress. With CAF a deadlock occurs

in the following example:

i f ( this_image == 1) then

sync images ( num_images ( ) )

endif

because image 1 is waiting for synchronization with the last image, but the last image is not executing the

matching sync statement.

These new classes of errors enforce the need for systematic unit testing.

Test-Driven-Development (TDD) is a software development practice relying strongly on unit tests. TDD

combines a test-first approach with refactoring: before actually implementing the code, the programmer

writes automated unit tests for the functionality to be implemented.

Writing unit tests is a time-consuming process, and if the associated effort is perceived to be excessive, the

programmer may be inclined to reduce or skip it altogether. Unit testing frameworks are tools intended to

help the developers to write, execute and review tests and results more efficiently. Many testing frameworks

exist; they are often called “xUnit” frameworks, where “x” stands for the (initials of the) name of the

language they are developed for.Usually, a unit testing framework provides code libraries with basic classes,

attributes and assert methods; it also includes a test runner used to automatically identify and run tests,

and provides information about their number, failures, raised exceptions, location of failures. Good testing

frameworks are critical for the acceptance of TDD.

2.3 pFUnit: A Unit Testing Framework for Parallel Fortran

Given the above discussion, it should by now be clear that a unit testing framework for Coarray Fortran

applications is a desirable tool. A good basis for a CAF compatible unit testing framework ought to have

certain characteristics. First, it should be conceived for the Computational Science & Engineering (CSE)

and HPC development communities. The ideal framework should also handle parallelism and should be

able to detect the peculiar errors caused by concurrently execution of different images. Additionally, it must

be easy to extend, for example through object-oriented (OO) features.](https://image.slidesharecdn.com/aframeworkforunittestingwithcoarrayfortran-230806142249-ff3a5e9b/75/A-Framework-For-Unit-Testing-With-Coarray-Fortran-4-2048.jpg)

![Abdullahi Hassan, Cardellini, and Filippone

The implementation of these collectives is not fully optimized, since they are intended to be superseded by

the runtime of the compiler, but this is not too restrictive since they will will run only once for each test

suite.

From the user perspective, CAF tests have a single, mandatory argument of type CafTestCase and with

intent(INOUT). It provides two methods getNumImages() and getImageRank(), returning the

total number of images running for the test and the rank of the current image. In CAF, test failing assertions

of any types provide information about the rank of the process (or the ranks of the processes) that detects

the failure. This permits to recover not only on the test that has failed, but on the specific image that causes

the failure.

3.1 An Example of CAF Unit Test Using pFUnit

Let us now consider a simple example of testing a CAF code with pFUnit. The example has been run

on a Linux laptop, using GCC 6.1.0, MPICH 3.2, OpenCoarrays 1.6.2 and our development version of

pFUnit. Let us assume that we have an application where the CAF images are organized in a linear array,

and our computations need to deal with elements of an index space. Let us also assume that the amount of

computation per point in the index space is constant; naturally, we want our workload to be distributed as

evenly as possible. A possible solution would be to use the following routine which determines, for each

image, the size of the local number of indices and the first index assigned to it:

subroutine d a t a _ d i s t r i b u t i o n ( n , i l , nl )

integer , i n t e n t ( in ) : : n

integer , i n t e n t ( out ) : : i l , nl

i n t e g e r : : nmi

! Compute f i r s t l o c a l index IL and number of l o c a l i n d i c e s NL . We want the data to

! be ev enly spread , i . e . , f o r a l l images ! NL must be w i t h i n 1 of n / num_images ( )

! For a l l images we should have t h a t IL [ME+1]−IL [ME]=NL

a s s o c i a t e (me=> this_image ( ) , images=>num_images ( ) )

nmi = mod( n , images )

nl = n / images + merge (1 ,0 ,me<=nmi )

i l = min ( n+1 , merge ( ( 1 + ( me−1)∗ nl ) , &

& (1+ nmi ∗( nl +1) + (me−nmi−1)∗ nl ) ,&

& me <= nmi ) )

end a s s o c i a t e

end subroutine d a t a _ d i s t r i b u t i o n

It is easy to see that on NP images, this routine will assign to each image either N/NP indices or (N/NP)+1,

as necessary to make sure the indices add up to N. To check that our routine is working properly with pFUnit

we need to specify clearly which properties our data distribution is supposed to have; in our case we have:

1. The local sizes must add up to the global size N;

2. The local sizes must differ by at most 1 from N/NP;

3. For each image me<num_images(), il[me+1]-il[me] must be equal to nl.

Checking the first property is very simple when the underlying compiler (like GNU Fortran 6.1.0) supports

the collective intrinsics:

@test ( nimgs =[ s t d ] )

subroutine t e s t _ d i s t r i b u t i o n _ 1 ( t h i s )

i m p l i c i t none

Class ( CafTestMethod ) , i n t e n t ( inout ) : : t h i s

integer , parameter : : gsz =27

i n t e ge r : : ngl , i n f o

integer , a l l o c a t a b l e : : i l [ : ] , nl [ : ]](https://image.slidesharecdn.com/aframeworkforunittestingwithcoarrayfortran-230806142249-ff3a5e9b/75/A-Framework-For-Unit-Testing-With-Coarray-Fortran-6-2048.jpg)

![Abdullahi Hassan, Cardellini, and Filippone

a l l o c a t e ( i l [ ∗ ] , nl [ ∗ ] , s t a t = i n f o )

@assertEqual ( info , 0 , " F a i l e d a l l o c a t i o n ! " )

! Set up checks

a s s o c i a t e (me=> this_image ( ) , images=>num_images ( ) )

c a l l d a t a _ d i s t r i b u t i o n ( gsz , i l , nl )

! Build r e f e r e n c e data to check a g a i n s t

ngl = nl

c a l l co_sum ( ngl )

@assertEqual ( ngl , gsz , " Sizes do not add up ! " )

end a s s o c i a t e

end subroutine t e s t _ d i s t r i b u t i o n _ 1

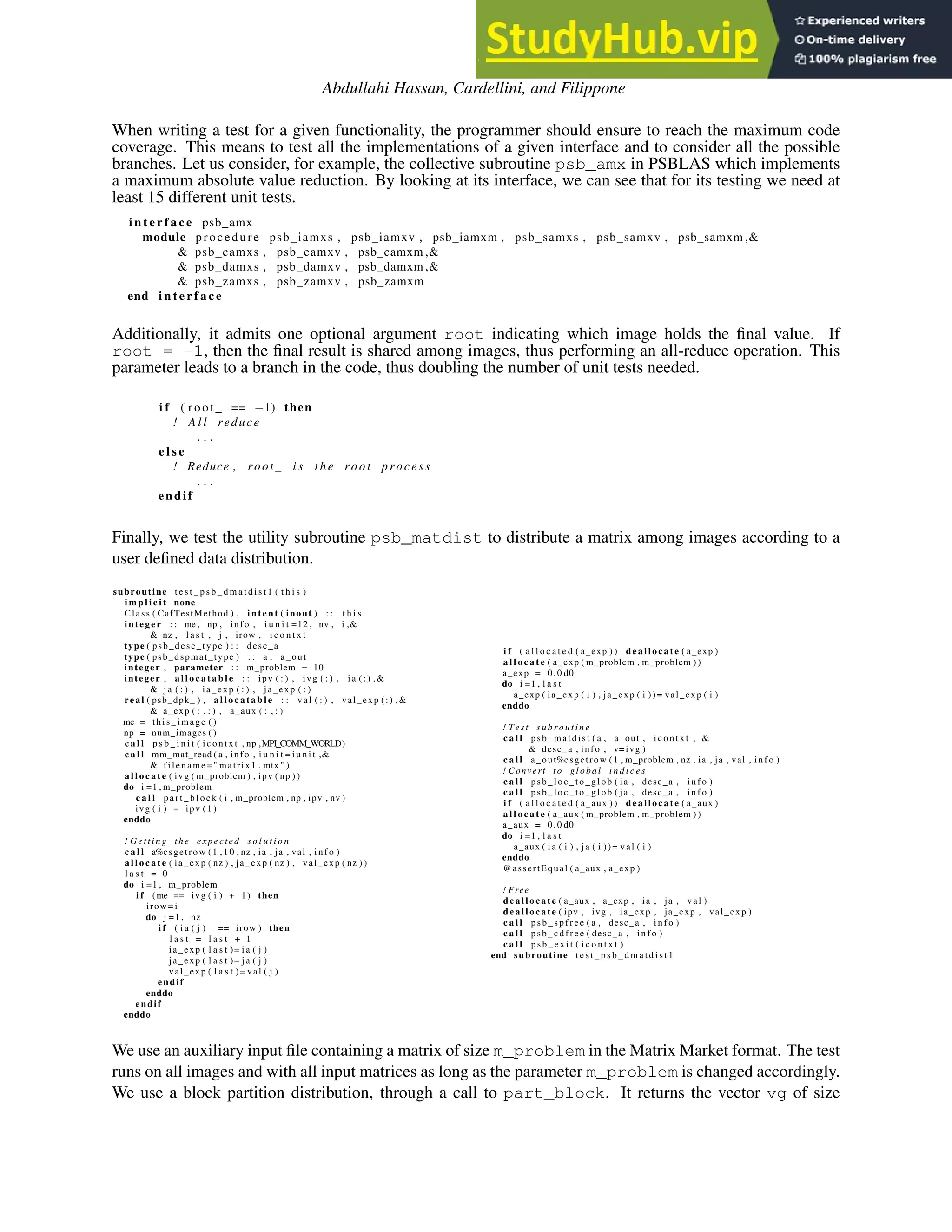

The macro @test(nimgs=[std]) indicates that the test we are running is a CAF test that runs on

all available images. The this argument is mandatory and must have intent(inout); the assertion

@assertEqual is used to verify that the actual result matches the expected output.

The coarrays remote access facilities make it very easy to check for consistency across process boundaries.

Since the OpenCoarrays installation we are using is built on top of MPICH, we execute the tests with the

mpirun command, as shown in Figure 2. The output gives us confidence that the data distribution is

computed correctly; the run with 15 processes tells us that the border case where we have more processes

than indices (in our case 13) is being handled correctly. Since the test has been run on an quad-core laptop,

running 15 MPI processes has a substantial overhead, which is entirely normal; the run with 4 processes was

very fast, as expected.

[ l o c a l h o s t CAF_pFUnit ] mpirun −np 4 . / testCAF

. . .

Time : 0.004 seconds

OK

(3 t e s t s )

[ l o c a l h o s t CAF_pFUnit ] mpirun −np 15 . / testCAF

. . .

Time : 6.207 seconds

OK

(3 t e s t s )

Figure 2: Test output

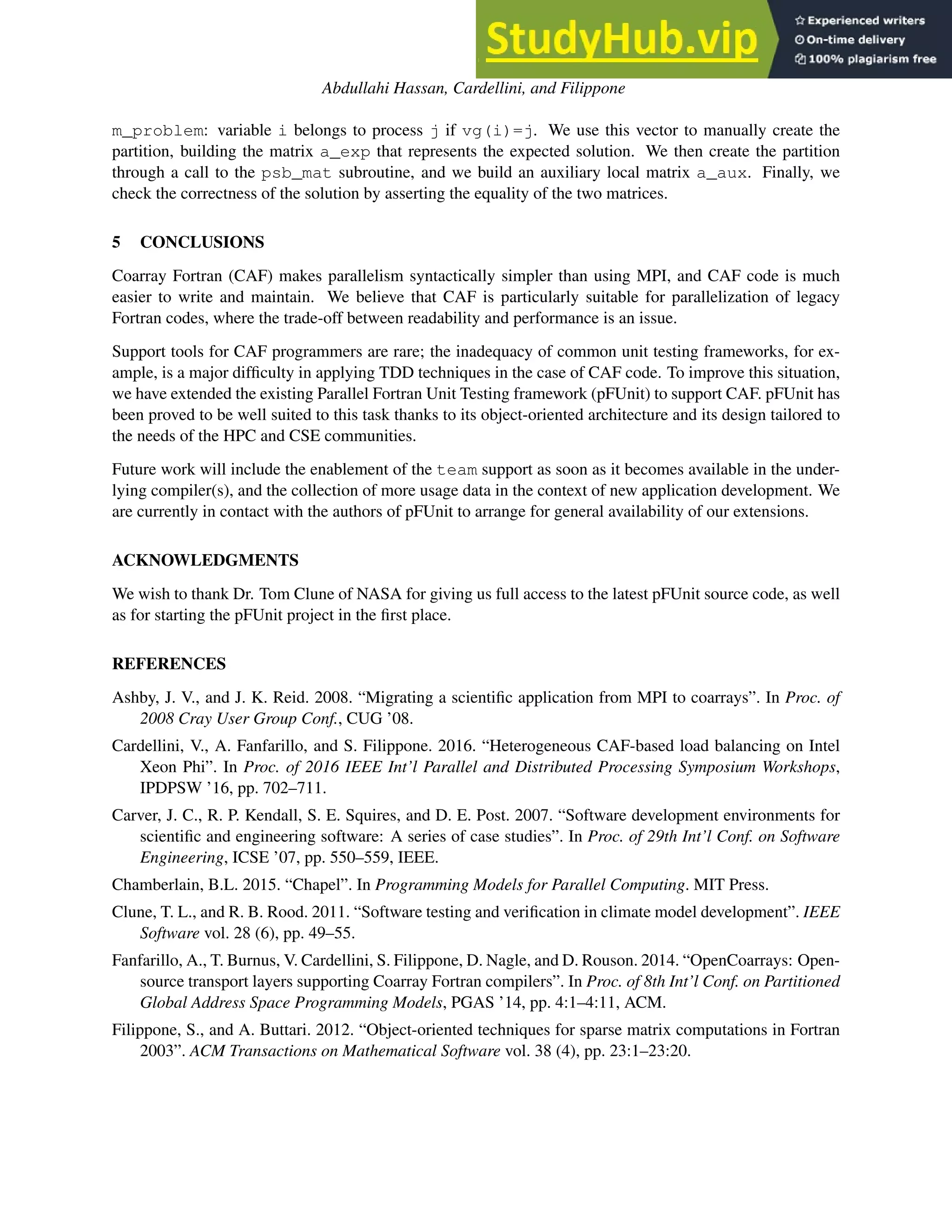

What happens if there is an error? To demonstrate this, we wrapped the data_distribution routine

injecting two errors on image 2: we alter both the local number of indices as well as the starting index. The

test output is shown in Figure 3. We get a fairly precise indication of what went wrong and where:

• An error on all images because the total size does not match the expected value;

• An error on image 2 because the local number of indices is not in the expected range

(N/NP):(N/NP)+1;

• An error on images 1 and 2, because having injected an error in the start index on image 2 affects

the checks on both of these images.

3.2 Limitations: Team Support

It is often desirable to be able to run tests using varying number of processes/images; this is because some

bugs reveal themselves only when running on a certain number of processes. In pFUnit, the user is al-](https://image.slidesharecdn.com/aframeworkforunittestingwithcoarrayfortran-230806142249-ff3a5e9b/75/A-Framework-For-Unit-Testing-With-Coarray-Fortran-7-2048.jpg)

![Abdullahi Hassan, Cardellini, and Filippone

[ l o c a l h o s t CAF_pFUnit ] mpirun −np 4 . / testCAF

. F . F . F

Time : 0.013 seconds

F a i l u r e in : C A F _ d i s t r i b u t i o n _ t e s t _ m o d _ s u i t e . t e s t _ d i s t r i b u t i o n _ 1 [ nimgs =4][ nimgs =4]

Location : [ testCAF . pf : 2 5 ]

D i s t r i b u t i o n does not add up ! expected 13 but found : 17; d i f f e r e n c e : | 4 | . (IMG=1)

. . . . . . . . . .

F a i l u r e in : C A F _ d i s t r i b u t i o n _ t e s t _ m o d _ s u i t e . t e s t _ d i s t r i b u t i o n _ 2 [ nimgs =4][ nimgs =4]

Location : [ testCAF . pf : 5 0 ]

One image i s g e t t i n g too many e n t r i e s expected 4 to be l e s s than or equal to : 1. (IMG=2)

F a i l u r e in : C A F _ d i s t r i b u t i o n _ t e s t _ m o d _ s u i t e . t e s t _ d i s t r i b u t i o n _ 3 [ nimgs =4][ nimgs =4]

Location : [ testCAF . pf : 8 1 ]

S t a r t i n d i c e s not c o n s i s t e n t expected 4 but found : −4; d i f f e r e n c e : | 8 | . (IMG=1)

F a i l u r e in : C A F _ d i s t r i b u t i o n _ t e s t _ m o d _ s u i t e . t e s t _ d i s t r i b u t i o n _ 3 [ nimgs =4][ nimgs =4]

Location : [ testCAF . pf : 8 1 ]

S t a r t i n d i c e s not c o n s i s t e n t expected 7 but found : 11; d i f f e r e n c e : | 4 | . (IMG=2)

FAILURES! ! !

Tests run : 3 , F a i l u r e s : 3 , E r r o r s : 0

t h e r e i s an e r r o r

Figure 3: Test output with errors

lowed to control this aspect by passing an optional argument nimgs=[<list>] (in the case of CAF) or

nprocs=[<list>] (in the case of MPI): in this way the test procedure will execute once for each item

in <list>. MPI makes this possible by constructing a sub-communicator of the appropriate size for each

execution. In principle, CAF allows for clustering of images in teams, which are meant to be similar to MPI

communicators; at any time an image executes as a member of a team (the current team). Constructs are

available to create and synchronize teams.

Unfortunately, at the time of this writing, the only compiler supporting teams is the OpenUH one (Khaldi,

Eachempati, Ge, Jouvelot, and Chapman 2015). However, this compiler does not support other standard

Fortran features; most importantly, it does not support the OO programming features, and therefore cannot

be used with pFUnit. As a result, the current version of pFUnit only allows the CAF user-defined tests to be

run with all images, and the only accepted values for the optional argument nimgs is [std].

4 CASE STUDY: PSBLAS

We illustrate a case study that shows how pFUnit can be used to detect errors. We applied pFUnit to perform

unit tests on the PSBLAS library (Filippone and Colajanni 2000) (Filippone and Buttari 2012) during the

library migration from MPI to CAF. At the time of writing, we have written a total of 12 test suites and 241

unit tests. PSBLAS is a library of Basic Linear Algebra Subroutines that implements iterative solvers for

sparse linear systems and includes subroutines for multiplying sparse matrices by dense matrices, solving

sparse triangular systems, and preprocessing sparse matrices, as well as additional routines for dense matrix

operations. It is implemented in Fortran 2003 and the current version uses message passing to address a

distributed memory execution model. We converted the code gradually from MPI to coarrays, thus having

MPI and CAF coexisting in the same code. In PSBLAS, we detected three communication patterns that had

to be modified: 1) the halo exchange, 2) the collective subroutines, and 3) the point-to-point communication

in data distribution. In PSBLAS, data allocation on the distributed-memory architecture is driven by the](https://image.slidesharecdn.com/aframeworkforunittestingwithcoarrayfortran-230806142249-ff3a5e9b/75/A-Framework-For-Unit-Testing-With-Coarray-Fortran-8-2048.jpg)

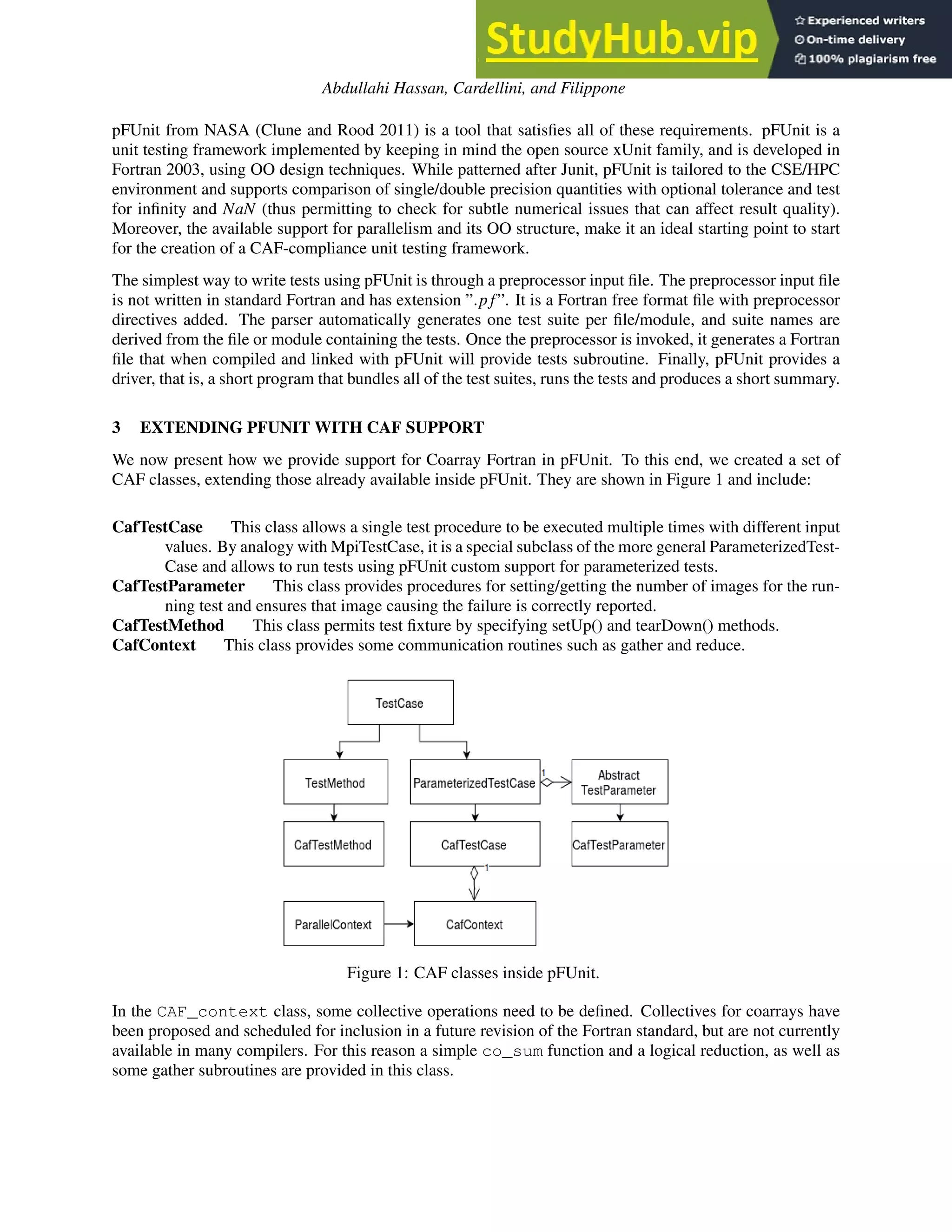

![Abdullahi Hassan, Cardellini, and Filippone

Figure 4: Example of halo exchange on 2 images.

discretization mesh of the original PDEs. Each variable is associated to one point of the discretization

mesh. If ai j ̸= 0 we say that point i depends on point j. After the partition of the discretization mesh into

subdomains, each point is assigned to a parallel process. An halo point is a point which belongs to another

domain, but there is at least one point in this domain that depends on it. Halo points are requested by other

domains when performing a computational step (for example a matrix-vector product) : every time this

happens we perform an halo exchange operation that can be considered as a sparse all-to-all communication.

The subroutine psb_halo performs such an exchange and gathers values of the halo elements: we used

our framework to unit test this procedure. We need to run the whole suite of tests multiple times if we want

to change the number of images participating in the test. To avoid that, in each test we distributed variables

not among all the available images, but only on a subset of them. In this way, while all tests run on the same

number of images, communication actually takes place only between some of the images. Of course this has

to be taken into account when asserting equality on all images (we have to do that, otherwise a deadlock can

occur). In the following example, we test the halo exchange of a vector. The variables are distributed only

between two images. When calling the assert statement, the expected solution check and the obtained

result v are multiplied by 1 if the image takes part to the communication, 0 otherwise.

@test ( nimgs =[ s t d ] )

subroutine test_psb_dhalo_2imgs_v ( t h i s )

i m p l i c i t none

Class ( CafTestMethod ) , i n t e n t ( inout ) : : t h i s

i n t e g e r : : me , t r u e

real ( psb_dpk_ ) , a l l o c a t a b l e : : v ( : ) , check ( : )

type ( psb_desc_type ) : : desc_a

! D i s t r i b u t i n g point , Creating i n p u t v e c t o r v and expected s o l u t i o n check .

. . .

! Cal l i ng the halo s u b r o u t i n e

c a l l psb_halo ( v , desc_a , i n f o )

@assertEqual (0 , info , "ERROR in psb_halo " )

i f ( ( me==1). or . ( me==2)) then

t r u e = 1

e l s e

t r u e =0

endif

@assertEqual ( t r u e ∗check , t r u e ∗v )

! Deallocate f r e e and e x i t psblas

. . .

end subroutine test_psb_dhalo_2imgs_v](https://image.slidesharecdn.com/aframeworkforunittestingwithcoarrayfortran-230806142249-ff3a5e9b/75/A-Framework-For-Unit-Testing-With-Coarray-Fortran-9-2048.jpg)