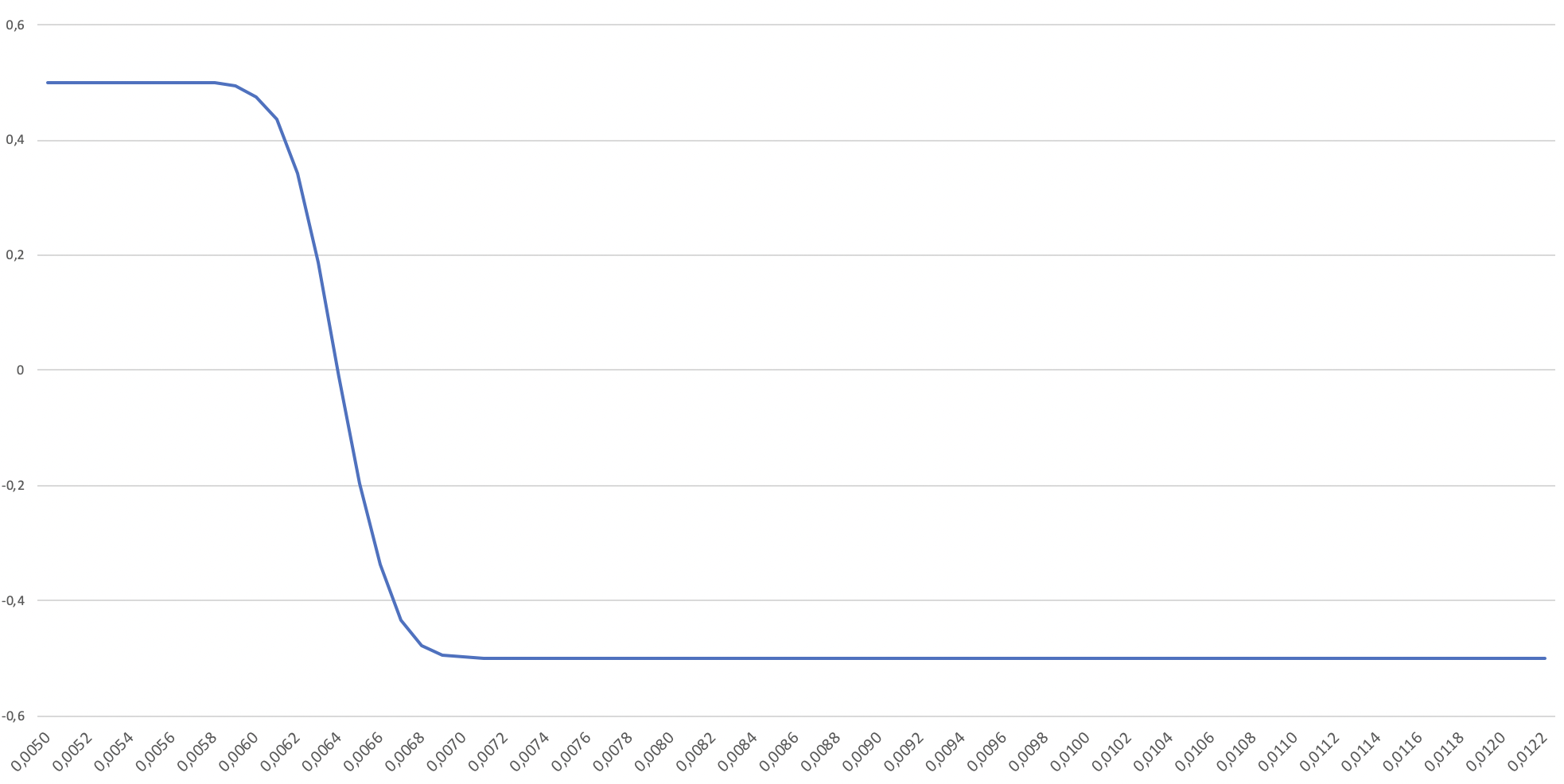

I am having a hard time finding the zero of (I think) a relatively simple function with the following form (also check attached image):

import numpy as np

from numpy.random import RandomState

from scipy import stats, optimize

state = RandomState()

def objective_function(x):

initial = np.ones(10000)

withdrawal = np.round(x, 4)

for idx in range(175):

sim_path = state.normal(1.001275, 0.004267, 10000)

initial = initial - withdrawal

initial[initial < 0] = 0

initial = initial * sim_path

percentile_cleared = 10000-sum(initial > 0)

return (5000-percentile_cleared) / 10000

I've been trying to use scipy.optimize.newton with minimal inputs:

solution = optimize.newton(objective_function, x0=0.0075)

but am actually surprised that it is so much sensitive to x0 provided. Small differences in x0 actually determine if a solution could be found or not. The solution is close to 0.0064 in that specific case, but I don't have a good way of determining it in general. And even here, providing x0 of 0.006 will not result in a correct solution.

Do you have any idea if I should provide more inputs to the solver or perhaps express my function in a different way to make it easier for the solver? Thanks a lot in advance!

initial_fvis not defined, so it isn't a working example.initial. Updated