As of today (December 2, 2025), AI Assist’s conversational search and discovery experience is fully integrated into Stack Overflow. AI Assist continues to give learners ways to understand community-verified answers and get help instantly. With the enhancements released today, logged-in users can use their saved chat history to jump back into the flow and pick up where they left off, or share conversations for collaborative problem-solving. This update also allows us to explore further integrations in the future, as described below.

The story so far

AI Assist launched as a beta in June 2025 as a standalone experience at stackoverflow.ai. We learned a great deal by observing usage, discussing it with community members on Meta, gathering input from different types of users through interviews and surveys, and reviewing feedback submitted within AI Assist. Based on what we learned, we refined the conversational experience to focus on providing maximum value and building trust in its responses. Human-verified answers from Stack Overflow and the Stack Exchange network are provided first, and LLM answers fill in any knowledge gaps when necessary. Sources are presented at the top and expanded by default, with in-line citations and direct quotes from community contributions for additional clarity and trust.

Since our last updates in September, AI Assist has been further improved in several ways:

- At least a 35% response speed improvement

- Better responsive UI

- More relevant search results

- Newer models

- Attribution on copied code

- Tailored responses, reflecting the reality that not all questions are the same:

  - Depending on the query type, AI Assist now replies using one of four structures: solution-seeking, comparative/conceptual, methodology/process, or non-technical/career.

  - Every response also includes a "Next Steps" or "Further Learning" section that gives the user something concrete to do.

What’s changed today?

While logged in to Stack Overflow, users of AI Assist can now:

- Access past conversations for reference or to pick up where they left off;

- Share conversations with others, to turn private insights into collective knowledge;

- Access AI Assist’s conversational search and discovery experience on the site’s homepage (Stack Overflow only).

Example responses from AI Assist

AI Assist at the top of the Stack Overflow homepage (logged-in)

Conversations can be shared with others

What’s next?

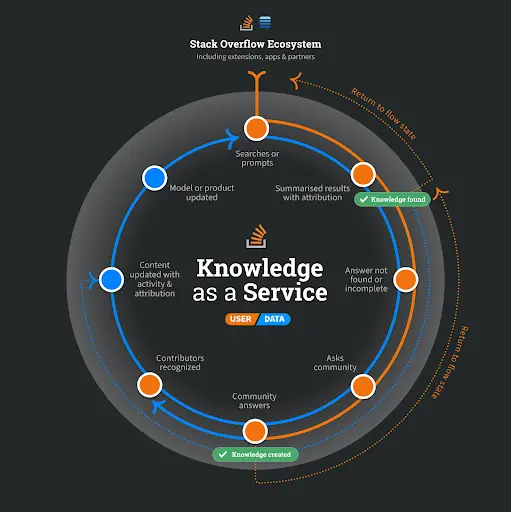

By showcasing a trusted human intelligence layer in the age of AI, we believe we can serve technologists through our mission to power learning, and affirm the value of human community and collaboration.

Our research with multiple user types shows that users value AI Assist as a tool for learning and for saving time. It fits how they already use AI tools, and they see value in deeper integration. Transparency and trust remain key expectations.

Opportunities we’ll be exploring include:

- Context awareness on question pages: making AI Assist adapt to where the user is

- Going further as a learning tool: helping users understand why an answer works, surfacing related concepts, and supporting long-term learning

- Helping more users learn how to use Stack Overflow: guiding users on how to participate and helping them meet site standards

This is not the end of our work on AI Assist, but the start of its life on-platform. Expect further iterations and improvements in the near future. We look forward to your feedback, both now and as we continue to improve it.