
Enable DDPOptimizer by default in dynamo #88523


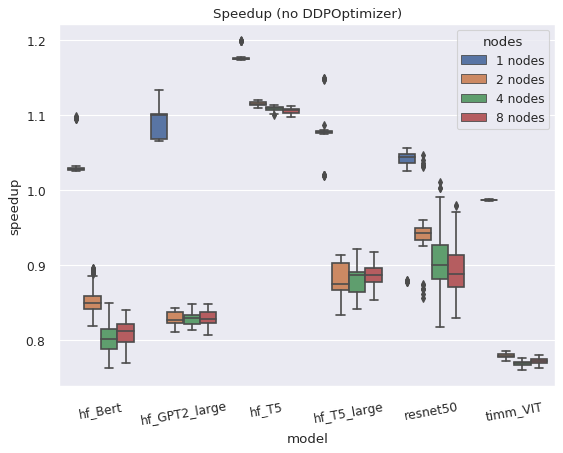

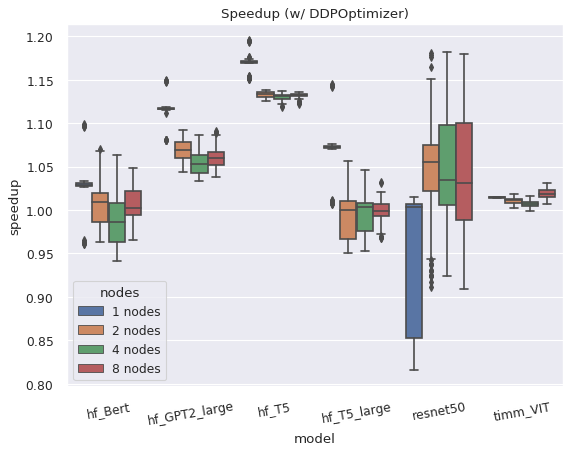

Conversation

Performance benchmarks on 6 popular models (hf_Bert, hf_T5_large, hf_T5, hf_GPT2_large, timm_vision_transformer, resnet50) from 1-64 GPUs compiled with torchinductor show performance gains or parity with eager when DDPOptimizer is enabled, and regressions without it.

TODO: WIP adding/running these checks, land afterwards.

Correctness checks are implemented in CI (test_dynamo_distributed.py), via single-gpu benchmark scripts iterating over many models (torchbench.py, timm_models.py, and huggingface.py under benchmarks/dynamo/), and via [multi-gpu benchmark scripts in torchbench](https://github.com/pytorch/benchmark/tree/main/userbenchmark/ddp_experiments).

🔗 Helpful Links

🧪 See artifacts and rendered test results at hud.pytorch.org/pr/88523

Note: Links to docs will display an error until the docs builds have been completed.

❌ 1 Failures

As of commit bba6c0a: The following jobs have failed:

This comment was automatically generated by Dr. CI and updates every 15 minutes.


Can you link to the spreadsheet of the benchmarks' performance numbers here?

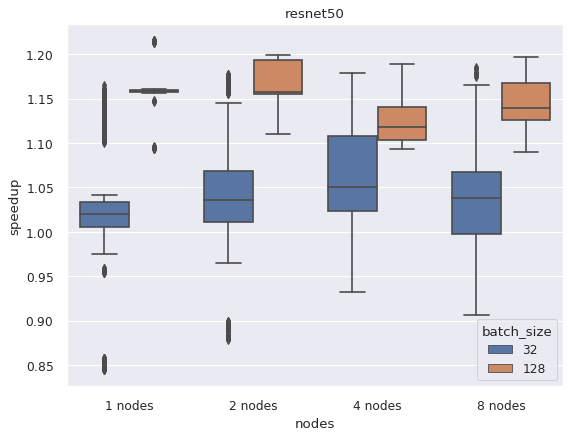

With DDPOptimizer, 1-GPU ResNet50 seems to have a slowdown (below 1.0x). Why is that?

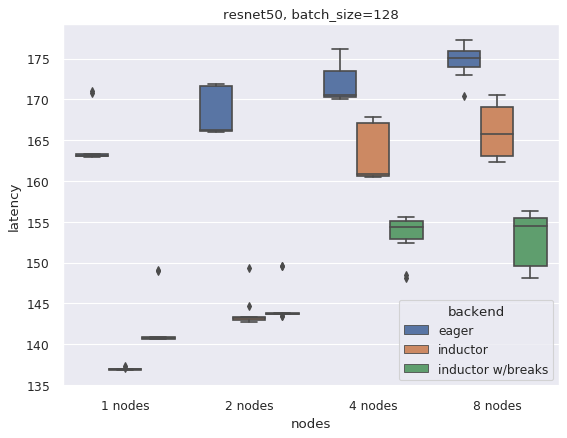

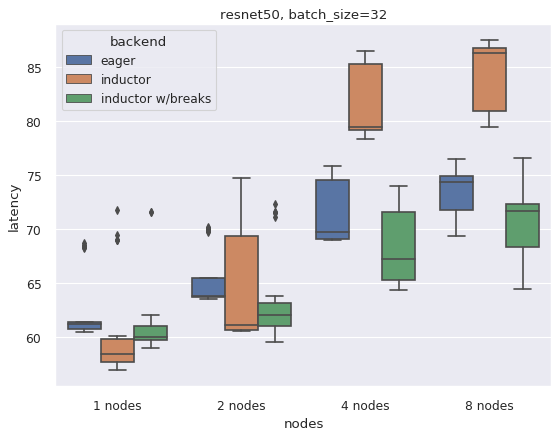

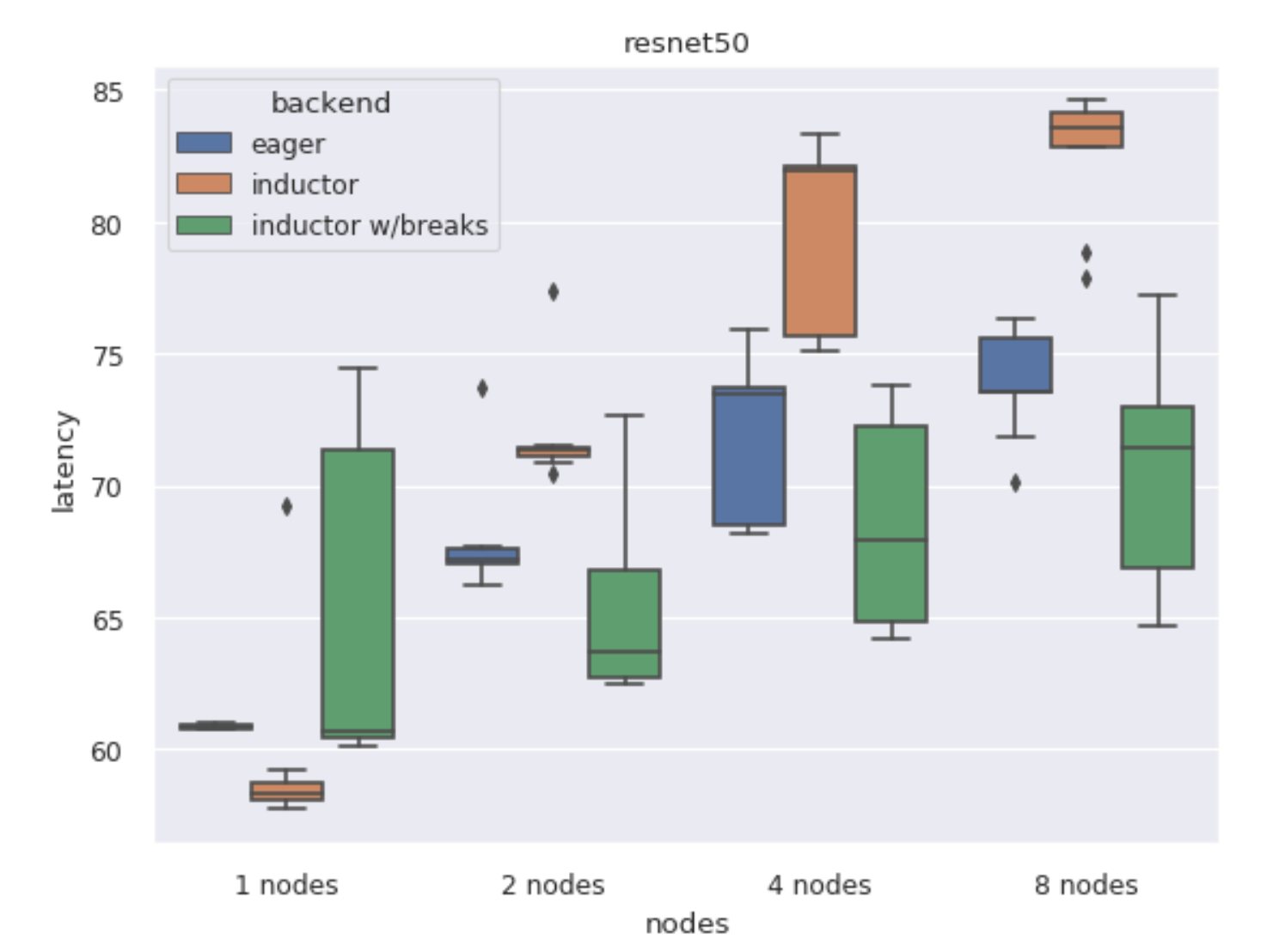

@soumith we think this is just noise, plus the fact that resnet50 + 1 GPU spends too much time on communication to see a speedup from dynamo (at least at this batch size of 32, which is the torchbench default for resnet50). We re-ran with a larger number of samples (28, compared to 8 in the data above) on only the resnet50 model; results are shown below (N2752413). We also ran with batch_size=128, where we do see a speedup.
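To make the noise argument concrete, here is a minimal sketch of how a speedup ratio and its uncertainty could be summarized from repeated latency samples. The function names, the simulated latencies, and the 5% noise level are all assumptions for illustration; none of this is the actual benchmark harness or real measurement data.

```python
import random
import statistics


def speedup_summary(eager_ms, compiled_ms):
    """Return (median speedup, standard error of the mean speedup).

    Speedup is eager latency divided by compiled latency, so a value
    below 1.0 means the compiled run was slower.
    """
    ratios = [e / c for e, c in zip(eager_ms, compiled_ms)]
    median = statistics.median(ratios)
    sem = statistics.stdev(ratios) / len(ratios) ** 0.5
    return median, sem


def simulate(n, true_speedup=1.0, noise=0.05, seed=0):
    """Generate synthetic latencies (hypothetical, not measured data)."""
    rng = random.Random(seed)
    eager = [100.0 * (1 + rng.gauss(0, noise)) for _ in range(n)]
    compiled = [100.0 / true_speedup * (1 + rng.gauss(0, noise)) for _ in range(n)]
    return eager, compiled


if __name__ == "__main__":
    # Compare the two sample counts mentioned in the thread.
    for n in (8, 28):
        median, sem = speedup_summary(*simulate(n))
        print(f"n={n}: median speedup {median:.3f}x, standard error {sem:.3f}")
```

With only a handful of samples, a true speedup near 1.0x can easily land on either side of 1.0x, which is why a result just below parity is hard to interpret without the standard error next to it.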

I'm surprised it is so pronounced. Earlier data: https://www.internalfb.com/intern/anp/view/?id=2750232

However, we learned 2 things about resnet

We also changed the batch size from 32 to 128 at one point and got lower noise, but I'm not sure which batch size is in these charts. The compilation failure is something that we need to fix at the AOTAutograd layer. It's being tracked in the AOTAutograd 2.0 discussion, and afaiu it's better to fix it in the autograd layer than in the DDPOptimizer layer. (The alternative is to make DDPOptimizer avoid splits that upset AOTAutograd, but that would complicate DDPOptimizer and also deviate from the buckets used by DDP.)
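The point about splits following DDP's buckets can be illustrated with a small sketch. It assumes a simplified greedy rule: walk the parameters from last to first (roughly the order in which gradients become ready during backward) and start a new bucket whenever the byte cap would be exceeded. The function name and the sizes are hypothetical, and the real DDP and DDPOptimizer bucketing logic differs in its details.

```python
def assign_buckets(param_sizes_bytes, bucket_cap_bytes):
    """Greedily group parameter indices into buckets under a byte cap.

    Parameters are visited in reverse order. Each boundary between two
    buckets marks a place where a graph split would go, so that the
    allreduce for a finished bucket can overlap with the remaining
    backward computation. This is a simplified illustration only.
    """
    buckets = [[]]
    current_bytes = 0
    for index in reversed(range(len(param_sizes_bytes))):
        size = param_sizes_bytes[index]
        # Start a new bucket if adding this parameter would exceed the cap.
        if buckets[-1] and current_bytes + size > bucket_cap_bytes:
            buckets.append([])
            current_bytes = 0
        buckets[-1].append(index)
        current_bytes += size
    return [bucket for bucket in buckets if bucket]


if __name__ == "__main__":
    sizes = [4, 4, 4, 10, 2]  # hypothetical parameter sizes in bytes
    print(assign_buckets(sizes, bucket_cap_bytes=10))
```

Moving a split to avoid a subgraph that AOTAutograd cannot compile would shift these boundaries away from where DDP actually launches its allreduce calls, which is the deviation described above.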

I've run an accuracy/functionality sweep across torchbench, timm_models, huggingface scripts. The sweep was done on master @ The data is here

While the pass rates are not great, only 1 issue in the bunch is unique to turning DDPOptimizer on. It is an AOTAutograd input mutation issue, and we'll depend on it being fixed in the updated AOTAutograd implementation that handles mutation. The remainder of the issues (either crashes or accuracy) happen with either dynamo-eager+ddp or dynamo-inductor+ddp, without using DDPOptimizer. The most common error categories I've sampled are

I therefore propose to land this enablement PR as-is, and file new issues for categories of DDP+dynamo/inductor failures to triage accordingly.
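The triage reasoning above amounts to a set difference: a failure counts against DDPOptimizer only if it does not also appear in any configuration that runs without it. A minimal sketch follows, with a hypothetical helper name and placeholder model names rather than results from the actual sweep.

```python
def failures_unique_to(candidate, *baselines):
    """Return failures seen in `candidate` but in none of the baselines."""
    seen_in_baselines = set().union(*baselines)
    return sorted(set(candidate) - seen_in_baselines)


if __name__ == "__main__":
    # Placeholder names only; these are not models from the sweep.
    eager_ddp = {"model_a", "model_b"}
    inductor_ddp = {"model_b", "model_c"}
    inductor_ddp_optimizer = {"model_b", "model_c", "model_d"}
    print(failures_unique_to(inductor_ddp_optimizer, eager_ddp, inductor_ddp))
```

Failures that show up in a baseline as well are pre-existing DDP+dynamo/inductor issues and can be filed and triaged separately from this enablement change.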

Landing it sounds reasonable.

Performance benchmarks on 6 popular models (hf_Bert, hf_T5_large, hf_T5, hf_GPT2_large, timm_vision_transformer, resnet50) from 1-64 GPUs compiled with torchinductor show performance gains or parity with eager when DDPOptimizer is enabled, and regressions without it.

Note: resnet50 with a small batch size shows a regression with the optimizer, in part because one subgraph fails to compile due to input mutation, which will be fixed.

Correctness checks are implemented in CI (test_dynamo_distributed.py), via single-gpu benchmark scripts iterating over many models (torchbench.py, timm_models.py, and huggingface.py under benchmarks/dynamo/), and via [multi-gpu benchmark scripts in torchbench](https://github.com/pytorch/benchmark/tree/main/userbenchmark/ddp_experiments).

cc mlazos soumith voznesenskym yanboliang penguinwu anijain2305 EikanWang jgong5 Guobing-Chen chunyuan-w XiaobingSuper zhuhaozhe blzheng Xia-Weiwen wenzhe-nrv jiayisunx


New accuracy sweep here

@davidberard98 seems close to getting the accuracy checks running in the real perf benchmarks. @bdhirsh is working on getting the AOTAutograd improvements to a state where we can at least confirm these graph-break cases are resolved.


@pytorchbot merge

Merge started. Your change will be merged once all checks pass (ETA 0-4 Hours). Learn more about merging in the wiki. Questions? Feedback? Please reach out to the PyTorch DevX Team.

Merge failed. Reason: The following mandatory check(s) failed. Dig deeper by viewing the failures on hud.

@pytorchbot merge -f "Flaky CI"

Merge started. Your change will be merged immediately since you used the force (-f) flag, bypassing any CI checks (ETA: 1-5 minutes). Learn more about merging in the wiki. Questions? Feedback? Please reach out to the PyTorch DevX Team.

Merge failed. Reason: Command


@pytorchbot merge

Merge started. Your change will be merged once all checks pass (ETA 0-4 Hours). Learn more about merging in the wiki. Questions? Feedback? Please reach out to the PyTorch DevX Team.

Merge failed. Reason: The following mandatory check(s) failed. Dig deeper by viewing the failures on hud.

@pytorchbot merge -f "Flaky CI (gpu SIGIOT)"

Merge started. Your change will be merged immediately since you used the force (-f) flag, bypassing any CI checks (ETA: 1-5 minutes). Learn more about merging in the wiki. Questions? Feedback? Please reach out to the PyTorch DevX Team.

Pull Request resolved: pytorch#88523

Approved by: https://github.com/davidberard98

Stack from ghstack (oldest at bottom):

Performance benchmarks on 6 popular models (hf_Bert, hf_T5_large, hf_T5, hf_GPT2_large, timm_vision_transformer, resnet50) from 1-64 GPUs compiled with torchinductor show performance gains or parity with eager when DDPOptimizer is enabled, and regressions without it.

Note: resnet50 with a small batch size shows a regression with the optimizer, in part because one subgraph fails to compile due to input mutation, which will be fixed.

Correctness checks are implemented in CI (test_dynamo_distributed.py), via single-gpu benchmark scripts iterating over many models (torchbench.py, timm_models.py, and huggingface.py under benchmarks/dynamo/), and via [multi-gpu benchmark scripts in torchbench](https://github.com/pytorch/benchmark/tree/main/userbenchmark/ddp_experiments).

cc @mlazos @soumith @voznesenskym @yanboliang @penguinwu @anijain2305 @EikanWang @jgong5 @Guobing-Chen @chunyuan-w @XiaobingSuper @zhuhaozhe @blzheng @Xia-Weiwen @wenzhe-nrv @jiayisunx @desertfire