-

Notifications

You must be signed in to change notification settings - Fork 26.3k

[JIT] Frozen Graph Linear-BatchNormNd Folding #86706

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Conversation

🔗 Helpful Links🧪 See artifacts and rendered test results at hud.pytorch.org/pr/86706

Note: Links to docs will display an error until the docs builds have been completed. ✅ No FailuresAs of commit 879df37: This comment was automatically generated by Dr. CI and updates every 15 minutes. |

|

|

|

@eellison @davidberard98, could you please help review this PR? |

|

@davidberard98 has imported this pull request. If you are a Meta employee, you can view this diff on Phabricator. |

|

I started the tests. I will review after they run. If I forget feel free to tag me @min-jean-cho. Also, please sign the CLA when you get a chance. |

|

@min-jean-cho I see some build failures. Please take a look. If you aren't sure what the issues are, ping me and I can help investigate. |

|

Thank you @davidberard98 for the help, I will ping you after resolving the build issues. Thank you! |

|

@davidberard98 has imported this pull request. If you are a Meta employee, you can view this diff on Phabricator. |

I have signed the CLA, seems like it's taking some time to process. |

|

|

|

@min-jean-cho, I think the CLA gets processed immediately with EasyCLA. If only the latest commit is considered by EasyCLA, then rebasing with GitHub website UI (as you did earlier once in this PR) would help pass this check. |

4a0db24 to

87f2c4a

Compare

|

@davidberard98 has imported this pull request. If you are a Meta employee, you can view this diff on Phabricator. |

87f2c4a to

86eca9f

Compare

|

Thank you @davidberard98 @sanchitintel for the guidance. CLA has passed now. |

|

@davidberard98 has imported this pull request. If you are a Meta employee, you can view this diff on Phabricator. |

|

Hi @davidberard98, looks like the meta internal CI/CD has passed as well. Could you please help review when available? Thanks! |

|

@min-jean-cho, just FYI - that checkmark only suggests that the commit of this PR imported for Meta internal CI checks is the same as the latest commit of this PR, but the status of those CI checks is not visible to folks outside Meta. |

davidberard98

left a comment

davidberard98

left a comment

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

@min-jean-cho minor nit: could you use linux newlines instead of dos newlines in the new files you added, just for consistency in the codebase?

still looking at the content of the PR, just wanted to mention that before I forget :)

Thanks @davidberard98 :) . |

Thanks, this reminded me that I might also need to fix this in the code already merged, and should proactively check for it in future PRs. |

|

@pytorchbot rebase -b master |

|

@pytorchbot successfully started a rebase job. Check the current status here |

|

Successfully rebased |

|

Successfully rebased |

d8800b4 to

879df37

Compare

|

@davidberard98 has imported this pull request. If you are a Meta employee, you can view this diff on Phabricator. |

|

@pytorchbot merge |

|

fyi, the previous failure was also a flaky test; if this one doesn't pass we can probably force merge. |

Merge startedYour change will be merged once all checks pass (ETA 0-4 Hours). Learn more about merging in the wiki. Questions? Feedback? Please reach out to the PyTorch DevX Team |

|

Thanks @davidberard98 ~ sounds good ! |

|

@davidberard98, I see it's merged! Appreciate your help and review to landing one of my first PRs to PyTorch! |

|

@min-jean-cho Glad to help - and sorry it took me so long, feel free to ping me more frequently if you need code review in the future, because sometimes I miss the notifications |

|

@pytorchbot revert -m "possibly causing internal build failures, will revert and investigate later" -c ghfirst |

|

@pytorchbot successfully started a revert job. Check the current status here. |

|

@min-jean-cho your PR has been successfully reverted. |

This reverts commit e585156. Reverted #86706 on behalf of https://github.com/davidberard98 due to possibly causing internal build failures, will revert and investigate later

|

@min-jean-cho sorry about that, we're seeing some internal failures associated with this PR that I need to investigate before we can try re-landing... do you think you can re-open a second PR with the same contents? (this will help avoid any issues with syncing between the internal and external version of the PR) |

) Re-landing #86706 This PR adds linear-batchnormNd folding for JIT frozen graphs. **Performance benchmark** A preliminary benchmark with a simple model of linear+bn1d tested on first socket, physical cores of skylake machine. **FP32, JIT** without linear-bn folding  with linear-bn folding  Pull Request resolved: #91020 Approved by: https://github.com/davidberard98

This PR adds linear-batchnormNd folding for JIT frozen graphs.

Performance benchmark

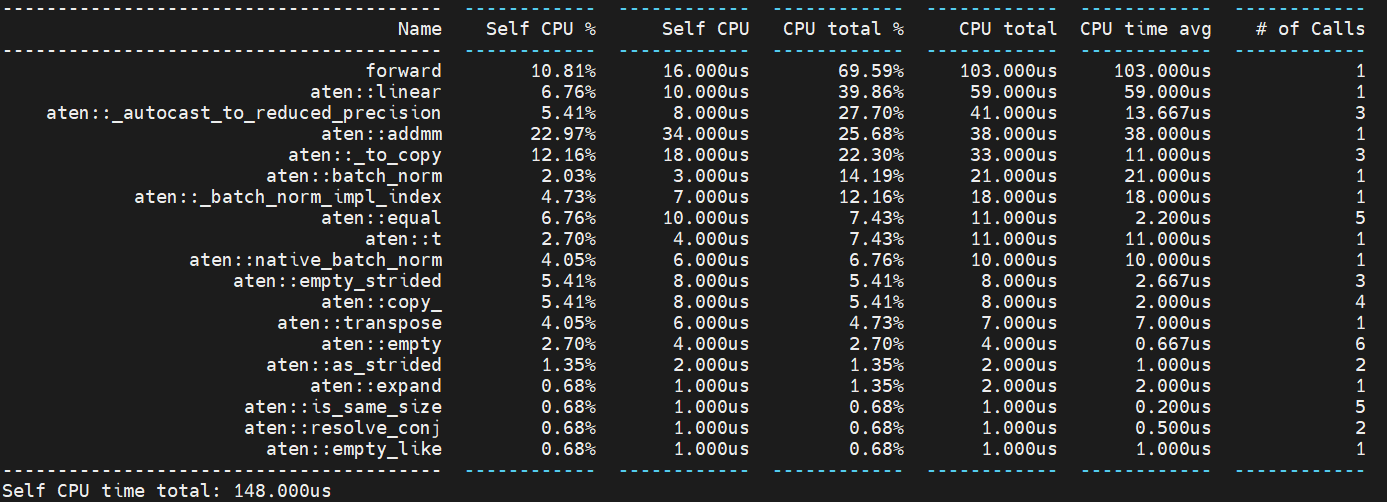

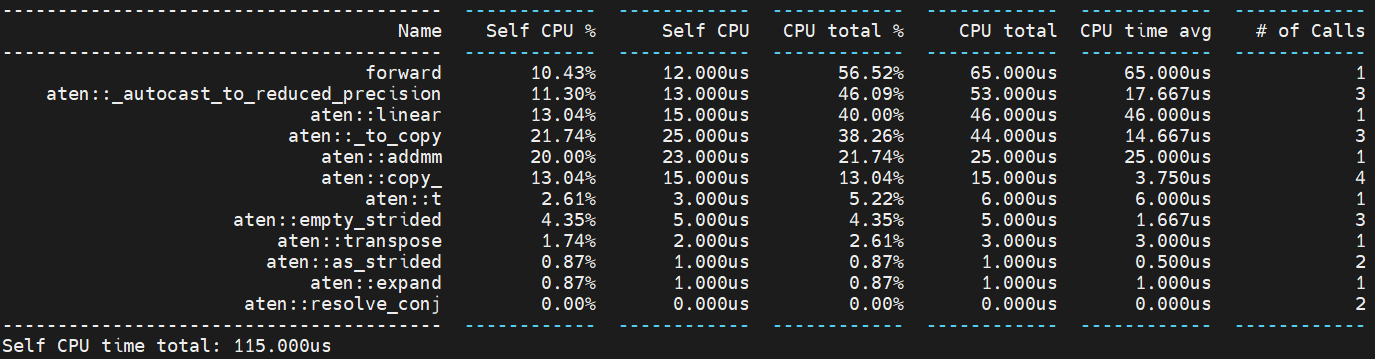

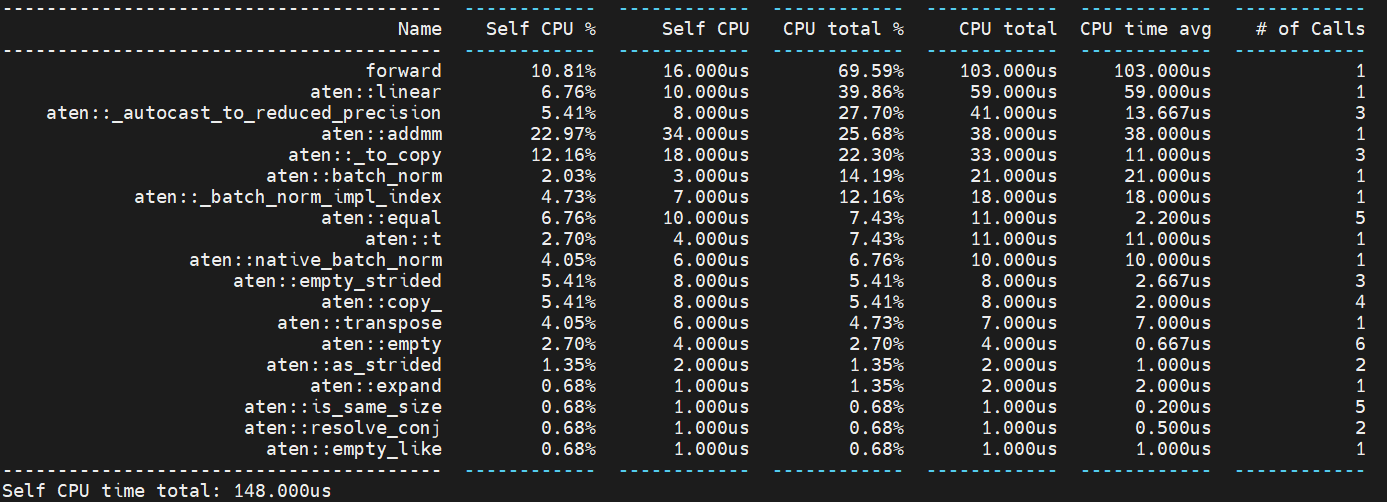

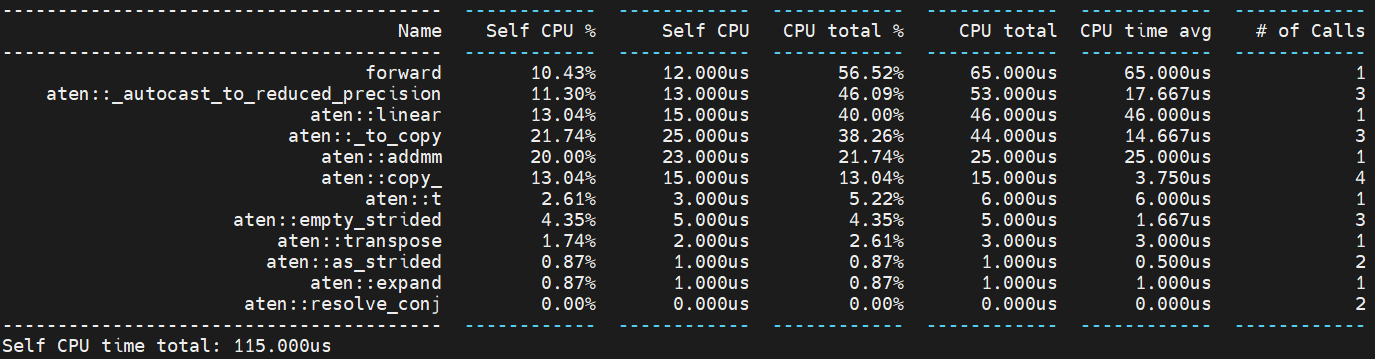

A preliminary benchmark with a simple model of linear+bn1d tested on first socket, physical cores of skylake machine.

FP32, JIT

without linear-bn folding

with linear-bn folding

cc @EikanWang @jgong5 @wenzhe-nrv @sanchitintel @mingfeima @XiaobingSuper @ashokei @jingxu10