-

Notifications

You must be signed in to change notification settings - Fork 26.3k

aten::set_grad_enabled should not push as it does not return a value #45559

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Conversation

💊 CI failures summary and remediationsAs of commit 4feb8fc (more details on the Dr. CI page):

❄️ 2 failures tentatively classified as flakybut reruns have not yet been triggered to confirm:

|

| "aten::set_grad_enabled(bool val) -> ()", | ||

| [](Stack* stack) { | ||

| torch::GradMode::set_enabled(pop(stack).toBool()); | ||

| push(stack, IValue()); |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

@eellison I remember we talked about this during the no_grad PR:

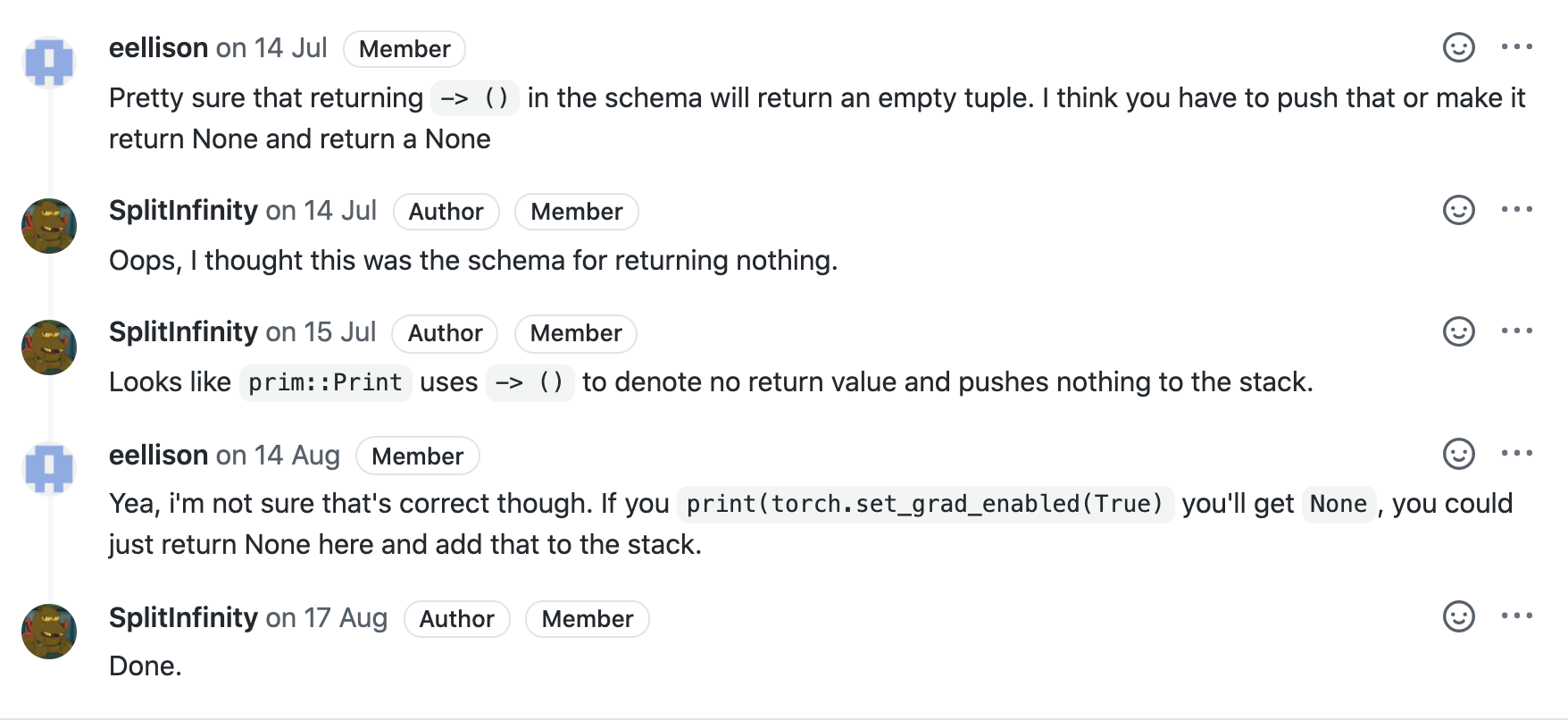

I agree with the PR author's assessment that what we thought is the return value of torch.set_grad_enabled(False) is actually just Python printing out none where there is no return value but I wanted to double-check.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Yea, my suggestion was you push None on the stack but we should have also updated the schema to reflect that

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

No return value is also fine. I want to double check that the operator doesn't have a return value though when you annotate it with (). (printing the IR graph should show us)

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Confirmed

graph():

%2 : None = prim::Constant() # test/elias.py:5:0

%0 : bool = prim::Constant[value=1]() # test/elias.py:6:27

= aten::set_grad_enabled(%0) # test/elias.py:6:4

return (%2)

eellison

left a comment

eellison

left a comment

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

LGTM! Thx for PR! It would be nice if we could have caught this automatically - maybe a debug build that asserts the stack is the expected size after each operator. This isnt the first time we have an extra stack value....

facebook-github-bot

left a comment

facebook-github-bot

left a comment

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

@SplitInfinity has imported this pull request. If you are a Facebook employee, you can view this diff on Phabricator.

Checking stack size for each operator is a bit heavy, a debug build is appropriate. We can also add an always-on check in |

…45559) Summary: Fixes #45558 This assertion failure is caused by the incorrect implementation of ``aten::set_grad_enabled`` in [torch/csrc/jit/runtime/register_special_ops.cpp](https://github.com/pytorch/pytorch/blob/master/torch/csrc/jit/runtime/register_special_ops.cpp#L436). The current implementation is: ```cpp Operator( "aten::set_grad_enabled(bool val) -> ()", [](Stack* stack) { torch::GradMode::set_enabled(pop(stack).toBool()); push(stack, IValue()); }, aliasAnalysisConservative()), ``` which push a ``None`` on to the evaluation stack after calling ``set_enabled``. But according to the signature, the behavior is incorrect as the signature says this function won't return a value. I guess the original author might be confused by the behavior of Python, which pushes a ``None`` on to the evaluation stack when the function definition does not end with a return statement with an explicit result value. If ``aten::set_grad_enabled`` pushes a ``None`` on to the evaluation stack, each time it's called, the evaluation stack will accumulate an extra ``None``. In our case, ``with torch.no_grad():`` will cause ``aten::set_grad_enabled`` to be called twice, so when the ``forward`` method finishes, the evaluation stack will be ``[None, None, Tensor]``. But the return statement of ``GraphFunction::operator()`` in [torch/csrc/jit/api/function_impl.cpp](https://github.com/pytorch/pytorch/blob/master/torch/csrc/jit/api/function_impl.cpp#L51) is ``return stack.front();`` which will try to extract a tensor out of a ``None`` thus causes the assertion failure. The solution is simple, just remove the push in the implementation of ``aten::set_grad_enabled``. Pull Request resolved: #45559 Reviewed By: albanD Differential Revision: D24142153 Pulled By: SplitInfinity fbshipit-source-id: 75aad0e38bd912a437f7e1a1ee89ab4445e35b5d

|

@SplitInfinity merged this pull request in e3112e3. |

…45559) (#46060) Summary: Fixes #45558 This assertion failure is caused by the incorrect implementation of ``aten::set_grad_enabled`` in [torch/csrc/jit/runtime/register_special_ops.cpp](https://github.com/pytorch/pytorch/blob/master/torch/csrc/jit/runtime/register_special_ops.cpp#L436). The current implementation is: ```cpp Operator( "aten::set_grad_enabled(bool val) -> ()", [](Stack* stack) { torch::GradMode::set_enabled(pop(stack).toBool()); push(stack, IValue()); }, aliasAnalysisConservative()), ``` which push a ``None`` on to the evaluation stack after calling ``set_enabled``. But according to the signature, the behavior is incorrect as the signature says this function won't return a value. I guess the original author might be confused by the behavior of Python, which pushes a ``None`` on to the evaluation stack when the function definition does not end with a return statement with an explicit result value. If ``aten::set_grad_enabled`` pushes a ``None`` on to the evaluation stack, each time it's called, the evaluation stack will accumulate an extra ``None``. In our case, ``with torch.no_grad():`` will cause ``aten::set_grad_enabled`` to be called twice, so when the ``forward`` method finishes, the evaluation stack will be ``[None, None, Tensor]``. But the return statement of ``GraphFunction::operator()`` in [torch/csrc/jit/api/function_impl.cpp](https://github.com/pytorch/pytorch/blob/master/torch/csrc/jit/api/function_impl.cpp#L51) is ``return stack.front();`` which will try to extract a tensor out of a ``None`` thus causes the assertion failure. The solution is simple, just remove the push in the implementation of ``aten::set_grad_enabled``. Pull Request resolved: #45559 Reviewed By: albanD Differential Revision: D24142153 Pulled By: SplitInfinity fbshipit-source-id: 75aad0e38bd912a437f7e1a1ee89ab4445e35b5d Co-authored-by: huaidong.xiong <huaidong.xiong@mobvista.com>

Fixes #45558

This assertion failure is caused by the incorrect implementation of

aten::set_grad_enabledin torch/csrc/jit/runtime/register_special_ops.cpp. The current implementation is:which push a

Noneon to the evaluation stack after callingset_enabled. But according to the signature, the behavior is incorrect as the signature says this function won't return a value. I guess the original author might be confused by the behavior of Python, which pushes aNoneon to the evaluation stack when the function definition does not end with a return statement with an explicit result value.If

aten::set_grad_enabledpushes aNoneon to the evaluation stack, each time it's called, the evaluation stack will accumulate an extraNone. In our case,with torch.no_grad():will causeaten::set_grad_enabledto be called twice, so when theforwardmethod finishes, the evaluation stack will be[None, None, Tensor]. But the return statement ofGraphFunction::operator()in torch/csrc/jit/api/function_impl.cpp isreturn stack.front();which will try to extract a tensor out of aNonethus causes the assertion failure.The solution is simple, just remove the push in the implementation of

aten::set_grad_enabled.