[doc] Add LSTM non-deterministic workaround #40893

cc @ptrblck

💊 CI failures summary and remediations

As of commit 7f71fa0 (more details on the Dr. CI page):

🕵️ 1 new failure recognized by patterns

The following CI failures do not appear to be due to upstream breakages:

torch/nn/modules/rnn.py

Outdated

| where :math:`k = \frac{1}{\text{hidden\_size}}` | ||

| .. warning:: | ||

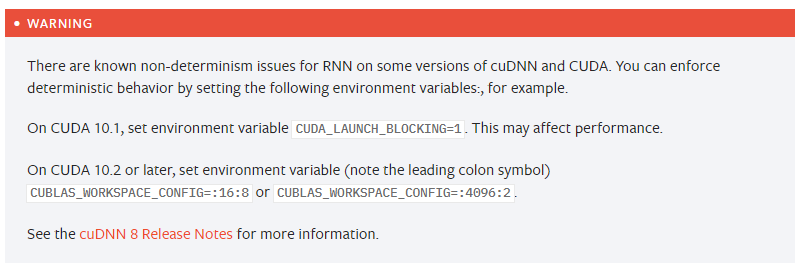

| There are known deterministic issues for LSTM using cuDNN 7.6.5, 8.0 on CUDA 10.1 or later. |

This should read "known non-determinism issues". Is it for LSTM only, or are RNN/GRU also affected?

Is the non-deterministic behavior really only on those two versions of cuDNN and only if the version of CUDA is 10.1 or later?

It could affect other RNN modules as well. I'll check that and add docs in the other places if necessary.

You may also want to cover yourself and say "On some versions of cuDNN and CUDA..." It's not great, since then people will never know if they may hit this issue or not, but it's better than telling them it may only happen in cases X and Y and then seeing it happen in case Z, too.

Too bad there's no way to query for whether the function will be deterministic or not in the current environment, or request that it be run deterministically.

I tested on CUDA 10.2 with cuDNN 7.6.5. RNN and LSTM are affected. GRU is deterministic.
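For anyone who wants to repeat this kind of check on their own CUDA/cuDNN combination, here is a minimal sketch. It is not the script used for the test above. `runs_agree` and `lstm_grad_on_cuda` are hypothetical names, the tensor sizes are arbitrary, and comparing a weight gradient (rather than the forward output) is an assumption, since this thread does not say where the results diverge.

```python
from typing import Any, Callable


def runs_agree(produce: Callable[[], Any],
               same: Callable[[Any, Any], bool],
               runs: int = 5) -> bool:
    """Call `produce` `runs` times; return True if every result matches the first."""
    reference = produce()
    return all(same(reference, produce()) for _ in range(runs - 1))


def lstm_grad_on_cuda():
    """Hypothetical probe: one weight gradient of a small LSTM on the GPU."""
    import torch  # imported lazily so runs_agree works without PyTorch

    torch.manual_seed(0)  # same weights and inputs on every call
    lstm = torch.nn.LSTM(input_size=32, hidden_size=64, num_layers=2).cuda()
    x = torch.randn(16, 8, 32, device="cuda")  # (seq_len, batch, input_size)
    out, _ = lstm(x)
    out.sum().backward()
    return lstm.weight_hh_l0.grad.detach().cpu()
```

On a machine with a CUDA device, `runs_agree(lstm_grad_on_cuda, torch.equal)` returning `False` would suggest non-deterministic behavior in that environment. A `True` result after a handful of runs does not prove determinism. Swapping `torch.nn.LSTM` for `torch.nn.RNN` or `torch.nn.GRU` covers the other modules.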

Can you also cross reference this from https://pytorch.org/docs/stable/notes/randomness.html? We try to keep a list of all non-deterministic ops in that note.

torch/nn/modules/rnn.py

Outdated

This may affect performance.

On CUDA 10.2 or later, set environment variable
(note the leading colon symbol)

Which one is it?

Do you mean the CUBLAS_WORKSPACE_CONFIG values? Either one would be fine.
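As a rough illustration of setting the variable from Python rather than the shell, here is a minimal sketch. The concrete values are not visible in the diff excerpt above, so the default `:16:8` is an assumption taken from the cuBLAS reproducibility notes (which also mention `:4096:8`), and `set_cublas_workspace_config` is a hypothetical helper name. The variable most likely needs to be set before the first CUDA call in the process.

```python
import os


def set_cublas_workspace_config(value: str = ":16:8") -> str:
    """Set CUBLAS_WORKSPACE_CONFIG for this process (hypothetical helper).

    The default value is an assumption, not taken from the diff above.
    """
    if not value.startswith(":"):
        # The docs call out the leading colon explicitly, so check for it.
        raise ValueError("CUBLAS_WORKSPACE_CONFIG must start with ':'")
    os.environ["CUBLAS_WORKSPACE_CONFIG"] = value
    return value


# Call this before importing torch or touching the GPU.
set_cublas_workspace_config()
```

Setting it in the launching shell has the same effect and avoids any ordering concerns inside the script.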

facebook-github-bot left a comment

@ngimel has imported this pull request. If you are a Facebook employee, you can view this diff on Phabricator.

Related: #35661