-

Notifications

You must be signed in to change notification settings - Fork 26.3k

Batched CPU reductions #39512

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Batched CPU reductions #39512

Conversation

f659579 to

3dc6ce5

Compare

aten/src/ATen/native/cpu/Reduce.h

Outdated

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

This needs to be discussed

💊 CI failures summary and remediationsAs of commit 69d910d (more details on the Dr. CI page):

ci.pytorch.org: 1 failedThis comment was automatically generated by Dr. CI (expand for details).Follow this link to opt-out of these comments for your Pull Requests.Please report bugs/suggestions on the GitHub issue tracker or post in the (internal) Dr. CI Users group. This comment has been revised 28 times. |

|

Hey @emcastillo , I tried to include your version in the error comparison for #39516 but found your non-vectorized loop has exactly the same error as master. The |

|

Yeah, you are right, let me give it a twist |

|

I added some code that removes the I still have to measure performance. |

1cbcb40 to

483ed1c

Compare

|

seems that some tests are affected, I will try to fix it |

aten/src/ATen/native/cpu/Reduce.h

Outdated

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I don't feel happy with this change so I am open to suggestions.

96c7a98 to

69d910d

Compare

|

Thanks! |

|

#39516 achieving the same goal was merged, can we close this? |

|

I am fine with closing it, I was just wondering that since this is a generic solution to the reduction algorithm, other reduction routines could benefit from this, but I can't think of other than sum/prod. |

Fixes #38716 and #37234

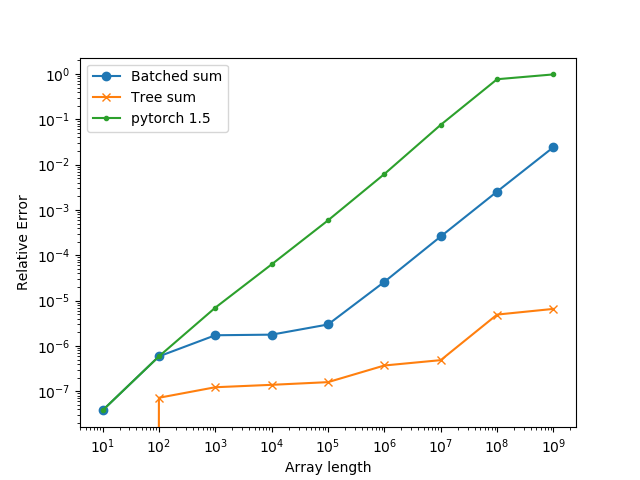

In some operations, just doing a running reduction over a large array can cause precision issues leading to incorrect results. This happens in the outer reduction case, as the reduction is parallelized across columns and there are no partial results.

This PR batches these operations so we have several intermediate results that can lead to more stable results and fewer precision issues. The changes are minimal and I believe it is better to do it here than in the specific reduction routine such as

sum.TODO: Decide a correct granularity, the 256 right now was put there almost randomly

Measure performance.

Test.

This PR is for discussing this alternative mostly, please give your thoughts and I will work on it.