
Simplify copy kernel #28352


Merged


This was referenced Oct 21, 2019

zasdfgbnm added a commit that referenced this pull request on Oct 21, 2019:

Using the new type promotion and dynamic casting added to `TensorIterator`, the copy kernels could be greatly simplified.

**Script:**

```python
import torch
import timeit
import pandas
import itertools
from tqdm import tqdm
import math

print(torch.__version__)
print()

_10M = 10 * 1024 ** 2  # number of elements per tensor
d = {}  # d[from_][to] -> copy time in microseconds
for from_, to in tqdm(itertools.product(torch.testing.get_all_dtypes(), repeat=2)):
    if from_ not in d:
        d[from_] = {}
    a = torch.zeros(_10M, dtype=from_)
    min_ = math.inf
    for _ in range(100):
        start = timeit.default_timer()
        a.to(to)
        end = timeit.default_timer()
        elapsed = end - start
        if elapsed < min_:
            min_ = elapsed
    # Note: this stores the last run's `elapsed`, not the minimum `min_`
    d[from_][to] = int(elapsed * 1000 * 1000)
pandas.DataFrame(d)
```

**Before:**

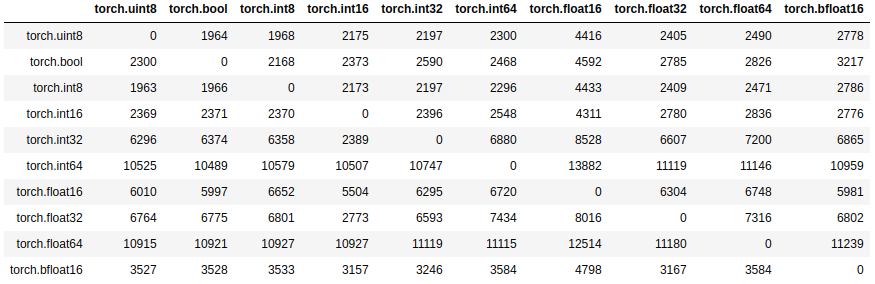

**After:**

ghstack-source-id: 3e43f97

Pull Request resolved: #28352
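As a rough illustration of why dynamic casting lets one copy loop replace many, here is a conceptual pure-Python sketch. It is not PyTorch's C++ implementation: standard-library `array` typecodes stand in for tensor dtypes, and `CASTERS`, `_int_caster`, and `dynamic_cast_copy` are hypothetical names. Instead of one specialized loop per (source, destination) dtype pair, a single loop picks the conversion from the destination's runtime type.

```python
from array import array


def _int_caster(typecode, signed):
    """Build a hypothetical cast to an integer typecode.

    Floats are truncated toward zero, then the value wraps modulo 2**bits.
    Wrapping out-of-range values is a simplification for this sketch only.
    """
    bits = array(typecode).itemsize * 8
    mask = (1 << bits) - 1

    def cast(value):
        n = int(value) & mask  # int() truncates floats toward zero
        if signed and n >= 1 << (bits - 1):
            n -= 1 << bits  # reinterpret the high bit as the sign
        return n

    return cast


# One cast function per destination "dtype" (here: array typecode).
CASTERS = {
    "b": _int_caster("b", signed=True),
    "B": _int_caster("B", signed=False),
    "h": _int_caster("h", signed=True),
    "i": _int_caster("i", signed=True),
    "q": _int_caster("q", signed=True),
    "f": float,
    "d": float,
}


def dynamic_cast_copy(dst, src):
    """Copy src into dst, converting each element at run time.

    A single generic loop covers every (src, dst) typecode pair,
    rather than one hand-written loop per pair.
    """
    if len(dst) != len(src):
        raise ValueError("dst and src must have the same length")
    cast = CASTERS[dst.typecode]  # selected from the runtime destination type
    for k, value in enumerate(src):
        dst[k] = cast(value)
    return dst


src = array("d", [1.5, -2.7, 300.0])
dst = array("b", [0, 0, 0])
print(dynamic_cast_copy(dst, src).tolist())  # [1, -2, 44]
```

The point the sketch tries to capture is that the conversion is looked up from data at run time, so supporting another dtype means adding one caster rather than a new loop for every pair; the trade-off is an indirect conversion per element.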


Comment by the PR author (Collaborator):

Sorry, there is a bug in my benchmark code: I was reporting the last result instead of the minimum time. Here is the fixed script and result:

```python
import torch
import timeit
import pandas
import itertools
from tqdm.notebook import tqdm
import math

print(torch.__version__)
print()

_10M = 10 * 1024 ** 2
d = {}
for from_, to in tqdm(itertools.product(torch.testing.get_all_dtypes(), repeat=2)):
    if from_ not in d:
        d[from_] = {}
    a = torch.zeros(_10M, dtype=from_)
    min_ = math.inf
    for i in range(100):
        start = timeit.default_timer()
        a.to(to)
        end = timeit.default_timer()
        elapsed = end - start
        if elapsed < min_:
            min_ = elapsed
    d[from_][to] = int(min_ * 1000 * 1000)
pandas.DataFrame(d)
```
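The fixed script tracks the minimum by hand. A minimal alternative sketch, assuming a plain CPU callable, uses the standard library's `timeit.repeat` with `number=1` and takes the minimum of the runs; `best_time_us` is a hypothetical helper name, not part of the original script.

```python
import time
import timeit


def best_time_us(fn, repeat=100):
    """Return the fastest of `repeat` single calls to fn, in whole microseconds.

    timeit.repeat(..., number=1) times one call per run and returns a list
    of durations in seconds; slower runs mostly reflect interference from
    other activity, so the minimum is taken.
    """
    timings = timeit.repeat(fn, number=1, repeat=repeat)
    return int(min(timings) * 1000 * 1000)


# Example: time a short sleep (expect roughly 1000 us or a bit more).
print(best_time_us(lambda: time.sleep(0.001), repeat=5))
```

In the benchmark this could replace the inner loop, e.g. `d[from_][to] = best_time_us(lambda: a.to(to))`. One difference from the hand-written loop: `timeit` temporarily disables garbage collection while timing.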

zasdfgbnm added a commit that referenced this pull request on Oct 22, 2019:


ghstack-source-id: d7fc960

Pull Request resolved: #28352

Closed

zasdfgbnm added eight commits that referenced this pull request (one on Oct 22, one on Oct 23, two on Oct 24, two on Oct 25, and two on Oct 26, 2019), each with the message:

Using the new type promotion and dynamic casting added to `TensorIterator`, the copy kernels could be greatly simplified. For the benchmark, see #28352 (comment). [ghstack-poisoned]


Stack from ghstack:

Using the new type promotion and dynamic casting added to `TensorIterator`, the copy kernels could be greatly simplified.

Script:

Before:

After: