
Closed

Labels

- high priority
- module: ci (Related to continuous integration)
- module: rocm (AMD GPU support for PyTorch)
- triaged (This issue has been looked at by a team member, and triaged and prioritized into an appropriate module)

Description

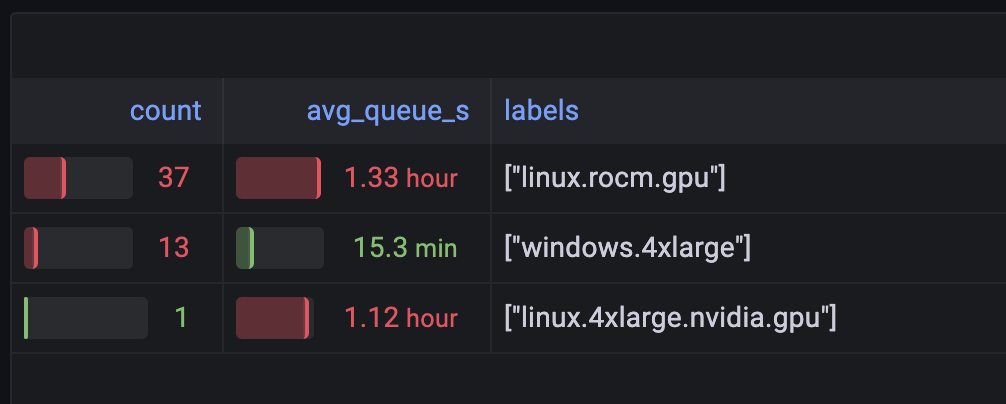

We observe jobs consistently waiting in 1h+ queues during US working hours. You can see the current state of the queue here: https://metrics.pytorch.org/?orgId=1&refresh=5m&viewPanel=85. At time of writing (2PM pacific on a thurssday), it looked like:

Queueing is bad because it increases time-to-signal for developers on their PRs. A 1h+ queue is especially bad: the full ROCm test suite can take hours to complete, and queued jobs cannot even start. We have two options to reduce queueing:

- Increase supply by increasing the size of the worker pool, i.e. spinning up more ROCm runners.

- Decrease demand by reducing the amount of work we do in CI. For example, we could move ROCm jobs to trunk-only (with the ability to run them manually on a PR via CIFlow), or restrict ROCm workflows to commits that touch specific directories.
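The demand-side option boils down to a per-PR decision: run ROCm jobs only if the author opted in or the PR touches relevant paths. A minimal Python sketch of that decision, assuming a hypothetical list of "ROCm-relevant" glob patterns and a hypothetical opt-in label standing in for a CIFlow-style trigger (neither is PyTorch's actual configuration):

```python
from fnmatch import fnmatchcase

# Hypothetical path patterns assumed to be "ROCm-relevant". These are
# illustrative only and are NOT PyTorch's actual CI configuration.
ROCM_RELEVANT_PATTERNS = (
    "aten/src/ATen/native/cuda/*",
    "torch/cuda/*",
    ".github/workflows/*rocm*",
)

# Hypothetical opt-in label, standing in for a CIFlow-style manual trigger.
ROCM_OPT_IN_LABEL = "ciflow/rocm"


def should_run_rocm(changed_files, labels=(), patterns=ROCM_RELEVANT_PATTERNS):
    """Decide whether ROCm jobs should run on a PR.

    Returns True if the author explicitly opted in via the label, or if any
    changed file matches one of the path patterns; otherwise False.
    """
    if ROCM_OPT_IN_LABEL in labels:
        return True
    # fnmatchcase's "*" also matches "/", so "torch/cuda/*" covers subdirectories.
    return any(
        fnmatchcase(path, pattern)
        for path in changed_files
        for pattern in patterns
    )


print(should_run_rocm(["torch/cuda/memory.py"]))  # True
print(should_run_rocm(["docs/source/index.rst"]))  # False
print(should_run_rocm(["docs/source/index.rst"], labels=["ciflow/rocm"]))  # True
```

The catch with path filters is choosing the patterns: too narrow and ROCm regressions slip into trunk, too broad and queueing doesn't improve, which is part of why this option requires "custom stuff" in CI.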

My preference would be to increase supply if possible, since then we don't need any custom logic in CI.
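To give some intuition for why adding runners can cut queue time so sharply when the pool is near saturation, here is a minimal Python sketch of the textbook M/M/c (Erlang C) queueing model. It assumes Poisson job arrivals, exponentially distributed job durations, identical interchangeable runners, and steady state; real CI traffic is burstier (e.g. concentrated in US working hours), and the rates below are made-up numbers, not measurements from the dashboard.

```python
import math


def erlang_c_wait(arrival_rate: float, service_rate: float, runners: int) -> float:
    """Mean time a job waits in queue for an M/M/c system (Erlang C).

    arrival_rate: jobs arriving per hour (lambda).
    service_rate: jobs one runner finishes per hour (mu = 1 / mean job duration).
    runners:      number of identical runners in the pool (c).
    Returns math.inf when demand meets or exceeds capacity.
    """
    offered_load = arrival_rate / service_rate  # a = lambda / mu, in "busy runners"
    if offered_load >= runners:
        return math.inf  # queue grows without bound
    # Erlang B via the standard stable recursion: B(k) = a*B(k-1) / (k + a*B(k-1))
    blocking = 1.0
    for k in range(1, runners + 1):
        blocking = offered_load * blocking / (k + offered_load * blocking)
    # Convert Erlang B to Erlang C: probability an arriving job has to wait
    p_wait = runners * blocking / (runners - offered_load * (1.0 - blocking))
    # Mean wait in queue: P(wait) / (c*mu - lambda), in hours
    return p_wait / (runners * service_rate - arrival_rate)


# Hypothetical load: 40 ROCm jobs/hour, each taking ~2 hours on average.
for pool_size in (81, 85, 90, 100):
    print(pool_size, round(erlang_c_wait(40.0, 0.5, pool_size), 3))
```

Under these assumptions the mean wait falls steeply over the first few extra runners past the saturation point, then flattens, so a modest increase in pool size can matter a lot when the pool is running close to capacity.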

cc @ezyang @gchanan @zou3519 @jeffdaily @sunway513 @jithunnair-amd @ROCmSupport @KyleCZH @seemethere @malfet @pytorch/pytorch-dev-infra

seemethere

Metadata

Projects

Status

Done