
Refined loss function #205


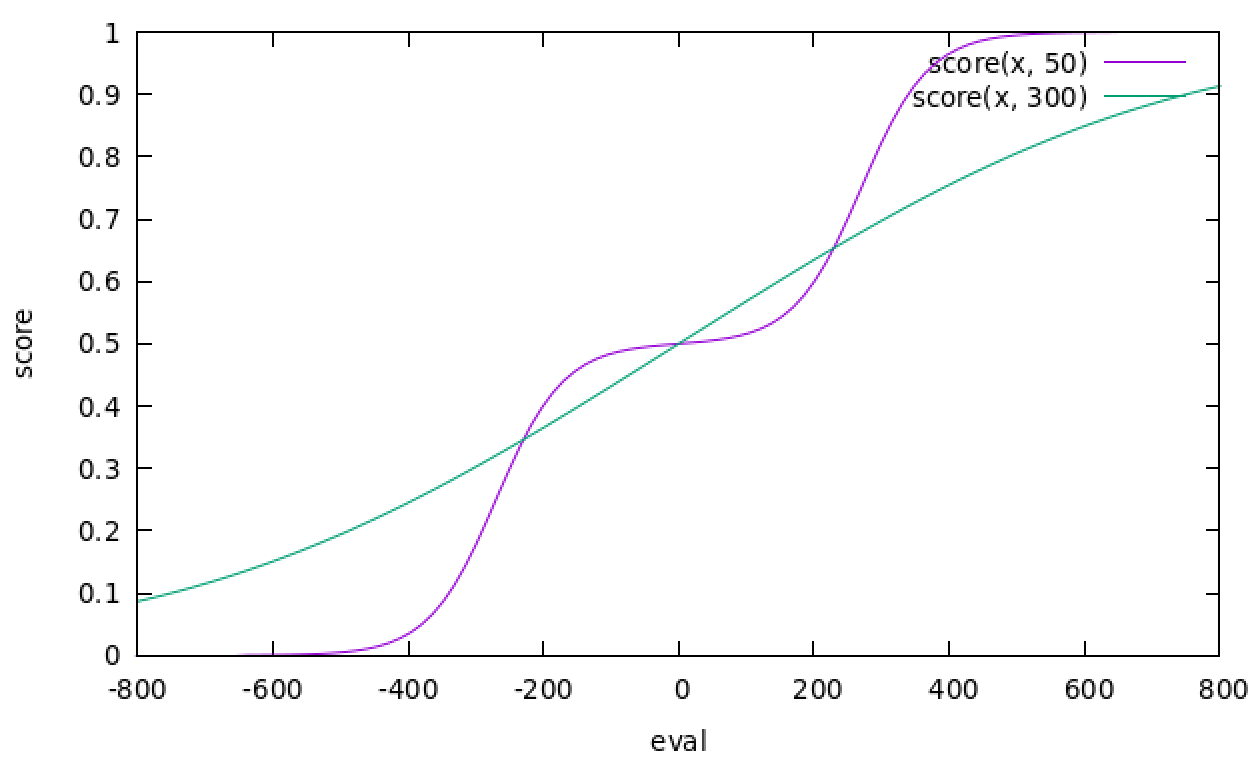

This refines the loss function to the form used for the new master net in official-stockfish/Stockfish#4100. The new loss function learns from the expected game score, which makes the learning more sensitive to scores in the transitions between loss and draw, and between draw and win. The effect is most visible for smaller values of the scaling parameter, but the current values have been optimized. It also introduces param_index to simplify parameter exploration, i.e. simple parameter scans.

|

Ah, wait, I didn't commit the needed cleanup changes.

|

The idea of param_index is just to simplify exploration. I don't think we need to expose everything as parameters, but it is quite tedious to pass something down from the CLI to the core (ideally even to the C++ data reader). Maybe there is a more elegant approach?

|

BTW, the previous state of the commit showed how I have been using it.

|

That makes sense now. Indeed, I've usually been adding a special parameter for stuff like that (usually varied per run). Looks good to me now.

|

Do we have any picture(s) to help visualize the idea behind the double sigmoid for the loss function, and the effect of |

|


To make this more intuitive: we know games are largely won near 135 cp (270 in the above internal units) and largely lost near -135 cp. They are most likely drawn in an interval around 0 cp. This is reflected in the step-like behavior for smaller b. The score formula is simply the result of taking the win_rate_model and computing the probabilities of loss, draw, and win. The 'b' parameter describes how smooth that transition is. For the high-quality games in fishtest it is about 30-50; for the quick training data it may be closer to 300. See also the plots in official-stockfish/Stockfish#3981.
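As a rough illustration of the description above, here is a minimal Python sketch of how an expected game score could be derived from win and loss probabilities. It assumes a logistic form for both probabilities, with an offset `a` of 270 internal units (the value mentioned above) and the smoothness parameter `b`. The function names and the exact logistic form are assumptions made for illustration, not the code from this PR.

```python
import math


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def wdl(x: float, b: float, a: float = 270.0) -> tuple[float, float, float]:
    """Return (win, draw, loss) probabilities for an eval x in internal units.

    Assumption: win and loss are mirrored logistic curves centred at +a and -a,
    and b controls how smooth the transitions are.
    """
    win = sigmoid((x - a) / b)
    loss = sigmoid((-x - a) / b)
    draw = 1.0 - win - loss
    return win, draw, loss


def expected_score(x: float, b: float, a: float = 270.0) -> float:
    """Expected game score: 1 for a win, 0.5 for a draw, 0 for a loss."""
    win, draw, _loss = wdl(x, b, a)
    # Algebraically equal to 0.5 * (1 + win - loss), the "double sigmoid".
    return win + 0.5 * draw


if __name__ == "__main__":
    # Compare a sharp transition (b=30) with a smooth one (b=300).
    for b in (30.0, 300.0):
        row = [round(expected_score(x, b), 3) for x in (-540, -270, 0, 270, 540)]
        print(f"b={b}: {row}")
```

By symmetry the score is exactly 0.5 at an eval of 0 for any `b`. For small `b` the curve forms plateaus near 0, 0.5 and 1 with steps around -a and +a, while larger `b` smooths it into a single gradual slope.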
