
This repository implements the model proposed in the paper:


Evangelos Kazakos, Jaesung Huh, Arsha Nagrani, Andrew Zisserman, Dima Damen, **With a Little Help from my Temporal Context: Multimodal Egocentric Action Recognition**, BMVC, 2021


[Project webpage](https://ekazakos.github.io/MTCN-project/)


[arXiv paper](https://arxiv.org/abs/2111.01024)

## Citing
When using this code, please cite:

```
@INPROCEEDINGS{kazakos2021MTCN,
  author={Kazakos, Evangelos and Huh, Jaesung and Nagrani, Arsha and Zisserman, Andrew and Damen, Dima},
  booktitle={British Machine Vision Conference (BMVC)},
  title={With a Little Help from my Temporal Context: Multimodal Egocentric Action Recognition},
  year={2021}}
```

| 21 | + |

| 22 | +## NOTE |

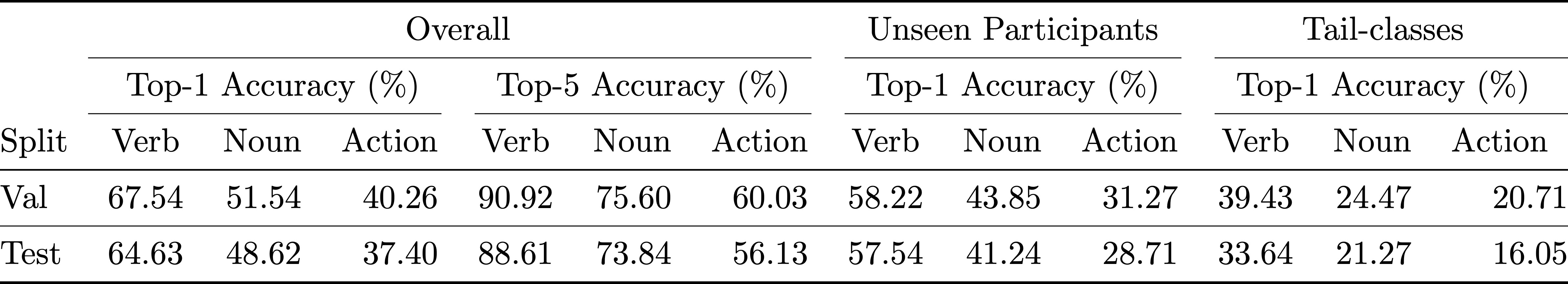

| 23 | +Although we train MTCN using visual SlowFast features extracted from a model trained with video clips of 2s, at Table 3 of our paper and Table 1 of Appendix (Table 6 in the arXiv version) where we compare MTCN with SOTA, the results of SlowFast are from [1] where the model is trained with video clips of 1s. In the following table, we provide the results of SlowFast trained with 2s, for a direct comparison as we use this model to extract the visual features. |

| 24 | + |

| 25 | + |

| 26 | + |

## Requirements

The project's requirements can be installed in a separate conda environment by running the following command in your terminal: `conda env create -f environment.yml`.

## Features

The extracted features for each dataset can be downloaded from the following links:

### EPIC-KITCHENS-100

* [Train](https://www.dropbox.com/s/yb9jtzq24cd2hnl/audiovisual_slowfast_features_train.hdf5?dl=0)
* [Val](https://www.dropbox.com/s/8yeb84ewd2meib8/audiovisual_slowfast_features_val.hdf5?dl=0)
* [Test](https://www.dropbox.com/s/6vifpn3qurkyf96/audiovisual_slowfast_features_test.hdf5?dl=0)

### EGTEA

* [Train-split1](https://www.dropbox.com/s/6hr994w3kkvbtv0/visual_slowfast_features_train_split1.hdf5?dl=0)
* [Test-split1](https://www.dropbox.com/s/03aa8hmflv7depe/visual_slowfast_features_test_split1.hdf5?dl=0)

## Pretrained models

We provide pretrained models for EPIC-KITCHENS-100:

* Audio-visual transformer [link](https://www.dropbox.com/s/vqe7esmqqwsebo6/mtcn_av_sf_epic-kitchens-100.pyth?dl=0)
* Language model [link](https://www.dropbox.com/s/80lcnvsoq4y7tux/mtcn_lm_epic-kitchens-100.pyth?dl=0)

## Ground-truth

* The ground-truth of EPIC-KITCHENS-100 can be found in [this repository](https://github.com/epic-kitchens/epic-kitchens-100-annotations).
* The ground-truth of EGTEA, which we processed into a cleaner format, can be downloaded from the following links: [[Train-split1]](https://www.dropbox.com/s/8zxdsi13v7oy106/train_split1.pkl?dl=0) [[Test-split1]](https://www.dropbox.com/s/50bkljl71njyj46/test_split1.pkl?dl=0) [[Action mapping]](https://www.dropbox.com/s/cg0pagu2px0f6k0/actions_egtea.csv?dl=0)

## Train

### EPIC-KITCHENS-100
To train the audio-visual transformer on EPIC-KITCHENS-100, run:

```
python train_av.py --dataset epic-100 --train_hdf5_path /path/to/epic-kitchens-100/features/audiovisual_slowfast_features_train.hdf5 \
--val_hdf5_path /path/to/epic-kitchens-100/features/audiovisual_slowfast_features_val.hdf5 \
--train_pickle /path/to/epic-kitchens-100-annotations/EPIC_100_train.pkl \
--val_pickle /path/to/epic-kitchens-100-annotations/EPIC_100_validation.pkl \
--batch-size 32 --lr 0.005 --optimizer sgd --epochs 100 --lr_steps 50 75 --output_dir /path/to/output_dir \
--num_layers 4 -j 8 --classification_mode all --seq_len 9
```
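
The `--lr` and `--lr_steps` flags above point to a step (multi-step) learning-rate schedule. The sketch below shows how such a schedule is commonly interpreted, for example by PyTorch's `torch.optim.lr_scheduler.MultiStepLR`. The decay factor of 0.1 is an assumption, since this README does not state it, and the helper `step_lr` is hypothetical rather than part of this repository.

```python
# Hypothetical helper: interprets `--lr 0.005 --lr_steps 50 75` as a multi-step schedule.
# ASSUMPTION: the learning rate is multiplied by GAMMA at each milestone epoch;
# GAMMA = 0.1 is not specified in this README.
from typing import Sequence

GAMMA = 0.1


def step_lr(base_lr: float, epoch: int, milestones: Sequence[int], gamma: float = GAMMA) -> float:
    """Learning rate at a 0-indexed `epoch` under a multi-step decay schedule."""
    # Number of milestones that have been reached by this epoch.
    passed = sum(1 for m in milestones if epoch >= m)
    return base_lr * gamma ** passed


if __name__ == "__main__":
    # Values from the EPIC-KITCHENS-100 audio-visual command: lr 0.005, steps at 50 and 75.
    for epoch in (0, 49, 50, 74, 75, 99):
        print(f"epoch {epoch:3d}: lr = {step_lr(0.005, epoch, [50, 75]):.6f}")
```

Under this assumption, epochs 0-49 use 0.005, epochs 50-74 use 0.0005 and epochs 75-99 use 0.00005; the EGTEA commands (`--lr_steps 25 38`) would follow the same pattern.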

To train the language model on EPIC-KITCHENS-100, run:
```
python train_lm.py --dataset epic-100 --train_pickle /path/to/epic-kitchens-100-annotations/EPIC_100_train.pkl \
--val_pickle /path/to/epic-kitchens-100-annotations/EPIC_100_validation.pkl \
--verb_csv /path/to/epic-kitchens-100-annotations/EPIC_100_verb_classes.csv \
--noun_csv /path/to/epic-kitchens-100-annotations/EPIC_100_noun_classes.csv \
--batch-size 64 --lr 0.001 --optimizer adam --epochs 100 --lr_steps 50 75 --output_dir /path/to/output_dir \
--num_layers 4 -j 8 --num_gram 9 --dropout 0.1
```
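
Both `--seq_len 9` (audio-visual transformer) and `--num_gram 9` (language model) presumably set the length of the temporal context, i.e. how many neighbouring actions are considered together. The sketch below builds such a window from a video's actions ordered in time. It assumes the action of interest sits in the middle of the window and that positions beyond the start or end of the video are padded with `None`; both choices, and the helper `context_window`, are illustrative assumptions rather than this repository's code.

```python
# Hypothetical sketch of a centred temporal-context window (not this repository's code).
# ASSUMPTIONS: actions are already sorted by start time within one video, the target
# action is the middle element, and out-of-video positions are padded with None.
from typing import List, Optional, Sequence, TypeVar

T = TypeVar("T")


def context_window(actions: Sequence[T], index: int, seq_len: int = 9) -> List[Optional[T]]:
    """Return `seq_len` actions centred on `actions[index]`, padding with None at the edges."""
    if seq_len % 2 == 0:
        raise ValueError("seq_len must be odd so that the target action is in the middle")
    half = seq_len // 2
    return [
        actions[i] if 0 <= i < len(actions) else None  # pad outside the video
        for i in range(index - half, index + half + 1)
    ]


if __name__ == "__main__":
    video = ["take knife", "cut onion", "put knife", "open fridge", "take milk"]
    print(context_window(video, 1, seq_len=5))
    # [None, 'take knife', 'cut onion', 'put knife', 'open fridge']
```

A real implementation might pad with a dedicated token or zero features instead of `None`.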

### EGTEA
To train the visual-only transformer on EGTEA (EGTEA does not have audio), run:

```
python train_av.py --dataset egtea --train_hdf5_path /path/to/egtea/features/visual_slowfast_features_train_split1.hdf5 \
--val_hdf5_path /path/to/egtea/features/visual_slowfast_features_test_split1.hdf5 \
--train_pickle /path/to/EGTEA_annotations/train_split1.pkl --val_pickle /path/to/EGTEA_annotations/test_split1.pkl \
--batch-size 32 --lr 0.001 --optimizer sgd --epochs 50 --lr_steps 25 38 --output_dir /path/to/output_dir \
--num_layers 4 -j 8 --classification_mode all --seq_len 9
```

To train the language model on EGTEA, run:
```
python train_lm.py --dataset egtea --train_pickle /path/to/EGTEA_annotations/train_split1.pkl \
--val_pickle /path/to/EGTEA_annotations/test_split1.pkl \
--action_csv /path/to/EGTEA_annotations/actions_egtea.csv \
--batch-size 64 --lr 0.001 --optimizer adam --epochs 50 --lr_steps 25 38 --output_dir /path/to/output_dir \
--num_layers 4 -j 8 --num_gram 9 --dropout 0.1
```

## Test

### EPIC-KITCHENS-100
To test the audio-visual transformer on EPIC-KITCHENS-100, run:

```
python test_av.py --dataset epic-100 --test_hdf5_path /path/to/epic-kitchens-100/features/audiovisual_slowfast_features_val.hdf5 \
--test_pickle /path/to/epic-kitchens-100-annotations/EPIC_100_validation.pkl \
--checkpoint /path/to/av_model/av_checkpoint.pyth --seq_len 9 --num_layers 4 --output_dir /path/to/output_dir \
--split validation
```

To obtain the model's scores on the test set, use `--test_hdf5_path /path/to/epic-kitchens-100/features/audiovisual_slowfast_features_test.hdf5`, `--test_pickle /path/to/epic-kitchens-100-annotations/EPIC_100_test_timestamps.pkl` and `--split test` instead. Since the labels for the test set are not available, the script only saves the scores without computing the model's accuracy.

To evaluate your model on the validation set, follow the instructions in [this link](https://github.com/epic-kitchens/C1-Action-Recognition). The same link also explains how to prepare the model's scores for submission to the evaluation server to obtain results on the test set.
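
For a quick sanity check before running the official evaluation, top-1 accuracy can be computed directly from per-class scores. The sketch below assumes the verb and noun scores are available as plain Python lists (the format of the saved score files is not described in this README) and follows the usual EPIC-KITCHENS convention that an action counts as correct only when both its verb and its noun are predicted correctly. The function names are hypothetical; reported numbers should come from the official evaluation.

```python
# Hypothetical sanity check, not the official EPIC-KITCHENS evaluation.
# ASSUMPTION: scores are lists of per-class scores, one list per action segment.
from typing import Dict, List, Sequence


def argmax(scores: Sequence[float]) -> int:
    """Index of the highest score."""
    return max(range(len(scores)), key=lambda i: scores[i])


def top1_accuracies(
    verb_scores: Sequence[Sequence[float]],
    noun_scores: Sequence[Sequence[float]],
    verb_labels: Sequence[int],
    noun_labels: Sequence[int],
) -> Dict[str, float]:
    n = len(verb_labels)
    verb_ok: List[bool] = [argmax(s) == y for s, y in zip(verb_scores, verb_labels)]
    noun_ok: List[bool] = [argmax(s) == y for s, y in zip(noun_scores, noun_labels)]
    # An action (verb, noun) counts as correct only if both parts are correct.
    action_ok = [v and o for v, o in zip(verb_ok, noun_ok)]
    return {
        "verb": sum(verb_ok) / n,
        "noun": sum(noun_ok) / n,
        "action": sum(action_ok) / n,
    }


if __name__ == "__main__":
    result = top1_accuracies(
        verb_scores=[[0.9, 0.1], [0.2, 0.8]],
        noun_scores=[[0.3, 0.7], [0.6, 0.4]],
        verb_labels=[0, 0],
        noun_labels=[1, 0],
    )
    print(result)  # {'verb': 0.5, 'noun': 1.0, 'action': 0.5}
```

This verb/noun split applies to EPIC-KITCHENS-100; EGTEA uses a single action label per segment.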

Finally, to filter out improbable sequences using the language model (LM), run:

```
python test_av_lm.py --dataset epic-100 \
--test_pickle /path/to/epic-kitchens-100-annotations/EPIC_100_validation.pkl \
--test_scores /path/to/audio-visual-results.pkl \
--checkpoint /path/to/lm_model/lm_checkpoint.pyth \
--num_gram 9 --split validation
```

Note that `--test_scores /path/to/audio-visual-results.pkl` refers to the scores predicted by the audio-visual transformer. To obtain scores on the test set, use `--test_pickle /path/to/epic-kitchens-100-annotations/EPIC_100_test_timestamps.pkl` and `--split test` instead.
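
To make the idea of filtering improbable sequences more concrete, the sketch below shows one generic way a language model can re-rank an audio-visual prediction: keep the top-k candidate actions, add a weighted LM log-probability of each candidate given its neighbouring actions, and re-sort. This is a swapped-in illustration, not necessarily the procedure implemented in `test_av_lm.py`; the weighted-sum fusion, `lm_weight`, `top_k` and the toy LM are all assumptions.

```python
# Generic LM re-ranking illustration (ASSUMED fusion rule, not MTCN's documented method).
import math
from typing import Callable, Dict, List, Sequence, Tuple

LMScore = Callable[[Sequence[str], str], float]  # (context, candidate) -> log-probability


def rerank_with_lm(
    av_probs: Dict[str, float],
    context: Sequence[str],
    lm_log_prob: LMScore,
    top_k: int = 3,
    lm_weight: float = 0.5,
) -> List[Tuple[str, float]]:
    """Re-rank the top-k audio-visual candidates using a weighted LM log-probability."""
    # Keep only the k most likely actions according to the audio-visual model.
    candidates = sorted(av_probs, key=av_probs.get, reverse=True)[:top_k]
    fused = [
        (a, math.log(av_probs[a]) + lm_weight * lm_log_prob(context, a))
        for a in candidates
    ]
    return sorted(fused, key=lambda pair: pair[1], reverse=True)


def toy_lm(context: Sequence[str], candidate: str) -> float:
    # Hypothetical LM: cutting an onion is likely right after taking a knife.
    if candidate == "cut onion" and "take knife" in context:
        return math.log(0.9)
    return math.log(0.05)


if __name__ == "__main__":
    av = {"cut tomato": 0.45, "cut onion": 0.40, "wash hands": 0.15}
    ctx = ["take knife", "put knife"]
    print(rerank_with_lm(av, ctx, toy_lm)[0][0])                 # cut onion
    print(rerank_with_lm(av, ctx, toy_lm, lm_weight=0.0)[0][0])  # cut tomato
```

With `lm_weight=0.0` the audio-visual ranking is returned unchanged, so the weight controls how strongly context can overturn the visual prediction.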

Since we provide trained models for EPIC-KITCHENS-100, `av_checkpoint.pyth` and `lm_checkpoint.pyth` in the test commands above can be either the provided pretrained models or `model_best.pyth`, i.e. your own trained model.

### EGTEA

To test the visual-only transformer on EGTEA, run:

```
python test_av.py --dataset egtea --test_hdf5_path /path/to/egtea/features/visual_slowfast_features_test_split1.hdf5 \
--test_pickle /path/to/EGTEA_annotations/test_split1.pkl \
--checkpoint /path/to/v_model/model_best.pyth --seq_len 9 --num_layers 4 --output_dir /path/to/output_dir \
--split test_split1
```

To filter out improbable sequences using the LM, run:
```
python test_av_lm.py --dataset egtea \
--test_pickle /path/to/EGTEA_annotations/test_split1.pkl \
--test_scores /path/to/visual-results.pkl \
--checkpoint /path/to/lm_model/model_best.pyth \
--num_gram 9 --split test_split1
```

In each case, you can extract attention weights by adding `--extract_attn_weights` to the arguments of the test script.

## References
[1] Dima Damen, Hazel Doughty, Giovanni Maria Farinella, Antonino Furnari, Jian Ma, Evangelos Kazakos, Davide Moltisanti, Jonathan Munro, Toby Perrett, Will Price, and Michael Wray, **Rescaling Egocentric Vision: Collection Pipeline and Challenges for EPIC-KITCHENS-100**, IJCV, 2021

## License

The code is published under the Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License, found [here](https://creativecommons.org/licenses/by-nc-sa/4.0/).
