Fix validation_split has no output and add validation_data parameter to model.fit #1014

Oceania2018 merged 2 commits into SciSharp:master from Wanglongzhi2001:master


| IEnumerable<(string, Tensor)> logs = null; | ||

| //Dictionary<string, float>? logs = null; | ||

| var logs = new Dictionary<string, float>(); |

I wasn't very familiar with C# at the time of my last commit, so this time I changed the definition of logs to match the form used in Model.fit.

| var train_x = x; | ||

| var train_y = y; | ||


| if (validation_split != 0f && validation_data == null) |

When validation_split is nonzero and validation_data is not null, validation_split is ignored.
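The precedence rule described above can be sketched in isolation. This is only an illustration, not the code from this PR: `ValidationSketch.Resolve` is a hypothetical helper, plain `float[]` arrays stand in for `NDArray`, and holding out the trailing fraction of the samples is an assumption about how the split is taken.

```csharp
using System;
using System.Linq;

public static class ValidationSketch
{
    // Hypothetical helper, not part of TensorFlow.NET.
    // float[] stands in for NDArray to keep the sketch self-contained.
    public static (float[] trainX, float[] trainY, float[] valX, float[] valY) Resolve(
        float[] x, float[] y,
        float validationSplit = 0f,
        (float[] valX, float[] valY)? validationData = null)
    {
        // Explicit validation data takes precedence; the split fraction is ignored.
        if (validationData != null)
            return (x, y, validationData.Value.valX, validationData.Value.valY);

        // No validation requested at all.
        if (validationSplit == 0f)
            return (x, y, null, null);

        // Assumption: the trailing fraction of the samples is held out.
        int trainCount = Convert.ToInt32(x.Length * (1 - validationSplit));
        return (x.Take(trainCount).ToArray(), y.Take(trainCount).ToArray(),
                x.Skip(trainCount).ToArray(), y.Skip(trainCount).ToArray());
    }
}
```

Calling `Resolve` with both a nonzero split and explicit validation data returns the training arrays untouched, which mirrors the `validation_split != 0f && validation_data == null` guard in the diff.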

| int verbose = 1, | ||

| List<ICallback> callbacks = null, | ||

| float validation_split = 0f, | ||

| (NDArray val_x, NDArray val_y)? validation_data = null, |

Added a validation_data parameter to align with the Python API.

| int verbose = 1, | ||

| List<ICallback> callbacks = null, | ||

| float validation_split = 0f, | ||

| //float validation_split = 0f, |

I haven't figured out how to split the data when the input is of type IDatasetV2.

| Model = this, | ||

| StepsPerExecution = _steps_per_execution | ||

| }); | ||

| foreach( var (x,y) in dataset) |

Oops, this is a typo. I will push another commit to delete it.

| { | ||

| logs["val_" + log.Key] = log.Value; | ||

| } | ||

| callbacks.on_train_batch_end(End_step, logs); |

New entries are added to logs after the evaluation, so we need to call on_train_batch_end again.
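As a rough sketch of the merge step in the hunk above, the validation metrics are copied into the training logs under a `val_` prefix before the callback is invoked again. `LogMergeSketch.MergeValidationLogs` is a hypothetical name used only for this illustration.

```csharp
using System.Collections.Generic;

public static class LogMergeSketch
{
    // Hypothetical helper: copies evaluation metrics into the training logs
    // under a "val_" prefix, leaving the existing training entries in place.
    public static void MergeValidationLogs(
        Dictionary<string, float> logs, Dictionary<string, float> valLogs)
    {
        foreach (var log in valLogs)
        {
            logs["val_" + log.Key] = log.Value;
        }
    }
}
```

After the merge, a dictionary that held only `loss` also holds `val_loss`, which is why a callback that already ran on the training logs has to be called once more to see the new keys.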

| void on_test_begin(); | ||

| void on_test_batch_begin(long step); | ||

| void on_test_batch_end(long end_step, IEnumerable<(string, Tensor)> logs); | ||

| void on_test_batch_end(long end_step, Dictionary<string, float> logs); |

Since I corrected the definition of logs in Model.Evaluate, this signature and a few other places need to change as well.

| bool use_multiprocessing = false, | ||

| bool return_dict = false) | ||

| bool return_dict = false, | ||

| bool is_val = false |

When evaluate is called from model.fit for validation, we don't need to call on_test_batch_end, because it would interfere with the output on the screen. When model.evaluate is called directly, we do need on_test_batch_end. So I pass a parameter to distinguish between the two cases.
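The effect of the flag can be illustrated with a small stand-in. Everything here is hypothetical: `EvaluateSketch.Evaluate` is not the real Model.evaluate, the `onTestBatchEnd` delegate plays the role of callbacks.on_test_batch_end, and the running mean loss is only there to give the loop something to log.

```csharp
using System;
using System.Collections.Generic;

public static class EvaluateSketch
{
    // Hypothetical stand-in for an evaluation loop with an is_val style flag.
    public static Dictionary<string, float> Evaluate(
        IReadOnlyList<float> batchLosses,
        Action<long, Dictionary<string, float>> onTestBatchEnd,
        bool isVal = false)
    {
        var logs = new Dictionary<string, float>();
        float total = 0f;
        for (int step = 0; step < batchLosses.Count; step++)
        {
            total += batchLosses[step];
            logs["loss"] = total / (step + 1); // running mean, illustrative only

            // Skip the per-batch callback when evaluating on behalf of fit,
            // so it does not write over the training progress output.
            if (!isVal)
                onTestBatchEnd(step + 1, logs);
        }
        return logs;
    }
}
```

With `isVal: true` the delegate is never invoked and only the final logs are returned to the caller; with the default it fires once per batch.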

| return callbacks.History; | ||

| } | ||


| History FitInternal(DataHandler data_handler, int epochs, int verbose, List<ICallback> callbackList, (IEnumerable<Tensor>, NDArray)? validation_data, |

I overloaded FitInternal to handle the different types of validation_data.


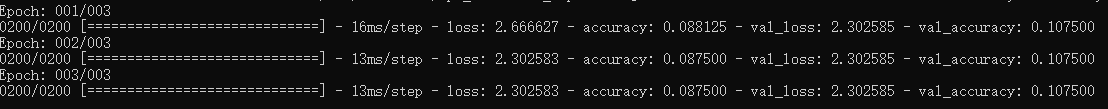

BTW, I often find that model.fit does not converge. This has been bothering me for a long time, but I have not found the reason.

Fix validation_split producing no output and add a validation_data parameter to model.fit. The definition of logs has also been changed to match the form used in training (Dictionary<string, float>).