-

Notifications

You must be signed in to change notification settings - Fork 8.1k

Send telemetry lazy at startup #14281

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Conversation

|

I didn't know you were doing something similar :)

No, we cannot delay the 'sending telemetry work' by 500 ms. This will likely cause telemetry to not be sent at all when a user executes command directly using

What do you mean by "is sync"? Is it sending out package to the server synchronously (I doubt it ...)? |

I am collecting all in #14268 issue to sync the work with others.

Yes, I think it is sending synchronously to to the server. I read docs and best practices and found the recommendation to put it in background. Traces show the same for me.

I see how you approached it. But my thoughts is again about low-power hardware -

|

Where do you see that? Can you share the links? I will also check with @JamesWTruher on this.

On low-power hardware, it's even less deteministic about whether a task/worker thread can even be scheduled to run, and thus less deteministic about whether what we do here actually make a difference -- it may even be a penalty on those hardwares due to context switching or contention. For #14304, I think I will leverage worker thread only if |

https://github.com/degant/application-insights-best-practices

If you could confirm it is always async it would be nice. Another question how do we ensure the telemetry has been sent before PowerShell exit? I see TelemetryClient.Flush() async too.

That is why I am convinced that we should do fewer threads - there are too many of them. We need to reconsider this. Regarding ApplicationInsights, unfortunately we will have to move this to a background thread. In last commits I have tried to make the process more deterministic. Update:after some thought, I suppose that we'd better wait and first do all the other optimizations that we can, as well as get and evaluate all the benefits of future versions of .Net 6.0 - if we get performance comparable to WinPS, then it generally makes no sense to complicate the code for the sake of ApplicationInsights. It's much better to ask the ApplicationInsights owners to improve this scenario. We have several months to wait. If they do this, then we won't have to do anything and complicate the code.

In the case we should make more common improvement and think about all threads - perhaps this helps with scenarios like Wasm (and maybe PSES). Doing this for ApplicationInsights only looks redundant.

|

95b6799 to

9fb85c2

Compare

|

I checked with @JamesWTruher and he confirmed that ApplicationInsight does not send telemetry packets to the endpoint (server) in synchronous way. It maintains a in-memory buffer and uses thread pool thread for sending telemetry packets. That's how a tracing library should work -- never sending packets on wire synchronous. Log4Net is bad just for that exact reason. Pre-aggregation is more about processing the data before sending on the wire, so as to "reducing the volume of data sent from the SDK to the Application Insights collection endpoint". According to Jim,

It's a best effort thing. It's possible that a proces may exit before the packets are sent out, even today. But that also means if a task is used for that job, we shouldn't enforce a delay on the task. Also, we probably should wait on that task to finish before giving the initial prompt to user (or something similar when it's

I want to remind you again on the philosophy here, leveraging parallel processing doesn't contradict with the work that improves specific/individual code paths. When it's handled correctly, they amplify the final result. As for your concern about the background thread used by ApplicationInsight, I think you overestimated it's impact. The expensive part that I see in the startup telemetry collection, is the loading of the ApplicationInsight assembly and the initialization of types from it.

I agree. I don't mean to rush my PR to the code base :) I'm not happy with the changes yet, and also it needs more testing to fine-tune the decisions like how many tasks to use, and what work load shall be done in tasks. But we also cannot wait till near the end of the release cycle for a PR like #14304. About the .NET 6 runtime initialization improvement, can you share links about it? |

I collect all references in #14268. Many thanks for clarifications about ApplicationInsight!

My worries are only about the initialization. It would be great if you could ask ApplicationInsight owners to improve this.

It is again question for ApplicationInsight owners. I hope they can do more and better than us.

I completely trust your experience (many thanks for discussions with me), but I have absolutely no idea how this is implemented in depth (after Stephan Toub's articles, I only have an understanding that everything is complicated) and how we could reliably check this in tests, especially for a startup script.

:-)))) So it seems we have a chance to see how the open PR counter is rapidly decreasing from 150 to 0 - half of them you and your team can merge in a day as very simple. I updated #14268 with our last thoughts - feel free to add more there. Thanks! |

I'm afraid the answer would be "go ahead take a look at our code base, any perf improvement contributions are welcome!" 😄 Thanks for the compilation in #14268, and I'm glad that we are on the same page now. |

Threaten them migrating to OpenTelemetry 😄

You could open new tracking issue for this and share where we could investigate - I could get involved, maybe prepare fixes and open issues in .Net if needed - now is the right time. Also we could see Source Generators. I made an experiment for TypeGen. This works but the .Net feature is not ready for production (I already sent some feedbacks) and .Net team will actively improve and use them in .Net 6.0. |

|

Like |

|

This pull request has been automatically marked as Review Needed because it has been there has not been any activity for 7 days. |

|

@iSazonov I went through our comments and it looks we were on the same page that this PR is not needed. I will close it now. |

PR Summary

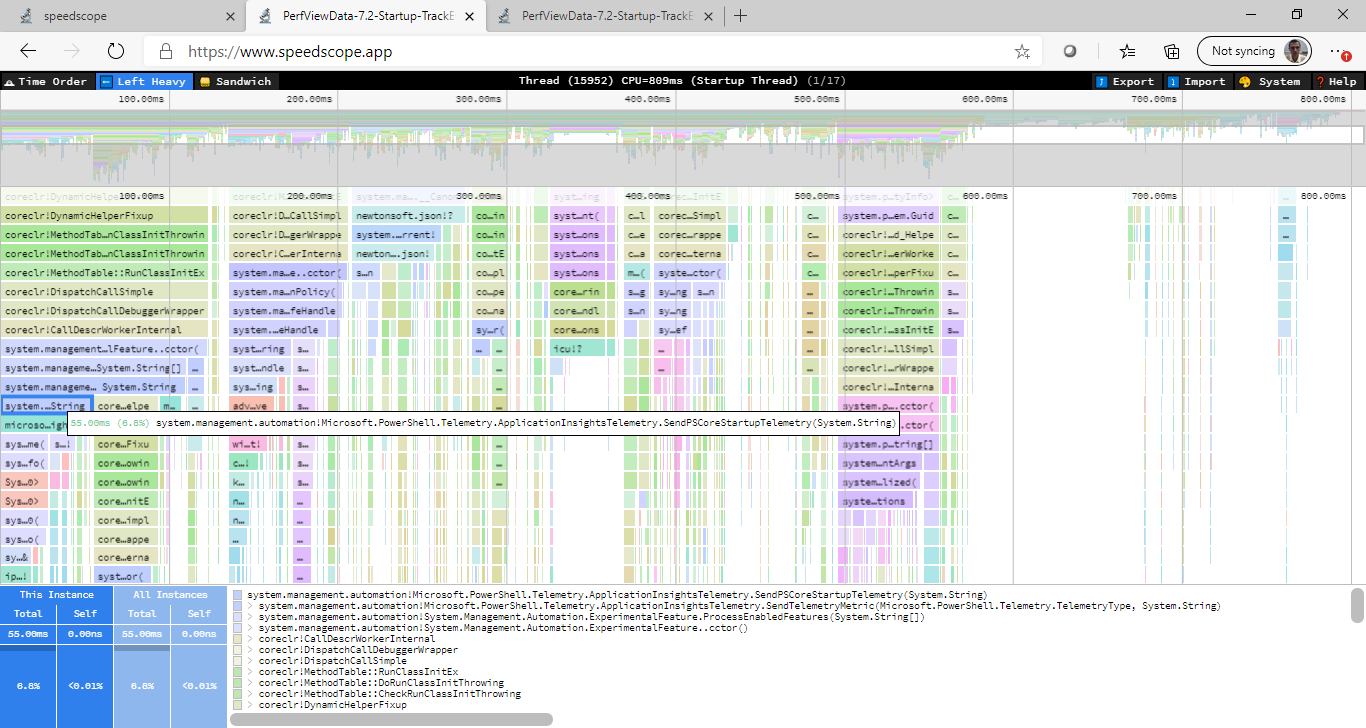

At startup we send a telemetry that delays the startup.

The delay is from 10 to 100 ms on my notebook but under other conditions this delay may be even worse (issues with DNS, network, target telemetry service).

The fix is to send startup telemetry in a background thread with 500 ms delay.

Open question is how many do we want to postpone the send? Current value is 500 ms. This corresponds to the start time of pwsh. If we reduce this time, it may slow down the launch. If we increase this time, we can lose telemetry for short processes.

Additionally, we could add a wait for this task to complete in PowerShell exit code to ensure that the telemetry is transmitted

Perf win on my notebook:

Screenshots show the delays we removed:

PR Context

Tracking issue #14268

PR Checklist

.h,.cpp,.cs,.ps1and.psm1files have the correct copyright headerWIP:or[ WIP ]to the beginning of the title (theWIPbot will keep its status check atPendingwhile the prefix is present) and remove the prefix when the PR is ready.