I haven’t found a LaTeX IDE that I am happy with (texmaker comes close, but I don’t like the fact that it doesn’t properly underline the trigger letter in menus, even if Windows is set to do that), so I ended up defaulting to editing my book and papers in notepad++ and running pdflatex manually. But getting the preview is a bit of a nuisance: ctrl-s to save, alt-tab to the command line, up-arrow and enter to re-run pdflatex, alt-tab to the PDF viewer. So I wrote a little Python script that watches my current directory and re-runs pdflatex whenever any .tex file in it changes. Now it’s just ctrl-s, alt-tab to get the preview. I guess it’s only four keystrokes saved, but it feels more seamless and straightforward. The script also launches notepad++ and my PDF viewer at the start of the session to save me some typing.
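Here is a minimal polling sketch of such a watcher (not the original script). The function names, the one-second `interval`, and the choice to always recompile a single `main_tex` file are assumptions, and launching the editor and viewer is left out.

```python
import glob
import os
import subprocess
import time


def tex_mtimes(directory="."):
    """Map each .tex file in `directory` to its last-modification time."""
    return {path: os.path.getmtime(path)
            for path in glob.glob(os.path.join(directory, "*.tex"))}


def changed_files(old, new):
    """Return the paths that are new or whose modification time differs."""
    return sorted(path for path, mtime in new.items() if old.get(path) != mtime)


def watch(main_tex, directory=".", interval=1.0):
    """Poll `directory` and re-run pdflatex on `main_tex` when any .tex file changes."""
    seen = tex_mtimes(directory)
    while True:
        time.sleep(interval)
        current = tex_mtimes(directory)
        if changed_files(seen, current):
            # nonstopmode keeps pdflatex from stopping to wait for input on errors
            subprocess.run(["pdflatex", "-interaction=nonstopmode", main_tex],
                           cwd=directory)
        seen = current
```

Calling `watch("book.tex")` from the document's directory recompiles on every save until interrupted with ctrl-c. Polling modification times is crude, but it needs nothing beyond the standard library.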

Tuesday, February 4, 2025

Wednesday, October 23, 2024

A new kind of project

Sunday, August 18, 2024

317600 points in Eggsplode!

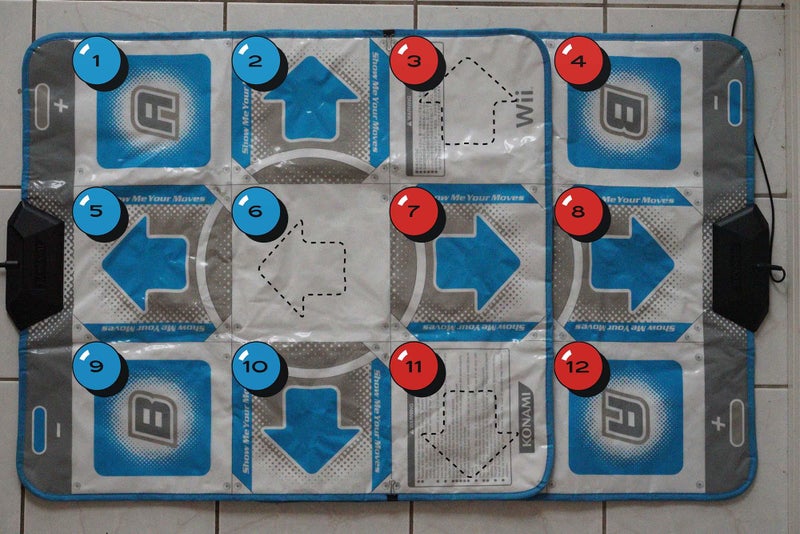

Here's my TwinGalaxies record run of Eggsplode! from last year. It uses NES emulation (fceumm, with my Power Pad support code) on a Raspberry Pi 3B+, and I am using two overlapped Wii DDR pads in place of the Power Pad controller (instructions here). The middle of the video is sped up 20X.

To be fair, there were no other competitors on TG for the emulation track of Eggsplode! (The score was higher than the best original-hardware score, but I don't know whether it's harder or easier to get this score on emulation than on original hardware. The main difference is that I was using a larger, but perhaps better-quality, pad.)

Sunday, February 18, 2024

Disable Windows double-finger-click

Sunday, January 28, 2024

Measure screen latency

Wednesday, September 13, 2023

Ontology and duck typing

Some computer languages (notably Python) favor duck-typing: instead of relying on checking whether an object officially falls under a type like duck, one checks whether it quacks, i.e., whether it has the capabilities of a duck object. You can have a dog object that behaves like a vector, and a vector object that behaves like a dog.
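To make the programming side concrete, here is a small Python illustration (the class and function names are invented for the example): `make_noise` never checks types, only whether the object can `speak()`, and `total` adds up anything with `x` and `y` attributes, so a vector can bark and a dog can be summed like a vector.

```python
class Vector:
    """A 2-D vector that, duck-typing-wise, can also bark."""

    def __init__(self, x, y):
        self.x, self.y = x, y

    def __add__(self, other):
        # Works with anything that has x and y attributes, not just Vectors.
        return Vector(self.x + other.x, self.y + other.y)

    def speak(self):
        return "Woof"


class Dog:
    """A dog that also carries coordinates, so it can be added like a vector."""

    def __init__(self, name, x=0, y=0):
        self.name = name
        self.x, self.y = x, y

    def speak(self):
        return "Woof"


def make_noise(animal):
    # No isinstance() check: anything with a speak() method will do.
    return animal.speak()


def total(*things):
    # Sums anything with x and y attributes, starting from the zero vector.
    result = Vector(0, 0)
    for thing in things:
        result = result + thing
    return result
```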

It would be useful to explore how well one could develop an ontology based on duck-typing rather than on categories. For instance, instead of some kind of categorical distinction between particulars and universals, one simply distinguishes between objects that have the capability to instantiate and objects that have the capability to be instantiated, without any prior insistence that if you can be instantiated, then you are abstract, non-spatiotemporal, etc. Now it may turn out that either contingently or necessarily none of the things that are spatiotemporal can be instantiated, but on the paradigm I am suggesting, the explanation of this would not lie in a categorical difference between spatiotemporal entities and entities that have the capability of being instantiated. It may lie in some incompatibility between the capabilities of being instantiated and occupying spacetime (though it’s hard to see what that incompatibility would be), or it may just be a contingent fact that there is no object that has both capabilities.

As a theist, I think there is a limit to the duck typing. There will, at least, need to be a categorical difference between God and creature. But what if that’s the only categorical difference?

Wednesday, July 19, 2023

Video splitter python script

I'm working on submitting my climbing record to Guinness. They require video (including slow-motion video!), but they have a 1 GB limit on uploads and recommend splitting videos into 1 GB portions with a five-second overlap. I made a little Python script to do this using ffmpeg. You can specify the maximum size (default: 999999999 bytes) and the target overlap (default: 6 seconds, to be on the safe side for Guinness), and it will estimate how many parts you need and split the file into approximately that many. If any of the resulting parts is too big, it tries again with more parts.
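The splitting logic might look roughly like this Python sketch. It is not the original script. The function names and output naming scheme are hypothetical, and the caller has to supply the duration in seconds (for instance from ffprobe). Because it uses stream copy (`-c copy`), cuts can land on nearby keyframes rather than at the exact times.

```python
import math
import os
import subprocess


def plan_parts(duration, parts, overlap=6.0):
    """Split [0, duration] into `parts` equal pieces, each extended by `overlap` seconds."""
    base = duration / parts
    plan = []
    for i in range(parts):
        start = i * base
        end = min(duration, (i + 1) * base + overlap)
        plan.append((start, end - start))
    return plan


def ffmpeg_command(src, dst, start, length):
    """Cut `length` seconds starting at `start`, copying streams without re-encoding."""
    return ["ffmpeg", "-y", "-ss", f"{start:.3f}", "-i", src,
            "-t", f"{length:.3f}", "-c", "copy", dst]


def split_video(src, duration, max_size=999_999_999, overlap=6.0):
    """Split `src` into parts of at most `max_size` bytes, adding parts until they fit."""
    # Initial estimate assumes a roughly constant bitrate.
    parts = max(1, math.ceil(os.path.getsize(src) / max_size))
    stem, ext = os.path.splitext(src)
    while True:
        outputs = []
        for i, (start, length) in enumerate(plan_parts(duration, parts, overlap)):
            dst = f"{stem}-part{i + 1}{ext}"
            subprocess.run(ffmpeg_command(src, dst, start, length), check=True)
            outputs.append(dst)
        if all(os.path.getsize(p) <= max_size for p in outputs):
            return outputs
        parts += 1  # some part was too big: try again with one more part
```

The `-y` flag lets each retry overwrite the previous attempt's files without prompting.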

Wednesday, May 24, 2023

Magnetic sensor arcade spinner

For a while I've wanted to have an arcade spinner for games like Tempest and Arkanoid. I made one with an Austria Microsystems Hall-effect magnetic sensor. The spinner is mounted on ball bearings and has satisfyingly smooth motion with lots of inertia.

Build instructions are here.
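The main software step with an absolute-angle magnetic sensor is turning readings into relative motion that wraps cleanly past zero. Here is a minimal Python sketch. It assumes the sensor reports a 12-bit angle (0 to 4095; adjust `resolution` for other parts) and is read often enough that the knob turns less than half a revolution between readings. The names are hypothetical.

```python
def spinner_delta(previous, current, resolution=4096):
    """Signed movement between two absolute angle readings, unwrapped across zero.

    Assumes the knob turns less than half a revolution between readings.
    """
    delta = (current - previous) % resolution
    if delta >= resolution // 2:
        delta -= resolution
    return delta


class SpinnerTracker:
    """Turns a stream of absolute readings into relative motion (e.g. mouse-style input)."""

    def __init__(self, first_reading, resolution=4096):
        self.last = first_reading
        self.resolution = resolution

    def update(self, reading):
        delta = spinner_delta(self.last, reading, self.resolution)
        self.last = reading
        return delta
```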

Monday, January 9, 2023

Three non-philosophy projects

Here are some non-philosophy hobby projects I’ve been doing over the break:

Measuring exercise bike power output

Dumping NES ROMs

Adapting Dance Dance Revolution and other mat controllers to work as NES Power Pad controllers for emulation.

Wednesday, December 14, 2022

Web-based tool for adding timed text and a timer to a video

When making my Guinness application record video, I wanted to include a time display in the video, and Guinness also required a running count display. I ended up writing a Python script using OpenCV2 to generate a video of the time and lap count, and overlaid it on the main video in Adobe Premiere Rush.

Since then, I have written a web-based tool for generating a WebP animation of a timer and text synchronized to a set of times. The timer can be in seconds or tenths of a second, and you can specify a list of text messages and the times to display them (or to hide them). You can then overlay it on a video in Premiere Rush or Pro. There is alpha support, so you can have a transparent or translucent background if you like, and a bunch of fonts to choose from (including the geeky-looking Hershey font that I used in my Python script).
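The timing logic of such a tool can be sketched in Python. The tool itself is browser JavaScript, and these function names and the (time, text) event format are hypothetical. Working from integer frame numbers keeps the displayed tenths exact, with no floating-point rounding at the boundaries.

```python
import bisect


def timer_text(frame, fps, tenths=False):
    """Elapsed-time display for a frame, computed with integer arithmetic."""
    if tenths:
        total = frame * 10 // fps            # whole tenths of a second elapsed
        minutes, rest = divmod(total, 600)
        return f"{minutes}:{rest // 10:02d}.{rest % 10}"
    minutes, seconds = divmod(frame // fps, 60)
    return f"{minutes}:{seconds:02d}"


def message_at(t, events):
    """Text showing at time t; `events` is a sorted list of (time, text), text None = hide."""
    i = bisect.bisect_right([when for when, _ in events], t)
    return events[i - 1][1] if i else None
```

For example, with `events = [(0.0, "Lap 1"), (60.0, "Lap 2"), (90.0, None)]`, "Lap 2" shows from 60 s until it is hidden at 90 s.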

The code uses webpxmux.js, though it was a little bit tricky because in-browser Javascript may not have enough memory to store all the uncompressed images that webpxmux.js needs to generate an animation. So instead I encode each frame to WebP using webpxmux.js, extract the compressed ALPH and VP8 chunks from the WebP file, and store only the compressed chunks, writing them all at the end. (It would be even better from the memory point of view to write the chunks one by one rather than storing them in memory, but a WebP file has a filesize in its header, and that’s not known until all the compressed chunks have been generated. One could get around this limitation by generating the video twice, but that would be twice as slow.)
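For readers curious about the chunk juggling, here is a Python sketch of the container side. The tool itself is JavaScript built on webpxmux.js, and these helper names are hypothetical. It follows the RIFF layout of the WebP container: a `RIFF` tag, a little-endian size, `WEBP`, then chunks that each have a FourCC, a size, and a payload padded to even length. The `riff_header` helper shows why the file can't be streamed out from the start: its size field counts every chunk that follows. A real animated file would also need `VP8X`, `ANIM`, and per-frame `ANMF` chunks, which are not shown.

```python
import struct


def make_chunk(fourcc, payload):
    """Serialize one RIFF chunk: FourCC, little-endian size, payload, pad byte if odd."""
    pad = b"\0" if len(payload) % 2 else b""
    return fourcc + struct.pack("<I", len(payload)) + payload + pad


def riff_header(body_size):
    """RIFF/WEBP header; its size field counts 'WEBP' plus every chunk after it."""
    return b"RIFF" + struct.pack("<I", 4 + body_size) + b"WEBP"


def riff_chunks(data):
    """Yield (fourcc, payload) for each top-level chunk of a WebP file."""
    if data[:4] != b"RIFF" or data[8:12] != b"WEBP":
        raise ValueError("not a WebP file")
    (riff_size,) = struct.unpack_from("<I", data, 4)
    end = min(len(data), 8 + riff_size)
    pos = 12
    while pos + 8 <= end:
        fourcc = data[pos:pos + 4]
        (size,) = struct.unpack_from("<I", data, pos + 4)
        yield fourcc, data[pos + 8:pos + 8 + size]
        pos += 8 + size + (size & 1)  # payloads are padded to an even length


def keep_compressed_chunks(webp_frame):
    """Keep only the ALPH and lossy 'VP8 ' chunks of one encoded frame."""
    return [(fourcc, payload) for fourcc, payload in riff_chunks(webp_frame)
            if fourcc in (b"ALPH", b"VP8 ")]
```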

Tuesday, May 24, 2022

GreaseWeazle

I'm trying to thin the herd of old computers at home. I realized that the only real reason I kept a 20-year-old Linux box at home was in case I ever wanted to use a 3.5" drive in it to deal with floppies for various systems, especially my HP 1653B oscilloscope (I could get a USB floppy drive for one of the laptops at home, but those usually aren't compatible with non-DOS disk formats).

Moreover, the 3.5" drive in the computer wasn't even working. Aligning the heads on the drive solved that problem, and then I assembled a GreaseWeazle using one of the blue pill microcontroller boards I have lying around. Then I made a 3D printable case for the messy assembly.

And now I can read and copy floppies for my oscilloscope on my laptop. :-)

Tuesday, May 10, 2022

Towards a static solution to Wordle

Wednesday, March 9, 2022

Greedy strategies for Wordle

Today, I wanted to see how well the following greedy strategy works on Wordle. Given a set H of words, a word w partitions H into equivalence classes or “cells”, where two words count as equivalent if they give the same evaluation if w is played. We can measure the fineness of a partition by the size of its largest cell. Then, the greedy strategy is this:

1. Start with the 12972 five-letter Scrabble words of the original Wordle (things will be a bit different for the bowdlerized New York Times version).

2. Guess a word that results in a finest partition of the remaining words, with ties broken by favoring guesses that are themselves one of the remaining words, and remaining ties broken alphabetically.

3. If not guessed correctly, repeat steps 2–3 with the remaining words replaced by the cell of the partition that fits the guess.
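The strategy is simple enough to sketch in Python. This is an illustrative reimplementation, not the linked code. `evaluate` encodes each evaluation as a string of G (green), Y (yellow) and . (grey), handling repeated letters the way Wordle does, and `best_guess` applies the tie-breaking rules of step 2. On the full 12972-word list each step compares every candidate with every remaining word, so it is slow under CPython.

```python
from collections import Counter, defaultdict


def evaluate(guess, answer):
    """Wordle colouring: G = green, Y = yellow, . = grey, with repeated letters handled."""
    result = ["."] * 5
    unmatched = Counter()  # answer letters not already used up by a green
    for i, (g, a) in enumerate(zip(guess, answer)):
        if g == a:
            result[i] = "G"
        else:
            unmatched[a] += 1
    for i, g in enumerate(guess):
        if result[i] == "." and unmatched[g] > 0:
            result[i] = "Y"
            unmatched[g] -= 1
    return "".join(result)


def partition(guess, remaining):
    """Group the remaining words into cells by the evaluation `guess` would get."""
    cells = defaultdict(list)
    for word in remaining:
        cells[evaluate(guess, word)].append(word)
    return cells


def best_guess(vocabulary, remaining):
    """Smallest largest cell; ties go to remaining words, then alphabetical order."""
    remaining_set = set(remaining)

    def key(guess):
        largest = max(len(cell) for cell in partition(guess, remaining).values())
        return (largest, guess not in remaining_set, guess)

    return min(vocabulary, key=key)


def solve(answer, vocabulary):
    """Play the greedy strategy against `answer`; return the list of guesses."""
    remaining = list(vocabulary)
    guesses = []
    while True:
        guess = best_guess(vocabulary, remaining)
        guesses.append(guess)
        if guess == answer:
            return guesses
        remaining = partition(guess, remaining)[evaluate(guess, answer)]
```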

A quick and dirty Python implementation (I recommend running it with PyPy) is here.

Note that this greedy strategy does not cheat by using the secret list of answer words, but only uses the publicly available list of five-letter Scrabble words.

Here are some observations:

The greedy strategy succeeds within six guesses for all but one of the 2315 answer words in the original Wordle. The remaining word needs seven guesses.

A human might be able to guess the answer word that the algorithm fails for, because a real human would not be partitioning all the five-letter Scrabble words, but only the more common words that are a better bet for being on the answer list.

The greedy algorithm’s first guess word is “serai”, and the maximum cell size in the resulting partition is only 697 words.

While only one answer list word remained that the greedy strategy fails for, it looks like Wardle may have deliberately removed from the answer list some other common, clean and ordinary English words that the greedy strategy does not guess in six guesses.

It would be a cheat, but one can optimize the algorithm by starting with only the 2315 answer words. Then indeed the greedy strategy guesses every answer word in six guesses, and all but two can be done in five.

A human can do something in-between, by having some idea of what words an ordinary person is likely to know.

Wednesday, February 9, 2022

Game Boy Fiver [Wordle clone]: How to compress 12972 five-letter words to 17871 bytes

Update: You can play an updated version online here in the binjgb Game Boy emulator. This is the version with the frequency-based answer list rather than the official Wordle list, for copyright reasons.

There is a Game Boy version of Wordle, using a Bloom filter, a reduced vocabulary and a reduced list of guess words, all fitting on one 32K cartridge. I decided to challenge myself and see if I could fit in the whole 12972-word Wordle vocabulary, with the whole 2315-word answer list. So the challenge is:

Compress 12972 five-letter words (Vocabulary)

Compress a distinguished 2315 word subset (Answers).

I managed it (download ROM here), and it works in a Game Boy emulator. There is more than one way, and what I did may be excessively complicated, but I don’t have a good feel for how fast the Game Boy runs, so I did a bit of speed optimization.

Step 0: We start with 12972 × 5 = 64860 bytes of uncompressed data.

Step 1: Divide the 12972-word list into 26 lists, based on the first letter of the word. Since the first letter is the same within each list, we now need only store four letters per word, along with some overhead for each list. (The overhead in the final analysis will be 108 bytes.) If we stop here, we need 12972 × 4 = 51888 bytes, plus the overhead.

Step 2: Each four-letter “word” (or tail of a word) can be stored with 5 bits per letter, yielding a 20-bit unsigned integer. If we stop here, we can store each word in 2.5 bytes, for a total of 32430 bytes. That would barely fit on the cartridge if there were no code, but it is some progress.

Step 3: Here was my one clever idea. Each of the lists of four-letter “words” is in alphabetical order, so when the words are encoded the natural way as 20-bit numbers (first letter in the highest five bits), the numbers will be in ascending order. Instead of storing these numbers, we need only store their arithmetical differences, starting with an initial (invalid) 0.

Step 4: Since the differences are always at least 1, we can subtract one from each difference to make the numbers slightly smaller. (This is a needless complication, but I had it, and don’t feel like removing it.)

Step 5: Store a stream of bytes encoding the differences-minus-one. Each number is encoded as one, two or three bytes, with seven bits in each byte and the high bit of each byte being 1 if it’s the last 7-bit sequence and 0 if it’s not. It turns out that the result is 17763 bytes, plus 108 bytes of overhead, for a total of 17871 bytes, or 28% of the original list, with very, very simple decompression.

Step 6: Now we replace each word in the alphabetically-sorted Answers list with an index into the vocabulary list. Since each index fits into 2 bytes, this would let us store the 2315 words of the Answers as 2315 × 2 = 4630 bytes.

Step 7: However, it turns out that the difference between two successive indexes is never bigger than 62. So we can re-use the trick of storing successive differences, and store the Answers in 2315 bytes. (In fact, since we only need 6 bits for the differences, we could go down to 1737 bytes, but it would complicate the code significantly.)
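Steps 6 and 7 amount to a few lines. Here is a Python sketch with hypothetical function names. It assumes the vocabulary list is alphabetically sorted and that the first gap is measured from index 0, since the text doesn't say where the count starts.

```python
def encode_answers(vocabulary, answers):
    """One byte per answer: the gap between successive vocabulary indexes.

    Assumes `vocabulary` is alphabetically sorted and counting starts at index 0.
    """
    index = {word: i for i, word in enumerate(vocabulary)}
    out = bytearray()
    previous = 0
    for word in sorted(answers):
        i = index[word]
        gap = i - previous
        if gap > 255:
            raise ValueError(f"gap {gap} before {word!r} does not fit in a byte")
        out.append(gap)
        previous = i
    return bytes(out)


def decode_answers(vocabulary, data):
    """Rebuild the alphabetically sorted answer list from the gaps."""
    words, i = [], 0
    for gap in data:
        i += gap
        words.append(vocabulary[i])
    return words
```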

Result: Vocabulary plus Answers goes down to 108 + 17763 + 2315 = 20186 bytes. This was too big to fit on a 32K cartridge using the existing code. But it turns out that most of the existing code was library support code for gprintf(), and replacing the single gprintf() call, which was just being used to format a string containing a single-digit integer variable, with gprint() seemed to get everything to fit in 32K.

Example of the Vocabulary compression:

The first six words are: aahed, aalii, aargh, aarti, abaca, abaci.

Dropping the initial “a”, we get ahed, alii, argh, arti, baca, baci.

Encoding as 20-bit integers and adding an initial zero, we get 0, 7299, 11528, 17607, 18024, 32832, 32840.

The differences-minus-one are 7298, 4228, 6078, 416, 14807, 7.

Each of these fits in 14 bits (two bytes, given the high-bit usage), with the last one in 7 bits. In practice, a lot of differences fit in 7 bits, so this ends up being more efficient than it looks: the first six words are not representative.
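Here is a minimal Python sketch of Steps 1 to 5 that reproduces the numbers in this example. It is not the Game Boy code. The function names are hypothetical, and the byte order of the 7-bit groups (most significant group first) is an assumption, since the description doesn't say which group comes first.

```python
from collections import defaultdict


def tail_to_int(tail):
    """Pack four letters into 20 bits, 5 bits per letter, a = 0, first letter highest."""
    value = 0
    for ch in tail:
        value = (value << 5) | (ord(ch) - ord("a"))
    return value


def int_to_tail(value):
    """Inverse of tail_to_int."""
    letters = []
    for _ in range(4):
        letters.append(chr(ord("a") + (value & 31)))
        value >>= 5
    return "".join(reversed(letters))


def differences_minus_one(words):
    """Steps 3-4 for words sharing a first letter (assumes no tail is 'aaaa')."""
    previous, out = 0, []
    for word in words:
        value = tail_to_int(word[1:])
        out.append(value - previous - 1)
        previous = value
    return out


def encode_number(n):
    """Step 5: 7 bits per byte, high bit set only on the last byte."""
    groups = [n & 0x7F]
    n >>= 7
    while n:
        groups.append(n & 0x7F)
        n >>= 7
    groups.reverse()  # assumed order: most significant group first
    groups[-1] |= 0x80
    return bytes(groups)


def encode_letter_list(words):
    return b"".join(encode_number(d) for d in differences_minus_one(words))


def decode_letter_list(first_letter, data):
    words, previous, n = [], 0, 0
    for byte in data:
        n = (n << 7) | (byte & 0x7F)
        if byte & 0x80:  # last byte of this number
            previous += n + 1
            words.append(first_letter + int_to_tail(previous))
            n = 0
    return words


def compress_vocabulary(words):
    """Step 1: one compressed stream per first letter."""
    groups = defaultdict(list)
    for word in sorted(words):
        groups[word[0]].append(word)
    return {letter: encode_letter_list(ws) for letter, ws in groups.items()}
```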

Notes:

With the code as described above, there are 250 bytes to spare in the cartridge.

One might wonder whether making up the compression algorithm saves much memory over using a standard general-purpose compressor. Yes.

Running gzip on the 64860 bytes of uncompressed Vocabulary yields 30338 bytes, which is rather worse than my 17871-byte compression of the Vocabulary. Plus the decompression code would, I expect, be quite a bit more complex.

One could save a little memory by encoding the four-letter “words” in Step 2 in base-26 instead of as four 5-bit sequences. But it would save only about 0.5K of memory, and the code would be much nastier (the Game Boy uses library functions for division!).

The Answers could be stored as a bitmap of length 12972, which would be 1622 bytes. But this would make the code for generating a random word more complicated and slower.

Monday, December 13, 2021

An introduction to simple motion detection with Python and OpenCV

Wednesday, January 6, 2021

Four-bit microcomputer trainer in Scratch

When I was a kid, I had a Radio Shack Microcomputer Trainer. This device was programmable in machine code, and was a 4-bit system with 112 nibbles(!) of RAM. It actually ran as a virtual machine on a more powerful TMS-1x00 4-bit processor. These days, kids learn coding with much higher-level languages than machine code, including graphical languages like Scratch. So I had some fun over the break and bridged the gap between then and now by making an emulator (or, perhaps more precisely, a simulator) of the trainer in Scratch. According to my benchmark, it runs computations (though probably not I/O) in the browser faster than the original did, provided you shift-click the flag to get Turbo Mode.

[Instructables link with usage instructions.]

Friday, November 27, 2020

Scratch coding in Minecraft

Years ago, for my older kids' coding education, I made a Minecraft mod that lets you program in Python. Now I have made a Scratch extension that works with that mod for block-based programming, which I am hoping to get my youngest into. Instructions and links are here.

Sunday, May 24, 2020

Wii Remote Lightgun

Monday, May 18, 2020

Wobble board, gamified

Last year, I made an adjustable wobble board for balance practice: a plywood disc with a 3D-printed plastic dome rocker. One thing I had always wanted was some sort of device that would measure how long I was staying up on the board, detecting when the board edge hit the ground. I imagined things like switches under the board or even a camera trained on the board.

But what I forgot is that I already carry the electronics for the detection in my pocket. A smartphone has an accelerometer, and so if it’s placed on the board, it can measure the board angle and thus detect the edge’s approximately touching the ground. I adapted my stopwatch app to start and stop based on accelerometer values, and made this Android app. Now all I need to do is lay my phone on the board, and when the board straightens out the timer starts, going on until the board hits the ground. There are voice announcements as to how long I’ve been on the board, and a voice announcement of the final time.
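The detection logic can be sketched as follows. The app itself is an Android app, and this Python version only illustrates the idea. The 3° "level" and 17° "touching" thresholds are hypothetical values, the second chosen a little under the board's maximum angle. It assumes the phone lies flat on the board, so the phone's tilt equals the board's tilt.

```python
import math


def tilt_degrees(ax, ay, az):
    """Angle between the phone's z axis and gravity, from one accelerometer sample."""
    g = math.sqrt(ax * ax + ay * ay + az * az)
    return math.degrees(math.acos(max(-1.0, min(1.0, az / g))))


class WobbleTimer:
    """Starts when the board is level, stops when it tilts far enough to touch the ground."""

    def __init__(self, level_deg=3.0, ground_deg=17.0):
        self.level_deg = level_deg    # hypothetical threshold for "straightened out"
        self.ground_deg = ground_deg  # hypothetical threshold for "edge on the ground"
        self.start = None
        self.last_time = None

    def sample(self, t, ax, ay, az):
        """Feed one timestamped sample; returns the last completed time (or None)."""
        angle = tilt_degrees(ax, ay, az)
        if self.start is None:
            if angle <= self.level_deg:
                self.start = t
        elif angle >= self.ground_deg:
            self.last_time = t - self.start
            self.start = None
        return self.last_time
```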

Source code is here.

Instructions on building the wobble board and links to the 3D printable files are here.

One forgets how many things can be done with a phone.

I think my best time is just under a minute, with the board set to a 19 degree maximum angle.

Saturday, May 2, 2020

Relativity, brains and the unity of consciousness

I was grading undergraduate metaphysics papers last night and came across a very interesting observation in a really smart student’s paper on Special Relativity and time (I have the student’s permission to share the observation): different parts of the brain have different reference frames, and so must experience time slightly differently.

Of course, the deviation in reference frames is very, very small. It comes from such facts as that

the lower parts of the brain are closer to a massive object—the earth—which causes a slight amount of time dilation, and

we are constantly wobbling our heads in a way that makes different parts of the brain move at different speeds relative to the earth.
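For a sense of scale, here are rough weak-field estimates of the fractional difference in clock rates. The numbers are illustrative assumptions: a height difference of about 10 cm across the brain, and head speeds on the order of 0.1 m/s.

```latex
% Gravitational rate difference across a height difference \Delta h (weak field):
\frac{\Delta\tau}{\tau} \approx \frac{g\,\Delta h}{c^{2}}
  = \frac{(9.8\ \mathrm{m/s^{2}})(0.1\ \mathrm{m})}{(3.0\times10^{8}\ \mathrm{m/s})^{2}}
  \approx 1.1\times10^{-17}

% Kinematic (special-relativistic) rate difference for a speed v \ll c:
\frac{\Delta\tau}{\tau} \approx \frac{v^{2}}{2c^{2}}
  = \frac{(0.1\ \mathrm{m/s})^{2}}{2\,(3.0\times10^{8}\ \mathrm{m/s})^{2}}
  \approx 5.6\times10^{-20}
```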

Does such a small difference matter? As I understand their argument, my student thought it would make the A-theory less plausible. For it makes it questionable whether we can say that we really perceive the true objective now in the way that A-theorists would want to say we do. That’s an interesting thought.

I also think the line of thought might create a problem for someone who thinks that mental states supervene on physical states. For consider the unity of consciousness whereby we are aware of multiple things at once. If the consciousness of these different things is partly constituted by different chunks of the brain, then it seems that what precise stream of consciousness we have will depend on what reference frame we choose. For instance, I might hear a sound and feel a pinch at exactly the same moment in one reference frame, but in another reference frame the sound comes before the feeling, and in yet another the feeling comes before the sound. But that seems wrong: the precise stream of consciousness should not depend on the reference frame.
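The frame-dependence of order can be made precise with the Lorentz transformation. Two events that are simultaneous in one frame (Δt = 0) get Δt' = -γvΔx/c², whose sign flips with the direction of v. More generally, some frame reverses the order exactly when the events are spacelike separated. With an illustrative 10 cm separation between brain regions, that window is a fraction of a nanosecond:

```latex
% Time separation of two events in a frame boosted with speed v along x:
\Delta t' = \gamma\left(\Delta t - \frac{v\,\Delta x}{c^{2}}\right),
\qquad \gamma = \frac{1}{\sqrt{1 - v^{2}/c^{2}}}

% Some frame with |v| < c reverses the order exactly when
|\Delta t| < \frac{|\Delta x|}{c}
  = \frac{0.1\ \mathrm{m}}{3.0\times10^{8}\ \mathrm{m/s}}
  \approx 3.3\times10^{-10}\ \mathrm{s}
```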

This shows that if the order of succession within the stream of consciousness does not depend on the reference frame (and it is plausible that it does not), then the precise stream of consciousness cannot supervene on physical states. This is clear if there is no privileged reference frame in the physical world. But even if there is a metaphysically privileged reference frame as A-theorists have to say, it seems reasonable to say that this frame is “metaphysical” rather than “physical”, and hence a dependence of consciousness on this frame is not a case of supervenience of mind on the physical.

Here is what I think we should say: If the A-theory is true, then the mind somehow catches on to the absolute now. If the B-theory is true, then the mind has its own subjective timeline, which is not the timeline of the brain or any part of it.

I think a really careful materialist might be able to affirm the latter option, by analogy to how in a modern digital computer, even though at the electronic hardware level there is analog time (perhaps itself an approximation to some frothy weird quantum time), synchronization of computation to clock ticks results in the possibility of abstracting a precisely defined discrete time that “pretends” that all combinatorial logic happens instantaneously. Roughly speaking, the assembly language programmer works with respect to the discrete time, while the FPGA programmer works primarily with respect to the discrete time but has to constantly bear in mind the constraints that come from the underlying analog time. However, the correspondence between the two levels of time is only vague. Similarly, I think that it is likely that the connection between the mind’s timeline and the physical timelines is going to suffer from vagueness (though perhaps only epistemic). How philosophically happy a materialist would be with such a view is unclear, and there is a serious empirical assumption here for the materialist, namely that the brain has a global synchronizing process similar to a microprocessor’s or FPGA’s synchronizing clock. I doubt that there is, but I know very little of neuroscience.